Abstract

Purpose

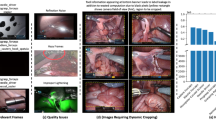

A fully automated surgical tool detection framework is proposed for endoscopic video streams. State-of-the-art surgical tool detection methods rely on supervised one-vs-all or multi-class classification techniques, completely ignoring the co-occurrence relationship of the tools and the associated class imbalance.

Methods

In this paper, we formulate tool detection as a multi-label classification task in which tool co-occurrences are treated as separate classes. In addition, the imbalance in tool co-occurrences is analyzed, and stratification techniques are employed to address it during convolutional neural network (CNN) training. Moreover, temporal smoothing is introduced as an online post-processing step to enhance runtime prediction.
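The three steps named above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function and class names (`to_cooccurrence_classes`, `stratified_split`, `OnlineSmoother`), the 80/20 split, and the moving-average window are all assumptions chosen for illustration. The sketch maps each distinct tool combination to its own class, splits frames so that every combination is represented in training, and smooths per-frame scores using only past frames so that it can run online.

```python
import random
from collections import deque


def to_cooccurrence_classes(label_vectors):
    """Map each distinct multi-hot tool vector to its own class id."""
    class_of = {}
    ids = []
    for vec in label_vectors:
        key = tuple(vec)
        if key not in class_of:
            class_of[key] = len(class_of)
        ids.append(class_of[key])
    return ids, class_of


def stratified_split(class_ids, train_fraction=0.8, seed=0):
    """Split frame indices per co-occurrence class (assumed 80/20 split)."""
    rng = random.Random(seed)
    by_class = {}
    for idx, cid in enumerate(class_ids):
        by_class.setdefault(cid, []).append(idx)
    train, held_out = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        # Keep at least one frame of every class in the training set.
        cut = max(1, round(train_fraction * len(indices)))
        train.extend(indices[:cut])
        held_out.extend(indices[cut:])
    return sorted(train), sorted(held_out)


class OnlineSmoother:
    """Causal moving average over the last `window` score vectors."""

    def __init__(self, window=5):
        self.history = deque(maxlen=window)

    def update(self, scores):
        self.history.append(list(scores))
        n = len(self.history)
        return [sum(col) / n for col in zip(*self.history)]


# Toy data: six frames, three hypothetical tools.
labels = [[1, 0, 0], [1, 1, 0], [1, 0, 0], [0, 0, 1], [1, 1, 0], [1, 0, 0]]
ids, class_of = to_cooccurrence_classes(labels)
train_idx, held_out_idx = stratified_split(ids)

smoother = OnlineSmoother(window=2)
smoothed = [smoother.update(s) for s in ([1.0, 0.0], [0.0, 1.0], [0.0, 1.0])]
```

Because the smoother only looks at frames already seen, it adds no look-ahead delay at runtime; a larger window trades responsiveness for stability.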

Results

Quantitative analysis is performed on the M2CAI16 tool detection dataset to highlight the importance of stratification, temporal smoothing and the overall framework for tool detection.

Conclusion

The analysis of tool imbalance, backed by the empirical results, indicates the need for the proposed framework and its superiority over state-of-the-art techniques.

Acknowledgements

This study was funded by the German Federal Ministry of Education and Research (BMBF) under the project BIOPASS (Grant No. 16 5V 7257).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Human and animal rights statement

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

This article contains patient data from a publicly available dataset.

About this article

Cite this article

Sahu, M., Mukhopadhyay, A., Szengel, A. et al. Addressing multi-label imbalance problem of surgical tool detection using CNN. Int J CARS 12, 1013–1020 (2017). https://doi.org/10.1007/s11548-017-1565-x

DOI: https://doi.org/10.1007/s11548-017-1565-x