Abstract

Test items for which the item score reflects a sequential or IRTree modeling outcome are considered. For such items, we argue that item-specific factors, although not empirically measurable, will often be present across stages of the same item. In this paper, we present a conceptual model that incorporates such factors. We use the model to demonstrate how the varying conditional distributions of item-specific factors across stages become absorbed into the stage-specific item discrimination and difficulty parameters, creating ambiguity in the interpretations of item and person parameters beyond the first stage. We discuss implications in relation to various applications considered in the literature, including methodological studies of (1) repeated-attempt items; (2) answer change/review; (3) on-demand item hints; (4) item skipping behavior; and (5) Likert scale items. Our own empirical applications, as well as several examples published in the literature, show patterns of violations of item parameter invariance across stages that are highly suggestive of item-specific factors. For applications using sequential or IRTree models as analytical models, or for which the resulting item score might be viewed as the outcome of such a process, we recommend (1) regular inspection of data or analytic results for empirical evidence (or theoretical expectations) of item-specific factors; and (2) sensitivity analyses to evaluate the implications of item-specific factors for the intended inferences or applications.

References

Akkermans, W. (2000). Modelling sequentially scored item responses. British Journal of Mathematical and Statistical Psychology, 53(1), 83–98. https://doi.org/10.1348/000711000159196

Bechger, T. M., & Akkermans, W. (2001). A note on the equivalence of the graded response model and the sequential model. Psychometrika, 66(3), 461–463. https://doi.org/10.1007/BF02294445

Bergner, Y., Choi, I., & Castellano, K. E. (2019). Item response models for multiple attempts with incomplete data. Journal of Educational Measurement, 56(2), 415–436. https://doi.org/10.1111/jedm.12214

Böckenholt, U. (2012). Modeling multiple response processes in judgment and choice. Psychological Methods, 17(4), 665. https://doi.org/10.1037/a0028111

Böckenholt, U., & Meiser, T. (2017). Response style analysis with threshold and multi-process IRT models: A review and tutorial. British Journal of Mathematical and Statistical Psychology, 70(1), 159–181. https://doi.org/10.1111/bmsp.12086

Bolsinova, M., Deonovic, B., Arieli-Attali, M., Settles, B., Hagiwara, M., & Maris, G. (2022). Measurement of ability in adaptive learning and assessment systems when learners use on-demand hints. Applied Psychological Measurement, 46(3), 219–235. https://doi.org/10.1177/01466216221084208

Culpepper, S. A. (2014). If at first you don’t succeed, try, try again: Applications of sequential IRT models to cognitive assessments. Applied Psychological Measurement, 38(8), 632–644. https://doi.org/10.1177/0146621614536464

De Boeck, P., & Wilson, M. (2014). Multidimensional explanatory item response modeling. In Handbook of item response theory modeling (pp. 270–289). Routledge. https://doi.org/10.4324/9781315736013-22

Debeer, D., Janssen, R., & De Boeck, P. (2017). Modeling skipped and not-reached items using IRTrees. Journal of Educational Measurement, 54(3), 333–363. https://doi.org/10.1111/jedm.12147

Hemker, B. T., van der Ark, L. A., & Sijtsma, K. (2001). On measurement properties of continuation ratio models. Psychometrika, 66(4), 487–506. https://doi.org/10.1007/BF02296191

Henninger, M., & Plieninger, H. (2021). Different styles, different times: How response times can inform our knowledge about the response process in rating scale measurement. Assessment, 28(5), 1301–1319. https://doi.org/10.1177/1073191119900003

Jeon, M., & De Boeck, P. (2016). A generalized item response tree model for psychological assessments. Behavior Research Methods, 48(3), 1070–1085. https://doi.org/10.3758/s13428-015-0631-y

Jeon, M., & De Boeck, P. (2019). Evaluation on types of invariance in studying extreme response bias with an IRTree approach. British Journal of Mathematical and Statistical Psychology, 72(3), 517–537. https://doi.org/10.1111/bmsp.12182

Jeon, M., De Boeck, P., & van der Linden, W. (2017). Modeling answer change behavior: An application of a generalized item response tree model. Journal of Educational and Behavioral Statistics, 42(4), 467–490. https://doi.org/10.3102/1076998616688015

Jeon, M., Rijmen, F., & Rabe-Hesketh, S. (2014). Flexible item response theory modeling with FLIRT. Applied Psychological Measurement, 38(5), 404–405. https://doi.org/10.1177/0146621614524982

Khorramdel, L., & von Davier, M. (2014). Measuring response styles across the Big Five: A multiscale extension of an approach using multinomial processing trees. Multivariate Behavioral Research, 49(2), 161–177. https://doi.org/10.1080/00273171.2013.866536

Kim, N., & Bolt, D. M. (2021). A mixture IRTree model for extreme response style: Accounting for response process uncertainty. Educational and Psychological Measurement, 81(1), 131–154. https://doi.org/10.1177/0013164420913915

Kim, Y. (2020). Partial identification of answer reviewing effects in multiple-choice exams. Journal of Educational Measurement, 57(4), 511–526. https://doi.org/10.1111/jedm.12259

Masters, G. N. (1982). A Rasch model for partial credit scoring. Psychometrika, 47(2), 149–174. https://doi.org/10.1007/BF02296272

Meiser, T., Plieninger, H., & Henninger, M. (2019). IRTree models with ordinal and multidimensional decision nodes for response styles and trait-based rating responses. British Journal of Mathematical and Statistical Psychology, 72(3), 501–516. https://doi.org/10.1111/bmsp.12158

Mellenbergh, G. J. (1995). Conceptual notes on models for discrete polytomous item responses. Applied Psychological Measurement, 19(1), 91–100. https://doi.org/10.1177/014662169501900110

Plieninger, H. (2021). Developing and applying IR-tree models: Guidelines, caveats, and an extension to multiple groups. Organizational Research Methods, 24(3), 654–670. https://doi.org/10.1177/1094428120911096

Rizopoulos, D. (2007). ltm: An R package for latent variable modeling and item response analysis. Journal of Statistical Software, 17, 1–25. https://doi.org/10.18637/jss.v017.i05

Samejima, F. (1969). Estimation of latent ability using a response pattern of graded scores. Psychometrika, 34, 1–97. https://doi.org/10.1007/BF03372160

Samejima, F. (1995). Acceleration model in the heterogeneous case of the general graded response model. Psychometrika, 60(4), 549–572. https://doi.org/10.1007/BF02294328

Stocking, M. L., & Lord, F. M. (1983). Developing a common metric in item response theory. Applied Psychological Measurement, 7(2), 201–210. https://doi.org/10.1177/014662168300700208

Train, K. E. (2009). Discrete choice methods with simulation (2nd ed.). Cambridge University Press. https://doi.org/10.1017/CBO9780511805271

Tutz, G. (1990). Sequential item response models with an ordered response. British Journal of Mathematical and Statistical Psychology, 43(1), 39–55. https://doi.org/10.1111/j.2044-8317.1990.tb00925.x

Tutz, G. (1997). Sequential models for ordered responses. In Handbook of modern item response theory (pp. 139–152). https://doi.org/10.1007/978-1-4757-2691-6_8

van der Linden, W. J., Jeon, M., & Ferrara, S. (2011). A paradox in the study of the benefits of test-item review. Journal of Educational Measurement, 48(4), 380–398. https://doi.org/10.1111/j.1745-3984.2011.00151.x

Verhelst, N. D., Glas, C. A., & De Vries, H. (1997). A steps model to analyze partial credit. In Handbook of modern item response theory (pp. 123–138). https://doi.org/10.1007/978-1-4757-2691-6_7

Wainer, H., Bradlow, E. T., & Wang, X. (2007). Testlet response theory and its applications. Cambridge University Press. https://doi.org/10.1017/cbo9780511618765

Zhang, S., Bergner, Y., DiTrapani, J., & Jeon, M. (2021). Modeling the interaction between resilience and ability in assessments with allowances for multiple attempts. Computers in Human Behavior, 122, 106847. https://doi.org/10.1016/j.chb.2021.106847

Acknowledgements

This research was performed using the compute resources and assistance of the UW-Madison Center for High Throughput Computing (CHTC) in the Department of Computer Sciences. The CHTC is supported by UW-Madison, the Advanced Computing Initiative, the Wisconsin Alumni Research Foundation, the Wisconsin Institutes for Discovery, and the National Science Foundation, and is an active member of the OSG Consortium, which is supported by the National Science Foundation and the U.S. Department of Energy’s Office of Science.

Funding

No funding was received to assist with the preparation of this manuscript.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

1.1 Example Constructed Response Items Scored Using 0, 1, 2 Scoring, Trends in International Mathematics and Science Study (TIMSS)

Appendix B

1.1 Graphical Display of IRTree Models for Several Measurement Applications

IRTree model of answer changes (Jeon et al., 2017).

IRTree model of skipping behavior (Debeer et al., 2017).

IRTree model, three-category Likert rating scale item, verbal aggression dataset (Jeon and De Boeck, 2016).

Appendix C

Sensitivity of Scoring in the Presence of On-Demand Hints to Item-Specific Factors

Bolsinova et al. (2022) consider a multinomial logistic model for test administration conditions where examinees can request on-demand hints. The approach considers separate scores for four possible outcomes on each item: (1) incorrect response without hint (\(IH_-\)), (2) incorrect response with hint (\(IH_+\)), (3) correct response with hint (\(CH_+\)), and (4) correct response without hint (\(CH_-\)). The result is scoring that accommodates responses obtained both with and without hints.

Bolsinova et al. examine and compare a variety of models before arriving at a result that implies scoring \(IH_-\) and \(IH_+\) equivalently, with a higher score for \(CH_+\) and the highest score for \(CH_-\). In other words, incorrect answers, whether with or without hints, were found to be equally indicative of lower ability, a correct answer without a hint to be most indicative of high ability, and a correct answer with a hint to fall somewhere in between.
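To make the notion of a scoring function concrete, here is a minimal sketch, assuming a multinomial logistic (softmax) model in which each outcome category \(k\) has a score \(s_k\) multiplying \(\theta\) and an intercept \(c_k\), so that \(\Pr(k\mid\theta)\propto\exp(s_k\theta+c_k)\). The parameter values are hypothetical and only mirror the ordering described above (\(IH_- = IH_+ < CH_+ < CH_-\)); they are not estimates from Bolsinova et al.

```python
import math

# Outcome categories for one item, using the appendix notation.
CATEGORIES = ["IH-", "IH+", "CH+", "CH-"]

# Hypothetical category scores (slopes on theta) and intercepts. The scores
# mirror the ordering IH- = IH+ < CH+ < CH- described in the text.
SCORES = {"IH-": 0.0, "IH+": 0.0, "CH+": 1.0, "CH-": 2.0}
INTERCEPTS = {"IH-": 0.0, "IH+": -0.5, "CH+": 0.0, "CH-": 0.5}


def category_probs(theta, scores=SCORES, intercepts=INTERCEPTS):
    """Multinomial logistic (softmax) probability of each outcome category."""
    logits = {k: scores[k] * theta + intercepts[k] for k in CATEGORIES}
    top = max(logits.values())  # subtract the maximum for numerical stability
    weights = {k: math.exp(v - top) for k, v in logits.items()}
    total = sum(weights.values())
    return {k: w / total for k, w in weights.items()}


for theta in (-2.0, 0.0, 2.0):
    probs = category_probs(theta)
    print(theta, {k: round(p, 3) for k, p in probs.items()})
```

Because \(IH_-\) and \(IH_+\) share a score in this sketch, their odds ratio does not change with \(\theta\); in that sense the two categories are scored equivalently and carry the same information about \(\theta\).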

Although their scoring functions are derived from a multinomial logistic model, the paper also considers an IRTree model, which arguably provides the best psychological representation of the response process associated with on-demand hint selection. Under an IRTree representation, Stage 1 represents the hint-use decision (Yes, No), and Stages 2 and 3 represent the final item score (Correct, Incorrect) under the No Hint (Stage 2) and Hint (Stage 3) conditions. The different stages can be modeled using different latent traits or the same latent trait. For simplicity, we assume that the same latent trait \(\theta \) applies across stages.
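A minimal sketch of how such an IRTree maps onto the four outcome categories, assuming (hypothetically) Rasch-type logistic nodes, one latent trait \(\theta\) at every node, and a hint node on which lower \(\theta\) increases the chance of requesting a hint. Each observed category is the product of the branch probabilities along its path. The function name and difficulty values are illustrative; the value \(-1.5\) for the hint condition mirrors the difficulty used for the hint condition in the simulation of this appendix.

```python
import math


def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))


def irtree_outcome_probs(theta, b_hint=0.0, b_nohint=0.0, b_withhint=-1.5):
    """Outcome probabilities for one on-demand-hint item under an IRTree.

    Hypothetical node models, all driven by the same theta:
    - hint node: lower theta -> more likely to request a hint;
    - outcome nodes: Rasch-type correct-response probabilities, with the
      hint lowering difficulty (b_withhint < b_nohint).
    """
    p_hint = logistic(-(theta - b_hint))       # Pr(request hint)
    p_c_nohint = logistic(theta - b_nohint)    # Pr(correct | no hint)
    p_c_hint = logistic(theta - b_withhint)    # Pr(correct | hint)
    # Each category is the product of branch probabilities along its path.
    return {
        "IH-": (1 - p_hint) * (1 - p_c_nohint),
        "CH-": (1 - p_hint) * p_c_nohint,
        "IH+": p_hint * (1 - p_c_hint),
        "CH+": p_hint * p_c_hint,
    }


probs = irtree_outcome_probs(theta=0.0)
print({k: round(v, 3) for k, v in probs.items()})
```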

We seek here to show how the presence/absence of item-specific factors, despite not being directly observable, has the potential to significantly alter the scoring as estimated using a multinomial logistic model. This illustration demonstrates our claim (in the conclusion of the main paper) that the issue of item-specific factors transcends applications using sequential or IRTree models as analytic models, as this application uses a multinomial logistic model.

First, we note that real data, such as those analyzed by Bolsinova et al., show evidence of item-specific factors. As already noted in the main paper, Fig. 1 of Bolsinova et al. shows that, when evaluated against a single overall latent proficiency, most items have higher estimated difficulties when administered with hints than without hints. Unless hints tend to hurt item performance (which we assume is unlikely), such results suggest that respondents who request hints tend to have lower levels on an item-specific factor (\(\eta _j\)) than respondents who do not. This lower mean level of \(\eta _j\) among hint requesters is absorbed into the “with hint” difficulty estimate, making the items often appear more difficult for respondents who request hints.
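The selection argument above can be illustrated with a small simulation. This is a minimal sketch, assuming (hypothetically) that the probability of requesting a hint decreases in the item-specific factor according to \(\Pr(\text{hint}\mid\eta_j)=1/(1+\exp(\eta_j))\); the selection rule and function name are ours, chosen only to reproduce the pattern described, and are not the model of Bolsinova et al.

```python
import math
import random
import statistics


def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))


def eta_means_by_hint(n=50_000, seed=1):
    """Mean item-specific factor among hint requesters vs. non-requesters.

    Hypothetical selection rule: Pr(hint | eta) = logistic(-eta), i.e.,
    respondents low on the item-specific factor are more likely to ask.
    """
    rng = random.Random(seed)
    with_hint, without_hint = [], []
    for _ in range(n):
        eta = rng.gauss(0.0, 1.0)
        if rng.random() < logistic(-eta):
            with_hint.append(eta)
        else:
            without_hint.append(eta)
    return statistics.fmean(with_hint), statistics.fmean(without_hint)


m_hint, m_nohint = eta_means_by_hint()
# If Pr(correct) = logistic(theta + eta - b), a group whose eta averages
# m_hint behaves roughly as if facing difficulty b - m_hint (> b here).
print(round(m_hint, 2), round(m_nohint, 2))
```

The requester mean comes out negative and the non-requester mean positive, so a model that ignores \(\eta_j\) would roughly attribute the difference to a higher "with hint" difficulty.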

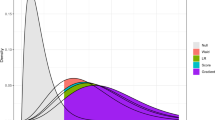

Second, we conduct a sensitivity analysis to show how the presence or absence of an item-specific factor can substantially alter the scoring function implied by a multinomial logistic model. Across scenarios with and without item-specific factors, our data-generating approach holds constant (1) the relative difficulties of the items when administered with and without hints, and (2) the relationship between the latent proficiency \(\theta \) and examinee requests for hints. Because IRTrees are psychologically plausible in this context, we conduct our sensitivity analysis using an IRTree model of the form in Fig. 12 as the data-generating model. We consider two scenarios: (1) item-specific factors are shared across stages (implying that the decision to request a hint is related to response correctness beyond the influence of \(\theta \)) and (2) item-specific factors are not shared across stages (the decision to request a hint is NOT related to the final outcome beyond effects accounted for by \(\theta \)).

IRTree model of on-demand hints (Bolsinova et al., 2022).

Under Scenario 1 (item-specific factors present), we simulate data from the following model for 10,000 respondents answering 10 items. We assume \(\theta \sim \mathcal {N}(0,1)\). At Stage 1, where the hint decision is made, we simulate independent \(\eta _j\sim \mathcal {N}(0,1)\) for each item and set \(b_j=0\) for all items. Using the item-specific factor model, we treat the hint decision (\(Y_j=1\) indicates a hint; \(Y_j=0\), no hint) as a stochastic outcome whose probability is maximized when the probability of a correct response without a hint is .5 and decreases as that probability moves away from .5 toward either 1.0 or 0. Consistent with the reasoning in Bolsinova et al. (2022), this implies that lower-\(\theta \) examinees are more inclined to request a hint when they have a higher item-specific factor \(\eta _j\) (i.e., they feel they can answer correctly with the support of a hint), whereas higher-\(\theta \) examinees are more inclined to request a hint when they have a lower item-specific factor \(\eta _j\) (i.e., they are unlikely to answer the item correctly without the hint).

Assuming a maximum probability of hint selection equal to .75, this produces a Stage 1 model of

At Stage 2 (hint not requested) and Stage 3 (hint requested), we model the probability of a correct response using the item-specific factor model (\(\Pr (X_j=1|Y_j=0,\theta ,\eta _j)\) for Stage 2, \(\Pr (X_j=1|Y_j=1,\theta ,\eta _j)\) for Stage 3), with all Stage 2 difficulties set to \(b_j=0\) and all Stage 3 difficulties set to \(b_j=-1.5\) to reflect the effect of the hint in reducing item difficulty.
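The Stage 1 to Stage 3 probabilities described above can be written as small functions. This is a minimal sketch under two assumptions of ours, not equations taken from the text: (1) the item-specific factor model is taken to be logistic, \(\Pr(X_j=1\mid\theta,\eta_j)=1/(1+\exp(-(\theta+\eta_j-b_j)))\); and (2) the Stage 1 hint probability is taken to be \(.75\times 4P(1-P)\), where \(P\) is the no-hint probability of a correct response; this form reaches its maximum of .75 at \(P=.5\) and falls toward 0 as \(P\) approaches 0 or 1. The function names are hypothetical.

```python
import math


def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))


def p_correct(theta, eta, b):
    """Assumed item-specific factor model: logistic(theta + eta - b)."""
    return logistic(theta + eta - b)


def p_hint(theta, eta, b_stage1=0.0, max_prob=0.75):
    """Assumed Stage 1 model: hint probability peaks at max_prob when the
    no-hint probability of a correct response is .5 and falls toward 0 as
    that probability approaches 0 or 1 (4P(1-P) has its maximum, 1, at P=.5)."""
    p = p_correct(theta, eta, b_stage1)
    return max_prob * 4.0 * p * (1.0 - p)


B_NO_HINT, B_HINT = 0.0, -1.5  # Stage 2 and Stage 3 difficulties from the text

# Lower-theta respondent: a higher eta raises the chance of asking for a hint.
print(p_hint(-1.5, 1.0) > p_hint(-1.5, -1.0))  # True
# Higher-theta respondent: a lower eta raises the chance of asking for a hint.
print(p_hint(1.5, -1.0) > p_hint(1.5, 1.0))  # True
# The hint lowers difficulty, so correct-response probability rises with a hint.
print(p_correct(0.0, 0.0, B_HINT) > p_correct(0.0, 0.0, B_NO_HINT))  # True
```

Under these assumed forms, the three checks reproduce the verbal claims made for Stage 1 and the difficulty reduction at Stage 3.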

The only feature distinguishing Scenario 2 from Scenario 1 is whether the \(\eta _j\) at Stage 1 is the same as that at Stages 2 and 3, or whether the \(\eta _{1j}\) at Stage 1 are independent of the \(\eta _{2j}=\eta _{3j}\) that apply at Stages 2 and 3. Because an item-specific factor with the same distribution is present within every stage in both scenarios, the psychometric phenomena occurring within each stage are identical across scenarios; the only difference is the unobservable dependence of the item-specific factors across stages induced by Scenario 1.
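Holding \(\theta\) fixed isolates the dependence that Scenario 1 adds beyond \(\theta\). The Monte Carlo sketch below estimates the probability of a correct response among hint requesters for a single item under each scenario. It assumes a logistic item-specific factor model, \(\Pr(X_j=1\mid\theta,\eta_j)=1/(1+\exp(-(\theta+\eta_j-b_j)))\), and a Stage 1 hint probability of \(.75\times 4P(1-P)\) with \(P\) the no-hint success probability; both functional forms and the function name are our assumptions, not the authors' equations.

```python
import math
import random

B_STAGE1 = 0.0   # Stage 1 difficulty (b_j = 0 in the text)
B_HINT = -1.5    # Stage 3 difficulty when a hint is requested (from the text)
MAX_HINT = 0.75  # maximum probability of requesting a hint (from the text)


def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))


def p_correct_given_hint(theta, shared_eta, n=100_000, seed=7):
    """Monte Carlo estimate of Pr(correct | hint requested, theta), one item.

    shared_eta=True  -> Scenario 1: one eta drives both the hint decision
                        and the final response.
    shared_eta=False -> Scenario 2: Stage 1 uses an independent eta.
    Assumed forms: logistic item-specific factor model, and
    Pr(hint) = MAX_HINT * 4P(1 - P) with P the no-hint success probability.
    """
    rng = random.Random(seed)
    n_hint = n_correct = 0
    for _ in range(n):
        eta_outcome = rng.gauss(0.0, 1.0)
        eta_stage1 = eta_outcome if shared_eta else rng.gauss(0.0, 1.0)
        p_no_hint = logistic(theta + eta_stage1 - B_STAGE1)
        if rng.random() < MAX_HINT * 4.0 * p_no_hint * (1.0 - p_no_hint):
            n_hint += 1
            # Stage 3 outcome (hint requested) uses the outcome-stage eta.
            if rng.random() < logistic(theta + eta_outcome - B_HINT):
                n_correct += 1
    return n_correct / n_hint


theta = -1.5
s1 = p_correct_given_hint(theta, shared_eta=True)   # Scenario 1
s2 = p_correct_given_hint(theta, shared_eta=False)  # Scenario 2
print(round(s1, 3), round(s2, 3))
```

At \(\theta=-1.5\), Scenario 2 gives a hint-conditional success rate near .5, because the independent Stage 1 factor carries no information about the Stage 3 \(\eta_j\). In Scenario 1, respondents who request a hint are selected toward higher \(\eta_j\), so their success rate is noticeably higher even though \(\theta\) is identical.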

For data generated under each scenario, we fit a multinomial logistic model and report the resulting scoring function in Table 3. The scoring function gives the empirically optimal score for each score category on each item according to the model.

Note that while the \(IH_+\) and \(CH_-\) scores are fixed for identification, we observe very different relative scoring of \(IH_-\) and \(CH_+\) depending on the presence or absence of item-specific factors. Scenario 1 (item-specific factors shared across stages) gives much more credit for \(CH_+\) relative to \(IH_-\), while Scenario 2 (item-specific factors not shared across stages) gives more credit to \(IH_-\) than to \(CH_+\). Importantly, the difference occurs despite the consistent influences of (1) \(\theta \) on hint selection and (2) \(\theta \) on response correctness across scenarios; the difference can thus be attributed to the role item-specific factors play in simultaneously affecting both hint requests and ultimate response correctness. In the context of the multinomial logistic model, such a result can be attributed to the way in which item-specific factors introduce correlations between the error terms of the category propensities, violating the model's independence of irrelevant alternatives (IIA) assumption.
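The IIA property can be stated in a generic random-utility form of the multinomial logit (see Train, 2009); the symbols \(U_{jk}\), \(V_{jk}\), \(s_{jk}\), and \(c_{jk}\) are our notation. When category propensities have independent, identically distributed Gumbel errors, the odds of any two categories depend only on those two categories:

```latex
U_{jk} = V_{jk} + \varepsilon_{jk}, \qquad V_{jk} = s_{jk}\,\theta + c_{jk},
\qquad
\Pr(k \mid \theta) = \frac{\exp(V_{jk})}{\sum_{l} \exp(V_{jl})},
\qquad
\frac{\Pr(k \mid \theta)}{\Pr(l \mid \theta)} = \exp\!\left(V_{jk} - V_{jl}\right).
```

If instead an item-specific factor enters the error terms of several categories, say \(\varepsilon_{jk}=\delta_k\eta_j+\varepsilon^{*}_{jk}\) with hypothetical loadings \(\delta_k\), those errors are correlated, the marginal model becomes a mixed logit, and the ratio of marginal probabilities for two categories is no longer determined by \(V_{jk}-V_{jl}\) alone.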

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Lyu, W., Bolt, D.M. & Westby, S. Exploring the Effects of Item-Specific Factors in Sequential and IRTree Models. Psychometrika 88, 745–775 (2023). https://doi.org/10.1007/s11336-023-09912-x

DOI: https://doi.org/10.1007/s11336-023-09912-x