Abstract

Due to the increasing demand for computing capability, graphics processing units (GPUs) and CUDA are used to build higher-performance computing environments. The GPU is essential to such an environment because of its high computing performance. CUDA, a parallel computing platform and programming model created by NVIDIA, improves performance through parallel constructs such as hierarchical thread blocks, shared memory, and barrier synchronization. GPUs and CUDA are also used in cloud computing because they can provide high-performance computing capability. Virtualization plays a very important role in cloud architecture. Virtual machines built on hosts with NVIDIA graphics cards can use CUDA to provide computing capability, so that a virtual machine has not only virtual CPUs but also physical graphics processors for computation. As a transmission medium, InfiniBand is faster than Ethernet. In the past, virtual machines could not use InfiniBand directly, but many virtualization platforms now support it, which improves transmission speed between virtual machines. In this work, we use multiple graphics processing units to build a high-performance computing cloud cluster. We then compare the performance of direct InfiniBand transmission with that of indirect InfiniBand transmission by running the High Performance Linpack (HPL) benchmark. The experiments use a well-known virtualization platform, VMware. Finally, we use GPU passthrough, InfiniBand virtualization, and 10 Gb Ethernet passthrough to improve the performance of the virtual cluster.
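High Performance Linpack reports throughput by dividing a fixed floating-point operation count for solving a dense N x N linear system by the measured wall-clock time, so two interconnect configurations can be compared at the same problem size. The sketch below illustrates that arithmetic, assuming HPL's standard operation count of 2/3 N^3 + 3/2 N^2. The function names, problem size, and timings are hypothetical illustrations, not the paper's code or measurements.

```python
def hpl_gflops(n: int, seconds: float) -> float:
    """Return HPL-style Gflops for an N x N dense solve that took `seconds`.

    Assumes HPL's operation count of 2/3*N^3 + 3/2*N^2
    (LU factorization plus the triangular solves).
    """
    if n <= 0 or seconds <= 0:
        raise ValueError("n and seconds must be positive")
    flops = (2.0 / 3.0) * n**3 + (3.0 / 2.0) * n**2
    return flops / seconds / 1e9


def efficiency(rmax_gflops: float, rpeak_gflops: float) -> float:
    """Fraction of theoretical peak (Rpeak) actually achieved (Rmax)."""
    return rmax_gflops / rpeak_gflops


# Hypothetical comparison: the same problem size run over two interconnect
# setups (e.g. direct vs. indirect InfiniBand). The timings are invented
# purely to show the calculation.
N = 40_000
direct = hpl_gflops(N, seconds=120.0)
indirect = hpl_gflops(N, seconds=150.0)
print(f"direct:   {direct:.1f} Gflops")
print(f"indirect: {indirect:.1f} Gflops")
print(f"ratio:    {direct / indirect:.2f}x")
```

Because the operation count depends only on N, the Gflops ratio between two runs at the same N is simply the inverse ratio of their run times. Dividing either result by the cluster's theoretical peak with `efficiency` gives the fraction of peak achieved.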

References

GPGPU (2017) http://gpgpu.org

Virtualization (2017) https://www.vmware.com/solutions/virtualization.html

Ghadekar PP, Chopade NB (2016) Content based dynamic texture analysis and synthesis based on SPIHT with GPU. J Inf Process Syst (JIPS) 12(1):46–56

Yang C-T, Wang H-Y, Ou W-S, Liu Y-T, Hsu C-H (2012) On implementation of gpu virtualization using pci pass-through. In: Proceedings of the 4th IEEE International Conference on Cloud Computing Technology and Science (CloudCom), Dec 2012

Moon Y, Yu H, Gil JM, Lim J (2017) A slave ants based ant colony optimization algorithm for task scheduling in cloud computing environments. Human-centric Comput Inf Sci (HCIS) 7(1):28

Ma YM, Lee CR, Chung YC (2012) InfiniBand virtualization on KVM. In: Proceedings of the 4th IEEE International Conference on Cloud Computing Technology and Science (CloudCom), Dec 2012

Subramoni H, Kandalla K, Vienne J, Sur S, Barth B, Tomko K, Mclay R, Schulz K, Panda DK (2011) Design and evaluation of network topology-/speed- aware broadcast algorithms for InfiniBand clusters. In: Proceedings of the 2011 IEEE International Conference on Cluster Computing, Sept 2011

Kandalla KC, Subramoni H, Vienne J, Raikar SP, Tomko K, Sur S, Panda DK (2011) Designing non-blocking broadcast with collective offload on InfiniBand clusters: a case study with HPL. In: Proceedings of the 19th IEEE Annual Symposium on High Performance Interconnects, (HotI 2011), Aug 2011

Vienne J, Chen J, Wasi-Ur-Rahman M, Islam NS, Subramoni H, Panda DK (2012) Performance analysis and evaluation of InfiniBand FDR and 40GigE RoCE on HPC and cloud computing systems. In: Proceedings of the 2012 IEEE 20th Annual Symposium on High-Performance Interconnects, (Hot 2012), Aug 2012

Islam NS, Rahman MW, Jose J, Rajachandrasekar R, Wang H, Subramoni H, Murthy C, Panda DK (2012) High performance RDMA-based design of HDFS over InfiniBand. In: Proceedings of the International Conference on High Performance Computing, Networking, Storage and Analysis (SC ’12), Nov 2012

Huh JH, Seo K (2016) Design and test bed experiments of server operation system using virtualization technology. Human-centric Comput Inf Sci (HCIS) 6(1):1

Mohd-Hilmi MN, Al-Laila MH, Malim H, Ahamed NH (2016) Accelerating group fusion for ligand-based virtual screening on multi-core and many-core platforms. J Inf Process Syst (JIPS) 12(4):724–740

Choi M, Park JH (2017) Feasibility and performance analysis of RDMA transfer through PCI express. J Inf Process Syst (JIPS) 13(1):95–103

Yang CT, Liu JC, Wang HY, Hsu CH (2014) Implementation of GPU virtualization using PCI pass-through mechanism. J Supercomput 68(1):183–213

Han TD, Abdelrahman TS (2011) hiCUDA: high-level GPGPU programming. IEEE Trans Parall Distrib Syst 22(1):78–90

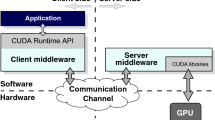

Duato J, Pea AJ, Silla F, Fernndez JC, Mayo R, Quintana-Ort ES (2011) Enabling CUDA acceleration within virtual machines using rCUDA. In: Proceedings of the 2011 18th International Conference on High Performance Computing (HiPC), Dec 2011

Duato J, Pena AJ, Silla F, Mayo R, Quintana-Orti ES (2011) Performance of CUDA virtualized remote GPUs in high performance clusters. In: Proceedings of the 2011 International Conference on Parallel Processing (ICPP), Sept 2011

Duato J, Pea AJ, Silla F, Mayo R, Quintana-Ort ES (2010) rCUDA: reducing the number of GPU-based accelerators in high performance clusters. In: Proceedings of the 2010 International Conference on High Performance Computing And Simulation (HPCS), Jun 2010

Shi L, Chen H, Sun J, Li K (2012) vCUDA: GPU-accelerated high-performance computing in virtual machines. IEEE Trans Comput 61(6):804–816

Gupta V, Gavrilovska A, Schwan K, Kharche H, Tolia N, Talwar V, Ranganathan P (2009) GViM: GPU-accelerated virtual machines. In: Proceedings of the 3rd ACM Workshop on System-level Virtualization for High Performance Computing (HPCVirt ’09), Mar 2009

Giunta G, Montella R, Agrillo G, Coviello G (2010) A GPGPU Transparent virtualization component for high performance computing clouds. In: D’Ambra P, Guarracino M, Talia D (eds) Proceedings of the 16th International Euro-Par Conference on Parallel Processing: Part I (EuroPar’10). Springer, Berlin, pp 379–391

Suzuki Y, Kato S, Yamada H, Kono K (2016) GPUvm: GPU virtualization at the hypervisor. IEEE Trans Comput 65(9):2752–2766

Hong CH, Spence I, Nikolopoulos DS (2017) FairGV: fair and fast GPU virtualization. IEEE Trans Parallel Distrib Syst 28(12):3472–3485

Kao C-Y, Hung W-S, Wang Y-L, Liu P, Wu J-J (2015) GPGPU virtualization system using nvidia kepler series GPU. J Internet Technol 16(2015):525–531

Prades J, Varghese B, Reao C, Silla F (2017) Multi-tenant virtual GPUs for optimising performance of a financial risk application. J Parallel Distrib Comput 108:28–44

Faraji I, Mirsadeghi SH, Afsahi A (2017) Exploiting heterogeneity of communication channels for efficient GPU selection on multi-GPU nodes. Parallel Comput 68:3–16

Escudero-Sahuquillo J, Garcia PJ, Quiles FJ, Maglione-Mathey G, Duato J (2018) Feasible enhancements to congestion control in InfiniBand-based networks. J Parallel Distrib Comput 112:35–52

Peltz Jr, P, Fields P (2016) HPC system acceptance: controlled chaos. In: Proceedings of the International Conference on High Performance Computing, Networking, Storage and Analysis (SC ’16), Nov 2016

Council HA (2009) Interconnect Analysis: 10GigE and InfiniBand in high performance computing. HPC Advisory Council, Technical Report

Acknowledgements

This work was sponsored by the Ministry of Science and Technology (MOST), Taiwan, under Grant Numbers 104-2221-E-029-010-MY3, 105-2634-E-029-001, and 105-2622-E-029-003-CC3.

About this article

Cite this article

Yang, CT., Chen, ST., Lo, YS. et al. On construction of a virtual GPU cluster with InfiniBand and 10 Gb Ethernet virtualization. J Supercomput 74, 6876–6897 (2018). https://doi.org/10.1007/s11227-018-2484-5

DOI: https://doi.org/10.1007/s11227-018-2484-5