Abstract

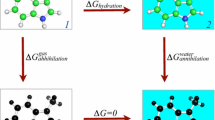

General multivariate distributions are notoriously expensive to sample from, particularly the high-dimensional posterior distributions in PDE-constrained inverse problems. This paper develops a sampler for arbitrary continuous multivariate distributions that is based on low-rank surrogates in the tensor train format, a methodology that has been exploited for many years for scalable, high-dimensional density function approximation in quantum physics and chemistry. We build upon recent developments of the cross approximation algorithms in linear algebra to construct a tensor train approximation to the target probability density function using a small number of function evaluations. For sufficiently smooth distributions, the storage required for accurate tensor train approximations is moderate, scaling linearly with dimension. In turn, the structure of the tensor train surrogate allows sampling by an efficient conditional distribution method since marginal distributions are computable with linear complexity in dimension. Expected values of non-smooth quantities of interest, with respect to the surrogate distribution, can be estimated using transformed independent uniformly-random seeds that provide Monte Carlo quadrature or transformed points from a quasi-Monte Carlo lattice to give more efficient quasi-Monte Carlo quadrature. Unbiased estimates may be calculated by correcting the transformed random seeds using a Metropolis–Hastings accept/reject step, while the quasi-Monte Carlo quadrature may be corrected either by a control-variate strategy or by importance weighting. We show that the error in the tensor train approximation propagates linearly into the Metropolis–Hastings rejection rate and the integrated autocorrelation time of the resulting Markov chain; thus, the integrated autocorrelation time may be made arbitrarily close to 1, implying that, asymptotic in sample size, the cost per effectively independent sample is one target density evaluation plus the cheap tensor train surrogate proposal that has linear cost with dimension. These methods are demonstrated in three computed examples: fitting failure time of shock absorbers; a PDE-constrained inverse diffusion problem; and sampling from the Rosenbrock distribution. The delayed rejection adaptive Metropolis (DRAM) algorithm is used as a benchmark. In all computed examples, the importance weight-corrected quasi-Monte Carlo quadrature performs best and is more efficient than DRAM by orders of magnitude across a wide range of approximation accuracies and sample sizes. Indeed, all the methods developed here significantly outperform DRAM in all computed examples.

Similar content being viewed by others

1 Introduction

We present an algorithm for efficient MCMC when the target distribution is a continuous multivariate distribution with known, tractable probability density function (PDF) \(\pi (x)\) defined for x in a region in \({\mathbb {R}}^d\). Beyond a fixed function approximation phase that has cost that scales linearly with dimension d, independent draws from \(\pi \) cost (a fraction more than) one function evaluation per independent sample. We give a basic form of the algorithm that generates random samples from \(\pi \) and variants that allow efficient quadrature using quasi-Monte Carlo constructions and/or importance weighting.

There are currently few general-purpose options for sampling from multivariate distributions with no special form, particularly if one is seeking a black box sampler that does not require case-specific tuning. We commonly encounter such distributions as the posterior distribution in a Bayesian analysis of a nonlinear inverse problem (Fox and Nicholls 1997; Fox et al. 2013; Dodwell et al. 2015) (also see the example in Sect. 5.3), or as the marginal posterior distribution over hyperparameters in a linear Gaussian inverse problem (Fox and Norton 2016), see also Norton et al. (2018). This work is motivated by the desire to compute inference in those examples, though the samplers and quadrature methods we present here are applicable to arbitrary continuous distributions, which could be non-Gaussian, or multimodal, and unnormalized; for example, see the example in Sect. 5.1. In target applications, the aim of sampling is often to implement Monte Carlo integration to compute summary statistics of the posterior distribution over an unobserved quantity of interest (QoI). For applications in inverse problems, the state variable is typically high-dimensional, thus requiring computation of high-dimensional quadratures (Stuart 2010), even when the QoI is low-dimensional.

Efficient black box samplers exist for some special classes of distributions. Most notable amongst multivariate distributions are multivariate normal (MVN) distributions, with fixed covariance or precision matrix, for which efficient, automatic sampling is available using stochastic variants of efficient algorithms for solving systems of equations in the covariance or precision matrix; methods based on direct solvers, using Cholesky factoring, can be found in Rue (2001) and Rue and Held (2005), while more recently samplers based on accelerated iterative solvers have been developed; see Fox and Parker (2017) and references therein. For non-Gaussian distributions, virtually all samplers are variants of Metropolis–Hastings (MH) MCMC with a random walk proposal, of which there are many variants (Brooks et al. 2011). These algorithms are geometrically convergent, at best, so they can be very slow for our target applications. Two black box versions are the delayed acceptance adaptive Metropolis (DRAM) (Haario et al. 2006) and the t-walk (Christen and Fox 2010). Both of these algorithms require multiple evaluations of the target PDF per effectively independent output sample, with that number growing roughly linearly or worse with dimension, even for simple distributions such as MVN [see Norton et al. (2018) for the cost of these algorithms].

Computational sampling from univariate distributions is effectively a solved problem due to developments of the adaptive rejection sampler (ARS) (Gilks and Wild 1992), such as independent doubly adaptive rejection Metropolis sampling (\(\hbox {IA}^2\)RMS) (Martino et al. 2015). These algorithms approximate the univariate PDF using simple functions, with the approximation improving (adaptively) as the algorithm progresses to achieve efficient sampling. The ARS, which is restricted to log-convex PDFs, builds a piecewise linear upper bound to the log of the PDF and hence bounds the PDF, to give an efficient proposal in a rejection sampler.Footnote 1 The \(\hbox {IA}^2\)RMS has no restriction on the PDF and uses a sequence of simple function approximations to the PDF or log PDF, such as piecewise constant or piecewise linear approximations that converge in distribution to the PDF as the algorithm progresses. Sampling from these approximations is easy in this univariate case, whether approximating the PDF or log PDF, using the inverse cumulative transformation method (Devroye 1986; Johnson 1987; Hörmann et al. 2004), with samples providing independence proposals to a Metropolis–Hastings accept/reject step that ensures the correct equilibrium distribution. Distributional convergence of the approximation implies that, asymptotic in sample size, just one PDF evaluation is required per independent sample.Footnote 2

The sampler developed here is inspired, to some extent, by \(\hbox {IA}^2\)RMS, in that it uses function approximation methods to approximate the multivariate PDF in a way that then allows cheap simulation from the approximation. Specifically, we use an interpolation in tensor train (TT) representation that may be made arbitrarily accurate, with sampling via the conditional distribution method that is the multivariate extension of inverse cumulative transformation sampling for univariate distributions (Johnson 1987). The conditional distribution method requires computing integrals of the multivariate PDF \(\pi (x_1,\ldots ,x_d)\), over subsets of variables \(x_k,\ldots ,x_d\) for \(k=2,\ldots ,d\), in order to obtain univariate marginal–conditional distributions. Per se, this problem is as difficult as the original quadrature. By using the TT decomposition (Oseledets 2011b), this integration can be performed efficiently, and each univariate marginal-conditional distribution can then be easily sampled using its inverse cumulative distribution function (CDF). Since the inverse cumulative transform is isoprobabilistic, the resulting samples are exact for the interpolated probability tensor, which is, however, an approximation to the original target PDF. We provide bounds on the sampling error based on the approximation errors of the TT decomposition and discretization, and thus are able to trade accuracy for compute time.

An accurate approximation to the PDF allows the almost exact samples to be used directly, while a less expensive approximation may be used to produce independence proposals for a MH accept/reject step that ‘corrects’ the distribution. The conditional distribution sampler may also be seeded with quasi-Monte Carlo points in the unit cube to implement quasi-Monte Carlo quadrature that is corrected by a multilevel MCMC scheme or by importance weighting. These variants are discussed in Sect. 4. We find that the combination of quasi-Monte Carlo seed points combined with importance-weighted quadrature gives the best performance in computed examples.

The attraction of approximating the PDF in TT format is that the computational cost of the construction, the storage requirements and the operations required for conditional distribution method sampling from the distributional approximation all scale linearly with dimension; see Sect. 3. In contrast, direct calculation or naïve representations lead to exponential cost for each of these tasks. This is a remarkable feature of the TT representation, and this is why the recent introduction of low-rank hierarchical tensor methods, such as TT (Oseledets and Tyrtyshnikov 2010; Oseledets 2011a, b, 2013), is a significant development in scientific computing for multidimensional problems.

Thus, the basic sampler we present here differs from \(\hbox {IA}^2\)RMS in two important aspects (beyond being able to handle multivariate distributions): we approximate the PDF and not the log PDF, and the sampler is not adaptive. The PDF is approximated because operations available on the TT representation that have cost that scales linearly with dimension include those required for performing the conditional distribution sampling (see Sect. 3), while it is not clear how to perform sampling when the log PDF is approximated in the multivariate case. Further, current methods for TT representation do not include convenient and cheap schemes for updating a TT representation using a single new evaluation. Hence, the algorithm we present consists of two steps: in a set-up phase, the TT approximation to \(\pi (\cdot )\) is constructed; then, that fixed approximation is used to generate samples. Hence, unlike the univariate samplers mentioned above, the TT approximation and samplers presented here are restricted to distributions with bounded, known support. While it is simple to define coordinate transformations \({\mathbb {R}}\mapsto [0,1]\) to represent a distribution on the (bounded) unit cube, efficient sampling still requires locating the appreciable support of the distribution; indeed, that is often a significant task when performing sampling. We do not consider such transformations here. Despite this restriction, the method advances sample-based inference in some problems of substantial interest, as shown in the computed examples in Sect. 5.

Approximation of the multivariate target distribution can be recommended for the following two cases: first, the quantity of interest may be very poorly representable in the TT format, and hence, direct tensor product integration of the QoI, as suggested in Eigel et al. (2018), is not possible. The most remarkable example is the indicator function, which occurs in the computation of the probability of an event. If the jump of the indicator function is not aligned to the coordinate axes, the cost of its TT approximation might grow exponentially in the number of variables. Then, Monte Carlo quadrature becomes the only possibility, with the quadrature error depending on the particular distribution of the samples. When the target density function admits a TT approximation with a modest storage, the cumulative transform method can produce optimally distributed samples at a low cost. Secondly, even when a fast growth of the TT storage prevents accurate computation of the density function, the TT surrogate distributed samples can still be used as proposals in the MH algorithm or with importance weighting. Even a crude approximation to the PDF with \(10\%\) error can produce the acceptance rate of \(90\%\) and the integrated autocorrelation time of 1.2, which is close enough to the best possible practical MCMC. The relationship between approximation error and acceptance rate is formalized in Sect. 4.2.

The paper is structured as follows: in Sect. 2, we review the conditional sampling method used to sample from the multivariate TT-interpolated approximation. Some background on the TT decomposition is presented in Sect. 3. A metropolized algorithm that uses the TT surrogate for sampling from the target distribution is presented in Sect. 4, as well as methods for unbiased quadrature that utilize a two-level algorithm, importance weighting and quasi-Monte Carlo seed points. Several numerical examples are presented in Sect. 5: Sect. 5.1 shows posterior estimation of a shock absorber failure probability; Sect. 5.2 demonstrates efficient sampling when the Rosenbrock function is the log target density, which is a synthetic ‘banana-shaped’ PDF that presents difficulties to random walk MCMC samplers; and Sect. 5.3 demonstrates posterior inference in a classical inverse problem in subsurface flow. In each of the numerical examples, scaling for the TT-based sampling and quadrature is shown, in comparison with DRAM (Haario et al. 2006), as well as (in Sect. 5.3) with direct quasi-Monte Carlo quadrature.

2 Conditional distribution sampling method

The conditional distribution method (Devroye 1986; Johnson 1987; Hörmann et al. 2004) reduces the task of generating a d-dimensional random vector into a sequence of d univariate generation tasks.

Let \(\left( X_1,\ldots ,X_d\right) \) be a continuous random vector with a probability density function \(\pi (x_1,\ldots ,x_d)\). To simplify the presentation, we assume in this section that \(\pi \) is normalized. The density function can be written as a product of conditional densities,

where \(\pi _k(x_k| x_1\ldots ,x_{k-1})\) is a conditional density given by

in terms of the marginal densities,

where \(k=1,\ldots ,d\). To simplify the notation we set \(p_{0}=1\). The conditional distribution method then generates \(\left( x_1,\ldots ,x_d\right) \sim \pi \) by sampling from each of the univariate conditional densities in turn:

This follows by straightforward manipulation of the definitions of marginal and conditional distributions.

To generate the univariate samples in the algorithm above, we use the inverse cumulative transformation method. Thus, our algorithm coincides with the inverse Rosenblatt transformation (Rosenblatt 1952) from the d-dimensional unit cube to the state space of \(\pi \). The standard conditional distribution method uses independent samples distributed uniformly in the unit cube as seeds for the transformation to produce independent draws from \(\pi \). This generalizes the inverse cumulative transformation method for univariate distributions. Later, we will also use quasi-random points to implement quasi-Monte Carlo quadrature for evaluating expectations with respect to \(\pi \).

When the analytic inverse of each univariate cumulative distribution function is not available, a straightforward numerical procedure is to discretize the univariate density on a grid, with approximate sampling carried out using a polynomial interpolation. In that case, the normalization, i.e. the denominator in (1), is not necessary as normalization of the numerical approximation is evaluated, allowing sampling from an unnormalized marginal density (2), directly.

The main difficulty with the conditional distribution method for multivariate random generation is obtaining all necessary marginal densities, which requires the high-dimensional integral over \(x_{k+1}\ldots x_d\) in (2). In general, this calculation can be extremely costly. Even a simple discretization of the argument of the marginal densities (2), or the conditional–marginal densities (1), leads to exponential cost with dimension.

To overcome this cost, we precompute an approximation of \(\pi (x_1,\ldots ,x_d)\) in a compressed representation that allows fast computation of integrals in (2) and subsequent sampling from the conditionals in (1). In the next sections, we introduce the TT decomposition and the related TT-cross algorithm (Oseledets and Tyrtyshnikov 2010) for building a TT approximation to \(\pi \). Moreover, we show that the separated form of the TT representation allows an efficient integration in (2), with cost that scales linearly with dimension.

3 TT approximation of the target distribution

Tensor decompositions trace back to the low-rank skeleton decompositions of matrices, which can in turn be computed by the singular value decomposition (SVD). Any matrix \(P \in {\mathbb {R}}^{n\times m}\) (e.g. a bivariate discrete distribution) admits a SVD \(P = U\varSigma V^\top \), where U, V are orthonormal matrices of singular vectors and \(\varSigma \) is a diagonal matrix of non-negative singular values. If the matrix is of low rank, \(r:=\mathrm {rank}~P<\min (m,n)\), the bottom right corner of \(\varSigma \) is zero, so we can truncate the SVD to \(U_r \varSigma _r V_r^\top \), where \(U_r,V_r\) contain only the first r columns and \(\varSigma _r\) contains only the principal \(r\times r\) submatrix. However, we can also approximate the given matrix P by a truncated decomposition of lower rank; the Eckart–Young theorem (Golub and Van Loan 2013) ensures the optimality of the rank-r SVD approximation among all possible rank-r approximations. Naturally, \(U_r\) and \(V_r\) contain only \((n+m)r\) elements in contrast to nm elements in P. This process can be extended to build low-rank decompositions of multivariate distributions, which we will describe next.

3.1 Interpolated TT decomposition

Throughout the paper, we approximate the target PDF by an interpolated TT decomposition (Oseledets 2011b),

that is a sum of products of the univariate functions \(\pi ^{(k)}_{\alpha _{k-1},\alpha _k}(x_k)\), \(k=1,2,\ldots ,d\) indexed by \(\alpha _k=1,\ldots ,r_k\). The \(r_k\), \(k=0,\ldots ,d\), are called TT ranks, with \(r_0=r_d=1\) (because \(\pi \) is scalar-valued), but \(r_1,\ldots ,r_{d-1}\) can be larger. The efficiency of this representation relies on the TT ranks being bounded by some (smallish) number r, as discussed later.

The TT decomposition natively represents a tensor, or d-dimensional array of values. The function approximation (3) is obtained by first approximating the tensor that results from discretizing the PDF \(\pi (x_1,\ldots ,x_d)\) by collocation on a tensor product of univariate grids. Let \(x_k^{i_k} \in {\mathbb {R}}\), with \(i_k=1,\ldots ,n_k\) and \(x_k^{1}< \cdots < x_k^{n_k}\), define independent univariate grids in each variable, and let \({{\hat{\pi }}}(i_1,i_2,\ldots ,i_d) = \pi (x_1^{i_1},x_2^{i_2},\ldots ,x_d^{i_d})\). The TT representation is

with TT blocks\({{\hat{\pi }}}^{(k)}\). Each TT block is a collection of \(r_{k-1}r_k\) vectors of length \(n_k\), i.e. \({{\hat{\pi }}}^{(k)}(i_k) = \pi ^{(k)}(x_k^{i_k})\) is a three-dimensional tensor of size \(r_{k-1} \times n_k \times r_k\). If we assume that all \(n_k \le n\) and \(r_k \le r\) for some uniform bounds \(n,r \in {\mathbb {N}}\), the storage cost of (4) can be estimated by \(dnr^2\) which is linear in the number of variables. In contrast, the number of elements in the tensor of nodal values \({{\hat{\pi }}}(i_1,\ldots ,i_d)\) grows exponentially in d and quickly becomes prohibitively large with increasing d.

The continuous approximation of \(\pi \) (3) is given by a piecewise polynomial interpolation of nodal values or TT blocks. For example, in the linear case we have

for \(x_k^{i_k} \le x_k \le x_k^{i_k+1},\) which induces the corresponding multilinear approximation \({{\tilde{\pi }}}\) of \(\pi \) in (3).

If the individual terms \(\pi ^{(k)}_{\alpha _{k-1},\alpha _k}(x_k)\) are normalized PDFs, the TT approximation in (3) may be viewed as a mixture distribution. However, the TT decomposition can be more general and may also include negative terms. Moreover, at some locations where \(\pi (x)\) is close to zero the whole approximation \({{\tilde{\pi }}}(x)\) may take (small) negative values. This will be circumvented by explicitly taking absolute values in the conditional distribution sampling method; see Sec. 4.1.

The interpolated TT approximation to \(\pi \) in (3) required several choices. First a coordinate system must be chosen, then an ordering of coordinates, then a rectangular region that contains the (appreciable) support of the PDF and then univariate grids for each coordinate within the rectangular region. Each of these choices affects the TT ranks and hence the efficiency of the TT representation in terms of storage size versus accuracy of the approximation that is also chosen; see later. In this sense, the sampler that we develop is not ‘black box’. However, as we demonstrate in the computed examples, an unsophisticated choice for each of these steps already leads to a computational method for sampling and evaluating expectations that is substantially more efficient than existing MCMC algorithms. Smart choices for each of these steps could lead to further improvements.

The rationale behind the independent discretization of all variables is the rapid convergence of tensor product Gaussian quadrature rules. If \(\pi (x)\) is analytic with respect to all variables, the error of the Gaussian quadrature converges exponentially in n. A straightforward summation of \(n^d\) quadrature terms would imply a cost of \({\mathcal {O}}(|\log \varepsilon |^{d})\) for accuracy \(\varepsilon \). In contrast, the TT ranks often depend logarithmically on \(\varepsilon \) under the same assumptions on \(\pi (x)\) (Tyrtyshnikov 2003; Khoromskij 2006; Schneider and Uschmajew 2013), leading to \({\mathcal {O}}(d|\log \varepsilon |^{3})\) cost of the TT integration, since the integration of the TT decomposition factorizes into one-dimensional integrals over the TT blocks. This can also be significantly cheaper than the \({\mathcal {O}}(\varepsilon ^{-2})\) cost of Monte Carlo quadrature.

In general, it is difficult to deduce sharp bounds for the TT ranks. Empirically, low ranks occur in the situation of ‘weakly’ dependent variables. For example, if \(x_1,\ldots ,x_d\) correspond to independent random quantities, the PDF factorizes into a single product of univariate densities, which corresponds to the simplest case, \(r=1\) in (3). Thus, a numerical algorithm that can robustly reveal the ranks is indispensable.

3.2 TT-cross approximation

A quasi-optimal approximation of \({{\hat{\pi }}}\) for a given TT rank, in the Frobenius norm, is available via the truncated singular value decomposition (SVD) (Oseledets 2011b). However, the SVD requires storage of the full tensor which is not affordable in many dimensions. A practical method needs to be able to compute the representation (3) using only a few evaluations of \(\pi \). A workhorse algorithm of this kind is the alternating TT-cross method (Oseledets and Tyrtyshnikov 2010). That builds on the skeleton decomposition of a matrix (Goreinov et al. 1997). It represents an \(n\times m\) matrix P of rank r as the cross (in MATLAB-like notation)

of r columns and rows, where \({\mathcal {I}}\) and \({\mathcal {J}}\) are two index sets of cardinality r such that \(P({\mathcal {I}},{\mathcal {J}})\) (the intersection matrix) is non-singular. If \(r\ll n,m\), this decomposition requires computing only \((n+m-r)r\ll nm\) elements of the original matrix. The SVD may be used for choosing the cross (5), though with greater cost, as noted above.

The TT-cross approximation may now be constructed by reducing the sequence of unfolding matrices \({{\hat{\pi }}}_k=[{\hat{\pi }}(i_1,\ldots ,i_k;i_{k+1},\ldots ,i_d)]\) that have the first k indices grouped together to index rows and the remaining indices grouped to index columns. We begin with \({{\hat{\pi }}}_1\).

We start with a set \({\mathcal {I}}_{>1} = \{(i_2^{\alpha _1},\ldots ,i_d^{\alpha _1})\}_{\alpha _1=1}^{r_1}\) of \(r_1\)\((d-1)\)-tuples such that \({{\hat{\pi }}}(:,{\mathcal {I}}_{>1})\) forms a ‘good’ basis for the rows of \({{\hat{\pi }}}_1\) (in the \(i_1\) variable) and choose a set \({\mathcal {I}}_{<2} = \{i_1^{\alpha _1}\}_{\alpha _1=1}^{r_1}\) of \(r_1\) row indices such that the volume (the modulus of the determinant) of the \(r_1 \times r_1\) submatrix \({{\hat{\pi }}}({\mathcal {I}}_{<2},{\mathcal {I}}_{>1})\) is maximized. This can be achieved in \({\mathcal {O}}(nr_1^2)\) operations using the maxvol algorithm (Goreinov et al. 2010). The first discrete TT block \({{\hat{\pi }}}^{(1)}\) is then assembled from the rectangular \(n \times r_1\) matrix \({{\hat{\pi }}}(:,{\mathcal {I}}_{>1}){{\hat{\pi }}}({\mathcal {I}}_{<2},{\mathcal {I}}_{>1})^{-1}\), and the reduced tensor \([{{\hat{\pi }}}_{>1}({\alpha _1}, i_2,\ldots ,i_d)] = [{{\hat{\pi }}}(i_1^{\alpha _1}, i_2,\ldots ,i_d)]\) is passed on to the next step of the TT-cross. In a practical algorithm, to ensure numerical stability all these operations are actually carried out using QR decompositions of the matrices (Oseledets and Tyrtyshnikov 2010).

In the kth step, we assume that we are given the reduction \({{\hat{\pi }}}_{>k-1}(\alpha _{k-1},i_k,\ldots ,i_d)\) from the previous step, as well as two sets \({\mathcal {I}}_{<k} = \{(i_1^{\alpha _{k-1}},\ldots ,i_{k-1}^{\alpha _{k-1}})\}_{\alpha _{k-1}=1}^{r_{k-1}}\) and \({\mathcal {I}}_{>k} = \{(i_{k+1}^{\alpha _k},\ldots ,i_d^{\alpha _k})\}_{\alpha _k=1}^{r_k}\) containing, resp., \(r_{k-1}\)\((k-1)\)-tuples and \(r_k\)\((d-k)\)-tuples. The unfolding tensor \([{{\hat{\pi }}}_{>k-1}({\alpha _{k-1}}, i_k;~{\mathcal {I}}_{>k})]\) can then be seen as a \(r_{k-1}n \times r_k\) rectangular matrix and the maxvol algorithm can be applied again to produce a set of row positions \(\{\alpha _{k-1}^{\alpha _k}, i_k^{\alpha _k}\}_{\alpha _k=1}^{r_k}\), which upon replacing \(\alpha _{k-1}^{\alpha _k}\) with the corresponding indices from \({\mathcal {I}}_{<k}\) leads to the next index set \({\mathcal {I}}_{<k+1}=\{(i_1^{\alpha _{k}},\ldots ,i_{k}^{\alpha _{k}})\}_{\alpha _{k}=1}^{r_{k}}\). The induction is completed by taking \({{\hat{\pi }}}^{(d)} = {{\hat{\pi }}}_{>d-1}\).

This process can be also organized in the form of a binary tree, which gives rise to the so-called hierarchical Tucker cross algorithm (Ballani and Grasedyck 2015). In total, we need \({\mathcal {O}}(dnr^2)\) evaluations of \(\pi \) and \({\mathcal {O}}(dnr^3)\) additional operations for the computation of the maximum volume matrices.

The choice of the univariate grids, \(x_k^{1}< \cdots < x_k^{n_k}\), and of the initial index sets \({\mathcal {I}}_{>k}\) can be crucial. In this paper, we found that a uniform grid in each coordinate was sufficient, with even relatively coarse grids resulting in efficient sampling algorithms; see the numerical examples for details. Given any easy to sample reference distribution (e.g. uniform or Gaussian), it seems reasonable to initialize \({\mathcal {I}}_{>k}\) with independent realizations of that distribution. (We could also expand the grids with reference samples, though we did not do that.) If the target function \(\pi \) admits an exact TT decomposition with TT ranks not greater than \(r_1,\ldots ,r_{d-1}\), and all unfolding matrices have ranks not smaller than the TT ranks of \(\pi \), the cross iteration outlined above reconstructs \({{\hat{\pi }}}\)exactly (Oseledets and Tyrtyshnikov 2010). This is still a rare exception though, since most functions have infinite exact TT ranks, even if they can be approximated by a TT decomposition with a small error and low ranks. Nevertheless, the cross iteration, initialized with slightly overestimated values \(r_1,\ldots ,r_{d-1}\), can deliver a good approximation, if a function is regular enough (Ballani and Grasedyck 2015; Dolgov and Scheichl 2019).

This might be not the case for localized probability density functions. For example, for a heavy-tailed function \((1+x_1^2+\cdots +x_d^2)^{-1/2}\) one might try to produce \({\mathcal {I}}_{>k}\) from a uniform distribution in a cube \([0,a]^d\) with a sufficiently large a. However, since this function is localized in an exponentially small volume \([0,\varepsilon ]^d\), uniform index sets deliver a poor TT decomposition, worse for larger a and d.

In this situation, it is crucial to use fine grids and refine the sets \({\mathcal {I}}_{<k},{\mathcal {I}}_{>k}\) by conducting several TT-cross iterations, going back and forth over the TT blocks and optimizing the sets by the maxvol algorithm. For example, after computing \({{\hat{\pi }}}^{(d)} = {{\hat{\pi }}}_{>d-1}\), we ‘reverse’ the algorithm and consider the unfolding matrices with indices \(\{(i_1^{\alpha _{d-1}},\ldots ,i_{d-1}^{\alpha _{d-1}})\}_{\alpha _{d-1}=1}^{r_{d-1}} = {\mathcal {I}}_{<d}\). Applying the maxvol algorithm to the columns of a \(r_{d-1} \times n\) matrix \({{\hat{\pi }}}^{(d)}\), we obtain a refined set of points \({\mathcal {I}}_{>d-1} = \{i_d^{\alpha _{d-1}}\}_{\alpha _{d-1}=1}^{r_{d-1}}\). The recursion continues from \(k=d\) to \(k=1\), optimizing the right sets \({\mathcal {I}}_{>k}\), while taking the left sets \({\mathcal {I}}_{<k}\) from the previous (forward) iteration. After several iterations, both \({\mathcal {I}}_{<k}\) and \({\mathcal {I}}_{>k}\) will be optimized to the particular target function, even if the initial index sets gave a poor approximation.

This adaptation of points goes hand in hand with the adaptation of ranks. If the initial ranks \(r_1,\ldots ,r_{d-1}\) were too large for the desired accuracy, they can be reduced. However, we can also increase the ranks by computing the unfolding matrix \(\left[ {{\hat{\pi }}}({\mathcal {I}}_{<k}, i_k;~i_{k+1}^{\alpha _k},\ldots ,i_{d}^{\alpha _k})\right] \) on some enriched index set \(\{(i_{k+1}^{\alpha _k},\ldots ,i_d^{\alpha _k})\}_{\alpha _{k}=1}^{r_{k}+\rho }\), by augmenting the original index set \({\mathcal {I}}_{>k}\) with an auxiliary set \({\mathcal {I}}_{>k}^{aux}\) and increasing the kth TT rank from \(r_k\) to \(r_k+\rho \). The auxiliary set can be chosen at random (Oseledets 2011a) or using a surrogate for the error (Dolgov and Savostyanov 2014). The pseudocode of the entire TT-cross method is listed in Algorithm 1. For uniformity, we let \({\mathcal {I}}_{<1} = {\mathcal {I}}_{>d} = \emptyset \).

Systematically using the enrichment scheme, we can even employ a different approach moving away from truncating ranks. Instead, we start with a low-rank initial guess and increase the ranks until the desired accuracy is met. We have found that this approach is often more accurate in numerical experiments. The relative cost of the two approaches depends on the application.

4 Sampling algorithms based on TT Surrogates

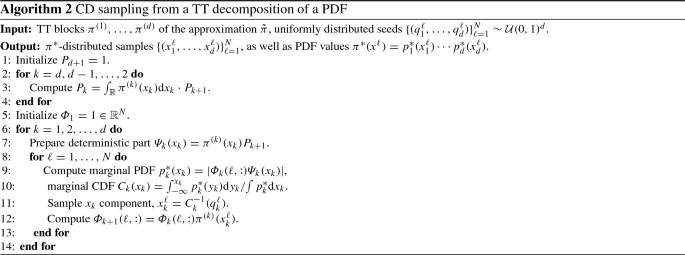

4.1 Conditional distribution sampling (TT-CD)

One of the main contributions of this paper is to show that conditional distribution method is feasible, and efficient, once a PDF has been put into TT format. This section presents those calculations.

First, we describe the computation of the marginal PDFs \(p_k\), defined in (2), given \(\pi \) in a TT format (3). Note that integrals over the variable \(x_p\) appear in all conditionals (2) with \(k<p\). The TT format allows to compute the \(r_{k-1} \times 1\) vector \(P_k\) required for evaluating the marginal PDF \(p_{k-1}\) by the following algorithm.

Since \(\pi ^{(k)}(x_k) \in {\mathbb {R}}^{r_{k-1} \times r_k}\) for each fixed \(x_k\), the integral \(\int \pi ^{(k)}(x_k) \text {d}x_k\) is a \(r_{k-1} \times r_k\) matrix, where \(\alpha _{k-1}\) is the row index and \(\alpha _k\) is the column index. Hence, we can write Line 3 as the matrix–vector product,

Assuming n quadrature points for each \(x_k\), and the uniform rank bound \(r_k \le r\), the asymptotic complexity of this algorithm is \({\mathcal {O}}(dnr^2)\).

The first marginal PDF is approximated by \(p_1^*(x_1) = |\pi ^{(1)}(x_1) P_2|\). We take the absolute value because the TT approximation \({{\tilde{\pi }}}\) (and hence, \(\pi ^{(1)}(x_1) P_2\)) may be negative at some locations. In the kth step of the sampling procedure, the marginal PDF also requires the first \(k-1\) TT blocks, restricted to the components of the sample that are already determined,Footnote 3

However, since the loop goes sequentially from \(k=1\) to \(k=d\), the sampled TT blocks can be accumulated in the same fashion as the integrals \(P_k\). Again, we take the absolute value to ensure positivity. The overall method for drawing N samples is written in Algorithm 2. Note that if \({{\tilde{\pi }}}\) is negative at any points, the actual density \(\pi ^{*}\) at \(x^\ell \), which is the product of marginal PDFs computed in each step, may slightly differ from \({{\tilde{\pi }}}\).

The error induced by taking the absolute values in Line 9 of Algorithm 2 is of the order of the TT approximation error. The approximate marginal probability \(\tilde{p}_k(x_k) = \varPhi _k(\ell ,:) \varPsi _k(x_k)\) is produced from \({{\tilde{\pi }}}(x)\) by integration; hence, if \(\pi (x)-{{\tilde{\pi }}}(x) = {\mathcal {O}}(\varepsilon )\) due to the TT approximation, we also have \(|{{\tilde{p}}}_k(x_k) - p_k(x_k)| \le C \varepsilon \Vert p_k\Vert _\infty \) for the marginals, for some \(C>0\) independent of \(\varepsilon \), where \(\Vert p_k\Vert _\infty := {\text {ess}}\sup _{\xi _k} p_k(\xi _k)\). Then, for all \(x_k\) that satisfy \(p_k(x_k) \ge C \varepsilon \Vert p_k\Vert _\infty \), we have

Hence, \({{\tilde{p}}}_k\) can only be negative where \(p_k\) is small and we have \(-C \varepsilon \Vert p_k\Vert _\infty \le {{\tilde{p}}}_k(x_k) \le 0\). The error in taking the modulus in Line 9 of Algorithm 2 can then be estimated as follows:

The sample-independent prefactor of the marginal PDF in Line 7 requires \({\mathcal {O}}(dnr^2)\) operations. The marginal PDF in Line 9 can then be computed with \({\mathcal {O}}(dNnr)\) cost. The cost of the CDF computation in Line 10 depends on the quadrature scheme used. For a piecewise spline approximation or for the barycentric Gauss formula, the cost for both \(C_k\) and \(C_k^{-1}\) is \({\mathcal {O}}(dNn)\). The complexity of computing the conditional PDF values \(\varPhi _{k+1}\) depends on how \({{\tilde{\pi }}}\) is interpolated onto \(x_k^\ell \). Global Lagrange interpolation requires \({\mathcal {O}}(nr^2)\) cost per sample, whereas local interpolation is independent of n, requiring only \({\mathcal {O}}(r^2)\) operations. In our numerical experiments, we have found piecewise linear interpolation on a uniform grid to be sufficient. In summary, the total complexity is

4.2 Metropolis–Hastings correction (TT-MH)

For the TT-CD sampling procedure in Algorithm 2 to be fast, the TT ranks r should be as small as possible. Since the joint PDF is typically a complicated multivariate function, its TT ranks may grow fast with the increasing accuracy. On the other hand, low accuracy is typically sufficient if we ‘correct’ the distribution using the Metropolis–Hastings (MH) algorithm to ensure that the samples are distributed according to the target distribution \(\pi \). Thus, we first propose to use a coarse TT approximation together with TT-CD sampling as independence proposals in a MH algorithm.Footnote 4

When the current state is x and the new proposal is \(x'\), the next state is determined by the stochastic iteration that first computes the Metropolis–Hastings ratio

and the proposal is accepted with probability

putting the new state \(x=x'\); otherwise, \(x'\) is rejected and the chain remains at x. Note that in the actual computations we calculate \(h(x,x')\) using the log-densities \(\log \pi (x)\) and \(\log \pi ^{*}(x) = \log p_1^*(x_1) +\cdots +\log p_d^*(x_d)\) (see Algorithm 2) for stability reasons.

As efficiency indicators of this MH algorithm for estimating the expected value \({\mathbb {E}}_\pi g\) of some functional g(x), we consider the acceptance rate and the integrated autocorrelation time. In this section, we study how they depend on the approximation error in the PDF. Throughout we must assume that \(\pi \) is absolutely continuous with respect to \(\pi ^*\), which guarantees reversibility with respect to \(\pi \) (Tierney 1998), and that we can evaluate the importance ratio \(w(x)=\pi (x)/\pi ^*(x)\). We require that \(w^*\equiv \Vert w \Vert _\infty < \infty \), which is equivalent to uniform geometric convergence (and ergodicity) of the chain (Roberts and Rosenthal 2011). (The essential supremum may be taken with respect to \(\pi \) or \(\pi ^*\).)

To simplify the presentation in this subsection, we assume again (without loss of generality) that the density is normalized.

Lemma 1

Suppose that \(\pi (x)\) is normalized and that the mean absolute error in the TT-CD sampling density satisfies

Then the rejection rate is bounded by \(\varepsilon \), i.e.

where the expectation is taken over the chain.

Proof

Using ergodicity of the chain,

Since \(1-\alpha \le |1-h|\),

where the second step uses the triangle inequality. Integrating both sides with respect to x and \(x'\), we obtain the claim of the lemma. \(\square \)

This lemma indicates that the rejection rate decreases proportionally to \(\varepsilon \), where \(\varepsilon \) is the total error due to approximating \(\pi \) by a low-rank TT decomposition \({\tilde{\pi }}\), interpolating discrete values of \({{\tilde{\pi }}}\) on a grid, and taking the absolute values in Algorithm 2, Line 9.

Lemma 1 assumed a mean absolute error. We need the stronger statement of local relative error, which is \(w^*<\infty \), to bound the integrated autocorrelation time (IACT) (Wolff 2004), defined as

where \(\rho _{gg}(t)\) is the autocorrelation coefficient for the chain in statistic g at lag t. Defined like this, \(\tau \ge 1\) can be considered as a reduction factor in the efficiency of a particular MCMC chain compared to an ideal independent chain, asymptotically as the length of the chain goes to infinity. Note that \(w^*<\infty \) implies that TT-MH is uniformly ergodic, but conversely the MCMC is not even geometrically ergodic if \(w^*=\infty \) (Mengersen and Tweedie 1996, Thm. 2.1).

Lemma 2

When \(w^*<\infty \), for any \(g\in L^2(\pi )\),

where \(a=1-1/w^*\).

Proof

Without loss of generality, we may consider \(g\in L_0^2(\pi )\), i.e. \(E_{\pi }[g]=0\) (see, for example, Mira and Geyer 1999). Consider the transition kernel

(This is the chain that proposes from \(\pi \) and accepts with probability \((1-a)\).) \(P_{a}\) has a simple spectrum, consisting of 1, with right eigenvector \({\mathbf {1}}\), and a for the orthogonal compliment. Hence, the asymptotic variance in a central limit theorem (CLT) for the chain in \(g\in L_0^2(\pi )\) induced by \(P_a\) may be evaluated using the spectral measure (see, for example, Mira and Geyer 1999; Häggström and Rosenthal 2007), which reads \({\mathcal {E}}_g(S)=\delta _a(S)\) in this case, giving IACT equal to \((1+a)/(1-a)\). The transition kernel for the TT-MH chain is (Smith and Tierney 1996, Thm. 1 & Lem. 3)

with \(\lambda \) given by Smith and Tierney (1996, Eq (5)). Since \(\min (1/w(x),1/w(y))\ge 1/w^*\), P dominates \(P_{a}\), in the sense of Peskun ordering (Tierney 1998; Mira and Geyer 1999), i.e. the off-diagonal terms in P are greater or equal than those in \(P_{a}\), and hence, the IACT using P is less or equal than that using \(P_a\) (Mira and Geyer 1999, Thm 3.4). \(\square \)

For discrete state spaces, the result in Lemma 2 follows directly from Mira (2001, Eqn. (2.1)), while one could argue that this is sufficient for practical computation since computers are finite-dimensional.

The TT-cross method tends to introduce a more or less uniform error of magnitude \(\varepsilon \)on average. For regions where \(\pi (x)\gg \varepsilon \), this leads to a bounded importance ratio \(w(x) \le 1 + {\mathcal {O}}(\varepsilon )\). When \(\pi (x) \ll \varepsilon \), we will typically have \(\pi ^*(x) = {\mathcal {O}}(\varepsilon )\) and \(w(x)<1\). However, if \(\pi (x) \approx \varepsilon \) and a negative error of order \(\varepsilon \) is committed, the two may cancel, resulting in a small \(\pi ^*(x)\) and consequently in a large w(x). Numerical experiments demonstrate that \(w^*-1\) can indeed be much larger than the \(L_1\)-norm error used in Lemma 1 (see Fig. 1). However, these cancellations (and hence the equality in \(\min (1/w(x),1/w(y))\ge 1/w^*\)) seem to be rare (see Fig. 2). Moreover, the practical IACT tends to be much smaller than the upper bound given by Lemma 2.

4.3 QMC samples and importance weights (TT-qIW)

Due to the central limit theorem, the rate of convergence of the statistical error of a Monte Carlo estimator for \({\mathbb {E}}_\pi g\), as the number of samples \(N \rightarrow \infty \), is limited to \({\mathcal {O}}(N^{-1/2})\). The IACT of the chain induced by a MH sampler, such as the TT-MH sampler in the previous section, only affects the constant in this estimate.

Thus, it is tempting to use more structured quadrature points to obtain a better convergence rate. For example, the TT approximation of \(\pi \) provides the possibility to reduce the inherent multivariate integrals to a sequence of univariate integrals, as we did when forming the marginal distributions in Sec. 4.1, and use, for example, Gauss quadrature. Another option is to note that the TT-CD map is also well defined for other seed points, such as those taken from a quasi-Monte Carlo (QMC) rule, that is, \(\{(q_1^\ell ,\ldots ,q_d^\ell )\}_{\ell =1}^{N}\) in Algorithm 2 are taken from a QMC lattice in \([0,1]^d\), rather than i.i.d. samples from \({\mathcal {U}}(0,1)^d\). Under certain assumptions on the smoothness of the quantity of interest, the QMC quadrature can give an error that converges with order \(N^{-1}\) instead of \(N^{-1/2}\) when \(N \rightarrow \infty \) (Niederreiter 1978; Dick et al. 2013). However, both those approaches provide estimates for \({\mathbb {E}}_{\pi ^{*}} g\), which are biased due to the TT approximation, and this bias cannot be ‘corrected’ using a MH step, as for i.i.d. seeds. On the other hand, there are no suitable convergence results for MH algorithms based on QMC proposals.

A classical way to remove the bias in the estimate is via importance re-weighting. Writing the expectation as an integral, then multiplying and dividing by the approximate density function, gives

where \(Z=\int \pi (x) \text {d}x\) is the normalization constant and \(w(x) = \pi (x)/\pi ^{*}(x)\) is the importance weight. That is, the expectation of g with respect to \(\pi \) equals the expectation of the weighted function g(x)w(x) with respect to the approximate density \(\pi ^{*}\). The normalization constant can be rewritten as \( Z = \int w(x) \pi ^{*}(x) \text {d}x\,. \) Similarly to (7), we calculate \(w(x) = \exp \left( \log \pi (x) - \log \pi ^{*}(x)\right) \) using log-densities in practice.

Thus, given a set of samples \(\{x^{\ell }\}_{\ell =1}^{N} \sim \pi ^{*}\) produced using the TT-CD algorithm, either from a set of i.i.d. samples on \([0,1]^d\) or from a QMC lattice, we compute

Note that, since \(x^{\ell } \sim \pi ^{*}\), the weight \(w (x^{\ell }) < \infty \) with probability 1, and hence, the importance quadrature (10) is well defined. The convergence depends on the distance between \(|\pi ^{*}-\pi |\) and on the choice of samples \(x^{\ell }\). Most importantly, if the seeds \(\{q^\ell \}\) for the TT-CD samples \(\{x^{\ell }\}\) in Algorithm 2 are chosen according to a randomized QMC rule, and the integrand g(x)w(x) is sufficiently smooth, we can expect a rate of convergence close to \({\mathcal {O}}(N^{-1})\), the estimator is unbiased, and under the right smoothness assumptions, the convergence rate is dimension independent (Dick et al. 2013).

4.4 Multilevel acceleration

Following recent works on multilevel MCMC (Hoang et al. 2013; Dodwell et al. 2015), we can also use the (cheap) surrogate \(\pi ^{*}\) as a type of control variate to achieve variance reduction in the estimator.

In addition to \(\pi ^*\), we may also have a cheap ‘surrogate’ \(\tilde{g}\) for the integrand g. For example, in Sect. 5.3, we will build a TT surrogate \({{\tilde{u}}}_h(\theta )\) of the FE solution \(u_h(\theta )\) of the stochastic diffusion equation, as a function of the stochastic parameters \(\theta \), that allows for a cheap approximation \({{\tilde{g}}}(\theta ) = \phi ({{\tilde{u}}}_h)\) of any functional \(g(\theta )=\phi (u_h)\) of the PDE solution, without having to solve the PDE for each sample. Otherwise, let \({{\tilde{g}}} = g\).

To exploit the multilevel ideas, we observe that

As in the previous section, given a set of \(N_0\) samples \(\{x^{\ell }\}_{\ell =1}^{N_0}\sim \pi ^{*}\) produced using the TT-CD algorithm, the first term in (11) and (12) can be estimated by

Since the expected value in (13) is with respect to \(\pi ^{*}\), no MH correction is necessary. Moreover, we can use, as in Sect. 4.3, QMC seed points \(\{q^\ell \} \subset [0,1]^d\) for the TT-CD samples \(\{x^{\ell }\}\) in Algorithm 2, leading to a much faster convergence of the estimator with respect to \(N_0\). Alternatively, if both \(\tilde{\pi }\) and \({{\tilde{g}}}\) are represented in the TT format, (13) can be computed without sampling as the dot product of TT tensors as in Eigel et al. (2018).

In fact, if the evaluation of \({{\tilde{g}}}\) is significantly faster than the evaluation of g, as in the stochastic diffusion problem below, the cost of estimating the first term in (11) and (12) becomes entirely negligible.

To estimate the second term in (11) and (12), we now proceed as in Sects. 4.2 and 4.3, respectively.

First consider a set of i.i.d. samples \(\{x^{\ell }\}_{\ell =1}^{N_1} \sim \pi ^*\), computed using Algorithm 2, and let \(\{x_{\text {MH}}^{\ell }\}_{\ell =1}^{N_1}\) be the Markov chain of samples distributed according to \(\pi \) after Metropolis–Hastings ‘correction’ of \(\{x^{\ell }\}_{\ell =1}^{N_1}\) using the acceptance probability defined in (7). We can define the following unbiased estimator:

If \(\pi ^{*}\approx \pi \) and \({{\tilde{g}}} \approx g\), the pairs of samples \(({{\tilde{g}}}(x^\ell ), g(x_{\text {MH}}^{\ell }))\) are strongly, positively correlated, and thus, the variance of \(g(x^\ell _{\text {MH}}) - {{\tilde{g}}}(x^{\ell })\) is much smaller than the variance of \(g(x^\ell _{\text {MH}})\). As a consequence, the number of samples \(N_1\) necessary to achieve a prescribed statistical error can be chosen significantly smaller than in Sect. 4.2.

Alternatively, consider now the second term in (12) and let \(\{x^{\ell }\}_{\ell =1}^{N_1}\) be obtained via Algorithm 2 from a set of \(N_1\) randomized QMC seed points \(\{q^\ell \}_{\ell =1}^{N_1} \subset [0,1]^d\). Then we can define the following unbiased estimator:

Again, if \(\pi ^{*}\approx \pi \) and \({{\tilde{g}}} \approx g\) then \(w\approx 1\) and the variance of \(g(x^\ell ) w(x^\ell ) / {{\tilde{Z}}} - {{\tilde{g}}}(x^\ell )\) is small, so that the number of samples \(N_1\) can be chosen significantly smaller than the number N of samples in (10). Moreover, since \({{\tilde{Z}}} = 1 + \frac{1}{N_1} \sum _{\ell =1}^{N_1} (w(x^\ell ) - 1)\) and \({\mathbb {V}}_{\pi ^{*}}[w - 1]\) is small, a small value for \(N_1\) is also sufficient for the calculation of \({{\tilde{Z}}}\) in (10). If \(g w/ {{\tilde{Z}}} - {{\tilde{g}}}\) is sufficiently smooth, the rate of convergence of the sampling error as \(N_1 \rightarrow \infty \) should again be close to \({\mathcal {O}}(N_1^{-1})\). However, in contrast to the estimator in (10), we do not observe that better rate of convergence for the difference estimator in (15).

It would be possible to further optimize the complexity of the estimators in (13), (14) and (15) by a judicious choice of the TT accuracy \(\varepsilon \), as well as the numbers of samples \(N_0\) and \(N_1\), There is of course also scope for full multilevel estimators as in Hoang et al. (2013); Dodwell et al. (2015). In particular, the values of \(N_0\) and \(N_1\) can be determined by an adaptive greedy procedure (Kuo et al. 2017), which compares empirical variances and costs of the two levels and doubles \(N_\ell \) on the level that has the maximum profit. However, we will not consider this further and leave it for future works.

5 Numerical examples

5.1 Shock absorber reliability

In this section, we demonstrate our algorithm on a problem of reliability estimation of a shock absorber. The time to failure of a type of shock absorber depends on some environmental conditions (covariates) such as humidity and temperature. We use data (O’Connor and Kleyner 2012) on the distance (in kilometres) to failure for 38 vehicle shock absorbers. Since there were no values of any covariates in this example, the values of D covariates were synthetically generated from the standard normal distribution as this would correspond to the case in which the covariates have been standardized to have zero mean and variance equal to one. The accelerated failure time regression model (Meeker and Escobar 1998) is widely used for reliability estimation with covariates. We use an accelerated failure time Weibull regression model, which was described as reasonable for these data in Meeker and Escobar (1998), where the density of time to failure is of the form

and where \(\theta _1,\theta _2\) are unknown scale and shape hyperparameters, respectively. The covariates are assumed to affect the failure time distribution only through the scale parameter \(\theta _1\), via a standard logarithmic link function, that is

where \(x_k\) are the covariates. The \(D+2\) unknown parameters \(\beta _0,\ldots ,\beta _{D}\) and \(\theta _2\) must be inferred from the observation data on the covariates \(x_k\) and the failure times, which in this example are subject to right censoring (marked with \(^+\)). The set \(T_f\) of failure times is given by:

6700 | 6950\(^+\) | 7820\(^+\) | 8790\(^+\) | 9120 |

9660\(^+\) | 9820\(^+\) | 11310\(^+\) | 11690\(^+\) | 11850\(^+\) |

11880\(^+\) | 12140\(^+\) | 12200 | 12870\(^+\) | 13150 |

13330\(^+\) | 13470\(^+\) | 14040\(^+\) | 14300 | 17520 |

17540\(^+\) | 17890\(^+\) | 18420\(^+\) | 18960\(^+\) | 18980\(^+\) |

19410\(^+\) | 20100 | 20100\(^+\) | 20150\(^+\) | 20320\(^+\) |

20900 | 22700 | 23490\(^+\) | 26510 | 27410\(^+\) |

27490 | 27890\(^+\) | 28100\(^+\) |

To perform Bayesian inference on the unknown parameters, we use the prior specifications in Gutierrez-Pulido et al. (2005), namely an s-normal Gamma distribution \(\pi _0(\beta _0,\ldots ,\beta _D, \theta _2)\) given by

where \(\gamma = 2.2932\), \(\alpha = 6.8757\), \(m_0 = \log (30796)\), \(\sigma _0^2 = 0.1563\), \(m_1=\cdots =m_D=0\), \(\sigma _1=\cdots =\sigma _D=1\) and Z is the normalization constant. The parameter ranges

are large enough to treat the probability outside as negligible.

The (unnormalized) Bayesian posterior density function is given by a product of Weibull probabilities, evaluated at each observation in \(T_f\), and the prior distribution, i.e.

where

The formula for the censored case arises from the fact that the contribution of a censored measurement is the probability that t exceeds the measured value, that is, \(P(t\ge t^{+}|\theta _1,\theta _2) = \int _{t^{+}}^{\infty } f(t|\theta _1,\theta _2)dt\). We introduce n uniform discretization points in \(\beta _0,\ldots ,\beta _D\) and \(\theta _2\) and compute the TT-cross approximation of the discretized density \(\pi (\beta _0,\ldots ,\beta _D,\theta _2)\).

We consider two quantities of interest, the right \(95\%\) mean quantile and the right \(95\%\) quantile of the mean distribution, i.e.

respectively. The nonlinear constraint in the computation of the second quantile is solved by Newton’s method. To estimate the quadrature error, we perform 32 runs of each experiment and compute an average relative error over all runs, i.e.

where \(\iota \) and \(\ell \) enumerate different runs.

The error in the mean quantile is estimated similarly, and then, the average of those two error estimates is used in all our convergence studies. We used quantiles as the quantity of interest in order to illustrate that the TT surrogate captures the tails correctly.

5.1.1 Accuracy of TT approximation and CD sampler

We start by analysing the TT-MH sampling procedure, as described in Sect. 4.2. First, we consider how the errors in \({{\tilde{\pi }}}\) due to the tensor approximation and discretization propagate into the quality of the MCMC chain produced by the MH algorithm, i.e. the rate of rejections and the integrated autocorrelation time. The chain length is always set to \(N=2^{20}\), and the results are averaged over 32 runs. We choose a relatively low dimensionality \(D=2\), since it allows us to approximate \(\pi \) up to a high accuracy.

In Fig. 1, we vary the number of grid points n, fixing the relative stopping tolerance for the TT-cross algorithm at \(\delta =10^{-5}\), as well as benchmarking the algorithm for different thresholds \(\delta \), fixing \(n=512\). We track the relative empirical standard deviation of the TT approximation,

that can be computed exactly in the TT representation, as well as an importance-weighted QMC approximation to the \(L_1\)-norm error used in Lemma 1,

As shown in Lemma 1, the rejection rate is expected to be proportional to the approximation error in \(L_1\) norm, as this error goes to zero. The TT approximation is computed on a tensor grid with n vertices and uses linear interpolation to evaluate \({{\tilde{\pi }}}\) at intermediate values. Thus, it can be expected that the convergence of the interpolation error, as \(n \rightarrow \infty \), should be of \({\mathcal {O}}(n^{-2})\), provided \(\pi \) is sufficiently smooth. Figure 1 (top left) shows that the rejection rate converges with \({\mathcal {O}}(n^{-2})\), suggesting that this is the case here. Figure 1 (bottom left) also suggests that the rejection rate is proportional to the TT approximation error when it is greater than the interpolation error.

The behaviour of the importance ratio and the integrated autocorrelation time (IACT) is more complicated. The IACT \(\tau \) is tracked in Fig. 1 as well, while the essential supremum \(w^*\) of the importance ratio is shown in Table 1. The TT-cross algorithm tries to reduce the average approximation error. Pointwise relative error, however, is not guaranteed to be bounded. Although \(w^* \rightarrow 1\) as \(\delta \rightarrow 0\), it is orders of magnitude larger than \({\mathcal {E}}_{L_1}\). Regardless, Lemma 2 seems to give a too pessimistic estimate for the IACT, as the actual value \(\tau -1\) is much smaller than \(w^*-1\) and behaves similarly to the rejection rate. The reason for this is that very large values of w are quite rare, as we show in Fig. 2.

The complexity of the TT-cross algorithm (in terms of both the number of evaluations of \(\pi \) and the computational time) grows only very mildly (sublinearly) with \(\delta \) and n (notice the log-polynomial scale in Fig. 1, right). This makes the TT approach also well scalable for high accuracies.

5.1.2 Convergence studies and comparison with DRAM

Now we investigate the convergence of the quantiles and compare TT-MH with the delayed rejection adaptive Metropolis (DRAM) algorithm (Haario et al. 2006). The initial covariance for DRAM is chosen to be the identity matrix. In order to eliminate the effect of the burn-in period, we do not include the first N/4 elements of the DRAM chain in the computation of the quantiles. However, we will study the actual burn-in time empirically to have a fairer comparison of the ‘set-up cost’ of the two methods.

First, in Table 2, we fix \(D=6\) covariates and vary the discretization grid n and the TT approximation threshold \(\delta \). We present the rejection rates and the IACTs for TT-MH, with \(n=12\), 16 and 32 grid points in each direction, using values of \(\delta =0.5\) and \(\delta =0.05\), as well as for DRAM. In addition, we also give the set-up cost in terms of numbers of evaluations of \(\pi \), i.e. the number of points needed to construct the TT approximation via the TT-cross algorithm for TT-MH and the burn-in in DRAM. The latter is estimated as the point of stabilization of 6 moments of \(\beta \) and \(\theta _2\), approximated by averaging over \(2^{14}\) random initial guesses. The coarsest TT approximation requires about \(4 \cdot 10^4\) evaluations, whereas DRAM needs a burn-in of about \(5 \cdot 10^4\) steps.

Next, in Fig. 3 (left) we show the estimate \({\mathcal {E}}_q\) of the quadrature error defined in (17) for the two quantities of interest in (16), versus the total number N of samples in the MCMC chain, which is varied from \(2^{10}\) to \(2^{23}\). We see that both MH methods (i.e. TT-MH and DRAM) converge with a rate of \(N^{-1/2}\), as expected. To keep the set-up cost of the TT approximation low, we only consider fairly crude TT approximations (as in Table 2). However, all our approximations deliver a smaller sampling error for TT-MH than for DRAM when measured against the number of samples and an even greater reduction when plotted against CPU time (Fig. 3, right). More accurate TT approximations require more evaluations of \(\pi \) during the set-up in TT-cross, up to \(2.5 \cdot 10^5\) for \(\delta =0.05\) and \(n=32\). This set-up cost is clearly visible in the vertical offset of the curves in Fig. 3 (right). Notice that only N/4 initial DRAM samples are discarded in Fig. 3, which is much smaller than the actual burn-in for \(N<10^5\). The reason for this is that we want to compare the methods based on their best possible performance for the desired quadrature error \({\mathcal {E}}_q\), regardless of the actual distribution the samples belong to for small N. Therefore, DRAM has zero set-up cost in Fig. 3 (right) in the limit of \(N\rightarrow 1\). In spite of that, TT-MH is much faster than DRAM for the same number of evaluations, which yields a significant difference in terms of the total CPU time.

There are several reasons for this. For higher TT accuracies, the gains are mainly due to the significantly lower IACT of TT-MH, leading to a much better statistical efficiency of the MCMC chain. But also for low TT accuracies, the IACT of the TT-MH algorithm is still a fraction of that for DRAM. A further reason is the vectorization that is exploited in TT-cross, where a block of \({\mathcal {O}}(nr^2)\) samples is evaluated in each step. In DRAM, the function needs to be evaluated point by point in order to perform the rejection. Therefore, the number of distinct calls to \(\pi \) in TT-cross is much smaller than N, reducing the corresponding overhead in MATLAB. In compiled languages (C, Fortran) on a single CPU, the difference may be less significant. However, parallel implementations will also benefit from the blocking, especially when each sample is expensive. More accurate TT approximations are worthwhile to compute if a highly accurate estimate of the expected value is required, since in that case the length of the MCMC chain will dominate the number of samples in the set-up phase.

In Fig. 3, we also present results with the TT-qIW approach described in Sec. 4.3, where the approximate density \(\pi ^{*}\) is used as an importance weight and where the expected value and the normalizing constant are estimated via QMC quadrature. In particular, we use a randomized rank-1 lattice rule with product weight parameters \(\gamma _k = 1/k^2\). The generating vector was taken from the file lattice-39102-1024-1048576.3600, available at http://web.maths.unsw.edu.au/~fkuo/. Due to the non-smooth dependence of quantiles on the covariates, the rate of convergence for TT-qIW with respect to N is not improved in this example, but in absolute terms it consistently outperforms TT-MH, leading to even bigger gains over DRAM.

Finally, we fix the TT and the MCMC parameters to \(n=16\), \(\delta =0.05\) and \(N=2^{22}\) and vary the number of covariates D, and hence, the total dimensionality \(d=D+2\). In Fig. 4, we show the error in the quantiles, the number of evaluations of \(\pi \), as well as the autocorrelation times and TT ranks. We see that the TT ranks are almost independent of d, and the TT-MH approach remains more efficient than DRAM over a wide range of dimensions.

5.2 Rosenbrock function

As a benchmark example with particularly long tails (and hence potentially large autocorrelation times in MCMC), we consider the PDF induced by the Rosenbrock function \(\pi (\theta ) \propto \exp \left( -\frac{1}{2} r(\theta )\right) \), where

The dimension d can be increased arbitrarily. The parameters for the TT approximation are chosen to be \(\delta =3 \cdot 10^{-3}\) and \(n=128\) for \(\theta _1,\ldots ,\theta _{d-2}\), \(n=512\) for \(\theta _{d-1}\) and \(n=4096\) for \(\theta _d\). Each \(\theta _k\) is restricted to a finite interval \([-a_k,a_k]\), where \(a_d=200\), \(a_{d-1}=7\) and \(a_k = 2\) otherwise.

Figure 5 shows certain projections of \(N=2^{17}\) sampling points produced with TT-MH and DRAM for \(d=32\). We see that although the density function is reasonably compact and isotropic in the first variables, it is highly concentrated in the last variable. DRAM requires a significant number of burn-in iterations, which are shown in Fig. 5 (middle and right) as the red cloud of samples that are not overlapped by blue ones. In order to eliminate the burn-in in DRAM, we compute \(2^{20}\) samples and discard the first quarter of the chain. The difference is even more significant if we look at the integrated autocorrelation times in Table 3. For TT-MH, the IACT stays close to 1 for all considered dimensions, while for DRAM it exceeds 100 for larger d.

5.3 Inverse diffusion problem

Finally, we use our new TT-CD sampler to explore the posterior distribution arising from a Bayesian formulation of an infinite-dimensional inverse problem, as formalized in Stuart (2010).

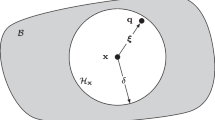

Let X and V be two infinite-dimensional function spaces—it is sufficient to consider separable Banach spaces—and let \(\mathcal G: X \rightarrow V\) be a (measurable and well posed) forward map. Consider the inverse problem of finding \(\kappa \in X\), an input to \({\mathcal {G}}\), given some noisy observations \(y \in {\mathbb {R}}^{m_0}\) of some functionals of the output \(u \in V\). In particular, we assume a (measurable) observation operator \(Q: V \rightarrow {\mathbb {R}}^{m_0}\), such that

where \(\eta \in {\mathbb {R}}^{m_0}\) is a zero-mean random variable that denotes the observational noise. The inverse problem is clearly under-determined when \(m_0 \ll \text {dim} (X)\), and in most mathematical models, the inverse of the map \({\mathcal {G}}\) is ill-posed.

We do not consider prior modelling in any detail and present here a stylized Bayesian formulation designed to highlight the computational structure and cost. We simply state a prior measure \(\mu _0\), to model \(\kappa \) in the absence of observations y. The posterior distribution \(\mu ^y\) over \(\kappa | y\), the unknown coefficients conditioned on observed data, is given by Bayes’ theorem for general measure spaces,

where the left-hand side is the Radon–Nikodym derivative, L is the likelihood function and Z is the normalizing constant (Stuart 2010).

For computing, we have to work with a finite-dimensional approximation \(\kappa _d \in X_d \subset X\) of the latent field \(\kappa \) such that \(\text {dim} (X_d) = d \in {\mathbb {N}}\) and define \(\kappa _d\) as a deterministic function of a d-dimensional parameter \(\theta := (\theta _1,\ldots ,\theta _d)\). Typically, we require that \(\kappa _d \rightarrow \kappa \) as \(d \rightarrow \infty \), but we will not focus on that convergence here and instead fix \(d \gg 1\). To be able to apply the TT representation, we set \(\theta _k \in [a_k,b_k]\) with \(a_k < b_k\), for all \(k=1,\ldots ,d\), and then, \(\kappa _d\) maps the tensor product domain \(\varGamma _d := \prod _{k=1}^d [a_k,b_k]\) to \(X_d\). We denote by \(\pi _0(\theta )\) and \(\pi (\theta ) = \pi ^y(\theta )\) the probability density functions of the pullback measures of the prior and posterior measures \(\mu _0\) and \(\mu ^y\) under the map \(\kappa _d: \varGamma _d \rightarrow X_d\), respectively, and specify that map so that \(\pi _0(\theta ) = 1/|\varGamma _d|\), i.e. the prior distribution over \(\theta \) is uniform.

We can then compute TT approximations of the posterior density \(\pi (\theta )\) as in the previous examples by using Bayes’ formula (21), i.e.

Consider some quantity of interest in the form of another functional \(F: V \rightarrow {\mathbb {R}}\) of the model output \(\mathcal G(\kappa _d)\). The posterior expectation of F, conditioned on measured y, can be computed as

5.3.1 Stylized elliptic problem and parametrization

As an example, we consider the forward map defined by the stochastic diffusion equation

with Dirichlet boundary conditions \(u|_{x_1=0}=1\) and \(u|_{x_1=1}=0\), as well as homogeneous Neumann conditions otherwise (Scheichl et al. 2017), which depends on an unknown (parametrized) diffusion coefficient \(\kappa _d \in X_d \subset L_\infty (D)\).

For this example, we take each of the parameters \(\theta _k\), \(k=1,\ldots ,d,\) to be uniformly distributed on \([-\sqrt{3},\sqrt{3}]\). Then, for any \(\theta \in \varGamma _d\) and \(x = (x_1,x_2) \in D\), the logarithm of the diffusion coefficient at x is defined by the following expansion:

The expansion is similar to the one proposed in Eigel et al. (2014) and mimics the asymptotic behaviour of the Karhunen–Loève expansion of random fields with Matérn covariance function and smoothness parameter \(\nu \) in two dimensions, in which the norms of the individual terms decay algebraically with the same rate. However, realizations do not have the same qualitative features and we use it purely to demonstrate the computational efficiency of our new TT samplers.

To discretize the partial differential equation (PDE) in (23), we tessellate the spatial domain D with a uniform rectangular grid \(T_h\) with mesh size h. Then, we approximate the exact solution \(u \in V := H^1(D)\) that satisfies the Dirichlet boundary conditions with the continuous, piecewise bilinear finite element (FE) approximation \(u_h \in V_h\) associated with \(T_h\). To find \(u_h\), we solve the resulting Galerkin system using a sparse direct solver.

For this example, we take the observations to be \(m_0\) noisy local averages of the PDE solution over some subsets \(D_{i} \subset D\), \(i=1,\ldots ,m_0\), i.e.

We take observation noise to be additive, distributed as i.i.d. zero-mean Gaussian noise with variance \(\sigma _n^2\), giving the likelihood function,

and posterior distribution \(\pi (\theta ) = \frac{1}{Z} L(\theta )\), with the normalization constant \(Z = \int \nolimits _{[-\sqrt{3},\sqrt{3}]^d} L(\theta ) d\theta .\)

In our experiments, the sets \(D_{i}\) are square domains with side length \(2/(\sqrt{m_0}+1)\), centred at the interior vertices of a uniform Cartesian grid on \(D=[0,1]^2\) with grid size \(1/(\sqrt{m_0}+1)\), that form an overlapping partition of D. We consider an academic problem with synthetic data for these \(m_0\) local averages from some ‘true’ value \(\theta _*\). In particular, we evaluate the observation operator at \(\theta _* =(\theta _0,,\theta _0,\ldots ,\theta _0)\), for some fixed \(0 \not =\theta _0 \in (-\sqrt{3},\sqrt{3})\), and synthesize data by then adding independent normally distributed noise \(\eta _* \sim {\mathcal {N}}(0,\sigma _n^2 I)\), such that \(y = Q({\mathcal {G}}(\theta _*)) + \eta _*\).

We consider two quantities of interest. The first is the average flux at \(x^1=1\). This can be computed as Teckentrup et al. (2013)

where \(w_h \in V_h\) is any FE function that satisfies the Dirichlet conditions at \(x^1=0\) and \(x^1=1\). This formula for the average flux is a smooth function of \(\theta \), which ensures a fast convergence for QMC-based quadrature rules, with an order close to \(N^{-1}\). However, we also consider the discontinuous indicator function \({\mathbb {I}}_{F(\theta )>1.5}\), to estimate the probability that the average flux in (25) becomes larger than 1.5, i.e.

As we shall see, the non-smoothness of \({\mathbb {I}}_{F(\theta )>1.5}\) reduces the order of convergence of the QMC quadrature to the basic Monte Carlo rate \(N^{-1/2}\). For the same reason, this function lacks a low-rank TT decomposition, and hence, we cannot compute its expectation using a tensor product quadrature directly. The mean field flux \(F|_{\theta =0}=1\) (in the units used) and the probability \(P_{F>1.5}\) are both of the order of 0.1.

The default parameters used in the stochastic model and for function approximation are shown in Table 4. We will make it clear when we change any of those default parameters.

The TT approximation \({{\tilde{\pi }}}\) can be computed directly by the TT-cross algorithm, as in the previous examples. For a TT tolerance of \(\delta = 0.1\), this requires about \(10^4-10^5\) evaluations of \(\pi \). However, since here the computation of each value of \(\pi (\theta )\) involves the numerical solution of the PDE (23) this leads to a significant set-up time. This set-up time can be hugely reduced, by first building a TT approximation \({{\tilde{u}}}_h(\cdot ,\theta )\) of the FE solution \(u_h(\cdot ,\theta )\) and then using \({{\tilde{u}}}_h(\cdot ,\theta )\) in the TT-cross algorithm for building \({{\tilde{\pi }}}\) instead of \(u_h(\cdot ,\theta )\).

It was shown in Dolgov and Scheichl (2019) that a highly accurate approximation of \(u_h(\cdot ,\theta )\) in the TT format can be computed using a variant of the TT-cross algorithm, the alternating least-squares cross (ALS-cross) algorithm, that only requires \({\mathcal {O}}(r)\) PDE solves, if the TT ranks to approximate \(u_h(\cdot ,\theta )\) up to the discretization error are bounded by r. Moreover, the rank grows only logarithmically with the required accuracy. We will see, below, that \(r < 100\) for this model problem for \(h=2^{-6}\), significantly reducing the number of PDE solves required in the set-up phase.

Since the observation operator Q consists of integrals of the PDE solution over subdomains of the spatial domain D, when applied to a function given in TT format it can be evaluated at a cost that is smaller than r PDE solves on \(T_h\) without any increase in the TT rank (Dolgov and Scheichl 2019). Finally, to compute an approximation of \(\pi \) via the TT-cross algorithm we use the significantly cheaper TT surrogate \(Q({{\tilde{u}}}_h(\cdot ,\theta ))\) in each evaluation of \(\pi (\theta )\) instead of computing the actual FE solution \(u_h(\cdot ,\theta )\). Since \({{\tilde{u}}}_h(\cdot ,\theta )\) is accurate up to the FE discretization error in \(V_h\)—which in this model problem for \(h=2^{-6}\) is of \({\mathcal {O}}(10^{-4})\)—this has essentially no impact on the accuracy of the resulting TT approximation \({{\tilde{\pi }}}\) (especially for TT accuracy \(\delta =0.1\)).

5.3.2 Set-up cost and accuracy of TT approximation

As in the shock absorber example, we test how the quality of the Markov chain produced by TT-MH depends on the error between \({{\tilde{\pi }}}\) and \(\pi \). In Fig. 6 (left), we show the rejection rates, IACT and error estimates (18), (19) for different stopping tolerances \(\delta \) and grid sizes n. In the top plot, we fix \(\delta =10^{-3}\) and vary n, while in the bottom plot, n is fixed to 512 and \(\delta \) is varied. The other model parameters are set according to Table 4, and the chain length is \(N=2^{16}\). The behaviour is as in the shock absorber example and as predicted in Lemma 1.

In Fig. 6 (right), we demonstrate the benefit of first computing a TT approximation \({{\tilde{u}}}_h(\cdot ,\theta )\) of the FE solution \(u_h(\cdot ,\theta )\), as described in the previous subsection. We see that the TT ranks to approximate \(u_h\) are significantly smaller than the TT ranks to approximate the density \(\pi \) to the same accuracy. In both cases, the TT ranks are independent of n, for n sufficiently large, and they show only a logarithmic dependence on the TT accuracy \(\delta \). However, the growth is significantly faster for \(\pi ^{*}\) than for \({{\tilde{u}}}_h\). For the default parameters in Table 4, the ranks of \({{\tilde{u}}}_h(\cdot ,\theta )\) and \(\pi ^{*}(\theta )\) are 26 and 82, respectively, and the numbers of function evaluations to build the TT surrogates are about 100 and about 53,000, respectively. The advantage is that with the surrogate \({{\tilde{u}}}_h\) available those 53,000 evaluations of \(\pi \), using \({{\tilde{u}}}_h\) instead of \(u_h\) in the data misfit functional, are significantly cheaper and the PDE only has to be actually solved 100 times.

5.3.3 Convergence of the expected quantities of interest

In this section, we investigate the convergence of estimates of the expected value of the quantities of interest and the computational complexity of the different methods. For the TT approximation of the density function \(\pi \), we fix \(n=32\) and \(\delta =0.1\). For the TT approximation of \(u_h\), we choose a TT tolerance of \(10^{-4}\), which is equal to the discretization error for \(h=2^{-6}\).

To compute the posterior expectations of the QoIs in (22), we compare two approaches that use our TT-CD sampling procedure:

- [TT-MH]

(Sec. 4.2) Metropolis–Hastings with independence proposals sampled via the TT-CD sampling procedure from the approximate distribution \(\pi ^{*}\).

- [TT-qIW]

(Sec. 4.3) Using the approximate density \(\pi ^{*}\) as an importance weight and estimating the expected value and the normalizing constant via a randomized QMC lattice rule.

Moreover, we test the two-level versions of both methods described in Sect. 4.4.

To benchmark the TT approaches, we use again DRAM with the initial covariance chosen to be the identity and discard the first N/4 samples. However, as a second benchmark, we also compute the posterior expectation directly by applying QMC to the two terms in the ratio estimate (QMC-rat), as defined in (22) and analysed in Scheichl et al. (2017). The QMC method in TT-qIW is again the randomized rank-1 lattice rule with product weights \(\gamma _k = 1/k^2\) and generating vector from the file lattice-39102-1024-1048576.3600 at http://web.maths.unsw.edu.au/~fkuo/. In order to reduce random fluctuations in the results, we average 16 runs of each approach in each experiment. The rejection rate and the IACT for TT-MH and DRAM are shown in Table 5. Notice that the autocorrelation times of DRAM for the coordinates \(\theta \) and for the quantity of interest F differ significantly, since the latter coordinates have a weaker influence on F.

In Fig. 7, we present the relative errors in the quantities of interest versus the chain length N together with reference slopes. For the expected value \({\mathbb {E}}_{\pi }[F]\) of the flux in Fig. 7 (left), the QMC ratio estimator (QMC-rat) converges with a rate close to linear in 1/N, so that it becomes competitive with the TT approaches for higher accuracies. However, by far the most effective approach is TT-qIW, where the TT approximation \(\pi ^{*}\) is used as an importance weight in a QMC ratio estimator. Asymptotically, the convergence rate for TT-qIW is also \({\mathcal {O}}(N^{-1})\) for \({\mathbb {E}}_{\pi }[F]\) and the effectivity of the estimator is almost two orders of magnitude better than that of DRAM. All the other TT-based approaches and DRAM converge, as expected, with the standard MC order \(N^{-1/2}\). For the non-smooth indicator function employed in \(P_{F>1.5}\) in Fig. 7 (right), the relative performance of the different approaches is similar, although the QMC-rat estimator now also converges with the MC rate of order \({\mathcal {O}}(N^{-1/2})\). Somewhat surprisingly, the TT-qIW method seems to converge slightly better than \({\mathcal {O}}(N^{-1/2})\) also for \(P_{F>1.5}\) and outperforms all other approaches by an order of magnitude.

The results in Fig. 7 are all computed for the same spatial resolution of the forward model. In a practical inverse problem, for the best efficiency, all errors (due to truncation, discretization and sampling) are typically equilibrated. Thus, it is useful to estimate the spatial discretization error. We achieve this by computing the posterior expectations of the QoIs on three discretization grids (with TT-qIW and \(N=2^{18}\)) and by using these to estimate the error via Runge’s rule. The estimated error for \(h=2^{-6}\) is plotted as a horizontal dashed line in Fig. 7. We see that with the TT-qIW method \(N=2^{13}\) samples are sufficient to obtain a sampling error of the order of the discretization error for \({\mathbb {E}}_{\pi }[F]\), while all other approaches require at least \(N=2^{17}\) samples (up to \(N > 2^{21}\) for DRAM).

In addition to DRAM, we also consider a version of the Metropolis-adjusted Langevin (MALA) algorithm with adapted empirical covariance matrix as a preconditioner (Atchadé 2006). However, the latter components of the gradient are rather small and give little information about the geometry. This makes the MALA convergence comparable to that of DRAM. Moreover, each step of MALA is about twice more expensive than a step of DRAM due to the computation of the gradient of \(u_h(\cdot ,\theta )\) in \(\nabla \log \pi (\theta )\) in addition to \(u_h\).