Abstract

Higuchi’s method of determining fractal dimension is an important, well-used, research tool that, compared to many other methods, gives rapid, efficient, and robust estimations for the range of possible fractal dimensions. One major shortcoming in applying the method is the correct choice of tuning parameter (kmax); a poor choice can generate spurious results, and there is no agreed upon methodology to solve this issue. We analyze multiple instances of synthetic fractal signals to minimize an error metric. This allows us to offer a new and general method that allows determination, a priori, of the best value for the tuning parameter, for a particular length data set. We demonstrate its use on physical data, by calculating fractal dimensions for a shell model of the nonlinear dynamics of MHD turbulence, and severe acute respiratory syndrome coronavirus 2 isolate Wuhan-Hu-1 from the family Coronaviridae.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Since the seminal work of Mandelbrot and Van Ness [27], the characterization of data in terms of fractal properties has found near ubiquitous and enduring use in diverse research areas, including research within the fields of engineering [48], hydrology [21, 50], geology [4, 34], physics [40], space science [7, 41], medicine [17, 28], economics [13], financial markets [44] and many more. Fractal properties in nature and human dynamics arguably have served to yield increased understanding and improvement on human society.

Higuchi’s method [18] is a widely applied time-domain technique to determine fractal properties of complex non-periodic, nonstationary physical data [12, 35, 49]. That is, the method can accurately calculate the fractal dimension of time series. Higuchi initially developed it to study large-scale turbulent fluctuations of the interplanetary magnetic field. It is a modification to the method of Burlaga and Klein [5] in which fluctuation properties of turbulent space plasmas can be studied beyond the inertial range. It is simple to implement, efficient, and can rapidly achieve accurate and stable values of fractal dimension, even in noisy, nonstationary data [25]. The fractal dimension calculated via the Higuchi method is called the Higuchi fractal dimension (HFD). Since its initial development, the Higuchi method has been applied to numerous fields of research. In medicine, for instance, it has found widespread use to detect and classify epileptic EEG signals [26], human locomotion [36], and in engineering, it has been used to detect faults in rolling bearings [48]. One difficulty in using the Higuchi method is that certain parameters must be applied to the method, and inappropriate parameter selection results in spurious calculation of fractal properties. Although the method has been used for decades and is widely employed at present, there is an absence of consensus of the appropriate method to determine the parameters that must be input. In this paper, we expose this weakness of the Higuchi method so that there is wider appreciation of its limits and suggests how to solve the drawbacks of this method when applied to different types of scientific data.

The HFD computed depends on the length of the time series, and an internal tuning factor kmax. Higuchi’s original paper did not elaborate on the selection of the tuning factor but illustrated the method with kmax = 211 for time series having length N = 217. Subsequent authors used similar values for the tuning factor but we will show that the tuning factor plays a crucial role in estimation the HFD. Higuchi’s method, if applied appropriately, can reliably find the time series fractal dimension. However, if the tuning factor is incorrectly selected, the method is compromised from the outset.

How is the researcher to determine the appropriate tuning factor for their study that will optimize the calculation of a stable HFD, if it exists? In addition, how does the selection of the factor influence the value of the computed HFD? The literature is vague in answering these questions, and to do so is the main thrust of our research. Multiple studies have addressed the issue of proper selection of tuning factor kmax. Accardo et al. [2] applied the method in their study of electroencephalograms and sought the most suitable pair of (kmax, N). They experimented with kmax = 3–10 on time series with lengths from N = 50–1000 and settled on an optimum kmax = 6. Some papers recommend plotting the HFD versus a range of kmax, and then selecting the appropriate kmax at the location where the calculated HFD approaches a local maximum or asymptote, which can be considered a saturation point [11, 39]. However, there is no reason that in every instance, the HFD will reach a saturation point. Paramanathan and Uthayakumar [31] proposed to determine the tuning factor kmax based on a size–measure relationship that employed a recursive length of the signal from different scales of measurement. Gomolka et al. [16] selected kmax on the basis of statistical tests that allowed the best discrimination between already known diabetic and healthy subjects. But in the absence of such additional data between systems in different dynamic states (e.g. health or pathology), how can one select the correct tuning parameter?

In this paper, we will try to answer these questions in a general way that is helpful to the community of researchers who utilize the Higuchi method. The organization of the paper is as follows. We will generate artificial time series with well-specified fractal dimension and then compare the HFD computed from these data for different values of the tuning parameter kmax. We will demonstrate the results on several examples of physical data.

2 Data and method

In order to investigate the optimization of the Higuchi method, we turn to the generation of synthetic time series with known fractal properties, to see how well the method performs. One difficulty resides in the production of truly fractal time series of given dimension, which is a non-trivial task [20]. Therefore, studies must concern themselves with the adequacy of the data-generating algorithms in addition to the fractal dimension estimation algorithms. We will consider synthetic time series realizations of processes with perfect and controlled scale invariance, viz. signals that have only a single type of scaling. Many other theoretical data types exist that have been used to analyze signals that lack local scaling regularity, but rather have a regularity which varies in time or space [24, 42]. There is also a recent effort to generalize the Higuchi method to distinguish monofractal from multifractal dynamics based on relatively short time series [6].

In this paper, we will limit the research to study of well-understood synthetic data with monofractal scaling. To illustrate how a monofractal scaling exponent can be derived, we consider fractional Brownian motion (fBm) which is characterized by a single stable fractal dimension and is a continuous-time random process [27]. Next, we research these data and compare the fractal dimension recovered using the Higuchi algorithm with the theoretical fractal dimension. The synthetic data time series can be written in terms of stochastic integrals of time integrations of fractional Gaussian noise:

here W is a stationary and ergodic random white noise process with zero mean defined on (− ∞, ∞). In the above equation, \({\varvec{H}}\in (0, 1)\) is known as the Hurst exponent. The time series Hurst exponent is related to signal roughness averaged over multiple length scales. The higher the value of H, the smoother is the time series, and the longer trends tend to continue. For values closer to zero, the time series rapidly fluctuates, as shown in Fig. 1. The covariance function of the noisy signal can be expressed by:

so that \({\varvec{B}}_{{\varvec{H}}} \left( 0 \right) \equiv 0\) and \({\text{var}} \{ {\varvec{B}}_{{\varvec{H}}} \left( {\varvec{t}} \right)\} = {\varvec{t}}^{{2{\varvec{H}}}}\). For H = 1/2, the white noise process reduces to the well-known random walk. The theoretical relationship between the Hurst exponent, H, and the Higuchi fractal dimension, HFD, is HFD \(= 2 - {\varvec{H}}\), with values of HFD between 1 and 2.

Examples of synthetic time series from the Davies and Harte [9] method, characterized by Hurst exponent H = 0.3, 0.5, 0.7, 0.9 (top to bottom)

We consider four different method generators of processes having long-range dependence to generate synthetic series with exact fractal dimension. First, we consider an exact wavelet-based method. This is based on a biorthogonal wavelet method proposed by Meyer and Sellan [1, 3] and implemented in MATLAB software and the wfbm calling function. The second is the method of Davies and Harte [9] whose generation process uses a fast Fourier transform basis and embeds the covariance matrix of the increments of the fractional Brownian motion in a circulant matrix. The third category of synthetic simulated data is produced using the Wood-Chan circulant matrix method [45], which is a generalization of the previous method [8]. The fourth set of data are simulated using the Hosking method [19], also known as the Durbin or Levinson method [23], which utilizes the well-known conditional distribution of the multivariate Gaussian distribution on a recursive scheme to generate samples based on the explicit covariance structure. All these methods of producing simulated data are considered exact methods because they completely capture the covariance structure and produce a true realization of series with a single scaling parameter.

Figure 1 shows various examples of time series produced via the Davies and Harte [9] method. The smoothest curve corresponds to H = 0.9, which implies high probability to observe long periods with increments of same sign. The roughest curve corresponds to H = 0.1, which is sub-diffusive, with high probability that increments feature long sequences of oscillating sign. The curves show data for Hurst exponents H = 0.3, 0.5, 0.7, 0.9, from top to bottom.

For each of the four data-generating methods, we create 100 unique time series, of differing lengths up to maximum length 500,000 data points, for Hurst exponents H = 0.1, 0.3, 0.5, 0.7, 0.9. Thus, for each time series length N, we have 500 unique simulations of fBm for each method. This produces 44,000 data sets in total, for experimentation. We next apply the Higuchi method to each of these time series with an exact fractal dimension (FD) to determine how well the Higuchi method is able to accurately recover the theoretical value compared to the derived HFD.

Next, we describe the Higuchi method. The Higuchi method takes a signal, discretized into the form of a time series, \({\varvec{x}}\left( 1 \right), {\varvec{x}}\left( 2 \right), \ldots , {\varvec{x}}\left( {\varvec{N}} \right)\) and, from this series, derives a new time series, \({\varvec{X}}_{{\varvec{k}}}^{{\varvec{m}}}\), defined as:

here [] represents the integer part of the enclosed value. The integer \({\varvec{m}} = 1,2, \ldots ,{\varvec{k}}\) is the start time, and \({\varvec{k}}\) is the time interval, with \({\varvec{k}} = 1, \ldots ,{\varvec{k}}_{{{\mathbf{max}}}} ;{\varvec{k}}_{{{\mathbf{max}}}}\) is a free tuning parameter. This means that given time interval equal to \({\varvec{k}}\), spawns \({\varvec{k}}\)-sets of new time series. For instance, if \({\varvec{k}} = 10\) and the time series has length \({\varvec{N}} = 1000\), the following new time series are derived from the original data:

These curves have lengths defined by:

The final term in the numerator is a normalization factor, \({\varvec{N}} - \frac{1}{{\left[ {\frac{{{\varvec{N}} - {\varvec{m}}}}{{\varvec{k}}}} \right]}} \cdot {\varvec{k}}\). The length of the curve for the time interval \({\varvec{k}}\) is then defined as the average over the \({\varvec{k}}\) sets of \({\varvec{L}}_{{\varvec{m}}} \left( {\varvec{k}} \right):\)

In cases when this equation scales according to the rule \({\varvec{L}}\left( {\varvec{k}} \right) \propto {\varvec{k}}^{{ - {\mathbf{HF}}{\varvec{D}}}} ,\) we consider the time series to behave as a fractal with dimension HFD. Thus, the HFD is the slope of the straight line that fits the curve of ln(L(k)) versus ln(1/k). Figure 2 shows the L(k) curve from simulated data for the fractal dimension FD = 1.7 (corresponding to H = 0.3) time series data in Fig. 1. The corresponding curve of HFD(kmax) is shown in Fig. 3.

Curve showing the relationship between HFD and kmax for the FD = 1.7 time series shown in Fig. 1

We now turn to finding the best tuning parameter, kmax, for the set of data we have simulated. As discussed in the Introduction, a common way to determine the tuning parameter relies upon finding the location, in plots like Fig. 3, of HFD versus a range of kmax, where the calculated HFD approaches a local maximum or asymptote [11, 39]. We will call this a tuning curve. In Fig. 3, which is for the time series with HFD = 1.7, there is only one local maximum which is located at kmax = 7 which produces a negligible error of 0.5%. There are three places where the Higuchi method finds a best value is achieved for this simulation, viz. kmax = 4, 14, 727. In this particular instantiation of a fBm, the most effective tuning parameter would thus be kmax = 4, 14, or 727. The easiest method would be to use the smallest kmax since this results in the least computational effort. However, in this case, using the local maxima method yields an acceptable estimate result with little additional effort.

3 Results

There is no reason to expect that a local maxima exists in every case in a tuning curve and is therefore searching through these curves for asymptotes is not a general or practical method to determine the best tuning parameter kmax. For instance, Fig. 4 shows the tuning curves for HFD = 1.9, 1.5, 1.3, 1.1 computed from the simulated data of Fig. 1. The black horizontal dashed line in each subplot shows the theoretical value of the fractal dimension. There is not always a local maximum or an asymptotic convergence to a set value of HFD. For HFD = 1.9, a peak occurs but only near kmax ~ 5000; the region of the plateau is found at the tuning parameter that yields the largest error in fractal dimension. This indicates that in this fBm realization, a much smaller kmax would be appropriate.

Curves showing the relationship between HFD and kmax for the HFD = 1.9, 1.5, 1.3, 1.1 time series shown in Fig. 1. The dashed horizontal curves show the theoretical value for the HFD

We now turn to analyzing the simulated realizations of fBm. The smallest time series length we select has N = 1000, and the largest has N = 500,000 data points and compute the HFD for each of these series, as a function of tuning parameter kmax. We use values between kmax = 2 and kmax = N/2. This gives a new data set comprised of HFD values as a function of the time series length, and the tuning parameter, yielding HFD = HFD(N, kmax). The error to be minimized is written by:

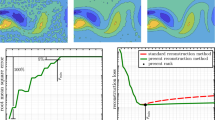

The previous equation gives the percentage error to be averaged over all synthetic time series simulations to yield a general result for all simulation data considered. As researchers do not generally know a priori which method of simulating artificial data most closely follows the statistics of any particular physical or research data set, it is appropriate to use a range of synthetic simulated time series with known fractal dimension, as an average result gives the most general answer. Figure 5 shows surface plots comparing the percentage error HFD versus the tuning parameter kmax and time series length, N for the theoretical HFD = 1.7 for each of the four simulation methods described in the previous section. The curve of least error is shown as a thick grey line.

Surface showing the average percentage error between the Higuchi method fractal dimension and theoretical FD = 1.7 averaged over 100 datasets of different lengths, N. The curve of least error is shown a Wavelet generation method, b Wood-Chan method, c Davies–Harte method, and d Hosking method. The curve of least error is shown as a thick grey line

Each method of simulation yields a different curve of least error. Figure 5b, which is the curve for the Wood-Chan circulant matrix method [45], is the lowest overall error. The Hosking [19] method yields HFD values with the greatest errors (Fig. 5d). Overall, the location of the minimum error curve varies widely depending on the generation algorithm for the synthetic data.

By taking a geometric mean of these minimum error curves for all HFD values, we derive a best-fit curve using a sum of sines function since this gave a simple function with few terms and a fit with small sum squared error. Figure 6 shows the relationship between the time series length and the tuning parameter, for different HFD values, and the dashed curve shows the best fit, given by the following equation:

here [] represents the integer part of the enclosed function value. Table 1 shows the parameter values for the best-fit. Figure 6 shows that for short time series, the use of a plateau criterion to select the kmax tuning parameter will result in the use of values smaller than those proposed by this generalized study. For example, in Fig. 1, a time series of length N = 20,000 is used. Figure 3 shows the curve of HFD versus kmax. Our fitting function yields kmax = 47 for this length data set.

4 Applications

In this section, we present two applications of the Higuchi method with the corrections applied to determining the appropriate tuning parameter. The first is a shell model of the nonlinear dynamics of MHD turbulence. We effect this via simplified approximations of the Navier–Stokes fluid equations [15, 30, 47]. We use the MHD Gledzer–Ohkitani–Yamada (GOY) shell model, which captures the intermittent dynamics of the energy cascade in MHD turbulence [22] as it moves along through the shells in a front-like manner.

Shell models of MHD turbulence are an example of dynamical systems incorporating simplified versions of the Navier–Stokes or MHD turbulence equations. They attempt to conserve some of the invariants in the limit of no dissipation. We use the SHELL-ATM code [4] to produce a time series of length N = 500,000 of the magnetic energy dissipation rate (\(\in_{b}\)) as a function of time obtained in the MHD shell model (Fig. 7a). The model is described in detail in Lepreti et al. [22]. In short, the SHELL-ATM model makes it possible to obtain rapid simulations of MHD turbulence in volumes in which a longitudinal magnetic field dominates. Model construction begins via division of the wave-vector space (k-space) into a number, N, of discrete shells with known radius \(k_{n} = k_{0} 2^{n}\) (n = 0, 1, …, N) [14]. Each shell is then assigned complex dynamical Elsässer-like fields \(u_{n} \left( t \right)\) and \(b_{n} \left( t \right)\), which represent longitudinal velocity increments and magnetic field increments. The magnetic energy dissipation rate is defined by

where η is the kinematic resistivity. To find the solutions to the above equations, we solve the equations

and

where ν is the kinematic viscosity, and \(\left( {f_{n} ,g_{n} } \right)\) are forcing terms operating on the magnetic and velocity increments. The symbol * represents a complex conjugate. The forcing terms are calculated from the Langevin equation driven by a Gaussian white noise.

These data in Fig. 7a display clear intermittent bursts of dissipated energy. Figure 7b shows average curve length versus scale size, k, for the time series. Figure 7c shows the relationship between HFD and kmax. There is no asymptote which may indicate an appropriate value of kmax. We now use Eq. (*) to select the appropriate tuning parameter kmax determined from our prior analysis for data featuring a single fractal scaling, for varying lengths, N, of the time series. Figure 7d shows the computed HFD selected. There is a variation in the fractal dimension with values being estimated as smaller from shorter lengths of the time series, and overall HFD ~ 1.04–1.13.

The second data example is that of the severe acute respiratory syndrome coronavirus 2 isolate Wuhan-Hu-1. Wu et al. [46] reported on the identification of the novel RNA virus strain from the family Coronaviridae, which is designated here ‘WH-Human-1’ coronavirus. We obtained these data from the National Center for Biotechnology Information (NCBI), which is part of the United States National Library of Medicine (NLM), a branch of the National Institutes of Health (NIH).

To analyze the fractal patterns in the genome one must convert the nucleotide sequence from a symbolic sequence, meaning A,G,C,T into a time series. We follow the Peng [32] method in which DNA is represented as a “random walk” with two parameters ruling the direction of the “walk” and the resulting dynamics. We start with the first nucleotide. If it is a pyrimidine base, we move up one position. Every subsequent pyrimidine base moves up one position. When a purine base is encountered in the series the walk steps down one position. The nucleotide distance from the first nucleotide is then plotted versus the displacement, as in Fig. 8a. Figure 8b shows average curve length versus scale size, k, for the time series. Figure 8c shows the relationship shows the computed HFD against tuning parameter kmax from the whole time series of length N = 29,903. In this case, there is a distinct asymptote at kmax = 20, which yields HFD = 1.497. To test our method, we again use Eq. (*) to select the appropriate tuning parameter kmax, for varying lengths, N, of the time series. Figure 8d shows the computed HFD selected. There is no statistically significant variation in the fractal dimension with values being estimated at HFD ~ 1.5.

a WH-Human-1 complete genome represented by the Peng [32] method. b Average curve length versus scale size, k

Our analysis shows that the fractal dimension of WH-Human-1 coronavirus genome is different from its fractal dimension computed from electron microscopic and atomic force microscopic images of 40 coronaviruses (CoV), as reported by Swapna et al. [37] who found a scale-invariant dimension of 1.820. This indicated that the images of the virus feature higher complexity and greater roughness than the pattern we have detected in the genome.

5 Conclusions

Higuchi’s method to compute the fractal dimension of physical signals is widely used in research. However, a major difficulty in applying the method is the correct choice of tuning parameter (kmax) to compute the most accurate results. Poor selection of kmax can result in values of the fractal dimension that are spurious, and this can result in potentially invalid interpretations of data. In the past researchers have used various ad hoc methods to determine the appropriate tuning parameter for their particular data. We have shown that a method such as seeking a convergence of the computed HFD to a plateau is not in general a valid procedure as not every data instance shows the HFD estimate reaches a plateau.

In this paper, we have sought to find a more general method of determining, a priori, the optimum tuning parameter kmax for a time series of length N. To study this problem, we generated synthetic time series of known HFD and applied the Higuchi method to each, averaging results over the different fbm within HFD = [1.9, 1.7, 1.5, 1.3, 1.1] categories. These data allow the calculation of curves showing where in (N, kmax)-space the most appropriate tuning parameter should be selected. We found that fractal dimension calculation via the Higuchi method is sensitive to both the tuning parameter kmax and also the length of the time series. We derive a best-fit curve fitting the location of the average minimum HFD error to provide researchers with an efficient method of estimating and appropriate kmax, given their particular dataset.

We applied the modified method to two physical cases, one from physics and one from bioinformatics. In the latter case, we considered the Coronaviridae genome of the severe acute respiratory syndrome coronavirus 2 isolate Wuhan-Hu-1, first reported by Wu et al. [46]. Our analysis of this data showed strong evidence of monofractality (Fig. 8b) with HFD ~ 1.5 (Fig. 8d).

In the former case, we computed the magnetic energy dissipation rate from a shell model of the nonlinear dynamics of MHD turbulence. We used simplified approximations of the Navier–Stokes fluid equations [15, 30, 47], in particular the MHD Gledzer–Ohkitani–Yamada (GOY) shell model, and found HFD ~ 1.10 (Fig. 7d). These data have been reported to feature a multifractal scaling [33], and this is consistent with our results in Fig. 8b which show evidence of nonlinear behaviour, which is possibly a reason why there is about a 10 per cent variation in the HFD calculation (Fig. 7d).

It is clear that accurate calculation of fractal dimension can be a delicate process and is influenced not only by the method used, but also by the nature of the data. Studies must therefore concern themselves not only with the type of data, but also with the adequacy of the data-generating algorithms, and fractal estimation algorithms. We considered only synthetic time series realizations of processes with perfect and controlled scale invariance, viz. signals that have only a single type of scaling. However, many other theoretical data types exist. For instance, numerous geophysical signals do not have local scaling regularity, but rather have a regularity which varies in time or space [10, 24]. Data that are multifractal require a variety of scaling exponents to fully describe the dynamics, and methods to generalize the Higuchi method to these more complex data types are going forward at present [6].

Data availability

The data that support the findings of this study are available from the corresponding author, JAW, upon reasonable request.

References

Abry, P., Sellan, F.: The wavelet-based synthesis for the fractional Brownian motion proposed by F. Sellan and Y. Meyer: remarks and fast implementation. Appl. and Comput. Harmonic Anal. 3(4), 377–383 (1996)

Accardo, A., et al.: Use of the fractal dimension for the analysis of electroencephalographic time series. Biol. Cybern. 77(5), 339–350 (1997)

Bardet, J.-M., Lang, G., Oppenheim, G., Philippe, A., Stoev, S., Taqqu, M.S.: Generators of long-range dependence processes: a survey. Theory Appl. Long-Range Depend. 579–623. (2003)

Buchlin, E., Velli, M.: Shell models of RMHD turbulence and the heating of solar coronal loops. Strophys. J. (2007). https://doi.org/10.1086/512765

Burlaga, L.F., Klein, L.W.: Fractal structure of the interplanetary magnetic field. J. Geophys. Res. 91(A1), 347–350 (1986)

Carrizales-Velazquez, C., Donner, R. V., Guzmán-Vargas, L.: Generalization of Higuchi's fractal dimension for multifractal analysis of time series with limited length. arXiv preprint http://arxiv.org/abs/2105.11055. (2021)

Cersosimo, D.O., Wanliss, J.A.: Initial studies of high latitude magnetic field data during different magnetospheric conditions. Earth Planets Space 59(1), 39–43 (2007)

Coeurjolly, J.-F.: Simulation and identification of the fractional Brownian motion: a bibliographic and comparative study. J. Stat. Softw. 5(7), 1–53 (2000). https://doi.org/10.18637/jss.v005.i07

Davies, R.B., Harte, D.S.: Tests for HURST effect. Biometrika 74(1), 95–101 (1987)

Dobias, P., Wanliss, J.A.: Intermittency of storms and substorms: Is it related to the critical behaviour? Ann. Geophys. 27(5), 2011–2018 (2009). https://doi.org/10.5194/angeo-27-2011-2009

Doyle, T.L.A.: Discriminating between elderly and young using a fractal dimension analysis of centre of pressure. Int. J. Med. Sci. 1(1), 11–20 (2004)

Esteller, R., Vachtsevanos, G., Echauz, J., Litt, B.: A comparison of waveform fractal dimension algorithms. IEEE Trans. Circ. Syst. I Fundam. Theory Appl. 48, 177–183 (2001)

Fama, E.F., Cochrane, J.H., Moskowitz, T.J.: The Fama Portfolio: Selected Papers of Eugene F. Fama. University of Chicago Press, Chicago (2021). https://doi.org/10.7208/9780226426983

Giuliani, P., Carbone, V.: A note on shell models for MHD turbulence. Europhys. Lett. 43, 527–532 (1998). https://doi.org/10.1209/epl/i1998-00386-y

Gledzer, E.B.: System of hydrodynamic type allowing 2 quadratic integrals of motion. Sov. Phys. Dokl. SSSR 18, 216–217 (1973)

Gomolka, R.S., et al.: higuchi fractal dimension of heart rate variability during percutaneous auricular vagus nerve stimulation in healthy and diabetic subjects. Front. Physiol. 9, 1162 (2018). https://doi.org/10.3389/fphys.2018.01162

Grizzi, F., Castello, A., Qehajaj, D., Russo, C., Lopci, E.: The complexity and fractal geometry of nuclear medicine images. Mol. Imag. Biol. 21(3), 401–409 (2019)

Higuchi, T.: Approach to an irregular time series on the basis of the fractal theory. Phys. D 31, 277–283 (1988)

Hosking, J.R.M.: Modeling persistence in hydrological time series using fractional differencing. Water Resour. Res. 20(12), 1898–1908 (1984)

Kijima, M., Chun M.T.: Fractional Brownian Motions in Financial Models and Their Monte Carlo Simulation, Theory and Applications of Monte Carlo Simulations. (Victor (Wai Kin) Chan, IntechOpen (2013). doi:https://doi.org/10.5772/53568

Koutsoyiannis, D.: Time’s arrow in stochastic characterization and simulation of atmospheric and hydrological processes. Hydrol. Sci. J. 64, 1013–1037 (2019)

Lepreti, F., Carbone, V., Giuliani, P., Sorriso-Valvo, L., Veltri, P.: Statistical properties of dissipation bursts within turbulence: solar flares and geomagnetic activity. Planet. Space Sci. 52, 957–962 (2004). https://doi.org/10.1016/j.pss.2004.03.001

Levinson, N.: The wiener rms error criterion in filter design and prediction. J. Math. Phys. 25(1947), 261–278 (1947)

Lévy Véhel, J.: Beyond multifractional Brownian motion: new stochastic models for geophysical modelling. Nonlinear Process. Geophys. 20, 643–655 (2013). https://doi.org/10.5194/npg-20-643-2013

Liehr, L., Massopust, P.: On the mathematical validity of the Higuchi method. Phys. D 402, 132265 (2020). https://doi.org/10.1016/j.physd.2019.132265

Lu, X.J., Zhang, J.Q., Huang, S.F., Jun, L., Ye, M.Q., Wang, M.S.: Detection and classification of epileptic EEG signals by the methods of nonlinear dynamics. Chaos Solitons Fract. 151, 111032 (2021). https://doi.org/10.1016/j.chaos.2021.111032

Mandelbrot, B., Van Ness, J.W.: Fractional brownian motions: fractional noises and applications. SIAM Rev. 10(4), 422–437 (1968)

Mitsutake, G., Otsuka, K., Oinuma, S., Ferguson, I., Cornélissen, G., Wanliss, J., Halberg, F.: Does exposure to an artificial ULF magnetic field affect blood pressure, heart rate variability and mood? Biomed. Pharmacother. 58, S20–S27 (2004). https://doi.org/10.1016/S0753-3322(04)80004-0

Nobukawa, S., Yamanishi, T., Nishimura, H., Wada, Y., Kikuchi, M., Takahashi, T.: Atypical temporal-scale-specific fractal changes in Alzheimer’s disease EEG and their relevance to cognitive decline. Cogn. Neurodyn. 13(1), 1–11 (2019). https://doi.org/10.1007/s11571-018-9509-x

Obukhov, A.M.: Some general properties of equations describing the dynamics of the atmosphere. In: Academy of Sciences, USSR, Izvestiya, Atmospheric and Oceanic Physics, vol. 7, pp. 695–704. (1971)

Paramanathan, P., Uthayakumar, R.: Application of fractal theory in analysis of human electroencephalographic signals. Comput. Biol. Med. 38(3), 372–378 (2008)

Peng, C.-K., Buldyrev, S.V., Goldberger, A.L., Havlin, S., Sciortino, S., Simons, M., Stanley, H.E.: Longrange correlations in nucleotide sequences. Nature 356, 168–170 (1992). https://doi.org/10.1038/356168a0

Pisarenko, D., Biferale, L., Courvoisier, D., Frisch, U., Vergassola, M.: Further results on multifractality in shell models. Phys. Fluids A 5(10), 2533–2538 (1993)

Ranguelov, B., Ivanov, Y.: Fractal properties of the elements of plate tectonics. J. Min. Geol. Sci. 60(1), 83–89 (2017)

Salazar-Varas, R., Vazquez, R.A.: Time-invariant EEG classification based on the fractal dimension. In: Castro, F., Miranda-Jiménez, S., González-Mendoza, M. (eds.) Advances in Computational Intelligence. MICAI 2017. Lecture Notes in Computer Science, vol. 10633. Springer, Cham (2018). doi:https://doi.org/10.1007/978-3-030-02840-4_26

Santuz, A., Akay, T.: Fractal analysis of muscle activity patterns during locomotion: pitfalls and how to avoid them. J. Neurophy. 124(4), 1083–1091 (2020). https://doi.org/10.1152/jn.00360.2020

Swapna, M.S., Sreejyothi, S., Raj, V., et al.: Is SARS CoV-2 a multifractal?—unveiling the fractality and fractal structure. Braz. J. Phys. 51, 731–737 (2021). https://doi.org/10.1007/s13538-020-00844-w

Turcotte, D. L.: Fractals and Chaos in Geology and Geophysics. Cambridge University Press (1992)

Wajnsztejn, R., et al.: Higuchi fractal dimension applied to RR intervals in children with attention deficit hyperactivity disorder. J. Hum. Growth Dev. 26, 147–153 (2016). https://doi.org/10.7322/jhgd.119256

Wang, W., Moore, M.A., Katzgraber, H.G.: Fractal dimension of interfaces in Edwards-Anderson spin glasses for up to six space dimensions. Phys. Rev. E 97(3), 032104 (2018)

Wanliss, J.A., Reynolds, M.A.: Measurement of the stochasticity of low-latitude geomagnetic temporal variations. Ann. Geophys. 21, 2025 (2003)

Wanliss, J.A., Shiokawa, K., Yumoto, K.: Latitudinal variation of stochastic properties of the geomagnetic field. Nonlinear Process. Geophys. 21(2), 347–356 (2014)

Wanliss, J., Arriaza, R.H., Wanliss, G., Gordon, S.: Optimization of the higuchi method. Int. J. Res. -GRANTHAALAYAH 9(11), 202–213 (2021). https://doi.org/10.29121/granthaalayah.v9.i11.2021.4393

Wątorek, M., Drożdż, S., Kwapień, J., Minati, L., Oświęcimka, P., Stanuszek, M.: Multiscale characteristics of the emerging global cryptocurrency market. Phys. Rep. 901(2021), 1–82 (2021). https://doi.org/10.1016/j.physrep.2020.10.005

Wood, A.T.A., Chan, G.: Simulation of stationary Gaussian processes in [0, 1]d. J. Comput. Graph. Stat. 3(4), 409–432 (1994)

Wu, F., Zhao, S., Yu, B., et al.: A new coronavirus associated with human respiratory disease in China. Nature 579, 265–269 (2020). https://doi.org/10.1038/s41586-020-2008-3

Yamada, M., Ohkitani, K.: Lyapunov spectrum of a model of two-dimensional turbulence. Phys. Rev. Lett. 60, 983–986 (1988). https://doi.org/10.1103/PhysRevLett.60.983

Yang, X., Xiang, Y., Jiang, B.: On multi-fault detection of rolling bearing through probabilistic principal component analysis denoising and Higuchi fractal dimension transformation. J. Vib. Control (2021). https://doi.org/10.1177/1077546321989527

Yilmaz, A., Unal, G.: Multiscale Higuchi’s fractal dimension method. Nonlinear Dyn. 101, 1441–1455 (2020). https://doi.org/10.1007/s11071-020-05826-w

Zuo, R., Cheng, Q., Agterberg, F.P., Xia, Q.: Application of singularity mapping technique to identify local anomalies using stream sediment geochemical data, a case study from Gangdese, Tibet, western China. J. Geochem. Explor. 101(3), 225–235 (2009). https://doi.org/10.1016/j.gexplo.2008.08.003

Acknowledgements

National Science Foundation Award AGS-2053689 and SC-INBRE.

Funding

The authors have not disclosed any funding.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Wanliss, J.A., Wanliss, G.E. Efficient calculation of fractal properties via the Higuchi method. Nonlinear Dyn 109, 2893–2904 (2022). https://doi.org/10.1007/s11071-022-07353-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11071-022-07353-2