Abstract

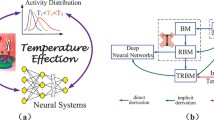

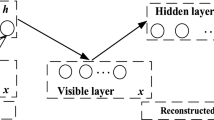

Deep learning techniques have been paramount in recent years, mainly due to their outstanding results in a number of applications ranging from speech recognition to face-based user identification. Deep Boltzmann Machines (DBMs), which are composed of layers of Restricted Boltzmann Machines stacked on top of each other, are among the most widely used techniques for such purposes. In this work, we evaluate the concept of temperature in DBMs, which plays a key role in Boltzmann-related distributions but has not been considered in this context to date. The main contribution of this paper is therefore to take this information into account, as well as the impact of replacing the standard sigmoid function with another one, and to evaluate their influence on DBMs in the task of binary image reconstruction. We expect this work to foster future research on the use of different temperatures during learning in DBMs.
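For reference, the usual way temperature enters a Boltzmann distribution is as a divisor of the energy. Assuming a binary RBM layer with the standard energy function (weights \(\mathbf{W}\), visible biases \(\mathbf{b}\), hidden biases \(\mathbf{c}\), and temperature \(T\); these symbols are not defined in this excerpt, and the paper's exact parameterization may differ), the layer's conditionals become temperature-scaled sigmoids:

\[
\begin{aligned}
E(\mathbf{v},\mathbf{h}) &= -\sum_i b_i v_i - \sum_j c_j h_j - \sum_{i,j} v_i W_{ij} h_j,\\
P(\mathbf{v},\mathbf{h}) &= \frac{1}{Z}\exp\!\left(-\frac{E(\mathbf{v},\mathbf{h})}{T}\right), \qquad Z = \sum_{\mathbf{v},\mathbf{h}} \exp\!\left(-\frac{E(\mathbf{v},\mathbf{h})}{T}\right),\\
P(h_j = 1 \mid \mathbf{v}) &= \sigma\!\left(\frac{c_j + \sum_i W_{ij} v_i}{T}\right), \qquad
P(v_i = 1 \mid \mathbf{h}) = \sigma\!\left(\frac{b_i + \sum_j W_{ij} h_j}{T}\right),
\end{aligned}
\]

with \(\sigma(x) = 1/(1+e^{-x})\). The conditional follows because \(P(h_j = 1 \mid \mathbf{v}) / P(h_j = 0 \mid \mathbf{v}) = \exp\big((c_j + \sum_i W_{ij} v_i)/T\big)\). Setting \(T = 1\) recovers the standard sigmoid; \(T > 1\) flattens it toward \(0.5\), and \(T \to 0\) sharpens it toward a step function.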

Notes

Note that \(\tilde{\mathbf{v}}\) and \(\tilde{\mathbf{h}}\) are obtained by sampling from \(\mathbf{h}\) and \(\tilde{\mathbf{v}}\), respectively.
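The sampling chain in this note can be sketched as one block-Gibbs pass: a hidden sample drawn from the data, a reconstructed visible vector (`v_tilde`) drawn from that hidden sample, and a new hidden sample (`h_tilde`) drawn from the reconstruction. This is a minimal sketch, not the authors' code: it assumes binary units and that temperature enters as \(\sigma(x/T)\), and the names `W`, `b`, `c`, `T` and the helper functions are hypothetical. Plain Python lists keep it dependency-free.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Numerically stable logistic sigmoid."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sample_hidden(v, W, c, T, rng):
    """Return p(h_j = 1 | v) = sigmoid((c_j + sum_i v_i W[i][j]) / T) and a binary sample."""
    probs = [sigmoid((c[j] + sum(v[i] * W[i][j] for i in range(len(v)))) / T)
             for j in range(len(c))]
    return probs, [1 if rng.random() < p else 0 for p in probs]


def sample_visible(h, W, b, T, rng):
    """Return p(v_i = 1 | h) = sigmoid((b_i + sum_j W[i][j] h_j) / T) and a binary sample."""
    probs = [sigmoid((b[i] + sum(W[i][j] * h[j] for j in range(len(h)))) / T)
             for i in range(len(b))]
    return probs, [1 if rng.random() < p else 0 for p in probs]


def gibbs_chain(v, W, b, c, T, rng):
    """h ~ p(h | v), then v_tilde ~ p(v | h), then h_tilde ~ p(h | v_tilde)."""
    _, h = sample_hidden(v, W, c, T, rng)
    _, v_tilde = sample_visible(h, W, b, T, rng)
    _, h_tilde = sample_hidden(v_tilde, W, c, T, rng)
    return h, v_tilde, h_tilde


# Toy example: 3 visible units, 2 hidden units (hypothetical values).
W = [[0.5, -0.3], [0.2, 0.1], [-0.4, 0.6]]
b = [0.0, 0.1, -0.1]
c = [0.05, -0.05]
v = [1, 0, 1]
h, v_tilde, h_tilde = gibbs_chain(v, W, b, c, T=1.0, rng=random.Random(0))

# Under the assumed sigmoid(x / T) form, a large T pushes the probabilities
# toward 0.5 and a small T pushes them toward a hard 0/1 threshold.
p_hot, _ = sample_hidden(v, W, c, T=100.0, rng=random.Random(0))
p_cold, _ = sample_hidden(v, W, c, T=0.01, rng=random.Random(0))
```

Because temperature only rescales the pre-activation here, the same helpers serve every value of `T`; a different activation function, such as the one the abstract mentions, would replace `sigmoid`.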

The images were originally available in grayscale at a resolution of \(28\times 28\) pixels, but they were reduced to \(14\times 14\).

The original training set was reduced to \(2\%\) of its former size, which corresponds to 1200 images.
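The two preprocessing notes above can be sketched as follows. The notes do not say how the images were reduced, binarized, or subsampled, so this sketch assumes 2×2 average pooling, a 0.5 binarization threshold (motivated only by the binary image reconstruction task), and a uniformly random subset. The original training-set size of 60,000 is also an assumption, chosen because 2% of it gives the stated 1,200 images. All function names are hypothetical.

```python
import random


def downsample_2x2(img):
    """Average-pool a square image (list of rows, even side length) by 2 in each direction."""
    n = len(img)
    return [[(img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4.0
             for c in range(0, n, 2)]
            for r in range(0, n, 2)]


def binarize(img, threshold=0.5):
    """Map intensities in [0, 1] to {0, 1}; the 0.5 threshold is an assumption."""
    return [[1 if x >= threshold else 0 for x in row] for row in img]


def subsample_indices(n_total, fraction, seed=0):
    """Pick a reproducible random subset of round(n_total * fraction) distinct indices."""
    k = round(n_total * fraction)
    return sorted(random.Random(seed).sample(range(n_total), k))


# Hypothetical 28x28 grayscale image with intensities in [0, 1].
img = [[(r + c) / 54.0 for c in range(28)] for r in range(28)]
small = binarize(downsample_2x2(img))  # 14 x 14 binary image

# Assumed original training-set size; 2% of 60,000 is 1,200.
idx = subsample_indices(60_000, 0.02)
```

Fixing the seed makes the reduced training set reproducible across runs, which matters when comparing models trained at different temperatures on the same subset.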

Note that all parameters and architectures were chosen empirically [21].

One sampling iteration was used for all learning algorithms.
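With one sampling iteration, a contrastive-divergence update reduces to CD-1: statistics from the data minus statistics after a single Gibbs step. The sketch below is one way to write that step for a single binary example. It assumes the temperature-scaled sigmoid \(\sigma(x/T)\) and folds the \(1/T\) factor of the exact log-likelihood gradient into the learning rate. The names `cd1_update`, `lr`, `W`, `b`, `c`, and the toy data are hypothetical, and this is not presented as the authors' implementation. A persistent variant would keep the chain state across updates instead of restarting it at the data.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic sigmoid."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def cd1_update(v0, W, b, c, T, lr, rng):
    """One CD-1 update (a single Gibbs iteration) on one binary example.

    Updates W, b, c in place and returns the squared reconstruction error.
    """
    n_v, n_h = len(b), len(c)
    # Positive phase: hidden probabilities and a binary sample given the data.
    ph0 = [sigmoid((c[j] + sum(v0[i] * W[i][j] for i in range(n_v))) / T) for j in range(n_h)]
    h0 = [1 if rng.random() < p else 0 for p in ph0]
    # The single sampling iteration: v1 ~ p(v | h0), then p(h | v1).
    pv1 = [sigmoid((b[i] + sum(W[i][j] * h0[j] for j in range(n_h))) / T) for i in range(n_v)]
    v1 = [1 if rng.random() < p else 0 for p in pv1]
    ph1 = [sigmoid((c[j] + sum(v1[i] * W[i][j] for i in range(n_v))) / T) for j in range(n_h)]
    # Data statistics minus one-step statistics; the exact gradient carries a
    # 1/T factor, which this sketch folds into the learning rate lr.
    for i in range(n_v):
        for j in range(n_h):
            W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        b[i] += lr * (v0[i] - v1[i])
    for j in range(n_h):
        c[j] += lr * (ph0[j] - ph1[j])
    return sum((v0[i] - pv1[i]) ** 2 for i in range(n_v))


# Toy run on two 3-pixel binary patterns (hypothetical data and sizes).
rng = random.Random(42)
W = [[rng.gauss(0.0, 0.01) for _ in range(2)] for _ in range(3)]
b = [0.0] * 3
c = [0.0] * 2
data = [[1, 0, 1], [0, 1, 0]]
errors = [sum(cd1_update(v, W, b, c, T=1.0, lr=0.1, rng=rng) for v in data)
          for _ in range(100)]
```

The toy run only illustrates the mechanics; no particular error curve is implied.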

We did not fine-tune the parameters with back-propagation, since the main goal of this paper is to show that temperature does affect the behavior of DBMs.

References

Bekenstein JD (1973) Black holes and entropy. Phys Rev D 7(8):2333

Beraldo e Silva L, Lima M, Sodré L, Perez J (2014) Statistical mechanics of self-gravitating systems: mixing as a criterion for indistinguishability. Phys Rev D 90(12):123004

Duong CN, Luu K, Quach KG, Bui TD (2015) Beyond principal components: deep Boltzmann machines for face modeling. In: 2015 IEEE conference on computer vision and pattern recognition, CVPR’15, pp 4786–4794

Gadjiev B, Progulova T (2015) Origin of generalized entropies and generalized statistical mechanics for superstatistical multifractal systems. In: International workshop on Bayesian inference and maximum entropy methods in science and engineering, vol 1641, pp 595–602

Goh H, Thome N, Cord M, Lim JH (2012) Unsupervised and supervised visual codes with restricted Boltzmann machines. In: Proceedings of computer vision – ECCV 2012: 12th European conference on computer vision, Florence, Italy, October 7–13, 2012, Part V. Springer, Berlin, pp 298–311. doi:10.1007/978-3-642-33715-4_22

Gompertz B (1825) On the nature of the function expressive of the law of human mortality, and on a new mode of determining the value of life contingencies. Philos Trans R Soc Lond 115:513–583

Gordon BL (2002) Maxwell–Boltzmann statistics and the metaphysics of modality. Synthese 133(3):393–417

Hinton G, Salakhutdinov R (2006) Reducing the dimensionality of data with neural networks. Science 313(5786):504–507

Hinton G, Salakhutdinov R (2011) Discovering binary codes for documents by learning deep generative models. Top Cogn Sci 3(1):74–91

Hinton GE (2002) Training products of experts by minimizing contrastive divergence. Neural Comput 14(8):1771–1800

Hinton GE (2012) A practical guide to training restricted Boltzmann machines. In: Montavon G, Orr GB, Müller K-R (eds) Neural networks: tricks of the trade, 2nd edn. Springer, Berlin, pp 599–619. doi:10.1007/978-3-642-35289-8_32

Hinton GE, Osindero S, Teh YW (2006) A fast learning algorithm for deep belief nets. Neural Comput 18(7):1527–1554

Kennedy J (2011) Particle swarm optimization. In: Sammut C, Webb GI (eds) Encyclopedia of machine learning. Springer, pp 760–766

Larochelle H, Mandel M, Pascanu R, Bengio Y (2012) Learning algorithms for the classification restricted Boltzmann machine. J Mach Learn Res 13(1):643–669

LeCun Y, Bengio Y, Hinton GE (2015) Deep learning. Nature 521:436–444

Li G, Deng L, Xu Y, Wen C, Wang W, Pei J, Shi L (2016) Temperature based restricted Boltzmann machines. Sci Rep 6:19133

Mendes G, Ribeiro M, Mendes R, Lenzi E, Nobre F (2015) Nonlinear Kramers equation associated with nonextensive statistical mechanics. Phys Rev E 91(5):052106

Niven RK (2005) Exact Maxwell–Boltzmann, Bose–Einstein and Fermi–Dirac statistics. Phys Lett A 342(4):286–293

Papa JP, Rosa GH, Costa KAP, Marana AN, Scheirer W, Cox DD (2015) On the model selection of Bernoulli restricted Boltzmann machines through harmony search. In: Proceedings of the genetic and evolutionary computation conference, GECCO’15. ACM, New York, pp 1449–1450

Papa JP, Rosa GH, Marana AN, Scheirer W, Cox DD (2015) Model selection for discriminative restricted Boltzmann machines through meta-heuristic techniques. J Comput Sci 9:14–18

Papa JP, Scheirer W, Cox DD (2016) Fine-tuning deep belief networks using harmony search. Appl Soft Comput 46:875–885

Ranzato M, Boureau Y, LeCun Y (2008) Sparse feature learning for deep belief networks. In: Platt J, Koller D, Singer Y, Roweis S (eds) Advances in neural information processing systems, vol 20. Curran Associates Inc., Red Hook, pp 1185–1192

Rényi A (1961) On measures of entropy and information. In: Neyman J (ed) Proceedings IV Berkeley symposium on mathematical statistics and probability, vol 1, pp 547–561

Salakhutdinov R, Hinton G (2009) Semantic hashing. Int J Approx Reason 50(7):969–978

Salakhutdinov R, Hinton GE (2009) Deep Boltzmann machines. In: Neil L, Mark R (eds) AISTATS, vol 1. Microtome Publishing, Brookline, MA, pp 3

Salakhutdinov R, Hinton GE (2012) An efficient learning procedure for deep Boltzmann machines. Neural Comput 24(8):1967–2006

Schmidhuber J (2015) Deep learning in neural networks: an overview. Neural Netw 61:85–117

Shim JW, Gatignol R (2010) Robust thermal boundary conditions applicable to a wall along which temperature varies in lattice-gas cellular automata. Phys Rev E 81(4):046703

Smolensky P (1986) Information processing in dynamical systems: foundations of harmony theory. In: Technical reports, DTIC Document

Sohn K, Lee H, Yan X (2015) Learning structured output representation using deep conditional generative models. In: Cortes C, Lawrence ND, Lee DD, Sugiyama M, Garnett R (eds) Advances in neural information processing systems, vol 28. Curran Associates Inc., Red Hook, pp 3465–3473

Srivastava N, Hinton GE, Krizhevsky A, Sutskever I, Salakhutdinov R (2014) Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res 15(1):1929–1958

Tieleman T (2008) Training restricted Boltzmann machines using approximations to the likelihood gradient. In: Proceedings of the 25th international conference on machine learning, ICML ’08. ACM, New York, pp 1064–1071

Tomczak JM, Gonczarek A (2016) Learning invariant features using subspace restricted Boltzmann machine. Neural Process Lett 9896:1–10

Wicht B, Fischer A, Hennebert J (2016) On CPU performance optimization of restricted Boltzmann machine and convolutional RBM. In: IAPR workshop on artificial neural networks in pattern recognition. Springer, pp 163–174

Wilcoxon F (1945) Individual comparisons by ranking methods. Biom Bull 1(6):80–83. doi:10.2307/3001968

Acknowledgements

The authors would like to thank FAPESP grants #2014/16250-9, #2014/12236-1, and #2016/19403-6, CAPES, and CNPq grant #306166/2014-3.

Cite this article

Passos, L.A., Papa, J.P. Temperature-Based Deep Boltzmann Machines. Neural Process Lett 48, 95–107 (2018). https://doi.org/10.1007/s11063-017-9707-2