Abstract

Visual Question Answering (VQA), an important task for evaluating the cross-modal understanding capability of an artificial intelligence model, has been a hot research topic in both the computer vision and natural language processing communities. Recently, graph-based models have received growing interest in VQA for their potential to model the relationships between objects and for their strong interpretability. Nonetheless, these solutions mainly define the similarity between objects as their semantic relationship, largely ignoring the critical point that the difference between objects can provide more information for establishing the relationships between nodes in the graph. To exploit this, we propose an object-difference based graph learner, which learns question-adaptive semantic relations by calculating inter-object differences under the guidance of the question. With the learned relationships, the input image can be represented as an object graph that encodes the structural dependencies between objects. In addition, existing graph-based models use the object boxes pre-extracted by an object detection model as node features for convenience, but they suffer from redundancy. To suppress redundant objects, we introduce a soft-attention mechanism that magnifies the question-related objects. Moreover, we incorporate our object-difference based graph learner into soft-attention based Graph Convolutional Networks to capture question-specific objects and their interactions for answer prediction. Our experimental results on the VQA 2.0 dataset demonstrate that our model performs significantly better than baseline methods.
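
The abstract gives no equations, so the following is only an illustrative sketch of the three ingredients it names: a question-guided graph built from inter-object differences, soft attention over objects, and a graph-convolution step. It is not the authors' formulation. The element-wise gating by the question, the dot-product attention, the parameters `w_edge` and `w_node`, and all dimensions are assumptions chosen for brevity, and random values stand in for learned weights and detector features.

```python
import math
import random


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def difference_graph(objects, question, w_edge):
    """Row-normalised adjacency scored from question-gated object differences.

    w_edge is a hypothetical scoring vector, not a parameter from the paper.
    """
    adjacency = []
    for obj_i in objects:
        row = []
        for obj_j in objects:
            # Score the element-wise difference, gated by the question vector.
            row.append(sum((a - b) * q * w
                           for a, b, q, w in zip(obj_i, obj_j, question, w_edge)))
        adjacency.append(softmax(row))
    return adjacency


def soft_attention(objects, question):
    """One weight per object from its dot product with the question (assumed form)."""
    return softmax([sum(a * q for a, q in zip(obj, question)) for obj in objects])


def gcn_layer(objects, adjacency, attention, w_node):
    """One graph-convolution step: ReLU(A @ (attention * X) @ W)."""
    # Scale each object's features by its attention weight.
    weighted = [[alpha * x for x in obj] for obj, alpha in zip(objects, attention)]
    dim = len(objects[0])
    # Aggregate neighbour features along the learned edges.
    aggregated = [[sum(a * node[k] for a, node in zip(row, weighted))
                   for k in range(dim)]
                  for row in adjacency]
    out_dim = len(w_node[0])
    return [[max(0.0, sum(agg[k] * w_node[k][m] for k in range(dim)))
             for m in range(out_dim)]
            for agg in aggregated]


# Toy inputs: 5 objects with 8-dimensional features, 4 hidden units.
random.seed(0)
n, d, h = 5, 8, 4
objects = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]
question = [random.gauss(0, 1) for _ in range(d)]
w_edge = [random.gauss(0, 1) for _ in range(d)]
w_node = [[random.gauss(0, 1) for _ in range(h)] for _ in range(d)]

adjacency = difference_graph(objects, question, w_edge)
attention = soft_attention(objects, question)
hidden = gcn_layer(objects, adjacency, attention, w_node)
print(len(adjacency), len(attention), len(hidden), len(hidden[0]))
```

In this sketch each row of `adjacency` sums to one, so every node aggregates a convex combination of its attention-weighted neighbours. A real model would learn `w_edge` and `w_node` end to end and would use detector features and a question encoder in place of the random vectors.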

References

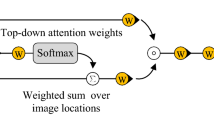

Anderson P, He X, Buehler C, Teney D, Johnson M, Gould S, Zhang L (2018) Bottom-up and top-down attention for image captioning and visual question answering. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 6077–6086

Antol S, Agrawal A, Lu J, Mitchell M, Batra D, Lawrence Zitnick C, Parikh D (2015) Vqa: visual question answering. In: Proceedings of the IEEE international conference on computer vision, pp 2425–2433

Bordes A, Usunier N, Garcia-Duran A, Weston J, Yakhnenko O (2013) Translating embeddings for modeling multi-relational data. In: Advances in neural information processing systems, pp 2787–2795

Cheng Z, Ding Y, He X, Zhu L, Song X, Kankanhalli MS (2018) Aˆ 3ncf: an adaptive aspect attention model for rating prediction. In: IJCAI, pp 3748–3754

Cheng Z, Chang X, Zhu L, Kanjirathinkal RC, Kankanhalli M (2019) Mmalfm: explainable recommendation by leveraging reviews and images. ACM Trans Inform Syst (TOIS) 37(2):16

Cho K, Van Merriënboer B, Bahdanau D, Bengio Y (2014) On the properties of neural machine translation: encoder-decoder app.roaches. arXiv:https://arxiv.org/abs/14091259

Goyal Y, Khot T, Summers-Stay D, Batra D, Parikh D (2017) Making the v in vqa matter: elevating the role of image understanding in visual question answering. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 6904–6913

Ilievski I, Feng J (2017) Multimodal learning and reasoning for visual question answering. In: Advances in neural information processing systems, pp 551–562

Kazemi V, Elqursh A (2017) Show, ask, attend, and answer: a strong baseline for visual question answering. arXiv:https://arxiv.org/abs/170403162

Kim JH, Lee SW, Kwak D, Heo MO, Kim J, Ha JW, Zhang BT (2016) Multimodal residual learning for visual qa. In: Advances in neural information processing systems, pp 361–369

Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. arXiv:https://arxiv.org/abs/14126980

Kipf TN, Welling M (2016) Semi-supervised classification with graph convolutional networks. arXiv:https://arxiv.org/abs/160902907

Li G, Su H, Zhu W (2017) Incorporating external knowledge to answer open-domain visual questions with dynamic memory networks. arXiv:https://arxiv.org/abs/171200733

Liao L, Ma Y, He X, Hong R, Chua Ts (2018) Knowledge-aware multimodal dialogue systems. In: 2018 ACM Multimedia conference on multimedia conference. ACM, pp 801–809

Liu AA, Nie WZ, Gao Y, Su YT (2016) Multi-modal clique-graph matching for view-based 3d model retrieval. IEEE Trans Image Process 25(5):2103–2116

Liu AA, Su YT, Nie WZ, Kankanhalli M (2017) Hierarchical clustering multi-task learning for joint human action grouping and recognition. IEEE Trans Pattern Anal Mach Intell 39(1):102–114

Liu AA, Nie WZ, Gao Y, Su YT (2018) View-based 3-d model retrieval: a benchmark. IEEE Trans Cybern 48(3):916–928

Liu J, Zhai G, Liu A, Yang X, Zhao X, Chen CW (2018) Ipad: intensity potential for adaptive de-quantization. IEEE Trans Image Process 27(10):4860–4872

Lu J, Yang J, Batra D, Parikh D (2016) Hierarchical co-attention for visual question answering. Advances in Neural Information Processing Systems (NIPS), 2

Malinowski M, Rohrbach M, Fritz M (2015) Ask your neurons: a neural-based approach to answering questions about images. In: Proceedings of the IEEE international conference on computer vision, pp 1–9

Nam H, Ha JW, Kim J (2017) Dual attention networks for multimodal reasoning and matching. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 299–307

Narasimhan M, Lazebnik S, Schwing A (2018) Out of the box: reasoning with graph convolution nets for factual visual question answering. In: Advances in neural information processing systems, pp 2654–2665

Norcliffe-Brown W, Vafeias S, Parisot S (2018) Learning conditioned graph structures for interpretable visual question answering. In: Advances in neural information processing systems, pp 8334–8343

Pennington J, Socher R, Manning C (2014) Glove: global vectors for word representation. In: Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), pp 1532–1543

Ren S, He K, Girshick R, Sun J (2015) Faster r-cnn: towards real-time object detection with region proposal networks. In: Advances in neural information processing systems, pp 91–99

Shang C, Liu Q, Chen KS, Sun J, Lu J, Yi J, Bi J (2018) Edge attention-based multi-relational graph convolutional networks. arXiv:https://arxiv.org/abs/180204944

Shih KJ, Singh S, Hoiem D (2016) Where to look: focus regions for visual question answering. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4613–4621

Tang S, Li Y, Deng L, Zhang Y (2017) Object localization based on proposal fusion. IEEE Trans Multimed 19(9):2105–2116

Teney D, Liu L, van den Hengel A (2017) Graph-structured representations for visual question answering. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1–9

Teney D, Anderson P, He X, van den Hengel A (2018) Tips and tricks for visual question answering: learnings from the 2017 challenge. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4223–4232

Wang P, Wu Q, Shen C, Dick A, van den Hengel A (2018) Fvqa: fact-based visual question answering. IEEE Trans Pattern Anal Mach Intell 40(10):2413–2427

Wu Q, Wang P, Shen C, Dick A, van den Hengel A (2016) Ask me anything: free-form visual question answering based on knowledge from external sources. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4622–4630

Wu Q, Teney D, Wang P, Shen C, Dick A, van den Hengel A (2017) Visual question answering: a survey of methods and datasets. Comput Vis Image Underst 163:21–40

Wu Q, Shen C, Wang P, Dick A, van den Hengel A (2018) Image captioning and visual question answering based on attributes and external knowledge. IEEE Trans Pattern Anal Mach Intell 40(6):1367–1381

Wu C, Liu J, Wang X, Dong X (2018) Object-difference attention: a simple relational attention for visual question answering. In: 2018 ACM Multimedia conference on multimedia conference. ACM, pp 519–527

Yan S, Xiong Y, Lin D (2018) Spatial temporal graph convolutional networks for skeleton-based action recognition. In: Thirty-Second AAAI conference on artificial intelligence

Yang Z, He X, Gao J, Deng L, Smola A (2016) Stacked attention networks for image question answering. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 21–29

Yang Z, Yu J, Yang C, Qin Z, Hu Y (2018) Multi-modal learning with prior visual relation reasoning. arXiv:https://arxiv.org/abs/181209681

Yang X, Zhang H, Cai J (2018) Shuffle-then-assemble: learning object-agnostic visual relationship features. In: Proceedings of the European conference on computer vision (ECCV), pp 36–52

Zhang Y, Hare J, Prügel-Bennett A (2018) Learning to count objects in natural images for visual question answering. arXiv:https://arxiv.org/abs/180205766

Acknowledgements

Zhendong Mao is the corresponding author. This work was supported by the National Key Research and Development Program of China (grant No. 2016QY03D0505) and the National Natural Science Foundation of China (grant No. U19A2057).

About this article

Cite this article

Zhu, X., Mao, Z., Chen, Z. et al. Object-difference drived graph convolutional networks for visual question answering. Multimed Tools Appl 80, 16247–16265 (2021). https://doi.org/10.1007/s11042-020-08790-0

DOI: https://doi.org/10.1007/s11042-020-08790-0