Abstract

We present a general framework for dealing with set heterogeneity in data and learning problems, which is able to exploit low complexity components. The main ingredients are (i) A definition of complexity for elements of a convex union that takes into account the complexities of their individual composition – this is used to cover the heterogeneous convex union; and (ii) Upper bounds on the complexities of restricted subsets. We demonstrate this approach in two different application areas, highlighting their conceptual connection. (1) In random projection based dimensionality reduction, we obtain improved bounds on the uniform preservation of Euclidean norms and distances when low complexity components are present in the union. (2) In statistical learning, our generalisation bounds justify heterogeneous ensemble learning methods that were incompletely understood before. We exemplify empirical results with boosting type random subspace and random projection ensembles that implement our bounds.

1 Introduction

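We are interested in data and learning problems of a heterogeneous nature, which we will describe shortly. Let m be a positive integer, and consider a sequence \({\mathbb {S}}= ({\mathbb {S}}_j)_{j \in [m]}\) consisting of bounded subsets of a vector space. The convex union \({\overline{{\mathbb {S}}}}\) is defined to be the convex hull of the union of these sets,

\[ {\overline{{\mathbb {S}}}} := \text {conv}\Big ( \bigcup _{j \in [m]} {\mathbb {S}}_j \Big ) = \Big \{ \sum _{t \in [\tau ]} \alpha _t s_t \,:\, \tau \in {\mathbb {N}},\ (j_t)_{t \in [\tau ]} \in [m]^\tau ,\ (s_t)_{t \in [\tau ]} \in \prod _{t\in [\tau ]} {\mathbb {S}}_{j_t},\ (\alpha _t)_{t \in [\tau ]} \in \Delta _\tau \Big \}, \tag{1} \]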

where \(\Delta _\tau := \{ (\alpha _t)_{t \in [\tau ]} \in [0,1]^\tau : \sum _{t \in [\tau ]}\alpha _t= 1\}\) is the simplex for \(\tau \in {\mathbb {N}}\).

In dimensionality reduction, random projection (RP) is a universal and computationally convenient method that enjoys near-isometry. The distortion of Euclidean norms and distances depends on the complexity of the set being projected (see Liaw et al. (2017) and references therein). Now suppose some high dimensional data resides in a set of the form (1). What can be said about simultaneous preservation of norms and distances? The complexity of the union grows with its highest complexity component. We would like to take advantage of heterogeneity to better exploit the presence of low complexity components.

In statistical learning (SL), suppose we want to learn a weighted ensemble where base learners belong to different complexity classes. The ensemble predictor then belongs to a function class of the form (1) – for instance, learning a weighted ensemble of random subspace classifiers, as raised in the future work section of Tian and Feng (2021). What simultaneous (i.e. worst-case) generalisation guarantees can be given?

To tackle these problems, it is helpful to observe their common structure. Both problems can be described by a certain stochastic process – an infinite collection of random variables \(\{X_s\}_{s \in S}\), indexed by the elements of a bounded set S. In the RP task, the index-set \(S \subset \mathbb {R}^d\) is a set of points in a high dimensional space, and the source of randomness is the RP map R, a random matrix taking values in \(\mathbb {R}^{k\times d}\) with independent rows drawn from a known distribution. We are interested in norm-preservation, i.e. the discrepancy between the norm of a point before and after RP, so the collection of random variables of interest is \(\{ \sqrt{k}\Vert s\Vert _2 - \Vert Rs\Vert _2\}_{s\in S}\), and we would like to guarantee that all of these discrepancies are small simultaneously, with high probability.

In the SL task, the index-set is a set of functions \({{\mathcal {H}}}\) (the hypothesis class), and the source of randomness is a training sample \(\{(X_1,Y_1),\dots , (X_n,Y_n)\}\), drawn i.i.d. from an unknown distribution. We are interested in generalisation, i.e. the discrepancy between true error and sample error, so the infinite collection of random variables of interest is \(\{\mathbb {E}_{X,Y}[\mathcal {L}(f(X),Y)] - \frac{1}{n}\sum _{i=1}^n \mathcal {L}(f(X_i),Y_i)\}_{f\in {{\mathcal {H}}}}\), where \({\mathcal L}\) is a loss function. Again, we want all of these discrepancies to be small simultaneously, with high probability.

This analogy suggests dealing with the problem of index-set heterogeneity in both tasks in a unified way. The index-sets will be of the form (1), and we appeal to empirical process theory to link the processes of interest with canonical processes whose suprema can be bounded.

1.1 Related work

The use of empirical process theory to provide low distortion guarantees for random projections of bounded sets was pioneered by Klartag and Mendelson (2005), and further refined by others – see Liaw et al. (2017) and references therein for a relatively recent treatment. These results extend the celebrated Johnson-Lindenstrauss (JL) lemma from finite sets to infinite sets. They allow simultaneous high probability statements to be made about Euclidean norm preservation of all points of the set being projected, and these guarantees depend on a notion of metric complexity of the set. For this reason, these bounds are better able to explain empirically observed low distortion in application areas where the underlying data support has a low intrinsic dimension, or a simple intrinsic structure, such as images or text data (Bingham and Mannila 2001). However, these existing bounds do not cater to the heterogeneity of the data support, so the presence of any small high-complexity component still renders them loose. This observation will be made precise in the sequel.

Empirical process theory is also a cornerstone in statistical learning, where it is widely used to provide uniform generalisation guarantees for learning problems (Boucheron et al. 2013) via Rademacher and Gaussian complexities. Uniform generalisation bounds ascertain, under certain conditions, that with high probability the training data does not mislead the learning algorithm. However, for complex models like heterogeneous ensembles of interest to practitioners, theory is scarce (Parnell et al. 2020; Cortes et al. 2014; Tian and Feng 2021) and a general unifying treatment is missing.

In both of the above domains, classic theory considers a single homogeneous index-set in the underlying empirical process, ignoring any heterogeneity of its subsets. The complexity of a union of sets of differing complexities grows linearly with the complexity of the most complex one; consequently, this approach yields uniform bounds that grow linearly with the complexity of the most complex component set. However, in many natural situations one would expect predominantly lower complexity components – for instance, data may lie mostly (though not exclusively) on low dimensional structures, or the required hypothesis class may be mostly (though not exclusively) of low complexity. This class of problems motivates our approach.

1.2 Contributions

In this paper, we develop a general unifying framework that allows us to formulate simultaneous high-probability bounds over all elements of a convex union of sets of differing complexity, taking advantage of any low-complexity components. The main contributions are summarised below.

- We introduce a notion of complexity for elements of the convex union, defined as a weighted average of complexities of constituent sets. This serves to cover the convex union with sets of increasing complexity and treat each individually.

- We bound the supremum of a weighted combination of canonical subgaussian processes, which serves as a tool to bound the complexities of restricted subsets of the convex union.

We demonstrate our approach in two different areas, highlighting their conceptual connection in our framework, namely random projection based dimensionality reduction, and statistical learning of heterogeneous ensembles.

- In dimensionality reduction, heterogeneity of the data support brings improvement in simultaneous norm-preservation guarantees when points have some low complexity constitution, improving on results from Liaw et al. (2017).

- In statistical learning, our bounds justify and guide principled heterogeneous weighted ensemble construction more generally than previous work has, and we exemplify regularised gradient boosting type random subspace and random projection ensembles for high dimensional learning.

2 Theory

We begin with preliminaries, and develop some theory in Sects. 2.1 and 2.2.

Definition 1

(Sub-Gaussian right tail) A random variable X is said to have a sub-Gaussian right tail with parameter \(\sigma >0\) if \(\mathbb {P}( X >\xi )\le e^{-\xi ^2/2 \sigma ^2}\) for all \(\xi >0\). Let \(\mathcal {R}(\sigma ^2)\) denote the collection of such random variables.

A sub-Gaussian right tail implies an expectation upper bound, as integrating the tail inequality yields \(\mathbb {E}(X) \le \int _0^{\infty }\mathbb {P}( X>\xi )d\xi \le \int _0^{\infty }e^{-\xi ^2/2 \sigma ^2}d\xi = \sigma \sqrt{\pi /{2}}\). Hence, the class \(\mathcal {R}(\sigma ^2)\) will serve as a useful generalisation of sub-Gaussian random variables X, for which both tails decay quickly, i.e. \(\vert X \vert \in \mathcal {R}(\sigma ^2)\).
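The closed form \(\sigma \sqrt{\pi /2}\) for the tail integral can be checked numerically. The following is a minimal sketch using a midpoint rule; the function names and the truncation point of the integral are illustrative assumptions, not part of the paper.

```python
import math


def gaussian_tail_integral(sigma, upper_mult=12.0, steps=20_000):
    """Midpoint-rule approximation of the integral of exp(-xi^2 / (2 sigma^2))
    over [0, upper_mult * sigma]. The tail beyond that point is negligible."""
    upper = upper_mult * sigma
    h = upper / steps
    total = 0.0
    for i in range(steps):
        xi = (i + 0.5) * h  # midpoint of the i-th sub-interval
        total += math.exp(-(xi ** 2) / (2.0 * sigma ** 2))
    return h * total


def expectation_bound(sigma):
    """Closed-form value sigma * sqrt(pi / 2) of the same integral."""
    return sigma * math.sqrt(math.pi / 2.0)
```

For any \(\sigma >0\) the two functions should agree up to numerical error, which is the equality used in the expectation bound above.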

Example 1

A univariate Gaussian random variable X with mean \(\mu\) and variance \(\sigma ^2\) satisfies \(X-\mu \in \mathcal {R}(\sigma ^2)\).

In the sequel, we shall be concerned with canonical stochastic processes \(\{X_s\}_{s \in S}\) indexed by a bounded set S, and their suprema \(Z:=\sup _{s \in S}X_s\). In many useful cases these suprema turn out to have a sub-Gaussian right tail.

Example 2

(Suprema of Gaussian processes) Given a bounded set \(S \subset \mathbb {R}^n\) let \(\{X_s\}_{s \in S}\) be the Gaussian process obtained by taking a standard normal vector \({g} \sim {\mathcal {N}}(0,I_n)\) and setting \(X_s: = \left\langle s,{g}\right\rangle\). The expectation of the supremum \(\mathfrak {G}(S):= \mathbb {E}[\sup _{s \in S}X_s]\) is referred to as the Gaussian width of S. The Borell-TIS inequality (Boucheron et al. 2013, Theorem 5.8) yields \(\sup _{s \in S} \{ X_s-\mathfrak {G}(S)\} \in \mathcal {R}( \sup _{s \in S}\sum _{i \in [n]}s_i^2)\).
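For a finite set S, the Gaussian width can be estimated by simple Monte Carlo. The sketch below is illustrative only: the function name, the number of draws and the seed are assumptions, and it covers only finite sets given as rows of an array.

```python
import numpy as np


def gaussian_width_mc(points, n_draws=20_000, seed=0):
    """Monte Carlo estimate of E[sup_{s in S} <s, g>] for a finite set S,
    where the rows of `points` are the elements of S and g ~ N(0, I_n)."""
    rng = np.random.default_rng(seed)
    points = np.asarray(points, dtype=float)
    g = rng.standard_normal((n_draws, points.shape[1]))
    # For each draw of g, take the largest inner product over the set.
    suprema = np.max(g @ points.T, axis=1)
    return float(np.mean(suprema))
```

For example, for \(S=\{e_1,-e_1\}\) the supremum equals \(\vert g_1\vert\), so the estimate should be close to \(\sqrt{2/\pi }\), while a singleton set has width 0.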

Example 3

(Suprema of Rademacher processes) Given a bounded set \(S \subset \mathbb {R}^n\), let \(\{X_s\}_{s \in S}\) be the Rademacher process obtained by letting \({\gamma }=(\gamma _i)_{i \in [n]}\) be an i.i.d. random sequence with \(\gamma _i\) chosen uniformly from \(\{-1,+1\}\), and \(X_s:= \left\langle s,{\gamma }\right\rangle\). The expectation of the supremum \(\mathfrak {R}(S):= \mathbb {E}[\sup _{s \in S}X_s]\) is referred to as the Rademacher width of S. By McDiarmid’s inequality, \(\sup _{s \in S}X_s-\mathfrak {R}(S) \in \mathcal {R}( {\sum _{i \in [n]}\sup _{s \in S}s_i^2})\). We also have \(\sup _{s \in S} \{X_s-\mathfrak {R}(S)\} \in \mathcal {R}( 8 \cdot \sup _{s \in S}\sum _{i \in [n]}s_i^2)\) (Wainwright 2019, Example 3.5). Whilst the latter bound is sometimes tighter, we will rely primarily on the former bound in what follows.
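For a finite set in low dimension, the Rademacher width and the McDiarmid-type tail parameter can be computed exactly by enumerating all sign vectors. This is a small sketch under that assumption (the cost is \(2^n\), so it is only meant for tiny n); the function names are illustrative.

```python
import itertools

import numpy as np


def rademacher_width_exact(points):
    """Exact E[sup_{s in S} <s, gamma>] for a finite set S (rows of `points`),
    obtained by averaging over all 2^n sign vectors gamma."""
    points = np.asarray(points, dtype=float)
    n = points.shape[1]
    signs = np.array(list(itertools.product((-1.0, 1.0), repeat=n)))
    return float(np.mean(np.max(signs @ points.T, axis=1)))


def mcdiarmid_parameter(points):
    """The quantity sum_i sup_{s in S} s_i^2 appearing in the first tail bound."""
    points = np.asarray(points, dtype=float)
    return float(np.sum(np.max(points ** 2, axis=0)))
```

For \(S=\{(1,1),(-1,-1)\}\) the supremum is \(\vert \gamma _1+\gamma _2\vert\), which takes the values 2, 0, 0, 2, so the width is 1 and the tail parameter is 2.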

2.1 Empirical processes over heterogeneous sets

Here we give a general result that will allow us to bound the complexity of certain subsets of a convex union. Consider the canonical stochastic process whose index set is the convex union of interest. The next lemma bounds the supremum and its expectation for the resulting mixture process, subject to constraints, by showing that this supremum has a sub-Gaussian right tail.

Lemma 1

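(Supremum of mixture process) Suppose that for each \(j \in [m]\) we have a real-valued stochastic process \(\{X^j_s\}_{s \in {\mathbb {S}}_j}\) with supremum \(Z_j:=\sup _{s \in {\mathbb {S}}_j}X^j_s\), and that for each \(j \in [m]\) there exist \(\mu _j, \sigma _j \in (0,\infty )\) with \(Z_j - \mu _j \in \mathcal {R}(\sigma _j^2)\). Given \(\mu\), \(\sigma >0\) we consider the following random variable

\[ {\overline{Z}}_{\mu ,\sigma } := \sup \Big \{ \sum _{t \in [\tau ]} \alpha _t \cdot X^{j_t}_{s_t} \,:\, \sum _{t \in [\tau ]}\alpha _t \cdot \mu _{j_t} \le \mu ,\ \sum _{t \in [\tau ]}\alpha _t \cdot \sigma _{j_t} \le \sigma \Big \}, \]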

where the supremum runs over all \(\tau \in {\mathbb {N}}\), \((j_t)_{t \in [\tau ]} \in [m]^\tau\), \((s_t)_{t \in [\tau ]} \in \prod _{t\in [\tau ]} {\mathbb {S}}_{j_t}\) and \((\alpha _t)_{t \in [\tau ]} \in (0,\infty )^\tau\) satisfying the specified constraints. It follows that \({\overline{Z}}_{\mu ,\sigma } -(\mu +\sigma \sqrt{2\log m}) \in \mathcal {R}(\sigma ^2)\) and \(\mathbb {E}\left( {\overline{Z}}_{\mu ,\sigma }\right) \le \mu +\sigma \cdot (\sqrt{2\log m}+\sqrt{\pi /2})\).

Proof

For each \(\delta \in (0,1)\) we define \(\mathcal {E}_{\delta }:=\bigcap _{j \in [m]}\left\{ Z_j \le \mu _j+\sigma _j \sqrt{2\log \left( {m}/{\delta }\right) } \right\}\). By \(Z_j - \mu _j \in \mathcal {R}(\sigma _j^2)\), combined with the union bound, we have \(\mathbb {P}\left( \mathcal {E}_{\delta }\right) \ge 1-\delta\). Next we observe that, on the event \(\mathcal {E}_{\delta }\), for any \(\tau \in {\mathbb {N}}\), \((\alpha _t)_{t \in [\tau ]} \in (0,\infty )^\tau\), \((j_t)_{t \in [\tau ]} \in [m]^\tau\) and \((s_t)_{t \in [\tau ]} \in \prod _{t\in [\tau ]} {\mathbb {S}}_{j_t}\) satisfying \(\sum _{t \in [\tau ]}\alpha _t \cdot \mu _{j_t} \le \mu\) and \(\sum _{t \in [\tau ]}\alpha _t \cdot \sigma _{j_t} \le \sigma\) we have,

\[ \sum _{t \in [\tau ]}\alpha _t \cdot X^{j_t}_{s_t} \le \sum _{t \in [\tau ]}\alpha _t \cdot Z_{j_t} \le \sum _{t \in [\tau ]}\alpha _t \cdot \left( \mu _{j_t}+\sigma _{j_t} \sqrt{2\log \left( {m}/{\delta }\right) }\right) \le \mu +\sigma \sqrt{2\log \left( {m}/{\delta }\right) } \le \mu +\sigma \sqrt{2\log m}+ \sigma \sqrt{2\log (1/\delta )}. \]

Hence, on the event \(\mathcal {E}_{\delta }\), we have \({\overline{Z}}_{\mu ,\sigma }\le \mu +\sigma \sqrt{2\log m}+ \sigma \sqrt{2\log (1/\delta )}\). Since \(\mathbb {P}\left( \mathcal {E}_{\delta }\right) \ge 1-\delta\) for each \(\delta \in (0,1)\) we deduce that \({\overline{Z}}_{\mu ,\sigma } -(\mu +\sigma \sqrt{2\log m}) \in \mathcal {R}(\sigma ^2)\). The expectation bound follows by integrating the tail inequality. \(\square\)
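To see where the \(\sqrt{2\log m}\) term comes from, consider a toy case (an assumption made only for this illustration) in which the standardised suprema \(W_j:=(Z_j-\mu _j)/\sigma _j\) are independent standard Gaussians. The argument in the proof gives \({\overline{Z}}_{\mu ,\sigma }\le \mu +\sigma \max _{j\in [m]}\max \{W_j,0\}\), and the sketch below compares a Monte Carlo estimate of the expectation of this envelope with the bound of Lemma 1. The function names are hypothetical.

```python
import math

import numpy as np


def lemma1_expectation_bound(mu, sigma, m):
    """Right-hand side mu + sigma * (sqrt(2 log m) + sqrt(pi / 2)) of Lemma 1."""
    return mu + sigma * (math.sqrt(2.0 * math.log(m)) + math.sqrt(math.pi / 2.0))


def simulated_envelope(mu, sigma, m, n_draws=20_000, seed=0):
    """Monte Carlo estimate of E[mu + sigma * max_j max(W_j, 0)] with
    W_1, ..., W_m i.i.d. standard normal (toy assumption, see lead-in)."""
    rng = np.random.default_rng(seed)
    w = rng.standard_normal((n_draws, m))
    worst = np.maximum(w.max(axis=1), 0.0)  # positive part of the largest W_j
    return float(mu + sigma * worst.mean())
```

In this toy setting the simulated value should always fall below the bound, and the gap indicates how conservative the union-bound argument is for independent Gaussian suprema.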

2.2 Element-wise complexity-restricted subsets

We define a notion of complexity for elements of a convex union as follows.

Definition 2

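(Gaussian widths for elements of the convex hull of a union) Given \({\mathbb {S}}=({\mathbb {S}}_j)_{j \in [m]}\) consisting of bounded sets \({\mathbb {S}}_j\subset \mathbb {R}^d\) and \(s \in \overline{{\mathbb {S}}}\), we define

\[ \mathfrak {G}_{{\mathbb {S}}}(s) := \inf \Big \{ \sum _{t \in [\tau ]} \alpha _t \cdot \mathfrak {G}({\mathbb {S}}_{j_t}) \,:\, s = \sum _{t \in [\tau ]} \alpha _t \cdot s_t \Big \}, \]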

where the infimum is over all \(\tau \in {\mathbb {N}}\), \((j_t)_{t \in [\tau ]} \in [m]^\tau\), \((s_t)_{t \in [\tau ]} \in \prod _{t\in [\tau ]} {\mathbb {S}}_{j_t}\) and \((\alpha _t)_{t \in [\tau ]} \in \Delta _\tau\). Similarly, we define \(\mathfrak {R}_{{\mathbb {S}}}(s)\) with \(\mathfrak {R}({\mathbb {S}}_{j_t})\) in place of \(\mathfrak {G}({\mathbb {S}}_{j_t})\).

This will be useful in obtaining high probability bounds that hold simultaneously for all elements of the convex union, yet provide individual guarantees for each – a key idea in our approach. Note that an element \(s\in \overline{{\mathbb {S}}}\) may have multiple representations as a convex combination; the infimum breaks ties in favour of the most parsimonious one. The convex coefficients \((\alpha _t)_{t\in [\tau ]}\) that realise the infimum in this definition depend on the individual element s. Note also that the complexity \(\mathfrak {G}_{{\mathbb {S}}}(s)\) of an element \(s \in \overline{{\mathbb {S}}}\) depends crucially upon the sequence of sets \({\mathbb {S}}\) with respect to which the complexity is quantified. Indeed, if the sequence of sets contains \(\{s\}\), we would have \(\mathfrak {G}_{{\mathbb {S}}}(s)=0\).
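Since the element-wise complexity is an infimum over representations, any single convex representation of s yields an upper bound on \(\mathfrak {G}_{{\mathbb {S}}}(s)\). The following minimal sketch evaluates that upper bound for one given representation; the function name and the example widths are hypothetical, and the widths of the component sets are assumed to be known or estimated separately.

```python
import numpy as np


def representation_complexity(alphas, set_indices, widths):
    """Weighted average sum_t alpha_t * width[j_t] for one convex representation.

    alphas      : convex weights (alpha_t), must lie on the simplex
    set_indices : index j_t (0-based) of the component set containing s_t
    widths      : widths[j] is the Gaussian (or Rademacher) width of set j
    The result upper-bounds the element-wise complexity of the represented point.
    """
    alphas = np.asarray(alphas, dtype=float)
    if np.any(alphas < 0) or not np.isclose(alphas.sum(), 1.0):
        raise ValueError("alphas must be non-negative and sum to 1")
    widths = np.asarray(widths, dtype=float)
    return float(np.dot(alphas, widths[np.asarray(set_indices)]))
```

For instance, a point that places weight 0.75 on a set of width 1 and weight 0.25 on a set of width 10 has complexity at most 3.25, well below the maximal width 10.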

The following result shows the utility of element-wise complexities.

Theorem 1

(Element-wise complexity bounds) Suppose we have a sequence \({\mathbb {S}}=({\mathbb {S}}_j)_{j \in [m]}\) consisting of sets \({\mathbb {S}}_j\subset \mathbb {R}^d\) with \(\max _{j\in [m]}\sup _{s\in {\mathbb {S}}_j}\Vert s\Vert _2 \le b\) for some \(b>0\). Then, for all \(\kappa \in (0,\infty )\), we have

Moreover, if \({\mathbb {S}}_j \subseteq [-r,r]^n\) for all \(j\in [m]\), then for all \(\kappa >0\),

Eq. (3) also holds with \(\mathfrak {G}\) in place of \(\mathfrak {R}\), using (2), since \(\sup _{s \in [-r,r]^n}\Vert s\Vert _2 \le \sqrt{n}r\).

Proof

Both bounds are instances of Lemma 1, using the sub-Gaussian right tail properties described in Examples 2 and 3. Take \(g\sim {{\mathcal {N}}}(0,I_n)\); for each \(t\in [\tau ]\) and \(j_t\in [m]\), take the canonical process \(\{X_{s_t}^{j_t}\}_{s_t\in {\mathbb {S}}_{j_t}}\) with \(X_{s_t}^{j_t}:=\langle s_t,g \rangle\). By Example 2, \(\sup _{s_t\in {\mathbb {S}}_{j_t}}X_{s_t}^{j_t}\), centred at \(\mathfrak {G}({\mathbb {S}}_{j_t})\), has a sub-Gaussian right tail with parameter \(\sigma _{j_t}= \sup _{s\in {\mathbb {S}}_{j_t}}\Vert s\Vert _2\). By Definition 2 and Lemma 1 with \(\sigma :=b\), \(\mu _{j_t}:=\mathfrak {G}({\mathbb {S}}_{j_t})\), \(\mu :=\kappa\), (2) follows. Now, let \(\gamma\) be a sequence of n i.i.d. Rademacher variables. For each \(t\in [\tau ]\), \(j_t\in [m]\) take the canonical process \(\{X_{s_t}^{j_t}\}_{s_t\in {\mathbb {S}}_{j_t}}\) with \(X_{s_t}^{j_t}:=\langle \gamma ,s_t \rangle\). By Example 3, \(\sup _{s_t\in {\mathbb {S}}_{j_t}}X_{s_t}^{j_t}\), centred at \(\mathfrak {R}({\mathbb {S}}_{j_t})\), has a sub-Gaussian right tail with parameter \(\sigma _{j_t}^2=\sum _{i \in [n]}\sup _{s \in {\mathbb {S}}_{j_t}} s_i^2 \le n \cdot r^2\). Hence, Definition 2 combined with Lemma 1 with \(\sigma :=r\sqrt{n}, \mu _{j_t}:=\mathfrak {R}({\mathbb {S}}_{j_t}), \mu :=\kappa\), gives (3). \(\square\)

Furthermore, using element-wise complexities we can cover the convex union with sets of increasing complexity, allowing us to deal with each in turn.

Lemma 2

(Covering the convex union) Take \(\epsilon >0\), \(L:= \max _{j \in [m]}\lceil \mathfrak {G}({\mathbb {S}}_j)/\epsilon \rceil\) and let \(T_l:=\{s\in \overline{{\mathbb {S}}}: (l-1) \cdot \epsilon \le \mathfrak {G}_{{\mathbb {S}}}(s) \le l\cdot \epsilon \}\) for \(l\in [L]\). Then, \(\overline{{\mathbb {S}}}\subseteq \bigcup _{l=1}^LT_l\).

A similar result holds with \(\mathfrak {R}\) in place of \(\mathfrak {G}\).

Proof

By Definition 2, for \(s \in {\overline{{\mathbb {S}}}}\), \(0 \le \mathfrak {G}_{{\mathbb {S}}}(s) \le \max _{j \in [m]}\mathfrak {G}({\mathbb {S}}_j)\le L \cdot \epsilon\), so \(s \in \bigcup _{l=1}^LT_l\). \(\square\)
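The covering of Lemma 2 amounts to bucketing elements by their element-wise complexity at resolution \(\epsilon\). A minimal sketch of this bookkeeping follows; the function names are illustrative, and the complexities and widths are assumed to be supplied by the caller.

```python
import math


def num_shells(widths, eps):
    """L = max_j ceil(width_j / eps), the number of shells T_1, ..., T_L."""
    if eps <= 0:
        raise ValueError("eps must be positive")
    return max(math.ceil(w / eps) for w in widths)


def shell_index(complexity, eps):
    """Smallest l >= 1 with (l - 1) * eps <= complexity <= l * eps.

    Boundary values belong to two adjacent shells; the lower index is returned.
    """
    if complexity < 0 or eps <= 0:
        raise ValueError("complexity must be non-negative and eps positive")
    return max(1, math.ceil(complexity / eps))
```

Every element whose complexity is at most the maximal component width receives an index between 1 and L, which is the content of the lemma.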

The next sections rely on Theorem 1 combined with the covering approach of Lemma 2.

3 Dimension reduction for heterogeneous sets

Here we consider random projection (RP) based dimensionality reduction of sets of the form (1) in some high dimensional Euclidean ambient space, with component regions each having their own predominantly simple structure together with various higher complexity noise components. This is a realistic scenario in real world data (Wright and Ma 2022). Dimensionality reduction is often desirable before a time-consuming processing of the data, and RP is a convenient approach, oblivious to the data, with useful distance-preservation guarantees. However, there is a gap in understanding what makes RP preserve structure more accurately. We apply our theory to this problem.

Recall that a \(k\times d\) random matrix R is said to be isotropic if every row \(R_{i:}\) of R satisfies \(\mathbb {E}\left[ R_{i:}^\top R_{i:}\right] =I_d\). The sub-Gaussian norm \(\Vert \cdot \Vert _{\psi _2}\) of a random matrix R is defined as

We shall make use of the following result.

Lemma 3

(Liaw et al. 2017) There exists a universal constant \(C_{\mathfrak {L}}>0\) such that for any isotropic \(k\times d\) random matrix R, any set \(S \subset \mathbb {R}^d\) and \(\delta \in (0,1)\), the following holds with probability at least \(1-\delta\),

The main result of this section is the following simultaneous bound for norm preservation.

Theorem 2

(Norm preservation in the convex union) Suppose we have an isotropic \(k\times d\) random matrix R and a sequence of sets \({\mathbb {S}}=({\mathbb {S}}_j)_{j \in [m]}\) with \({\mathbb {S}}_j \subseteq \mathbb {R}^d\) and let \(\overline{{\mathbb {S}}}\) denote the convex union (1). Suppose further that \(\max _{j\in [m]}\sup _{s\in {\mathbb {S}}_j}\Vert s\Vert _2 \le b\) for some \(b>0\). Given any \(\delta \in (0,1)\), with probability at least \(1-\delta\), the following holds simultaneously for all points \(s\in \overline{{\mathbb {S}}}\),

The two dominant terms are in a tradeoff in the above bound; these are the element-wise complexity \(\mathfrak {G}_{{\mathbb {S}}}(s)\) (cf. Definition 2), and a logarithmic function of the number of component sets m in the union. Indeed, if the union consists of many low complexity sets, then the latter quantity will increase, while if it consists of fewer high complexity sets then the former will increase.

Proof of Theorem 2

Let \(\epsilon >0\) (to be chosen later), and \(L=\max _{j \in [m]}\lceil \mathfrak {G}({\mathbb {S}}_j)/\epsilon \rceil\) and define sets \(T_l:=\{s\in \overline{{\mathbb {S}}}: (l-1)\cdot \epsilon \le \mathfrak {G}_{{\mathbb {S}}}(s) \le l\cdot \epsilon \}\), for \(l \in [L]\), as in Lemma 2, so \(\overline{{\mathbb {S}}}\subseteq \bigcup _{l=1}^L T_l\). By the first part of Theorem 1, for each \(l\in [L]\) we have

We apply Lemma 3 to each \(T_l\) and take a union bound, so the following holds with probability at least \(1-\delta\) for all \(l\in [L]\) and \(s\in T_l\),

Finally, we take \(\epsilon =b\), note \(1< \sqrt{\pi /2}\) and use \(\sqrt{x}+\sqrt{y}\le \sqrt{2(x+y)}\) twice. \(\square\)

The \(\log (m)\) term in Theorem 2 is the price to pay for a bound which holds simultaneously over all convex combinations. Let us compare the obtained bound with the alternative of applying Liaw et al. (2017) directly to the convex union, which would give us

where the latter bound follows from (2). Crucially, in Theorem 2 the maximal complexity \(\max _{j \in [m]}\mathfrak {G}({\mathbb {S}}_j)\) only appears under a log in our bound. By contrast, the above bound scales linearly with this quantity.

Figure 1 exemplifies the tightening of our bound in low complexity regions of the data support in comparison with the previous uniform bound of Liaw et al. (2017).

Fig. 1 Example comparison of bounds on norm preservation in a union of three linear subspaces of dimensions 5, 150 and 400 in the ambient space \(\mathbb {R}^{500}\), using Gaussian RP. With probability at least 0.95, low distortion guarantees hold simultaneously across the union, and the guarantee is tighter in lower complexity subspaces at the expense of a negligible increase in the highest complexity subspace. In contrast, the previous bound (Liaw et al. 2017) gives the same guarantee everywhere in the union
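The qualitative effect can also be observed empirically. The sketch below does not reproduce the bounds or the figure; it computes the actual worst-case discrepancy \(\sup _{s}\vert \sqrt{k}\Vert s\Vert _2-\Vert Rs\Vert _2\vert\) over unit vectors of a random subspace, via the extreme singular values of R restricted to that subspace. The projection dimension k = 100, the seed and all function names are assumptions made for illustration.

```python
import numpy as np


def random_subspace_basis(d, p, rng):
    """Orthonormal basis (d x p) of a random p-dimensional subspace of R^d."""
    q, _ = np.linalg.qr(rng.standard_normal((d, p)))
    return q


def worst_case_distortion(R, basis):
    """sup over unit vectors s in span(basis) of |sqrt(k) - ||R s||_2|."""
    k, p = R.shape[0], basis.shape[1]
    sv = np.linalg.svd(R @ basis, compute_uv=False)
    s_max = sv[0]
    # If p > k, R restricted to the subspace has a nontrivial kernel,
    # so the smallest attainable value of ||R s||_2 is 0.
    s_min = sv[-1] if p <= k else 0.0
    return float(max(abs(s_max - np.sqrt(k)), abs(np.sqrt(k) - s_min)))


def compare_subspaces(d=500, k=100, dims=(5, 150, 400), seed=0):
    """Worst-case distortion in random subspaces of the given dimensions."""
    rng = np.random.default_rng(seed)
    R = rng.standard_normal((k, d))  # Gaussian RP; rows are isotropic
    return {p: worst_case_distortion(R, random_subspace_basis(d, p, rng))
            for p in dims}
```

Calling `compare_subspaces()` returns one distortion value per subspace dimension; under these assumptions the low dimensional subspace is preserved far more accurately than the high dimensional ones, which is the behaviour a complexity-adaptive bound is meant to capture.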

4 Learning in heterogeneous function classes

In this section we apply the second part of Theorem 1 to heterogeneous function classes. Let \(\mathcal {X}\) be the instance space (a measurable space). Throughout, we denote by \(\mathcal {M}(\mathcal {X},{{\mathcal {V}}})\) the set of (measurable) functions with domain \(\mathcal {X}\) and co-domain \({\mathcal V}\). First, let us recall some classic complexity measures for function classes. Given a class \(\mathcal {H}\subseteq \mathcal {M}(\mathcal {X},\mathbb {R})\), and a sequence of points \(\varvec{x}=(x_i)_{i\in [n]} \in \mathcal {X}^n\), the empirical Gaussian width \(\hat{\mathfrak {G}}_n\left( \mathcal {H},\varvec{x}\right)\) and empirical Rademacher width \(\hat{\mathfrak {R}}_n\left( \mathcal {H},\varvec{x}\right)\) are defined as

where g and \({\gamma }\) are n-dimensional standard Gaussian and Rademacher random variables respectively. The uniform (worst-case) Gaussian width \(\mathfrak {G}_{n}^{*}\left( \mathcal {H}\right)\) and uniform Rademacher width \(\mathfrak {R}_{n}^{*}\left( \mathcal {H}\right)\) are defined as

The uniform complexities are useful in obtaining faster rates than \(\mathcal {O}(n^{-1/2})\), see Theorem 4.

Finally, given a distribution \(P_X\) on \(\mathcal {X}\), and \(\mathcal {H}\subseteq \mathcal {M}(\mathcal {X},\mathbb {R})\), the Gaussian width \(\mathfrak {G}_{n}\left( \mathcal {H},P_X\right)\) and Rademacher width \(\mathfrak {R}_{n}\left( \mathcal {H},P_X\right)\) are

where the expectation is taken over a random sample \(\varvec{X}=(X_1,\cdots ,X_n)\), consisting of n independent random variables \(X_i\) with distribution \(P_X\).

We can now define our element-wise complexities. Suppose we have a sequence \({\varvec{\mathcal {H}}}=(\mathcal {H}_j)_{j \in [m]}\) of function classes \(\mathcal {H}_j \subseteq \mathcal {M}(\mathcal {X},\mathbb {R})\) and let \(\overline{{\varvec{\mathcal {H}}}}:=\text {conv}(\bigcup _{j\in [m]}\mathcal {H}_j)\) be the convex union. Given a function \(f \in \overline{{\varvec{\mathcal {H}}}}\),

where each infimum runs over all \(\tau \in {\mathbb {N}}\), \((j_t)_{t \in [\tau ]} \in [m]^\tau\), all \((h_t)_{t \in [\tau ]} \in \prod _{t\in [\tau ]} \mathcal {H}_{j_t}\) and \((\alpha _t)_{t \in [\tau ]} \in \Delta _\tau\). We can also make corresponding definitions for \(\hat{\mathfrak {G}}_{{\varvec{\mathcal {H}}},n}(f,\varvec{x})\), \(\mathfrak {G}_{{\varvec{\mathcal {H}}},n}(f,P_X)\) and \(\mathfrak {G}_{{\varvec{\mathcal {H}}},n}^{*}(f)\); the results that follow hold unchanged.

The following lemma extends Theorem 1 to these element-complexities.

Lemma 4

(Element-wise complexity bounds for function classes) Take \(n,m \in {\mathbb {N}}\) and \(\beta >0\). Given \(\varvec{x}=(x_i)_{i \in [n]}\in \mathcal {X}^n\),

Moreover, the bound (4) also holds with any one of \(\hat{\mathfrak {G}}_{{\varvec{\mathcal {H}}},n}\left( \cdot , \varvec{x}\right)\), \(\mathfrak {R}_{{\varvec{\mathcal {H}}},n}^{*}\left( \cdot \right)\), \(\mathfrak {G}_{{\varvec{\mathcal {H}}},n}^{*}\left( \cdot \right)\) in place of \(\hat{\mathfrak {R}}_{{\varvec{\mathcal {H}}},n}\left( \cdot , \varvec{x}\right)\). In addition, given any distribution \(P_X\) on \(\mathcal {X}\),

Moreover, the bound (5) also holds with \(\mathfrak {G}_{{\varvec{\mathcal {H}}},n}(\cdot ,P_X)\) in place of \(\mathfrak {R}_{{\varvec{\mathcal {H}}},n}(\cdot ,P_X)\).

The proof is given in the Appendix. The bound for empirical widths follows directly from Theorem 1. The bounds for the distribution-dependent widths are reduced to this case using concentration of the empirical widths around their expectations, and for the uniform complexities the reduction follows directly from their definition.

4.1 Learning with a Lipschitz loss

In this section we focus on the problem of supervised learning. Suppose we have a measurable input data space \(\mathcal {X}\) and an output space \(\mathcal {Y}\subseteq \mathbb {R}\). Suppose further that we have a tuple of random variables (X, Y), where X takes values in \(\mathcal {X}\), and Y takes values in \(\mathcal {Y}\), with joint distribution \(P\), and marginal \(P_X\) over X. The learning task is defined in terms of a loss function \(\mathcal {L}: \mathbb {R}\times \mathcal {Y}\rightarrow [0,B]\). The goal of the learner is to obtain a measurable mapping \(f:\mathcal {X}\rightarrow \mathbb {R}\) with low risk, \(\mathcal {E}_{\mathcal {L}}\left( f\right) \equiv \mathcal {E}_{\mathcal {L}}\left( f,P\right) := \mathbb {E}_{(X,Y) \sim P}\left[ \mathcal {L}(f(X),Y)\right]\). Whilst the distribution \(P\) is unknown, the learner does have access to a data set \(\mathcal {D}:=\{(X_i,Y_i)\}_{i \in [n]}\), where \((X_i,Y_i)\) are independent copies of (X, Y), and computes the empirical risk, \(\hat{\mathcal {E}}_{\mathcal {L}}\left( f\right) \equiv \hat{\mathcal {E}}_{\mathcal {L}}\left( f,\mathcal {D}\right) := \frac{1}{n}\sum _{i \in [n]}\mathcal {L}(f(X_i),Y_i)\). This setting includes both binary classification, where \(\mathcal {Y}= \{-1,+1\}\) and regression where \(\mathcal {Y}=\mathbb {R}\).
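As a concrete instance of this setup, the sketch below computes the empirical risk of a predictor under the ramp loss, which is one example of a bounded Lipschitz loss (here with B = 1 and Lipschitz constant 1 in its first argument). The choice of loss and the function names are illustrative assumptions; the paper does not prescribe a particular loss.

```python
import numpy as np


def ramp_loss(u, y):
    """Ramp loss clip(1 - y * u, 0, 1): takes values in [0, 1], 1-Lipschitz in u."""
    return np.clip(1.0 - y * u, 0.0, 1.0)


def empirical_risk(f, X, Y, loss=ramp_loss):
    """Average loss (1/n) * sum_i loss(f(X_i), Y_i) over the data set."""
    predictions = f(np.asarray(X, dtype=float))
    return float(np.mean(loss(predictions, np.asarray(Y, dtype=float))))
```

For example, with the predictor `f = lambda X: X[:, 0]`, inputs 2, -2, 0.5 and labels +1, +1, -1, the three losses are 0, 1 and 1, giving an empirical risk of 2/3.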

The main result of this section is the following simultaneous upper bound for weighted heterogeneous ensembles, given in terms of our element-wise Rademacher width of individual predictors.

Theorem 3

Suppose we have a bounded, \(\Lambda\)-Lipschitz loss function \(\mathcal {L}:\mathbb {R}\times \mathcal {Y}\rightarrow [0,B]\) along with a sequence of function classes \({\varvec{\mathcal {H}}}=(\mathcal {H}_j)_{j \in [m]}\) with each \(\mathcal {H}_j \subseteq \mathcal {M}(\mathcal {X},[-\beta ,\beta ])\). Given \(n \in {\mathbb {N}}\), \(\delta \in (0,1)\), with probability at least \(1-\delta\), both of the following hold for all \(f \in \overline{{\varvec{\mathcal {H}}}}\),

Proof

For each \(l \in [n]\), let \(\mathcal {F}_l:=\left\{ f \in \overline{{\varvec{\mathcal {H}}}}: (l-1)\cdot \epsilon \le \mathfrak {R}_{{\varvec{\mathcal {H}}},n}\left( f,P_X\right) \le l \cdot \epsilon \right\}\) where \(\epsilon :=B/(2\Lambda n)\). By Talagrand’s contraction lemma (Mohri et al. 2012, Lemma 5.1), we have \(\hat{\mathfrak {R}}_n\left( \mathcal {L}\circ \mathcal {H},\mathcal {D}\right) \le \Lambda \cdot \hat{\mathfrak {R}}_n\left( \mathcal {H},\varvec{X}\right)\). Moreover, by Lemma 4 for each \(l \in [n]\),

Thus, by the classic Rademacher bound (Mohri et al. 2012, Theorem 3.3) combined with a union bound, the following holds with probability at least \(1-\delta\) for all \(l \in [n]\) and \(f\in \mathcal {F}_l\),

and \(\sqrt{{\log (n/\delta )}/(2n)}+{1}/{n}\le \sqrt{{2\log (en/\delta )}/{n}}\) for all \(n\ge 3\). This proves the first bound in Theorem 3 for all \(f \in \bigcup _{l=1}^n\mathcal {F}_l\) and \(n \ge 3\). On the other hand, if \(f \in \overline{{\varvec{\mathcal {H}}}} \backslash \bigcup _{l=1}^n\mathcal {F}_l\) or \(n \le 2\) then \(\max \{2 \Lambda \cdot \mathfrak {R}_{{\varvec{\mathcal {H}}},n}\left( f,P_X\right) , B\sqrt{{2\log (en/\delta )}/{n}}\} \ge B\), in which case the bound in Theorem 3 follows from \(\sup _{(u,y)\in \mathbb {R}\times \mathcal {Y}}\mathcal {L}(u,y) \le B\), which completes the proof of the first bound in Theorem 3. The second bound may be proved by a similar argument exploiting (4). \(\square\)

4.2 Learning with a self-bounding Lipschitz loss

To further demonstrate the generality of our theory, here we apply Theorem 4 to multi-output learning, and show how to obtain a heterogeneous ensemble with good generalisation as well as favourable rates.

We begin with the problem-specific preliminaries. The main result of this section is Theorem 5.

The label space is \(\mathcal {Y}\subseteq \{0,1\}^{Q}\), where \(Q\), the number of classes, can be very large in applications, but the number of simultaneous positive labels for an instance is typically much smaller, resulting in q-sparse binary vectors \(\mathbb {Y}(q):= \{(y_j)_{j \in [Q]} \in \{0,1\}^{Q}:\sum _{j \in [Q]}y_j \le q\}\), where \(q \le Q\). The following definition from Reeve and Kabán (2020) was shown to explain favourable rates for learning multi-output problems, ranging from slow rate \(n^{-1/2}\), in the case of general Lipschitz losses, to fast rates \(n^{-1}\).

Definition 3

(Self-bounding Lipschitz condition) A loss function \(\mathcal {L}: \mathbb {R}^{Q}\times \mathcal {Y}\rightarrow \mathbb {R}\) is said to be \((\lambda ,\theta )\)-self-bounding Lipschitz for \(\lambda >0,\theta \in [0,1/2]\) if for all \(y \in \mathcal {Y}\) and \(u,u' \in \mathbb {R}^{Q}\), \(\vert \mathcal {L}(u,y)-\mathcal {L}(u',y)\vert \le \lambda \cdot { \max \{ \mathcal {L}(u,y),\mathcal {L}(u',y)\}^{\theta }} \cdot \left\| u-u'\right\| _{\infty }\).

A nice example associated with fast rates is the pick-all-labels loss (Menon et al. 2019), which generalises the multinomial logistic loss to multi-label problems.

Example 4

(Pick-all-labels) Given \(\mathcal {Y}= \mathbb {Y}(q)\), the pick-all-labels loss \(\mathcal {L}:\mathbb {R}^{Q}\times \mathcal {Y}\rightarrow [0,\infty )\) is defined by \(\mathcal {L}(u,y):= \sum _{l \in [Q]} y_l \log \left( \sum _{j \in [Q]} \exp (u_j-u_l)\right)\), where \(u = (u_j)_{j \in [Q]} \in \mathbb {R}^{Q}\) and \(y = (y_j)_{j \in [Q]} \in \mathcal {Y}\). As shown in Reeve and Kabán (2020), \(\mathcal {L}\) is \((\lambda ,\theta )\)-self-bounding Lipschitz with \(\lambda = 2 \sqrt{q}\) and \(\theta = 1/2\).
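The pick-all-labels loss can be evaluated stably by noting that \(\log \sum _{j}\exp (u_j-u_l)=\log \sum _{j}\exp (u_j)-u_l\). The following is a minimal sketch for a single score vector and label vector; the function name is illustrative and the max-shift used for numerical stability is an implementation choice, not part of the definition.

```python
import numpy as np


def pick_all_labels_loss(u, y):
    """sum_l y_l * log(sum_j exp(u_j - u_l)) for scores u in R^Q, labels y in {0,1}^Q."""
    u = np.asarray(u, dtype=float)
    y = np.asarray(y, dtype=float)
    shift = u.max()
    # log-sum-exp of the scores, computed with a max-shift to avoid overflow
    log_sum_exp = shift + np.log(np.sum(np.exp(u - shift)))
    return float(np.sum(y * (log_sum_exp - u)))
```

With all scores equal to zero and Q = 4, each positive label contributes \(\log 4\), so one positive label gives \(\log 4\) and two positive labels give \(2\log 4\). With a single positive label the expression reduces to the multinomial logistic loss.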

To capture the complexity of a multi-output function class \(\mathcal {H}\subseteq \mathcal {M}(\mathcal {X},\mathbb {R}^{Q})\), its projected class is defined as \(\Pi \circ \mathcal {H}:= \{ \Pi \circ f: f \in \mathcal {H}\}\subseteq \mathcal {M}(\mathcal {X}\times [Q],\mathbb {R})\), where \(\Pi \circ f: \mathcal {X}\times [Q] \rightarrow \mathbb {R}\) is defined by \((\Pi \circ f) (x,\ell )= \pi _\ell (f(x))\), and \(\pi _\ell : \mathbb {R}^{Q}\rightarrow \mathbb {R}\) is the \(\ell\)-th coordinate projection.

We shall make use of the following optimistic-rate bound from Reeve and Kabán (2020).

Theorem 4

(Reeve and Kabán, 2020) Suppose we have a multi-output function class \(\mathcal {H}\subseteq \mathcal {M}(\mathcal {X},[-\beta ,\beta ]^Q)\) along with a \((\lambda ,\theta )\)-self-bounding Lipschitz loss \(\mathcal {L}:\mathbb {R}^Q \times \mathcal {Y}\rightarrow [0,B]\) for some \(\lambda >0,\theta \in [0,1/2]\). Given any \(\delta \in (0,1)\), with probability at least \(1-\delta\), the following bounds hold for all \(f \in \mathcal {H}\)

where K is a numerical constant, and \(\Gamma _{n,Q,\delta }^{\lambda ,\theta }(\mathcal {H}):=\)

With these preliminaries in place, we consider convex combinations of multi-output functions. Let us suppose \({\mathbf {\mathcal {H}}}:=(\mathcal {H}_j)_{j\in [m]}\) consists of multi-output function classes \(\mathcal {H}_j \subseteq \mathcal {M}(\mathcal {X},[-\beta ,\beta ]^Q)\). Note that \(\Pi\) is linear, so if \(f \in \text {conv}\left( \bigcup _{j \in [m]}\mathcal {H}_j\right)\) then we also have \(\Pi \circ f \in \text {conv}\left( \bigcup _{j \in [m]}\Pi \circ \mathcal {H}_j\right)\), and hence we can quantify the complexity of f through \(\mathfrak {R}^*_{\Pi \circ {\mathbf {\mathcal {H}}},nQ}(\Pi \circ f)\), where \(\Pi \circ {\mathbf {\mathcal {H}}}:=(\Pi \circ \mathcal {H}_j)_{j\in [m]}\), which leads to the following result.

Theorem 5

Consider a \((\lambda ,\theta )\)-self-bounding Lipschitz loss \(\mathcal {L}:\mathbb {R}^Q \times \mathcal {Y}\rightarrow [0,B]\) for some \(\lambda \in (0,\infty )\), \(\theta \in [0,1/2]\) and \(B\ \in [1,\infty )\), along with multi-output function classes \(\mathcal {H}_j \subseteq \mathcal {M}(\mathcal {X},[-\beta ,\beta ]^Q)\) for \(j \in [m]\) and \(\overline{{\varvec{\mathcal {H}}}}:=\text {conv}\left( \bigcup _{j \in [m]}\mathcal {H}_j\right)\). Given any \(\delta \in (0,1)\), with probability at least \(1-\delta\), for all \(f\in \overline{{\varvec{\mathcal {H}}}}\),

where K is a numerical constant and \(\Gamma _{n,Q,\delta }^{\lambda ,\theta }(f):=\)

\(\left( \lambda \left( {Q}^{\frac{1}{2}} \log ^{\frac{3}{2}} \left( e\beta nQ\right) \left( \mathfrak {R}^*_{\Pi \circ {\varvec{\mathcal {H}}},nQ}(\Pi \circ f) + \beta \sqrt{\frac{\log m}{nQ}}\right) + \frac{1}{\sqrt{n}}\right) \right) ^{\frac{1}{1-\theta }} +\frac{4B\log ({n}/\delta ) }{n}.\)

We note that often \(\mathfrak {R}^{*}_{nQ}(\Pi \circ \mathcal {F})=\tilde{\mathcal {O}}((nQ)^{-1/2})\), so the dependence on the number of classes Q is, up to the mild factor \(\log ^{\frac{3}{2(1-\theta )}}(Q)\), only through the self-bounding Lipschitz constant \(\lambda\). Hence in Example 4 there is no further dependence on Q, but only on q. Since \(\theta =1/2\), we also have fast rates for multi-label heterogeneous ensembles with very large numbers of labels, provided the individual label vectors are sufficiently sparse.
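
To make the remark on the dependence on Q concrete, the following sketch evaluates the element-wise quantity \(\Gamma _{n,Q,\delta }^{\lambda ,\theta }(f)\) from Theorem 5 as a plain function of its inputs. The argument `rad` stands for \(\mathfrak {R}^*_{\Pi \circ {\varvec{\mathcal {H}}},nQ}(\Pi \circ f)\), which is not computed here; setting it to \((nQ)^{-1/2}\) is an assumption matching the "often" case above, and all numerical values are illustrative.

```python
import math


def gamma_elementwise(rad, lam, theta, Q, beta, n, m, B, delta):
    """Evaluate Gamma(f) from Theorem 5 given the element-wise complexity `rad`."""
    width_term = (
        math.sqrt(Q)
        * math.log(math.e * beta * n * Q) ** 1.5
        * (rad + beta * math.sqrt(math.log(m) / (n * Q)))
    )
    main = (lam * (width_term + 1.0 / math.sqrt(n))) ** (1.0 / (1.0 - theta))
    return main + 4.0 * B * math.log(n / delta) / n


# Illustrative settings (assumptions): pick-all-labels loss with q = 3,
# so lam = 2 * sqrt(q) and theta = 1/2; B = 10 is an arbitrary loss bound.
settings = dict(lam=2.0 * math.sqrt(3), theta=0.5, beta=1.0, n=10_000, m=100,
                B=10.0, delta=0.05)


def gamma_for(Q):
    """Gamma(f) when the element-wise complexity scales as (n * Q) ** -0.5."""
    rad = (settings["n"] * Q) ** -0.5
    return gamma_elementwise(rad=rad, Q=Q, **settings)


# Growing Q by a factor of 1000 changes Gamma only through the logarithmic factor.
growth = gamma_for(10_000) / gamma_for(10)
```

Under these assumptions `growth` stays a small constant even though Q increases a thousandfold, which is the behaviour the remark describes.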

Proof

(Proof of Theorem 5) Take \(\epsilon >0, L\in {\mathbb {N}}\) (to be determined later), and for each \(l\in [L]\),

By Theorem 4 combined with the union bound, the following holds with probability at least \(1-\delta\) for all \(l\in [L]\) and \(f\in \mathcal {F}_l\),

Moreover, by Lemma 4, for each \(l \in [L]\) we have \(\mathfrak {R}^{*}_{\Pi \circ {\varvec{\mathcal {H}}},nQ}\left( \Pi \circ \mathcal {F}_l\right) \le l\cdot \epsilon + {\beta }\cdot \left( \sqrt{ \frac{2\log m}{nQ}}+\sqrt{\frac{\pi }{2nQ}}\right)\). Hence, for any \(l \in [L]\) and \(f \in \mathcal {F}_{l}\) we have \(\Gamma _{n,Q,\delta /L}^{\lambda ,\theta }(\mathcal {F}_l)\le\)

Moreover, since \(f \in \mathcal {F}_l\), we have \(l\cdot \epsilon \le \mathfrak {R}^{*}_{\Pi \circ {\varvec{\mathcal {H}}},nQ}(\Pi \circ f)+\epsilon\). Hence, choosing \(L=n\) and \(\epsilon =B/n\) yields the required bound when n is sufficiently large that \(\epsilon \le \epsilon ^{1-\theta }\). On the other hand, if \(\epsilon >1\), so \(n<B\), then the bound is immediate. \(\square\)

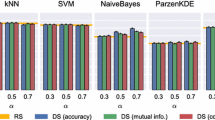

4.3 Algorithmic consequences and numerical experiments

We exemplify and assess the use of our generalisation bounds empirically by turning Theorems 3 and 5 into learning algorithms for binary and multi-label classification problems, by minimising the bounds. We implement these as regularised gradient boosting with random subspace and random projection based base learners. Such ensembles are heterogeneous, since each base class is defined on a different subspace of the ambient input space.

For concreteness and simplicity, we consider generalised linear model base learners. Denoting by \(\Theta :=\{a,b,v,w\}\) the parameters, a base learner has the form \(h(x, \Theta ) = a\tanh (x^\top w + v) + b\), where \(a,b,v\in \mathbb {R}\), and \(w\in \mathcal {X}\). For multi-label problems, \(w\in \mathcal {X}^Q\) and \(\tanh (\cdot )\) is computed component-wise. To ensure that h has bounded outputs, we constrain the magnitudes of a and b. We do not regularise the weight vectors w, as the random dimensionality reduction itself plays a regularising role. Thus, with k-dimensional inputs, a binary classification base class of this form has Rademacher width of order \((k/n)^{1/2}\), a multi-label base class has its \(\Gamma _{n,Q,\delta }^{\lambda ,\theta }\) of order \((\lambda k/n)^{\frac{1}{2(1-\theta )}}\), and neither the exponents nor n affect the minimisation. This translates into easy-to-compute individual penalties for each base learner. The pseudo-code of the resulting algorithm is given in Algorithm 1. Other base learners are of course possible, and their Rademacher width would then replace this penalty term. However, our goal is to assess, in principle, the ability of our bounds to turn into competitive learning algorithms.

We generated the dimensions \(k_t\), \(t\in [\tau ]\), of the base learners independently from a skewed distribution, by drawing values proportional to \(-\log (U)\) where \(U\sim \text {Uniform}(0,1)\), re-scaling these to lie between 1 and half of the rank of the data matrix, and rounding them to the closest integers. This favours simpler base models, both for efficiency and to avoid large penalty terms.
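
The construction described above can be sketched as follows. This is a hedged illustration rather than the authors' implementation (Algorithm 1 is not reproduced here): the min-max rescaling, the use of a random coordinate subset for the random subspace variant, the function names, and the numerical settings are all assumptions.

```python
import math
import random


def sample_subspace_dims(num_learners, data_rank, rng):
    """Draw skewed target dimensions k_t in {1, ..., data_rank // 2}."""
    k_max = max(1, data_rank // 2)
    # 1 - rng.random() lies in (0, 1], so the logarithm is always finite.
    raw = [-math.log(1.0 - rng.random()) for _ in range(num_learners)]
    lo, hi = min(raw), max(raw)
    if hi == lo:
        return [1] * num_learners
    # Assumed min-max rescaling to [1, k_max], then rounding to an integer.
    return [round(1 + (r - lo) / (hi - lo) * (k_max - 1)) for r in raw]


def make_base_learner(a, b, v, w, features):
    """h(x) = a * tanh(x_S^T w + v) + b on the coordinate subset `features`."""
    def h(x):
        activation = sum(w_i * x[i] for w_i, i in zip(w, features)) + v
        return a * math.tanh(activation) + b
    return h


def complexity_penalty(k, n):
    """Penalty of order (k / n) ** 0.5 for a base class on k-dimensional inputs."""
    return math.sqrt(k / n)


# Illustrative settings (assumptions): d = 400 features, n = 250 points, rank 200.
rng = random.Random(1)
d, n = 400, 250
dims = sample_subspace_dims(num_learners=1000, data_rank=200, rng=rng)
features = rng.sample(range(d), dims[0])
weights = [rng.gauss(0.0, 1.0) for _ in features]
h = make_base_learner(a=0.5, b=0.1, v=0.0, w=weights, features=features)
x = [rng.gauss(0.0, 1.0) for _ in range(d)]
penalties = [complexity_penalty(k, n) for k in dims]
```

Because \(\vert \tanh \vert \le 1\), the output of `h` is bounded by \(\vert a\vert +\vert b\vert\), which is why constraining a and b suffices to bound the base learner.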

In addition to our heterogeneous ensembles, we also tested regularised gradient boosting on the original data; this is a homogeneous ensemble that performs all computations in the original high dimensional space. For comparisons we chose the most closely related existing methods, as follows. For binary classification we compare with AdaBoost, LogitBoost, and with the top results obtained by Tian and Feng (2021) by the methods RASE\({}_1\)-LDA, RASE\({}_1\)-kNN, RP-ens-LDA, RP-ens-kNN, as well as the classic Random Forest. For multi-label classification, we compare with existing multi-label ensembles: COCOA (Zhang et al. 2015), ECC (Read et al. 2011), and fRAkEL (Kimura et al. 2016) provided by the MLC-Toolbox (Kimura et al. 2017).

We use data sets previously employed by our competitors: the largest two real-world data sets from Tian and Feng (2021), and 5 benchmark multi-label data sets from Zhang et al. (2015), Read et al. (2011) and Kimura et al. (2016). The data characteristics are given in Tables 1 and 2. We standardised all data sets to zero mean and unit variance. In binary problems we tested different training set sizes, following Tian and Feng (2021), leaving the rest of the data for testing. In multi-label problems we used 80% of the data for training and 20% for testing. We did not do any feature selection, to avoid external effects in assessing the informativeness of our bounds, whereas the RASE methods do so and hence might have some advantage in comparisons. In particular, the RASE algorithms use 200 evenly weighted base learners, each selected from 500 trained candidates while also collecting information for feature selection (a total of 100,000 trained base learners), while we just train 1000 base learners in gradient boosting fashion. We set \(\eta\) by 5-fold cross-validation in \(\{ 10^{-7}, 10^{-5},10^{-3},10^{-1},0\}\cdot n^{-1/2}\).
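
The selection of \(\eta\) can be sketched as a plain grid search. The code below is an assumption-laden outline: `validation_error` is a hypothetical callable supplied by the user (it would train the ensemble on the training indices with the given \(\eta\) and return the error on the validation indices), and the shuffled fold assignment is one of several reasonable choices.

```python
import math
import random


def eta_grid(n):
    """Candidate values {1e-7, 1e-5, 1e-3, 1e-1, 0} * n ** -0.5."""
    return [c / math.sqrt(n) for c in (1e-7, 1e-5, 1e-3, 1e-1, 0.0)]


def fold_indices(n, rng, folds=5):
    """Split a shuffled copy of range(n) into `folds` nearly equal parts."""
    order = list(range(n))
    rng.shuffle(order)
    return [order[i::folds] for i in range(folds)]


def select_eta(n, validation_error, rng, folds=5):
    """Return the grid value with the smallest mean validation error."""
    parts = fold_indices(n, rng, folds)
    best_eta, best_error = None, math.inf
    for eta in eta_grid(n):
        errors = []
        for i, val_idx in enumerate(parts):
            train_idx = [j for k, part in enumerate(parts) if k != i for j in part]
            errors.append(validation_error(train_idx, val_idx, eta))
        mean_error = sum(errors) / folds
        if mean_error < best_error:
            best_eta, best_error = eta, mean_error
    return best_eta


# Toy stand-in for a real training routine (hypothetical): the error is
# smallest when eta is close to 1e-4, so that grid value should be chosen.
chosen = select_eta(100, lambda train, val, eta: abs(eta - 1e-4), random.Random(0))
```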

The misclassification rates obtained on the binary problems are summarised in Table 3 with both exponential and logistic loss functions. The shrinkage parameter was set to 0.1, which is a common choice in gradient boosting algorithms. The multi-label results are given in Table 4, with the pick-all-labels loss function – here the values represent the average area under the ROC curve (AUC) over the labels (higher is better). We present results with shrinkage \(\epsilon =0.1\) as well as without shrinkage (\(\epsilon =1\)); our heterogeneous ensembles appear more robust to the setting of this parameter than homogeneous gradient boosting, where shrinkage is known to have a role in preventing overfitting.
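
For reference, the multi-label evaluation measure (AUC averaged over labels) can be computed as in the sketch below. It uses the standard rank-based definition of AUC, with ties counted as one half; skipping labels for which the test set contains only one class is an assumption, since the text does not say how such labels were handled.

```python
def auc(scores, labels):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        return None  # undefined when only one class is present
    wins = sum((p > n) + 0.5 * (p == n) for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


def average_auc_over_labels(score_rows, label_rows):
    """Mean per-label AUC; rows are instances and columns are labels."""
    per_label = [
        auc(scores, labels)
        for scores, labels in zip(zip(*score_rows), zip(*label_rows))
    ]
    defined = [value for value in per_label if value is not None]
    return sum(defined) / len(defined)


# Toy example: the first label is ranked perfectly, the second in reverse.
toy_scores = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.7]]
toy_labels = [[1, 1], [1, 1], [0, 0]]
toy_result = average_auc_over_labels(toy_scores, toy_labels)
```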

From Tables 3 and 4 we see that our regularised heterogeneous ensembles (s reg = regularised random subspace gradient boosting; g reg = regularised random projection gradient boosting) consistently display good performance, and even the best performance in several cases. The regularised high-dimensional gradient boosting (HD reg) is only sometimes better, and only marginally, despite performing the computations to train all base learners in the full dimensional input space. The logistic loss worked better than the exponential loss on these data, likely because of noise. Interestingly, the random subspace setting of our ensembles tended to work better than random projections, which is good news both computationally and from interpretability considerations. We also see that un-regularised models (AdaBoost and LogitBoost) sometimes display erratic behaviour, especially in the small sample regime. RASE performs very well in general, as its in-built feature selection also has a regularisation effect. One could mimic this with our boosting-type random subspace ensemble, especially when interpretability is at a premium, although we have not pursued this here. Based on these results, we conclude that our heterogeneous random subspace ensemble is a safe-bet competitive approach.

5 Relation to previous work and discussion

Corollary 1 below shows that, for a specific loss function (Example 5), our Theorem 3 recovers a result of Cortes et al. (2014), termed “deep boosting”.

Example 5

(The margin loss and the ramp loss) Consider binary classification, where \(\mathcal {Y}= \{-1,+1\}\). The ramp loss is a Lipschitz upper bound for the zero–one loss, and is in turn upper bounded by the margin loss. More precisely, for \(\rho \in (0,1]\), the \(\rho\)-ramp loss is defined by \(\mathcal {L}_{\rho }^{r}(u,y):= \min \{1,\max \{0,1-(1/\rho )\cdot u\cdot y\}\}\) and the margin loss is defined by \(\mathcal {L}_{\rho }(u,y):= \mathbf {1}\{u\cdot y \le \rho \}\). The ramp loss \(\mathcal {L}_{\rho }^{r}\) is \(1/\rho\)-Lipschitz and \(\mathcal {L}_{0,1}(u,y)\le \mathcal {L}_{\rho }^{r}(u,y)\le \mathcal {L}_{\rho }(u,y)\) for all \(u \in \mathbb {R}\) and \(y \in \mathcal {Y}\).
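
The sandwich inequality and the Lipschitz property in Example 5 are easy to check on a grid. In the sketch below the ramp and margin losses follow the definitions above, while the zero–one loss is taken to be \(\mathbf {1}\{u\cdot y \le 0\}\), which is an assumption since its definition is not restated in this section.

```python
def zero_one_loss(u, y):
    """Assumed convention: an error is counted whenever u * y <= 0."""
    return 1.0 if u * y <= 0 else 0.0


def ramp_loss(u, y, rho):
    """rho-ramp loss: min{1, max{0, 1 - u * y / rho}}."""
    return min(1.0, max(0.0, 1.0 - (u * y) / rho))


def margin_loss(u, y, rho):
    """Margin loss: indicator of u * y <= rho."""
    return 1.0 if u * y <= rho else 0.0


# Grid of scores in [-2, 2] with step 0.01, both labels, three margins.
grid = [i / 100.0 for i in range(-200, 201)]
sandwich_holds = all(
    zero_one_loss(u, y) <= ramp_loss(u, y, rho) <= margin_loss(u, y, rho)
    for rho in (0.1, 0.5, 1.0)
    for y in (-1, 1)
    for u in grid
)
lipschitz_holds = all(
    abs(ramp_loss(u, y, rho) - ramp_loss(v, y, rho)) <= abs(u - v) / rho + 1e-12
    for rho in (0.1, 0.5, 1.0)
    for y in (-1, 1)
    for u, v in zip(grid, grid[1:])
)
```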

Corollary 1

Consider a sequence of function classes \({\varvec{\mathcal {H}}}= (\mathcal {H}_j)_{j \in [m]}\) with \(\mathcal {H}_j \subseteq \mathcal {M}(\mathcal {X},[-1,1])\). Given \(\delta \in (0,1)\), with probability at least \(1-\delta\), the following holds for all \(f=\sum _{t \in [\tau ]}\alpha _t \cdot h_{j_t} \in \overline{{\varvec{\mathcal {H}}}}\) with \((\alpha _t)_{t \in [\tau ]} \in \Delta _\tau\) and \(h_{j_t} \in \mathcal {H}_{j_t}\),

A similar result holds with \(\hat{\mathfrak {R}}_n(\mathcal {H}_{j_t},P_X)\) in place of \(\mathfrak {R}(\mathcal {H}_{j_t},P_X)\).

Proof

Follows straightforwardly from Theorem 3 applied to Example 5 and relaxing the infimum in our definition of element-wise complexities. \(\square\)

Corollary 1 is closely related to Theorem 1 of Cortes et al. (2014), which contains a similar result with a different proof. We can also relate our Theorem 5 to multi-class “deep boosting” given in Kuznetsov et al. (2014) in the special case of \(q=1\). Their bound grows linearly with the number of classes Q, while ours can exploit label-sparsity; their rate is \(n^{-1/2}\), while ours allows significantly tighter bounds when the empirical error is sufficiently low and the sample size sufficiently large.

Foremost, our theoretical framework is general and widely applicable whenever heterogeneous geometric sets are of interest. The main benefit of our approach is that it allows for a unified analysis which can be straightforwardly extended, and it justifies heterogeneous ensemble constructions beyond the previous theory. For instance, SnapBoost (Parnell et al. 2020) considered a mix of trees and kernel methods in gradient boosting and was empirically found to be very successful.

The bound suggests that a regularisation term should be included in the training of each base learner, proportional to the Rademacher complexity of its class. Of course, the more data we have for training, the smaller the effect of this term will be: SnapBoost did not include a regularisation term but was trained on very large data sets. In relatively small sample settings (as we consider in Sect. 4.3) the regularisation suggested by the bound is expected to be more essential. However, Rademacher complexity is hard to compute in practice and one typically resorts to upper bounds, so over-regularising can be a concern. This may be somewhat countered by including a balancing regularisation parameter that may be tuned by cross-validation.
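
The regularisation discussed here can be written down in a few lines. The sketch below is illustrative only: it weights a per-class complexity proxy by the ensemble weights \(\alpha _t\), which upper bounds the element-wise complexity for that particular representation of f, and adds it to an empirical loss with a balancing parameter `eta`. The proxy \((k/n)^{1/2}\), the function names and the numbers are assumptions.

```python
import math


def weighted_complexity(alphas, complexities):
    """sum_t alpha_t * r_t for simplex weights alpha and class complexities r."""
    if any(a < 0 for a in alphas) or abs(sum(alphas) - 1.0) > 1e-9:
        raise ValueError("alphas must lie in the simplex")
    return sum(a * r for a, r in zip(alphas, complexities))


def penalised_objective(empirical_loss, alphas, complexities, eta):
    """Empirical loss plus eta times the weighted complexity of the ensemble."""
    return empirical_loss + eta * weighted_complexity(alphas, complexities)


# Illustrative ensemble: two 5-dimensional base learners carry most of the
# weight and one 80-dimensional learner carries the rest, with n = 250.
n = 250
dims = [5, 5, 80]
alphas = [0.45, 0.45, 0.10]
proxies = [math.sqrt(k / n) for k in dims]
heterogeneous = weighted_complexity(alphas, proxies)
worst_case = max(proxies)
objective = penalised_objective(0.12, alphas, proxies, eta=0.1)
```

Here `heterogeneous` is well below `worst_case`, reflecting how the weighted penalty rewards placing most of the weight on low complexity base classes instead of charging the whole ensemble for its most complex component.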

6 Conclusions

We presented a general approach to deal with set heterogeneity in high probability uniform bounds, which is able to exploit low complexity components. We applied this to tighten norm preservation guarantees in random projections, and to justify and guide heterogeneous ensemble construction in statistical learning. We also exemplified concrete use cases by turning our generalisation bounds into practical learning algorithms with competitive performance.

Data availability

Not applicable.

Code availability

Research code is available from https://github.com/jakramate/rpgboost.

References

Bingham, E., & Mannila, H. (2001). Random projection in dimensionality reduction: Applications to image and text data. In: Proceedings of the 7th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 245–250. ACM

Boucheron, S., Lugosi, G., & Massart, P. (2013). Concentration Inequalities: A Nonasymptotic Theory of Independence. UK: Oxford University Press.

Cannings, T.I., & Samworth, R.J. (2017). Random-projection ensemble classification. Journal of the Royal Statistical Society: Series B (Statistical Methodology)

Cortes, C., Mohri, M., & Syed, U. (2014). Deep boosting. In: International Conference on Machine Learning, pp. 1179–1187

Kimura, K., Kudo, M., Sun, L., & Koujaku, S. (2016). Fast random k-labelsets for large-scale multi-label classification. In: ICPR, pp. 438–443. IEEE

Kimura, K., Sun, L., & Kudo, M. (2017). MLC Toolbox: A MATLAB/OCTAVE Library for Multi-Label Classification. arXiv

Klartag, B., & Mendelson, S. (2005). Empirical processes and random projections. Journal of Functional Analysis, 225(1), 229–245.

Kuznetsov, V., Mohri, M., & Syed, U. (2014). Multi-class deep boosting. Advances in Neural Information Processing Systems, 27, 2501–2509.

Liaw, C., Mehrabian, A., Plan, Y., & Vershynin, R. (2017). A simple tool for bounding the deviation of random matrices on geometric sets. In: Geometric Aspects of Functional Analysis, pp. 277–299. Springer

Menon, A.K., Rawat, A.S., Reddi, S., & Kumar, S. (2019). Multilabel reductions: What is my loss optimising? In: Advances in Neural Information Processing Systems, pp. 10599–10610

Mohri, M., Rostamizadeh, A., & Talwalkar, A. (2012). Foundations of Machine Learning. Cambridge, MA: MIT Press.

Parnell, T.P., et al. (2020). SnapBoost: A heterogeneous boosting machine. In: Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020

Read, J., Pfahringer, B., Holmes, G., & Frank, E. (2011). Classifier chains for multi-label classification. Machine Learning, 85, 333–359.

Reeve, H.W.J., & Kabán, A. (2020). Optimistic bounds for multi-output learning. In: Proceedings of the 37th International Conference on Machine Learning, ICML 2020, 13-18 July 2020, Virtual Event. Proceedings of Machine Learning Research, vol. 119, pp. 8030–8040. PMLR

Tian, Y., & Feng, Y. (2021). Rase: Random subspace ensemble classification. Journal of Machine Learning Research, 22(45), 1–93.

Wainwright, M.J. (2019). High-Dimensional Statistics: A Non-Asymptotic Viewpoint, vol. 48. Cambridge University Press

Wright, J., & Ma, Y. (2022). High-Dimensional Data Analysis with Low-Dimensional Models: Principles, Computation, and Applications. UK: Cambridge University Press.

Zhang, M.-L., Li, Y.-K., & Liu, X.-Y. (2015). Towards class-imbalance aware multi-label learning. In: Proceedings of the 24th International Conference on Artificial Intelligence. IJCAI’15, pp. 4041–4047. AAAI Press

Acknowledgements

AK & HR acknowledge the generous support of EPSRC, through the Fellowship grant EP/P004245/1. Part of the computations for Sect. 4.3 were performed using the University of Birmingham’s BlueBEAR HPC service.

Author information

Contributions

Conception and design - HR & AK; software and data analysis - JB & AK; supervision - AK; writing - HR & AK

Ethics declarations

Conflicts of interests

The authors declare that they have no conflicts of interest or competing interests relating to the content of this article.

Ethics approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Editors: Yu-Feng Li and Prateek Jain.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix

Proof of Lemma 4

Given \(\varvec{x} \in \mathcal {X}^n\), the bound for \(\hat{\mathfrak {R}}_n\left( \cdot , \varvec{x}\right)\) holds by the second part of Theorem 1 with \({\mathbb {S}}_j = \left\{ n^{-1}\cdot \left( h(x_i)\right) _{i \in [n]} \right\} _{h \in \mathcal {H}_j}\) and \(r=\beta /n\).

To prove the bound for \(\mathfrak {R}_{n}^{*}\) we observe that, for any fixed \(\varvec{x} \in \mathcal {X}^n\), we have

Indeed, take \(f \in \overline{{\varvec{\mathcal {H}}}}\) with \(\mathfrak {R}_{{\varvec{\mathcal {H}}},n}^{*}\left( f\right) < \kappa\). It follows that \(f=\sum _{t \in [\tau ]}\alpha _t \cdot h_t\) with \(\sum _{t \in [\tau ]}\alpha _t \cdot \mathfrak {R}_{n}^{*}\left( \mathcal {H}_{j_t}\right) < \kappa\) where \((j_t)_{t \in [\tau ]} \in [m]^\tau\), \((h_t)_{t \in [\tau ]} \in \prod _{t\in [\tau ]} \mathcal {H}_{j_t}\) and \((\alpha _t)_{t \in [\tau ]} \in \Delta _\tau\). Given that \(\hat{\mathfrak {R}}_n\left( \mathcal {H}_{j_t},\varvec{x}\right) \le \mathfrak {R}_{n}^{*}\left( \mathcal {H}_{j_t}\right)\) it follows that \(\sum _{t \in [\tau ]}\alpha _t \cdot \hat{\mathfrak {R}}_n\left( \mathcal {H}_{j_t},\varvec{x}\right) \le \sum _{t \in [\tau ]}\alpha _t \cdot \mathfrak {R}_{n}^{*}\left( \mathcal {H}_{j_t}\right) < \kappa\), and so \(\hat{\mathfrak {R}}_{{\varvec{\mathcal {H}}},n}\left( f,\varvec{x}\right) < \kappa\), which proves the claim (7). Hence, applying the bound for \(\hat{\mathfrak {R}}_n\left( \cdot , \varvec{x}\right)\) we have

Taking a supremum over all \(\varvec{x} \in \mathcal {X}^n\) we deduce the bound

The corresponding bound with \(\mathfrak {G}_{n}^{*}\) in place of \(\mathfrak {R}_{n}^{*}\) may be proved similarly.

To prove the bound for \(\mathfrak {R}_{n}\) (5) we first apply McDiarmid’s inequality (cf. Mohri et al. (2012), Eq. (3.14)) combined with the union bound to deduce that with probability at least \(1-\delta\) the following holds for all \(j \in [m]\),

Let \(\xi (\delta ):= \beta \cdot \sqrt{{2\log (m/\delta )}/{n}}\). Now suppose \(\varvec{X}\) satisfies (8) and take \(f \in \overline{{\varvec{\mathcal {H}}}}\) with \(\mathfrak {R}_{{\varvec{\mathcal {H}}},n}\left( f,P\right) < \kappa\). It follows that \(f=\sum _{t \in [\tau ]}\alpha _t \cdot h_t\) with \(\sum _{t \in [\tau ]}\alpha _t \cdot \mathfrak {R}_{n}\left( \mathcal {H}_{j_t},P\right) < \kappa\) where \(\tau \in {\mathbb {N}}\), \((j_t)_{t \in [\tau ]} \in [m]^\tau\), \((h_t)_{t \in [\tau ]} \in \prod _{t\in [\tau ]} \mathcal {H}_{j_t}\) and \((\alpha _t)_{t \in [\tau ]} \in \Delta _\tau\). By (8) we deduce that \(\sum _{t \in [\tau ]}\alpha _t \cdot \hat{\mathfrak {R}}_n\left( \mathcal {H}_{j_t},\varvec{X}\right) < \kappa +\xi (\delta )\). Hence, (8) implies that

Now applying again the bound in (4), with probability at least \(1-\delta\) we have

Hence, if we define a random variable Z by

we have \(Z \in \mathcal {R}(\beta ^2/n)\). By integrating the tail bound we deduce \(\mathbb {E}(Z) \le \beta \cdot \sqrt{\pi /(2n)}\). It follows from the definition of the average Rademacher width that

as required. The proof of the corresponding bound with \(\mathfrak {G}_{n}(\cdot ,P)\) in place of \(\mathfrak {R}_{n}(\cdot ,P)\) is similar, except for replacing McDiarmid’s inequality with Borell-TIS. \(\square\)
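
The step "by integrating the tail bound" in the proof above can be spelled out as follows. This is a sketch under an assumed normalisation: we take \(Z \in \mathcal {R}(\sigma ^2)\) to mean \(\mathbb {P}(Z > t) \le \exp (-t^2/(2\sigma ^2))\) for all \(t > 0\), which is consistent with the stated constant but is not restated in this section.

\[
\mathbb {E}(Z) \;\le \; \mathbb {E}\left( \max \{Z,0\}\right) \;=\; \int _0^{\infty } \mathbb {P}(Z>t)\, dt \;\le \; \int _0^{\infty } \exp \left( -\frac{t^2}{2\sigma ^2}\right) dt \;=\; \sigma \sqrt{\frac{\pi }{2}}.
\]

Substituting \(\sigma ^2 = \beta ^2/n\) gives \(\mathbb {E}(Z) \le \beta \cdot \sqrt{\pi /(2n)}\), which is the bound used in the proof.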

List of symbols

S | A generic geometric set |

\(\{X_s\}_{s\in S}\) | A stochastic process indexed by S |

\({\mathbb {S}}\) | A sequence \({\mathbb {S}}=({\mathbb {S}}_j)_{j \in [m]}\) of bounded sets \({\mathbb {S}}_j\), where \(j\in [m]\) |

\(\overline{{\mathbb {S}}}\) | convex hull from \({\mathbb {S}}\), i.e. \(\text {conv}(\bigcup _{j\in [m]}{\mathbb {S}}_j)\) |

m | Number of sets in \({\mathbb {S}}\) |

\(\tau\) | Number of points defining a convex hull |

\(\Delta _\tau\) | \(\tau\)-dimensional simplex |

\((\alpha _t)_{t\in [\tau ]}\) | An element of \(\Delta _\tau\) |

\(\mathcal {R}(\cdot )\) | Set of all random variables with sub-Gaussian right tail |

g | Standard Gaussian vector |

\({\gamma }\) | i.i.d. Rademacher vector |

\(\mathfrak {G}(\cdot )\) | Gaussian width |

\(\mathfrak {R}(\cdot )\) | Rademacher width |

Z | Supremum of a stochastic process |

\({\overline{Z}}_{\mu ,\sigma }\) | Supremum of mixture process s.t. \(\mu ,\sigma\) dependent constraints |

\(\mathfrak {G}_{{\mathbb {S}}}(s)\) | Element-wise Gaussian complexity of \(s\in \overline{{\mathbb {S}}}\) |

\(\mathfrak {R}_{{\mathbb {S}}}(s)\) | Element-wise Rademacher complexity of \(s\in \overline{{\mathbb {S}}}\) |

b | Largest diameter of sets in \({\mathbb {S}}\) |

\([-r,r]^n\) | Hypercube shaped set used in Theorem 1 |

\(\kappa\) | Element-complexity constraint parameter in Theorem 1 |

L | An integer |

\((T_l)_{l\in [L]}\) | Sets of increasing complexity that cover \(\overline{{\mathbb {S}}}\) |

d | Data dimensionality |

k | Target dimension of RP, \(k\le d\) |

R | \(k\times d\) random matrix (RP map) |

\(\Vert R \Vert _{\psi _2}\) | Sub-Gaussian norm of R |

\(C_{\mathfrak {L}}\) | Constant introduced in Lemma 3 |

\(\mathcal {X}\) | Instance space (a measurable space) |

\(\mathcal {Y}\) | Label or target space, e.g. {-1,1} or \(\mathbb {R}\) or \(\mathbb {R}^Q\) |

\(\mathcal {M}(\mathcal {X},{{\mathcal {V}}})\) | All measurable functions with domain \(\mathcal {X}\) & co-domain \({{\mathcal {V}}}\) |

\(\mathcal {H}\) | A generic hypothesis class |

\([-\beta ,\beta ]\) | Range of values of hypothesis functions |

\(\varvec{x}\) | Non-random sequence of points, \((x_i)_{i\in [n]}\) |

\(P_X\) | Probability distribution on \(\mathcal {X}\) |

\(\varvec{X}\) | Random sequence of n points drawn i.i.d. from \(P_X\) |

\(\hat{\mathfrak {G}}_n\left( \cdot ,\varvec{x}\right)\) | Empirical Gaussian width of a function class |

\(\hat{\mathfrak {R}}_n\left( \cdot ,\varvec{x}\right)\) | Empirical Rademacher width of a function class |

\(\mathfrak {G}_{n}(\cdot ,P)\) | Gaussian width of a function class |

\(\mathfrak {R}_{n}(\cdot ,P)\) | Rademacher width of a function class |

\(\mathfrak {G}_{n}^{*}(\cdot )\) | Uniform Gaussian complexity of a function class |

\(\mathfrak {R}_{n}^{*}(\cdot )\) | Uniform Rademacher complexity of a function class |

\({\varvec{\mathcal {H}}}\) | A sequence of hypothesis classes \((\mathcal {H}_j)_{j\in [m]}\) |

\(\overline{{\varvec{\mathcal {H}}}}\) | Convex hull from \({\varvec{\mathcal {H}}}\), i.e. \(\text {conv}(\bigcup _{j\in [m]}\mathcal {H}_j)\) |

\(\hat{\mathfrak {R}}_{{\varvec{\mathcal {H}}},n}(f,\varvec{x})\) | Element-wise empirical Rademacher complexity of \(f \in \overline{{\varvec{\mathcal {H}}}}\) |

\(\mathfrak {R}_{{\varvec{\mathcal {H}}},n}(f,P)\) | Element-wise Rademacher complexity of \(f \in \overline{{\varvec{\mathcal {H}}}}\) |

\(\mathfrak {R}_{{\varvec{\mathcal {H}}},n}^{*}(f)\) | Element-wise uniform Rademacher complexity of \(f \in \overline{{\varvec{\mathcal {H}}}}\) |

\(\hat{\mathfrak {G}}_{{\varvec{\mathcal {H}}},n}(f,\varvec{x})\) | Element-wise empirical Gaussian complexity of \(f \in \overline{{\varvec{\mathcal {H}}}}\) |

\(\mathfrak {G}_{{\varvec{\mathcal {H}}},n}(f,P)\) | Element-wise Gaussian complexity of \(f \in \overline{{\varvec{\mathcal {H}}}}\) |

\(\mathfrak {G}_{{\varvec{\mathcal {H}}},n}^{*}(f)\) | Element-wise uniform Gaussian complexity of \(f \in \overline{{\varvec{\mathcal {H}}}}\) |

\(P\) | Probability distribution on \(\mathcal {X}\times \mathcal {Y}\) |

(X, Y) | A random tuple from \(\mathcal {X}\times \mathcal {Y}\) drawn from \(P\) |

\(\mathcal {L}\) | Loss function |

\(B\) | Largest value of \(\mathcal {L}\) |

\(\Lambda\) | Lipschitz constant of \(\mathcal {L}\) |

\(\mathcal {E}_{\mathcal {L}}(f)\) | Generalisation error (risk) of f |

n | Sample size |

\(\mathcal {D}\) | Training set drawn i.i.d. from \(P\) |

\(\hat{\mathcal {E}}_{\mathcal {L}}(f)\) | Training error (empirical risk) of f |

\((\mathcal {F}_l)_{l\in [L]}\) | Sets of increasing complexity that cover \(\overline{\mathcal {H}}\) |

Q | Number of classes in multi-label problems |

q | Maximum number of non-zero labels for an instance |

\(\mathbb {Y}(q)\) | Set of all label vectors with at most \(q\le Q\) non-zeros |

\((\lambda ,\theta )\) | Self-bounding Lipschitz parameters |

\(\pi _\ell (f)\) | \(f_{\ell }\), the \(\ell\)-th coordinate projection of a multi-output f |

\(\Pi \circ f\) | Projection of f: \(\mathcal {X}\times [Q] \rightarrow \mathbb {R}, (\Pi \circ f) (x,\ell )= \pi _\ell (f(x))\) |

\(\Pi \circ \mathcal {H}\) | Projected multi-output class, \(\{ \Pi \circ f: f \in \mathcal {H}\}\) |

\(\Gamma _{n,Q,\delta }^{\lambda ,\theta }(\mathcal {H})\) | Complexity of \(\mathcal {L}\circ \mathcal {H}\) when \(\mathcal {L}\) is \((\lambda ,\theta )\)-self-bounding Lipschitz |

\(\Gamma _{n,Q,\delta }^{\lambda ,\theta }(f)\) | Element-complexity of \(f\in \overline{{\varvec{\mathcal {H}}}}\) with a \((\lambda ,\theta )\)-self-bounding Lipschitz loss |

\(\eta\) | Regularisation parameter in the algorithm |

\(\epsilon\) | Shrinkage parameter in the gradient boosting algorithm |

\(\Theta\) | \(\Theta =\{a,b,v,w\}\) base learner’s parameters |

\(\mathcal {L}_{0,1}\) | 0-1 loss |

\(\rho\) | Margin parameter, a value in (0, 1] |

\(\mathcal {L}_{\rho }^{r}\) | Ramp loss |

\(\mathcal {L}_{\rho }\) | Margin loss |

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Reeve, H.W.J., Kabán, A. & Bootkrajang, J. Heterogeneous sets in dimensionality reduction and ensemble learning. Mach Learn 113, 1683–1704 (2024). https://doi.org/10.1007/s10994-022-06254-0

DOI: https://doi.org/10.1007/s10994-022-06254-0