Abstract

This paper presents a novel author profiling method specially aimed at classifying social network users into the multidimensional perspectives for social business intelligence (SBI) applications. In this scenario, being the user profiles defined on demand for each particular SBI application, we cannot assume the existence of labelled datasets for training purposes. Thus, we propose an unsupervised method to obtain the required labelled datasets for training the profile classifiers. Contrary to other author profiling approaches in the literature, we only make use of the users’ descriptions, which are usually part of the metadata posts. We exhaustively evaluated the proposed method under four different tasks for multidimensional author profiling along with state-of-the-art text classifiers. We achieved performances around 88% and 98% of F1 score for a gold standard and a silver standard datasets respectively. Additionally, we compare our results to other supervised approaches previously proposed for two of our tasks, getting very close performances despite using an unsupervised method. To the best of our knowledge, this is the first method designed to label user profiles in an unsupervised way for training profile classifiers with a similar performance to fully supervised ones.

Similar content being viewed by others

1 Introduction

Social business intelligence (SBI) is the field that combines corporate data with user-generated contents with the aim of improving decision making within the companies (Berlanga et al., 2015; Gallinucci et al., 2015). Social business intelligence aims at analysing from different perspectives the texts that users generate as well as their reactions and interactions. Thus, social networks become a rich source of immediate information that can provide useful insights for businesses. Unfortunately, the main challenge of SBI lies in the effective management of the social network’s contents, which are very noisy, unstructured and dynamic by nature. Even when retrieving user posts with specific queries, the number of non-relevant and off-domain posts is too large to extract reliable and useful information.

In this paper, we apply author profiling (AP) in order to characterize both the contents generators and the audience that is interacting with these contents. This information is very useful to increase the quality of social media data for many different purposes (Aramburu et al., 2021). Our main aim is to build dynamic analysis dimensions that allow analysts to focus on particular user profiles according to their current goals. For example, an analysis whose target is to study the promotions in social media of certain products will mainly focus on authors with a professional profile. Profiles are also multidimensional by nature, that is, an author can be characterized from different perspectives like demographics, business roles, domains of interest, and so on.

In our previous work (Aramburu et al., 2021; Lanza-Cruz et al., 2018), we performed the classification of profiles by means of manually selected keywords. Although this method provided us the necessary information for quality assessment and global statistics, it is clearly deficient for multidimensional analysis because of its poor coverage. In this paper, we introduce a formal methodology that allows building the necessary profile classifiers from the analysts’ specifications.

Previous research in AP for social networks has been mainly focused on inferring from the users’ posts aspects like age, gender and language variety (Daelemans, et al., 2019; Pardo et al., 2016; Potthast, et al., 2019; Weren et al., 2014. Other studies aimed at specific user profiles such as campaign promoters, bots, influencers, political position and polarity (Amigó et al., 2014; Li et al., 2014; Nebot et al., 2018; Pennacchiotti & Popescu, 2011), among others. Most of these approaches rely on machine learning techniques, usually involving strong feature engineering efforts and the preparation of large training data (McCorriston et al., 2015; Mishra et al., 2018; Wood-Doughty et al., 2018). In a dynamic scenario, these approaches are not feasible because for any new profile we want to include in a SBI analysis, we would require the definition of a new training dataset. As far as we know, there is no approach able to deal with multiple and dynamic dimensions for user profiles as proposed in this paper.

In our approach, we start with a formal description of the multidimensional model associated with the user profiles of interest. Then, from this model, we automatically generate the datasets for training the corresponding author profile classifiers. In this way, analysts only intervene during the specification of the multidimensional model. Furthermore, analysts can evolve the multidimensional user profiles on demand by redefining the formal descriptions, and the system will automatically update the corresponding classifiers with the newly generated samples.

1.1 Research Objectives

The main objective of our research is to propose a novel approach to quickly constructing automatic profile classifiers suited to user-defined multidimensional SBI analysis perspectives.

Our main hypothesis is that we can automatically generate high-quality labelled datasets from unlabelled ones by applying two kinds of knowledge-based techniques, namely: word embeddings and ontologies. Thus, we can directly use the generated dataset to train the intended profile classifiers for the user-defined classes.

The specific objectives of our approach are as follows:

-

Define a formal description of the multidimensional model associated with the users’ profile classes.

-

Design a method for the extraction of semantic key bigrams from the analyst specifications so that a labelled dataset of user profiles can be generated.

-

Train and evaluate different user profile classifiers with the generated datasets.

1.2 Organization of the Paper

The rest of the paper is organized as follows. Section 2 discusses the related work and Section 3 proposes a multidimensional analysis model to classify user profiles. Section 4 presents a novel method to construct tagged datasets for author profiling with minimal human supervision. Section 5 describes the material used to develop our proposal, and Section 6 the methods used and the experimental settings. Specifically, the proposed classification tasks and the evaluation measures of the models are explained. Section 7 describes the model’s validation approaches and discusses the results. Finally, Section 8 presents the conclusions and future work.

2 Related Work

The problem of AP has been addressed in several scientific branches, especially in the fields of linguistics and natural language processing (NLP) with approaches based on the analysis of textual features extracted from documents. Classical AP approaches have proven to be effective on formally written texts, such as books or the written press. They combine textual attributes ranging from lexical features (e.g., content words and function words) to syntactical features (e.g., POS-based features). Author profiling approaches for social networks show that the most useful attributes are statistical combinations of textual content (e.g., discriminative words) and stylistic features (Schler et al., 2006; López-Monroy et al., 2015). Previous text classification methods combine textual information with other features such as the post metrics (e.g., followers, likes, etc.), user interaction patterns (McCorriston et al., 2015; Kim et al., 2017), as well as sentiment and temporal features for the detection of bots and promoters (Daelemans, et al., 2019; Li et al., 2014; Ouni et al., 2021). Other paper proposes an hybrid approach for identifying the spam profiles by combining social media analytics and bio inspired computing (Aswani et al., 2018). A different approach is used to categorize hacker users in online communities where users are grouped according to their posting patterns (Zhang et al., 2015).

Author profiling in social networks has been mainly addressed for the identification of demographic attributes such as age, gender and geolocation (Daelemans, et al., 2019; López-Santillán et al., 2020; Ouni et al., 2021; Rangel et al., 2021; Schlicht & Magnossão de Paula, 2021; Young et al., 2018). Other complex attributes such as personality traits and influence degree have been also treated extensively in the literature (Cervero et al., 2021; Kumar et al., 2018; Nebot et al., 2018; Rodríguez-Vidal et al., 2021; Schler et al., 2006; Weren et al., 2014). Its relevance and applicability in real-world scenarios can be seen in the different editions of the PAN International Competition on AP (Daelemans, et al., 2019; Pardo et al., 2016; Potthast et al., 2019).

For three years, the CLEF initiative RepLab has held a competitive evaluation of tasks in online reputation management. Among many relevant tasks, they developed solutions for Twitter author profiling, opinion makers identification, and reputational dimension classification (Carrillo-de-Albornoz et al., 2019). In the RepLab 2014 edition (Amigó et al., 2014), one of the objectives was author profiling in the automotive and banking domains. This initiative gives us a complementary view to PAN competition, as AP is more focused to the SBI perspective. In their solutions, researchers made use of a combination of features such as quantitative profile metadata, stylistic and behavioural features. Unfortunately, the obtained results did not exceed 50% in accuracy and MAAC evaluation measures, very close to the baselines. Despite the results, we consider this work a first approach to author profiling in Twitter, which served as the basis for further research (Potthast et al., 2019).

As previously mentioned, most approaches in AP have relied on machine learning with a strong feature engineering effort. Instead, recent techniques based on neural networks for text processing have allowed researchers to define easily new text classifiers as end-to-end solutions. However, only a few works involve the use of word/document embeddings in AP tasks. Markov et al. (2017) applied document embeddings to improve the classification performance in the PAN 2016 competition. López-Santillán et al. (2020) proposed a method to generate documents embeddings by means of evolving mathematical equations that integrate words frequency statistics, namely term frequency, TF-IDF, Information Gain and a new feature called relevance topic value. They employed Genetic Programming to weight word embeddings produced with different methods such as word2vec (Mikolov et al., 2013), fastText (Bojanowski et al., 2017) and BERT (Devlin et al., 2019). Then, they created a document embedding with their weighted averaging. They evaluated their proposed method over PAN’s datasets (from 2013 to 2018) to predict personal attributed of authors. Schlicht and Magnossão de Paula (2021) proposed a framework to identify hate speech spreaders by applying several variants of BERT sentence transformers over the users’ tweets. They also apply an attention mechanism to select important tweets for learning user profiles. For the classification task, they use the results of the PAN Profiling Hate Speech Spreader Task 2021 (Rangel et al., 2021) as a base line, improving them.

Another interesting perspective for SBI is the classification of profiles into individuals and organizations. To the best of our knowledge, three proposals have addressed this task in the literature. McCorriston et al. (2015) presented a study of organization demographics and behavior on Twitter, called Humanizr. They proposed a text classifier to distinguish between personal and organizational users. The training dataset was manually tagged through a crowdsourcing platform. As in PAN and RepLab, each tagged user is associated to a fixed number of posted tweets (in this case 200). Wood-Doughty et al. (2018) proposed Demographer, a tool for demographic inference of Twitter users. They emphasized the need of approaches that optimize computing resources in terms of time, number of data elements, and API accesses. Thus, they applied a minimum number of features in their predictive tasks, instead of a fixed number of posted tweets. For classifying person vs. organization users, they make use of n-grams and neural models trained with user names and profile features. They also proposed a series of ad-hoc methods to build a large labelled dataset in a semi-supervised way. For example, they extract individual accounts from Twitter lists organized by topic and use keywords such as “business” or “companies” to identify organization accounts. The dataset is manually verified by taking random samples. Finally, Wang et al. (2019) presented the method M3, aimed to identify gender, age and person-vs-organization users. M3 is a deep learning system that infers demographic attributes from social media profiles. This system only uses the user profile data, namely: profile image, username, screen name and biography. The model architecture comprises two separate models, a DenseNet for images, and a character-based neural network model for texts. This is a fully supervised approach and therefore requires a great effort for preparing the training datasets.

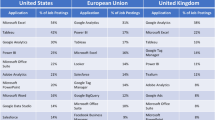

Table 1 shows as a summary the attributes most frequently used in the literature for the task of AP in social networks. The table separates the features extracted from the Post and User objects to train the predictive models. In general, results show that approaches relying on users’ descriptions allow generating larger training datasets and improve notably the classification results.

As main conclusion, existing AP approaches are time-consuming and demanding in terms of human resources, which is unfeasible for scenarios where classes must be dynamically redefined on demand like in SBI. In this work, we advocate the use of unsupervised methods for the construction of the training datasets and its combination with effective supervised methods for the continuous classification of profiles.

3 Knowledge-Centric Data Analysis

Social business intelligence mainly adopts the successful multidimensional data (MD) models defined for data warehouses (Kimball & Ross, 2013). MD models represent analytical data in the form of data cubes, which consist of dimensions for representing contexts, and measures for calculating indicators. Each position in a data cube is a fact and may contain several measures. Dimensions can be hierarchically organized into levels, which allow analysts to aggregate measures from different perspectives and detail levels. In (Berlanga et al., 2015) we proposed SLOD-BI, a multidimensional model for analysing social networks where two kinds of facts are regarded, namely: post facts and social facts. Social facts account for users and their interactions whereas post facts account for the user-generated contents. In this paper, we only focus on the social facts and therefore on the dimensions and metrics for users. Figure 1 shows different dimensions commonly associated with users as well as the new extended dimension for user profiling. This profile dimension consists of the necessary levels with which we can model users from complementary perspectives.

In the example of Fig. 1, we propose three levels, namely: Domain role, Group role and Business role. Domain role indicates whether the user’s activities belong to the application domain or not. The Group role indicates whether the user represents an individual or a collective entity. Finally, the Business role indicates the kind of professional activities the user is involved in.

Each profile dimension level contains the different labels with which a user can be associated. For example, the Domain role contains two labels: on-domain and off-domain. Additionally, a dummy label is included for the unknown label, which is represented with “*” for simplicity.

Levels are ordered according to the analyst’s requirements. In our example, we order levels according to their detail degree, namely: Domain role > Group role > Business role. In this way, we represent the specific labels of each level as a path following the specified order. For example, a journalist of the automotive domain is represented with the path ‘journalist.individual.on-domain’ whereas a cars magazine is represented as ‘journalist.collective.on-domain’. From now on, we use the letter “i” for “individual”, “c” for “·collective” and “a” for “on domain” to write concisely the paths of the multidimensional model.

When modelling the profile dimension, analysts must specify a description for each of the dimension level labels. These descriptions are used as the starting point to identify samples associated with the different profiles. Labels paths can be also described when they clearly denote an entity (e.g., a magazine as journalist.c.*).

Being part of SLOD-BI, all data is represented in a semantic framework. Thus, social facts are represented as ontologies. Dimensions and levels are represented as classes, which are properly related to represent the intended multidimensional model. In this way, this framework enables us to introduce further knowledge about the user profiles. For example, we can indicate that the class journalist is a subclass of the class professional. This knowledge can be very useful in case of label conflicts where the most general class can be taken as default.

Table 2 shows an example of users profile model. For our running example, we model six types of users for a Twitter data stream related to the automotive domain. Table 3 shows the mapping of the multidimensional hierarchy described in Table 2 with the class labels of the classifier model. We also include examples of user descriptions for these categories. The multidimensional hierarchy proposed to model the categories of users in Twitter allows us to represent in a simple way semantic relationships between users, organizations, and topics. This type of analysis and hierarchical labelling can describe many types of relationships that analysts can query from different perspectives during the development of new applications.

We codify the multidimensional model with the ontology description language OWL-DL (W3C Recommendation OWL, 2004). To represent the semantic relationships between concepts we have reused some properties of the Simple Knowledge Organization System (SKOS) (W3C Semantic Web, 2012) resource. Figure 2 shows the relationships between classes, where the generalization-specialization relationships represent the broader and narrower properties respectively.

4 Learning Models for Author Profiling

Task

The very goal of our work is to build a general, scalable and robust machine learning framework for large-scale classification of social network users according to a given multidimensional profile model as described in Section 3.

Solution Overview

Figure 3 shows the proposed framework, which consists of three main components. The first component is an unsupervised method to generate tagged user profiles (silver standard dataset, SS) based on some analysis dimensions. The profiles labelling method makes use of semantic knowledge and word embeddings tools for the construction of a language model per defined class. It takes as input a stream of Twitter user profiles and a small set of tagged words according to classes and context (defined through WordNet (Miller, 1995) semantic groups). The second component is a Dataset Debugger aimed at cleaning and fining errors according to a small set of knowledge rules. The third component is a machine-learning algorithm that learns a classification model from the updated dataset (SS’) which can be used to label a large dataset of user descriptions. The final step is the validation phase with a manually built gold standard dataset (GS).

4.1 The Method

The method we propose to generate labelled samples consists in iteratively identifying key bigrams for each target class of the multidimensional profile model (described in Section 3). The starting point is a set of seed unigrams (\({U}_{c}^{0}\)) for each class. The seed unigrams are eventually selected by the domain experts, who first pick up unigrams from the profile class names and descriptions. Optionally, knowledge resources like Wikipedia.org, BabelNet.org and relatedwords.org can be used by experts to get further interesting unigrams. In this way, experts can face the cold start issue of the unsupervised phase. Table 2 shows some examples of seed unigrams which are mainly associated to the classes name and description. We also assume that there is a corpus \(\mathbb{C}\) of unlabelled user descriptions, preferably related to the domain of analysis. Such a collection is easy to obtain from Twitter by fetching queries related to the domain of analysis. Let \(\mathbb{B}\) be the set of all bigrams \((u,v)\) with a skip of one word occurring in \(\mathbb{C}\).

The goal of our method is to find a set of key bigrams \({B}_{c}\subseteq \mathbb{B}\) for each profile class c. The datasets \({B}_{c}\) must be disjoint to each other in order to induce a partition in the unlabelled samples (clustering hypothesis) by means of representative and unambiguous bigrams. This assumption does not mean that a bigram in \({B}_{c}\) will always be associated with class c. As mentioned in Section 4.2., conflict resolution can change the class of a sample that was initially assigned to a class c due to a bigram. It must be also pointed out that bigrams of different classes can share unigrams. These properties allow the method to reduce the bias of the output labelled dataset.

We assume that there exists a semantic similarity function between unigrams. Similarity between bigrams is a function that combines the similarity of their components. We also need a function to measure the similarity between a bigram and a set of unigrams.

Unigrams and bigrams are related through synonymy \((x\sim y)\) and entailment \((x\to y)\) relationships. Synonymy can be applied to pairs of either unigrams or bigrams, whereas entailment is applied between a unigram and a bigram. Some examples of these relationships are as follows:

In our approach, the synonymy relationship is weaker than those defined in lexical resources like WordNet. Synonymy mainly account for highly similar unigrams according to some provided semantic similarity function. The entailment relationship indicates whether a bigram can be semantically derived from a unigram or not.

For extracting the key bigrams of each class, we define the following procedure:

-

For each class c:

-

Let \({U}_{c}\) be the list \({U}_{c}^{0}\) of seed unigrams of class c along with all their synonymous unigrams.

$${U}_{c}= \left\{u|\exists v\in {U}_{c}^{0}: u \sim v\right\}$$ -

For each unigram \(u\in {U}_{c}\), we define the set of bigrams associated to \(u\) and related to c as follows: \({B}_{u}^{c}=\left\{b|b\in \mathbb{B},\exists u\in {U}_{c}: u \rightarrow b \wedge \nexists {c}^{{\prime }},{c}^{{\prime }}\ne c:sim\left(b,{U}_{{c}^{{\prime }}}\right)>sim\left(b,{U}_{c}\right)\right\}\)

-

-

Finally, the set of key bigrams is defined as \({B}_{c}=\bigcup _{u\in {U}_{c}}{B}_{u}^{c}\)

Once key bigrams are determined for each class, we must ensure that the sets \({B}_{c}\) are disjoint from each other. Thus, if a bigram occurs in more than one class, then we assign it to its most similar class. In case we cannot decide its most similar class, then we discard the bigram. Table 4 shows an example of bigrams associated to multi-level profile classes.

4.1.1 Semantic Synonymy

In this paper, we propose the use of word embeddings and WordNet super-senses (Ciaramita & Johnson, 2003) to define the similarity and synonymy of unigrams and bigrams. Similarity between unigrams is calculated with fastText by using the cosine of the two unigram-embedding vectors:

Here \({x}_{e}\) and \({y}_{e}\) are the embedding vectors of \(x\) and \(y\) respectively. The threshold \({\delta }_{K}\) is set to the similarity of the K-most similar unigram for u. The value of K is a parameter that we will determine during model evaluations. We also train fastText to calculate the unigrams embedding vectors from the pool of profile descriptions \(\left(\mathbb{C}\right)\).

We define the entailment relationships in terms of the synonymy relationship along with the WordNet super-senses as follows:

Where \(ss\left(u\right)\) returns all super-senses associated to the unigram \(u\). In this definition, the super-sense condition aims at constraining the semantics of the bigram head with respect to the unigram that entails it. As a further constraint, we can indicate which super-senses are allowed at each profile level in order to improve the quality of generated bigrams. For example, for the collective class, restricting the allowed super-senses to “organization” entails more precise bigrams.

Finally, the similarity between sets/sequences of unigrams is defined as the pair-wise average similarity of their components. Thus, the similarity between a bigram \(b\) and a unigram set \(U\) is the average of all pair-wise unigrams of the bigram and the set.

4.1.2 Data Labelling

Once we find the key bigrams \({B}_{c}\) for each class c, we can assign labels to each sample \(d\in \mathbb{C}\). The labeling method is as follows:

-

1.

Let \(labels\left(d\right)\) be all classes that have at least one key bigram in the sample \(d\). Besides, this set must fulfill the following condition:

-

2.

We estimate the label distribution for each class \(c\in labels\left(d\right)\), that is, \(P\left(\left.c\right|d,{B}_{c}\right)\).

-

3.

We assign to the sample \(d\) the most likely labels for each profile level according to the previous distributions.

The condition in the first step ensures that each sample has its more likely class within the candidate classes derived from its bigrams.

If a sample contains more than one label in the same profile level, then we say that the sample contains a conflict. Some of these conflicts can be solved by applying the knowledge rules included in the multidimensional profile model (see Section 3). The defined rules must be designed to resolve frequent conflicts. Conflicts that cannot be resolved with the defined rules are excluded from training. In the next section, we show some examples of knowledge-based conflict resolution. Table 5 shows several conflicting bigrams that jointly appear in users descriptions.

In the automotive domain, the most conflicting classes are ‘journalist.i.*’, and ‘loversfans.i.*’. This is because many users describe their primary occupation along with their hobbies and interests, being journalism-related terms like reporter, editor, writer and blogger the most frequent ones in their descriptions. Additionally, we should consider classes related to the interest domain (e.g., car lovers) as complementary in presence of other classes. Next section explains how these implicit rules are applied to solve some conflicts.

Finally, all conflict-free labelled samples constitute the silver standard (SS) dataset, with which profile classifiers can be trained.

4.2 Detecting and Fixing Potential Labelling Conflicts

In order to increase the variety and complexity of the SS dataset, and considering that only conflict-free samples are regarded in the SS, we need to introduce some heuristics to solve frequent conflicts. More specifically, we make use of heuristics based on rules (Zanakis & Evans, 1981) to solve these frequent conflicts.

Given a conflict, a general rule of thumb is to assign the category of the most restrictive class, both at the class level and at the level of dimension granularity (see Table 6). The vocabulary used in the descriptions also gives clues about the specificity of class labels. Thus, vocabulary related to the domain will be much more frequent than that of some specific classes like journalist. Therefore, bigram frequencies provide us an easy way to decide which bigram is the most specific in a conflict.

We must point out that the quality of the result of the previous heuristic rules will depend on the quality of the bigrams associated with each class. On the other hand, the final decision can be modified depending on the perspective or dimension of the data we want to represent in our model. Low-frequency conflicts do not need to be analyzed, as they do not greatly determine the accuracy of the model. In this case, we should disregard samples with conflicts.

As an example of conflict resolution, the profile description “senior news reporter, daily star newspaper. views own. (email).” contains two conflicting bigrams: (news, reporter) and (daily, newspaper). The final decision is ‘journalist.i.*’ according to the rules shown in Fig. 4.

Finally, we also include a set of labelled unigrams that unambiguously imply a specific class or category. For example, frequent words like ‘I’ and ‘me’ imply the individual category. These unigrams allow us to detect some implicit conflicts that cannot be detected through bigrams. It is must be pointed out that only a few of these unigrams were included in the experiments to detect some frequent implicit conflicts.

5 Material and Statistics

To demonstrate the usefulness of the proposed method for AP and its applicability to different domains of analysis, we developed a series of experiments on three domains, namely: cars, tourism and medicine domains. For each domain we collected user profiles over a long-term stream of tweets, which has served as a basis of several analytical studies about SBI with very different proposals (Aramburu et al., 2020, 2021; Lanza-Cruz et al., 2018).

We generated each domain data stream with a series of keywords related with the corresponding domain. For example, for the automobile domain we used keywords representing different car models and brands. The data streams allowed capturing profiles comprising people who posted and interacted with the tweets. Table 7 shows the total number of unlabelled profiles obtained for each analysis domain. The dataset is pre-processed to replace all the links, mentions, emoticons by generic tags and to normalize the text to lower case.

In order to validate the results of the unsupervised labelling method and classification models, we ran three main experiment settings (see Section 7). Firstly, we validate the output of the unsupervised labelling method through the manual validation of a subset of the SS (ground truth set GT). In the second experiment setting, we evaluate the generated classification models taking as reference the ground truths generated for each domain. In addition, we run a test in the automotive domain using a gold standard (GS) dataset as reference during the validation phase.

The GS is a dataset built independently of the SS and manually labelled by human experts. We have built the GS in two steps. The first step is the automatic selection of a set of high-quality user descriptions. The second step is the manual selection and verification of the multi-level labels associated to them. In order to obtain high-quality profiles for authority classes, we organized a ranking based on the number of followers and verified user accounts. For the automotive domain, we also promote screen names associated to car brands or car components. All user accounts were checked to be active in Twitter. We also ensured that the dataset has a high heterogeneity degree by avoiding very similar descriptions to take part of the same class.

In a last experiment, we select the automotive domain to compare the resulting models against some state-of-the-art models related to some of the business dimensions we deal with. For this purpose, we again used the SS for training, but the evaluation was performed over the existing proposed datasets. Table 8 presents a summary of state-of-the-art and GS datasets.

Regarding the group perspective dimension (person-vs-organization), we evaluated our models over the curated dataset Humanizer (McCorriston et al., 2015), also used during models’ evaluation of Demographer (Wood-Doughty et al., 2018) and M3 (Wang et al., 2019). We gathered the user profiles of Humanizr datasets, 20,273 accounts in total, of which 18,922 (93.3%) were still available in Humanizr shared repository as of May 2021.

We also evaluated our results against the RepLab dataset (Amigó et al., 2014) with the revision of tags presented in (Nebot et al., 2018). In this way, the profiles corresponding to RepLab classes “ngo”, “celebrity”,“undecidable” and “sportsman” were removed from the evaluation because they do not map to our multi-dimensional profile model. We put together the RepLab classes “professional”, “employee”, “stockholder”, “investor”, and “company” under our “professional” class. We reported the evaluation scores for three class taxonomies, namely: “professional”, “journalist” and “public_institutions”, over the dataset corresponding to the automotive domain. Resulting alignments are shown in Table 9.

As an alternative, hypothesis verification can be achieved through visual data exploration (Keim & Ward, 2007), as well as, through automated techniques derived from statistics and machine learning. Figure 5 shows the results of applying T-SNE on two-dimensional transformed embeddings for the 6-class problem in the automotive domain. The embeddings were generated with fastText. The distribution of samples per class has been balanced (700 samples per class). From Fig. 5 we can see that most samples are clustered in their corresponding subgroups. If we analyse the figures from right to left, we first find the clusters of the three classes of category collective, while on the left the individual category profiles are grouped. The clusters of the classes ‘professional.i. *’ and ‘journalist.i. *’ are mixed due to the semantic similarity of the texts of their profiles. In general, the spatial representation of the embeddings is quite satisfactory.

6 Methods and Evaluation Metrics

In this section, we first describe all the methods and experimental settings used in our approach for the representation of texts and user profile classification. Then we describe the different classification tasks and finally the models evaluation measures are mentioned.

6.1 Methods

The method for the automatic construction of the SS makes use of fastText (Bojanowski et al., 2017) vectors for obtaining the semantic synonymy of words and bigrams (see Section 4.1). In this way, for each domain we create an unsupervised fastText model whose training corpus corresponds to the set of untagged user profiles (see Table 7). The trained word representations and training data set are then fed into the fastText classification model. To represent the embeddings of words and sentences we have selected fastText due to its simplicity, speed and efficiency. FastText is a library for efficient learning of word representations and sentence classification. One of its main contributions is that it uses the internal structure of the word to improve the representation of vectors. FastText enriches word vectors with sub-word (n-grams) information and word representation is learned by considering a window of left and right context words. The above allows the generation of embeddings for any word, even out-of-vocabulary words. This feature is very useful to represent embeddings of contents from social networks where text abbreviations are frequently used, new words arise, and spelling errors occur. Other methods like Word2Vec and Glove handle whole words but they can’t easily handle words they haven’t seen before. This fastText capability also allows capturing the underlying similarity between words, as for example can associate words that can be used in different contexts as “boxer” and “boxing”. FastText offers similarity functions based on cosine distance between vectors. This similarity is computed for all words in the pre-trained vocabulary, allowing us to obtain the nearest neighbours of a given word and use functions to find analogies between words. For the classification task, multinomial logistic regression is used, where the sentence/document vector corresponds to the features. In (Joulin et al., 2016) refers that experiments show that fastText is often on par with deep learning classifiers in terms of accuracy, and many orders of magnitude faster for training and evaluation.

FastText Unsupervised Model for Word Representations

After extensive testing of the model parameters, we set them as follows: CBoW with 100 dimensions, 10 epochs, minimal word occurrences of 5, negative sampling loss and number of negatives samples of 10, char n-gram between 5 and 8, and sampling threshold of 1e-05.

WordNet Super-Senses

In order to find the most similar words to a given unigram, it is not enough to compute the nearest neighbours in the embeddings space. This function helps to find related but not synonymous words. To solve this problem, we propose the use of the semantic resource WordNet super-senses. WordNet lexical database (Miller, 1995) is the most commonly used resource for sense relation in English and many other languages. Content words (nouns, verbs, adjectives and adverbs) are grouped into sets of near-synonyms called synsets, each expressing a distinct concept. WordNet organize all word senses into lexicographic categories also called super-senses of which 26 are for nouns, 15 for verbs, 3 for adjectives and 1 for adverbs. In the context of our task, we define that two words are similar if their cosine similarity exceeds a given threshold but they must also meet the condition of belonging to the same semantic group (super-sense category in WordNet). To do this, we look up each word in the WordNet super-senses resource and check that they both represent the same semantic group. In this way we can validate a higher degree of similarity between words.

We have applied three kinds of classifiers for the experiments, namely fastText, RoBERTa (Liu et al., 2019) and a zero-shot classifier (Romera-Paredes & Torr, 2015; Zhang et al., 2019). We also used a majority class classifier as baseline model in our experiments.

One of the proposed classifiers is based on transfer learning. Transfer learning has attracted extensive attention in the scientific literature. It is a deep learning approach that uses existing relevant knowledge to solve new tasks in related fields (Peng et al., 2020). The resulting model is fine-tuned by a small dataset that is used to perform a specific task. For this study we have selected RoBERTa, a pre-trained model developed by Facebook AI based on Google’s Bidirectional Encoder Representations from Transformers (BERT). RoBERTa has been shown better performance than BERT and LSTM models for Twitter text classification, even with small training datasets (Choudrie et al., 2021). RoBERTa is a robustly optimized BERT model (Devlin et al., 2019), whose pre-trained models have shown very effective for many downstream tasks like text classification. In the proposed experiments, we have just fine-tuned the pre-trained RoBERTa-base model with the SS dataset for author profile classification.

Zero-shot classification is aimed at categorizing data without using any training samples. In this paradigm, the user briefly describes each intended category, and the algorithm predicts for each instance its most likely category according to their semantics. This paradigm is well suited to our goal as we start with a brief description of each profile class, and then we aim to classify the incoming user descriptions into these categories.

The classification experiment settings are detailed as follows. For each domain, we trained a fastText classifier with the corresponding SS dataset, using the embeddings of the unsupervised fastText models. The empirical evaluation yielded the best results for the following parameters: minimal word occurrences of 2, char n-grams between 3 and 5, and 10 epochs. Then, we trained the following supervised models:

-

fastText 5-CV SS / RoBERTa 5-CV SS. A 5-fold stratified cross validation method was implemented for assessing a classifier trained and tested with the SS dataset.

-

fastText 5-CV GS / RoBERTa 5-CV GS. A 5-fold stratified cross validation strategy for assessing a classification model trained and tested with the GS dataset. It is a supervised classification model whose evaluation metrics serve as a reference to evaluate the models whose datasets were obtained in an unsupervised way.

-

fastText SS-GT / RoBERTa SS-GT. A classification model trained with the SS and tested with the GT.

-

fastText GT-GS / RoBERTa GT-GS. A classification model trained with the GT and tested with the GS.

-

fastText SS-GS / RoBERTa SS-GS. A classification model trained with the SS and tested with the GS.

-

Zero-Shot. We have used the new pipeline for zero-shot text classification included in the Hugging Face transformers package (Vaswani et al., 2017). Due to the computational cost of running this model on very large data sets, the evaluation was performed only over the gold standard dataset.

6.2 Classification Tasks

As the nature of the profiles is multi-level, we prepared four tasks and evaluated different predictive models for different class perspectives. The first model aims to classify profiles in the main perspectives shown in Table 2 (i.e., all levels expect the Domain role). This results into a 6-class problem.

The second model aims to classify only at the Business role level, becoming a 4-class classification problem (i.e., Journalist, Public Services, Professional and Lovers&Fans). In this way, the dataset labels are rewritten by keeping only the Business Role tag. For example, samples with tags ‘journalist.c.*’ and ‘journalist.i. *’ are rewritten to “journalist”. This approach makes it possible to check whether there is a strong semantic relationship between the defined perspectives and to simplify the classification of new data sets.

The third and fourth models are binary classification problems. They are aimed to classify at the Group role level (Collective vs. Individual) and Domain Role level (On Domain vs. Off Domain) respectively.

In the case of Group role, it is worth mentioning that we checked whether the 6-class classification model improved its prediction, since the rest of tags could offer information on the group level. Although the results were good enough, they did not overcome those of the binary model.

6.3 Evaluation Measures

The measures selected to evaluate the results are micro and macro averaged F1-score, or the micro-F1 and macro-F1 scores respectively. Moreover, micro-F1 is also the classifier’s overall accuracy, i.e., the proportion of correctly classified samples out of all the samples. The following always holds true for the micro-F1 case: micro-F1 = micro-precision = micro-recall = accuracy. The Area Under the Curve - Receiver Operating Characteristics (AUC-ROC) metric is also provided in a One-vs-Rest approach.

7 Evaluation Results

In this section, we test our different proposed models against current state of the art systems. There is no author classification system based on the proposed dimensions and levels for SBI; so, in a first tests stage we had used our own gold standard and ground truth datasets to evaluate our SS datasets and models for different classification tasks. In a second tests stage, we limited the evaluation to the classification perspectives of the only publicly available datasets of the state of the art.

7.1 Validation of the Unsupervised Labelling on the Three Domains

The problem of automatic tagging of Twitter profiles based on the detection of bigrams associated with different categories is a complex task. The interrelation between bigrams and unigrams associated with different classes makes it much more difficult to develop explicit rules to correctly identify the label of a profile. Therefore, human validation is required since it allows identifying the origin of potential errors and, if necessary, redefining class seed unigrams and conflict rules. Human validation is performed by a team of experts familiar with the domains and their business goals. Next, we describe the validation that have been assessed by the experts:

-

Cars Domain: We randomly selected 25 profiles per each of the 6 defined classes, resulting in 150 samples out of the 20,155 of the labelled profiles.

-

Tourism: We randomly selected 25 profiles per each of the 8 defined classes, resulting in 200 samples out of the 32,584 labelled profiles.

-

Medicine: We randomly selected 25 profiles per each of the 7 defined classes, resulting in 175 samples out of the 11,613 labelled profiles.

The three experts reviewed the same data individually and flagged the cases in which the assigned label was not correct. After checking the agreement in the categories assigned by the reviewers, the precision metric was used to evaluate the assessment.

The business dimensions proposed for the analysis are transversal to each domain. But within each domain the dimensions must be adjusted to their own categories or levels. For example, for the medicine domain (Aramburu et al., 2020), the category “professional.individual.medicine” represents all professionals in the health sector. On the other hand, the centers that offer health services are represented by a new higher-level category called “services.collective.medicine” that groups together both public and private services. In the case of the tourism domain, the category “professional.individual.tourism” represents all professionals in the tourism sector. While the category “professional.collective.tourism” represents companies within the sector. We have divided the “professional” dimension in the tourism sector into four subcategories in order to better identify the sets of associated profiles, namely: transportation, hotel, restaurant and entertainment. We have used these four new categories as part of the seed unigrams to identify the “tourism professional” dimension in the profiles. While to distinguish the individual and collective categories, the unigrams “professional” and “company” have been used, respectively.

Examples of bigrams of the class “professional.individual.tourism” are (tourism, professional) and (hotel, professional), whereas for “professional.collective.tourism” are (tourism, company) and (hotel, company).

Another interesting issue is that the initial unigrams represent categories at a very high level, like (tourism, professional), which allows carrying out a cold start of the models, without the need of having knowledge about the terminology used in the social network. Additionally, by adding seed unigrams specific to professional categories (e.g., waitress, hotelier, or stewardess) can improve the recall of the final model.

Table 10 shows the statistics of the obtained SS datasets for each domain. The table organizes the statistics of the results of different processes within the proposed method. The fields represent the dimensions of analysis shared by the proposed domains. In the table, the Professional and Journalist classes are represented up to the group level (collective and individual). On the other hand, the Services field represents the classes that are related to public and private services and will always be collective. Finally, the field “Other relevant audience” represents individuals that represent a type of audience interested in the domain. In the cases of the car and tourism domains, their audience is those categorized as Lovers&Fans of topics related to the domain. For the domain of medicine, we have grouped three categories of audience in this field: concerned (person interested in health issues, has survived an illness, etc.), student (a student of any school level) and religion (religious person). Table 11 shows the expert’s revision results of the validation sets for each domain (i.e., precision metric). During the manual check, a few accounts were detected that are suspended on Twitter. In the statistics shown, the suspended accounts have been counted as labeling errors.

The statistics in Table 11 demonstrate the advantages of using the proposed semi-automatic method, since starting with a few seed unigrams we obtain a large number of labelled samples. The number of seed unigrams mainly depends on the heterogeneity of the profile class (e.g., concerned people in Medicine). The proportion of conflicts generated during tagging usually indicates the complexity of each class. Conflicts are also useful to redefine if necessary the seed unigrams as well as further conflict resolution rules. It is worth mentioning that the richer the conflict resolution rules the more variety is achieved in the final SS dataset.

7.2 Validation of the Models

In this section, we evaluate different classification models with the objective of validating the proposed method for AP. First, we prepare a model for the 6-class task based on the 3 proposed analysis perspectives (Business role, Group role and Domain role). To validate the proposed data processing steps for obtaining a valid SS, we evaluated a fastText model at 4 main phases of the proposed method for different numbers of synonymy words (K parameter). The results showed that at each phase the accuracy of the resulting model was indeed increased.

For the cars domain experiment setting, we report the F1-micro and F1-macro scores for the different resulting models at each method phase for different K values, K= {1, 2, 3, 5, 10, 20, 30}. For each K, we test different models using fastText and RoBERTa, and the confidence intervals for 10 executions of each model were evaluated. We found no statistically difference between the different K values. To set a criterion for the model tests, we chose the value of K = 5, because its variance was slightly lower than the rest.

To validate the resulting classification models of the proposed method we run different experiment settings. Table 12 shows the evaluation, for each analysis domain, of fastText and RoBERTa models using a 5-fold stratified cross validation strategy trained with the corresponding SS, for the all tags classification task. The above evaluation was useful to check how close the prediction was to unsupervised labeling. These results are surely high because the train and test sets share textual patterns that are the bigrams and unigrams that generated them and this information is used by the classification models.

In order to check the predictive power of the model trained with the SS with respect to the GT, a model per domain was trained for predicting all the multidimensional tags. Table 13 shows the results for fastText and RoBERTa classifiers. Compared with the results of the manual validation of the GT subset (see Table 11), the trained models improve the scores of the labeling result, being very close to the decision of the human expert. The table also shows that RoBERTa outperforms fastText results only in the medicine domain. In the rest of the domains, both models have a similar performance.

The following experiments were performed only on the automotive domain. Tables 14 and 15 show the result scores, F1 and AUC-ROC scores respectively, for the models prepared for four different evaluation tasks, namely: all tags classification (6-class task), Business role (4-class task), Group role (2-class task) and Domain role (2-class task). To validate these models on an unseen set built independently of the SS, we ran a test using the GS dataset. In addition, any profile in the training set matching the GS was removed from the SS. The results are shown for the rows titled fastText SS-GS and RoBERTa SS-GS. To check if the results are good enough, we built different reference models trained with different ground-truth datasets (GT and GS) to be able to compare the prediction power of the trained models. If the results of SS dataset trained model are similar to the ground-truth dataset trained models, we can then claim the usefulness of the proposed unsupervised label method. Table 14 shows the results under the rows titled fastText 5-CV GS, RoBERTa 5-CV GS, fastText GT-GS, RoBERTa GT-GS, fastText GS-GT and RoBERTa GS-GT (refer to Section 6.1 to see the models details). Two baselines models are also presented in the table, the majority class and zero-shot classification. The best scores for the reference models have been marked in bold. On the other hand, the best results for the models trained with SS have been marked in bold and italics.

Notice that fastText and RoBERTa algorithms far exceed the baselines. FastText is an implementation that solves computing power problems, since it is a CPU efficient tool that allows models to be trained without the need for a GPU. On the other hand, BERT related models need the use of a GPU. In this sense, fastText models were trained in a server with 24 cores and 100Gb of RAM. The average time for training and predicting with fastText was 73 s. On the other hand, the RoBERTa models were trained on a server with 8 Intel7 cores, 64Gb of RAM, and a 24Gb RTX3090 GPU. For the training of 20 epochs, it took 121 s on average to train and predict. Both models performed very similarly in terms of effectiveness, so we performed an unpaired t-test, which confirmed that the difference in the results is not statistically significant. However, fastText trains much faster and requires less computational resources than RoBERTa (e.g., use of GPU). Therefore, it is enough to use the fastText model for the AP task under the described conditions.

The validation results of the models under the 5-CV GS strategy offer information on the degree of difficulty of making predictions on the GS dataset. The results are high because the models perform the test on a small subset of the GS dataset in each iteration. Based on these results, we verify that the models trained with the SS are not far from the best result of the reference models. On the other hand, models trained with SS outperform the models trained with the strategy GT-GS. The difference in the results may be due to the number of samples and words learned during the training. Also, comparing the strategies GS-GT with results on Table 13 for the automotive domain, the results of the models trained with the SS are better. The scores of the AUC-ROC metric (Table 15) confirm these results. As a conclusion, we can say that the proposed unsupervised labelling method is useful and effective.

7.3 Collective vs. Individual

We ran the following experiment to evaluate and compare our method for the task of distinguishing between individual and collective user profiles. For this purpose, we used the dataset given by (McCorriston et al., 2015) as gold standard. We evaluated the best models trained over the SS dataset for fastText and RoBERTa. In all experiments, the validation sets are the 100% of the data released by (McCorriston et al., 2015). The evaluation measures used were F1-micro, F1-macro and percentage of true positives per class. Table 16 shows the results of the evaluation of models trained with a balanced distribution of the classes of the silver standard dataset. Table 17 shows the results of the evaluation of trained models with the natural distribution of the SS classes. The exception is the Majority class method that works only with the class distribution.

According to the results of the previous tables, if we compare the percentage of hits per class, we can see that, for training with balanced classes, fastText performs much better than RoBERTa. However, when training with the full SS dataset, the best models were obtained for RoBERTa.

Table 18 shows the results reported by the state-of-the-art works for the prediction on the naturally distributed dataset. The M3 model presents the macro F1 value, while the other works report the accuracy value. The last two columns show the accuracy for each class.

While the state-of-the-art methods slightly outperforms our models in terms of global accuracy, all of them require significantly more data and features, as well as a manually curated dataset for training their models.

Comparing the results to (McCorriston et al., 2015), (Wood-Doughty et al., 2018) and (Wang et al., 2019), the best fastText model achieved 0.86 of accuracy when trained with a balanced SS subset. For the naturally distributed SS, fastText achieved the best score with 0.90 of accuracy, but predictions are very similar to the majority class baseline.

Finally, the models that best tradeoff offer for the two classes are those of RoBERTa. RoBERTa model achieved 0.85 of accuracy for the collective class compared to the best model of M3 with 0.807. Thus, our approach achieved the best score for the collective class, which is a major source of error in the other approaches in the literature (Wang et al., 2019).

7.4 RepLab Evaluation

In this section, we discuss the evaluation results on the RepLab 2014 for author profiling dataset. It is important to emphasize that RepLab’s author profiling task and ours are different. Firstly, some of the dimensions of RepLab are transversal to the categories of our SS dataset. For example, in RepLab, the classes journalist and professional contains both collective and individuals. Besides, RepLab consider the class company separately from professional. For the sake of simplification, we placed under our professional user profile the following RepLab classes: professional, stockholder, investor, employee and company. Besides, we discarded the Undecidable and NGO classes, as they do not fit well into our multidimensional model. We also removed the “sportsmen” class because it involves several classes of our profile model: athletes (professional.i.*), sport business (professional.c.*) and sports newspapers (journalist.c.*).

It must be noticed that the evaluation results are not comparable to those published in (Amigó et al., 2014) and (Nebot et al., 2018), since the models were trained on different types of data and features, with different class configurations. In our case, we trained the models with the user descriptions of the silver standard, and then evaluated with the RepLab user accounts mapped to our multi-dimensional model.

Tables 19 and 20 show the results obtained for the influencer subset and the overall RepLab dataset. Notice that the overall performance of our method is poorer than in the other datasets. After inspecting the RepLab dataset in depth, we realized that the categorization criteria of users in RepLab differed notably from ours. Experts in RepLab were more focused on the users’ “intentions” rather than their reported description. For example, a well-known TV media was tagged as NGO because its posts were usually related to regular campaigns related to social activities. As a result, author profiling in RepLab is a very hard task, and no approach in the literature achieved good results for this dataset (Nebot et al., 2018).

7.5 Discussion

The experiments allowed validating the starting hypothesis, demonstrating that the labeling of the most frequent bi-grams related to the predefined topics by class captures the semantics of the profiles associated with that class. From the manual labeling of a small seed of unigrams and bi-grams within a data stream, it was possible to build a large and representative dataset of the intended classes. According to the results, we can conclude that the vocabulary in the profiles is sufficient to learn signs of the different proposed business dimensions. An interesting finding was that our method significantly outperforms the Zero-shot text classification method used as baseline.

Compared to other approaches in the literature, our method performs well for the Group role perspective. Unfortunately, we only found one dataset for the Business role perspective: RepLab 2014. Our method performs poorly in this dataset similarly to other approaches in the literature. Our main conclusion is that this dataset was designed with different criteria, which are not correlated to user descriptions.

After performing an error analysis on the GS results, we found that most errors come from Lovers&Fans individuals misclassified as Professionals. This is because many professionals also express their interests and hobbies in their descriptions along with their professional occupation. Similar errors occur between the Journalist and Professional collective classes, where some specialized magazines can be identified as professional media. Indeed, as their goals and descriptions are very similar, the decision to classify a specialized magazine as Journalist or Professional is quite subjective. Therefore, analysts should decide which class best fits these conflicting samples accordingly to their tasks.

7.6 Limitations

Our approach has the following limitations. First, the work focuses on the multiclass classification problem, therefore only a single label can be assigned to each user profile. However, many Twitter users often list in their description all the roles they take on (e.g. different occupations, hobbies) and have taken on (e.g. previous occupations) in their life. When different roles are listed in the same profile, it becomes very difficult to identify the primary role. In (Sloan et al., 2015), when multiple occupations appear, the first mentioned one is selected. In RepLab, experts were mainly focused on classifying the user’s intention (through the analysis of their posts) rather than on the own profile description (see Section 7.4 and 7.5). Consequently, discrepancies and difficulties arise when only a single label (per dimension) is assigned to complex profiles. In our method, we make use of the conflict resolution rules to make decision on the final single label per profile dimension. A second limitation is that the proposed method can only be applied to well-formalized business domains, where prior knowledge is available regarding their organization, hierarchies, and relationships. On the other hand, there are other more abstract domains whose classes are more complex to model only with bigrams, such as the identification of influencers or the classification of hackers, bots, spammers and haters in social media. These types of tasks usually require to analyze more information like the interactions between users, posts text, network structure, etc. Finally, biases have not been treated in depth in this work. The biases can be conditioned by many factors, including the training datasets, the pre-trained word embeddings or the algorithm itself. In this work, we alleviate the problem of bias in the Dataset Debugger phase by applying conflict resolution rules. However, more research needs to be done for detecting possible bias in the labelled datasets as well as reducing its impact in the trained models.

8 Conclusions

This paper proposes a methodology for author profiling (AP) in Twitter based on social business intelligence roles. The method allows the unsupervised construction of a labelled dataset that serves as input to different text classification tasks. Unlike most approaches on AP for social networks, we do not analyze nor mine the tweets contents for inferring the author’s roles. Instead, we automatically build a training dataset from unlabelled user descriptions by making use of the multidimensional user profile knowledge model provided by the analysts.

Existing AP approaches are time-consuming and resource demanding. These solutions use ad-hoc machine learning methods based on large manually labelled training datasets, which are hard to build and difficult to be extended to other analysis tasks or contexts.

From the theoretical point of view, this study makes important contributions to the way AP is performed within social business intelligence (SBI) projects. Firstly, we propose a novel method for automatically building training datasets aimed at classifying user profiles in dynamic scenarios. Our method relies on semantic knowledge represented by ontologies provided by the analysts when starting a SBI project, from which basic linguistic information is extracted to identify candidate unlabelled user profiles. In this way, the generated training data are directly linked to the concepts represented in the knowledge multidimensional model (i.e., users’ roles). Thus, we can check consistency and conflicts in the training data. As a result, the proposed method contributes to the current state of the art with an integrated view of ontologies and predictive models for AP in social networks. Moreover, the method is able to deal in dynamic scenarios where the semantic knowledge needs to be updated to consider new roles or rejecting others. In this case, the method just builds a new training dataset and new predictive models for the updated knowledge. Another implication of this paper is that the use of the user profiles, instead of their posts and/or metrics, is enough for characterizing their business roles. Previous methods based on posts and metrics obtained very poor results due to the nature of social network contents, which is very redundant, usually shared, and heterogeneous. Empirical results on different domains demonstrated the usefulness and effectiveness of the proposed method.

One of the greatest difficulties that analysts face when addressing machine learning tasks is the difficulty to obtain a good enough training dataset. In a SBI project, user roles and categories are defined on demand, so we cannot assume the existence of labeled datasets for them. Thus, the main practical implication of this work is that our approach can help to identify relevant user groups with minimal human intervention. This approach helps to save time, to reduce human effort and economic resources to obtain the predictive models for user profiling. This results in a dynamic framework where target profiles can be easily adapted to new on-demand needs. Furthermore, our proposed method achieved competitive results in all tested datasets, including those of the state-of-the-art that treated some SBI perspectives like Group and Business roles.

The multidimensional AP approach responds to the needs for analysis based on dynamic dimensions that arise in social media. Multidimensional AP can help informational systems to characterize the audience of published popular topics and news. As an example, we used AP in the domain of intelligent health surveillance (Aramburu et al., 2020) for detecting fake topics related to health. In this scenario, fake topics were dominated by non-expert audiences, whereas true topics involved relevant users with professional roles. Data quality can be also greatly benefit from AP as it is a direct quality indicator of the data sources. Preliminary results presented in (Aramburu et al., 2021) showed that user roles are relevant for quantifying the quality of Twitter data streams. In both studies, we manually built the user groups, which was a difficult and time-consuming task. With the proposed method, the user groups are defined much more quickly and at a much larger extent.

Future work aims at improving the classification of certain user categories that were more difficult to predict due to mixed contents. On the other hand, the study of the social metrics that best model each of the defined classes would allow us to build tighter predictive models enabling us to classify a larger proportion of users, especially when the user has no description field. Finally, we plan to perform some contrasting study in order to evaluate how well the user descriptions fit with the contents they generate, as well as to find correlations between descriptions and other aspects like psychological traits and emotions of users.

Regarding the limitations section, we plan to evaluate different methods for bias correction like AFLite (Le Bras et al., 2020) to improve the variability of the resulting training datasets. We also plan to study multi-output classifiers for detecting secondary roles for complex profiles as well as assigning to them a probability distribution instead of a unique category.

References

Amigó, E., Carrillo-de-Albornoz, E., Chugur, I., Corujo, A., Gonzalo, J., Meij, E., de Rijke, M., & Spina, D. (2014). Overview of RepLab 2014: author profiling and reputation dimensions for online reputation management. In E. Kanoulas, M. Lupu, P. Clough, M. Sanderson, M. Hall, A. Hanbury, & E. Toms (Eds.), Information Access evaluation. Multilinguality, Multimodality, and Interaction (8685 vol.). Springer. Lecture Notes in Computer Science. https://doi.org/10.1007/978-3-319-11382-1_24

Aramburu, M. J., Berlanga, R., & Lanza-Cruz, I. (2021). Quality management in social business intelligence projects. In Proceedings of the 23rd International Conference on Enterprise Information Systems - Volume 1: ICEIS (pp. 320–327). https://doi.org/10.5220/0010495703200327

Aramburu, M. J., Berlanga, R., & Lanza-Cruz, I. (2020). Social media multidimensional analysis for intelligent health surveillance. International Journal of Environmental Research and Public Health, 17, 2289. https://doi.org/10.3390/ijerph17072289

Aswani, R., Kar, A. K., & Vigneswara Ilavarasan, P. (2018). Detection of spammers in twitter marketing: a hybrid approach using social media analytics and bio inspired computing. Information Systems Frontiers, 20, 515–530. https://doi.org/10.1007/s10796-017-9805-8

Berlanga, R., García-Moya, L., Nebot, V., Aramburu, M. J., Sanz, I., & Llidó, D. M. (2015). SLOD-BI: an open data infrastructure for enabling social business intelligence. International Journal of Data Warehousing and Mining (IJDWM), 11(4), 1–28. https://doi.org/10.4018/ijdwm.2015100101

Bojanowski, P., Grave, E., Joulin, A., & Mikolov, T. (2017). Enriching word vectors with subword information. Transactions of the Association for Computational Linguistics, 5, 135–146. arXiv:1607.04606v2.

Carrillo-de-Albornoz, J., Gonzalo, J., & Amigó, E. (2019). RepLab: an evaluation campaign for online monitoring systems. In N. Ferro & C. Peters (Eds.), Information Retrieval Evaluation in a Changing World. The Information Retrieval Series, vol 41. Springer. https://doi.org/10.1007/978-3-030-22948-1_20

Cervero, R., Rosso, P., & Pasi, G. (2021). Profiling fake news spreaders: personality and visual information Matter. In E. Métais, F. Meziane, H. Horacek, & E. Kapetanios (Eds.), Lecture notes in Computer Science (p. 12801). Springer. Natural Language Processing and Information Systems. https://doi.org/10.1007/978-3-030-80599-9_31

Choudrie, J., Patil, S., Kotecha, K., et al. (2021). Applying and understanding an advanced, novel deep learning approach: a covid 19, text based, emotions analysis study. Information Systems Frontiers, 23, 1431–1465. https://doi.org/10.1007/s10796-021-10152-6

Ciaramita, M., & Johnson, M. (2003). Supersense tagging of unknown nouns in WordNet. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, (pp 168-175). EMNLP 2003. https://aclanthology.org/W03-1022

Daelemans, W., et al. (2019). Overview of PAN 2019: bots and gender profiling, celebrity profiling, cross-domain authorship attribution and style change detection. In F. Crestani, et al. (Eds.), Lecture notes in Computer Science, vol11696, experimental IR meets multilinguality, multimodality, and Interaction. Springer. https://doi.org/10.1007/978-3-030-28577-7_30

Devlin, J., Chang, M., Lee, K., & Toutanova, K. (2019). BERT: Pre-training of deep bidirectional transformers for language understanding. North American Chapter of the Association for Computational Linguistics, NAACL.

Gallinucci, E., Golfarelli, M., & Rizzi, S. (2015). Advanced topic modeling for social business intelligence. Information Systems, 53, 87–106. https://doi.org/10.1016/j.is.2015.04.005

Joulin, A., Grave, E., Bojanowski, P., & Mikolov, T. (2016). Bag of tricks for efficient text classification. arXiv:1607.01759v3. https://doi.org/10.48550/arXiv.1607.01759

Keim, D., & Ward, M. (2007). Visualization. In M. Berthold & D. J. Hand (Eds) Intelligent data analysis. Springer.

Kim, A., Miano, T., Chew, R., Eggers, M., & Nonnemaker, J. (2017). Classification of twitter users who tweet about E-cigarettes. JMIR Public Health and Surveillance, 3. https://doi.org/10.2196/publichealth.8060

Kimball, R., & Ross, M. (2013). The data warehouse toolkit: the definitive guide to dimensional modeling. Wiley.

Kumar, U., Reganti, A. N., Maheshwari, T., et al. (2018). Inducing personalities and values from language use in social network communities. Information Systems Frontiers, 20, 1219–1240. https://doi.org/10.1007/s10796-017-9793-8

Lanza-Cruz, I., Berlanga, R., & Aramburu, M. J. (2018). Modeling analytical streams for social business intelligence. Informatics, 5, MDPI.

Le Bras, R., Swayamdipta, S., Bhagavatula, C., Zellers, R., Peters, M., Sabharwal, A., & Choi, Y. (2020, November). Adversarial filters of dataset biases. In International Conference on Machine Learning (pp. 1078–1088). PMLR.

Li, H., Mukherjee, A., Liu, B., Kornfield, R., & Emery, S. L. (2014). Detecting campaign promoters on twitter using markov random fields. 2014 IEEE International Conference on Data Mining, 290–299.

Liu, Y., Ott, M., Goyal, N., Du, J., Joshi, M., Chen, D., Levy, O., Lewis, M., Zettlemoyer, L., & Stoyanov, V. (2019). RoBERTa: a robustly optimized BERT pretraining approach. ArXiv, abs/1907.11692.

López-Monroy, A. P., Montes-y-Gómez, M., Escalante, H. J., Pineda, L. V., & Stamatatos, E. (2015). Discriminative subprofile-specific representations for author profiling in social media. Knowledge Based Systems, 89, 134–147.

López-Santillán, R., Montes-y-Gómez, M., González-Gurrola, L. C., Alonso, G. R., & Prieto-Ordaz, O. (2020). Richer document embeddings for author profiling tasks based on a heuristic search. Information Processing & Management, 57, 102227.

Markov, I., Gómez-Adorno, H., Posadas-Durán, J. P., Sidorov, G., & Gelbukh, A. (2017). Author profiling with Doc2vec neural network-based document embeddings. In O. Pichardo-Lagunas, & S. Miranda-Jiménez (Eds.), Advances in Soft Computing. Lecture notes in Computer Science (10062 vol.). Springer. https://doi.org/10.1007/978-3-319-62428-0_9

McCorriston, J., Jurgens, D., & Ruths, D. (2015). Organizations are users too: characterizing and detecting the presence of organizations on Twitter. International AAAI Conference on Web and Social Media, ICWSM.

Mikolov, T., Sutskever, I., Chen, K., Corrado, G. S., & Dean, J. (2013). Distributed representations of words and phrases and their compositionality. In Proceedings of a meeting held December 5-8, 2013. Advances in Neural Information Processing Systems 26: 27th Annual Conference on Neural Information Processing Systems 2013 (pp. 3111–3119). Lake Tahoe, Nevada, United States. https://proceedings.neurips.cc/paper/2013/hash/9aa42b31882ec039965f3c4923ce901b-Abstract.html

Miller, G. A. (1995). WordNet: a lexical database for English. Communications Of The Acm, 38, 39–41.

Mishra, P., Tredici, M. D., Yannakoudakis, H., & Shutova, E. (2018). Author profiling for abuse detection. International Conference on Computational Linguistics, COLING.

Nebot, V., Pardo, F. M., Berlanga, R., & Rosso, P. (2018). Identifying and classifying influencers in Twitter only with textual information. In M. Silberztein, F. Atigui, E. Kornyshova, E. Métais & F. Meziane. (Eds.), Lecture Notes in Computer Science, vol 10859, Natural Language Processing and Information Systems. NLDB 2018. Springer. https://doi.org/10.1007/978-3-319-91947-8_3

Ouni, S., Fkih, F., & Omri, M. (2021). Toward a new approach to author profiling based on the extraction of statistical features. Social Network Analysis and Mining, 11, 1–16. https://doi.org/10.1007/s13278-021-00768-6

Pardo, F.M., Rosso, P., Verhoeven, B., Daelemans, W., Potthast, M., & Stein, B. (2016). Overview of the 4th Author Profiling Task at PAN 2016: Cross-Genre Evaluations. In Series CEUR Workshop Proceedings vol.1609. Working Notes of CLEF 2016 - Conference and Labs of the Evaluation Forum (pp. 750-784). Evora, Portugal. CEURWS.org. http://ceur-ws.org/Vol-1609/16090750.pdf

Peng, D., Wang, Y., Liu, C., et al. (2020). TL-NER: a transfer learning model for chinese named entity recognition. Information Systems Frontiers, 22, 1291–1304. https://doi.org/10.1007/s10796-019-09932-y

Pennacchiotti, M., & Popescu, A. (2011). A machine learning approach to Twitter user classification. International AAAI Conference on Weblogs and Social Media, ICWSM.

Potthast, M., Rosso, P., Stamatatos, E., & Stein, B. (2019). A decade of shared tasks in digital text forensics at PAN. In L. Azzopardi, B. Stein, N. Fuhr, P. Mayr, C. Hauff, & D. Hiemstra, (Eds.), Lecture Notes in Computer Science, vol 11438. Springer. https://doi.org/10.1007/978-3-030-15719-7_39

Rangel, F., Sarracén, G. L., Chulvi, B., Fersini, E., & Rosso, P. (2021). Profiling hate speech spreaders on Twitter Task at PAN 2021. CLEF, CEUR-WS.org.

Rodríguez-Vidal, J., Carrillo-de-Albornoz, J., Gonzalo, J., & Plaza, L. (2021). Authority and priority signals in automatic summary generation for online reputation management. Journal of the Association for Information Science and Technology, 72, 583–594. https://doi.org/10.1002/asi.24425

Romera-Paredes, B., & Torr, P. H. (2015). An embarrassingly simple approach to zero-shot learning. International Conference on Machine Learning, ICML.

Schler, J., Koppel, M., Argamon, S. E., & Pennebaker, J. W. (2006). Effects of age and gender on blogging. AAAI Spring Symposium: Computational Approaches to Analyzing Weblogs.

Schlicht, I. B., & Magnossão de Paula, A., F. (2021). Unified and multilingual author profiling for detecting haters. Proceedings of the Working Notes of CLEF 2021 - Conference and Labs of the Evaluation Forum, 2936, 1837–1845. https://dblp.org/rec/conf/clef/SchlichtP21.bib

Sloan, L., Morgan, J., Burnap, P., & Williams, M. (2015). Who tweets? Deriving the demographic characteristics of age, occupation and social class from Twitter user meta-data. PLoS One, 10(3), e0115545.

Vaswani, A., Shazeer, N. M., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., & Polosukhin, I. (2017). Attention is all you need. Advances in neural information processing systems, (pp. 5998–6008). ArXiv, abs/1706.03762.

W3C Semantic Web (2012). SKOS. Simple knowledge organization System. https://www.w3.org/2004/02/skos/. Accessed 25 May 2021

W3C Recommendation OWL (2004). Web ontology language guide. https://www.w3.org/TR/owl-guide/. Accessed 14 Sept 2021