Abstract

In sequential decision problems, an act of learning cost-free evidence might be symptomatic, in the sense that performing this act itself provides evidence about states of the world it does nothing to causally promote. It is well known that orthodox causal decision theory, like its main rival evidential decision theory, may sanction such acts as rationally impermissible. This paper shows that, under plausible assumptions, a minimal version of ratificationist causal decision theory, known as principled ratificationism, fares better in this respect, for it never labels symptomatic acts of learning cost-free evidence as rationally impermissible.

1 Introduction

It has long been recognized that orthodox causal decision theory (CDT) may violate the following intuitively attractive claim, established in Leonard Savage’s (1954) classical decision theory:

Value of Evidence: Your prior expectation of the maximal expected value calculated relative to your credence function revised in response to learning cost-free evidence is at least as high as the maximal expected value calculated relative to your credence function before revision.

In other words, when your goal is to choose a terminal act [Footnote 1] that maximizes expected value, this claim says that expecting to learn cost-free evidence cannot lead you to expect to make your decision worse, and thus cost-free evidence is worth waiting for in advance of making your terminal decision. [Footnote 2] It is intuitively attractive, for it accords with the common thought that learning evidence is advantageous, unless it is too costly.

Much like its main rival, evidential decision theory (EDT) [Footnote 3], CDT may violate Value of Evidence in sequential decision problems in which the act of learning cost-free evidence is symptomatic, in the sense that performing this act itself provides evidence about states of the world that it does nothing to causally promote (see Maher, 1990). More precisely, in these decision problems, it is not only that an outcome of your learning cost-free evidence changes your credences for states of the world, but also the act of learning itself has evidential bearing on these states. And CDT may sanction such acts as rationally impermissible. But when the act of learning cost-free evidence is not symptomatic, i.e. it is evidentially independent of states of the world, CDT always satisfies Value of Evidence (see Skyrms, 1990b; Arntzenius, 2008). Could there be a theory of rational choice that never sanctions symptomatic acts of learning cost-free evidence as rationally impermissible?

In this paper, I show that a modification of CDT, known as principled ratificationism [Footnote 4], never labels a symptomatic act of learning cost-free evidence as rationally impermissible whenever you face a choice between this act and the act of not learning cost-free evidence (Sect. 3). Principled ratificationism says that it is never rationally permissible to choose causally nonratifiable acts. And, as I will show, under plausible assumptions, the symptomatic act of learning cost-free evidence is always causally ratifiable. That is, on the supposition of performing it, this act cannot have lower causal expected value than the act of not learning cost-free evidence. In contrast, the act of not learning cost-free evidence may be causally nonratifiable (Sect. 4). Thus, while CDT may count symptomatic acts of learning cost-free evidence as rationally impermissible, principled ratificationism never does so, and it can even sanction such acts as uniquely rationally permissible. I explain why this is so by pointing to the fact that while CDT disregards any information that the symptomatic act of learning can itself provide, principled ratificationism always takes this information into account before issuing its recommendation (Sect. 5).

The main result of this article can also be read as showing that Value of Evidence can be extended to at least one type of probabilistic dependence between the act of learning cost-free evidence and states of the world. Value of Evidence has been established in the framework of Savage’s decision theory where it is assumed, albeit not explicitly in its formalism, that acts and states of the world are probabilistically independent. Thus, within the confines of this theory, Value of Evidence holds under the assumption that while an outcome of learning changes your credences for states of the world, the act of learning itself has no evidential bearing on these states. But when the act of learning evidence is symptomatic, we allow for the possibility that this act and some factors pertaining to states of the world are not probabilistically independent—they are evidentially dependent. And it is far from evident whether Value of Evidence can always hold under these circumstances. This paper shows that, under plausible assumptions, this can always be so once we allow our choice between the symptomatic act of learning cost-free evidence and the act of not learning to be guided by principled ratificationism.

2 EDT, CDT, and Principled Ratificationism

I will start by describing the main difference between two leading theories of rational choice: EDT and CDT. Against this background, I will then present a minimal version of causal ratificationism—principled ratificationism. In doing so, I will also introduce some basic terminology that will be used throughout the paper.

2.1 EDT and CDT

When you face a decision problem, you have some finite set of pairwise exclusive propositions describing available acts, \({\mathcal {A}}\). These are propositions that you can make true by deciding. When you deliberate what to do, there is also some finite set of mutually exclusive and jointly exhaustive propositions representing the possible states of the world, \({\mathcal {S}}\). You don’t know which proposition in \({\mathcal {S}}\) is true, but you have a credence function c—a probability function defined over an algebra of propositions \({\mathcal {F}}\) that includes \({\mathcal {S}}\) and \({\mathcal {A}}\)—which represents your degrees of belief (credences) for the propositions in \({\mathcal {F}}\).

Your possible acts produce more or less desirable outcomes. The outcome of performing act \(A \in {\mathcal {A}}\) in state of the world S is the conjunction \(A \wedge S\), i.e. a proposition that fully specifies what you care about when you decide to do A in state S. These propositions form the domain of your value function V, which represents your desirability for any conjunction \(A \wedge S\): it maps propositions describing outcomes to real numbers, where a higher real number represents a more desirable or valuable outcome.

EDT and CDT agree that an act \(A \in {\mathcal {A}}\) is rationally permissible if it maximizes some sort of expected value, yet they disagree on how this value should be defined. While EDT requires that you maximize evidential expected value, CDT requires you to maximize causal expected value.

In order to pin down a philosophically significant difference between these two notions of expected value, let us first introduce Richard Jeffrey’s (1965, p. 80) formula for the desirability of any proposition:

Desirability: For any proposition \(X \in {\mathcal {F}}\) such that \(c\left( X\right) > 0\) and any set of propositions \({\mathcal {Y}}\) that partitions X:

$$\begin{aligned} V\left( X \right) {\mathop {=}\limits ^{\textrm{def}}} \sum _{Y \in {\mathcal {Y}}} c\left( Y \vert X \right) \cdot V(Y). \end{aligned}$$

That is, your value of X is your expectation of its value over some partition of X.

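Now, since \(\left\{ A \wedge S: S \in {\mathcal {S}} \right\}\) is a partition of A, we get, from Desirability, the V-value of act A:

$$\begin{aligned} V\left( A \right) = \sum _{S \in {\mathcal {S}}} c\left( S \vert A \right) \cdot V\left( A \wedge S \right) , \end{aligned} \tag{1}$$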

which is a weighted average of the values for \(A \wedge S\), where the weight is given by the conditional credence \(c\left( S\vert A \right)\). According to EDT, A’s evidential expected value is measured by V(A).

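Let us also assume that each \(S \in {\mathcal {S}}\) can be understood as a conjunction \(K \wedge D\), where K belongs to a partition \({\mathcal {K}}\) containing propositions about the factors that are outside the causal influence of your acts, and D belongs to a partition \({\mathcal {D}}\) of propositions specifying the factors that are within the causal influence of your acts. Then, \(V\left( A \right)\) can be reformulated as follows:

$$\begin{aligned} V\left( A \right) = \sum _{K \in {\mathcal {K}}} c\left( K \vert A \right) \sum _{D \in {\mathcal {D}}} c\left( D \vert A \wedge K \right) \cdot V\left( A \wedge K \wedge D \right) = \sum _{K \in {\mathcal {K}}} c\left( K \vert A \right) \cdot V\left( A \wedge K \right) . \end{aligned} \tag{2}$$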

Since the Ks describe factors outside the causal influence of your acts, the conditional credences \(c\left( K\vert A \right)\) used to weigh the values for \(A \wedge K\) cannot measure the extent to which performing A causally promotes desirable and undesirable outcomes. What they measure instead is the extent to which learning that you’ve performed A would merely indicate or be evidence for desirable and undesirable outcomes. V(A), thus, measures the extent to which performing A would be good or bad news for you, and EDT requires you to maximize A’s news value.

But orthodox causal decision theorists disagree. They claim that, in weighing the values for \(A \wedge S\), we should ignore any evidence that the performance of an act can give and should pay attention only to the extent to which the act causally promotes desirable and undesirable outcomes, i.e. to causal information which is encoded in \(c\left( D \vert A \wedge K \right)\). We should, thus, replace \(c\left( K\vert A\right)\), which provides only evidential information about which factors K an act A indicates, with \(c\left( K \right)\). Hence, A’s causal expected value, U(A), can be given as follows: [Footnote 5]

$$\begin{aligned} U\left( A \right) = \sum _{K \in {\mathcal {K}}} c\left( K \right) \sum _{D \in {\mathcal {D}}} c\left( D \vert A \wedge K \right) \cdot V\left( A \wedge K \wedge D \right) = \sum _{K \in {\mathcal {K}}} c\left( K \right) \cdot V\left( A \wedge K \right) . \end{aligned} \tag{3}$$

Thus, when you maximize \(U\left( A\right)\), you maximize a weighted average of the values for \(A \wedge K\), where the weight is given by the unconditional credence \(c\left( K\right)\).

Defenders of CDT believe that, in Newcomb-style decision problems [Footnote 6], the advice to ignore any evidence the performance of an act provides is superior to EDT’s ‘irrational policy of managing the news’ (see Lewis, 1981, p. 5). For it enables us to avoid choosing acts that do not causally promote desirable outcomes, even if they are considered as providing the best evidence or news for them.
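To make the contrast between news value and causal expected value concrete, the following is a minimal sketch in Python. All of the numbers (a Newcomb-style payoff table and the credences) are hypothetical and are not taken from the text; the names `evidential_value` and `causal_value` are likewise introduced only for illustration. The sketch weighs the values for \(A \wedge K\) by \(c\left( K\vert A \right)\) in the first case and by \(c\left( K \right)\) in the second.

```python
# Hypothetical Newcomb-style problem; every number below is an assumption.
# K ranges over factors outside the causal influence of the acts.
STATES = ["predicted_one_box", "predicted_two_box"]
ACTS = ["one_box", "two_box"]

# Unconditional credences c(K).
c_K = {"predicted_one_box": 0.5, "predicted_two_box": 0.5}

# Conditional credences c(K | A): the act is evidence about the prediction.
c_K_given_A = {
    "one_box": {"predicted_one_box": 0.9, "predicted_two_box": 0.1},
    "two_box": {"predicted_one_box": 0.1, "predicted_two_box": 0.9},
}

# Desirabilities V(A & K).
V = {
    ("one_box", "predicted_one_box"): 1_000_000,
    ("one_box", "predicted_two_box"): 0,
    ("two_box", "predicted_one_box"): 1_001_000,
    ("two_box", "predicted_two_box"): 1_000,
}


def evidential_value(act):
    """News value: V(A) = sum over K of c(K | A) * V(A & K)."""
    return sum(c_K_given_A[act][k] * V[(act, k)] for k in STATES)


def causal_value(act):
    """Causal expected value: U(A) = sum over K of c(K) * V(A & K)."""
    return sum(c_K[k] * V[(act, k)] for k in STATES)


edt_choice = max(ACTS, key=evidential_value)
cdt_choice = max(ACTS, key=causal_value)
print("EDT recommends:", edt_choice)
print("CDT recommends:", cdt_choice)
```

With these assumed numbers the two theories come apart: the act that is the best news is not the act that does the most to causally promote desirable outcomes.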

2.2 Principled Ratificationism

But consider the following decision problem due to Egan (2007):

PSYCHOPATH BUTTON: You can either push a button or do nothing. Pushing the button will cause all psychopaths to die while doing nothing will cause nothing. You are quite confident that you are not a psychopath and you would like to live in a world with no psychopaths. But you are also quite confident that only a psychopath would press the button. And you very strongly prefer living in a world with psychopaths to dying.

Here CDT recommends pushing the button, since you are very confident that you are not a psychopath, and hence that pushing will cause the desired outcome. But this advice appears problematic, for by deciding to push the button, you would give yourself evidence for thinking that you are a psychopath, and hence that you are very likely to cause your own death which is highly undesirable. Yet CDT tells us that we should ignore this evidence.

One important reason to doubt CDT’s verdict in this and similar decision problems [Footnote 7] is that it is unstable. That is, as your confidence that you will choose a particular act recommended by CDT increases, CDT will change its mind and start telling you that you ought not to choose this act. So once you choose an act recommended by CDT, you will regret it, for, on the supposition of performing it, some other act would be recommended by CDT. Thus, on the supposition of pushing the button, this act has lower causal expected value than the act of doing nothing. In other words, pushing the button is causally nonratifiable.

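To make the idea of causal ratifiability more precise, let us first define the notion of conditional causal expected value of act \(A_{i}\) given \(A_{j}\):

$$\begin{aligned} U\left( A_{i}\vert A_{j} \right) {\mathop {=}\limits ^{\textrm{def}}} \sum _{K \in {\mathcal {K}}} c\left( K\vert A_{j} \right) \cdot V\left( A_{i} \wedge K \right) . \end{aligned} \tag{4}$$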

Given (4), we say that an act \(A_{i}\) is causally ratifiable iff there is no alternative \(A_{j}\) such that \(U\left( A_{j}\vert A_{i} \right) > U\left( A_{i}\vert A_{i} \right)\). Thus, \(A_{i}\) is causally ratifiable just in case it maximizes causal expected value on the supposition that it is decided upon.

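There is another useful way to understand the idea of causal ratifiability. By performing an act \(A_{i}\), you can give yourself evidence about the degree to which you would expect \(A_{i}\) to do more to causally improve desirable outcomes than \(A_{j}\). Following Gallow (2020), call this evidence the improvement news and define it as:

$$\begin{aligned} I_{A_{i}}\left( A_{i}, A_{j} \right) {\mathop {=}\limits ^{\textrm{def}}} U\left( A_{i}\vert A_{i} \right) - U\left( A_{j}\vert A_{i} \right) . \end{aligned} \tag{5}$$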

That is, in a choice between \(A_{i}\) and \(A_{j}\), the improvement news given by \(A_{i}\) tells us how much you expect the U-value of \(A_{i}\) would differ from the U-value of \(A_{j}\) on the supposition that \(A_{i}\) is decided upon. And, as is easy to observe, \(A_{i}\) is causally ratifiable in this choice situation just in case \(I_{A_{i}}\left( A_{i}, A_{j} \right) \ge 0\). So this is an act that you would expect to do the most to causally improve desirable outcomes, once you have chosen it. [Footnote 8]

To prevent unstable decisions, some believe that CDT should be supplemented with the causal ratifiability constraint (see, e.g. Weirich, 1985; Sobel, 1990). The minimal way by which the idea of causal ratifiability modifies CDT can be formulated as follows (see, e.g. Joyce, 2012):

Principled ratificationism: It is never rationally permissible to choose causally nonratifiable acts.

That is, if there is some act \(A_{j}\) such that \(U\left( A_{j}\vert A_{i} \right) > U\left( A_{i}\vert A_{i} \right)\), then \(A_{i}\) can be eliminated from the menu of acts that you can rationally choose, according to principled ratificationism.
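The ratifiability test can be sketched numerically for a case shaped like PSYCHOPATH BUTTON. The credences and desirabilities below are hypothetical, chosen only to respect the qualitative description of the case, and the function names are introduced for illustration. The sketch computes \(U\left( A_{i}\vert A_{j} \right)\) by weighing the values for \(A_{i} \wedge K\) with \(c\left( K\vert A_{j} \right)\), takes the improvement news to be the difference between \(U\left( A_{i}\vert A_{i} \right)\) and \(U\left( A_{j}\vert A_{i} \right)\), and eliminates every act for which some alternative yields negative improvement news.

```python
# Hypothetical numbers for a PSYCHOPATH BUTTON-style problem (assumptions only).
STATES = ["psychopath", "not_psychopath"]
ACTS = ["push", "refrain"]

# Conditional credences c(K | A): pushing is strong evidence of psychopathy.
c_K_given_A = {
    "push": {"psychopath": 0.9, "not_psychopath": 0.1},
    "refrain": {"psychopath": 0.01, "not_psychopath": 0.99},
}

# Desirabilities V(A & K): dying is very bad, a psychopath-free world is good.
V = {
    ("push", "psychopath"): -100.0,
    ("push", "not_psychopath"): 10.0,
    ("refrain", "psychopath"): 0.0,
    ("refrain", "not_psychopath"): 0.0,
}


def conditional_causal_value(act_i, act_j):
    """U(A_i | A_j) = sum over K of c(K | A_j) * V(A_i & K)."""
    return sum(c_K_given_A[act_j][k] * V[(act_i, k)] for k in STATES)


def improvement_news(act_i, act_j):
    """U(A_i | A_i) - U(A_j | A_i): how A_i compares to A_j once A_i is supposed."""
    return conditional_causal_value(act_i, act_i) - conditional_causal_value(act_j, act_i)


def is_ratifiable(act):
    """An act is causally ratifiable iff no alternative beats it on its own supposition."""
    return all(improvement_news(act, other) >= 0 for other in ACTS if other != act)


# Principled ratificationism only eliminates nonratifiable acts.
not_eliminated = [act for act in ACTS if is_ratifiable(act)]
for act in ACTS:
    print(act, "ratifiable:", is_ratifiable(act))
```

On these assumed numbers both acts are eliminated, since each looks worse than its alternative on the supposition that it is chosen.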

Although seemingly attractive, principled ratificationism has recently been challenged as a way to prevent instability. Most importantly, in cases like PSYCHOPATH BUTTON, no act is causally ratifiable, and hence no act counts as rationally permissible by the lights of principled ratificationism. Yet it seems that refraining should be preferable to pushing the button. In general, principled ratificationism can lead to unpleasant rational dilemmas where no act is rationally permissible by the theory’s lights, although some act seems preferable to the other.

But whether or not the above considerations provide a decisive strike against principled ratificationism, I will argue that there is another class of decision problems that motivates the use of principled ratificationism. And these decision problems do not necessarily involve the phenomenon of instability which triggered the emergence of causal ratificationism in the first place. Specifically, in the next section I will uncover an important advantage of principled ratificationism over CDT in a type of sequential decision problem in which a decision-maker has an opportunity to learn cost-free evidence before making a terminal decision. As I will show, principled ratificationism never sanctions symptomatic acts of learning cost-free evidence as rationally impermissible in this type of decision problem.

3 Learning Cost-Free Evidence and Principled Ratificationism

Suppose that you face a sequential two-stage decision problem involving learning cost-free evidence (L), e.g. making a cost-free observation. More precisely, at stage 1 you decide whether to choose the act of learning cost-free evidence, \(A_{L}\), or the act of not learning this evidence, \(A_{\bar{L}}\), where \(A_{L}, A_{\bar{L}} \in {\mathcal {A}}\). And at stage 2 you choose among terminal acts in some set \({\mathcal {B}}\).

We can also put this problem as follows. Suppose that learning experience \({\mathcal {E}}\) incurs no cost, i.e. it does not affect your desirabilities for any conjunction of a terminal act in \({\mathcal {B}}\) and a state \(K \in {\mathcal {K}}\). But once you decide to learn evidence, \({\mathcal {E}}\) would prompt a revision of your prior credence for any \(X \in {\mathcal {F}}\), c(X). This in turn will result in adopting a posterior credence for any X, \(c_{{\mathcal {E}}}(X)\), and in particular a posterior credence for any \(K \in {\mathcal {K}}\). Then, the question is whether you should choose a terminal act from \({\mathcal {B}}\) without learning this evidence or postpone this choice in order to learn this evidence and then select from the terminal acts in \({\mathcal {B}}\).

Importantly, we will allow for the possibility that the propositions Ks and the act of learning cost-free evidence are evidentially, but not causally, dependent. This means that the act of learning cost-free evidence, \(A_{L}\), is symptomatic: it is an indicator of states of the world that it does nothing to causally promote. That is, bearing in mind that the propositions Ks describe those factors of states that are causally independent of our acts, we assume that, for any \(K \in {\mathcal {K}}\), \(c\left( K\vert A_{L} \right) \ne c\left( K \right)\), which means that the act of learning cost-free evidence is only evidentially relevant to the Ks.

To illustrate this point, consider a simplified version of a sequential decision problem due to Maher (1990):

FINKELSTEIN: You are taking part in professor Finkelstein’s psychology experiment. Before you are two opaque boxes, one black and one white, and you are told that each box contains a quarter. You must choose one of the two acts: (B) bet that the quarter in the black box is lying heads up, or (\(\bar{B}\)) don’t bet that the quarter in the black box is lying heads up. If you bet and win, you gain the coins in both boxes (50 cents); if you bet and lose, you must pay \(\$1\). Before choosing, you are permitted to gather evidence by looking in the white box; you are not permitted to look in the black box. Based on previous psychological experiments of this type, you know that Finkelstein predicts reliably whether you will look in the white box. And you know that if he predicted that you will look in the white box, he left the quarter in the black box heads up. His prediction is believed to be \(60\%\) reliable. You can either postpone your choice between B and \(\bar{B}\), and look inside the white box first (\(A_{L}\)), or choose straightaway (\(A_{\bar{L}}\)) between B and \(\bar{B}\).

Let the relevant set of propositions describing states be \({\mathcal {K}} = \left\{ K_{H}, K_{\bar{H}}\right\}\), where \(K_{H}\) is the proposition that the quarter in the black box is heads up and \(K_{\bar{H}}\) is the proposition that the quarter in the black box is not heads up. And suppose that initially you are maximally unsure about whether the quarter in the black box is heads up and whether you will look inside the white box, so that \(c\left( K_{H}\right) = c\left( K_{\bar{H}} \right) = 0.5\) and \(c\left( A_{L}\right) = c\left( A_{\bar{L}} \right) = 0.5\).

Clearly, in FINKELSTEIN, looking inside the white box can’t affect whether or not the quarter in the black box is lying heads up, since this has been settled at the time of prediction. Hence, we have causal independence of the propositions \(K_{H}\) and \(K_{\bar{H}}\) on act \(A_{L}\). Yet looking inside the white box is symptomatic, for it is evidentially relevant to whether or not the quarter in the black box is heads up. That is, given that you believe Finkelstein’s prediction to be \(60\%\) reliable, your conditional credence for \(K_{H}\) given \(A_{L}\) is 0.6, and hence your conditional credence for \(K_{\bar{H}}\) given \(A_{L}\) is 0.4. Thus, we have that \(c\left( K_{H}\vert A_{L}\right) > c\left( K_{H}\right)\) and \(c\left( K_{\bar{H}}\vert A_{L}\right) < c\left( K_{\bar{H}}\right)\). Hence, we have evidential dependence of \(K_{H}\) and \(K_{\bar{H}}\) on act \(A_{L}\).

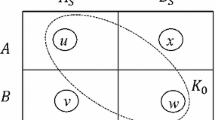

The structure of FINKELSTEIN and your desirabilities for act-state conjunctions at stage 2 of this problem are depicted in Fig. 1. So 1 is your desirability of getting 50 cents and \(-2\) is your desirability of losing 1 dollar. [Footnote 9] Note that the square nodes represent the points of choice and the circle nodes indicate the points of uncertainty where each of the branches (the possible outcomes \(R_{c_{H}}\) and \(R_{c_{\bar{H}}}\) of your learning experience) has some probability of occurring. Importantly, whichever act you choose at stage 1, this would not affect your desirabilities at stage 2.

So at node 0 you face a choice between learning cost-free evidence symptomatically, \(A_{L}\), and not learning this evidence, \(A_{\bar{L}}\). If you chose \(A_{\bar{L}}\), you would be deciding at node 1 between betting, B, and not betting, \(\bar{B}\), relative to your prior credences for \(K_{H}\) and \(K_{\bar{H}}\), \(c\left( K_{H} \right)\) and \(c\left( K_{\bar{H}} \right)\). And if you chose \(A_{L}\) at node 0, you would be deciding between B and \(\bar{B}\) relative to your posterior credences for \(K_{H}\) and \(K_{\bar{H}}\). However, initially you are uncertain which posterior credence function you would adopt after your learning experience \({\mathcal {E}}\). If you learned that the quarter is heads up (i.e. \({\mathcal {E}} = H\)), you would adopt \(c_{H}\) as your posterior, and you would be choosing between the terminal acts at node 3. But if you learned that the quarter is not heads up (i.e. \({\mathcal {E}} = \bar{H}\)), you would adopt the posterior credence function \(c_{\bar{H}}\), and you would face your terminal decision problem at node 2. Which one you will adopt is a matter of uncertainty that would be resolved at the circle node: it will be that either proposition \(R_{c_{H}}\) is true or else that \(R_{c_{\bar{H}}}\) is true.

In the remainder of this section, I will show that, under plausible assumptions, symptomatic acts of learning cost-free evidence, like looking in the white box in FINKELSTEIN, are always causally ratifiable. Hence, they are always sanctioned as rationally permissible by principled ratificationism.

In order to make this result more precise, I assume the following:

(1) Your prior and posterior credences over \({\mathcal {F}}\) are related as follows:

Reflection: Suppose that \({\mathcal {C}}_{{\mathcal {E}}}\) is a finite set of posterior credence functions. Let \(R_{c_{{\mathcal {E}}}} \in {\mathcal {F}}\) be the proposition that your posterior credence function over \({\mathcal {F}}\) is given by \(c_{{\mathcal {E}}}\). Then, for any \(X \in {\mathcal {F}}\):

$$\begin{aligned} c\left( X\vert R_{c_{{\mathcal {E}}}}\right) = c_{{\mathcal {E}}}\left( X \right) . \end{aligned}$$

(2) After stage 1 you will be certain which act \(A \in {\mathcal {A}}\) you have chosen. That is, we assume that, in your posterior credence distribution, for whichever act \(A \in {\mathcal {A}}\) you have chosen:

$$\begin{aligned} c_{{\mathcal {E}}}(A) = 1. \end{aligned}$$

(3) Whichever \(K \in {\mathcal {K}}\) is true, the extent to which you value the conjunction \(A \wedge R_{c_{{\mathcal {E}}}}\) coming out true is equal to the extent to which you value the truth of conjunction \(A \wedge B_{c_{{\mathcal {E}}}}\), where \(B_{c_{{\mathcal {E}}}}\) describes an act that would be chosen from \({\mathcal {B}}\) at stage 2 if \(R_{c_{{\mathcal {E}}}}\) were true. More precisely, for any \(K \in {\mathcal {K}}\), \(A \in {\mathcal {A}}\), \(B \in {\mathcal {B}}\) and \(c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}\):

$$\begin{aligned} V\left( A \wedge R_{c_{{\mathcal {E}}}} \wedge K \right) = V\left( A \wedge B_{c_{{\mathcal {E}}}} \wedge K \right) . \end{aligned}$$

(4) The act of learning cost-free evidence is evidentially relevant to states of the world that are outside your causal control. That is, for any \(K \in {\mathcal {K}}\):

$$\begin{aligned} c\left( K \vert A_{L} \right) \ne c\left( K \right) . \end{aligned}$$

(5) The act of not learning evidence is evidentially irrelevant to states of the world that are outside your causal control. That is, for any \(K \in {\mathcal {K}}\):

$$\begin{aligned} c\left( K \vert A_{\bar{L}} \right) = c\left( K \right) . \end{aligned}$$

(6) The extent to which you value any outcome \(B_{c_{{\mathcal {E}}}} \wedge K\) at stage 2 cannot be altered by what you do at stage 1. That is, for any \(K \in {\mathcal {K}}\), \(B \in {\mathcal {B}}\), \(A \in {\mathcal {A}}\), and \(c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}\):

$$\begin{aligned} V\left( A \wedge B_{c_{{\mathcal {E}}}} \wedge K \right) = V\left( B_{c_{{\mathcal {E}}}} \wedge K \right) . \end{aligned}$$

(7) States described by the propositions Ks are evidentially independent of the acts in \({\mathcal {B}}\), i.e. for all \(B \in {\mathcal {B}}\) and \(K \in {\mathcal {K}}\), \(c\left( K \right) = c\left( K\vert B \right)\). If this is so, then an act \(B_{i} \in {\mathcal {B}}\) which is causally ratifiable is an act that maximizes causal expected value. That is, for any \(B_{j} \in {\mathcal {B}}\), \(U\left( B_{i}\vert B_{i} \right) \ge U\left( B_{j}\vert B_{i} \right)\) holds just in case \(U\left( B_{i} \right) \ge U\left( B_{j} \right)\), since under this independence \(U\left( B_{i}\vert B_{i} \right) = U\left( B_{i} \right)\) and \(U\left( B_{j}\vert B_{i} \right) = U\left( B_{j} \right)\).

Before moving on, a few remarks on assumptions (1) and (7) are in order. As to assumption (1), first, following Skyrms (1990a, p. 92), van Fraassen (1995) and Huttegger (2013, 2014), I take Reflection to guide rational learning but not arbitrary types of belief change. That is, it regulates cases of genuine learning that should be distinguished from situations where one expects credences to change for other reasons, e.g. due to memory loss, brainwashing, or the influence of drugs. Thus, under this interpretation, you cannot violate Reflection and at the same time claim that you will form your posterior credences in an epistemically rational way. As shown in Huttegger (2013), this reading allows us to deflate the intuitive plausibility of many counterexamples to Reflection. [Footnote 10]

Second, it might be objected that by assuming Reflection, I have already established Value of Evidence. After all, as shown in Huttegger (2014) and in Huttegger and Nielsen (2020), Reflection and Value of Evidence are equivalent. Observe, however, that this equivalence has been established within the framework of Savage’s decision theory which assumes that states of the world are probabilistically independent of the acts. It is, thus, far from clear whether this result can be extended to decision theories that guide rational choice in situations in which an act of learning evidence and states of the world are probabilistically dependent.

To appreciate the importance of assumption (7), suppose that we drop it. Then, at stage 2 you may choose an act \(B_{i}\) which is evidentially relevant to the Ks. But if this is so, then you may not satisfy Reflection, contrary to assumption (1). After all, if you satisfy Reflection, it follows that your prior unconditional credence in K is the expected value of your anticipated posterior unconditional credences in K. That is, for any \(K \in {\mathcal {K}}\):

$$\begin{aligned} c\left( K \right) = \sum _{c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}} c\left( R_{c_{{\mathcal {E}}}} \right) \cdot c_{{\mathcal {E}}}\left( K \right) . \end{aligned} \tag{6}$$

Yet, when at stage 2 act \(B_{i}\) is evidentially relevant to the Ks (so that \(c_{{\mathcal {E}}}\left( K \right) \ne c_{{\mathcal {E}}}\left( K\vert B_{i} \right)\)), you will determine \(B_{i}\)’s conditional causal expected value relative to your posterior conditional credence function, \(c_{{\mathcal {E}}}\left( K\vert B_{i} \right)\), so that \(U\left( B_{i}\vert B_{i} \right) = \sum _{K \in {\mathcal {K}}} c_{{\mathcal {E}}}\left( K\vert B_{i} \right) \cdot V\left( B_{i} \wedge K \right)\). But then it is not generally true that your prior conditional credence in K, \(c\left( K\vert B_{i} \right)\), is equal to the expected value of your anticipated posterior conditional credences in K, \(c_{{\mathcal {E}}}\left( K\vert B_{i} \right)\). Therefore, you may violate Eq. (6), and hence Reflection. [Footnote 11]
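The consequence of Reflection appealed to here, namely that the prior credence in K equals the prior expectation of the anticipated posterior credences in K, can be illustrated with a small numerical sketch. The credences over the propositions \(R_{c_{{\mathcal {E}}}}\) and the posteriors themselves are hypothetical; the labels `c_H` and `c_not_H` merely echo the two posteriors of FINKELSTEIN.

```python
import math

# Hypothetical prior credences c(R_{c_E}) over which posterior will be adopted.
c_R = {"c_H": 0.5, "c_not_H": 0.5}

# Hypothetical posterior credences c_E(K) for each candidate posterior.
posteriors = {
    "c_H": {"K_H": 0.8, "K_not_H": 0.2},
    "c_not_H": {"K_H": 0.2, "K_not_H": 0.8},
}


def expected_posterior(state):
    """Prior expectation of the posterior credence in `state`.

    Under Reflection, c(K | R_{c_E}) = c_E(K), so by the law of total
    probability this sum is the prior credence c(K).
    """
    return sum(c_R[name] * posteriors[name][state] for name in c_R)


prior = {state: expected_posterior(state) for state in ("K_H", "K_not_H")}
print(prior)

# The prior so obtained is itself a probability distribution over the Ks.
assert math.isclose(sum(prior.values()), 1.0)
```

If the posteriors were instead conditional on a terminal act that is evidentially relevant to the Ks, nothing in this computation would guarantee that the corresponding conditional prior is recovered, which is the point made above.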

With our assumptions in mind, it is important to answer the following question: How should a rational agent reason through the stages of our decision scenario in order to decide between \(A_{L}\) and \(A_{\bar{L}}\)? To answer this question, this paper utilizes a particular approach to sequential choice known as sophisticated choice. [Footnote 12] In general, according to this approach, a rational agent should work backwards through the sequence of choices, determining first what she would choose at the final stage. Then, given her already predicted decision at the final stage, she should evaluate what will seem rational at the earlier stage, and iterate this procedure until she reaches the present stage.

Thus, in our two-stage decision scenario, the sophisticated chooser, who is guided by principled ratificationism, will determine at stage 1 the values for \(U\left( A_{L}\vert A_{\bar{L}} \right)\), \(U\left( A_{\bar{L}}\vert A_{\bar{L}} \right)\), \(U\left( A_{\bar{L}}\vert A_{L} \right)\) and \(U\left( A_{L}\vert A_{L} \right)\). But in doing so, she will take into account her anticipated choice at stage 2, i.e. her choice of an act from \({\mathcal {B}}\) which, by assumption (7), maximizes causal expected value relative to her anticipated posterior credence function (or posterior causal expected value), \(U_{c_{{\mathcal {E}}}}\). Observe also that the sophisticated-choice approach just sketched fits well with our assumption (3). In particular, from the standpoint of evaluating the act of learning at stage 1, whenever you consider, as a sophisticated chooser, how desirable this act is when your posterior credence function is \(c_{{\mathcal {E}}}\) (or when a proposition \(R_{c_{{\mathcal {E}}}}\) is true), you in fact consider how desirable this act is when your terminal decision at stage 2 is to choose act \(B_{c_{{\mathcal {E}}}}\).

Following this procedure, let us show how one can determine \(U\left( A_{i}\vert A_{j} \right)\), for \(i, j = L, \bar{L}\). [Footnote 13] Since \(\left\{ R_{c_{{\mathcal {E}}}}: c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}\right\}\) is a partition of some set of possible worlds \({\mathcal {W}}\), \(A_{i} \in {\mathcal {A}}\) is a disjunction of the propositions \(A_{i} \wedge R_{c_{{\mathcal {E}}}}\), \(A_{i} \equiv \bigvee _{c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}}\left( A_{i} \wedge R_{c_{{\mathcal {E}}}} \right)\). Hence, the U-value of \(A_{i}\) conditional on \(A_{j}\) can be given as follows:

Given assumptions (3) and (6), we can write Eq. (7) as:

Next, by probability theory, Eq. (8) becomes:

By assumptions (1) and (2), we have \(c\left( K \vert A_{i} \wedge R_{c_{{\mathcal {E}}}} \right) = c_{{\mathcal {E}}}\left( K\vert A_{i} \right) = c_{{\mathcal {E}}}\left( K \right)\). [Footnote 14] Hence, Eq. (9) becomes:

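Now for \(i = L\) and \(j = L\), by Eq. (10) we have:

$$\begin{aligned} U\left( A_{L}\vert A_{L} \right) = \sum _{c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}} c\left( R_{c_{{\mathcal {E}}}}\vert A_{L} \right) \sum _{K \in {\mathcal {K}}} c_{{\mathcal {E}}}\left( K \right) \cdot V\left( B_{c_{{\mathcal {E}}}} \wedge K \right) = \sum _{c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}} c\left( R_{c_{{\mathcal {E}}}}\vert A_{L} \right) \cdot U_{c_{{\mathcal {E}}}}\left( B_{c_{{\mathcal {E}}}} \right) . \end{aligned} \tag{11}$$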

That is, the U-value of the act of learning cost-free evidence symptomatically on the supposition that it is chosen is your prior expectation of the posterior causal expected value of act \(B_{c_{{\mathcal {E}}}}\), where \(B_{c_{{\mathcal {E}}}}\) is an act from \({\mathcal {B}}\) that you would choose if your posterior credence distribution were \(c_{{\mathcal {E}}}\). And this prior expectation is calculated relative to your prior credence distribution for the anticipated posterior credence functions described by the \(R_{c_{{\mathcal {E}}}}\)s, conditional on \(A_{L}\).

And for \(i = \bar{L}\) and \(j = L\), it follows from Eq. (10):

But note that when you decide not to learn cost-free evidence, you keep your prior credence function unchanged, and so \(R_{c_{{\mathcal {E}}}} = R_{c}\), for any \(c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}\). If so, then we can reasonably assume that you are certain that your prior credence function is given by c, and assume that your prior credence for \(R_{c}\) cannot be altered by conditionalizing on the proposition that you choose the act of not learning, \(A_{\bar{L}}\). Thus, \(c\left( R_{c}\vert A_{\bar{L}} \right) = c\left( R_{c} \right) = 1\). Hence, we can write \(U\left( A_{\bar{L}}\vert A_{L} \right)\) as:

$$\begin{aligned} U\left( A_{\bar{L}}\vert A_{L} \right) = \sum _{K \in {\mathcal {K}}} c\left( K\vert A_{L} \right) \cdot V\left( B_{c} \wedge K \right) . \end{aligned} \tag{13}$$

That is, the U-value of the act of not learning cost-free evidence on the supposition that the act of learning this evidence symptomatically is chosen is a prior causal expected value of act \(B_{c}\), where \(B_{c}\) is an act from \({\mathcal {B}}\) that you choose relative to your prior credence function c. And this causal expected value is prior, for it is calculated relative to your prior conditional credences \(c\left( K\vert A_{L} \right)\).

Now, given \(U\left( A_{L}\vert A_{L} \right)\) and \(U\left( A_{\bar{L}}\vert A_{L} \right)\) so understood, one can prove the following (the proof is given in the Appendix):

Proposition

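Suppose that assumptions (1)–(7) are satisfied in a choice between \(A_{L}\) and \(A_{\bar{L}}\). Then, it is the case that:

$$\begin{aligned} U\left( A_{L}\vert A_{L} \right) \ge U\left( A_{\bar{L}}\vert A_{L} \right) . \end{aligned}$$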

That is, when you face a choice between delaying a selection from the terminal acts in \({\mathcal {B}}\) to learn cost-free evidence symptomatically and selecting from these terminal acts without first learning, the former option cannot carry lower conditional causal expected value given the performance of your symptomatic learning. In other words, performing the symptomatic act of learning cost-free evidence is always causally ratifiable.

As is easy to see, since \(U\left( A_{L}\vert A_{L} \right) \ge U\left( A_{\bar{L}}\vert A_{L} \right)\), it follows that the improvement news given by the act of learning cost-free evidence cannot be negative, \(I_{A_{L}}\left( A_{L}, A_{\bar{L}} \right) \ge 0\). That is, once you have chosen \(A_{L}\), you would expect that it cannot be worse at causally promoting desirable outcomes than \(A_{\bar{L}}\). Given that, by assumption (6), the act of learning cost-free evidence does not causally alter your desirabilities at stage 2, and given that you are a sophisticated chooser, this means that once you have chosen \(A_{L}\), you would expect that your informed terminal decision at stage 2 cannot do worse at causally promoting desirable outcomes than your uninformed decision at that stage.

So, in FINKELSTEIN, once you have chosen the act of looking in the white box, you would expect that an act from \({\mathcal {B}}\) that maximizes causal expected value relative to your posterior credence function cannot be worse at causally promoting desirable outcomes than an act from \({\mathcal {B}}\) that maximizes causal expected value relative to your prior credence function.

Now, since the act of learning cost-free evidence symptomatically is always causally ratifiable, it is always rationally permissible, according to principled ratificationism. Hence, even if not learning cost-free evidence is also causally ratifiable, it is never the case that not learning cost-free evidence is obligatory when learning this evidence symptomatically is also an available act.

Given Eqs. (11), (13), and Proposition, we can also see that principled ratificationism satisfies Value of Evidence. That is, given assumptions (1)–(7), it is always the case that your prior expectation of an act B that maximizes causal expected value relative to your posterior credence function \(c_{{\mathcal {E}}}\) is at least as high as the maximal causal expected value of B calculated relative to your prior credence function c. So, by the lights of principled ratificationism, your prior expectation of an informed decision cannot be lower than the causal expected value of an uninformed decision.

There is, however, an important difference between Proposition and Value of Evidence established in Savage’s decision theory. In Savage’s framework, your prior expectation of an informed decision is calculated relative to a prior unconditional credence function \(c\left( \cdot \right)\). But within principled ratificationism, your prior expectation of an informed decision is calculated relative to your prior conditional credence function given the proposition \(A_{L}\), \(c\left( \cdot \vert A_{L} \right)\). This difference stems from the fact that the ratificationist always evaluates your expectation of the informed decision on the supposition that the symptomatic act of learning cost-free evidence, \(A_{L}\), is chosen.
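
The contrast can be displayed schematically. The following is only a sketch in this paper's notation, not a formula taken from Savage: the first line assumes that Savage-style Value of Evidence can be transcribed using the propositions \(R_{c_{{\mathcal {E}}}}\) and \(K\), while the second restates the Proposition with the expectation taken relative to \(c\left( \cdot \vert A_{L} \right)\).

```latex
% Savage-style Value of Evidence (schematic transcription, an assumption):
% the expectation over posteriors uses the unconditional prior c(.).
\[
\sum_{c_{\mathcal{E}} \in \mathcal{C}_{\mathcal{E}}} c\left(R_{c_{\mathcal{E}}}\right)
  \max_{B \in \mathcal{B}} \sum_{K \in \mathcal{K}} c_{\mathcal{E}}(K)\, V(B \wedge K)
\;\ge\;
\max_{B \in \mathcal{B}} \sum_{K \in \mathcal{K}} c(K)\, V(B \wedge K)
\]

% Ratificationist counterpart: every prior credence is conditional on A_L,
% the supposition that the symptomatic act of learning is chosen.
\[
\sum_{c_{\mathcal{E}} \in \mathcal{C}_{\mathcal{E}}} c\left(R_{c_{\mathcal{E}}} \mid A_{L}\right)
  \max_{B \in \mathcal{B}} \sum_{K \in \mathcal{K}} c_{\mathcal{E}}(K)\, V(B \wedge K)
\;\ge\;
\sum_{K \in \mathcal{K}} c\left(K \mid A_{L}\right) V(B_{c} \wedge K)
\]
```

The only structural change between the two lines is the replacement of \(c\left( \cdot \right)\) by \(c\left( \cdot \vert A_{L} \right)\) on both sides.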

4 Not Learning Cost-Free Evidence may be Causally Nonratifiable

In this section, I will show that in a choice between learning cost-free evidence symptomatically and not learning evidence at all, the latter may be causally nonratifiable. Hence, when you face this choice, principled ratificationism may sanction the act of learning cost-free evidence symptomatically as uniquely rationally permissible.

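To show this, I will compare the conditional causal expected values \(U\left( A_{\bar{L}}\vert A_{\bar{L}} \right)\) and \(U\left( A_{L}\vert A_{\bar{L}} \right)\). First, by using Eq. (10) and letting \(i = \bar{L}\) and \(j = \bar{L}\), we can determine \(U\left( A_{\bar{L}}\vert A_{\bar{L}} \right)\) as follows:

$$\begin{aligned} U\left( A_{\bar{L}}\vert A_{\bar{L}} \right) = \sum _{c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}} c\left( R_{c_{{\mathcal {E}}}}\vert A_{\bar{L}} \right) \sum _{K \in {\mathcal {K}}} c_{{\mathcal {E}}}\left( K \right) \cdot V\left( B_{c_{{\mathcal {E}}}} \wedge K \right) . \end{aligned} \tag{14}$$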

And since by performing act \(A_{\bar{L}}\) you will not change your prior credence function, we have that \(R_{c_{{\mathcal {E}}}} = R_{c}\), for every \(c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}\). Also, as before, we can reasonably assume that \(c\left( R_{c}\vert A_{\bar{L}}\right) = c\left( R_{c}\right) = 1\). Hence, the above equation reduces to:

$$\begin{aligned} U\left( A_{\bar{L}}\vert A_{\bar{L}} \right) = \sum _{K \in {\mathcal {K}}} c\left( K \right) \cdot V\left( B_{c} \wedge K \right) . \end{aligned} \tag{15}$$

Thus, the U-value of the act of not learning cost-free evidence on the supposition that it is chosen is a prior causal expected value of act \(B_{c}\). This causal expected value is prior, for it is calculated relative to the prior credence function c.

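Second, by letting \(i = L\) and \(j = \bar{L}\), from Eq. (10) we have that:

$$\begin{aligned} U\left( A_{L}\vert A_{\bar{L}} \right) = \sum _{c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}} c\left( R_{c_{{\mathcal {E}}}}\vert A_{L} \right) \sum _{K \in {\mathcal {K}}} \frac{c\left( K \right) }{c\left( K\vert A_{L} \right) } \cdot c_{{\mathcal {E}}}\left( K \right) \cdot V\left( B_{c_{{\mathcal {E}}}} \wedge K \right) . \end{aligned} \tag{16}$$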

That is, the U-value of the act of learning cost-free evidence symptomatically on the supposition that the act of not learning this evidence is chosen is your prior expectation of the causal expected value of act \(B_{c_{{\mathcal {E}}}}\) relative to your posterior credences \(c_{{\mathcal {E}}}(K)\) multiplied by the ratio \(\frac{c\left( K \right) }{c\left( K\vert A_{L} \right) }\). And this prior expectation is calculated relative to your prior credence distribution for the anticipated posterior credence functions described by the \(R_{c_{{\mathcal {E}}}}\)s, conditional on \(A_{L}\).

To show that \(U\left( A_{\bar{L}}\vert A_{\bar{L}} \right)\) may be lower than \(U\left( A_{L}\vert A_{\bar{L}} \right)\), I will make the Finkelstein case more precise (see Fig. 2). In FINKELSTEIN, when you decide to learn cost-free evidence at stage 1, your looking in the white box can result in either learning the proposition that the quarter is heads up (H), or learning the proposition that the quarter is not heads up (\(\bar{H}\)). Hence, it seems natural to assume that you will change your prior credences by Bayesian conditionalization (BC). More precisely, if the evidence gleaned from your learning experience \({\mathcal {E}}\) is some proposition \(E \in {\mathcal {F}}\) (i.e. \({\mathcal {E}} = E\)), your posterior credence function should be given as follows:

BC For any \(X \in {\mathcal {F}}\):

$$\begin{aligned} c_{E}\left( X \right) = c\left( X\vert E\right) . \end{aligned}$$

So, by BC, your anticipated posterior credences, \(c_{H}\left( X \right)\) and \(c_{\bar{H}}\left( X \right)\), are given by your anticipated prior conditional credences \(c\left( X\vert H \right)\) and \(c\left( X\vert \bar{H} \right)\) respectively. And since, by assumption (1), you satisfy Reflection, we have that for any \(K \in {\mathcal {K}}\):

- \(c\left( K\vert R_{c_{H}} \right) = c_{H}\left( K \right) = c\left( K\vert H \right)\).
- \(c\left( K\vert R_{c_{\bar{H}}} \right) = c_{\bar{H}}\left( K \right) = c\left( K\vert \bar{H} \right)\).

More concretely, let us stipulate in FINKELSTEIN that \(c_{H}\left( K_{H} \right) = c\left( K_{H}\vert H \right) = 0.8\) and \(c_{\bar{H}}\left( K_{\bar{H}} \right) = c\left( K_{\bar{H}}\vert \bar{H} \right) = 0.6\). Then, as a sophisticated chooser, you will first calculate, for each act in \({\mathcal {B}}\), the posterior causal expected value, \(U_{c_{{\mathcal {E}}}}\left( B \right)\), as follows:

- \(U_{c_{H}}\left( B \right) = c\left( K_{H}\vert H \right) \cdot V\left( K_{H} \wedge B \right) + c\left( K_{\bar{H}}\vert H \right) \cdot V\left( K_{\bar{H}} \wedge B \right) = 0.8\cdot 1 + 0.2\cdot (-2) = 0.4.\)
- \(U_{c_{\bar{H}}}\left( B \right) = c\left( K_{H}\vert \bar{H} \right) \cdot V\left( K_{H} \wedge B \right) + c\left( K_{\bar{H}}\vert \bar{H} \right) \cdot V\left( K_{\bar{H}} \wedge B \right) = 0.4\cdot 1 + 0.6\cdot (-2) = -0.8.\)
- \(U_{c_{H}}\left( \bar{B} \right) = c\left( K_{H}\vert H \right) \cdot V\left( K_{H} \wedge \bar{B} \right) + c\left( K_{\bar{H}}\vert H \right) \cdot V\left( K_{\bar{H}} \wedge \bar{B} \right) = 0.8\cdot 0 + 0.2\cdot 0 = 0.\)
- \(U_{c_{\bar{H}}}\left( \bar{B} \right) = c\left( K_{H}\vert \bar{H} \right) \cdot V\left( K_{H} \wedge \bar{B} \right) + c\left( K_{\bar{H}}\vert \bar{H} \right) \cdot V\left( K_{\bar{H}} \wedge \bar{B} \right) = 0.4\cdot 0 + 0.6\cdot 0 = 0.\)

Thus, as a \(U_{c_{{\mathcal {E}}}}\)-value maximizer at stage 2, you would choose act B if your anticipated posterior credence function were \(c_{H}\), because \(U_{c_{H}}\left( B \right) > U_{c_{H}}\left( \bar{B} \right)\). But since \(U_{c_{\bar{H}}}\left( \bar{B} \right) > U_{c_{\bar{H}}}\left( B \right)\), you would choose act \(\bar{B}\) if your anticipated posterior credence function were \(c_{\bar{H}}\). So \(B_{c_{H}} = B\) and \(B_{c_{\bar{H}}} = \bar{B}\).
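
The stage-2 reasoning can be checked mechanically. The following is a minimal sketch that uses only the credences and desirabilities stipulated above; the identifiers (`posteriors`, `causal_expected_value`, `stage2_choice`, and the labels `notB`, `K_notH`, `c_notH`) are illustrative names, not notation from the text.

```python
# Posterior credences over K_H and K_notH after learning H (c_H) or not-H (c_notH),
# as stipulated in the text.
posteriors = {
    "c_H": {"K_H": 0.8, "K_notH": 0.2},
    "c_notH": {"K_H": 0.4, "K_notH": 0.6},
}

# Desirabilities V(act ∧ K) used in the calculations above.
V = {
    ("B", "K_H"): 1.0,
    ("B", "K_notH"): -2.0,
    ("notB", "K_H"): 0.0,
    ("notB", "K_notH"): 0.0,
}

ACTS = ("B", "notB")


def causal_expected_value(act, credence):
    """U_c(act): sum over K of c(K) * V(act ∧ K)."""
    return sum(credence[k] * V[(act, k)] for k in credence)


def stage2_choice(credence):
    """Return the act in ACTS with the highest causal expected value."""
    return max(ACTS, key=lambda act: causal_expected_value(act, credence))


# The act a sophisticated chooser anticipates selecting under each posterior.
choices = {name: stage2_choice(c) for name, c in posteriors.items()}

for name, credence in posteriors.items():
    values = {act: round(causal_expected_value(act, credence), 10) for act in ACTS}
    print(name, values, "->", choices[name])
```

Running the sketch selects B under \(c_{H}\) and \(\bar{B}\) under \(c_{\bar{H}}\), in line with the four values listed above.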

Now, given what you will do at stage 2, you can calculate at stage 1 the values for \(U\left( A_{L}\vert A_{\bar{L}} \right)\) and \(U\left( A_{\bar{L}}\vert A_{\bar{L}} \right)\). To this end, let us also stipulate that:

- \(c\left( R_{c_{H}} \right) = c\left( A_{L} \wedge H \right) = 0.25\)
- \(c\left( R_{c_{\bar{H}}} \right) = c\left( A_{L} \wedge \bar{H} \right) = 0.25\),

which is consistent with our assumption that \(c\left( A_{\bar{L}} \right) =c\left( A_{L} \right) = 0.5\). So here we assume that your prior credence for the proposition that your posterior credence function over \({\mathcal {F}}\) is \(c_{H}\) (or \(c_{\bar{H}}\)) is your prior credence for the conjunction that you will choose the act of learning cost-free evidence, \(A_{L}\), and that you will learn H (or \(\bar{H}\)).

Then, since \(B_{c_{H}} = B\) and \(B_{c_{\bar{H}}} = \bar{B}\), we can use Eq. (16) as follows:

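But the act of not learning cost-free evidence, \(A_{\bar{L}}\), has lower conditional causal expected value given \(A_{\bar{L}}\) itself. This is so because \(B_{c} = \bar{B}\), and hence, by using Eq. (15), we getFootnote 15

$$\begin{aligned} U\left( A_{\bar{L}}\vert A_{\bar{L}} \right) = \sum _{K \in {\mathcal {K}}} c\left( K \right) \cdot V\left( \bar{B} \wedge K \right) = 0.5\cdot 0 + 0.5\cdot 0 = 0. \end{aligned} \tag{18}$$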

Hence, since \(U\left( A_{\bar{L}}\vert A_{\bar{L}} \right) < U\left( A_{L}\vert A_{\bar{L}} \right)\), the act of not learning cost-free evidence is causally nonratifiable, and so it would be sanctioned as rationally impermissible by principled ratificationism.
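
The comparison behind this verdict can be sketched numerically. The code below follows the verbal description of \(U\left( A_{L}\vert A_{\bar{L}} \right)\) given above (a prior expectation, conditional on \(A_{L}\), of posterior causal expected values weighted by the ratio \(c\left( K \right) / c\left( K\vert A_{L} \right)\)). One input is an assumption rather than a value restated in this section: \(c\left( K\vert A_{L} \right)\) is derived here from the stipulated posteriors by the law of total probability, which yields \(c\left( K_{H}\vert A_{L} \right) = 0.6\). All identifiers are illustrative.

```python
# Stipulated posterior credences c_E(K) and desirabilities V(act ∧ K).
posteriors = {
    "c_H": {"K_H": 0.8, "K_notH": 0.2},
    "c_notH": {"K_H": 0.4, "K_notH": 0.6},
}
V = {
    ("B", "K_H"): 1.0, ("B", "K_notH"): -2.0,
    ("notB", "K_H"): 0.0, ("notB", "K_notH"): 0.0,
}
ACTS = ("B", "notB")

prior_K = {"K_H": 0.5, "K_notH": 0.5}      # c(K), as in the footnote on B_c
joint_R = {"c_H": 0.25, "c_notH": 0.25}    # c(R_cE) = c(A_L ∧ H), c(A_L ∧ not-H)
c_A_L = 0.5                                # c(A_L)


def eu(act, credence):
    """Causal expected value of act relative to a credence over K."""
    return sum(credence[k] * V[(act, k)] for k in credence)


def best_act(credence):
    """Act that maximizes causal expected value relative to the credence."""
    return max(ACTS, key=lambda act: eu(act, credence))


# c(R_cE | A_L): credence in each anticipated posterior, given that you learn.
p_R_given_L = {r: p / c_A_L for r, p in joint_R.items()}

# ASSUMPTION: c(K | A_L) obtained by total probability over the posteriors.
c_K_given_L = {
    k: sum(p_R_given_L[r] * posteriors[r][k] for r in posteriors) for k in prior_K
}

# Ratio c(K) / c(K | A_L) that reweights the posterior credences.
ratio = {k: prior_K[k] / c_K_given_L[k] for k in prior_K}

# U(A_L | A_notL): expectation over posteriors of the reweighted expected value
# of the act chosen at stage 2 under each posterior.
u_learn_given_not_learn = sum(
    p_R_given_L[r]
    * sum(ratio[k] * posteriors[r][k] * V[(best_act(posteriors[r]), k)] for k in prior_K)
    for r in posteriors
)

# U(A_notL | A_notL): prior causal expected value of the act B_c.
u_not_learn_given_not_learn = eu(best_act(prior_K), prior_K)

not_learning_is_ratifiable = u_not_learn_given_not_learn >= u_learn_given_not_learn
print(u_learn_given_not_learn, u_not_learn_given_not_learn, not_learning_is_ratifiable)
```

Under these inputs the value for learning is positive while the value for not learning is 0, so not learning fails the ratifiability test. A different value of \(c\left( K\vert A_{L} \right)\) would change the magnitude, and possibly the verdict.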

5 Symptomatic Learning: CDT Versus Principled Ratificationism

Why is it that CDT may sanction symptomatic acts of learning cost-free evidence as rationally impermissible, while principled ratificationism never does so? Here, I will give one possible explanation.

As I said in Sect. 2, CDT ignores any evidence that the performance of an act itself provides. So, in sequential decision problems involving the act of learning cost-free evidence, it ignores any information that this act could itself provide. Thus, if you follow CDT’s advice, you know at stage 1 that you ought to choose at stage 2 an act B that maximizes causal expected value relative to your posterior credence function \(c_{{\mathcal {E}}}\), which takes into account only the outcome of your learning experience \({\mathcal {E}}\). This seems right when your act of learning itself has no evidential bearing on states. But if it does have such evidential relevance, as in FINKELSTEIN, then it influences your credences for states in addition to the outcome of your learning experience, and hence it bears on your expected values of the acts in \({\mathcal {B}}\) at stage 2. Consequently, when your learning is symptomatic, you would not maximize causal expected value at stage 2, as CDT advises you to do; you would in fact maximize a different kind of expected value. And, as I will show by means of an example, your prior expectation of this kind of expected value may be lower than the maximal causal expected value of your act calculated relative to your prior credences, and hence CDT may recommend against the symptomatic act of learning cost-free evidence.

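More precisely, observe that, under the assumption that the act of not learning evidence, \(A_{\bar{L}}\), is evidentially irrelevant to the propositions \(K \in {\mathcal {K}}\), we have \(U(A_{L}\vert A_{\bar{L}}) = U\left( A_{L} \right)\).Footnote 16 Hence, since Eq. (16) holds under this assumption, we can use it to determine the U-value of \(A_{L}\):

$$\begin{aligned} U\left( A_{L} \right) = \sum _{c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}} c\left( R_{c_{{\mathcal {E}}}}\vert A_{L} \right) \sum _{K \in {\mathcal {K}}} \frac{c\left( K \right) }{c\left( K\vert A_{L} \right) } \cdot c_{{\mathcal {E}}}\left( K \right) \cdot V\left( B_{c_{{\mathcal {E}}}} \wedge K \right) . \end{aligned} \tag{19}$$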

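That is, given assumptions (1)–(7) and given that you are a sophisticated chooser, the U-value of \(A_{L}\) is your prior expectation of the expected V-value of \(B_{c_{{\mathcal {E}}}}\) calculated relative to \(\Delta _{K} \cdot c_{{\mathcal {E}}}\left( K \right)\), where \(\Delta _{K} = \frac{c\left( K \right) }{c\left( K\vert A_{L} \right) }\) is the ratio of old-to-new credences for K. So when \(A_{L}\) is symptomatic, we have \(\Delta _{K} \ne 1\), and hence:

$$\begin{aligned} \sum _{K \in {\mathcal {K}}} \Delta _{K} \cdot c_{{\mathcal {E}}}\left( K \right) \cdot V\left( B_{c_{{\mathcal {E}}}} \wedge K \right) \ne \sum _{K \in {\mathcal {K}}} c_{{\mathcal {E}}}\left( K \right) \cdot V\left( B_{c_{{\mathcal {E}}}} \wedge K \right) . \end{aligned} \tag{20}$$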

That is, your expected value of \(B_{c_{{\mathcal {E}}}}\) at stage 2 is not equal to the causal expected value of this act relative to your posterior credence function \(c_{{\mathcal {E}}}\). Hence, given that \(B_{c_{{\mathcal {E}}}}\) is an act that maximizes causal expected value at stage 2 relative to \(c_{{\mathcal {E}}}\), if your learning is symptomatic, you cannot maximize causal expected value at stage 2, for:

To show that this may lead CDT to sanction your symptomatic act of learning as rationally impermissible, let us change the Finkelstein case slightly. That is, let us assume that you believe Finkelstein’s prediction to be \(90\%\) reliable. This means that your conditional credence for \(K_{H}\) given \(A_{L}\) is 0.9, and hence your conditional credence for \(K_{\bar{H}}\) given \(A_{L}\) is 0.1. Then, by using Eq. (19), the values for \(c_{H}\left( K_{H} \right) , c_{\bar{H}}\left( K_{\bar{H}} \right) , c\left( R_{c_{H}} \right)\), \(c\left( R_{c_{\bar{H}}} \right)\) from Sect. 4 and the fact that \(B_{c_{H}} = B\) and \(B_{c_{\bar{H}}} = \bar{B}\), we can calculate \(U\left( A_{L} \right)\) as follows:

Now, when the act of not learning evidence, \(A_{\bar{L}}\), is evidentially irrelevant to the propositions \(K \in {\mathcal {K}}\), we have that \(U(A_{\bar{L}}\vert A_{\bar{L}}) = U\left( A_{\bar{L}} \right)\). Since Eq. (15) holds under this assumption, we can use it to calculate \(A_{\bar{L}}\)’s causal expected value. And as shown in Sect. 4, since \(B_{c} = \bar{B}\), it follows that:

$$\begin{aligned} U\left( A_{\bar{L}} \right) = \sum _{K \in {\mathcal {K}}} c\left( K \right) \cdot V\left( \bar{B} \wedge K \right) = 0.5\cdot 0 + 0.5\cdot 0 = 0. \end{aligned} \tag{23}$$

Thus, \(U\left( A_{L} \right) < U\left( A_{\bar{L}} \right)\), and so CDT recommends against \(A_{L}\).
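
The modified case can be reproduced with a short calculation. This is a sketch under stated assumptions: it reads \(U\left( A_{L} \right)\) as the prior expectation, conditional on \(A_{L}\), of \(\sum _{K} \Delta _{K} \cdot c_{{\mathcal {E}}}\left( K \right) \cdot V\left( B_{c_{{\mathcal {E}}}} \wedge K \right)\), takes \(c\left( R_{c_{{\mathcal {E}}}}\vert A_{L} \right) = 0.25 / 0.5\) from the stipulations of Sect. 4, and uses \(c\left( K_{H} \right) = 0.5\) from the footnote on \(B_{c}\). The identifiers are illustrative, and the printed figure is not claimed to match the paper's own displayed value.

```python
# Stipulated posteriors, desirabilities, and prior credences over K.
posteriors = {
    "c_H": {"K_H": 0.8, "K_notH": 0.2},
    "c_notH": {"K_H": 0.4, "K_notH": 0.6},
}
V = {
    ("B", "K_H"): 1.0, ("B", "K_notH"): -2.0,
    ("notB", "K_H"): 0.0, ("notB", "K_notH"): 0.0,
}
ACTS = ("B", "notB")
prior_K = {"K_H": 0.5, "K_notH": 0.5}

# ASSUMPTION: c(R_cE | A_L) = c(A_L ∧ ·) / c(A_L) = 0.25 / 0.5.
p_R_given_L = {"c_H": 0.25 / 0.5, "c_notH": 0.25 / 0.5}

# Modified case: the prediction is taken to be 90% reliable.
c_K_given_L = {"K_H": 0.9, "K_notH": 0.1}


def eu(act, credence):
    """Causal expected value of act relative to a credence over K."""
    return sum(credence[k] * V[(act, k)] for k in credence)


def best_act(credence):
    """Act that maximizes causal expected value relative to the credence."""
    return max(ACTS, key=lambda act: eu(act, credence))


# Delta_K: ratio of old-to-new credences for K.
delta = {k: prior_K[k] / c_K_given_L[k] for k in prior_K}

# U(A_L): expectation over posteriors of the Delta-weighted expected value
# of the act that maximizes causal expected value under each posterior.
u_learn = sum(
    p_R_given_L[r]
    * sum(delta[k] * posteriors[r][k] * V[(best_act(posteriors[r]), k)] for k in prior_K)
    for r in posteriors
)

# U(A_notL): prior causal expected value of B_c.
u_not_learn = eu(best_act(prior_K), prior_K)

cdt_recommends_learning = u_learn >= u_not_learn
print(delta, u_learn, u_not_learn, cdt_recommends_learning)
```

With these inputs \(\Delta _{K_{\bar{H}}} = 5\), which heavily amplifies the negative payoff of B under \(c_{H}\) and drives \(U\left( A_{L} \right)\) below 0.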

Contrary to CDT, principled ratificationism does not ignore information that the performance of your symptomatic act of learning can provide. By our assumption (7), you also know at stage 1 that you ought to choose at stage 2 an act B that maximizes causal expected value relative to \(c_{{\mathcal {E}}}\). But since you are guided by principled ratificationism, you know this on the supposition that the symptomatic act of learning is chosen. So already at stage 1, you take into account information about how your symptomatic act of learning would influence your credences for states—i.e. information encoded in \(c\left( K\vert A_{L} \right)\). More precisely:

Now, given assumptions (1)–(7) of our sophisticated-choice approach, it turns out that once you follow this advice, you would in fact maximize causal expected value at stage 2, even if your act of learning is symptomatic. For it follows from Eq. (11) in Sect. 3 that B’s expected value at stage 2 is given by:

and hence the ratio \(\Delta _{K}\) does not in fact influence B’s posterior causal expected value at stage 2. And, as shown in the proof of Proposition, a prior expectation of B’s posterior causal expected value is at least as high as B’s prior causal expected value.

6 Conclusion

The idea of causal ratificationism in decision theory emerged originally as a possible remedy for the problem of CDT’s instability. In this paper, I have shown that there is another context—i.e. sequential decision problems—that motivates the use of causal ratificationism. In this context, it is well known that CDT may recommend against symptomatic acts of learning cost-free evidence, and hence it may violate Value of Evidence. I have shown that, under plausible assumptions, a minimal version of causal ratificationism known as principled ratificationism never sanctions symptomatic acts of learning cost-free evidence as rationally impermissible. This result in turn allows us to extend Value of Evidence to cases in which the act of learning evidence and states of the world are probabilistically dependent, in the sense of being evidentially dependent.

Of course, principled ratificationism is not the only way to equip CDT with the causal ratifiability constraint. Other versions of causal ratificationism proposed in the literature include, most notably, lexical ratificationism (see, e.g. Egan, 2007), iterated general ratificationism (Gustafsson, 2011), and graded ratificationism (Podgorski, 2022; Gallow, 2020; Barnett, 2022). These theories are believed to be important improvements over principled ratificationism either because they do not lead to rational dilemmas in cases involving instability, or because they allow us to deflate arguments attempting to show that ratifiable acts are not, merely by virtue of being ratifiable, more choiceworthy than nonratifiable acts. Whether symptomatic acts of learning cost-free evidence are always rationally permissible by the lights of these theories is work for the future.

Notes

Throughout, ‘terminal act’ means an act that can be chosen by an agent at the final stage of a sequential decision problem.

This term is borrowed from Spencer and Wells (2019).

Note that when CDT compares the U-values of \(A_{i}\) and \(A_{j}\), it ignores the improvement news given by these acts. Instead, CDT focuses only on what might be called the expected improvement (see Gallow, 2020). In a choice between \(A_{i}\) and \(A_{j}\), the expected improvement of \(A_{i}\) can be defined as \(I\left( A_{i}, A_{j} \right) {\mathop {=}\limits ^{\textrm{def}}} \sum _{K \in {\mathcal {K}}} c\left( K \right) \cdot \left[ V\left( A_{i} \wedge K \right) - V\left( A_{j} \wedge K \right) \right] = U\left( A_{i} \right) - U\left( A_{j} \right)\), which tells you how much more than \(A_{j}\) you expect \(A_{i}\) to causally promote desirable outcomes. Given that \(A_{j}\)’s expected improvement is \(I\left( A_{j}, A_{i} \right) = -I\left( A_{i}, A_{j} \right)\), it is easy to observe that \(I\left( A_{i}, A_{j} \right) > -I\left( A_{i}, A_{j} \right)\) iff \(U\left( A_{i} \right) - U\left( A_{j} \right) > -\left[ U\left( A_{i} \right) - U\left( A_{j} \right) \right]\) iff \(U\left( A_{i} \right) > U\left( A_{j} \right)\).

Of course, since V is measured on an interval scale, any other pair of numbers for \(V\left( B \wedge K_{H} \right)\) and \(V\left( B \wedge K_{\bar{H}} \right)\) where the first is higher than the second would be an equivalent representation of these desirabilities.

For an excellent survey of these counterexamples, see Briggs (2009).

For a similar point, see Arntzenius (2008).

A similar sophisticated-choice analysis in the context of CDT has been used in Maher (1990).

Proof: By probability theory and Reflection, we have that \(c\left( K \vert A_{i} \wedge R_{c_{{\mathcal {E}}}} \right) = \frac{c\left( K \wedge A_{i} \wedge R_{c_{{\mathcal {E}}}}\right) }{c\left( A_{i} \wedge R_{c_{{\mathcal {E}}}}\right) } = \frac{c\left( K \wedge A_{i} \vert R_{c_{{\mathcal {E}}}}\right) \cdot c\left( R_{c_{{\mathcal {E}}}} \right) }{c\left( A_{i} \vert R_{c_{{\mathcal {E}}}}\right) \cdot c\left( R_{c_{{\mathcal {E}}}} \right) } = \frac{c_{{\mathcal {E}}}\left( K \wedge A_{i} \right) }{c_{{\mathcal {E}}}\left( A_{i} \right) } = c_{{\mathcal {E}}}\left( K \vert A_{i} \right)\). And, by assumption (2), we have that \(c_{{\mathcal {E}}}\left( A_{i} \right) = 1\), and so \(c_{{\mathcal {E}}}\left( K\vert A_{i} \right) = c_{{\mathcal {E}}}\left( K \right)\), as required.

When you decide not to learn, you choose an act \(B_{c}\), which is an act that maximizes prior causal expected value. Then, since \(U\left( B\right) = c\left( K_{H}\right) \cdot V\left( B \wedge K_{H} \right) + c\left( K_{\bar{H}}\right) \cdot V\left( B \wedge K_{\bar{H}} \right) = 0.5\cdot 1 + 0.5 \cdot -2 = -0.5 < U\left( \bar{B}\right) = c\left( K_{H}\right) \cdot V\left( \bar{B} \wedge K_{H} \right) + c\left( K_{\bar{H}}\right) \cdot V\left( \bar{B} \wedge K_{\bar{H}} \right) = 0.5\cdot 0 + 0.5 \cdot 0 = 0\), it is the case that \(B_{c} = \bar{B}\).

That is, since \(c\left( K\vert A_{\bar{L}} \right) = c\left( K \right)\) for all \(K \in {\mathcal {K}}\), we have \(U\left( A_{L}\vert A_{\bar{L}} \right) = \sum _{K \in {\mathcal {K}}} c\left( K\vert A_{\bar{L}} \right) \cdot V\left( A_{L} \wedge K \right) = \sum _{K \in {\mathcal {K}}} c\left( K \right) \cdot V\left( A_{L} \wedge K \right) = U\left( A_{L} \right)\).

References

Adams, E. W., & Rosenkrantz, R. D. (1980). Applying the Jeffrey decision model to rational betting and information acquisition. Theory and Decision, 12(1), 1–20.

Ahmed, A. (2014). Dicing with death. Analysis, 74(4), 587–592.

Arntzenius, F. (2008). No regrets, or: Edith Piaf revamps decision theory. Erkenntnis, 68(2), 277–297.

Barnett, D. J. (2022). Graded ratifiability. Journal of Philosophy, 119(2), 57–88.

Briggs, R. (2009). Distorted reflection. Philosophical Review, 118(1), 59–85.

Eells, E. (1982). Rational decision and causality. Cambridge: Cambridge University Press.

Egan, A. (2007). Some counterexamples to causal decision theory. Philosophical Review, 116(1), 93–114.

Gallow, J. D. (2020). The causal decision theorist’s guide to managing the news. Journal of Philosophy, 117(3), 117–149.

Gibbard, A., & Harper, W. L. (1978). Counterfactuals and two kinds of expected utility. In C. A. Hooker, J. J. Leach, & E. F. McClennen (Eds.), Foundations and applications of decision theory (pp. 125–162). Dordrecht: D. Reidel.

Good, I. J. (1967). On the principle of total evidence. British Journal for the Philosophy of Science, 17(4), 319–321.

Gustafsson, J. E. (2011). A note in defence of ratificationism. Erkenntnis, 75(1), 147–150.

Hitchcock, C. (1996). Causal decision theory and decision-theoretic causation. Noûs, 30(4), 508–526.

Huttegger, S. M. (2013). In defence of reflection. Philosophy of Science, 80(3), 413–433.

Huttegger, S. M. (2014). Learning experiences and the value of knowledge. Philosophical Studies, 171(2), 279–288.

Huttegger, S. M., & Nielsen, M. (2020). Generalized learning and conditional expectation. Philosophy of Science, 87(5), 868–883.

Jeffrey, R. C. (1965). The logic of decision. Chicago, IL: University of Chicago Press.

Joyce, J. M. (1999). The foundations of causal decision theory. Cambridge: Cambridge University Press.

Joyce, J. M. (2012). Regret and instability in causal decision theory. Synthese, 187(1), 123–145.

Levi, I. (1991). Consequentialism and sequential choice. In M. Bacharach & S. Hurley (Eds.), Foundations of decision theory (pp. 92–146). Oxford: Basil Blackwell.

Lewis, D. (1981). Causal decision theory. Australasian Journal of Philosophy, 59(1), 5–30.

Maher, P. (1990). Symptomatic acts and the value of evidence in causal decision theory. Philosophy of Science, 57(3), 479–498.

Maher, P. (1992). Diachronic rationality. Philosophy of Science, 59(1), 120–141.

Nozick, R. (1969). Newcomb’s problem and two principles of choice. In N. Rescher (Ed.), Essays in honor of Carl G. Hempel (pp. 114–146). Dordrecht: Reidel.

Podgorski, A. (2022). Tournament decision theory. Noûs, 56(1), 176–203.

Raiffa, H., & Schlaifer, R. (1961). Applied statistical decision theory. Cambridge, MA: Harvard University Press.

Ramsey, F. P. (1990). Weight or the value of knowledge. British Journal for the Philosophy of Science, 41, 1–4.

Savage, L. J. (1954). The foundations of statistics. New York: Dover Publications.

Seidenfeld, T. (1988). Decision theory without independence or without ordering. Economics and Philosophy, 4(2), 267.

Skyrms, B. (1980). Causal necessity: A pragmatic investigation of the necessity of laws. New Haven: Yale University Press.

Skyrms, B. (1990a). The dynamics of rational deliberation. Cambridge: Harvard University Press.

Skyrms, B. (1990b). The value of knowledge. Minnesota Studies in the Philosophy of Science, 14, 245–266.

Sobel, J. H. (1990). Maximization, stability of decision, and actions in accordance with reason. Philosophy of Science, 57(1), 60–77.

Spencer, J., & Wells, I. (2019). Why take both boxes? Philosophy and Phenomenological Research, 99(1), 27–48.

van Fraassen, B. C. (1995). Belief and the problem of Ulysses and the Sirens. Philosophical Studies, 77(1), 7–37.

Weirich, P. (1985). Decision instability. Australasian Journal of Philosophy, 63(4), 465–472.

Wells, I. (2020). Evidence and rationalization. Philosophical Studies, 177(3), 845–864.

Funding

Funding for this research was provided by the National Science Centre, Poland (Grant No. 2017/26/D/HS1/00068).

Ethics declarations

Conflict of interest

The author has no competing interests to declare that are relevant to the content of this article.

Appendix

Proof of Proposition

Below, I will show that the difference \(U\left( A_{L}\vert A_{L} \right) - U\left( A_{\bar{L}}\vert A_{L} \right)\) cannot have a negative value, and so \(U\left( A_{L}\vert A_{L} \right) \ge U\left( A_{\bar{L}}\vert A_{L} \right)\).

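First, recall that at stage 2 you will choose the act B that maximizes causal expected value U. Now, when you decide to gather evidence, you will maximize this value relative to your posterior credence function \(c_{{\mathcal {E}}}\). Hence, we can write \(U\left( A_{L}\vert A_{L} \right)\) as:

$$\begin{aligned} U\left( A_{L}\vert A_{L} \right) = \sum _{c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}} c\left( R_{c_{{\mathcal {E}}}}\vert A_{L} \right) \max _{B \in {\mathcal {B}}}\sum _{K \in {\mathcal {K}}} c_{{\mathcal {E}}}\left( K \right) \cdot V\left( B \wedge K\right) . \end{aligned} \tag{26}$$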

Second, since \(\left\{ A_{L} \wedge R_{c_{{\mathcal {E}}}}: c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}\right\}\) is a partition of \({\mathcal {W}}\) such that act \(A_{L} \in {\mathcal {A}}\) is a disjunction of the propositions \(A_{L} \wedge R_{c_{{\mathcal {E}}}}\), \(A_{L} \equiv \bigvee _{c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}}\left( A_{L} \wedge R_{c_{{\mathcal {E}}}} \right)\), we get from Eq. (13):

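Then, by probability theory, assumptions (1) and (2), Eq. (27) becomes:

$$\begin{aligned} U\left( A_{\bar{L}}\vert A_{L} \right) = \sum _{c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}} c\left( R_{c_{{\mathcal {E}}}}\vert A_{L} \right) \sum _{K \in {\mathcal {K}}} c_{{\mathcal {E}}}\left( K \right) \cdot V\left( B_{c} \wedge K\right) . \end{aligned} \tag{28}$$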

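Now, for any \(c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}\):

$$\begin{aligned} 0 \le \max _{B \in {\mathcal {B}}}\sum _{K \in {\mathcal {K}}} c_{{\mathcal {E}}}\left( K \right) \cdot V\left( B \wedge K\right) - \sum _{K \in {\mathcal {K}}} c_{{\mathcal {E}}}\left( K\right) \cdot V\left( B_{c} \wedge K\right) , \end{aligned} \tag{29}$$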

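where \(B_{c}\) is a maximizer of prior causal expected value. That is, if the act that maximizes posterior causal expected value is \(B_{c}\), the right-hand side difference in inequality (29) equals 0; and if it is some other act, this difference is at least 0. And inequality (29) continues to hold for the prior expectation of \(\max _{B \in {\mathcal {B}}}\sum _{K \in {\mathcal {K}}} c_{{\mathcal {E}}}\left( K \right) \cdot V\left( B \wedge K\right) - \sum _{K \in {\mathcal {K}}} c_{{\mathcal {E}}}\left( K\right) \cdot V\left( B_{c} \wedge K\right)\) relative to your conditional prior credences for the \(R_{c_{{\mathcal {E}}}}\)s given \(A_{L}\). Hence, we have:

$$\begin{aligned} 0 \le \sum _{c_{{\mathcal {E}}} \in {\mathcal {C}}_{{\mathcal {E}}}} c\left( R_{c_{{\mathcal {E}}}}\vert A_{L} \right) \left[ \max _{B \in {\mathcal {B}}}\sum _{K \in {\mathcal {K}}} c_{{\mathcal {E}}}\left( K \right) \cdot V\left( B \wedge K\right) - \sum _{K \in {\mathcal {K}}} c_{{\mathcal {E}}}\left( K\right) \cdot V\left( B_{c} \wedge K\right) \right] . \end{aligned} \tag{30}$$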

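Then, by (26) and (28), the above inequality becomes:

$$\begin{aligned} 0 \le U\left( A_{L}\vert A_{L} \right) - U\left( A_{\bar{L}}\vert A_{L} \right) . \end{aligned} \tag{31}$$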

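Hence:

$$\begin{aligned} U\left( A_{L}\vert A_{L} \right) \ge U\left( A_{\bar{L}}\vert A_{L} \right) , \end{aligned} \tag{32}$$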

as required. \(\square\)
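
The core of the proof is that an expectation of maxima cannot fall below the expectation of any single fixed act's value. The following sketch spot-checks that inequality on randomly generated instances. It is an illustration rather than part of the proof: the weights stand in for \(c\left( R_{c_{{\mathcal {E}}}}\vert A_{L} \right)\), each row of `V` stands in for one act's desirabilities \(V\left( B \wedge K \right)\), and every fixed act is tried in the role of \(B_{c}\). All identifiers, ranges, and the seed are arbitrary choices.

```python
import random


def expected_value(credence, payoffs):
    """Causal expected value of one act: sum over K of c(K) * V(B ∧ K)."""
    return sum(c * v for c, v in zip(credence, payoffs))


def random_distribution(n, rng):
    """Return n strictly positive weights normalised to sum to 1 (up to rounding)."""
    weights = [rng.random() + 1e-9 for _ in range(n)]
    total = sum(weights)
    return [w / total for w in weights]


def smallest_gap(trials=1000, seed=0):
    """Smallest value of (informed - uninformed) found over random instances."""
    rng = random.Random(seed)
    worst = float("inf")
    for _ in range(trials):
        n_post = rng.randint(2, 5)   # number of anticipated posteriors
        n_k = rng.randint(2, 4)      # number of dependency hypotheses K
        n_acts = rng.randint(2, 4)   # number of terminal acts
        weights = random_distribution(n_post, rng)
        posteriors = [random_distribution(n_k, rng) for _ in range(n_post)]
        V = [[rng.uniform(-5, 5) for _ in range(n_k)] for _ in range(n_acts)]

        # Expectation of the maximal posterior value (the informed decision).
        informed = sum(
            w * max(expected_value(c, row) for row in V)
            for w, c in zip(weights, posteriors)
        )
        # Expectation of each fixed act's posterior value (the uninformed decision).
        for fixed in V:
            uninformed = sum(w * expected_value(c, fixed) for w, c in zip(weights, posteriors))
            worst = min(worst, informed - uninformed)
    return worst


worst_gap = smallest_gap()
print("smallest informed-minus-uninformed gap:", worst_gap)
```

A non-negative smallest gap across all trials is what the Proposition predicts; a random search of this kind can only fail to find a counterexample, so it complements rather than replaces the argument above.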

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dziurosz-Serafinowicz, P. The Value of Evidence and Ratificationism. Erkenn (2023). https://doi.org/10.1007/s10670-023-00746-8
