Abstract

Social sciences expose many cognitively complex, highly qualified, or fuzzy problems whose resolution relies primarily on expert judgement rather than automated systems. One such instance, which we study in this work, is reflection analysis in the writings of student teachers. We share hands-on experience of how these challenges can be successfully tackled in data collection for machine learning. Based on novel deep learning architectures pre-trained for general language understanding, we reach an accuracy ranging from 76.56–79.37% on low-confidence samples to 97.56–100% on high-confidence cases. We open-source all our resources and models, and use the models to analyse previously ungrounded hypotheses on the reflection of university students. Our work provides a toolset for objective measurement of reflection in higher-education writings, applicable in more than 100 other languages worldwide with a loss in accuracy measured between 0–4.2%. Thanks to the outstanding accuracy of the deep models, the presented toolset allows for previously unavailable applications, such as providing semi-automated student feedback or measuring the effect of systematic changes in the educational process via the students’ response.

Data Availability

All resources and instructions for reproduction can be found in the repository of our library: https://github.com/EduMUNI/reflection-classification. The data set was collected as part of this work and is also available in an anonymised version in the referenced repository, with additional details in the /data directory (this will be replaced by a permanent, but not anonymised, DOI in the camera-ready version). The repository also contains reproducible sources for all our experiments:

• Training of the language models, which can be useful for adapting the model to a significantly different domain of data.

• Qualitative evaluation of the trained language model on a given set of data.

• Analyses: sources for reproducing the results of the exploratory hypotheses introduced in Section 3.5 can be found in the /analyses directory. Please refer to the main README of the referenced repository for the specific steps of a reproduction.

Notes

We use the terms “machine learning” and “deep learning” algorithms. Machine learning commonly refers to a broader set of algorithms with the ability to adapt their parameters to given data automatically. Deep learning is a subset of machine learning comprising models composed of multiple layers of simple decision-making units that can together accurately model more complex, non-linear problems. Deep learning models are also referred to as “artificial neural networks”.

See the instructions in the project repository at https://github.com/EduMUNI/reflection-classification

A detailed description of each category with examples can be found in our full annotation manual: https://github.com/EduMUNI/reflection-classification/blob/master/data/annotation_manual.pdf

https://github.com/EduMUNI/reflection-classification/tree/master/data (in camera-ready will be replaced by dataset DOI)

Abbreviations

- AWA: Academic Writing Analysis

- CEReD: Czech-English Reflective Dataset

- ELMo: Embeddings from Language Models

- LIWC: Linguistic Inquiry and Word Count

- LR: Linear Regression

- LSA: Latent Semantic Analysis

- MultiLR: Multinomial Linear Regression

- MultiNB: Multinomial Naive Bayes

- NB: Naive Bayes

- NN: Fully-connected neural network

- RF: Random Forest

- SGD: Stochastic Gradient Descent

- SVM: Support Vector Machines

- TF-IDF: Term frequency–inverse document frequency

References

Alger, C. (2006). ‘what went well, what didn’t go so well’: Growth of reflection in pre-service teachers. Reflective practice, 7(3), 287–301.

Arrastia, M. C., Rawls, E. S., Brinkerhoff, E. H., & Roehrig, A. D. (2014). The nature of elementary preservice teachers’ reflection during an early field experience. Reflective Practice, 15(4), 427–444.

Bahdanau, D., Cho, K., Bengio, Y. (2014) Neural machine translation by jointly learning to align and translate. arXiv preprint arXiv:1409.0473.

Bain, J. D., Mills, C., Ballantyne, R., & Packer, J. (2002). Developing reflection on practice through journal writing: Impacts of variations in the focus and level of feedback. Teachers and Teaching, 8(2), 171–196.

Bass, J., Sidebotham, M., Creedy, D., & Sweet, L. (2020). Midwifery students’ experiences and expectations of using a model of holistic reflection. Women and Birth, 33(4), 383–392.

Bates, D., Maechler, M., Bolker, B., Walker, S., Christensen, R. H. B., Singmann, H., & Grothendieck, G. (2012). Package ‘lme4’. R Foundation for Statistical Computing, Vienna, Austria: CRAN.

Bean, T. W., & Stevens, L. P. (2002). Scaffolding reflection for preservice and inservice teachers. Reflective Practice, 3(2), 205–218.

Beaumont, A., Al-Shaghdari, T. (2019) To what extent can text classification help with making inferences about students’ understanding. International conference on machine learning, optimization, and data science (372–383)

Bolton, G. (2010) Reflective practice: Writing and professional development. Sage publications

Boud, D., Keogh, R., & Walker, D. (1985). Reflection: Turning experience into learning. London: Kogan Page.

Bruno, A., Galuppo, L., & Gilardi, S. (2011). Evaluating the reflexive practices in a learning experience. European Journal of Psychology of Education, 26(4), 527–543.

Cardenas, D. G. (2014). Learning networks to enhance reflectivity: key elements for the design of a reflective network. International Journal of Educational Technology in Higher Education, 11(1), 32–48.

Carpenter, D., Geden, M., Rowe, J., Azevedo, R., Lester, J. (2020) Automated analysis of middle school students’ written reflections during game-based learning. International conference on artificial intelligence in education (67–78)

Cattaneo, A.A., Motta, E. (2020) “I reflect, therefore i am... a good professional”. on the relationship between reflection-on-action, reflection-in-action and professional performance in vocational education. Vocations and Learning (1–20)

Chang, C. C., Chen, C. C., & Chen, Y. H. (2012). Reflective behaviors under a web-based portfolio assessment environment for high school students in a computer course. Computers & Education, 58(1), 459–469.

Cheng, G. (2017) Towards an automatic classification system for supporting the development of critical reflective skills in L2 learning. Australasian Journal of Educational Technology 33(4)

Chou, P. N., & Chang, C. C. (2011). Effects of reflection category and reflection quality on learning outcomes during web-based portfolio assessment process: A case study of high school students in computer application course. Turkish Online Journal of Educational Technology-TOJET, 10(3), 101–114.

Cochran-Smith, M. (2005). Studying Teacher Education: What we know and need to know. Journal of Teacher Education, 54(4), 301–306. https://doi.org/10.1177/0022487105280116

Cohen-Sayag, E., & Fischl, D. (2012). Reflective writing in pre-service teachers’ teaching: What does it promote? Australian Journal of Teacher Education, 37(10), 2.

Colton, A. B., & Sparks-Langer, G. M. (1993). A conceptual framework to guide the development of teacher reflection and decision making. Journal of teacher education, 44(1), 45–54.

Conneau, A., Khandelwal, K., Goyal, N., Chaudhary, V., Wenzek, G., Guzmán, F., Stoyanov, V. (2020) Unsupervised cross-lingual representation learning at scale. Proceedings of the 58th annual meeting of the association for computational linguistics (8440–8451). Association for Computational Linguistics. https://aclanthology.org/2020.acl-main.747. https://doi.org/10.18653/v1/2020.acl-main.747. Accessed 16 June 2022

Conneau, A., Lample, G. (2019) Cross-lingual Language Model Pretraining. H.M. Wallach, H. Larochelle, A. Beygelzimer, F. D’Alché-Buc, E.B. Fox, R. Garnett. Advances in neural information processing systems 32: Annual conference on neural information processing systems 2019, neurips 2019, december 8-14, 2019, Vancouver, BC, Canada (7057–7067). Retrieved from https://papers.nips.cc/paper/2019/hash/c04c19c2c2474dbf5f7ac4372c5b9af1-Abstract.html. Accessed 16 June 2022

Cox, D. R. (1958). The regression analysis of binary sequences. Journal of the Royal Statistical Society: Series B (Methodological), 20(2), 215–232.

Cristobal, E., Flavian, C., & Guinaliu, M. (2007). Perceived e-service quality (PeSQ): Measurement validation and effects on consumer satisfaction and web site loyalty. Managing Service Quality: An International Journal, 17(3), 317–340. https://doi.org/10.1108/09604520710744326

Cui, Y., Wise, A. F., & Allen, K. L. (2019). Developing reflection analytics for health professions education: A multi-dimensional framework to align critical concepts with data features. Computers in Human Behavior, 100, 305–324.

Darling, L. F. (2001). Portfolio as practice: The narratives of emerging teachers. Teaching and Teacher Education, 17(1), 107–121.

Devlin, J., Chang, M.W., Lee, K., Toutanova, K. (2019) BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. Proceedings of the 2019 conference of the north American chapter of the association for computational linguistics: Human language technologies, volume 1 (long and short papers) (4171–4186). Minneapolis, Minnesota ACL. https://doi.org/10.18653/v1/N19-1423

Dyment, J. E., & O’Connell, T. S. (2011). Assessing the quality of reflection in student journals: A review of the research. Teaching in Higher Education, 16(1), 81–97. https://doi.org/10.1080/13562517.2010.507308

Fallon, M.A., Brown, S.C., Ackley, B.C. (2003) Reflection as a strategy for teaching performance-based assessment. Brock Education Journal 13(1)

Faraway, J. J. (2016). Extending the linear model with r: generalized linear, mixed effects and nonparametric regression models. CRC Press.

Finlay, L. (2008). Reflecting on reflective practice. PBPL Paper, 52, 1–27.

Fort, K. (2016) Collaborative annotation for reliable natural language processing: Technical and sociological aspects. Wiley

Fox, R. K., Dodman, S., & Holincheck, N. (2019). Moving beyond reflection in a hall of mirrors: developing critical reflective capacity in teachers and teacher educators. Reflective Practice, 20(3), 367–382.

Fox, R.K., White, C.S. (2010) Examining teachers’ development through critical reflection in an advanced master’s degree program. The purposes, practices, and professionalism of teacher reflectivity: Insights for twenty-first-century teachers and students (3–24)

García-Gorrostieta, J. M., López-López, A., & González-López, S. (2018). Automatic argument assessment of final project reports of computer engineering students. Computer Applications in Engineering Education, 26(5), 1217–1226.

Gelman, A., & Hill, J. (2006). Data analysis using regression and multilevel/hierarchical models. Cambridge: Cambridge University Press.

Gibson, A., Kitto, K., & Bruza, P. (2016). Towards the discovery of learner metacognition from reflective writing. Journal of Learning Analytics, 3(2), 22–36.

Hanafi, M. (2019) Perceptions of reflection on a pre-service primary teacher education programme in teaching english as a second language in an institute of teacher education in malaysia (Unpublished doctoral dissertation). Canterbury Christ Church University

Hartig, F. (2019) DHARMa: residual diagnostics for hierarchical (multi-level/mixed) regression models. R package version 0.2.4

Hatton, N., & Smith, D. (1995). Reflection in teacher education: Towards definition and implementation. Teaching and Teacher Education, 11(1), 33–49. https://doi.org/10.1016/0742-051x(94)00012-u

Hedlund, D. E. (1989). A dialogue with self: The journal as an educational tool. Journal of Humanistic Education and Development, 27(3), 105–13.

Hoffman, L., & Rovine, M. J. (2007). Multilevel models for the experimental psychologist: Foundations and illustrative examples. Behavior Research Methods, 39(1), 101–117.

Houston, C. R. (2016). Do scaffolding tools improve reflective writing in professional portfolios? a content analysis of reflective writing in an advanced preparation program. Action in Teacher Education, 38(4), 399–409.

Hu, X. (2017) Automated recognition of thinking orders in secondary school student writings. Learning: Research and Practice, 3(1) 30–41

Hume, A. (2009). A Personal Journey: Introducing Reflective Practice into Pre-Service Teacher Education to Improve Outcomes for Students. Teachers and Curriculum, 11, 21–28.

Jiang, J. (2017). Asymptotic analysis of mixed effects models: theory, applications, and open problems. CRC Press.

Jung, Y., Wise, A.F. (2020) How and how well do students reflect? multi-dimensional automated reflection assessment in health professions education. Proceedings of the Tenth International Conference on Learning Analytics & Knowledge (595–604)

King, P. M., & Kitchener, K. S. (2004). Reflective judgment: Theory and research on the development of epistemic assumptions through adulthood. Educational psychologist, 39(1), 5–18.

Kinsella, E. A. (2007). Embodied reflection and the epistemology of reflective practice. Journal of Philosophy of Education, 41(3), 395–409.

Klein, S. R. (2008). Holistic reflection in teacher education: Issues and strategies. Reflective Practice, 9(2), 111–121.

Knight, S., Shibani, A., Abel, S., Gibson, A., Ryan, P., Sutton, N., et al. (2020). Acawriter: A learning analytics tool for formative feedback on academic writing. Journal of Writing Research, 12(1), 141–186.

Kolb, D. (2014) Neprobádaný život nestojí za život. J. Nehyba, B. Lazarová (Eds.), Reflexe v procesu učení. desetkrát stejně a přece jinak. (23-30). Masaryk University Press

Kovanović, V., Joksimović, S., Mirriahi, N., Blaine, E., Gašević, D., Siemens, G., Dawson, S. (2018) Understand students’ self-reflections through learning analytics. Proceedings of the 8th international conference on learning analytics and knowledge (389–398)

Krol, C.A. (1996) Preservice Teacher Education Students’ Dialogue Journals: What Characterizes Students’ Reflective Writing and a Teacher’s Comments. ERIC. Retrieved from https://files.eric.ed.gov/fulltext/ED395911.pdf (Paper presented at the Annual Meeting of the Association of Teacher Educators (76th, St. Louis, MO). Accessed 16 June 2022

Kudo, T., Richardson, J. (2018) SentencePiece: A simple and language independent subword tokenizer and detokenizer for neural text processing. Proceedings of the 2018 conference on empirical methods in natural language processing: System demonstrations (66–71). Brussels, Belgium, Association for Computational Linguistics. Retrieved from https://aclanthology.org/D18-2012. https://doi.org/10.18653/v1/D18-2012. Accessed 16 June 2022

LaBoskey, V. K. (1994). Development of reflective practice: A study of preservice teachers. New York: Teachers College Press.

Lan, Z., Chen, M., Goodman, S., Gimpel, K., Sharma, P., Soricut, R. (2020) ALBERT: A Lite BERT for Self-supervised Learning of Language Representations. Proceedings of International Conference on Learning Representations, ICLR. Retrieved from https://openreview.net/forum?id=H1eA7AEtvS. Accessed 16 June 2022

Larrivee, B., Cooper, J. (2006) An educator’s guide to teacher reflection. Houghton Mifflin. Retrieved from https://books.google.cz/books?id=tVaYL-x67ekC. Accessed 16 June 2022

Lee, H. J. (2005). Understanding and assessing preservice teachers’ reflective thinking. Teaching and Teacher Education, 21(6), 699–715.

Lee, I. (2008). Fostering preservice reflection through response journals. Teacher Education Quarterly, 35(1), 117–139.

Lepp, L., Kuusvek, A., Leijen, Ä., Pedaste, M., Kaziu, A. (2020) Written or video diary-which one to prefer in teacher education and why? 2020 ieee 20th international conference on advanced learning technologies (icalt) (276–278)

Lewis, M., Liu, Y., Goyal, N., Ghazvininejad, M., Mohamed, A., Levy, O., Zettlemoyer, L. (2020) BART: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. Proceedings of the 58th annual meeting of the association for computational linguistics (7871–7880). Association for Computational Linguistics. Retrieved from https://aclanthology.org/2020.acl-main.703. Accessed 16 June 2022

Lindroth, J.T. (2015) Reflective journals: A review of the literature. Update: Applications of Research in Music Education, 34(1), 66–72. https://doi.org/10.1177/8755123314548046

Liu, Q., Zhang, S., Wang, Q., & Chen, W. (2017). Mining online discussion data for understanding teachers reflective thinking. IEEE Transactions on Learning Technologies, 11(2), 243–254.

Liu, Y., Ott, M., Goyal, N., Du, J., Joshi, M., Chen, D., Stoyanov, V. (2019) RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv:1907.11692

Loughran, J. (2007) Enacting a pedagogy of teacher education. Enacting a pedagogy of teacher education (11–25). Routledge

Loughran, J., & Corrigan, D. (1995). Teaching portfolios: A strategy for developing learning and teaching in preservice education. Teaching and teacher Education, 11(6), 565–577.

Magnusson, A., Skaug, H., Nielsen, A., Berg, C., Kristensen, K., Maechler, M., Brooks, M.M. (2017) Package ’glmmTMB’. R Package Version 0.2.0

Maloney, C., & Campbell-Evans, G. (2002). Using interactive journal writing as a strategy for professional growth. Asia-Pacific Journal of Teacher Education, 30(1), 39–50.

Mena-Marcos, J., Garcia-Rodriguez, M. L., & Tillema, H. (2013). Student teacher reflective writing: what does it reveal? European Journal of Teacher Education, 36(2), 147–163.

Moon, J. A. (2006). Learning journals: A handbook for reflective practice and professional development. London, Routledge. https://doi.org/10.4324/9780429448836-8

Nakayama, H., Kubo, T., Kamura, J., Taniguchi, Y., Liang, X. (2018) doccano: Text annotation tool for human. Software available from https://github.com/doccano/doccano. Accessed 16 June 2022

Nunnally, J. C., & Bernstein, I. H. (1994). Psychometric Theory. McGraw Hill.

Pasternak, D.L., Rigoni, K.K. (2015) Teaching reflective writing: thoughts on developing a reflective writing framework to support teacher candidates. Teaching/Writing: The Journal of Writing Teacher Education 4(1) 5

Pedro, J. Y. (2005). Reflection in teacher education: exploring pre-service teachers’ meanings of reflective practice. Reflective practice, 6(1), 49–66.

R Core Team (2020) R: A language and environment for statistical computing [Computer Software Manual]. Vienna, Austria. Retrieved from https://www.R-project.org/. Accessed 16 June 2022

Ryken, A. E., & Hamel, F. L. (2016). Looking again at “surface-level’’ reflections: Framing a competence view of early teacher thinking. Teacher Education Quarterly, 43(4), 31–53.

Schwitzgebel, E. (2010). Acting contrary to our professed beliefs or the gulf between occurrent judgment and dispositional belief. Pacific Philosophical Quarterly, 91(4), 531–553.

Shoffner, M. (2008). Informal reflection in pre-service teacher education. Reflective Practice, 9(2), 123–134.

Shum, S. B., Sándor, Á., Goldsmith, R., Bass, R., & McWilliams, M. (2017). Towards reflective writing analytics: rationale, methodology and preliminary results. Journal of Learning Analytics, 4(1), 58–84.

Spalding, E., Wilson, A., & Mewborn, D. (2002). Demystifying reflection: A study of pedagogical strategies that encourage reflective journal writing. Teachers college record, 104(7), 1393–1421.

Štefánik, M. & Nehyba, J. (2021). Czech-English Reflective Dataset (CEReD). (Version V1) [Data set]. GitHub. 11372/LRT-3573

Stiler, G.M., Philleo, T. (2003) Blogging and blogspots: An alternative format for encouraging reflective practice among preservice teachers. Education 123(4)

Stroup, W. W. (2012). Generalized linear mixed models: modern concepts, methods and applications. CRC Press.

Tan, J. (2013). Dialoguing written reflections to promote self-efficacy in student teachers. Reflective Practice, 14(6), 814–824.

Turc, I., Chang, M.W., Lee, K., Toutanova, K. (2019) Well-Read Students Learn Better: On the Importance of Pre-training Compact Models. https://www.youtube.com/watch?v=LoyyKVJgHKo. arXiv:1908.08962. Accessed 16 June 2022

Ukrop, M., Švábenský, V., Nehyba, J. (2019) Reflective diary for professional development of novice teachers. Proceedings of the 50th ACM technical symposium on computer science education (1088–1094)

Ullmann, T. D. (2019). Automated analysis of reflection in writing: Validating machine learning approaches. International Journal of Artificial Intelligence in Education, 29(2), 217–257.

Van Rossum, G., & Drake, F. L. (2009). Python 3 Reference Manual. CA CreateSpace: Scotts Valley.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Polosukhin, I. (2017) Attention is All you Need. I. Guyon et al. (Eds.), Advances in neural information processing systems (Vol 30). Curran Associates, Inc. Retrieved from https://proceedings.neurips.cc/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf. Accessed 16 June 2022

Wallin, P., & Adawi, T. (2018). The reflective diary as a method for the formative assessment of self-regulated learning. European Journal of Engineering Education, 43(4), 507–521.

Ward, J. R., & McCotter, S. S. (2004). Reflection as a visible outcome for preservice teachers. Teaching and Teacher Education, 20(3), 243–257.

Whipp, J., Wesson, C., & Wiley, T. (1997). Supporting collaborative reflections: Case writing in an urban PDS. Teaching Education, 9(1), 127–134.

Wilcox, B. L. (1996). Smart portfolios for teachers in training. Journal of Adolescent & Adult Literacy, 40(3), 172–179.

Wulff, P., Buschhüter, D., Westphal, A., Nowak, A., Becker, L., Robalino, H., & Borowski, A. (2021). Computer-Based Classification of Preservice Physics Teachers’ Written Reflections. Journal of Science Education and Technology, 30(1), 1–15.

Xie, Q., Dai, Z., Hovy, E., Luong, M.T., Le, Q.V. (2020) Unsupervised data augmentation for consistency training. arXiv: Learning. arXiv:1904.12848

Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education-where are the educators? International Journal of Educational Technology in Higher Education, 16(1), 1–27.

Zeichner, K., & Wray, S. (2001). The teaching portfolio in US teacher education programs: What we know and what we need to know. Teaching and Teacher Education, 17(5), 613–621.

Acknowledgements

We wish to give special thanks to Vít Novotný for their help with LaTeX.

Funding

This publication was written at Masaryk University as part of the project “Analysis of Reflective Journals of Teacher Students”, number MUNI/A/1238/2019, with the support of the Specific University Research Grant, as provided by the Ministry of Education, Youth and Sports of the Czech Republic in the year 2020.

Author information

Contributions

Both authors contributed equally to this work. Jan Nehyba identified the need for the identification of reflection in educational applications and proposed the specific application of the classifier for the purpose of the exploratory hypotheses. Based on the related work, Michal Štefánik identified the technologies applicable to the classification of reflection, implemented the reproducible sources for the experiments and collected the results presented in this article.

Appendices

A: Classifiers: technical details

In this section, we describe the technical specifics of training the selected shallow and deep classifiers.

A.1: Data splits

To make sure that no sentence is present multiple times in our dataset, we start by removing duplicate sentences regardless of their context. To minimize the chance that nearly identical sentences end up in different splits, we order the sentences alphabetically. We then split the ordered list of sentences with their associated contexts in a ratio of 90:5:5 into train, validation and test splits, resulting in a total of 6,097 training, 340 validation and 340 test sentences. We pick the validation set using a confidence threshold matching the training confidence threshold, i.e. a classifier trained on sentences with a minimal confidence of 4 is tuned on a validation set with a minimal confidence of 4 as well. Confidence filtering is applied separately within each already-fixed split, so that the splits are reproducible and no samples can move between splits when testing with a different confidence threshold.
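The splitting procedure described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the code from the referenced repository: the `Sample` record, its field names and the rounding of split sizes are assumptions made for the example.

```python
from typing import NamedTuple


class Sample(NamedTuple):
    # Hypothetical record layout; the field names are assumptions.
    sentence: str
    context: str
    label: str
    confidence: int


def split_samples(samples, ratios=(0.90, 0.05, 0.05)):
    """Deduplicate by sentence text, sort alphabetically, cut into train/val/test."""
    unique = {}
    for sample in samples:
        # Keep the first occurrence of each sentence, regardless of its context.
        unique.setdefault(sample.sentence, sample)
    # Alphabetical order places near-identical sentences next to each other,
    # so contiguous slices rarely separate them into different splits.
    ordered = sorted(unique.values(), key=lambda s: s.sentence)
    train_end = round(len(ordered) * ratios[0])
    val_end = train_end + round(len(ordered) * ratios[1])
    return ordered[:train_end], ordered[train_end:val_end], ordered[val_end:]


def filter_by_confidence(split, min_confidence):
    """Filter one already-fixed split; samples never move between splits."""
    return [s for s in split if s.confidence >= min_confidence]
```

Because filtering happens only after the split boundaries are fixed, changing the confidence threshold shrinks or grows each split without changing which split a sentence belongs to.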

A.2: Shallow classifiers

We report the tuned hyperparameters of the shallow classifiers and compare their accuracy under their respective optimal parameters.

A.2.1: Preprocessing

To reduce the dimensionality of the bag-of-words representation, we lowercase and stem all words of the sentence and its context. We limit the bag-of-words vocabulary to the top-n most common words, excluding the stop-words that occur in more than \(50\%\) of sentences. We treat n as a hyperparameter of every shallow classifier.

The final bag-of-words representation with context, used for all the shallow classifiers, is a concatenation of a bag-of-words vector of a sentence and its context.
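A minimal sketch of this representation follows, using only the Python standard library. It is an illustration under stated assumptions: the whitespace tokenizer and all function names are hypothetical, and stemming is left out for brevity.

```python
from collections import Counter


def tokenize(text):
    # Lowercase and split on whitespace; stemming is omitted in this sketch.
    return text.lower().split()


def build_vocabulary(texts, top_n, max_doc_ratio=0.5):
    """Top-n most common words, skipping words found in more than max_doc_ratio of texts."""
    term_freq = Counter()
    doc_freq = Counter()
    for text in texts:
        tokens = tokenize(text)
        term_freq.update(tokens)
        doc_freq.update(set(tokens))
    limit = max_doc_ratio * len(texts)
    kept = [word for word, _ in term_freq.most_common() if doc_freq[word] <= limit]
    return {word: index for index, word in enumerate(kept[:top_n])}


def bow_vector(text, vocab):
    """Count occurrences of vocabulary words in the text."""
    vector = [0] * len(vocab)
    for token in tokenize(text):
        if token in vocab:
            vector[vocab[token]] += 1
    return vector


def featurize(sentence, context, vocab):
    # Concatenate the sentence vector with the context vector.
    return bow_vector(sentence, vocab) + bow_vector(context, vocab)
```

With a vocabulary of size n, every sample is thus represented by a vector of length 2n when the context is used.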

A.2.2: Hyperparameters

We performed an exhaustive hyperparameter search, evaluated on the validation split filtered with the same confidence threshold as the training split, over the following parameters of the shallow classifiers:

- (Shared) use of context \(\in \{\text{True}, \text{False}\}\)

- (Shared) preceding and succeeding context window size \(\in \{1, 2, \ldots, 5\}\)

- (Shared) BoW size, i.e. the limit to the top-n tokens: number of tokens \(\in \{100, 150, \ldots, 1000\}\)

- (Shared) tokenization for a specific language \(\in \{\text{True}, \text{False}\}\)

- Random Forest: depth \(\in \{2, 3, 4, 5\}\)

- Random Forest: number of estimators \(\in \{100, 200, \ldots, 500\}\)

- SVM: kernel function \(\in \{\text{linear}, \text{polynomial}, \text{radial}\}\)
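The search space listed above can be enumerated as a plain Cartesian product. The sketch below is illustrative only: the dictionary keys and the `evaluate` callback (assumed to train a classifier with the given configuration and return its validation accuracy) are hypothetical names, not identifiers from the referenced repository.

```python
from itertools import product

# Parameters shared by all shallow classifiers.
SHARED_GRID = {
    "use_context": [True, False],
    "context_window": [1, 2, 3, 4, 5],
    "bow_size": list(range(100, 1001, 50)),
    "language_specific_tokenization": [True, False],
}

# Classifier-specific parameters.
MODEL_GRIDS = {
    "random_forest": {
        "depth": [2, 3, 4, 5],
        "n_estimators": [100, 200, 300, 400, 500],
    },
    "svm": {"kernel": ["linear", "polynomial", "radial"]},
}


def iter_configs(model_name):
    """Yield every combination of shared and model-specific parameters."""
    grid = {**SHARED_GRID, **MODEL_GRIDS[model_name]}
    keys = list(grid)
    for values in product(*(grid[key] for key in keys)):
        yield dict(zip(keys, values))


def grid_search(model_name, evaluate):
    """Return the configuration with the highest score given by evaluate(config)."""
    return max(iter_configs(model_name), key=evaluate)
```

Enumerated this way, the grid contains 1,140 configurations for the SVM and 7,600 for the Random Forest. Note that the window size has no effect when the context is disabled, so a practical implementation could skip those redundant combinations.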

A.2.3: Results

Figure 6 shows the results for all evaluated classifiers, with the hyperparameters listed in the Hyperparameters section above tuned on the validation split.

B: Deep classifiers

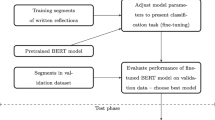

We extend the description of the training process from Section 3.4.2 with technical specifics relevant for reproduction or easier further customization.

We experiment with two multilingual representatives of the transformer family: Multilingual BERT-base-cased (Devlin et al., 2019) and XLM-RoBERTa-large (Conneau et al., 2020). We split the samples using the same methodology as described in Appendix A.1.

We segment the units of text using a Wordpiece (Turc et al., 2019) or Sentencepiece (Kudo & Richardson, 2018) model, respectively, built for the supported languages of the particular model. We concatenate each classified sentence with its context, separated by a special symbol “<s>”, similarly to other sequence-pair problems such as answer extraction or entailment classification.
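As a rough illustration of this input format, the following sketch joins a sentence and its context at the string level. The function name and the order of the two segments (sentence first, context second) are assumptions; in practice, the tokenizer of the chosen model typically inserts its own special tokens when given a pair of sequences.

```python
def build_model_input(sentence, context, separator="<s>"):
    """Join the classified sentence and its context into a single sequence."""
    # The segment order is an assumption made for this example.
    return f"{sentence.strip()} {separator} {context.strip()}"
```

For example, `build_model_input("I was nervous.", "The class was loud.")` yields `I was nervous. <s> The class was loud.`.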

By default, we train the neural classifiers using an effective batch size of 32, i.e. the weights of the models are adjusted using gradients aggregated over 32 training samples. We set the warmup to 10% of the total training steps and, by default, schedule the training for 20 epochs. We use early stopping on evaluation accuracy with a patience of 500 training steps. Training a single classifier takes approximately 8 hours on two Nvidia Tesla T4 GPUs.
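To make these settings concrete, the sketch below derives the number of optimisation steps and warmup steps from the effective batch size, and shows a learning-rate schedule with linear warmup. Several details are assumptions made for illustration: the per-device batch size of 8, the linear decay after warmup, and all function names. Only the effective batch size, the warmup ratio, the number of epochs and the two GPUs come from the text.

```python
import math


def training_schedule(num_samples, epochs=20, effective_batch=32,
                      per_device_batch=8, num_devices=2, warmup_ratio=0.10):
    """Derive gradient accumulation, total steps and warmup steps."""
    # Accumulation steps needed so that one weight update sees 32 samples.
    accumulation = effective_batch // (per_device_batch * num_devices)
    steps_per_epoch = math.ceil(num_samples / effective_batch)
    total_steps = steps_per_epoch * epochs
    warmup_steps = round(total_steps * warmup_ratio)
    return {
        "accumulation": accumulation,
        "total_steps": total_steps,
        "warmup_steps": warmup_steps,
    }


def lr_at_step(step, base_lr, warmup_steps, total_steps):
    """Linear warmup to base_lr, then linear decay (the decay shape is assumed)."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)
```

For the full training split of 6,097 sentences, this gives 191 steps per epoch, 3,820 scheduled steps and 382 warmup steps; with confidence filtering, the training split and hence these numbers are smaller.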

For the final evaluation, we pick the model with the highest validation accuracy, measured every 50 training steps. Figure 7 shows a comparison of the validation loss and accuracy of Multilingual-BERT-base-cased and XLM-RoBERTa-large trained on samples with a minimal confidence threshold of 4.
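The interplay of periodic evaluation, early stopping and checkpoint selection can be sketched as follows. This is a simplified illustration that operates on a precomputed list of validation accuracies; the function name and its return format are assumptions rather than the actual training code.

```python
def select_best_checkpoint(eval_accuracies, eval_every=50, patience_steps=500):
    """Return (best_step, best_accuracy, stop_step) for periodic evaluations.

    eval_accuracies[i] is the validation accuracy after (i + 1) * eval_every steps.
    """
    best_step, best_accuracy = 0, float("-inf")
    step = 0
    for index, accuracy in enumerate(eval_accuracies, start=1):
        step = index * eval_every
        if accuracy > best_accuracy:
            # New best model: this is the checkpoint kept for final evaluation.
            best_step, best_accuracy = step, accuracy
        elif step - best_step >= patience_steps:
            # No improvement within the patience window: stop training early.
            break
    return best_step, best_accuracy, step
```

With evaluation every 50 steps and a patience of 500 steps, training stops after ten consecutive evaluations without improvement.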

Due to the computational cost, and because we find our results quite robust to adjustments of the aforementioned parameters, we do not perform a systematic hyperparameter search for the deep classifiers and set their parameters only according to our best knowledge and intuition.

C: Literature review of applying machine learning to reflective writing

D: Parameters of Generalized linear mixed models

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Nehyba, J., Štefánik, M. Applications of deep language models for reflective writings. Educ Inf Technol 28, 2961–2999 (2023). https://doi.org/10.1007/s10639-022-11254-7

DOI: https://doi.org/10.1007/s10639-022-11254-7