Abstract

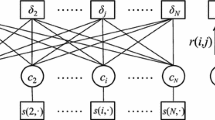

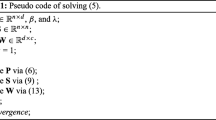

Spectral clustering can produce high-quality clusterings on small data sets, but its computational cost makes it infeasible for large ones. Affinity Propagation (AP) has a different limitation: it is hard to determine a value of the ‘preference’ parameter that leads to an optimal clustering solution. These problems limit the scope of application of both methods. In this paper, we develop a novel, fast two-stage spectral clustering framework with local and global consistency. Under this framework, we propose a Fast density-Weighted low-rank Approximation Spectral Clustering (FWASC) algorithm to address these issues. The proposed algorithm is a high-quality graph partitioning method that simultaneously considers the local and global structure information contained in the data. Specifically, we first present a new Fast Two-Stage AP (FTSAP) algorithm that coarsens the input sparse graph and produces a small number of final representative exemplars; it serves as a simple and efficient sampling scheme. We then present a density-weighted low-rank approximation spectral clustering algorithm that operates on those representative exemplars to capture the global underlying structure of the data manifold. Experimental results show that our algorithm outperforms state-of-the-art spectral clustering and the original AP algorithm in terms of speed, memory usage, and clustering quality.
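To make the two-stage idea concrete, the following is a minimal sketch and not the FWASC algorithm itself. It assumes uniform random sampling as a stand-in for the FTSAP exemplar selection, a dense Gaussian affinity with a hand-picked bandwidth `sigma`, and a small weighted k-means for the final step. All function and parameter names (`two_stage_spectral`, `weighted_kmeans`, `sq_dists`, `n_exemplars`) are hypothetical and chosen only for illustration. The density weight of an exemplar is taken to be the number of points assigned to it, so the second stage is equivalent to normalized spectral clustering on a data set in which each exemplar is repeated that many times.

```python
import numpy as np


def sq_dists(A, B):
    """Squared Euclidean distances between the rows of A and the rows of B."""
    return ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=2)


def weighted_kmeans(Y, w, k, n_iter=50):
    """Weighted Lloyd iterations with a deterministic farthest-point start."""
    centers = [Y[np.argmax(w)]]
    for _ in range(1, k):
        d2 = np.min([((Y - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(Y[np.argmax(d2)])
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = sq_dists(Y, centers).argmin(axis=1)
        for j in range(k):
            mask = labels == j
            if mask.any():
                centers[j] = np.average(Y[mask], axis=0, weights=w[mask])
    return labels


def two_stage_spectral(X, n_clusters, n_exemplars, sigma, seed=0):
    rng = np.random.default_rng(seed)

    # Stage 1 (assumption: random sampling replaces FTSAP exemplar selection).
    idx = rng.choice(X.shape[0], size=n_exemplars, replace=False)
    Z = X[idx]

    # Assign each point to its nearest exemplar; the counts act as density weights.
    nearest = sq_dists(X, Z).argmin(axis=1)
    w = np.maximum(np.bincount(nearest, minlength=n_exemplars), 1).astype(float)

    # Stage 2: normalized spectral embedding of the weighted exemplar graph.
    K = np.exp(-sq_dists(Z, Z) / (2.0 * sigma ** 2))
    d = K @ w                      # approximate degree in the full graph
    s = np.sqrt(w / d)
    S = s[:, None] * K * s[None, :]  # W^(1/2) D^(-1/2) K D^(-1/2) W^(1/2)

    # eigh returns eigenvalues in ascending order; keep the top n_clusters vectors.
    _, vecs = np.linalg.eigh(S)
    Y = vecs[:, -n_clusters:]
    Y = Y / np.maximum(np.linalg.norm(Y, axis=1, keepdims=True), 1e-12)

    # Cluster the exemplars, then propagate each exemplar's label to its points.
    exemplar_labels = weighted_kmeans(Y, w, n_clusters)
    return exemplar_labels[nearest]
```

In this sketch the eigendecomposition is performed on an `n_exemplars` by `n_exemplars` matrix rather than on the full affinity matrix, which is where the savings in time and memory would come from; the quality of the result then depends on how well the exemplars represent the data, which is the role the abstract assigns to FTSAP.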

Additional information

Responsible editor: Charu Aggarwal.

About this article

Cite this article

Shang, F., Jiao, L.C., Shi, J. et al. Fast density-weighted low-rank approximation spectral clustering. Data Min Knowl Disc 23, 345–378 (2011). https://doi.org/10.1007/s10618-010-0207-5

DOI: https://doi.org/10.1007/s10618-010-0207-5