Abstract

Synoptic weather typing and regression-based downscaling approaches have become popular in evaluating the impacts of climate change on a variety of environmental problems, particularly those involving extreme impacts. One reason for the popularity of these approaches is their ability to categorize a complex set of meteorological variables into a coherent index, facilitating the projection of changes in the frequency and intensity of future daily extreme weather events and/or their impacts. This paper illustrates the capability of synoptic weather typing and regression methods to analyze climate change impacts on a number of extreme weather events and environmental problems in south–central Canada, such as freezing rain, heavy rainfall, high-/low-streamflow events, air pollution, and human health. These statistical approaches are helpful in analyzing extreme events and projecting their impacts into the future through three major steps: (1) historical simulation modeling to identify extreme weather events or their impacts, (2) statistical downscaling to provide station-scale future hourly/daily climate data, and (3) projection of changes in the frequency and intensity of future extreme weather events and their impacts under a changing climate. To realize these steps, it is first necessary to conceptualize how the meteorological, hydrological, and impact variables of significance are to be modeled, and then to apply a number of linear/nonlinear regression techniques. Because the climate/weather validation process is critical, a formal model verification process has been built into each of these three steps. With carefully chosen, physically consistent and relevant variables, verification against historical observations of the outcome variables simulated by the models shows very good agreement in all applications and extremes tested to date.
Overall, the modeled results from climate change studies indicate that the frequency and intensity of future extreme weather events and their impacts are generally projected to increase significantly late this century over south–central Canada. The implications of these increases need to be taken into consideration and integrated into policies and planning for adaptation strategies, including measures to incorporate climate change into engineering infrastructure design standards and disaster risk reduction. This paper briefly summarizes these climate change research projects, focusing on the modeling methodologies and results, and attempts to use plain language to make the results accessible and interesting to a broader informed audience. These research projects have been used to support decision-makers in south–central Canada in dealing with future extreme weather events under climate change.


1 Introduction

It has become widely recognized that hot spells/heat waves, heavy precipitation, and severe winter and summer storm events may increase over most land areas of the globe due to a changing climate (Cubasch et al. 1995; Zwiers and Kharin 1998; Last and Chiotti 2001; Meehl and Tebaldi 2004; Riedel 2004; Kharin and Zwiers 2005; Medina-Ramón and Schwartz 2007). The Fourth Assessment Report of the Intergovernmental Panel on Climate Change (IPCC AR4) indicated that the frequency and intensity of these extreme weather events are very likely to increase globally in this century (IPCC 2007a). It is further projected that climate change could increase mortality/morbidity from heat waves, floods, and droughts, as well as heavy rainfall-related flooding risks (IPCC 2007b). Many of these risks are also expected to be felt at local to regional scales.

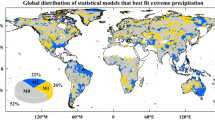

In light of these concerns at a local scale in south–central Canada, Environment Canada has completed several research projects to project changes in the frequency and intensity of future extreme weather events and their impacts. For example, a three-year research project on climate change and human health was completed for four cities (Montreal, Ottawa, Toronto, and Windsor) in south–central Canada, in partnership with Toronto Public Health, McMaster University, and the Public Health Agency of Canada. This project, funded by the Health Policy Research Program of Health Canada, evaluated the differential and combined impacts of extreme temperatures and air pollution on human mortality under current and future climates (Cheng et al. 2007a, b, 2008a, b). Another three-year research project investigated climate change and extreme rainfall-related flooding risks in four river basins (Grand, Humber, Rideau, and Upper Thames) of southern Ontario, Canada. This project was led by Environment Canada and funded by the Government of Canada’s Climate Change Impacts and Adaptation Program (CCIAP), in partnership with Conservation Ontario, the Ontario Ministry of the Environment, the Ontario Ministry of Natural Resources, and CGI Insurance Business, with the aim of projecting changes in the frequency and intensity of future daily heavy rainfall and high-/low-streamflow events (Cheng et al. 2008c, 2010, 2011a). A further study projected the possible impacts of climate change on freezing rain in 15 cities of south–central Canada, as shown in Fig. 1 (Cheng et al. 2007c, 2011b).

While global climate models (GCMs) are an important tool in the assessment of climate change, most of their simulations are relevant for spatial and temporal scales much larger than those faced by most decision-makers. In general, decision-makers require guidance on future climate extremes at a local scale, requiring “downscaling” of the future climate information from the GCMs and even from the finer regional climate models (RCMs) to the stations or cities. Two fundamental approaches exist for downscaling of large-scale GCM simulations to a finer spatial resolution (Wilby and Wigley 1997; Wilby et al. 2002; Fowler et al. 2007). The first of these is a dynamical approach where a higher resolution climate model is embedded within a GCM. The second approach is to use statistical methods to establish empirical relationships between GCM-resolution climate variables and local observed station-scale climate variables of interest to decision-makers. In many cases, statistical methods may still need to be applied to link the dynamical downscaling or higher resolution regional scale climate model results to local climates and parameters of importance to local decision-makers (Fowler et al. 2007).

Among statistical downscaling approaches, the more sophisticated methodologies can be classified into three groups: (1) regression-based models, (2) weather typing schemes, and (3) weather generators. Several key principles need to be considered when applying statistical downscaling techniques if they are to yield meaningful outputs for decision-makers, including the need for predictor variables that are physically meaningful, reproduced well by the GCMs, and able to reflect processes responsible for climatic variability on a range of timescales (Fowler et al. 2007). Fowler et al. (2007) also concluded that statistical downscaling methods may be more appropriate when point values of extremes are needed for climate change impact and adaptation studies.

Although the downscaling literature has expanded enormously, only about one third of all downscaling studies consider impacts (Fowler et al. 2007). Even among studies that consider the various impacts of a changing climate, little consideration is often given to adaptation research models designed for decision-making. In their assessment of climate change downscaling techniques, Fowler et al. (2007) concluded that there is a need for applied research capable of producing downscaled results that help decision-makers, stakeholders, and the public make informed, robust decisions on adaptation and mitigation strategies in the face of many uncertainties about the future. This paper briefly summarizes the approaches and results of downscaling studies developed in collaboration with stakeholders, with the aim of making the results more accessible and interesting to a broader informed audience.

2 Data sources and treatment

To project potential climate change impacts on extreme weather events, a variety of observed datasets need to be considered, including meteorological, hydrological, air pollution, and human mortality datasets, as well as insurance claims and impacts databases. More detailed information on data sources, variables, and record lengths can be found in Table 1. Although the different projects needed different types of data, some climatological variables were common to all of the projects, whether they dealt with severe ice storms, heavy rainfall, extreme heat, mortality, or insurance losses.

These common climatological variables, taken from the hourly surface meteorological observations listed in the first row of Table 1, were first screened for missing data. When data were missing for three or fewer consecutive hours, the gaps were filled by linear interpolation in time, whereas a day was excluded from the analysis if its hourly data were missing for four or more consecutive hours. Other types of observed data, such as daily air pollution concentrations and human mortality counts, were treated to remove inter-annual trends attributable to non-climatological factors, in order to more effectively isolate and evaluate extreme temperature- and air pollution-related health risks. The data treatment methods and results are not shown in this paper due to space limitations; for details, refer to Cheng et al. (2007a, c, 2008a, c, 2010).
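The gap-filling rule described above can be sketched in Python as follows. This is a minimal illustration, not the code used in the studies: the function name `fill_day` is hypothetical, and treating a gap at the very start or end of a day (with no bracketing observation on one side) as grounds for excluding the day is our assumption, since the text does not say how such gaps were handled.

```python
from typing import List, Optional

MAX_GAP_HOURS = 3  # runs of <= 3 consecutive missing hours are interpolated


def fill_day(hourly: List[Optional[float]]) -> Optional[List[float]]:
    """Return a gap-filled copy of one day's hourly values (None = missing),
    or None if the day should be excluded from the analysis."""
    values = list(hourly)
    n = len(values)
    i = 0
    while i < n:
        if values[i] is not None:
            i += 1
            continue
        # find the end of this run of missing hours
        j = i
        while j < n and values[j] is None:
            j += 1
        run = j - i
        if run > MAX_GAP_HOURS:
            return None  # four or more consecutive missing hours: drop the day
        if i == 0 or j == n:
            return None  # no observation on one side (assumed: drop the day)
        left, right = values[i - 1], values[j]
        for k in range(i, j):
            # linear interpolation in time between the bracketing observations
            frac = (k - (i - 1)) / (run + 1)
            values[k] = left + frac * (right - left)
        i = j
    return values
```

In practice a gap at midnight could be bridged with the previous or next day's observations; the per-day function keeps the sketch short.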

In addition to historical climatological observations, daily simulations from four GCMs under three greenhouse gas (GHG) emission scenarios were used in the studies (Table 1). These models and scenarios include the Canadian GCMs CGCM1 (IPCC IS92a) and CGCM2 (IPCC SRES A2 and B2), one U.S. GCM, GFDL-CM2.0 (IPCC SRES A2), and one German GCM, ECHAM5/MPI-OM (IPCC SRES A2). There are two reasons for including the IPCC IS92a scenario alongside the later SRES scenario simulations from the IPCC Third Assessment Report. First, the IPCC AR4 scenarios were not available when the studies were initially conducted; later updating the IS92a-based analyses with the SRES scenarios allowed the diversity of the scenarios and their changes over time to be considered. Second, for some weather variables, especially the west–east and south–north wind components, the CGCM1 (IS92a) scenarios are comparable to (not worse than) the CGCM3 (SRES A2) scenarios, based on validation and comparison of the daily data distributions from interpolated GCM historical runs and observations over a common period (1961–2000) for Toronto. For these GCM scenarios and simulations, three time windows (1961–2000, 2046–2065, 2081–2100) were used in the analyses, because these were the only windows for which the data were available from the Web site of the Program for Climate Model Diagnosis and Intercomparison (PCMDI 2006). In addition, for the projections of future return-period values of annual maximum 3-day accumulated rainfall totals and annual maximum one-day streamflow volumes, three Canadian GCM transient simulations (CGCM1 IS92a, CGCM2 A2 and B2) covering the 100-year period 2001–2100 were considered.
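The text does not state which extreme value distribution was fitted to the annual maxima. Purely as an assumption for illustration, the sketch below extracts annual maximum 3-day accumulated rainfall totals from daily series and estimates a T-year return value from a Gumbel (EV1) distribution fitted by the method of moments; the function names are hypothetical.

```python
import math
import statistics

EULER_GAMMA = 0.5772156649  # Euler-Mascheroni constant


def annual_max_3day(daily_by_year):
    """daily_by_year: {year: [daily rainfall, ...]} -> list of annual maximum
    3-day accumulated totals, ordered by year."""
    maxima = []
    for year in sorted(daily_by_year):
        rain = daily_by_year[year]
        # running 3-day totals within the year
        totals = [sum(rain[i:i + 3]) for i in range(len(rain) - 2)]
        maxima.append(max(totals))
    return maxima


def gumbel_return_value(maxima, period_years):
    """T-year return value from a Gumbel (EV1) fit by the method of moments."""
    beta = statistics.stdev(maxima) * math.sqrt(6.0) / math.pi  # scale
    mu = statistics.mean(maxima) - EULER_GAMMA * beta           # location
    non_exceedance = 1.0 - 1.0 / period_years
    return mu - beta * math.log(-math.log(non_exceedance))
```

Fitting the same function to historical and to projected future maxima, and comparing the two return values for each return period, gives a percentage change of the kind reported in the results.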

The study area and locations for south–central Canada are shown in Fig. 1. Fourteen cities with hourly observed meteorological data at international airports in the province of Ontario and the city of Montreal in the province of Quebec were selected in the climate change and freezing rain study. For other projects, only some of these locations were considered. For example, four cities (Montreal, Ottawa, Toronto, Windsor) were selected for the climate change and human health project while the climate change and heavy rainfall-related flooding risk study selected four cities (Kitchener-Waterloo, London, Ottawa, Toronto) in combination with their four adjacent watersheds (Grand, Upper Thames, Rideau, Humber).

3 Analysis techniques

The principal methods and steps involved in the projects are summarized in Table 2. Three major steps or analysis procedures were required to complete each study: (1) historical analysis and/or simulation modeling to verify the historical extreme weather events, (2) regression-based downscaling involving the projection of station-scale future hourly/daily climate information, and (3) future projections of changes in the frequency and intensity of daily extreme weather events under a changing climate. The methodologies of each part are briefly described as follows.

3.1 Historical analysis

Transfer functions used in future projections typically relate historical climate variables to the outputs or impacts of interest to decision-makers. The historical analysis used to obtain the transfer functions comprises: (1) an automated synoptic weather typing approach to classify daily weather types or air masses and (2) the development of daily within-weather-type simulation models to verify historical extreme weather events. Synoptic weather typing approaches have become popular in evaluating the impacts of climate change on a variety of environmental problems, partly because of their ability to categorize a complex set of meteorological variables into a coherent index (Yarnal 1993; Cheng and Kalkstein 1997), which facilitates climate change impact analysis. The synoptic weather typing approaches include principal components analysis, an average linkage clustering procedure, and discriminant function analysis (Table 2), and aim to develop classification solutions that minimize within-category variance and maximize between-category variance. Although the same synoptic weather typing approach was used in all of the studies, the input data differ among them. For example, in the climate change and freezing rain study, hourly surface meteorological observations and six-hourly U.S. National Centers for Environmental Prediction (NCEP) upper-air reanalysis data listed in Table 1 were used to classify daily weather types (Cheng et al. 2004, 2007c, 2011b). In contrast, only hourly surface observations were used for the heavy rainfall-related flooding project (Cheng et al. 2010, 2011a). The climate change and human mortality project used six-hourly surface meteorological data observed at 03:00, 09:00, 15:00, and 21:00 local standard time (Cheng et al. 2007a, 2008a).
The selection of hourly or six-hourly meteorological data used for a particular study was determined by the temporal resolution of the targeted weather events and verified using within-weather-type simulation models.
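As a rough illustration of the weather typing chain (standardization, principal components analysis, average linkage clustering), the sketch below uses NumPy. It is a simplification under stated assumptions: the 90% explained-variance cut-off is hypothetical, the clustering is a naive average-linkage implementation suitable only for small samples, and assigning new (e.g., future downscaled) days to the nearest type centroid is a simplified stand-in for the discriminant function analysis used in the studies. All function names are hypothetical.

```python
import numpy as np


def fit_pca(X, var_explained=0.9):
    """Standardize each variable (column) and keep enough principal components
    to explain `var_explained` of the total variance (cut-off is assumed)."""
    mean, std = X.mean(axis=0), X.std(axis=0)
    Z = (X - mean) / std
    _, s, vt = np.linalg.svd(Z, full_matrices=False)
    cum = np.cumsum(s ** 2) / np.sum(s ** 2)
    k = min(int(np.searchsorted(cum, var_explained)) + 1, len(s))
    return mean, std, vt[:k]


def project(X, mean, std, components):
    """Component scores for (possibly new) days, using historical mean/std."""
    return ((X - mean) / std) @ components.T


def average_linkage(scores, n_types):
    """Naive agglomerative clustering with average linkage (O(n^3))."""
    dist = np.linalg.norm(scores[:, None, :] - scores[None, :, :], axis=-1)
    clusters = [[i] for i in range(len(scores))]
    while len(clusters) > n_types:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # mean distance over all cross-cluster pairs of days
                d = dist[np.ix_(clusters[a], clusters[b])].mean()
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        clusters[a] += clusters[b]  # merge the closest pair of clusters
        del clusters[b]
    labels = np.empty(len(scores), dtype=int)
    for t, members in enumerate(clusters):
        labels[members] = t
    return labels


def assign_types(new_scores, scores, labels):
    """Assign days to the nearest weather-type centroid (a simplified
    stand-in for discriminant function analysis)."""
    types = np.unique(labels)
    centroids = np.array([scores[labels == t].mean(axis=0) for t in types])
    d = np.linalg.norm(new_scores[:, None, :] - centroids[None, :, :], axis=-1)
    return types[np.argmin(d, axis=1)]
```

Each row of `X` is one day and each column one meteorological variable (e.g., temperature, dew point, and pressure at several observation times); the historical mean and standard deviation are reused when projecting new days so that they are typed on the same scale.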

When developing statistical extreme-weather-event simulation and downscaling models, two key issues must be considered: (1) the selection of appropriate regression methods and (2) the selection of significant predictors and predictand(s) that meet decision-makers’ needs. A number of linear and nonlinear regression methods, listed in Table 2, were used to develop the simulation models for various extreme weather events. Different regression methods were employed for different meteorological variables, since a given regression method is suitable only for data of a certain type with a specific distribution. For example, cumulative logit regression is a more suitable tool for developing a simulation model for ordered categorical data, such as total cloud cover (Allison 2000). On the other hand, when time-series data are used to develop a regression-based prediction model, autocorrelation-corrected regression should be used to account for serial correlation in the time series (SAS Institute Inc. 2006). To select significant predictors effectively, the underlying meteorological and hydrological processes were carefully conceptualized to identify the predictors with the most significant relationships with the predictand. For example, when developing daily rainfall simulation models, a number of atmospheric stability indices were used in addition to the standard meteorological variables (Cheng et al. 2010). Threshold values of these stability indices can serve as indicators of atmospheric instability and of conditions associated with the potential development of convective precipitation (Glickman 2000; Environment Canada 2002).
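One classic form of autocorrelation-corrected regression is the iterative Cochrane–Orcutt procedure for first-order autoregressive (AR(1)) errors, sketched below for a single predictor. This is a swapped-in textbook technique chosen for brevity; it is not necessarily the estimator used in the studies, and the function names are hypothetical.

```python
def ols(x, y):
    """Simple least squares y = a + b*x; returns (a, b)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


def cochrane_orcutt(x, y, max_iter=50, tol=1e-8):
    """Regression with AR(1) errors:
    y_t = a + b*x_t + e_t,  e_t = rho*e_{t-1} + u_t.  Returns (a, b, rho)."""
    a, b = ols(x, y)
    rho = 0.0
    for _ in range(max_iter):
        resid = [yi - (a + b * xi) for xi, yi in zip(x, y)]
        # lag-1 autocorrelation of the current residuals
        new_rho = (sum(resid[t] * resid[t - 1] for t in range(1, len(resid)))
                   / sum(r * r for r in resid[:-1]))
        # quasi-difference both series to remove the AR(1) serial correlation
        xs = [x[t] - new_rho * x[t - 1] for t in range(1, len(x))]
        ys = [y[t] - new_rho * y[t - 1] for t in range(1, len(y))]
        a_star, b = ols(xs, ys)
        a = a_star / (1.0 - new_rho)  # transformed intercept equals a*(1 - rho)
        converged = abs(new_rho - rho) < tol
        rho = new_rho
        if converged:
            break
    return a, b, rho
```

Quasi-differencing drops the first observation; the Prais–Winsten variant keeps it by rescaling, which matters more for short series.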

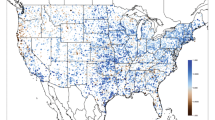

Two different kinds of simulation models were developed in the studies: one to verify the occurrence of historical daily extreme weather events (e.g., freezing rain; Cheng et al. 2004, 2007c, 2011b) and the other to simulate the quantities of weather/environmental variables (e.g., daily streamflow volumes, air pollution concentrations, and extreme temperature- and air pollution-related mortality; Cheng et al. 2007a, b, 2008a, b). Daily rainfall simulation modeling, however, combines both approaches in a two-step process: 1) cumulative logit regression to predict the occurrence of daily rainfall events and 2) a nonlinear regression procedure that uses the probability from the logit regression to simulate daily rainfall quantities (Cheng et al. 2010, 2011a). The 228 predictors used in developing the daily rainfall occurrence simulation models include not only the standard meteorological variables but also a number of atmospheric stability indices. As described by Cheng et al. (2010), the cumulative logit regression performed very well in verifying historical daily rainfall events, with model concordances ranging from 0.82 to 0.96 (a perfect model would have a concordance of 1.0). To evaluate the performance of the daily rainfall quantity simulation models, four correctness levels (“excellent”, “good”, “fair”, and “poor”) were defined based on the absolute difference between observed and simulated daily rainfall amounts. Cheng et al. (2010) found that, across the four selected river basins shown in Fig. 1, the percentage of excellent and good daily rainfall simulations ranged from 62% to 84%. Further information on the development of extreme-weather-event simulation models, including approaches for selecting regression methods and predictors, can be found in Cheng et al. (2004, 2007a, c, 2008a, 2010).
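Model concordance, as reported above, can be computed by comparing all pairs of days with different observed outcomes and counting how often the day with the higher outcome also received the higher predicted score, with ties counted as one half. The sketch below is a minimal pure-Python version under that definition; the function name is hypothetical, and `score` is assumed to be, for example, the modeled probability of rainfall occurrence.

```python
def concordance(score, outcome):
    """Concordance (c statistic) for binary or ordered outcomes.

    Over all pairs of days whose observed outcomes differ, count the pair as
    concordant if the day with the higher outcome has the higher score, and
    as tied (worth one half) if the scores are equal."""
    concordant = tied = pairs = 0
    n = len(score)
    for i in range(n):
        for j in range(i + 1, n):
            if outcome[i] == outcome[j]:
                continue  # pairs with equal outcomes carry no information
            pairs += 1
            hi, lo = (i, j) if outcome[i] > outcome[j] else (j, i)
            if score[hi] > score[lo]:
                concordant += 1
            elif score[hi] == score[lo]:
                tied += 1
    return (concordant + 0.5 * tied) / pairs
```

A value of 0.5 corresponds to no discrimination and 1.0 to perfect ranking, which is the scale on which the 0.82 to 0.96 concordances above are read.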

3.2 Regression-based downscaling

Approaches for projecting changes in the frequency and intensity of future extreme weather events require future hourly station-scale values of the standard meteorological variables used in synoptic weather typing and simulation modeling. These variables include surface and upper-air temperature, dew point temperature, west–east and south–north winds, sea-level air pressure, and total cloud cover. To derive future hourly station-scale climate data from GCM-scale simulations, Cheng et al. (2008c) developed a regression-based downscaling method consisting of two steps: (1) spatially downscaling daily GCM simulations to the selected weather stations in south–central Canada and (2) temporally downscaling the daily scenarios to hourly time steps. As with the extreme-weather-event simulation models, a number of linear and nonlinear regression methods, listed in Table 2, were used to develop the downscaling “transfer functions”. Again, different regression methods were employed for different meteorological variables, since a given regression method is suitable only for data of a certain type with a specific distribution.
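The spatial step of this method can be sketched as follows, assuming inverse-distance weighting (IDW) to bring GCM grid-point values to a station and a single-predictor linear transfer function fitted against station observations. Both choices are assumptions for illustration; the studies used several linear/nonlinear regression methods (Table 2), and the temporal step to hourly values is omitted here. All function names are hypothetical.

```python
import math


def idw(station, grid_points, power=2.0):
    """Inverse-distance-weighted value of GCM grid points at a station.
    grid_points: list of ((lat, lon), value). Using distance in degrees is a
    simplification; a great-circle distance would be more accurate."""
    num = den = 0.0
    for (lat, lon), value in grid_points:
        d = math.hypot(lat - station[0], lon - station[1])
        if d == 0.0:
            return value  # station coincides with a grid point
        w = d ** -power
        num += w * value
        den += w
    return num / den


def fit_transfer(gcm_at_station, observed):
    """Least-squares transfer function: observed ~ a + b * gcm_at_station."""
    n = len(observed)
    mx = sum(gcm_at_station) / n
    my = sum(observed) / n
    sxx = sum((x - mx) ** 2 for x in gcm_at_station)
    sxy = sum((x - mx) * (y - my) for x, y in zip(gcm_at_station, observed))
    b = sxy / sxx
    return my - b * mx, b


def downscale(daily_grids, station, coeffs):
    """Apply the fitted transfer function to each day's GCM grid values."""
    a, b = coeffs
    return [a + b * idw(station, grid) for grid in daily_grids]
```

The transfer function would be fitted on the GCM historical run and observations over a common period, then applied unchanged to the future scenario days.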

As described by Cheng et al. (2008c), the regression-based downscaling transfer functions performed very well in deriving daily and hourly station-scale climate information for all weather variables. For example, most of the daily downscaling transfer functions have model R²s greater than 0.9 for surface air temperature, sea-level air pressure, upper-air temperature, and upper-air winds; the corresponding model R²s for daily surface winds are generally greater than 0.8. Among the weather elements, the hourly downscaling transfer functions for surface air temperature, dew point temperature, and sea-level air pressure have the highest model R²s (>0.95), whereas those for the south–north wind component are the weakest (model R²s ranging from 0.69 to 0.92, with half of them >0.89). For total cloud cover, the hourly downscaling transfer functions developed using cumulative logit regression have concordances ranging from 0.78 to 0.87, with over 75% >0.8. For more detailed information on the development and performance of the downscaling transfer functions, refer to Cheng et al. (2008c).

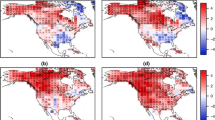

3.3 Future projections

Once future hourly climate data had been downscaled, the synoptic weather typing and regression techniques were used to project changes in the frequency and intensity of future extreme weather events and their impacts. To do so, future daily weather types were first projected by applying the synoptic weather typing method to the downscaled future hourly climate data (Cheng et al. 2007b, c, 2008b, 2011a). To remove GCM biases, the future downscaled climate data were standardized using the mean and standard deviation of the downscaled GCM historical runs (1961–2000). As described by Cheng et al. (2008b, 2011a), the synoptic weather typing approach is appropriate for changing climate conditions because it can assign projected days whose conditions lie above a threshold beyond the range of historical observations to more extreme weather types. Using projections of daily synoptic weather types under a changing climate, the approach can then project changes in the frequency and intensity of future extreme weather events and their impacts. As described in Table 2, two independent methods were used in each study to project the possible impacts of climate change on extreme weather events, in order to reduce uncertainties for decision-making. The first method was based on changes in the frequency of future extreme weather types relative to the historical weather types; the frequency and intensity of future daily extreme weather events were assumed to be directly proportional to the change in frequency of the relevant future weather types. The second method applied the within-weather-type simulation models to downscaled future climate data to project changes in the frequency and intensity of future daily extreme weather events. Cheng et al. (2007b, c, 2008b, 2011a, b) provide more detailed information on these methodologies.
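The bias-removal step and the first (frequency-based) projection method can be illustrated with two small functions. This is a sketch under assumptions: the names are hypothetical, the sample standard deviation is assumed (the text does not specify which estimator was used), and in practice the weather-type day counts would come from classifying the standardized future data.

```python
import statistics


def standardize(future, historical_run):
    """Express downscaled future values as anomalies relative to the
    downscaled GCM historical run (1961-2000), removing the model's own
    mean and variance from the comparison."""
    mu = statistics.mean(historical_run)
    sd = statistics.stdev(historical_run)  # sample SD (assumed)
    return [(v - mu) / sd for v in future]


def proportional_projection(hist_events_per_year, hist_type_days, future_type_days):
    """Method 1: scale the historical event frequency by the change in the
    frequency of the relevant weather type(s).
    Returns (projected events per year, percent change)."""
    ratio = future_type_days / hist_type_days
    return hist_events_per_year * ratio, 100.0 * (ratio - 1.0)
```

For example, if a freezing-rain-related weather type occurred on 20 days per year historically and is projected on 30 days per year, the proportional assumption scales the event frequency by 1.5, a +50% change.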

3.4 Model validation

All of the steps and principal methods described above, namely synoptic weather typing, extreme-weather-event simulation modeling, and regression-based downscaling, were validated against independent data to ensure that the models performed well and were not over-fitted (i.e., that their performance could be reproduced with another dataset). To achieve this, as indicated in Table 2, two validation methods were employed: 1) randomly selecting one-fourth to one-third of the total years as an independent dataset and 2) applying a “leave-one-year-out” cross-validation scheme. In both methods, the validation data were independent of the data used to develop the synoptic weather typing, the extreme-weather-event simulation models, and the downscaling transfer functions. The first method was used to verify synoptic weather types and to validate within-weather-type simulation models in the heavy rainfall, freezing rain, air quality, and human health studies. The second method was used to validate impact-related physical models, such as the streamflow simulation models, and the downscaling transfer functions.
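The two validation schemes can be sketched as follows, using a single-predictor least-squares model as a hypothetical stand-in for the studies' transfer functions and simulation models. `leave_one_year_out` refits the model once per held-out year and scores the pooled out-of-sample predictions with R²; `random_year_split` draws a random fraction of years as an independent validation set. All names are hypothetical.

```python
import random


def ols(x, y):
    """Simple least squares y = a + b*x; returns (a, b)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    return my - b * mx, b


def r_squared(obs, pred):
    """Coefficient of determination of predictions against observations."""
    mean = sum(obs) / len(obs)
    ss_res = sum((o - p) ** 2 for o, p in zip(obs, pred))
    ss_tot = sum((o - mean) ** 2 for o in obs)
    return 1.0 - ss_res / ss_tot


def leave_one_year_out(records):
    """records: list of (year, x, y). Each year is predicted by a model fitted
    on all other years; returns the R2 of the pooled validation predictions."""
    obs, pred = [], []
    for held_out in sorted({yr for yr, _, _ in records}):
        train = [(x, y) for yr, x, y in records if yr != held_out]
        a, b = ols([x for x, _ in train], [y for _, y in train])
        for yr, x, y in records:
            if yr == held_out:
                obs.append(y)
                pred.append(a + b * x)
    return r_squared(obs, pred)


def random_year_split(years, fraction=0.25, seed=0):
    """Hold out a random fraction of years (1/4 to 1/3 in the studies).
    Returns (development years, validation years)."""
    years = sorted(set(years))
    n_val = max(1, round(fraction * len(years)))
    val = set(random.Random(seed).sample(years, n_val))
    return [y for y in years if y not in val], sorted(val)
```

Holding out whole years, rather than individual days, keeps serially correlated days from the same season out of both samples at once.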

In these studies, the validation approaches were applied to the synoptic weather typing and simulation models to verify historical weather types and extreme weather events (Table 2). Verification against historical observations of the outcome variables or impacts (e.g., mortality rates) simulated by the models showed very good agreement, indicating that the methods used in the projects were appropriate for developing extreme-weather-event simulation models (Cheng et al. 2007a, c, 2008a, 2010, 2011a, b). In addition, as described in Table 2, the performance of the downscaling transfer functions was evaluated in the following respects:

1) Validating downscaling transfer functions using a leave-one-year-out cross-validation scheme,
2) Analyzing model R²s of downscaling transfer functions for both development and validation datasets,
3) Comparing data distributions and diurnal/seasonal variations of downscaled GCM historical runs with observations over a comparative time period of 1961–2000,
4) Examining extreme characteristics of the weather variables derived from downscaled GCM historical runs with observations, and
5) Comparing against stakeholder’s expert judgement for consistency.
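Aspects 3) and 4) above can be partly illustrated by comparing empirical quantiles of the observations and of the downscaled GCM historical run over the same period; the upper quantiles speak to how well extremes are reproduced. The sketch below is an assumed, minimal version of such a check; the function name and the choice of 5% quantile steps are hypothetical.

```python
import statistics


def quantile_differences(observed, downscaled, n=20):
    """Differences (downscaled - observed) at the n-1 cut points
    (5%, 10%, ..., 95% for n=20). Values near zero at the upper cut points
    suggest the downscaled run reproduces the observed extremes."""
    q_obs = statistics.quantiles(observed, n=n)
    q_ds = statistics.quantiles(downscaled, n=n)
    return [d - o for o, d in zip(q_obs, q_ds)]
```

A systematic offset at all cut points indicates a mean bias, whereas differences that grow toward the top cut points indicate that the downscaled run under- or over-represents extremes.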

The results showed that the regression-based downscaling methods performed very well in deriving future hourly station-scale climate information for all weather variables. For example, as shown in Table 3, the hourly downscaling transfer functions for surface air temperature, dew point temperature, and sea-level air pressure have model R²s >0.95 for both the development and validation datasets (Cheng et al. 2008b, c, 2011a).

4 Results

In summary, the studies on the various impacts of current and future climate extremes, whether for extreme precipitation, ice storms, heat, human mortality, flooding, or other impacts, consisted of three steps: (1) historical analysis of climate extremes and their links (transfer functions) to impacts, (2) statistical downscaling of climate model simulations to the scales and variables of significance to decision-makers, and (3) future projections under a changing climate. The major results of each of these steps are summarized in this section, with more detailed information available from Cheng et al. (2004, 2007a, b, c, 2008a, b, c, 2010, 2011a, b). The results of the first two steps (historical analysis and statistical downscaling, including the development and validation of simulation models and downscaling transfer functions) are outlined in Table 3. The table indicates that the simulation models and statistical downscaling transfer functions perform well when verified against historical extreme weather events and their impacts. The collaborating decision-makers involved in each of these studies later confirmed that these conclusions were consistent with the judgement of their experts. For example, the streamflow simulation models showed significant correlations between observed daily streamflow volumes and model simulations, with model R²s of 0.61–0.62, 0.68–0.71, 0.71–0.74, and 0.95 for the Humber, Grand, Upper Thames, and Rideau River Basins, respectively. Over half of the 80 air pollution simulation models across the four selected cities (Montreal, Ottawa, Toronto, Windsor) in south–central Canada showed model R² ≥ 0.6. Results from the freezing rain-related weather type study indicated that the simulation models captured 75% and 100% of daily freezing rain events lasting ≥1 and ≥6 h/day, respectively, over the entire study period.
In all of the studies, as indicated in Table 3, the simulation models were suitable for verifying or reproducing extreme weather events and reliable enough to guide decision-making. The verification results derived from the model development and validation datasets were very similar, and both demonstrated significant skill in discriminating and predicting the quantities of daily/hourly meteorological variables and the occurrence of daily extreme weather events.

Table 4 summarizes the projected changes in the frequency and intensity of future extreme weather events late this century derived from these studies. The values of future change, shown with plus and minus signs in the rightmost columns of Table 4, represent percentage increases and decreases, respectively, relative to the historical baselines listed as historical means in Table 4. Future changes of +100% and +200% indicate that the frequency or intensity of an extreme weather event is projected to double and triple, respectively, late this century compared with historical or current conditions. As an illustration, across the four selected river basins in south–central Canada, the number of days with rainfall ≥25 mm is projected to increase by about 10%–35% and 35%–50% over the periods 2046–2065 and 2081–2100, respectively (relative to the “warm season” baseline of April–November, 1961–2002). For seasonal rainfall totals, the corresponding increases are projected to be about 15%–20% and 20%–30% by 2046–2065 and 2081–2100, respectively. The return values of annual maximum one-day streamflow volumes for the nine return periods studied (2, 5, 10, 15, 20, 25, 30, 50, and 100 years) are projected to increase by 15%–35%, 25%–50%, and 30%–80% for the three 50-year periods 2001–2050, 2026–2075, and 2051–2100, respectively. Similarly, across the four selected cities in south–central Canada (Montreal, Ottawa, Toronto, Windsor), heat-related mortality is projected to more than double by the middle of this century and to more than triple by late this century, relative to historical conditions over the period 1954–2000. For air pollution-related mortality driven by climate and weather conditions (i.e., atmospheric circulation patterns and heat or cold), the corresponding increases are projected to be 20%–30% and 30%–45% by the middle and late parts of this century, assuming that future air pollutant emissions remain at the same level as at the end of the 20th century. By the middle of this century, daily ice storm or freezing rain events during the three coldest months (December–February) are projected to increase by 70%–100%, 50%–70%, and 35%–45% in northern, eastern (including Montreal in Quebec), and southern Ontario (as shown in Fig. 1), respectively. The corresponding increases by the latter part of this century (2081–2100) are projected to be even greater: 115%–155%, 80%–110%, and 35%–55%, respectively, for northern, eastern, and southern Ontario.
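The percentage changes in Table 4 follow ordinary relative-change arithmetic. Writing H for the historical baseline mean and F for the projected future value:

$$\Delta = 100\% \times \frac{F - H}{H} \quad\Longleftrightarrow\quad F = H\left(1 + \frac{\Delta}{100\%}\right)$$

so Δ = +100% gives F = 2H and Δ = +200% gives F = 3H. For instance, a hypothetical baseline of 10 days per year with rainfall ≥25 mm combined with a +35% change would give F = 10 × 1.35 = 13.5 days per year.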

5 Conclusions and recommendation

The overarching purposes of these projects were to assess possible changes in the frequency and intensity of future extreme weather events late this century and to provide guidance to decision-makers in developing and selecting appropriate adaptation actions. Automated synoptic weather typing and a number of linear/nonlinear regression analyses were applied, together with downscaled future GCM climate data, to project future weather types and changes in the frequency and intensity of future extreme weather events and their impacts. In these projects, a formal validation and verification process for model results was built into all of the steps, including synoptic weather typing, historical extreme weather event simulation modeling, statistical downscaling, and projections of future extreme weather events and their impacts. The verification results, based on historical observations of the outcome variables simulated by the models, showed very good agreement with observations and consistency with decision-makers’ expert judgement for all extreme weather events studied. It is therefore proposed that a combination of synoptic weather typing, extreme weather event simulation modeling, and regression-based downscaling can be useful in projecting changes in the frequency and intensity of future extreme weather events and their impacts at a local or station scale.

Modeled results from these projects indicated that the frequency and intensity of future daily extreme weather events have the potential to increase significantly late in this century under a changing climate. The implications of these increases need to be taken into consideration and integrated into longer-term planning, policies, and programs. For example, engineering infrastructure design standards and their embedded climatic design values need to consider climate extremes in both the current climate and the future climates expected over the lifespans of the structures. Health policies and responses need to consider sensitivities to today’s climate conditions and also factor future climate change, along with future social and demographic changes, into current preventive actions. Air quality regulations, policies, and programs also need to be informed by the links between air quality and current and future climate conditions. Similarly, disaster prevention and risk reduction actions need to be guided by current climate conditions and by future projections of existing and emerging climate hazards in order to manage current and changing risks. Regional water resource and flood managers need to plan for current and changing climate risks to water resources (i.e., low- and high-streamflow conditions) and to assess infrastructure designs, resilience, and the flood reduction services supplied by surrounding landscapes and ecosystems as adaptation options for managing changing risks. Many adaptation strategies and policies will need to be developed to deal with the implications of changing climate extremes, and they will affect all sectors.
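The point about design lifespans can be made concrete with the standard relation for the chance that a design threshold is exceeded at least once during a service life of n years: P = 1 - (1 - p_1)(1 - p_2)...(1 - p_n), where p_t is the annual exceedance probability in year t and years are assumed independent. For a constant p this reduces to P = 1 - (1 - p)^n. The following is a minimal sketch under those assumptions; the function name, the 50-year lifespan, and the rising probability path are hypothetical, not projections from these studies.

```python
import math


def lifetime_exceedance_probability(annual_probs):
    """Probability of at least one exceedance of a design threshold over a service life.

    annual_probs: exceedance probability for each year of the structure's life,
    with years assumed independent. A constant list reproduces the stationary
    formula P = 1 - (1 - p)**n; a rising list mimics an increasing hazard.
    """
    no_exceedance = math.prod(1.0 - p for p in annual_probs)
    return 1.0 - no_exceedance


lifespan = 50                      # years, hypothetical service life
stationary = [1 / 100] * lifespan  # 100-year design event, unchanged climate
# Hypothetical non-stationary case: annual probability rises linearly from 1% to 2%.
rising = [0.01 + 0.01 * t / (lifespan - 1) for t in range(lifespan)]

p_stat = lifetime_exceedance_probability(stationary)
p_rise = lifetime_exceedance_probability(rising)
print(f"stationary: {p_stat:.3f}, rising: {p_rise:.3f}")
```

For a 100-year design event (p = 0.01) over 50 years, the stationary case gives P ≈ 0.395; any upward drift in p over the service life raises that figure, which is one way to see why design values based only on the historical record may understate lifetime risk.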
As the IPCC (2007a) recently pointed out, “More extensive adaptation than is currently occurring is required to reduce vulnerability to future climate change.” One of the key components of developing effective adaptation options and plans to reduce meteorological and hydrological risks from the changing climate is the implementation of effective and meaningful early warning systems that result in stakeholder actions. Studies that link existing climate extremes to impacts and decisions, and that inform policies and programs on changing and future extremes, are essential for designing better risk reduction and early warning systems. Decision-makers in south–central Canada are requesting defensible and relevant scientific guidance on future climate extremes in order to improve the resilience of infrastructure that is being constructed today and must withstand the climate extremes expected over its lifespan (decades). Decision-makers also need relevant scientific guidance to develop the adaptive capacity to reduce multiple risks and to realize some of the potential opportunities that may result from changing climate extremes.

References

Allison PD (2000) Logistic regression using the SAS system: theory and application. SAS Institute Inc, Cary, 288 pp

Cheng CS, Kalkstein LS (1997) Determination of climatological seasons for the East Coast of the U.S. using an air mass-based classification. Clim Res 8:107–116

Cheng CS, Auld H, Li G, Klaassen J, Tugwood B, Li Q (2004) An automated synoptic typing procedure to predict freezing rain: an application to Ottawa, Ontario, Canada. Weather Forecast 19:751–768

Cheng CS, Campbell M, Li Q, Li G, Auld H, Day N, Pengelly D, Gingrich S, Yap D (2007a) A synoptic climatological approach to assess climatic impact on air quality in south-central Canada. Part I: historical analysis. Water Air Soil Pollut 182:131–148

Cheng CS, Campbell M, Li Q, Li G, Auld H, Day N, Pengelly D, Gingrich S, Yap D (2007b) A synoptic climatological approach to assess climatic impact on air quality in south-central Canada. Part II: future estimates. Water Air Soil Pollut 182:117–130

Cheng CS, Auld H, Li G, Klaassen J, Li Q (2007c) Possible impacts of climate change on freezing rain in south-central Canada using downscaled future climate scenarios. Nat Hazards Earth Syst Sci 7:71–87

Cheng CS, Campbell M, Li Q, Li G, Auld H, Day N, Pengelly D, Gingrich S, Klaassen J, MacIver D, Comer N, Mao Y, Thompson W, Lin H (2008a) Differential and combined impacts of extreme temperatures and air pollution on human mortality in south–central Canada. Part I: historical analysis. Air Qual Atmos Health 1:209–222

Cheng CS, Campbell M, Li Q, Li G, Auld H, Day N, Pengelly D, Gingrich S, Klaassen J, MacIver D, Comer N, Mao Y, Thompson W, Lin H (2008b) Differential and combined impacts of extreme temperatures and air pollution on human mortality in south–central Canada. Part II: future estimates. Air Qual Atmos Health 1:223–235

Cheng CS, Li G, Li Q, Auld H (2008c) Statistical downscaling of hourly and daily climate scenarios for various meteorological variables in south-central Canada. Theor Appl Climatol 91:129–147

Cheng CS, Li G, Li Q, Auld H (2010) A synoptic weather typing approach to simulate daily rainfall and extremes in Ontario, Canada: potential for climate change projections. J Appl Meteor Climatol 49:845–866

Cheng CS, Li G, Li Q, Auld H (2011a) A synoptic weather typing approach to project future daily rainfall and extremes at local scale in Ontario, Canada. J Climate 24:3667–3685

Cheng CS, Li G, Auld H (2011b) Possible impacts of climate change on freezing rain using downscaled future climate conditions: updated for eastern Canada. Atmosphere-Ocean 49:8–21

Cubasch U, Waszkewitz J, Hegerl G, Perlwitz J (1995) Regional climate changes as simulated in time-slice experiments. Clim Chang 31:273–304

Environment Canada (2002) Heavy Rainfall Check List (June 2002), http://wto105.tor.ec.gc.ca/web/ (an internal website, accessed July 2006)

Fowler HJ, Blenkinsop S, Tebaldi C (2007) Review: linking climate change modelling to impacts studies: recent advances in downscaling techniques for hydrological modeling. Int J Climatol 27:1547–1578

Glickman TS (2000) Glossary of meteorology, 2nd edn. American Meteorological Society, Boston, 855 pp

IPCC (2007a) Summary for policymakers. In: Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds) Climate change 2007: the physical science basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, United Kingdom and New York, NY, USA

IPCC (2007b) Summary for policymakers. In: Parry ML, Canziani OF, Palutikof JP, van der Linden PJ, Hanson CE (eds) Climate change 2007: impacts, adaptation and vulnerability. Contribution of Working Group II to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, UK, pp 7–22

Kharin VV, Zwiers FW (2005) Estimating extremes in transient climate change simulations. J Climate 18:1156–1173

Last J, Chiotti Q (2001) Climate change and health. ISUMA 2(4):62–69

Medina-Ramón M, Schwartz J (2007) Temperature, temperature extremes, and mortality: a study of acclimatisation and effect modification in 50 US cities. Occup Environ Med. doi:10.1136/oem.2007.033175

Meehl GA, Tebaldi C (2004) More intense, more frequent, and longer lasting heat waves in the 21st century. Science 305:994–997

PCMDI (2006) IPCC model output. http://www-pcmdi.llnl.gov/ipcc/about_ipcc.php (accessed July 2006)

Riedel D (2004) Chapter 9: human health and well-being. In: Lemmen DS, Warren FJ (eds) Climate change impacts and adaptation: a Canadian perspective. Government of Canada, pp 153–169

SAS Institute Inc (2006) The AUTOREG procedure: Regression with autocorrelation errors. http://support.sas.com/91doc/docMainpage.jsp (accessed October 2006)

Wilby RL, Wigley TML (1997) Downscaling general circulation model output: a review of methods and limitations. Prog Phys Geogr 21:530–548

Wilby RL, Dawson CW, Barrow EM (2002) SDSM—A decision support tool for the assessment of regional climate change impacts. Environ Model Softw 17:147–159

Yarnal B (1993) Synoptic climatology in environmental analysis. Belhaven Press, London, 195 pp

Zwiers FW, Kharin VV (1998) Changes in the extremes of the climate simulated by CCC GCM2 under CO2 doubling. J Climate 11:2200–2222

Acknowledgments

The project on extreme temperature, air quality, and human mortality was funded through the Health Policy Research Program, Health Canada (6795-15-2001/4400011). The project on heavy rainfall-related flooding risks was funded by the Government of Canada’s Climate Change Impacts and Adaptation Program (CCIAP), administered by Natural Resources Canada (A901). The authors also acknowledge in-kind and financial support for all projects provided by Environment Canada. The views expressed herein are solely those of the authors and do not necessarily represent the views or official policy of Health Canada, Natural Resources Canada, or Environment Canada.

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License (https://creativecommons.org/licenses/by-nc/2.0), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Cheng, C.S., Auld, H., Li, Q. et al. Possible impacts of climate change on extreme weather events at local scale in south–central Canada. Climatic Change 112, 963–979 (2012). https://doi.org/10.1007/s10584-011-0252-0
