Abstract

The aims of the present meta-analysis were to (1) examine long-term effects of universal secondary school-based interventions on a broad range of competencies and problems and (2) analyze which intervention components were related to stronger or weaker intervention effects at follow-up. Fifty-four studies of controlled evaluations (283 effect sizes) reporting on 52 unique interventions were included. Long-term intervention effects were significant but small; effect sizes ranged from .08 to .23 in the intrapersonal domain (i.e., subjective psychological functioning) and from .10 to .19 in the interpersonal domain (i.e., social functioning). Intervention components were generally related to effects on specific outcomes. Some components (e.g., group discussions) were even related to both stronger and weaker effects depending on the assessed outcome. Moreover, components associated with long-term effects differed from those associated with short-term effects. Our findings underscore the importance of carefully selecting components to foster long-term development on specific outcomes.

PROSPERO registration number: CRD42019137981.


Introduction

By stimulating students’ psychosocial functioning, schools can play an important role both in helping students develop competencies and in preventing problems. These competencies and problems lie in the intrapersonal domain (i.e., the ability to manage one’s own feelings, emotions, and attitudes towards the self) and the interpersonal domain (i.e., the ability to have positive relationships, understand social situations, and respond appropriately in social contexts) (Barber, 2005; Pellegrino & Hilton, 2012; Shek & Leung, 2016). To this end, many universal school-based interventions addressing both domains have been designed. Although these interventions aim to enhance students’ long-term development, little is known about their long-term effects. Knowledge about long-term intervention effects is pivotal, as initial intervention effects may be sustained, fade out, or increase over time (Gottfredson et al., 2015). Despite the importance of this information, most evaluation studies measure intervention effects only directly after the intervention has ended. The meta-analysis by Durlak and colleagues (2011) examined the effectiveness of school-based social-emotional interventions. Only 33 (15%) of its included studies collected data at least 6 months after the intervention ended. To increase our understanding of the long-term effects of universal school-based interventions, the current meta-analysis had two aims: (1) to evaluate the long-term effectiveness of universal school-based interventions in the intra- and interpersonal domains and (2) to identify which intervention components were associated with stronger or weaker long-term intervention effects.

Long-Term Effects of Universal School-Based Interventions

The few studies that did examine the long-term effects of universal school-based interventions suggest that these effects are modest and tend to fade out over time. For instance, Dray et al. (2017) conducted a systematic review of universal school-based resilience-focused interventions. They found that positive intervention effects on anxiety and depressive symptoms disappeared over time. Mackenzie and Williams (2018) also reported declining or absent long-term intervention effects in their review of universal school-based interventions promoting mental and emotional wellbeing in the UK. Sklad et al. (2012) reported the same in their meta-analysis of school-based social, emotional, and behavioral interventions. Taylor et al. (2017) conducted a meta-analysis of the long-term effects of Social Emotional Learning (SEL) interventions. They found positive long-term effects, but these were generally smaller than the short-term effects of the same interventions on SEL skills, attitudes, positive social behavior, conduct problems, and emotional distress (Durlak et al., 2011).

Follow-up intervention effects seem especially modest for adolescents. Both Dray et al. (2017) and Taylor et al. (2017) found fewer and weaker long-term intervention effects for adolescents than for children. This held for indicators of general wellbeing and for indicators of disadvantageous development, such as anxiety and psychological distress (e.g., for general wellbeing, Cohen’s d = 0.27 for 5- to 10-year-olds versus d = 0.12 for 11- to 13-year-olds; Taylor et al., 2017). Moreover, Taylor et al. (2017) showed that only a small proportion of the included interventions (11 of 82, or 13%) targeted adolescents (14–18 years old). Dray et al. (2017) included more interventions targeting adolescents (38 of 57, or 67%), but these studies focused only on problematic outcomes such as depression and anxiety. This lack of a broad focus on adolescent development is remarkable. Adolescence is an important developmental phase in which youth start to consolidate their own identity and encounter increasing opportunities for social interaction (Barber, 2005). To gain insight into the extent to which universal school-based interventions can cultivate adolescents’ long-term psychosocial development, the current meta-analysis focused specifically on interventions targeting adolescents. Hence, we included only secondary school-based interventions. Previous meta-analyses and systematic reviews instead included interventions targeting both children and adolescents (e.g., Dray et al., 2017; Taylor et al., 2017).

Furthermore, in contrast to previous meta-analyses, we distinguished between outcomes in the intrapersonal domain and outcomes in the interpersonal domain. These two domains are related but distinct. How people view themselves can affect the way they approach social interactions, and vice versa (Finkel & Vohs, 2006). However, whereas the intrapersonal domain reflects one’s subjective psychological functioning, the interpersonal domain reflects one’s social functioning (Dufner et al., 2019). We examined a broad range of outcomes regarding competencies and problems in both domains. Competencies reflect skills and capacities that may contribute to adolescents’ healthy psychosocial development. Problems are difficulties and vulnerabilities that can heighten adolescents’ risk of developing psychopathology (Van Orden et al., 2005). In addition, we tested the extent to which the duration of the follow-up period affected intervention effects. We hypothesized that school-based interventions produce small positive effects at follow-up. We further hypothesized that the duration of the follow-up period moderates these long-term effects, with a longer follow-up period relating to a decline in intervention effects (i.e., fade-out; Dray et al., 2017; Mackenzie & Williams, 2018; Sklad et al., 2012; Taylor et al., 2017).

Intervention Components and Follow-Up Effects

Besides examining the effectiveness of universal secondary school-based interventions at follow-up, the present meta-analysis aimed to extend previous research by examining which intervention components were related to stronger or weaker intervention effects at follow-up. Previous meta-analyses of school-based interventions examined few, if any, components. They analyzed how these related to intervention effects immediately after the intervention (e.g., Mertens et al., 2020; Sheridan et al., 2019). To increase our understanding of long-term intervention effects, we must unravel which intervention components are related to these effects. Therefore, we studied a broad range of components and how they relate to intervention effects at follow-up. Studying different types of components provides a detailed overview of which components merit further study when aiming to cultivate a specific outcome or domain. Based on this knowledge, interventions can be optimized by adding effective components and eliminating components related to weaker long-term effects (Sheridan et al., 2019). In addition, this knowledge will enable schools to select and implement interventions according to their use of evidence-based components (Nocentini et al., 2015). As schools have limited time and resources to invest in implementing interventions, this guidance is of great practical value.

We focused on three types of intervention components: content components, instructional components, and structural components. Content components represent specific skills that are taught to enhance positive outcomes, i.e., “what they learn”, such as emotion regulation and problem solving (Boustani et al., 2015). Instructional components represent techniques and information delivery methods applied by the facilitator of the intervention, i.e., “how they learn it”, such as modeling and multimedia use (Boustani et al., 2015). Structural components represent the way the intervention is organized, which may affect intervention effects, i.e., “how the intervention is set up”, such as parental involvement and the number of sessions (Lee et al., 2014).

All three types of components have been related to short-term intervention effects. Regarding content components, Gaffney et al. (2021) conducted a meta-analysis of anti-bullying interventions. They found that the component ‘teaching social-emotional skills’ was related to weaker effects on bullying and victimization. The meta-analysis of universal school-based interventions by Mertens et al. (2020) examined more specific components of teaching social-emotional skills. It found that focusing on emotion regulation, assertiveness, and/or cognitive coping was related to weaker effects, whereas focusing on insight building and problem solving was related to stronger effects. Concerning instructional components, an active learning approach (i.e., methods in which students interact with others and perform tasks) has consistently been related to stronger intervention effects. For instance, practicing the skills learned during the intervention has been associated with stronger effects in universal school-based interventions (Mertens et al., 2020). Modeling desired behaviors has been associated with stronger effects in family-school interventions (Sheridan et al., 2019). Regarding structural components, longer and more extensive school-based interventions generally seem to be associated with stronger intervention effects. Implementing more sessions and involving more people in the intervention (e.g., a whole-school approach, parental involvement) have been related to stronger effects on students’ mental health (Mertens et al., 2020; Sheridan et al., 2019), school climate (Mertens et al., 2020), and bullying (Gaffney et al., 2021; Mertens et al., 2020; Sheridan et al., 2019).

Although these meta-analyses provide a useful overview of intervention components related to short-term effects, little is known about components related to long-term intervention effects. It is important to study associations between components and short-term as well as long-term effects, as these associations may differ. For instance, a component unrelated to short-term effects may be related to long-term effects, thus representing a ‘sleeper effect’ in which intervention effects emerge or increase over time after the conclusion of the intervention (Bell et al., 2013; Van Aar et al., 2017). Therefore, the second aim of the current meta-analysis was to identify components related to stronger and weaker intervention effects at follow-up. Studying how components relate to both stronger and weaker effects enabled us to examine ‘what might work’ as well as ‘what might not work’. Given that previous research has not yet examined relations between components and long-term effects of universal school-based interventions, this aim was exploratory.

Method

The current meta-analysis was part of a larger project examining associations between intervention components and intervention effects (PROSPERO registration number: CRD42019137981). Therefore, our meta-analysis applied the same method as the meta-analysis of Mertens et al. (2020).

Inclusion and Exclusion Criteria

We aimed to include studies that evaluated universal secondary school-based interventions that intended to stimulate competencies and/or prevent the development of problems in students’ intra- and interpersonal domains. Universal secondary school-based interventions were defined as interventions implemented during regular school hours that targeted all students (Mychailyszyn et al., 2012; Peters et al., 2009). The intrapersonal domain was defined as the ability to manage one’s own feelings, emotions, and attitudes about the self (Barber, 2005). The interpersonal domain was defined as the ability to build and maintain positive relationships with others; to understand social situations, roles, and norms; and to respond appropriately in social contexts (Pellegrino & Hilton, 2012; Shek & Leung, 2016). Students’ abilities in both domains can facilitate personal functioning represented by competencies (e.g., resilience, self-regulation, social competence) or obstruct personal functioning represented by experienced problems (e.g., internalizing behavior, aggression, bullying; Dufner et al., 2019; Finkel & Vohs, 2006). Definitions of the two general domains and the subdomains are provided in the online Appendix A.

Inclusion criteria were: (1) the intervention was implemented in a regular school setting (i.e., schools providing special needs education were excluded); (2) the intervention took place during regular school hours in a group setting; (3) the intervention aimed to improve competencies and/or prevent problems in the intra- and/or interpersonal domain (i.e., we excluded interventions primarily aiming to improve students’ physical health, such as prevention of substance use, nutrition, pregnancy, or STDs, or to prevent a specific disorder, such as depression); (4) the intervention was universal, that is, it targeted all students at the school; (5) the participants were in middle school or high school (Grades 6–12); (6) the study included a control group; (7) the study included a quantitative baseline and follow-up measurement of the intrapersonal domain and/or interpersonal domain (or their subdomains); (8) sufficient information on the baseline and follow-up measurements was reported, or obtained after contacting the author, to calculate effect sizes at follow-up corrected for baseline differences; (9) the study was written in English; and (10) the study was published as an article, book, or book chapter. We did not include unpublished studies. Their inclusion does not reduce the possible impact of publication bias and can even be counterproductive due to selection bias (Ferguson & Brannick, 2012).

Literature Search

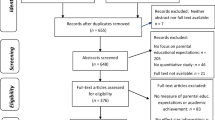

We searched four databases (i.e., PsycINFO, PubMed, ERIC, and CENTRAL) to cover the psychological, medical, and educational literature, without restricting the time period. We searched for randomized controlled trials and quasi-randomized controlled trials, as both study designs compare an intervention condition with a control condition (although the extent of random allocation to conditions differs between the two designs). We used search terms covering the school context (e.g., school, class), interventions (e.g., prevention, intervention), adolescents (e.g., adolescent, youth), and intra- and interpersonal outcomes (e.g., self-esteem, social competence). Because this search yields an extremely large number of studies, we added restrictions to it. These restrictions aimed to exclude interventions that targeted populations (e.g., preschool, clinical) or outcomes (e.g., substance use, lifestyle) other than those targeted in this study (see online Appendix B for the complete search string). This search (January 2021) resulted in 7,028 studies in PsycINFO, 3,643 studies in PubMed, 1,766 studies in ERIC, and 431 studies in CENTRAL. After duplicates were removed, 10,847 unique studies remained. Additionally, we searched the reference lists of included studies and of relevant reviews and meta-analyses, which yielded an additional 14 studies. As a first step, the first author screened all identified studies based on title and abstract, which led to the exclusion of 10,350 studies (95%). The first author then screened the full texts of the remaining 497 studies, resulting in the exclusion of another 444 studies (89%). See Fig. 1 for the flow diagram.

To assess the reliability of the two screening phases, one of the co-authors independently screened a random selection of studies. To this end, 11% (1,175 studies) of all identified studies and 10% (48 studies) of the studies remaining after the first screening were checked for eligibility for inclusion in the present meta-analysis. Reliability was corrected for prevalence bias because excluded studies far outnumbered included studies (Hallgren, 2012). Reliability for both screening phases was good (title/abstract screening: 96% agreement, prevalence-corrected κ = 0.96; full-text screening: 98% agreement, prevalence-corrected κ = 0.96). Disagreements regarding inclusion were resolved through discussion between the two researchers.
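The reported screening reliability rests on agreement statistics. The sketch below uses made-up counts to show why a prevalence correction matters when nearly all records are excluded: raw agreement is high while Cohen's kappa is deflated. The function name `agreement_stats` is hypothetical. Treating the correction as the prevalence-adjusted kappa PABAK (2·p_o − 1) is our assumption. The article cites Hallgren (2012) but does not give the formula it applied.

```python
"""Cohen's kappa and a prevalence-adjusted variant for two screeners.

Assumption: the prevalence correction is taken to be PABAK (2 * p_o - 1);
the article does not state which formula it used.
"""


def agreement_stats(both_include, only_a, only_b, both_exclude):
    """Return observed agreement, Cohen's kappa and PABAK from a 2x2 table.

    The counts are screening decisions of rater A and rater B:
    both include, only A includes, only B includes, both exclude.
    """
    n = both_include + only_a + only_b + both_exclude
    p_observed = (both_include + both_exclude) / n

    # Marginal inclusion rates of each rater
    a_include = (both_include + only_a) / n
    b_include = (both_include + only_b) / n
    # Agreement expected by chance given those marginals
    p_chance = a_include * b_include + (1 - a_include) * (1 - b_include)

    kappa = (p_observed - p_chance) / (1 - p_chance)
    # PABAK ignores the marginals, so it is not deflated when almost
    # every record is excluded (high prevalence of "exclude")
    pabak = 2 * p_observed - 1
    return p_observed, kappa, pabak


# Hypothetical counts for 1,000 screened records (not data from this study)
p_o, kappa, pabak = agreement_stats(both_include=10, only_a=10, only_b=10, both_exclude=970)
print(f"agreement={p_o:.2f}, kappa={kappa:.2f}, PABAK={pabak:.2f}")
# agreement=0.98, kappa=0.49, PABAK=0.96
```

With 98% raw agreement, plain kappa drops below 0.5 only because the "exclude" category dominates. This is the situation a prevalence correction is meant to handle.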

Data Extraction

Studies were coded for information concerning the study (e.g., year of publication, country where the study was conducted), sample (e.g., age, gender distribution), design and method (e.g., randomization, attrition analyses), intervention (e.g., intervention provider, aim of intervention), effect size data (e.g., outcome category), and intervention components (e.g., problem solving, practice, parental involvement). The intervention components were primarily based on the meta-analysis by Boustani and colleagues (2015), who, in turn, based their components on the PracticeWise Clinical Coding System (PracticeWise, 2009). An overview of all components and their definitions is presented in the online Appendix C. To code the components, we also retrieved sources cited in the study and other freely available materials, such as descriptions from the developer or websites (Boustani et al., 2015; Kaminski et al., 2008). When insufficient data were reported to calculate the effect size, we contacted the first author of the study. If this author had not responded after a reminder, we contacted the second or last author and, if necessary, sent a reminder. If the required data could not be obtained in this way, the study was excluded from the meta-analysis (see Fig. 1 for the flow diagram). Key characteristics of the included interventions are provided in the online Appendix D.

Of the included studies, 30 studies (28%) were coded independently by a second coder to assess reliability. Inter-rater reliability was moderate to excellent. For continuous variables, the average intraclass correlation was 0.97 (SD = 0.05), ranging from 0.88 to 1.00. For categorical variables, the average Cohen’s kappa was 0.82 (SD = 0.11), ranging from 0.60 to 1.00. An exception was the coding of the component ‘Insight building’, which had a somewhat lower reliability (Cohen’s kappa of 0.52). Disagreements between the two coders were discussed and resolved by consensus.

Calculation and Analyses of Effect Sizes

Effect sizes reflected standardized mean differences between the intervention and control conditions (Cohen’s d), following the procedures of Lipsey and Wilson (2001). Effect sizes were calculated for the first follow-up measurement after the post-measurement. The exception was when the post-measurement was conducted more than 6 months after the end of the intervention. In those cases, the post-measurement was treated as the follow-up (Gottfredson et al., 2015). Effect sizes were adjusted for baseline differences and corrected for small-sample bias using Hedges’ (1983) correction. The effect sizes were screened for outliers. Outliers were winsorized by replacing them with the value two standard deviations below or above the mean (Lipsey & Wilson, 2001).
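The steps above can be sketched in Python as follows. The article does not specify these details, so the sketch assumes the following:

- The baseline adjustment subtracts the pretest d from the follow-up d.
- The pooled standard deviation is used.
- Outliers are defined relative to the sample mean and sample standard deviation of all effect sizes.

All function names are hypothetical.

```python
"""Baseline-adjusted standardized mean difference with Hedges' correction,
followed by winsorizing at mean +/- 2 SD.

Assumptions (not stated in the article): the baseline adjustment subtracts
the pretest d from the follow-up d, and outliers are defined relative to the
sample mean and sample SD of all effect sizes.
"""
import math
import statistics


def cohens_d(mean_int, mean_ctl, sd_int, sd_ctl, n_int, n_ctl):
    """Standardized mean difference using the pooled standard deviation."""
    pooled_var = ((n_int - 1) * sd_int**2 + (n_ctl - 1) * sd_ctl**2) / (n_int + n_ctl - 2)
    return (mean_int - mean_ctl) / math.sqrt(pooled_var)


def hedges_j(n_int, n_ctl):
    """Approximate small-sample correction factor J."""
    return 1 - 3 / (4 * (n_int + n_ctl) - 9)


def adjusted_effect(pre, follow_up, n_int, n_ctl, higher_is_better=True):
    """pre and follow_up are (mean_int, mean_ctl, sd_int, sd_ctl) tuples.

    Returns a corrected d in which positive values favour the intervention.
    """
    d_pre = cohens_d(*pre, n_int, n_ctl)
    d_fu = cohens_d(*follow_up, n_int, n_ctl)
    d = (d_fu - d_pre) * hedges_j(n_int, n_ctl)
    # For problem outcomes (e.g., aggression) lower scores are favourable
    return d if higher_is_better else -d


def winsorize(effects, n_sd=2.0):
    """Clamp each effect size to the interval mean +/- n_sd standard deviations."""
    m = statistics.mean(effects)
    s = statistics.stdev(effects)
    lo, hi = m - n_sd * s, m + n_sd * s
    return [min(max(e, lo), hi) for e in effects]


# Hypothetical data: equal groups at baseline, a 1-point gain (SD = 2) at follow-up
d = adjusted_effect(pre=(10.0, 10.0, 2.0, 2.0), follow_up=(11.0, 10.0, 2.0, 2.0), n_int=50, n_ctl=50)
print(f"{d:.3f}")  # 0.496
```

Subtracting the pretest d is only one way to adjust for baseline differences. Using change scores or covariate-adjusted means would give somewhat different values.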

Publication Bias

Because studies with nonsignificant or negative results are less likely to be published than studies with significant or positive results, we assessed publication bias using a funnel plot. In the absence of bias, effect sizes are expected to be distributed symmetrically around the true effect size. They form an inverted funnel, with more precise effect sizes at the top and less precise effect sizes at the bottom. An asymmetrical distribution of effect sizes can indicate publication bias (Light & Pillemer, 1984). The symmetry of a funnel plot can be tested with Egger’s regression test (Egger et al., 1997). If Egger’s regression test indicates an asymmetrical funnel plot, the trim-and-fill analysis can adjust the overall effect for possible publication bias (Duval & Tweedie, 2000a, 2000b). In this procedure, the studies that fall outside the symmetric part of the funnel plot are identified and trimmed. Subsequently, the true center of the trimmed funnel plot is estimated. The trimmed studies are then restored, together with imputed missing counterparts mirrored around that center. The mean of this filled funnel plot provides an effect size adjusted for possible publication bias. However, these tests assume independence of effect sizes, which does not hold in multilevel meta-analyses. We took dependency among effect sizes into account by including the variance of the effect sizes as a moderator in Egger’s regression test. This approach was not possible in the trim-and-fill analysis, as no moderator can be added. To obtain some indication of potentially missing effect sizes, we used the trim-and-fill method as a sensitivity analysis.

Analyses

For each measure reported in the studies that fell within the intra- or the interpersonal domain, we calculated an effect size. Furthermore, we examined the time span between the end of the intervention and the follow-up measurement as a moderator of intervention effects. We took the clustering of effect sizes within a study into account by using multilevel meta-analytical models with three levels. The first level models sampling variance around each effect size. The second level models variance between effect sizes within studies. The third level models variance between studies (Assink & Wibbelink, 2016; Van den Noortgate et al., 2013).
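The following equations give one common way to write the three-level model described above. The notation is ours, not the article's. Here $d_{ij}$ is effect size $i$ in intervention cluster $j$, and $v_{ij}$ is its known sampling variance (level 1). The variance $\sigma^2_{\text{within}}$ captures variation between effect sizes within a study (level 2), and $\sigma^2_{\text{between}}$ captures variation between studies (level 3):

```latex
\begin{aligned}
d_{ij} &= \beta_0 + u_{j} + w_{ij} + e_{ij}, \\
e_{ij} &\sim \mathcal{N}(0,\, v_{ij}), \qquad
w_{ij} \sim \mathcal{N}(0,\, \sigma^2_{\text{within}}), \qquad
u_{j} \sim \mathcal{N}(0,\, \sigma^2_{\text{between}}).
\end{aligned}
```

In a moderation analysis, $\beta_0$ is replaced by $\beta_0 + \beta_1 x_{ij}$, where, for example, $x_{ij} = 1$ if a component is present and $0$ otherwise. The same form applies to a continuous moderator such as the length of the follow-up period.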

Given that we were interested in the interventions’ effectiveness compared to the control condition, we used interventions as the unit of analysis. Hence, if a publication examined two interventions, both interventions were included and analyzed separately. If multiple publications examined the same intervention, though evaluated in different samples, the effect sizes of these publications were analyzed within the same intervention cluster. If multiple publications examined the same intervention within the same sample, we coded the most comprehensive publication. The less comprehensive publication was checked for additional information.

The multilevel analyses were conducted in R using the metafor package (Viechtbauer, 2010). We analyzed the overall effectiveness at follow-up of universal school-based interventions on students’ intra- and interpersonal domains in separate models. We also examined methodological rigor (Lipsey & Wilson, 2001), based on the Cochrane Risk of Bias 2.0 tool for Cluster Randomized Trials (Higgins et al., 2016). This assessed the extent to which the effect sizes at follow-up reflected intervention effects rather than methodological influences or biases. We analyzed randomization (random vs. quasi-random assignment), completeness of outcome data (percentage of drop-out), and type of comparison group (passive: no intervention/waitlist vs. active: care as usual/other intervention) as covariates. Characteristics of methodological rigor that were significantly related to the overall effect sizes at follow-up were included as covariates in further analyses.

Associations between components and intervention effects at follow-up were examined through moderation analyses. Moderation analyses were conducted only when both levels of the moderator (i.e., component present or absent) contained at least three effect sizes (Crocetti, 2016). Given the low power of some of our analyses, we report significant effects (p < 0.05) as well as effects that trend towards significance (p < 0.10). In addition, these trend-level findings contribute to the hypothesis-generating character of the meta-analysis. They also help illustrate the generalizability of moderation effects to other outcomes.
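The two decision rules in this paragraph can be expressed as small helper functions. This is a hypothetical sketch: the thresholds come from the text, but the function names and labels are ours.

```python
"""Decision rules for the component (moderator) analyses.

Hypothetical helpers: the thresholds (at least 3 effect sizes per moderator
level; p < .05 significant, p < .10 trend) come from the text, the code does not.
"""


def can_test_moderator(component_present, min_per_level=3):
    """True if both levels (present / absent) contain enough effect sizes.

    component_present holds one boolean per effect size.
    """
    n_present = sum(1 for flag in component_present if flag)
    n_absent = len(component_present) - n_present
    return n_present >= min_per_level and n_absent >= min_per_level


def classify_p(p):
    """Label a moderator p-value using the reporting thresholds in the text."""
    if p < 0.05:
        return "significant"
    if p < 0.10:
        return "trend"
    return "nonsignificant"


# Only two effect sizes with the component present, so no moderation test
print(can_test_moderator([True, True, False, False, False]))  # False
print(classify_p(0.07))  # trend
```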

Results

Descriptive Characteristics

In total, 54 studies were included that reported on 52 unique interventions, from which we extracted 283 effect sizes. Of these intervention effects, 128 were in the intrapersonal domain and 155 were in the interpersonal domain. Six effect sizes from two studies (Bonell et al., 2018; Kaveh et al., 2014) were extreme outliers (Cohen’s d = 1.38–3.69) and believed to be unrepresentatively high. We therefore winsorized these six effect sizes.

The included studies were published between 1988 and 2021 (median year of publication: 2015). They were conducted in Europe (k = 26), the USA (k = 13), Australia (k = 6), Asia (k = 5), Africa (k = 2), and Canada (k = 2). Studies mostly allocated participants randomly to the conditions (k = 37) and slightly more often used a passive control group (k = 32) than an active control group (k = 22). On average, the follow-up measurement occurred 27.70 weeks (SD = 20.80) after the end of the intervention. The follow-up periods ranged from 2 to 109 weeks with 4 studies (7.4%) having a follow-up period of less than 3 months and 42 studies (78%) having a follow-up period of 24 weeks or longer.

In total, 51,017 adolescents participated in the included studies, with an average age of 13.65 years (SD = 1.43) at the start of the intervention. Roughly half of the participants were boys (46%). Of the studies that reported on participants’ ethnic backgrounds (k = 31), 20 (37%) included participants mainly from an ethnic majority background, 8 (15%) included participants mostly from an ethnic minority background, and 3 (6%) included participants from a mix of majority and minority ethnic backgrounds. On average, the drop-out rate in the studies was 17% (SD = 14.20). The interventions consisted of 11 sessions on average (SD = 7.32) and spanned an average of 20.85 weeks (SD = 28.36). Teachers conducted about half of the interventions (k = 24), and professionals conducted the other half (k = 30). Additionally, peers were involved in the implementation of 3 interventions.

Overall Effect Sizes at Follow-Up

In the intrapersonal domain, interventions had a small positive effect at follow-up (d = 0.19; see Table 1). Intervention effects on self-esteem and general wellbeing were slightly stronger than those on self-regulation. The effect on internalizing behavior was significant but negligible (d = 0.08). No significant intervention effect was found on resilience. In the interpersonal domain, interventions likewise had a small positive effect at follow-up (d = 0.16). The strongest effect was found on aggression. Intervention effects on sexual health and social competence were significant and comparable in magnitude. The intervention effect on bullying was also comparable in magnitude, though not significant.

The duration of the follow-up period did not affect the magnitude of intervention effects, except for effects on general wellbeing and social competence. A longer time span between the intervention and the follow-up was related to stronger intervention effects on general wellbeing [B = 0.25, p = 0.009, 95% CI (0.07; 0.43)]. In contrast, a longer time span between the intervention and the follow-up was related to weaker intervention effects on social competence [B = −0.09, p = 0.006, 95% CI (−0.15; −0.03)].

Analyses examining methodological rigor showed that effect sizes at follow-up concerning the intrapersonal domain, the interpersonal domain, and the subdomains did not depend on methodological considerations, i.e., the randomization of participants, rate of drop-out, or choice of comparison group.

Publication Bias

The distribution of effect sizes in the intrapersonal domain appeared to be asymmetrical (Egger’s regression test z = −4.02, p < 0.001; see Fig. 2a), indicating a risk of publication bias. The trim-and-fill analysis indicated that 35 effect sizes were missing in the upper right of the funnel plot. This means that large-scale studies with larger effect sizes were missing. The adjusted effect size was 0.28 [95% CI (0.23; 0.34)]. This sensitivity analysis could not correct for dependency among effect sizes. It indicated that the original effect sizes provided a conservative estimate of the overall intervention effect. We therefore based further analyses on the original effect sizes. Regarding the interpersonal domain, the distribution of effect sizes appeared to be symmetrical (Egger’s regression test z = 0.59, p = 0.558; see Fig. 2b), indicating a low risk of publication bias.

Intervention Components Related to Intervention Effects at Follow-Up

Descriptive Analyses

Content and instructional components that are commonly used in interventions to stimulate competencies and prevent problems in the intrapersonal domain (see Fig. 3) are generally the same components as those used in interventions addressing the interpersonal domain (see Fig. 4). Commonly used components are teaching students emotion regulation and social skills (content components), practicing skills during the intervention, facilitating discussions, and providing didactic instructions (instructional components). Interventions differ, though, in their commonly used structural components. Interventions addressing the intrapersonal domain often include an individual aspect, whereas interventions addressing the interpersonal domain often involve parents and/or the whole school in the intervention.

Intrapersonal Domain

None of the components was significantly associated with intervention effects at follow-up on the intrapersonal domain in general (see Table 2). Regarding the subdomains, insight building and multimedia use were related to stronger intervention effects on self-regulation, whereas teaching students problem solving was related to weaker effects on this outcome. Having group discussions was associated with stronger effects on internalizing behavior and involving the whole school in the intervention was related to stronger effects on students’ general wellbeing.

There were also some relations between components and intervention effects showing a trend towards significance (p < 0.10). Increasing students’ self-efficacy was related to stronger intervention effects on resilience. Practicing skills during the intervention and having group discussions were related to stronger effects on self-regulation. Last, multimedia use was related to stronger effects on internalizing behavior.

Interpersonal Domain

Teaching students to regulate their emotions was significantly related to stronger intervention effects at follow-up on the interpersonal domain in general (see Table 3). Concerning the subdomains, increasing students’ self-efficacy, facilitating insight building, modeling desired behaviors, and setting goals were related to stronger intervention effects on sexual health.

Again, some associations between components and intervention effects showed a trend towards significance (p < 0.10). The inclusion of an individual part in the intervention was related to weaker intervention effects on the interpersonal domain in general. Regarding the subdomains, teaching assertiveness was related to stronger intervention effects on sexual health. In contrast, teaching assertiveness and having group discussions were related to weaker effects on social competence. Teaching students to regulate their emotions was related to stronger intervention effects on bullying, whereas involving parents in the intervention was related to weaker effects on this outcome.

Discussion

Although universal secondary school-based interventions aim to stimulate students’ long-term development, intervention effects generally seem to fade out over time (e.g., Dray et al., 2017; Taylor et al., 2017). More insight is needed into which intervention effects last in the long term and which components may generate or maintain these long-term effects. Our meta-analysis covered a broad range of competencies and problems, distinguishing between outcomes in the intrapersonal domain and outcomes in the interpersonal domain. We explicitly examined interventions targeting adolescents. Given that long-term intervention effects seem especially small for this group, it is important to gain knowledge about interventions’ long-lasting impact on adolescents’ psychosocial development and about which intervention components are associated with stronger follow-up effects for adolescents specifically.

Long-term Effects of Universal School-Based Interventions

The intervention effects at follow-up were positive, albeit small, on multiple competencies and problems. More specifically, in the intrapersonal domain we found small positive effects on self-esteem, self-regulation, general wellbeing, and internalizing problems, but no significant effects on resilience. In the interpersonal domain, we found small positive effects on sexual health, social competence, and aggression, but no significant effects on bullying. The effects were generally unaffected by the duration of the follow-up period; only the effects on general wellbeing and social competence seemed to depend on it. Regarding general wellbeing, intervention effects became stronger over time. Consistent with this, the effect on general wellbeing at post-measurement reported in the meta-analysis of Mertens et al. (2020) (d = 0.13) was smaller than the effect at follow-up found in the current meta-analysis (d = 0.22). This increase suggests that it may take some time before students can benefit optimally from intervention content related to their general wellbeing. Students may need time to practice skills learned during the intervention and gain confidence in using these skills (Van Aar et al., 2017). Over time, they may apply these skills to deal effectively with situations encountered after the intervention, strengthening their sense of agency and improving their general wellbeing (Bell et al., 2013).

Concerning social competence, intervention effects became weaker over time. Although minimal, this decrease is also apparent when comparing the effect at post-measurement reported by Mertens et al. (2020) (d = 0.16) with the effect at follow-up found in the current meta-analysis (d = 0.11). Newly learned social skills in particular may need to be practiced regularly in order to be maintained. Based on these findings, practitioners may consider implementing booster sessions after the intervention to sustain effects on social competence over time (Van Aar et al., 2017). However, more research is needed to inform the development of effective booster sessions before they are implemented, as booster sessions are not inherently related to stronger intervention effects over time (Lochman et al., 2014; Neil & Christensen, 2009).

Intervention Components and Follow-Up Effects

Components seemed to be related to effect sizes on specific outcomes rather than across several outcomes within a domain. In fact, our results suggest that some components may be associated with stronger effects on one outcome and weaker effects on another outcome. For example, having group discussions during the intervention was related to stronger effects at follow-up on self-regulation and internalizing behaviors. However, it was also related to weaker effects at follow-up on social competence. Moreover, components related to stronger intervention effects at follow-up were not necessarily frequently implemented. For instance, setting goals was related to stronger intervention effects, though this component was implemented in only 15% and 19% of the interventions targeting the intra- and interpersonal domains, respectively. These findings emphasize the importance of carefully selecting components that match the competency or problem the intervention addresses and that have an evidence base for their effectiveness.

Content components seemed particularly important for stimulating follow-up effects on competencies and problems in the interpersonal domain. Teaching students social-emotional skills, such as emotion regulation, self-efficacy, and insight building, was related to stronger intervention effects at follow-up. Interestingly, in other studies, a focus on social-emotional skills was related to weaker effects immediately after the intervention. In the meta-analysis of Gaffney et al. (2021), which examined anti-bullying interventions, teaching students social-emotional skills was related to weaker effects immediately after the intervention on both bullying and victimization. Similarly, the meta-analysis of Mertens et al. (2020), which examined universal school-based interventions, found that teaching students to regulate their emotions, a specific social-emotional skill, was related to weaker effects at post-measurement on self-esteem and bullying. These contrasting results may be explained within the framework of the Healthy Context Paradox (Salmivalli, 2018), which states that individuals who experience problems in a positive context may develop more problems because they feel they cannot benefit from that context while others can. Conceivably, during interventions that teach social-emotional skills, students might become more aware of the discrepancy between their daily life experiences and societal norms. Due to this awareness, they might report more problems immediately after the intervention. At the same time, the intervention can provide students with the skills needed to address and overcome these problems, resulting in more competence and fewer problems over time. Under this process, negligible or even negative intervention effects would be expected at post-measurement, and positive effects at follow-up. This delayed benefit can be regarded as a sleeper effect of these components, in which the intervention’s content may require additional time to settle in (Bell et al., 2013).

Instructional components appeared particularly relevant for fostering follow-up effects on competencies and problems in the intrapersonal domain. In line with previous meta-analyses examining short-term effects (e.g., Mertens et al., 2020; Sheridan et al., 2019), active learning approaches, such as practicing skills, modeling desired behaviors, setting goals, and using multimedia, were related to stronger intervention effects at follow-up. Group discussions, another active learning approach, are an ambiguous component: they were related to stronger effects on self-regulation and internalizing behavior, but to weaker effects on social competence. Implementing successful group discussions requires well-developed social and communication skills from facilitators (Wong et al., 2019). Perhaps a certain level of social competence is also required for students to participate successfully in discussions in the school context. Hence, active learning approaches appear to be a promising intervention method, though it may be important to match the learning approach to the competencies and strengths of the participating students and facilitators.

Structural components were related to only a few follow-up intervention effects, suggesting that this type of component may be less relevant for establishing long-term intervention effects. In other words, to establish long-term effects, what students learn (i.e., content components) and how they learn it (i.e., instructional components) may matter more than how the intervention is set up (i.e., structural components). Although a whole-school approach was related to stronger effects on students’ general wellbeing, components indicative of more extensive interventions were not consistently related to stronger intervention effects. Interventions that involved parents were related to weaker effects on bullying, and those that included an individual part were related to weaker effects on the interpersonal domain in general. These findings suggest that investing in longer and more extensive school-based interventions may produce stronger effects in the short term (e.g., Gaffney et al., 2021; Mertens et al., 2020; Sheridan et al., 2019), but such investments may be less worthwhile in the long run.

Limitations

The current meta-analysis has several limitations. First, the associations between components and intervention effects are based on correlational data and should therefore be regarded as hypothesis-generating results, indicating which components are particularly interesting to examine further. To stimulate the formulation of hypotheses, we reported significant findings as well as findings with a trend towards significance. Relatedly, we analyzed components that were embedded within intervention programs that included multiple components. As a result, we cannot draw conclusions about the effectiveness of specific components on their own. Second, to code the components, we depended on the completeness of the intervention descriptions. Although we searched for additional information regarding the interventions in appendices, other articles, and websites, if a component was not mentioned in those descriptions, we coded it as absent. Hence, some components may have been falsely coded as absent. Conversely, components described as part of the intervention may not actually have been implemented, even though they were coded as present. Third, due to the low implementation frequency of some components, some analyses regarding the components had relatively low power or could not be conducted. To avoid prematurely marking components as irrelevant due to low power, we interpreted both significant associations between components and long-term effects and associations that showed a trend towards significance. This approach optimizes the relevance of our results for future research (i.e., hypothesis generation).

Conclusion

Taken together, our meta-analysis has two important implications. First, universal secondary school-based interventions show small positive long-term effects on multiple outcomes. Although small, these effects show that interventions can have a lasting impact and have the potential to cultivate important aspects of adolescents’ psychosocial development. At the same time, the small effect sizes indicate that there is room for improvement. To increase our understanding of long-term intervention effects, intervention studies should conduct follow-up assessments to examine long-term effectiveness and the relationships between long-term effects and the intervention’s components. Unfortunately, conducting a follow-up is not yet standard practice in intervention research.

Second, components seem to be related to intervention effects on specific outcomes, sometimes in opposite directions. This raises the question of whether the heterogeneity in problems across the total student population can be addressed by implementing a single intervention with a broad aim. Some components appear related to stronger long-term intervention effects on one outcome but to weaker effects on another. In addition, some components appear associated with stronger short-term effects but weaker long-term effects (or vice versa) on specific outcomes (Mertens et al., 2020). Gaining insight into these conflicting effects is critical. Thus, future research should examine the extent to which components relate to effects on different outcomes and how components can be combined into one intervention that addresses a broad range of outcomes. Such interventions should include components related to stronger effects in both the short term and the long term.

The effectiveness of an intervention is determined not only by the implemented components but also by numerous other factors (e.g., the training, enthusiasm, and characteristics of facilitators; the fit between the intervention content and the class and school climate; and the resources and time available to invest in implementation). However, a better understanding of long-term intervention effects and the intervention components associated with such effects can facilitate the development of optimized interventions that exhibit increased long-term effectiveness.

Data Availability

Not applicable.

References

* Study included in the meta-analysis

*Allen, J. P., Narr, R. K., Nagel, A. G., Costello, M. A., & Guskin, K. (2021). The Connection Project: Changing the peer environment to improve outcomes for marginalized adolescents. Development and Psychopathology, 33(2), 647–657. https://doi.org/10.1017/S0954579419001731

Assink, M., & Wibbelink, C. J. M. (2016). Fitting three-level meta-analytic models in R: A step-by-step tutorial. The Quantitative Methods for Psychology, 12, 154–174.

*Baker, C. K., Naai, R., Mitchell, J., & Trecker, C. (2014). Utilizing a train-the-trainer model for sexual violence prevention: Findings from a pilot study with high school students of Asian and Pacific Islander descent in Hawai’i. Asian American Journal of Psychology, 5, 106–115. https://doi.org/10.1037/a0034670

Barber, B. K. (2005). Positive interpersonal and intrapersonal functioning: An assessment of measures among adolescents. In K. A. Moore & L. H. Lippman (Eds.), What do children need to flourish: Conceptualizing and measuring indicators of positive development (pp. 147–161). Springer Science and Business Media.

Bell, E. C., Marcus, D. K., & Goodlad, J. K. (2013). Are the parts as good as the whole? A meta-analysis of component treatment studies. Journal of Consulting and Clinical Psychology, 81(4), 722–736. https://doi.org/10.1037/a0033004

*Bonell, C., Allen, E., Warren, E., McGowan, J., Bevilacqua, L., Jamal, F., Legood, R., Wiggins, M., Opondo, C., Mathiot, A., Sturgess, J., Fletcher, A., Sadique, Z., Elbourne, D., Christie, D., Bond, L., Scott, S., & Viner, R. M. (2018). Effects of the Learning Together intervention on bullying and aggression in English secondary schools (INCLUSIVE): A cluster randomised controlled trial. Lancet, 392, 2452–2464. https://doi.org/10.1016/S0140-6736(18)31782-3

Boustani, M. M., Frazier, S. T., Ecker, K. D., Bechor, M., Dinizulu, S. M., Hedemann, E. R., Ogle, R. R., & Pasalich, D. S. (2015). Common elements of adolescent prevention programs: Minimizing burden while maximizing reach. Administration and Policy in Mental Health, 42, 209–219. https://doi.org/10.1007/s10488-014-0541-9

*Bull, H. D., Schultze, M., & Scheithauer, H. (2009). School-based prevention of bullying and relational aggression: The fairplayer.manual. European Journal of Developmental Science, 3, 312–317. https://doi.org/10.1002/yd.20007

*Burckhardt, R., Manicavasagar, V., Batterham, P. J., Hadzi-Pavlovic, D., & Shand, F. (2017). Acceptance and commitment therapy universal prevention program for adolescents: A feasibility study. Child and Adolescent Psychiatry and Mental Health, 11, 27–36. https://doi.org/10.1186/s13034-017-0164-5

*Burckhardt, R., Manicavasagar, V., Shaw, F., Fogarty, A., Batterham, P. J., Dobinson, K., & Karpin, I. (2018). Preventing mental health symptoms in adolescents using dialectical behaviour therapy skills group: A feasibility study. International Journal of Adolescence and Youth, 23, 70–85. https://doi.org/10.1080/02673843.2017.1292927

*Calear, A. L., Christensen, H., Mackinnon, A., Griffiths, K. M., & O’Kearney, R. (2009). The YouthMood Project: A cluster randomized controlled trial of an online cognitive behavioral program with adolescents. Journal of Consulting and Clinical Psychology, 77, 1021–1032. https://doi.org/10.1037/a0017391

*Calvete, E., Orue, I., Fernandez-Gonzalez, L., & Prieto-Fidalgo, A. (2019). Effects of an incremental theory of personality intervention on the reciprocity between bullying and cyberbullying victimization and perpetration in adolescents. PLoS ONE, 14(11), e0224755. https://doi.org/10.1371/journal.pone.0224755

*Caprara, G. V., Kanacri, B. P. L., Gerbino, M., Zuffianò, A., Alessandri, G., Vecchio, G., Caprara, E., Pastorelli, C., & Bridgall, B. (2014). Positive effects of promoting prosocial behavior in early adolescence: Evidence from a school-based intervention. International Journal of Behavioral Development, 38, 386–396. https://doi.org/10.1177/0165025414531464

*Carissoli, C., & Villani, D. (2019). Can videogames be used to promote emotional intelligence in teenagers? Results from EmotivaMente, a school program. Games for Health Journal, 8(6), 407–413. https://doi.org/10.1089/g4h.2018.0148

*Castillo, R., Salguero, J. M., Fernández-Berrocal, P., & Balluerka, N. (2013). Effects of an emotional intelligence intervention on aggression and empathy among adolescents. Journal of Adolescence, 36(5), 883–892. https://doi.org/10.1016/j.adolescence.2013.07.001

*Challen, A. R., Machin, S. J., & Gillham, J. E. (2014). The UK Resilience Programme: A school-based universal nonrandomized pragmatic controlled trial. Journal of Consulting and Clinical Psychology, 82, 75–89. https://doi.org/10.1037/a0034854

*Coelho, V. A., Marchante, M., & Sousa, V. (2015). “Positive attitude”: A multilevel model analysis of the effectiveness of a social and emotional learning program for Portuguese middle school students. Journal of Adolescence, 43, 29–38. https://doi.org/10.1016/j.adolescence.2015.05.009

*Corder, K., Werneck, A. O., Jong, S. T., Hoare, E., Brown, H. E., Foubister, C., Wilkinson, P. O., & van Sluijs, E. M. (2020). Pathways to increasing adolescent physical activity and wellbeing: A mediation analysis of intervention components designed using a participatory approach. International Journal of Environmental Research and Public Health, 17(2), 390. https://doi.org/10.3390/ijerph17020390

Crocetti, E. (2016). Systematic reviews with meta-analysis: Why, when, and how? Emerging Adulthood, 4, 3–18. https://doi.org/10.1177/2167696815617076

*Cross, D., Shaw, T., Hadwen, K., Cardoso, P., Slee, P., Roberts, C., Thomas, L., & Barnes, A. (2016). Longitudinal impact of the Cyber Friendly Schools program on adolescents’ cyberbullying behavior. Aggressive Behavior, 42, 166–180. https://doi.org/10.1002/ab.21609

*De Graaf, I., De Haas, S., Zaagsma, M., & Wijsen, C. (2016). Effects of Rock and Water: An intervention to prevent sexual aggression. Journal of Sexual Aggression, 22, 4–19. https://doi.org/10.1080/13552600.2015.1023375

*De Villiers, M., & Van den Berg, H. (2012). The implementation and evaluation of a resiliency programme for children. South African Journal of Psychology, 42(1), 93–102. https://doi.org/10.1177/008124631204200110

Dray, J., Bowman, J., Campbell, E., Freund, M., Wolfenden, L., Hodder, R. K., McElwaine, K., Tremain, D., Bartlem, K., Bailey, J., Small, T., Palazzi, K., Oldmeadow, C., & Wiggers, J. (2017). Systematic review of universal resilience-focused interventions targeting child and adolescent mental health in the school setting. Journal of the American Academy of Child and Adolescent Psychiatry, 56, 813–824. https://doi.org/10.1016/j.jaac.2017.07.780

Dufner, M., Gebauer, J. E., Sedikides, C., & Denissen, J. J. A. (2019). Self-enhancement and psychological adjustment: A meta-analytic review. Personality and Social Psychology Review, 23, 48–72. https://doi.org/10.1177/1088868318756467

Durlak, J. A., Weissberg, R. P., Dymnicki, A. B., Taylor, R. D., & Schellinger, K. B. (2011). The impact of enhancing students’ social and emotional learning: A meta-analysis of school-based universal interventions. Child Development, 82, 405–432. https://doi.org/10.1111/j.1467-8624.2010.01564.x

Duval, S., & Tweedie, R. (2000a). A nonparametric ‘trim and fill’ method of accounting for publication bias in meta-analysis. Journal of the American Statistical Association, 95, 89–99.

Duval, S., & Tweedie, R. (2000b). Trim and Fill: A simple funnel-plot based method of testing and adjusting for publication bias in meta-analysis. Biometrics, 56, 455–460. https://doi.org/10.1111/j.0006-341X.2000.00455.x

*Edwards, K. M., Banyard, V. L., Sessarego, S. N., Waterman, E. A., Mitchell, K. J., & Chang, H. (2019). Evaluation of a bystander-focused interpersonal violence prevention program with high school students. Prevention Science, 20(4), 488–498. https://doi.org/10.1007/s11121-019-01000-w

Egger, M., Smith, G. D., Schneider, M., & Minder, C. (1997). Bias in meta-analysis detected by a simple, graphical test. BMJ, 315, 629–634. https://doi.org/10.1136/bmj.315.7109.629

Ferguson, C. J., & Brannick, M. T. (2012). Publication bias in psychological science: Prevalence, methods for identifying and controlling, and implications for the use of meta-analyses. Psychological Methods, 17, 120–128. https://doi.org/10.1037/a0024445

Finkel, E. J., & Vohs, K. D. (2006). Introduction. Self and Relationships. In K. D. Vohs & E. J. Finkel (Eds.), Self and relationships: Connecting intrapersonal and interpersonal processes (pp. 1–9). The Guilford Press.

*Foshee, V. A., Bauman, K. E., Ennett, S. T., Suchindran, C., Benefield, T., & Linder, G. F. (2005). Assessing the effects of the dating violence prevention program “Safe dates” using random coefficient regression modeling. Prevention Science, 6, 245–258. https://doi.org/10.1007/s11121-005-0007-0

Gaffney, H., Ttofi, M. M., & Farrington, D. P. (2021). What works in anti-bullying programs? Analysis of effective intervention components. Journal of School Psychology, 85, 37–56. https://doi.org/10.1016/j.jsp.2020.12.002

*Ghobari Bonab, B., Khodayarifard, M., Geshnigani, R. H., Khoei, B., Nosrati, F., Song, M. J., & Enright, R. D. (2021). Effectiveness of forgiveness education with adolescents in reducing anger and ethnic prejudice in Iran. Journal of Educational Psychology, 113(4), 846–860. https://doi.org/10.1037/edu0000622

*Gollwitzer, M., Banse, R., Eisenbach, K., & Naumann, A. (2007). Effectiveness of the Vienna social competence training on explicit and implicit aggression: Evidence from an Aggressiveness-IAT. European Journal of Psychological Assessment, 23, 150–156. https://doi.org/10.1027/1015-5759.23.3.150

Gottfredson, D. C., Cook, T. D., Gardner, F. E. M., Gorman-Smith, D., Howe, G. W., Sandler, I. N., & Zafft, K. M. (2015). Standards of evidence for efficacy, effectiveness, and scale-up research in prevention science: Next generation. Prevention Science, 16, 893–926. https://doi.org/10.1007/s11121-015-0555-x

Hallgren, K. A. (2012). Computing inter-rater reliability for observational data: An overview and tutorial. Tutorials in Quantitative Methods for Psychology, 8(1), 23–34.

Hedges, L. (1983). A random effects model for effect sizes. Psychological Bulletin, 93, 388–395.

Higgins, J. P. T., Sterne, J. A. C., Savović, J., Page, M. J., Hróbjartsson, A., Boutron, I., Reeves, B., & Eldridge, S. (2016). A revised tool for assessing risk of bias in randomized trials. Cochrane Database of Systematic Reviews, 10(Suppl 1), 29–31. https://doi.org/10.1002/14651858.CD201601

*Horn, A. B., Pössel, P., & Hautzinger, M. (2010). Promoting adaptive emotion regulation and coping in adolescence: A school-based programme. Journal of Health Psychology, 16, 258–273. https://doi.org/10.1177/1359105310372814

*Jaycox, L. H., McCaffrey, D., Eiseman, B., Aronoff, J., Shelley, G. A., Collins, R. L., & Marshall, G. N. (2006). Impact of a school-based dating violence prevention program among Latino teens: Randomized controlled effectiveness trial. Journal of Adolescent Health, 39, 694–704. https://doi.org/10.1016/j.jadohealth.2006.05.002

*Jewkes, R., Gevers, A., Chirwa, E., Mahlangu, P., Shamu, S., Shai, N., & Lombard, C. (2019). RCT evaluation of Skhokho: A holistic school intervention to prevent gender-based violence among South African Grade 8s. PLoS ONE, 14(10), e0223562. https://doi.org/10.1371/journal.pone.0223562

Kaminski, J. W., Valle, L. A., Filene, J. H., & Boyle, C. L. (2008). A meta-analytic review of components associated with parent training program effectiveness. Journal of Abnormal Child Psychology, 36, 567–589.

*Kaveh, M. H., Hesampour, M., Ghahremani, L., & Tabatabaee, H. R. (2014). The effects of a peer-led training program on female students’ self-esteem in public secondary schools in Shiraz. Journal of Advances in Medical Education & Professionalism, 2(2), 63–70.

*Kiselica, M. S., Baker, S. B., Thomas, R. N., & Reedy, S. (1994). Effects of stress inoculation training on anxiety, stress, and academic performance among adolescents. Journal of Counseling Psychology, 41, 335–342. https://doi.org/10.1037/0022-0167.41.3.335

*Kozina, A. (2018a). Can the “my friends” anxiety prevention programme also be used to prevent aggression? A six-month follow-up in a school. School Mental Health, 10, 500–509. https://doi.org/10.1007/s12310-018-9272-5

*Kozina, A. (2018b). School-based prevention of anxiety using the “My FRIENDS” emotional resilience program: Six-month follow-up. International Journal of Psychology. https://doi.org/10.1002/ijop.12553

*Lamke, L. K., Lujan, B. M., & Showalter, J. M. (1988). The case for modifying adolescents’ cognitive self-statements. Adolescence, 23, 967–974.

Lee, B. R., Ebesutani, C., Kolivoski, K. M., Becker, K. D., Lindsey, M. A., Brandt, N. E., Cammack, N., Strieder, F., Chorpita, B. F., & Barth, R. P. (2014). Program and practice elements for placement prevention: A review of interventions and their effectiveness in promoting home-based care. American Journal of Orthopsychiatry, 84, 244–256. https://doi.org/10.1037/h0099811

Light, R., & Pillemer, D. (1984). Summing up: The science of reviewing research. Harvard University Press.

Lipsey, M. W., & Wilson, D. B. (2001). Practical meta-analysis. Sage Publications.

Lochman, J. E., Baden, R. E., Boxmeyer, C., Powell, N. P., Qu, L., Salekin, K. L., & Windle, M. (2014). Does a booster intervention augment the preventive effects of an abbreviated version of the Coping Power Program for aggressive children? Journal of Abnormal Child Psychology, 42(3), 367–381. https://doi.org/10.1007/s10802-013-9727-y

Mackenzie, K., & Williams, C. (2018). Universal, school-based interventions to promote mental and emotional well-being: What is being done in the UK and does it work? A systematic review. BMJ Open, 8, e022560. https://doi.org/10.1136/bmjopen-2018-022560

Mertens, E. C. A., Deković, M., Leijten, P., Van Londen, M., & Reitz, E. (2020). Components of school-based interventions stimulating students’ intrapersonal and interpersonal domains: A meta-analysis. Clinical Child and Family Psychology Review, 23, 605–631. https://doi.org/10.1007/s10567-020-00328

*Meyer, G., Roberto, A. J., Boster, F. J., & Roberto, H. L. (2004). Assessing the get real about violence® curriculum: process and outcome evaluation results and implications. Health Communication, 16(4), 451–474. https://doi.org/10.1207/s15327027hc1604_4

*Miller, S., Williams, J., Cutbush, S., Gibbs, D., Clinton-Sherrod, M., & Jones, S. (2015). Evaluation of the Start Strong initiative: Preventing teen dating violence and promoting healthy relationships among middle school students. Journal of Adolescent Health, 56, S14–S19. https://doi.org/10.1016/j.jadohealth.2014.11.003

*Muck, C., Schiller, E., Zimmermann, M., & Kartner, J. (2018). Preventing sexual violence in adolescence: Comparison of a scientist-practitioner program and a practitioner program using a cluster-randomized design. Journal of Interpersonal Violence. https://doi.org/10.1177/0886260518755488

Mychailyszyn, M. P., Brodman, D. M., Read, K. L., & Kendall, P. C. (2012). Cognitive-behavioral school-based interventions for anxious and depressed youth: A meta-analysis of outcomes. Clinical Psychology: Science and Practice, 19, 129–153. https://doi.org/10.1111/j.1468-2850.2012.01279.x

Neil, A. L., & Christensen, H. (2009). Efficacy and effectiveness of school-based prevention and early intervention programs for anxiety. Clinical Psychology Review, 29(3), 208–215. https://doi.org/10.1016/j.cpr.2009.01.002

Nocentini, A., Zambuto, V., & Menesini, E. (2015). Anti-bullying programs and Information and Communication Technologies (ICTs): A systematic review. Aggression and Violent Behavior, 23, 52–60. https://doi.org/10.1016/j.avb.2015.05.012

Onrust, S. A., Otten, R., Lammers, J., & Smit, F. (2016). School-based programmes to reduce and prevent substance use in different age groups: What works for whom? Systematic review and meta-regression analysis. Clinical Psychology Review, 44, 45–59. https://doi.org/10.1016/j.cpr.2015.11.002

*Orpinas, P., Parcel, G. S., McAlister, A., & Frankowski, R. (1995). Violence prevention in middle schools: A pilot evaluation. Journal of Adolescent Health, 17, 360–371. https://doi.org/10.1016/1054-139X(95)00194-W

*Pannebakker, F. D., van Genugten, L., Diekstra, R. F. W., Gravesteijn, C., Fekkes, M., Kuiper, R., & Kocken, P. L. (2019). A social gradient in the effects of the skills for life program on self-efficacy and mental wellbeing of adolescent students. Journal of School Health, 89, 587–595. https://doi.org/10.1111/josh.12779

Pellegrino, J. W., & Hilton, M. L. (2012). Education for life and work: Developing transferable knowledge and skills in the 21st century. National Academy of Sciences.

*Perkins, A. M., Bowers, G., Cassidy, J., Meiser-Stedman, R., & Pass, L. (2021). An enhanced psychological mindset intervention to promote adolescent wellbeing within educational settings: A feasibility randomized controlled trial. Journal of Clinical Psychology, 77(4), 946–967. https://doi.org/10.1002/jclp.23104

*Peskin, M. F., Markham, C. M., Shegog, R., Baumler, E. R., Addy, R. C., Temple, J. R., Hernandez, B., Cuccaro, P. M., Thiel, M. A., Gabay, E. K., & Tortolero Emery, S. R. (2019). Adolescent dating violence prevention program for early adolescents: The Me & You randomized controlled trial, 2014–2015. American Journal of Public Health, 109(10), 1419–1428. https://doi.org/10.2105/AJPH.2019.305218

Peters, L. W. H., Kok, G., Ten Dam, G. T. M., Buijs, G. J., & Paulussen, T. G. W. M. (2009). Effective elements of school health promotion across behavioral domains: A systematic review of reviews. BMC Public Health, 9, 182–196.

PracticeWise. (2009). Psychosocial and combined treatments coding manual. Satellite Beach, FL: PracticeWise.

*Richardson, S. M., Paxton, S. J., & Thomson, J. S. (2009). Is BodyThink an efficacious body image and self-esteem program? A controlled evaluation with adolescents. Body Image, 6, 75–82. https://doi.org/10.1016/j.bodyim.2008.11.001

*Ruini, C., Ottolini, F., Tomba, E., Belaise, C., Albieri, E., Visani, D., Offidani, E., Caffo, E., & Fava, G. A. (2009). School intervention for promoting psychological well-being in adolescence. Journal of Behavior Therapy and Experimental Psychiatry, 40, 522–532. https://doi.org/10.1016/j.jbtep.2009.07.002

*Ruiz-Aranda, D., Castillo, R., Salguero, J. M., Cabello, R., Fernández-Berrocal, P., & Balluerka, N. (2012). Short- and midterm effects of emotional intelligence training on adolescent mental health. Journal of Adolescent Health, 51, 462–467. https://doi.org/10.1016/j.jadohealth.2012.02.003

Salmivalli, C. (2018). Peer victimization and adjustment in young adulthood: Commentary on the special section. Journal of Abnormal Child Psychology, 46, 67–72. https://doi.org/10.1007/s10802-017-0372-8

*Sharma, D., Mehari, K. R., Kishore, J., Sharma, N., & Duggal, M. (2020). Pilot evaluation of Setu, a school-based violence prevention program among Indian adolescents. Journal of Early Adolescence, 40(8), 1142–1166. https://doi.org/10.1177/0272431619899480

Shek, D. T. L., & Leung, J. T. Y. (2016). Developing social competence in a subject on leadership and intrapersonal development. International Journal on Disability and Human Development, 51, 165–173. https://doi.org/10.1515/ijdhd-2016-0706

Sheridan, S. M., Smith, T. E., Moorman Kim, E., Beretvas, S. N., & Park, S. (2019). A meta-analysis of family-school interventions and children’s social-emotional functioning: Moderators and components of efficacy. Review of Educational Research, 89(2), 296–332. https://doi.org/10.3102/0034654318825437

*Shoshani, A., & Steinmetz, S. (2014). Positive psychology at school: A school-based intervention to promote adolescents’ mental health and well-being. Journal of Happiness Studies, 15, 1289–1311. https://doi.org/10.1007/s10902-013-9476-1

*Shoshani, A., Steinmetz, S., & Kanat-Maymon, Y. (2016). Effects of the Maytiv positive psychology school program on early adolescents’ well-being, engagement, and achievement. Journal of School Psychology, 57, 73–92. https://doi.org/10.1016/j.jsp.2016.05.003

*Simons-Morton, B., Haynie, D., Saylor, K., Crump, A. D., & Chen, R. (2005). The effects of the Going Places Program on early adolescent substance use and antisocial behavior. Prevention Science, 6, 187–197. https://doi.org/10.1007/s11121-005-0005-2

*Singh, N., Minaie, M. G., Skvarc, D. R., & Toumbourou, J. W. (2019). Impact of a secondary school depression prevention curriculum on adolescent social-emotional skills: Evaluation of the Resilient Families Program. Journal of Youth and Adolescence, 48, 1100–1115. https://doi.org/10.1007/s10964-019-00992-6

Sklad, M., Diekstra, R., De Ritter, M., & Ben, J. (2012). Effectiveness of school-based universal social, emotional, and behavioral programs: Do they enhance students’ development in the area of skill, behavior, and adjustment? Psychology in the Schools, 49(9), 892–909. https://doi.org/10.1002/pits.21641

*Soliday, E., Garofalo, J. P., & Rogers, D. (2004). Expressive writing intervention for adolescents’ somatic symptoms and mood. Journal of Clinical Child and Adolescent Psychology, 33, 792–801. https://doi.org/10.1207/s15374424jccp3304_14

*Solomontos-Kountouri, O., Gradinger, P., Yanagida, T., & Strohmeier, D. (2016). The implementation and evaluation of the ViSC program in Cyprus: Challenges of cross-national dissemination and evaluation results. European Journal of Developmental Psychology, 13, 737–755. https://doi.org/10.1080/17405629.2015.1136618

*Stevens, V., De Bourdeaudhuij, I., & Van Oost, P. (2000). Bullying in Flemish schools: An evaluation of anti-bullying intervention in primary and secondary schools. British Journal of Educational Psychology, 70, 195–210. https://doi.org/10.1348/000709900158056

Sun, M., Rith-Najarian, L. R., Williamson, T. J., & Chorpita, B. F. (2019). Treatment features associated with youth Cognitive Behavioral Therapy follow-up effects for internalizing disorders: A meta-analysis. Journal of Clinical Child and Adolescent Psychology, 48(sup1), S269–S283. https://doi.org/10.1080/15374416.2018.1443459

*Sundgot-Borgen, C., Friborg, O., Kolle, E., Engen, K., Sundgot-Borgen, J., Rosenvinge, J. H., Pettersen, G., Klungland Torstveit, M., Piran, N., & Bratland-Sanda, S. (2019). The healthy body image (HBI) intervention: Effects of a school-based cluster-randomized controlled trial with 12-months follow-up. Body Image, 29, 122–131. https://doi.org/10.1016/j.bodyim.2019.03.007

*Sundgot-Borgen, C., Stenling, A., Rosenvinge, J. H., Pettersen, G., Friborg, O., Sundgot-Borgen, J., Kolle, E., Torstveit, M. K., Svantorp-Tveiten, K., & Bratland-Sanda, S. (2020). The Norwegian healthy body image intervention promotes positive embodiment through improved self-esteem. Body Image, 35, 84–95. https://doi.org/10.1016/j.bodyim.2020.08.014

Taylor, R. D., Oberle, E., Durlak, J. A., & Weissberg, R. P. (2017). Promoting positive youth development through school-based social and emotional learning interventions: A meta-analysis of follow-up effects. Child Development, 88(4), 1156–1171. https://doi.org/10.1111/cdev.12864

*Thomaes, S., Bushman, B. J., Orobio de Castro, B., Cohen, G. L., & Denissen, J. J. A. (2009). Reducing narcissistic aggression by buttressing self-esteem: An experimental field study. Psychological Science, 20, 1536–1542.

*Tomba, E., Belaise, C., Ottolini, F., Ruini, C., Bravi, A., Albieri, E., Rafanelli, C., Caffo, E., & Fava, G. A. (2010). Differential effects of well-being promoting and anxiety-management strategies in a non-clinical school setting. Journal of Anxiety Disorders, 24(3), 326–333. https://doi.org/10.1016/j.janxdis.2010.01.005

Van Aar, J., Leijten, P., Orobio de Castro, B., & Overbeek, G. (2017). Sustained, fade-out or sleeper effects? A systematic review and meta-analysis of parenting interventions for disruptive child behavior. Clinical Psychology Review, 51, 153–163. https://doi.org/10.1016/j.cpr.2016.11.006

Van den Noortgate, W., López-López, J. A., Marín-Martínez, F., & Sánchez-Meca, J. (2013). Three-level meta-analysis of dependent effect sizes. Behavior Research Methods, 45, 576–594. https://doi.org/10.3758/s13428-012-0261-6

Van der Put, C. E., Assink, M., Gubbels, J., & Boekhout van Solinge, N. F. (2018). Identifying effective components of child maltreatment interventions: A meta-analysis. Clinical Child and Family Psychology Review, 21, 171–202. https://doi.org/10.1007/s10567-017-0250-5

Van Orden, K., Wingate, L. R., Gordon, K. H., & Joiner, T. E. (2005). Interpersonal factors as vulnerability to psychopathology over the life course. In B. L. Hankin & J. R. Z. Abela (Eds.), Development of psychopathology: A vulnerability-stress perspective (pp. 136–160). Sage Publications.

Viechtbauer, W. (2010). Conducting meta-analyses in R with the metafor package. Journal of Statistical Software, 36(3), 1–48. https://doi.org/10.18637/jss.v036.i03

*Volanen, S. M., Lassander, M., Hankonen, N., Santalahti, P., Hintsanen, M., Simonsen, N., Raevuori, A., Mullola, S., Vahlberg, T., But, A., & Suominen, S. (2020). Healthy learning mind—Effectiveness of a mindfulness program on mental health compared to a relaxation program and teaching as usual in schools: A cluster-randomised controlled trial. Journal of Affective Disorders, 260, 660–669. https://doi.org/10.1016/j.jad.2019.08.087

*Williams, J., Miller, S., Cutbush, S., Gibbs, D., Clinton-Sherrod, M., & Jones, S. (2015). A latent transition model of the effects of a teen dating violence prevention initiative. Journal of Adolescent Health, 56, S27–S32. https://doi.org/10.1016/j.jadohealth.2014.08.019

*Wolfe, D. A., Crooks, C., Jaffe, P., Chiodo, D., Hughes, R., Ellis, W., Stitt, L., & Donner, A. (2009). A school-based program to prevent adolescent dating violence: A cluster randomized trial. Archives of Pediatrics & Adolescent Medicine, 163(8), 692–699. https://doi.org/10.1001/archpediatrics.2009.69

Wong, D., Grace, N., Baker, K., & McMahon, G. (2019). Measuring clinical competencies in facilitating group-based rehabilitation interventions: Development of a new competency checklist. Clinical Rehabilitation, 33(6), 1079–1087. https://doi.org/10.1177/0269215519831048

Funding

This work was supported by a grant from The Netherlands Organization for Health Research and Development, grant number 531001106.

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Ethical Approval

This article does not contain any studies with human participants performed by any of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Electronic supplementary material is available with the online version of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mertens, E.C.A., Deković, M., van Londen, M. et al. Components Related to Long-Term Effects in the Intra- and Interpersonal Domains: A Meta-Analysis of Universal School-Based Interventions. Clin Child Fam Psychol Rev 25, 627–645 (2022). https://doi.org/10.1007/s10567-022-00406-3

DOI: https://doi.org/10.1007/s10567-022-00406-3