Abstract

In seismic risk assessment, the sources of uncertainty associated with building exposure modelling have not received as much attention as other components related to hazard and vulnerability. Conventional practices, such as assuming absolute portfolio compositions (i.e., proportions per building class) from expert-based assumptions over aggregated data, crudely disregard the contribution of exposure uncertainty to earthquake loss models. In this work, we introduce the concept that the degree of knowledge of a building stock can be described within a Bayesian probabilistic approach that integrates both expert-based prior distributions and data collection on individual buildings. We investigate the impact of the epistemic uncertainty in the portfolio composition on scenario-based earthquake loss models through an exposure-oriented logic tree arrangement based on synthetic building portfolios. For illustrative purposes, we consider the residential building stock of Valparaíso (Chile) subjected to seismic ground-shaking from one subduction earthquake. We have found that building class reconnaissance, either from prior assumptions by desktop studies with aggregated data (top–down approach) or from building-by-building data collection (bottom–up approach), plays a fundamental role in the statistical modelling of exposure. To model the vulnerability of such a heterogeneous building stock, the associated set of structural fragility functions must handle multiple spectral periods. We therefore also discuss the relevance and specific uncertainty of generating either uncorrelated or spatially cross-correlated ground motion fields within this framework. We subsequently show how the various epistemic uncertainties embedded within these probabilistic exposure models propagate differently into the computed direct financial losses.
This work calls for further efforts to redesign desktop exposure studies, while also highlighting the importance of exposure data collection with standardized and iterative approaches.


1 Introduction

Epistemic uncertainties stem from incomplete knowledge of the actual problem and its parameters, or from modelling and methodological errors, which are often unavoidable (e.g., Vamvatsikos et al. 2010). The performance of earthquake loss models for large-scale residential building portfolios under the influence of such epistemic uncertainties, which are related to the lack of data describing the exposure composition, is the central aspect of this work. Exposure refers to the number, type, and monetary value of the elements (e.g., buildings) that are under threat from natural hazards and are subject to potential loss (e.g., UNISDR 2009). Together with the hazard and vulnerability components, exposure is a component of most quantitative risk assessment applications. In such studies, the degree of knowledge of the hazard and exposure components plays a fundamental role, since their associated uncertainties propagate into the final loss estimates. Therefore, accurate estimates of the expected spatial distribution of seismic ground-shaking intensities for an earthquake scenario, together with increasingly consistent classifications of the building stock into suitable building vulnerability classes, will provide more accurate central metrics and minimize the variance of the final loss estimates over an area of interest. Being able to track and disaggregate the influence of the hazard and exposure is crucial for decision making, urban planning, and finance (e.g., the insurance industry). In the latter, the smaller the variation in the mean loss values, the lower the perceived risk (Wesson and Perkins 2001).

In exposure modelling, buildings are classified into vulnerability classes that ultimately describe their expected susceptibility to damage. The vulnerability-class definition, therefore, links the hazard intensities to the expected damage based on a clear understanding of the building’s structural and non-structural characteristics (e.g., Calvi et al. 2006). Porter et al. (2002) showed that the influence of uncertainties in ground shaking on the overall uncertainty in the seismic performance of individual buildings (repair cost) is similar to the influence of uncertainty in the capacity of a building to resist damage. However, for large-scale seismic risk, it has conventionally been assumed that the uncertainty associated with the definition of building classes and their relative proportions contributes much less to the final loss estimates than the aleatory components of the risk processing chain (i.e., ground motion variability in seismic hazard). This has led to the general practice of assuming that collecting building exposure data is less worthwhile than a more detailed assessment of the hazard component (Crowley and Bommer 2006). This practice implies further community-accepted assumptions, such as supposing fixed proportions over aggregated data (i.e., census-based desktop studies), without exploring their underlying uncertainties. Only recently have a few studies pointed out that exposure uncertainty is an area that would particularly benefit from further assessment (Crowley 2014; Corbane et al. 2017; Silva et al. 2019). Under this framework, several components of epistemic uncertainty need further exploration, such as the basic reconnaissance of the building classes and their locations while gathering their attributes, as well as the sensitivity of loss outcomes when more than a single set of building classes is used.
Therefore, assessing a selected set of taxonomic attributes within a statistical exposure model, while investigating the uncertainty in the class assignment and its effects on the loss estimates, is a pathway worth exploring within a seismic risk framework.

This work describes how the epistemic uncertainty associated with defining a building exposure model is correlated with the variance in the loss estimates in earthquake scenarios. First, we briefly describe current practices and their limitations in building exposure modelling for seismic risk (Sect. 2). Subsequently, we present a method for exploring the epistemic uncertainty of building exposure models in earthquake loss models (Sect. 3). As a premise, in this work the building exposure model is framed within compositional theory and a Bayesian framework in three stages: (1) statistical modelling based on the collection of data about building attributes and the configuration of synthetic building portfolios; (2) a novel method for obtaining the probabilistic compatibility levels between two sets of building classifications; and (3) a novel exposure-oriented logic tree that propagates and disaggregates some of the components that contribute to uncertainty in the loss estimates. These steps are exemplified for the residential building stock of Valparaíso, Chile (Sect. 4), to investigate how their associated variability affects estimates of direct financial losses arising from a subduction earthquake scenario, considering both uncorrelated and spatially cross-correlated ground motion fields (GMF).

2 Current state of the art in building-exposure modelling for seismic risk assessment

In classical exposure models for large-scale seismic risk assessment, only some basic attributes are used to classify a building stock (e.g., the material of the lateral load-resisting system (LLRS), height, and age). To date, few efforts have been made to explore the associated uncertainties in the exposure composition. For instance, Crowley and Pinho (2004) considered the spatial variation in the individual attributes to be random and smaller than the uncertainty induced by grouping different individual buildings into a single typology. Crowley et al. (2005) later showed that there is large variability in the damage loss ratios imposed by grouping certain typologies in terms of storey ranges over a portfolio, even when the buildings are assumed to have otherwise homogeneous attributes (e.g., in terms of material of the LLRS). These simplifications have led to the practice of representing the epistemic uncertainty in the classification of buildings into predefined typologies as aleatory uncertainty. However, the same study also pointed out that detailed inspections to collect the attributes of all the buildings in a study area would allow this uncertainty to be treated as epistemic. This is relevant considering that the location of specific building attributes is, in reality, not random within a building stock (e.g., Dell’Acqua et al. 2013; Martínez-Cuevas et al. 2017) and can affect its seismic vulnerability (Lagomarsino and Giovinazzi 2006). Unfortunately, considering the extent and the evolution of the built environment, a full enumeration of the taxonomic features of the assets is a highly time- and resource-intensive task, and often simply unfeasible (Pittore et al. 2017). Furthermore, the complexity of the building classification would increase and lead to a more extensive set of classes than the available set of fragility functions can cover (Haas 2018; Martins and Silva 2020).
However, if only a sample of the building structures within the entire stock is inspected, the epistemic uncertainty associated with the class assignment in exposure models can be accounted for, allowing the investigation of its impact on earthquake loss models. To this end, taxonomies are conventionally used to describe the built environment.

2.1 The use of taxonomies for building exposure modelling

2.1.1 Risk-oriented taxonomies: schemes

Buildings are grouped into categories with expected similar performance when subjected to ground shaking. These categories are risk-oriented taxonomies, which describe vulnerability classes with respect to a specific natural hazard and consist of a set of mutually exclusive, collectively exhaustive building classes. To refer to such a set of building classes, we will use the word “scheme”. Some of the most common schemes include the European Macroseismic Scale 1998 (EMS-98, Grünthal 1998), the US-specific HAZUS model (FEMA 2003), and PAGER-STR (Jaiswal et al. 2010). These schemes classify large-scale exposure models, often based on census information, and are spatially aggregated over specific administrative units. Given the lack of local models, these taxonomies have been applied outside their original geographical scope. This is the case for HAZUS, which has been used to classify building stocks and to estimate losses in other geographical contexts (e.g., in Chile, Aguirre et al. 2018). Similar practices have been reported using the EMS-98 risk-oriented taxonomy in Central Asia (e.g., Bindi et al. 2011; Pilz et al. 2013).

2.1.2 Faceted taxonomies: taxonomic attributes

Faceted taxonomies, by contrast, provide exhaustive, structured sets of mutually exclusive and well-described attributes. These taxonomies allow the description of individual structures in a standard way and are largely independent of specific fragility or vulnerability models. The most widely used and well-established example is the GEM Building Taxonomy (GEM v.2.0, Brzev et al. 2013). This taxonomy has been adapted for a multi-hazard risk initiative (GED4ALL, Silva et al. 2018) and for classifying structures with special occupancies, such as schools, to assess their seismic vulnerability within the Global Library of School Infrastructure project (GLOSI) outlined in D’Ayala et al. (2020). Every building class within a given risk-oriented taxonomy can be disaggregated into attributes within a faceted taxonomy. This has been described in Pittore et al. (2018) and noted in Pavić et al. (2020).

2.2 Exposure modelling methods for large area spatially distributed buildings

Regardless of the type of taxonomy (either risk-oriented or faceted), there are two conventional methods for the exposure modelling of large-scale spatially distributed buildings: (1) a top–down approach, which involves the analysis of aggregated data (e.g., census data) through expert elicitation, and (2) a bottom–up approach, which uses individual observations. These two approaches classify the building stock by addressing a double expert elicitation process:

(1) To classify the building inventory into assumed building classes within a given study area.

(2) To obtain the building exposure composition (i.e., proportions in every building class).

These approaches are briefly explained hereafter. An innovative approach that dynamically combines these through statistical analyses will be introduced and discussed later in this work.

2.2.1 Top–down approach: building class from the analysis of aggregated data

Recently, the implementation of the GEM “mapping schemes” for the analysis of aggregated data has been outlined in Yepes-Estrada et al. (2017) and further implemented in the European exposure model (Crowley et al. 2020) and the Global Seismic Risk Model (Silva et al. 2020). These mapping schemes classify a building stock through desktop studies and expert elicitation with respect to earthquake vulnerability classes. Each class is described by selected attributes from the GEM v2.0 faceted taxonomy. They rely on available regionally aggregated data (e.g., region-specific census data), which describe socioeconomic characteristics at the dwelling level rather than at the building level (Crowley 2014). Since such data may contain only a few attributes that are useful for physical vulnerability assessment, these mapping schemes have been customized to include other attributes by defining covariate relations between census descriptors and expected proportions per building class, ultimately using a single set of typologies to represent a building stock (e.g., Acevedo et al. 2020; Dabbeek and Silva 2020). Therefore, the variation of taxonomic attributes is still treated as random within an aleatory uncertainty framework instead of as a reducible and trackable epistemic uncertainty. Moreover, exposure models derived from purely top–down desktop studies neglect the temporal evolution of the ancillary data. Since census data are standardized neither across regions nor over time (including possible changes in data formats), once a mapping scheme is applied, the resulting exposure model remains static until new census information is generated (Silva et al. 2019). Recent discussions about epistemic uncertainties in regional exposure models have been presented by Kalakonas et al. (2020), who observed negligible differences in the loss estimates when alternative exposure compositions were compared within a sensitivity analysis for probabilistic risk assessment.
Notably, recent studies have highlighted the statistical nature of exposure models by forecasting their dynamic spatiotemporal evolution (Rivera et al. 2020; Calderón and Silva 2021), as well as the need for efficient techniques for their spatial aggregation (Dabbeek et al. 2021).
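To make the contrast with the probabilistic approach concrete, a top–down mapping scheme can be sketched as a fixed lookup from census categories to class shares. This is a minimal illustration, not the GEM implementation: the function name, census categories, class labels, and shares below are hypothetical. Note that the output is a single deterministic composition with no uncertainty attached, which is precisely the limitation discussed above.

```python
def apply_mapping_scheme(census_counts, mapping_scheme):
    """Distribute aggregated census counts into vulnerability classes.

    census_counts: {census_category: number of buildings}
    mapping_scheme: {census_category: {building_class: share}}, shares summing to 1
    Returns {building_class: expected number of buildings}.
    """
    totals = {}
    for category, count in census_counts.items():
        shares = mapping_scheme[category]
        if abs(sum(shares.values()) - 1.0) > 1e-9:
            raise ValueError(f"shares for {category!r} must sum to 1")
        for building_class, share in shares.items():
            # Fixed expert-based share: no uncertainty is propagated here
            totals[building_class] = totals.get(building_class, 0.0) + count * share
    return totals


# Hypothetical census categories and expert-based shares
census = {"brick_walls": 1000, "concrete_walls": 500}
scheme = {
    "brick_walls": {"URM": 0.7, "CM": 0.3},
    "concrete_walls": {"RC_wall": 0.8, "CM": 0.2},
}
print(apply_mapping_scheme(census, scheme))
```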

2.2.2 Bottom–up approach: individual building observations

When the composition of the portfolio is expected to be heterogeneous, collecting attribute data over a selected sample of individual buildings is required to constrain and validate the underlying assumptions imposed by a top–down vision. Freely available data products such as OpenStreetMap (OSM) may offer some descriptors (occupancy or footprint shape) that have proved useful for constructing large-scale exposure models for particular occupancies (e.g., Sousa et al. 2017). However, owing to the lack of standardized data formats, volunteer mapping initiatives still need to capture the attributes that drive vulnerability within harmonized data formats in order to describe more robust schemes. This harmonization has been addressed by data standards based on taxonomic attributes, such as FEMA 154 (2002), the SASPARM 2.0 project (Grigoratos et al. 2016), and CARTIS (Polese et al. 2020), and by initiatives for post-earthquake damage data collection such as AeDES (Baggio et al. 2007; Nicodemo et al. 2020). Recently, Kechidi et al. (2021) presented a comprehensive comparison between census-based (using mapping schemes) and survey-based (inspecting a sample) exposure models for risk assessment. The authors highlighted that the accuracy of the risk estimates is directly correlated with the number of surveys within a given region.

2.2.3 Dynamic building exposure modelling based on data collection and statistical analyses

Some studies have proposed associating building characteristics (dynamically collected) with their related vulnerability classes through statistical modelling. These types of approaches were first exemplified by Pittore and Wieland (2013), who employed Bayesian networks, and by Riedel et al. (2015), who made use of machine learning techniques. Moreover, a dynamic building exposure modelling method of a probabilistic nature has recently been suggested by Pittore et al. (2020), in which the portfolio’s vulnerability classes are defined in a top–down manner, while the expected frequency of the related classes is constrained through a bottom–up approach by integrating attribute-based data collection. To the best of the authors’ knowledge, these statistical models have not yet been exploited to investigate the epistemic uncertainties in building exposure model composition, nor their impact on loss estimates. A detailed exploration of the epistemic uncertainty carried by statistical building exposure models into scenario-based loss estimates is introduced hereafter.

3 Methodology

3.1 Probabilistic exposure models: a Bayesian formulation

The building portfolio configuration is conceptualized by compositional theory within a fully probabilistic Bayesian framework, as initially suggested by Pittore et al. (2020). First, we introduce the concept of the likelihood function, followed by the assumptions on the prior and the posterior distributions within this context. This formulation considers risk-oriented schemes that contain a finite set of building classes and their associated fragility functions.

3.1.1 The definition of the likelihood function: the intra-scheme compatibility levels

A suitable scheme containing \(k\) risk-oriented building classes is selected for the area of interest, where we assume that some data have also been collected through surveying (evidence). Following the proposal of Pittore et al. (2020), we assume that a sample of \(N\) buildings is observed, with class counts \(\mathbf{n} = \{n_{1}, \ldots, n_{k}\}\) and \(\sum_{i=1}^{k} n_{i} = N\), where \(n_{i}\) is the number of specimens of building type \(i\). We assume that the statistical population of buildings from which the observed sample is drawn is characterized by \(k\) typologies, whose frequencies are given by the proportions \(\boldsymbol{\theta} = \{\theta_{1}, \ldots, \theta_{k}\}\), with \(\theta_{i} > 0\; \forall i\) and \(\sum_{i=1}^{k} \theta_{i} = 1\). Assuming a Multinomial sampling model, the probability of observing \(\mathbf{n}\) conditional on \(\boldsymbol{\theta}\) is given by:

\[ p(\mathbf{n} \mid \boldsymbol{\theta}) = \frac{N!}{\prod_{i=1}^{k} n_{i}!} \prod_{i=1}^{k} \theta_{i}^{\,n_{i}} \tag{1} \]
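Eq. 1 can be evaluated directly; a minimal Python sketch follows. Working on the log scale (via `math.lgamma`, where `lgamma(x + 1)` equals `log(x!)`) avoids overflowing the factorials once \(N\) reaches hundreds of surveyed buildings. The function names, class counts, and proportions are hypothetical and only illustrate the formula.

```python
from math import exp, lgamma, log


def multinomial_log_pmf(counts, theta):
    """Log of Eq. 1: log p(n | theta) for observed class counts n."""
    if len(counts) != len(theta):
        raise ValueError("counts and theta must have the same length k")
    if any(t <= 0 for t in theta) or abs(sum(theta) - 1.0) > 1e-9:
        raise ValueError("theta must be strictly positive and sum to 1")
    n_total = sum(counts)
    # log N! - sum_i log n_i!, using lgamma(x + 1) = log(x!)
    log_coef = lgamma(n_total + 1) - sum(lgamma(n + 1) for n in counts)
    return log_coef + sum(n * log(t) for n, t in zip(counts, theta))


def multinomial_pmf(counts, theta):
    """Eq. 1 on the natural scale."""
    return exp(multinomial_log_pmf(counts, theta))


# Hypothetical survey: 10 buildings over 3 classes, evaluated at one candidate theta
print(multinomial_pmf([5, 3, 2], [0.5, 0.3, 0.2]))  # about 0.085
```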

We take the probability of the set of observations given the class proportions as the likelihood of the Bayesian formulation for building exposure modelling. This choice emerges naturally from the bottom–up data collection of individual attributes. Subsequently, every risk-oriented building class \(k\) within a given scheme (e.g., \(T_{k}^{A}\)) is translated into basic taxonomic attribute values \(\{ F\}_{m}\) offered by a faceted building taxonomy. This is expressed by Eq. 2.

Triangular fuzzy values are assigned through expert criteria to score the compatibility degree between the observed attribute values and every building class, as formulated in Pittore et al. (2018), to constrain the actual proportion of every class within the exposure model. The data collected on individual buildings are then used in the class assignment. Every attribute type \(j\) has an associated numerical weight, \(w_{j}\), that acknowledges its relevance to the vulnerability assessment as well as its ability to be satisfactorily identified during the survey. By evaluating the compatibility degree between the observed building attributes and each building class, a transparent assignment of the most likely class within a fully probabilistic framework is achieved.
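The weighted class assignment can be illustrated with a simplified sketch. It is an assumption of this example, not the exact formulation of Pittore et al. (2018), that each triangular fuzzy value \((low, mode, high)\) is collapsed to its centroid and that the per-class score is a weighted mean over attribute types using the weights \(w_{j}\), normalised across classes. The class names, attribute names, fuzzy values, and weights are all hypothetical.

```python
def centroid(tfn):
    """Defuzzify a triangular fuzzy number (low, mode, high) by its centroid."""
    low, mode, high = tfn
    return (low + mode + high) / 3.0


def class_probabilities(observation, compatibility, weights):
    """Probability that one surveyed building belongs to each class.

    observation: {attribute_type: observed value}
    compatibility: {class: {attribute_type: {value: (low, mode, high)}}}
    weights: {attribute_type: w_j}
    """
    scores = {}
    for cls, table in compatibility.items():
        weighted, total_w = 0.0, 0.0
        for attr, value in observation.items():
            w = weights.get(attr, 0.0)
            # Unlisted attribute values are treated as fully incompatible (assumption)
            tfn = table.get(attr, {}).get(value, (0.0, 0.0, 0.0))
            weighted += w * centroid(tfn)
            total_w += w
        scores[cls] = weighted / total_w if total_w > 0 else 0.0
    norm = sum(scores.values())
    if norm == 0:
        raise ValueError("observation is incompatible with every class")
    return {cls: s / norm for cls, s in scores.items()}


# Hypothetical classes, attributes, fuzzy compatibilities, and weights
compatibility = {
    "RC_low": {
        "material": {"reinforced_concrete": (0.8, 1.0, 1.0)},
        "height": {"low_rise": (0.8, 1.0, 1.0)},
    },
    "URM_low": {
        "material": {"reinforced_concrete": (0.0, 0.0, 0.2)},
        "height": {"low_rise": (0.8, 1.0, 1.0)},
    },
}
weights = {"material": 3.0, "height": 1.0}
observation = {"material": "reinforced_concrete", "height": "low_rise"}
probs = class_probabilities(observation, compatibility, weights)
print(max(probs, key=probs.get), probs)
```

Giving the material attribute a larger weight than height means that a clear material observation dominates the assignment, which mirrors the role of \(w_{j}\) described above.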

3.1.2 Prior and posterior distributions

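As formulated in Pittore et al. (2020), the vector of expected proportions \(\boldsymbol{\theta}\) of the building classes is treated as a Dirichlet-distributed random variable:

\[ p(\boldsymbol{\theta}) = \mathrm{Dir}(\boldsymbol{\theta} \mid \boldsymbol{\alpha}) = \frac{\Gamma(\alpha_{0})}{\prod_{i=1}^{k} \Gamma(\alpha_{i})} \prod_{i=1}^{k} \theta_{i}^{\,\alpha_{i} - 1} \tag{3} \]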

where \(\boldsymbol{\alpha} = \{\alpha_{1}, \ldots, \alpha_{k}\}\), \(\alpha_{i} > 0\; \forall i\), and \(\alpha_{0} = \sum_{i=1}^{k} \alpha_{i}\) is termed the concentration factor. The Dirichlet hyperparameter \(\alpha_{i}\) is factorized as the product of a prior proportion (\(\theta_{i}\)) and the common concentration factor:

\[ \alpha_{i} = \alpha_{0}\, \theta_{i} \tag{4} \]

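where \(\alpha_{0}\) scales the virtual (pseudo-)counts assigned a priori to each of the \(k\) categories. By Bayes’ theorem, and since the Dirichlet prior is conjugate to the Multinomial likelihood, the posterior probability distribution of \(\boldsymbol{\theta}\) is also a Dirichlet distribution, obtained from the likelihood \(p(\mathbf{n} \mid \boldsymbol{\theta})\) and the prior \(p(\boldsymbol{\theta})\):

\[ p(\boldsymbol{\theta} \mid \mathbf{n}) \propto p(\mathbf{n} \mid \boldsymbol{\theta})\, p(\boldsymbol{\theta}) \propto \prod_{i=1}^{k} \theta_{i}^{\,\alpha_{i} + n_{i} - 1}, \quad \text{i.e.} \quad \boldsymbol{\theta} \mid \mathbf{n} \sim \mathrm{Dir}(\alpha_{1} + n_{1}, \ldots, \alpha_{k} + n_{k}) \tag{5} \]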

When the number of observations increases, the probability estimate is dominated by the Multinomial likelihood. Therefore, the expert-based priors will be increasingly superseded by real data as it is continuously captured during surveys.
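Because of conjugacy, Eqs. 4 and 5 reduce to simple arithmetic on pseudo-counts. The minimal sketch below uses hypothetical prior proportions, a hypothetical concentration factor, and hypothetical survey counts; it shows the posterior mean moving from the expert prior \([0.6, 0.3, 0.1]\) toward the observed class frequencies \([0.1, 0.3, 0.6]\) as the number of surveyed buildings grows, which is the behaviour described above.

```python
def dirichlet_prior(theta_prior, alpha0):
    """Eq. 4: alpha_i = alpha0 * theta_i."""
    return [alpha0 * t for t in theta_prior]


def dirichlet_posterior(alpha, counts):
    """Eq. 5: conjugate update, alpha_i + n_i for every class."""
    return [a + n for a, n in zip(alpha, counts)]


def dirichlet_mean(alpha):
    """Expected proportions E[theta_i] = alpha_i / sum(alpha)."""
    total = sum(alpha)
    return [a / total for a in alpha]


# Hypothetical expert prior for three classes (alpha0 = 10)
prior = dirichlet_prior([0.6, 0.3, 0.1], alpha0=10)
for scale in (1, 10, 100):
    # Hypothetical survey counts with observed frequencies 0.1 / 0.3 / 0.6
    counts = [10 * scale, 30 * scale, 60 * scale]
    post = dirichlet_posterior(prior, counts)
    print(sum(counts), [round(m, 3) for m in dirichlet_mean(post)])
```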

3.1.3 Synthetic building portfolios for a logic tree construction and spatial allocation

We propose to further characterise the prior and likelihood terms to obtain customized posterior distributions with proportions that represent the building stock composition. This is done through a logic tree. A similar approach was suggested by Riga et al. (2017) to highlight the uncertainties at the vulnerability level. Within our scope, we propose it as a tool for exploring the epistemic uncertainty in the portfolio composition. It has four complexity levels, namely:

(1) The selection of the building class scheme (group of building classes).

(2) The selection of the numerical weight, \({w}_{j}\) (per attribute type \(j\)), which scores and ranks the relevance of every attribute type in the vulnerability assessment and its ability to be assessed during surveys. The set of \({w}_{j}\) is called the “weighting arrangement” (W.A.).

(3) The definition of a prior distribution, which describes the initial guess about the composition of the building portfolio in the form of a Dirichlet distribution. This composition describes the representativeness of every building class in the area and is driven by data collection, expert criteria, or aggregated data (e.g., census).

(4) The selection of the hyper-parameter \(\alpha_{0}\) (concentration factor(s)) of the conjugate posterior Dirichlet distribution obtained from Eq. 5. This selection acknowledges the degree of trust in the former assumptions. Larger values (\(\alpha_{0}\) ~ 50) represent a higher level of knowledge and yield similar compositions (almost unanimous consensus), whilst smaller values (\(\alpha_{0}\) ~ 1) imply low information content, and hence low knowledge of the portfolio composition, which results in sparser sampled compositions (Hastie et al. 2015). For each selected \(\alpha_{0}\), a number of samples must be drawn to represent stochastic compositions within synthetic building portfolios.
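The four logic-tree levels can be enumerated as a Cartesian product of branch choices. In this hedged sketch, levels (1) to (3) are collapsed into a hypothetical table of branch-wise mean compositions (in practice these would follow from Eq. 5 after assigning surveyed buildings to classes with a given weighting arrangement), and level (4) rescales each mean by \(\alpha_{0}\), as in Eq. 4, before drawing synthetic compositions. Dirichlet samples are generated by normalising independent Gamma draws, a standard construction, using Python’s `random.gammavariate`. All scheme, W.A., and prior labels are placeholders.

```python
import itertools
import random
import statistics


def sample_dirichlet(alpha, rng):
    """One composition from Dir(alpha): normalise independent Gamma(alpha_i, 1) draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def synthetic_portfolios(branch_means, alpha0_levels, n_samples, seed=0):
    """Enumerate logic-tree branches and draw synthetic compositions for each.

    branch_means: {(scheme, weighting_arrangement, prior): mean composition}
    alpha0_levels: concentration factors (level 4 of the logic tree)
    """
    rng = random.Random(seed)
    portfolios = {}
    for (branch, mean), alpha0 in itertools.product(branch_means.items(), alpha0_levels):
        alpha = [alpha0 * m for m in mean]  # Eq. 4 applied to the branch mean
        portfolios[branch + (alpha0,)] = [sample_dirichlet(alpha, rng) for _ in range(n_samples)]
    return portfolios


def spread(samples, class_index):
    """Population variance of one class proportion across synthetic portfolios."""
    return statistics.pvariance([s[class_index] for s in samples])


# Hypothetical branch means; in practice they would follow from Eq. 5
branch_means = {
    ("scheme_A", "WA1", "prior_expert"): [0.5, 0.3, 0.2],
    ("scheme_A", "WA2", "prior_expert"): [0.4, 0.4, 0.2],
    ("scheme_B", "WA1", "prior_uniform"): [0.25, 0.25, 0.5],
}
portfolios = synthetic_portfolios(branch_means, alpha0_levels=[1.0, 50.0], n_samples=500, seed=1)
for key, samples in portfolios.items():
    print(key, round(spread(samples, 0), 4))
```

Comparing `spread` for \(\alpha_{0} = 1\) and \(\alpha_{0} = 50\) illustrates the trust levels of item (4): for a Dirichlet distribution, the variance of each proportion is \(\theta_{i}(1 - \theta_{i})/(\alpha_{0} + 1)\), so the higher concentration factor yields much more similar compositions.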

With this formulation, we retain the statistical nature of exposure modelling while overcoming the top–down vision of a fixed composition. To spatially distribute every synthetic building portfolio, a dasymetric disaggregation from population counts is applied, as proposed in Pittore et al. (2020). This approach is suitable for a differential spatial allocation of the synthetic building portfolios, whose composition changes with every sample. Population counts reported in any aggregated data source (e.g., LandScan, WorldPop, GPWv4) can be used.
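A simplified version of the dasymetric step can be written in a few lines. This sketch assumes that the whole population count of a spatial unit lives in the residential portfolio and that each class houses a known average number of night-time residents per building; the procedure of Pittore et al. (2020) may differ in detail (for example, in how fractional buildings are handled). The function name and all numbers are hypothetical.

```python
def buildings_per_class(population, theta, residents_per_building):
    """Split the population of one spatial unit into expected building counts per class.

    Assumes the whole population lives in the residential portfolio and that class i
    houses, on average, residents_per_building[i] night-time residents per building.
    """
    mean_residents = sum(t * r for t, r in zip(theta, residents_per_building))
    if mean_residents <= 0:
        raise ValueError("average residents per building must be positive")
    # Total buildings such that the portfolio houses exactly `population` residents
    n_buildings = population / mean_residents
    return [n_buildings * t for t in theta]


# Hypothetical unit: 1200 residents, one synthetic composition, residents per building
counts = buildings_per_class(1200, [0.5, 0.3, 0.2], [4, 10, 40])
print([round(c, 1) for c in counts])
```

Because the composition \(\boldsymbol{\theta}\) changes with every synthetic portfolio, the same population count yields a different number and mix of buildings per sample, which is the differential allocation mentioned above.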

It should be noted that the former steps concern the use of a single group of building classes (scheme \(T_{k}^{A}\)). However, for the exposure modeller, there might be more than one suitable scheme to describe the building stock of a given area (e.g., \(T_{j}^{B}\)). Although subjective compatibility relations between building classes contained in two different schemes have already been proposed (e.g., between HAZUS and EMS-98 in Hancilar et al. 2010), there is still the question of how to obtain some of the basic metrics for this alternative exposure model \(T_{j}^{B}\) (i.e., its proportions, average night-time residents, and replacement costs). This is not a trivial task, since this information might only be available in terms of one reference scheme. We therefore propose to obtain these metrics for other suitable schemes through the formulation of probabilistic inter-scheme compatibility matrices. This method is described in the following section.

3.2 Probabilistic inter-scheme compatibility matrix

As presented in Eq. 2, we assume that each building class \(k\) within a given scheme (\(T_{k}^{A}\)) can be disaggregated into observable taxonomic features of a faceted taxonomy \(\{ F\}_{m}\). This procedure is also followed for the target scheme (\(T_{j}^{B}\)) of interest. A straightforward application of the total probability theorem and a probabilistic description of the building type in taxonomic features allow us to define Eq. 6:

\[ p\left(T_{k}^{A} \mid T_{j}^{B}\right) = \sum_{\{F\}_{m}} p\left(T_{k}^{A} \mid \{F\}_{m}, T_{j}^{B}\right)\, p\left(\{F\}_{m} \mid T_{j}^{B}\right) \tag{6} \]

This formulation allows us to obtain their probabilistic compatibility degree \(p\left(T_{k}^{A} \mid T_{j}^{B}\right)\) as a matrix.

Since we assume that the representations of a building within the two considered schemes are conditionally independent given the information on taxonomic features, the source scheme (\(T_{k}^{A}\)) can be modelled in terms of the taxonomic attributes that also compose the target scheme (\(T_{j}^{B}\)):

\[ p\left(T_{k}^{A} \mid \{F\}_{m}, T_{j}^{B}\right) = p\left(T_{k}^{A} \mid \{F\}_{m}\right) \]

The former equation can also be expressed as a product, as given in Eq. 7.

We obtain a probabilistic representation of the compatibility degree between the building classes of the two considered schemes in an alternative Bayesian formulation, as presented in Eq. 8.
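The total-probability step can be sketched as follows, summing \(p(T_{k}^{A} \mid \{F\}_{m})\, p(\{F\}_{m} \mid T_{j}^{B})\) over attribute combinations after applying the conditional-independence assumption. Two further assumptions are made here for illustration: \(p(T_{k}^{A} \mid \{F\}_{m})\) is supplied as a table (in practice it would come from the weighted compatibility scores), and every synthetic attribute combination listed for a target class is equally likely. Class labels, attribute combinations, and probabilities are hypothetical, and the sketch does not reproduce Eqs. 7 and 8.

```python
def inter_scheme_matrix(p_source_given_f, target_combos):
    """Compatibility matrix p(T_k^A | T_j^B) via the total probability theorem.

    p_source_given_f: {attribute combination F: {source class k: p(T_k^A | F)}}
    target_combos: {target class j: [attribute combinations describing j]}
    Assumes conditional independence of the schemes given F and a uniform
    p(F | T_j^B) over the synthetic combinations of each target class.
    """
    source_classes = sorted({k for probs in p_source_given_f.values() for k in probs})
    matrix = {}
    for target, combos in target_combos.items():
        row = dict.fromkeys(source_classes, 0.0)
        for f in combos:
            for k, p in p_source_given_f[f].items():
                row[k] += p / len(combos)  # p(T_k^A | F) * p(F | T_j^B)
        matrix[target] = row
    return matrix


# Hypothetical attribute combinations (material, LLRS) and source-class probabilities
p_source_given_f = {
    ("concrete", "wall"): {"A_RCwall": 0.9, "A_masonry": 0.1},
    ("concrete", "frame"): {"A_RCwall": 0.6, "A_masonry": 0.4},
    ("masonry", "wall"): {"A_RCwall": 0.05, "A_masonry": 0.95},
}
target_combos = {
    "B_concrete": [("concrete", "wall"), ("concrete", "frame")],
    "B_masonry": [("masonry", "wall")],
}
print(inter_scheme_matrix(p_source_given_f, target_combos))
```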

Synthetic surveys based on the possible combinations of attributes that may describe every building class are used as input to solve the compatibility scores, and they are integrated through the selection of the weighting arrangement for every commonly considered attribute (\(w_{j}\), see Sect. 3.1.1). Using this matrix, we can obtain the missing normalized values (i.e., distribution of prior proportions) of the target scheme (\(\{ R\}_{T_{j}^{B}}\)) by simply applying a dot product between the obtained matrix and the equivalent quantities of the source scheme (\(\{ R\}_{T_{k}^{A}}\)), as illustrated in Eq. 9.

For non-normalized metrics (e.g., average night-time residents and replacement cost of every class), the associated value with the most compatible class of the source scheme (\(T_{k}^{A} )\) is proposed to be selected. Examples of this procedure have been recently reported in Gomez-Zapata et al. (2021a, c). Once we have the number of residents and prior compositions of the alternative portfolio, we can once again perform the formerly described dasymetric disaggregation procedure from population counts (end of Sect. 3.1.3) to obtain an exposure model for \(T_{j}^{B}\).
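Both transfers described above can be sketched in a few lines. This is one plausible reading of Eq. 9 rather than the authors’ exact formulation: for each target class \(j\), the row \(p(T_{k}^{A} \mid T_{j}^{B})\) is dotted with the source proportions and the result is renormalised (the renormalisation is an assumption of this sketch). Non-normalised metrics are copied from the most compatible source class, as stated in the text. The matrix, proportions, and replacement costs are hypothetical.

```python
def project_proportions(matrix, source_props):
    """Target-scheme proportions from source-scheme proportions (one reading of Eq. 9)."""
    raw = {j: sum(p * source_props[k] for k, p in row.items()) for j, row in matrix.items()}
    total = sum(raw.values())
    # Renormalise so that the target proportions sum to one (assumption of this sketch)
    return {j: v / total for j, v in raw.items()}


def transfer_metric(matrix, source_metric):
    """Non-normalised metric copied from the most compatible source class."""
    return {j: source_metric[max(row, key=row.get)] for j, row in matrix.items()}


# Hypothetical compatibility matrix p(T_k^A | T_j^B): rows are target classes j
matrix = {
    "B_concrete": {"A_RCwall": 0.75, "A_masonry": 0.25},
    "B_masonry": {"A_RCwall": 0.05, "A_masonry": 0.95},
}
source_props = {"A_RCwall": 0.6, "A_masonry": 0.4}
replacement_cost = {"A_RCwall": 120000.0, "A_masonry": 60000.0}  # hypothetical values
print(project_proportions(matrix, source_props))
print(transfer_metric(matrix, replacement_cost))
```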

3.3 Scenario-based earthquake risk assessment with spatially distributed ground motion fields

An earthquake scenario is selected for the construction of a seismic rupture and the simulation of spatially distributed ground motion from suitable ground motion prediction equation(s) (GMPE). At least 1000 ground motion simulations must be computed for the considered earthquake rupture scenario to address its aleatory uncertainty (Silva 2016). Each realisation generates a spatially and inter-period cross-correlated GMF that is estimated based upon the GMPE-based intra-event variance. The actual selection of the cross-correlation model (among the currently available ones) is naturally also subject to epistemic uncertainties, and its study is beyond the scope of this work. It is important to generate spatially cross-correlated ground motion fields for the same intensity measures that are required by the fragility functions, and the same fields are used across all the logic-tree levels explained in Sect. 3.1.3. To complement the vulnerability analysis, a consequence model that includes the total replacement cost for each building class and its loss ratios for every damage state must be selected. The investigation of the epistemic uncertainties associated with these choices is not within the scope of this work.
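The difference between uncorrelated and spatially correlated fields can be illustrated with a minimal sketch for a single intensity measure: \(\ln IM = \ln IM_{median} + \eta + \varepsilon\), with a between-event term \(\eta\) shared by all sites and within-event residuals \(\varepsilon\) that are either independent or correlated through a Cholesky factor. The exponential correlation function \(\rho(h) = \exp(-3h/b)\), the range \(b\), the standard deviations, the medians, and the site coordinates are all illustrative assumptions; the sketch omits the inter-period cross-correlation required when fragility functions use several spectral periods.

```python
import math
import random


def cholesky(a):
    """Lower-triangular L with L L^T = a, for a small symmetric positive-definite matrix."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][m] * low[j][m] for m in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low


def simulate_gmfs(sites, ln_median, tau, phi, range_km, n_sim, seed=0, correlated=True):
    """Ground motion fields for one intensity measure: ln IM = ln_median + eta + eps.

    sites: (x, y) coordinates in km; ln_median: GMPE median of ln IM per site;
    tau / phi: between-event / within-event standard deviations (ln units).
    """
    rng = random.Random(seed)
    n = len(sites)
    # Exponential spatial correlation of the within-event residuals (assumed model)
    corr = [[math.exp(-3.0 * math.dist(sites[i], sites[j]) / range_km) for j in range(n)]
            for i in range(n)]
    low = cholesky(corr) if correlated else None
    fields = []
    for _ in range(n_sim):
        eta = rng.gauss(0.0, tau)  # shared by all sites in one realisation
        z = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if correlated:
            eps = [phi * sum(low[i][m] * z[m] for m in range(i + 1)) for i in range(n)]
        else:
            eps = [phi * zi for zi in z]
        fields.append([math.exp(mu + eta + e) for mu, e in zip(ln_median, eps)])
    return fields


def ln_correlation(fields, i, j):
    """Sample correlation of ln IM between sites i and j across realisations."""
    xi = [math.log(f[i]) for f in fields]
    xj = [math.log(f[j]) for f in fields]
    mi, mj = sum(xi) / len(xi), sum(xj) / len(xj)
    cov = sum((a - mi) * (b - mj) for a, b in zip(xi, xj))
    var_i = sum((a - mi) ** 2 for a in xi)
    var_j = sum((b - mj) ** 2 for b in xj)
    return cov / math.sqrt(var_i * var_j)


sites = [(0.0, 0.0), (1.0, 0.0), (30.0, 0.0)]  # hypothetical site coordinates (km)
ln_median = [math.log(0.3), math.log(0.3), math.log(0.15)]  # hypothetical medians (g)
kwargs = dict(tau=0.3, phi=0.6, range_km=20.0, n_sim=2000, seed=3)
corr_fields = simulate_gmfs(sites, ln_median, correlated=True, **kwargs)
unc_fields = simulate_gmfs(sites, ln_median, correlated=False, **kwargs)
print(ln_correlation(corr_fields, 0, 1), ln_correlation(unc_fields, 0, 1))
```

In the uncorrelated case, nearby sites remain correlated only through the shared between-event term, whereas the correlated fields also reproduce the spatial coherence of the within-event residuals; this difference is what drives the distinct loss variability of the two options.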

3.4 Epistemic uncertainty exploration of the building exposure definition in earthquake loss models

Once all of the aforementioned components are gathered, each synthetic portfolio customised within the logic tree (Sect. 3.1.3) is used to investigate the impact of the differential building exposure composition on scenario-based earthquake loss models through Monte Carlo simulations. This allows us to differentially propagate and disaggregate the influence of each of the four components listed in Sect. 3.1.3. Sensitivity analyses are done to compare their respective loss estimates (replacement cost values) with each other and to explore their related individual uncertainties.
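The loss computation for one synthetic portfolio can be sketched as follows. Assumptions of this example: lognormal fragility curves that do not cross (so that damage-state probabilities are non-negative), a consequence model given as loss ratios per damage state times a replacement cost per building, and a portfolio given as (site, class, number of buildings). All class names, fragility parameters, costs, and intensity values are hypothetical. Repeating the computation for every branch of the logic tree yields one loss distribution per branch, whose means and coefficients of variation can then be compared.

```python
import math
import statistics


def lognormal_cdf(x, median, beta):
    """P(X <= x) for a lognormal variable with the given median and log-std beta."""
    return 0.5 * (1.0 + math.erf(math.log(x / median) / (beta * math.sqrt(2.0))))


def expected_loss(im, fragility, loss_ratios, replacement_cost):
    """Mean loss of one building at intensity im.

    fragility: [(median, beta), ...] for increasingly severe damage states,
    assumed not to cross so that exceedance probabilities are non-increasing.
    """
    exceed = [lognormal_cdf(im, m, b) for m, b in fragility]
    exceed.append(0.0)  # nothing exceeds the last damage state
    probs = [exceed[i] - exceed[i + 1] for i in range(len(fragility))]
    return replacement_cost * sum(p * lr for p, lr in zip(probs, loss_ratios))


def portfolio_losses(gmfs, portfolio, models):
    """Total portfolio loss for every ground motion field.

    gmfs: list of fields, each a list of IM values per site
    portfolio: list of (site_index, class_name, n_buildings)
    models: {class_name: (fragility, loss_ratios, replacement_cost)}
    """
    losses = []
    for field in gmfs:
        total = 0.0
        for site, cls, n in portfolio:
            fragility, ratios, cost = models[cls]
            total += n * expected_loss(field[site], fragility, ratios, cost)
        losses.append(total)
    return losses


def loss_summary(losses):
    """Mean loss and coefficient of variation across realisations."""
    mean = statistics.fmean(losses)
    cov = statistics.pstdev(losses) / mean if mean > 0 else float("nan")
    return mean, cov


# Hypothetical vulnerability and consequence models for two classes
models = {
    "RC_wall": ([(0.3, 0.6), (0.7, 0.6), (1.2, 0.6)], [0.05, 0.3, 1.0], 100000.0),
    "URM": ([(0.15, 0.6), (0.35, 0.6), (0.6, 0.6)], [0.05, 0.3, 1.0], 50000.0),
}
portfolio = [(0, "RC_wall", 40.0), (0, "URM", 60.0), (1, "URM", 20.0)]
gmfs = [[0.1, 0.2], [0.3, 0.4], [0.6, 0.8]]  # hypothetical IM values (g) at two sites
losses = portfolio_losses(gmfs, portfolio, models)
print(loss_summary(losses))
```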

4 Application

4.1 Context of the study area: Valparaíso, Chile

The study area comprises the communes of Valparaíso and Viña del Mar (see Fig. 1). Hereafter, for simplicity, both communes will be called ‘Valparaíso’. It is the second-largest Chilean urban centre, and its port, the main container and passenger port in Chile, is vital for the country’s economy. As described by Indirli et al. (2011), Valparaíso has a very heterogeneous building inventory; its historic district was declared a UNESCO World Heritage Site in 2003 in recognition of its diverse urban layout and architecture (Jiménez et al. 2018). Notably, Geiß et al. (2017) investigated the usefulness of training segments from OSM data for exposure data extraction and modelling from satellite imagery for Valparaíso.

The Central Chile area, and in particular Valparaíso, has been hit by powerful historical earthquakes. One of the few for which a description exists is the 1906 earthquake, with an inferred magnitude of Mw 8.0–8.2 (Carvajal et al. 2017), which caused widespread damage (Montessus de Ballore 1914). In 1985, a Mw 8.0 event with an epicentre located just 120 km west of the city destroyed 70,000 houses and damaged an additional 140,000 dwellings, leaving 950,000 persons homeless, and caused losses of about $1.8 billion (Comte et al. 1986). The 2010 Mw 8.8 earthquake caused structural damage to some buildings in Viña del Mar (de la Llera et al. 2017) and affected labour market recovery and the overall economy (Jiménez Martínez et al. 2020). Furthermore, recent seismic activity was noted in the region during the 2017 Mw 6.9 event, which was triggered by a slow slip event and led to an important clustered aftershock sequence (Ruiz et al. 2017). It is notable that the MARVASTO project (Indirli et al. 2011) developed earthquake scenarios to obtain the expected seismic ground motions and the structural performance of three churches in the city. Nonetheless, to the best of the authors’ knowledge, no scenario describing seismic risk for the residential building stock of Valparaíso has been reported in the scientific literature.

4.2 Probabilistic exposure model construction for Valparaíso

4.2.1 The definition of the likelihood function: The intra-scheme compatibility levels

Two earthquake-oriented schemes, namely SARA and HAZUS, have been considered to represent the building portfolio in Valparaíso. Both schemes have been proposed in earlier works for exposure modelling at the level of the third administrative division in Chile, the “commune”. SARA constitutes an effort to harmonize and define all the building types in the South American Andes region (GEM 2014). It is largely based on the World Housing Encyclopaedia reports (WHE 2014), through expert judgment that carefully designed local mapping schemas at the country level (Yepes-Estrada et al. 2017). On the one hand, we can therefore infer 17 SARA building classes for Valparaíso, combining storey ranges where possible (Table 1). On the other hand, according to Aguirre et al. (2018), HAZUS addresses 11 residential classes for another Chilean city with construction practices similar to those of Valparaíso (see Table 2). Short descriptions of the typologies in both schemes are provided in these two tables. Notably, SARA assumes that residential buildings in Chile have at most 19 storeys, does not include steel types, and only considers wall systems for reinforced concrete structures. These expert-based assumptions do not agree with local studies for the city (e.g., Jiménez et al. 2018).

A total of 604 randomly distributed buildings in the urban area of Valparaíso (Fig. 1) were inspected by local structural engineers from the Chilean Research Centre for Integrated Disaster Risk Management (CIGIDEN) to test the actual plausibility of the selected schemes in the study area. To construct the customized likelihood terms that describe the building proportions of the surveyed sample, as presented in Sect. 3.1.1, the building stock is assumed to follow a Multinomial distribution. The attribute values of these buildings were collected in terms of the GEM v.2.0 taxonomy through the RRVS web platform (Haas et al. 2016) and are available in Merino-Peña et al. (2021).

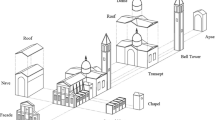

Every building class in the two schemes is disaggregated into attribute types and values of the GEM v.2.0 taxonomy. The corresponding fuzzy compatibility levels between the attribute values and building classes are assigned through expert elicitation (see Sect. 3.1.1, as proposed by Pittore et al. 2018) and are depicted in Fig. 2. A complete description of these taxonomic attributes can be found in the web version of the Glossary for the GEM taxonomy (https://taxonomy.openquake.org/). Descriptions of the HAZUS typologies in terms of the GEM v.2.0 taxonomy are available at https://platform.openquake.org/vulnerability/list as part of the initiative started by Yepes-Estrada et al. (2016). Python code to generate these schemes in JSON format, along with these figures, has been made available in Gomez-Zapata et al. (2021b).

As outlined in Sect. 3.1.1, numerical weights that rank the importance of the considered taxonomic attribute types are required. Their selection is based on expert elicitation and therefore carries epistemic uncertainty. For SARA and HAZUS, four common attribute types are selected and two weighting arrangements, W.A-1 and W.A-2, are defined for them, as reported in Table 3; Fig. 3 shows how selected surveyed buildings are classified under each arrangement.

Façades of selected buildings surveyed in Valparaíso. Their classifications in terms of the SARA and HAZUS schemes are displayed for the two weighting arrangements presented in Table 3. No distinction is made when both arrangements lead to the same class. ©Google Street View, digital images, 2021

Subsequently, the observed attribute values of the surveyed building sample are classified using the two selected weighting arrangements. The two arrangements lead to different distributions of building typologies, as shown in Fig. 4. This is because, in most cases, the ductility of the lateral load-resisting system (LLRS) was not correctly assigned during the surveys, resulting in the misidentification of unreinforced (W.A-1) and reinforced (W.A-2) structures.
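The classification step can be sketched as a weighted average of fuzzy compatibility levels, in the spirit of Sect. 3.1.1 (whose exact equations are not reproduced here). This is a minimal Python sketch under stated assumptions: the class names, compatibility values, weights, and the use of only two attribute types (instead of the four in Table 3) are all hypothetical. It only shows how changing the weights can flip the most likely class of a building whose ductility was recorded ambiguously, and how the resulting class counts form the multinomial evidence.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical fuzzy compatibility levels c(value | class) in [0, 1].
# Only two attribute types are used here; the paper uses four (Table 3).
COMPATIBILITY: Dict[str, Dict[str, Dict[str, float]]] = {
    "MUR":     {"material": {"MUR": 1.0, "MCF": 0.3, "CR": 0.0},
                "ductility": {"DNO": 1.0, "DUC": 0.0}},
    "MCF-DNO": {"material": {"MUR": 0.3, "MCF": 1.0, "CR": 0.2},
                "ductility": {"DNO": 1.0, "DUC": 0.1}},
    "CR-DUC":  {"material": {"MUR": 0.0, "MCF": 0.2, "CR": 1.0},
                "ductility": {"DNO": 0.1, "DUC": 1.0}},
}


def class_scores(observation: Dict[str, str], weights: Dict[str, float]) -> Dict[str, float]:
    """Weighted average of compatibility levels for every class (assumed aggregation)."""
    total_w = sum(weights.values())
    return {
        cls: sum(w * table[attr].get(observation[attr], 0.0)
                 for attr, w in weights.items()) / total_w
        for cls, table in COMPATIBILITY.items()
    }


def most_likely_class(observation: Dict[str, str], weights: Dict[str, float]) -> str:
    """Class with the highest weighted compatibility score."""
    scores = class_scores(observation, weights)
    return max(scores, key=scores.get)


def class_counts(observations: List[Dict[str, str]], weights: Dict[str, float]) -> Counter:
    """Counts per class: the multinomial evidence entering the likelihood term."""
    return Counter(most_likely_class(obs, weights) for obs in observations)


# Hypothetical weighting arrangements (not the values of Table 3).
WA_1 = {"material": 0.8, "ductility": 0.2}
WA_2 = {"material": 0.3, "ductility": 0.7}

# A confined-masonry-like building whose ductility was recorded as "DUC".
building = {"material": "MCF", "ductility": "DUC"}
print(most_likely_class(building, WA_1))  # MCF-DNO
print(most_likely_class(building, WA_2))  # CR-DUC
```

When the ductility attribute carries a large weight, a single mis-recorded value is enough to move a building into a different class, which is the mechanism invoked above to explain the differences in Fig. 4.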

Distributions of the most likely vulnerability classes of the 604 buildings surveyed in Valparaíso. This was achieved by evaluating the compatibility levels between the observed taxonomic building attributes and the typologies within each scheme: a SARA and b HAZUS. For each scheme we consider the two weighting arrangements (W.A-1, W.A-2) presented in Table 3

4.2.2 Prior and posterior distributions

Priors are considered (1) informative if the portfolio composition is derived from expert elicitation (GEM 2014) and (2) uninformative if the portfolio has equal proportions per class. Since informative prior proportions, average night-time occupancy, and replacement costs are only known for the source scheme SARA (Table 1), we follow the method presented in Sect. 3.2 (inter-scheme compatibility matrix) to obtain these quantities for HAZUS (target scheme). This is done by generating all possible combinations of attribute values per scheme (see the horizontal axis of Fig. 2). The two sets of weights reported in Table 3 are used to obtain the inter-scheme compatibility matrices presented in Fig. 5. The scripts and inputs used to produce these two matrices are provided in Gomez-Zapata et al. (2021b). Applying Eq. 9 yields the two sets of informative prior proportions for the HAZUS building classes reported in Table 4.

Inter-scheme compatibility matrices for SARA (source) and HAZUS (target) for the residential building stock of Valparaíso. They are obtained from the weighting arrangements a W.A-1 and b W.A-2 for their common attributes (Table 3)

Table 4 also reports the replacement costs and night-time residents for each of the HAZUS target classes. These quantities are taken from the SARA source class with the largest compatibility in the inter-scheme compatibility matrices. Consequently, W.A-1 and W.A-2 yield identical replacement costs and night-time residents for all HAZUS classes except C3L, RM1L, RM1M, and URML.
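Eq. 9 is not reproduced in this section, so the following Python sketch only illustrates one plausible way to carry proportions and per-class attributes from a source to a target scheme through an inter-scheme compatibility matrix. Assumptions: each row of the matrix is normalized so that the share of a source class is redistributed among target classes in proportion to their compatibility (a hypothetical rule, not necessarily Eq. 9), and each target class inherits the replacement cost and night-time residents of its most compatible source class, as described in the text. All numbers are hypothetical.

```python
import numpy as np

# Hypothetical compatibility matrix C[s, t]: rows are source (SARA-like)
# classes, columns are target (HAZUS-like) classes.
C = np.array([[0.9, 0.1],
              [0.6, 0.4],
              [0.1, 0.8]])
p_source = np.array([0.5, 0.3, 0.2])           # hypothetical prior proportions
cost_source = np.array([300.0, 450.0, 700.0])  # hypothetical replacement cost per building
residents_source = np.array([4.0, 6.0, 30.0])  # hypothetical night-time residents per building


def convert_proportions(p_src: np.ndarray, compat: np.ndarray) -> np.ndarray:
    """Assumed rule: redistribute each source share over target classes
    using the row-normalized compatibility levels."""
    shares = compat / compat.sum(axis=1, keepdims=True)
    return p_src @ shares


def transfer_attribute(values_src: np.ndarray, compat: np.ndarray) -> np.ndarray:
    """Each target class takes the value of its most compatible source class."""
    return values_src[np.argmax(compat, axis=0)]


p_target = convert_proportions(p_source, C)       # sums to 1 by construction
cost_target = transfer_attribute(cost_source, C)  # 300.0 and 700.0
residents_target = transfer_attribute(residents_source, C)
```

With this argmax rule, two weighting arrangements give different target attributes only where the most compatible source class changes, which mirrors why only a few HAZUS classes differ between W.A-1 and W.A-2 in Table 4.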

Once the prior and likelihood terms have been obtained for every building class, the posterior distributions are constructed following Eq. 5; they are shown in Fig. 6. Since the priors were assigned through expert elicitation at the commune level (GEM 2014), the obtained posterior distributions are up-scaled to represent different exposure models with various building compositions in Valparaíso.
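Because the Dirichlet prior is conjugate to the multinomial likelihood, a conjugate update of this kind reduces to adding the observed class counts to the prior hyperparameters. The Python sketch below assumes the factorization \(\alpha_{i} = \alpha_{0} p_{i}\) described in the discussion section, with a hypothetical prior concentration \(\alpha_{0} = 10\) and hypothetical survey counts; it is not the exact form of Eq. 5, which is not reproduced here. It contrasts an informative prior with a flat one.

```python
import numpy as np


def dirichlet_posterior(prior_props, alpha0, counts):
    """Conjugate Dirichlet-multinomial update.

    Assumes prior hyperparameters alpha_i = alpha0 * p_i; returns the posterior
    hyperparameters and the posterior mean proportions.
    """
    alpha_post = alpha0 * np.asarray(prior_props, float) + np.asarray(counts, float)
    return alpha_post, alpha_post / alpha_post.sum()


counts = np.array([20, 50, 30])          # hypothetical surveyed buildings per class
informative = np.array([0.6, 0.3, 0.1])  # hypothetical expert-based proportions
flat = np.full(3, 1.0 / 3.0)             # equal proportions (uninformative prior)

alpha_inf, mean_inf = dirichlet_posterior(informative, 10.0, counts)
alpha_flat, mean_flat = dirichlet_posterior(flat, 10.0, counts)
# alpha_inf is [26, 53, 31], so mean_inf is [26, 53, 31] / 110
```

The two posterior means differ only through the prior term, which is why the choice between flat and informative priors remains visible even with the same survey evidence.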

4.2.3 Synthetic building portfolios for a logic tree construction and spatial allocation

Each of the four types of posterior distributions per scheme is explored using three concentration factors (\(\alpha_{0}\)), namely \(\alpha_{{0_{1} }}\) = 1.0, \(\alpha_{{0_{2} }}\) = 15, and \(\alpha_{{0_{3} }}\) = 50 (see Eq. 4). They describe three degrees of confidence in the assumptions underlying the construction of the posterior distribution: very low, moderate, and very high, respectively. Since we assume conjugacy (Eq. 5), we generate 300 random samples from each of the twelve resulting Dirichlet posterior distributions per scheme. Each of these samples represents a synthetic building portfolio. The samples can also be interpreted as 300 different opinions on the portfolio composition (e.g., a pool of virtual experts) that are, with respect to the average proportions of the posteriors, very divergent, moderately similar, or very similar, respectively. A logic tree with four branches was ultimately constructed, as shown in Fig. 7.
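The sampling of synthetic portfolios and the twelve combinations per scheme can be sketched in Python with NumPy's `Generator.dirichlet`. The sketch assumes that each synthetic portfolio is drawn from a Dirichlet distribution whose hyperparameters are the posterior mean proportions scaled by \(\alpha_{0}\); the posterior proportions used here are hypothetical. Under this assumption the variance of each component is \(p_{i}(1-p_{i})/(\alpha_{0}+1)\), so the spread of the sampled compositions shrinks as \(\alpha_{0}\) grows.

```python
import itertools

import numpy as np

rng = np.random.default_rng(42)

# Combinations per scheme: prior type x weighting arrangement x alpha0.
PRIORS = ("flat", "informative")
WEIGHTINGS = ("W.A-1", "W.A-2")
ALPHA0 = (1.0, 15.0, 50.0)
branches = list(itertools.product(PRIORS, WEIGHTINGS, ALPHA0))  # 12 combinations


def synthetic_portfolios(p_post, alpha0, n_samples=300):
    """Draw portfolio compositions from Dir(alpha0 * p_post) (assumed form)."""
    return rng.dirichlet(alpha0 * np.asarray(p_post, float), size=n_samples)


p_post = np.array([0.24, 0.48, 0.28])  # hypothetical posterior mean proportions
samples = {a0: synthetic_portfolios(p_post, a0) for a0 in ALPHA0}

for a0, s in samples.items():
    # Empirical vs theoretical standard deviation of the second class share.
    theory = np.sqrt(p_post[1] * (1 - p_post[1]) / (a0 + 1))
    print(f"alpha0={a0:>4}: std={s[:, 1].std():.3f} (theory {theory:.3f})")
```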

To obtain the spatial distribution of building counts for each synthetic building portfolio, we carried out a dasymetric disaggregation of the gridded population product GPWv4 (CIESIN 2018), which has a 30 arc-second resolution and provides population projections for 2020. For this process, we used the occupancy (residents per building class) of the SARA (Table 1) and HAZUS (Table 4) schemes.
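The arithmetic behind this step can be illustrated for a single grid cell. Assuming the proportions refer to building counts per class and that each class has a fixed number of night-time residents per building \(r_{k}\), a cell population \(P\) implies \(N = P / \sum_{k} p_{k} r_{k}\) buildings, of which \(N p_{k}\) belong to class \(k\). The Python sketch below uses hypothetical numbers.

```python
import numpy as np


def building_counts(population, proportions, residents_per_building):
    """Buildings per class in one grid cell from population and occupancy.

    Assumes `proportions` are shares of buildings (not of people) per class.
    """
    p = np.asarray(proportions, float)
    r = np.asarray(residents_per_building, float)
    n_total = population / np.dot(p, r)  # population / mean residents per building
    return n_total * p


r = np.array([4.0, 6.0, 30.0])  # hypothetical residents per building
few_tall = building_counts(1000, [0.5, 0.3, 0.2], r)
more_tall = building_counts(1000, [0.4, 0.3, 0.3], r)
# A larger share of the high-occupancy class yields fewer buildings in total.
```

For the same population, portfolios with a larger share of high-occupancy classes imply fewer buildings, which is consistent with the remarks on W.A-2 and ductile classes in this section.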

The expected total building counts for the SARA and HAZUS schemes, assuming either equally composed or expert-based portfolios, are shown in the first column of Fig. 8. Since the number of residents in some HAZUS building classes differs between W.A-1 and W.A-2, the building counts vary accordingly. In the top–down approach, the total building counts in Valparaíso are almost identical regardless of the scheme implemented. The other four columns of Fig. 8 display the variability in the estimated total number of buildings for the synthetic portfolios constructed from the 300 samples. Regardless of the scheme used, the total building counts from synthetic portfolios obtained from posterior distributions with flat priors show a much lower variability than their counterparts with informative priors. Results that assume equally composed portfolios from prior distributions are, of course, not realistic for our study area. These subplots are shown only to emphasize that a careful initial choice of prior is vital; otherwise, the next step (defining \(\alpha_{0}\)) may lead to building proportions that differ greatly from those based on informative (e.g., expert-based) assumptions.

Dependency between the portfolio composition and the spread of the estimated total building counts (from dasymetric disaggregation of the population and occupancy). The subplots in the first column (top–down approach) comprise a single value of expected building counts, either for an equally composed portfolio (i.e., with equal proportions) or as defined by the SARA model (GEM 2014) and the respective weighting arrangements. The distributions in the other subplots correspond to every case of the logic tree depicted in Fig. 7

Moreover, when informative priors and \(\alpha_{0} = 50\) (high degree of confidence) are combined, the resulting variability in the building counts provides a range that contains the single value assigned by the top–down approach. This similarity is more evident for the HAZUS scheme, whereas a larger variability appears for the SARA scheme. This might be due to the comparatively larger sensitivity of SARA to the assessment of individual buildings during the surveys (in terms of the weighting arrangement, see Fig. 4), which also affected the construction of the respective likelihood distributions. Also, for both schemes, we observe that selecting W.A-2 yields a larger number of observations of ductile buildings (which have a larger number of residents, see Tables 1 and 4). Consequently, the variance of the building counts is reduced when these distributions are obtained from W.A-2 in comparison to W.A-1. This reduction in variability does not necessarily mean that the third boxplot in Fig. 8j represents the total building count for the exposure model of Valparaíso better than its counterpart in Fig. 8h. Rather, it points to the underlying assumptions in deriving total building counts from a dasymetric disaggregation that relies fully on top–down approaches (without integrating any evidence). Instead of a fixed total building count (Fig. 8a, f), we obtain a range of total building counts whose variation is consistent with both prior assumptions and observations (third boxplots in Fig. 8c, e, h, and j). These ranges are also consistent with the degree of variation reported by Geiß et al. (2017), who assessed the likely range of building counts for Valparaíso (i.e., 64,803–72,412 building units) by combining remote sensing data products and OpenStreetMap building footprints.

4.3 Scenario-based earthquake risk assessment with spatially distributed ground motion fields

An earthquake scenario with magnitude Mw 8.2, similar to the 1906 Valparaíso event, is used throughout this example. Given the lack of instrumentation at that time, its exact location and other parameters are uncertain. A finite fault model was generated using the OpenQuake Engine (Pagani et al. 2014). The basic parameters used in the simulations are: hypocentral location (longitude = −72.25°; latitude = −33.88°; depth = 28 km), strike = 9°, dip = 18°, and rake = 90°. The spatial distribution of the expected ground motion values is modelled using the GMPE developed for the Chilean interplate subduction zone by Montalva et al. (2017). The site term of this GMPE uses the time-averaged shear-wave velocity in the uppermost 30 m (Vs30). We used topography-based Vs30 values (Allen and Wald 2007) and, where possible, replaced them with values from the seismic microzonation study reported by Mendoza et al. (2018). The final gridded Vs30 values are displayed in Fig. 9.

As a first step, we generate the GMPE-based median ground motion fields (GMF) for PGA, S.A. (0.3 s), and S.A. (1.0 s) for the selected earthquake scenario; they are shown in Fig. 10a–c, respectively. To account for spatial variability, we generate these fields following two approaches: (1) uncorrelated random fields (No Corr) and (2) spatially and inter-period cross-correlated random fields using the cross-correlation model of Markhvida et al. (2018) (Corr). The aleatory uncertainty in the simulated ground motion is addressed by generating 1000 realisations in each case. Single realisations of the cross-correlated GMF for each spectral acceleration are shown for the study area in Fig. 10d–f.
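The difference between the two types of random fields can be sketched for a single IM in Python. This is a simplified stand-in, not the Markhvida et al. (2018) model: it assumes lognormal ground motion with a hypothetical standard deviation, ignores the between-event term and the inter-period cross-correlation, and, for the correlated case, uses an exponential correlation function \(\rho(h) = \exp(-3h/R)\) with a hypothetical range \(R\), imposed through a Cholesky factorization.

```python
import numpy as np


def simulate_gmf(median, coords_km, sigma, n_real, corr_range_km=None, rng=None):
    """Lognormal GMF realisations for one IM at N sites.

    corr_range_km=None gives spatially uncorrelated residuals; otherwise the
    residuals follow an (assumed) exponential correlation exp(-3 h / range).
    """
    rng = np.random.default_rng() if rng is None else rng
    median = np.asarray(median, float)
    z = rng.standard_normal((n_real, median.size))
    if corr_range_km is not None:
        h = np.linalg.norm(coords_km[:, None, :] - coords_km[None, :, :], axis=-1)
        rho = np.exp(-3.0 * h / corr_range_km)
        chol = np.linalg.cholesky(rho + 1e-10 * np.eye(median.size))  # jitter for stability
        z = z @ chol.T  # each row now has covariance rho
    return np.exp(np.log(median)[None, :] + sigma * z)


# Hypothetical 5 x 5 grid of 1 km cells with a uniform median PGA of 0.3 g.
xs, ys = np.meshgrid(np.arange(5.0), np.arange(5.0))
coords = np.column_stack([xs.ravel(), ys.ravel()])
median_pga = np.full(len(coords), 0.3)

rng = np.random.default_rng(0)
gmf_nocorr = simulate_gmf(median_pga, coords, sigma=0.6, n_real=1000, rng=rng)
gmf_corr = simulate_gmf(median_pga, coords, sigma=0.6, n_real=1000,
                        corr_range_km=10.0, rng=rng)
```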

First row: median values of the Mw 8.2 earthquake rupture for three IMs a PGA, b S.A. (0.3 s), and c S.A. (1.0 s) using the Montalva et al. (2017) GMPE. The earthquake hypocentre is depicted by a white square. The rupture plane is displayed by a green rectangle. The study area is shown by a yellow square. Second row: details of the study area with a single realisation of the cross-correlated seismic GMF for their corresponding spectral periods using the Markhvida et al. (2018) model

The selection of the aforementioned GMFs is related to the IMs required by the SARA fragility functions (Villar-Vega et al. 2017). It should be noted that, although HAZUS (FEMA 2012) provides fragility functions at the fundamental period of the structure, we have adopted the common assumption of using PGA as the only IM for the entire set of curves (e.g., Aguirre et al. 2018). The moderate-code (MC) seismic design level is selected for the HAZUS classes for which this category is available (i.e., 8 of the 11 types), and low-code (LC) is assumed for the remaining 3 types (S5H, C3L, and URML). This decision stems from the fact that most of the currently inhabited residential buildings in Valparaíso were constructed between the establishment of two major Chilean seismic codes (NCh433 Of.72, INITN 1972, and NCh433 Of.96, INN 1996). Their development was motivated by the 1960 Mw 9.5 Valdivia earthquake and the 1985 Mw 8.0 Valparaíso earthquake, respectively.

The consequence model for vulnerability assessment is complemented by the selection of loss ratios. For the four damage states considered by these two sets of fragility models, we assume loss ratios of 2%, 10%, 50%, and 100% of the replacement costs (Tables 1, 4). Similar loss ratios have recently been proposed for generic seismic risk applications (e.g., Martins and Silva 2020). A sensitivity analysis of this choice is beyond the scope of this study.
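With lognormal fragility functions, the expected loss of one building class at one site follows from the damage-state probabilities and the loss ratios stated above. The Python sketch assumes four lognormal fragility curves per class with hypothetical medians and dispersions (not the SARA or HAZUS parameters), combined with the loss ratios of 2%, 10%, 50%, and 100% given in the text.

```python
from math import erf, log, sqrt

LOSS_RATIOS = (0.02, 0.10, 0.50, 1.00)  # damage states 1..4, as in the text


def norm_cdf(x: float) -> float:
    """Standard normal CDF."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def expected_loss(im, medians, betas, replacement_cost, n_buildings=1.0):
    """Expected loss given an IM value and lognormal fragility curves.

    medians and betas (hypothetical) define P(DS >= ds | IM) for each of the
    four damage states, ordered from least to most severe.
    """
    p_exceed = [norm_cdf(log(im / m) / b) for m, b in zip(medians, betas)] + [0.0]
    p_state = [p_exceed[i] - p_exceed[i + 1] for i in range(len(LOSS_RATIOS))]
    damage_ratio = sum(p * lr for p, lr in zip(p_state, LOSS_RATIOS))
    return n_buildings * replacement_cost * damage_ratio


MEDIANS = (0.10, 0.25, 0.60, 1.20)  # hypothetical medians (g)
BETAS = (0.6, 0.6, 0.6, 0.6)        # hypothetical lognormal dispersions

loss = expected_loss(0.3, MEDIANS, BETAS, replacement_cost=50_000.0, n_buildings=20)
```

Applying such a function to every grid cell, building class, and GMF realisation, and summing over the portfolio, gives one loss value per realisation.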

4.4 Results: epistemic uncertainty of exposure compositions in seismic risk scenario

The influence of the epistemic uncertainty in the composition of the residential building portfolio of Valparaíso on the loss estimates is assessed through a sensitivity analysis for the selected earthquake scenario (Mw 8.2) and the various exposure compositions considered. Two comparisons are presented:

(1) The direct financial losses are computed only for the portfolios whose composition is given by the posterior distributions (Fig. 6) and whose median building counts are given by the value in red on the boxplots for \(\alpha_{0} = 50\) in Fig. 8. These results are reported in Fig. 11.

(2) The direct financial losses are computed for every complete distribution in Fig. 8, and the results are presented as normalized loss exceedance curves (LEC).

Comparison of the scenario-based direct financial losses (USD) on the residential building stock in Valparaíso for the SARA and HAZUS (moderate code) schemes, using 1000 GMF in each case, either with a spatial cross-correlation model (Corr.) or spatially uncorrelated (No Corr.). Different portfolio compositions are considered: the top–down vision from expert elicitation (first column), equally composed portfolios (second column), and the customized posterior distributions of Fig. 6

Figure 11 also shows the overall variability in the losses that stems from the epistemic uncertainty of whether or not spatially cross-correlated GMF are used. The variability in the resulting losses is very low when 1000 uncorrelated GMF are generated (No Corr). In fact, for such a dense, spatially aggregated building portfolio, the effect of spatially uncorrelated variations in the ground motion eventually averages out, leading to very little dispersion in the loss estimates. Similar evidence on the impact of correlation models and of the size of the building portfolio has been reported by other researchers (e.g., Bazzurro and Luco 2005; Sousa et al. 2018; Silva 2019). Figure 11 also shows that, for a single composition, similar uncertainty ranges are expected whether cross-correlated GMF (for SARA) or only spatially correlated GMF (for HAZUS) are used. A similar feature was noted by Michel et al. (2017), who performed sensitivity analyses on the IM of the fragility functions for various spatially correlated GMF.
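The averaging-out effect can be checked with a toy Monte Carlo experiment in Python. It is only a proxy under stated assumptions: the portfolio quantity is the mean over 400 hypothetical cells of a lognormal intensity factor \(\exp(\sigma z)\) with a hypothetical \(\sigma = 0.6\), and only the two extreme cases of independent and perfectly correlated residuals are compared (real correlation models lie in between).

```python
import numpy as np

rng = np.random.default_rng(7)
n_cells, n_real, sigma = 400, 2000, 0.6

# Independent residuals per cell vs a single residual shared by all cells.
z_uncorr = rng.standard_normal((n_real, n_cells))
z_corr = np.repeat(rng.standard_normal((n_real, 1)), n_cells, axis=1)


def portfolio_cov(z):
    """Coefficient of variation of the portfolio mean of exp(sigma * z)."""
    agg = np.exp(sigma * z).mean(axis=1)
    return agg.std() / agg.mean()


cov_uncorr, cov_corr = portfolio_cov(z_uncorr), portfolio_cov(z_corr)
# cov_corr stays close to sqrt(exp(sigma**2) - 1), about 0.66, while cov_uncorr
# is roughly that value divided by sqrt(n_cells), i.e. about 20 times smaller.
```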

Subsequently, we present loss exceedance curves (LEC) for the earthquake scenario considering the SARA and HAZUS schemes for the complete distributions of building counts presented in Fig. 8. They are illustrated in Figs. 12 and 13, respectively, and are obtained from the 300 synthetic portfolios for each of the 12 posterior distributions presented in Fig. 7. LEC are displayed in blue if cross-correlated GMF were used and in yellow if spatially uncorrelated GMF were used. Three further sets of LEC are included in each subplot:

(1) A pair of curves representing the direct losses obtained from a unique composition according to the joint expert elicitation at the commune level (GEM 2014) and the use of mapping schemas over census data (Yepes-Estrada et al. 2017). They are coloured in purple when cross-correlated GMF are accounted for and in red when spatially uncorrelated GMF are used.

(2) A pair of curves generated for the portfolio composition described by the posterior distributions (Fig. 6), shown as non-continuous white curves.

(3) A pair of curves for a portfolio with equal proportions per class, shown as black curves.

Normalized loss exceedance curves (LEC) for different exposure compositions as depicted in the logic tree (Fig. 7) for the SARA scheme. Curves are displayed in blue if the ground motion cross-correlation model was addressed and in yellow with spatially uncorrelated ground motions. LEC from the respective customized posterior distributions are displayed as non-continuous white curves. The single-composition vision based on informative priors (as defined by GEM) is depicted in purple when spatially cross-correlated GMF were addressed and in red with spatially uncorrelated GMF. Black curves represent the losses of a portfolio whose composition is assumed to have equal proportions

Normalized loss exceedance curves (LEC) for different exposure compositions as depicted in the logic tree (Fig. 7) for the HAZUS scheme. Curves are displayed in blue if the ground motion cross-correlation model was addressed and in yellow with spatially uncorrelated ground motions. LEC from the respective customized posterior distributions are displayed as non-continuous white curves. The single-composition vision based on informative priors (from the inter-scheme conversion matrices in Fig. 5) is depicted in purple when spatially cross-correlated GMF were addressed and in red with spatially uncorrelated GMF. Black curves represent the losses of a portfolio whose class composition is assumed to have equal proportions

The white and black curves are not colour-coded according to whether spatially cross-correlated GMF are included. Nonetheless, when such GMF are used, these two sets of curves always lie within the range of the blue and purple curves (i.e., smoother shapes and a shorter initial plateau). Similarly, when spatially uncorrelated GMF are used, they fall within the range of the yellow and red curves (sharper shapes). In all LEC results, the direct financial losses (repair costs) have been normalized with respect to the maximum loss obtained using GMF with spatially uncorrelated residuals. The metric in these two figures is therefore the “normalized number of losses”. This decision is supported by the work of Vamvatsikos et al. (2010), who argued that, because exposure evolves continuously over time and location, erroneous physical damage predictions can arise if losses are reported as absolute rather than normalized values. This procedure is useful for comparing the uncertainties that arise from the different exposure compositions represented by the synthetic portfolios.
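An empirical LEC and the normalization described above can be sketched in Python as follows. The sketch assumes that each simulated loss value (e.g., one per GMF realisation) is equally likely, so that the probability of exceeding the k-th largest loss is k/n when there are no ties; `reference_max` is a hypothetical stand-in for the maximum loss obtained with spatially uncorrelated residuals.

```python
import numpy as np


def loss_exceedance_curve(losses):
    """Empirical LEC: losses sorted in descending order and their p.o.e.

    With n equally likely samples and no ties, P(L >= k-th largest loss) = k / n.
    """
    x = np.sort(np.asarray(losses, float))[::-1]
    poe = np.arange(1, x.size + 1) / x.size
    return x, poe


def normalized_lec(losses, reference_max):
    """Normalize losses by a reference maximum (e.g., from uncorrelated GMF)."""
    x, poe = loss_exceedance_curve(losses)
    return x / reference_max, poe


x_norm, poe = normalized_lec([10.0, 30.0, 20.0, 40.0], reference_max=50.0)
# normalized losses 0.8, 0.6, 0.4, 0.2 with p.o.e. 0.25, 0.5, 0.75, 1.0
```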

The axes are not identical for the SARA (Fig. 12) and HAZUS (Fig. 13) results: for the latter, the horizontal axis starts at 0.7 to highlight some differences graphically. These curves are the joint result of the epistemic uncertainty in the selection of the basic scheme (set of buildings), its degree of confidence, the IM of the fragility functions, and whether or not a correlation model is used. The role of each of these components in this seismic risk modelling exercise is discussed separately hereafter.

4.4.1 The role of the concentration factor \(\alpha_{0}\)

The plots in the first column of Fig. 12 (SARA) and Fig. 13 (HAZUS) show a greater dispersion (and therefore greater uncertainty in the results) when \(\alpha_{{0_{1} }}\) = 1.0 is used. This represents a lower degree of confidence in the portfolio compositions, which is perceived as an “increased spread” around the mean value. This variability decreases for \(\alpha_{{0_{2} }} = 15\) (intermediate degree of confidence) in the second column and is lowest for \(\alpha_{{0_{3} }} = 50\) (third column), which simulates compositions very similar to the customized posterior distributions (an almost unanimous consensus on the portfolio composition).

4.4.2 The role of the identification of building attributes (evidence) in the likelihood term

The selection of the numerical weights \(w_{j}\), which score and integrate the taxonomic attribute types and, together with the collected data, construct the likelihood term (see Sect. 3.1.1), does not have a large impact on the resulting LEC for the SARA scheme. This could be inferred from Fig. 6, where the weighting arrangement did not affect the construction of the posterior distributions as much as the prior definition did. Nonetheless, these weights strongly affected the construction of the inter-scheme compatibility matrices for HAZUS (Fig. 5). These matrices are used to obtain the informative priors and replacement costs for HAZUS (see Table 4), and hence the weights also affected the respective loss estimates. This effect is evident in the different importance assigned to the ductility level (Table 3), which led to a larger proportion of non-ductile building classes for W.A-1 (Fig. 4). This is also linked to the fact that the fragility functions of the classes driven by W.A-1 (non-ductile: DNO) require lower acceleration values to reach the same damage state than those of the classes driven by W.A-2 (ductile: DUC) (e.g., MCF-DNO-H1-3 vs. MCF-DUC-H1-3 in SARA; and URML vs. RM1L in HAZUS).

4.4.3 The role of the selection of the prior distribution type

LEC obtained from posterior distributions constrained with a flat prior (non-continuous white curves in the first and third rows) always led to lower values than those obtained by assuming equally composed portfolios (respective black curves) or the unique top–down vision based on the GEM mapping schemes (purple and red curves). Posteriors created with flat priors tend to concentrate lower loss values at the lowest probabilities of exceedance (p.o.e.). The opposite is observed when informative priors are used. Since evidence (surveys) was incorporated into the likelihood term for both types of posteriors, these differences stem from the type of prior selected (uninformative vs. informative). Similar features can be observed in Fig. 6, where flat priors strongly affected the resulting posterior distributions. We recall once again that flat priors are introduced herein only for illustrative purposes, and their use is not recommended. The greater dispersion in SARA’s LEC might be due to the joint effect of the prior definition and the variability induced by using the cross-correlation model for the GMF generated for the three spectral periods required by SARA. This aspect is discussed hereafter.

4.4.4 The role of the spatially cross-correlated ground motion residuals

Regardless of the building portfolio composition, the LEC show a greater variability when the cross-correlation model is considered. This is not surprising and is in line with the findings of Crowley et al. (2008) regarding the log-normal distribution of the seismic ground motion induced by the GMPE. Moreover, since the spatial correlation of ground motion IMs decreases rapidly with distance (e.g., Schiappapietra and Douglas 2020), its effect on the loss estimates is maximized when it is applied to a dense and large exposure model such as ours (building portfolio aggregated on a 1 km × 1 km grid), because buildings within a grid cell are treated as if the inter-station distance were zero. Furthermore, because the HAZUS fragility functions are expressed in terms of a single IM (PGA), only the spatially correlated PGA fields are actually used for that scheme. This leads to a comparatively lower variability in the HAZUS LEC, which only becomes perceptible, for greater p.o.e., at significantly larger values of the normalized metric (i.e., 0.7). Interestingly, whether spatially uncorrelated or cross-correlated GMF are used, when a single IM is employed in the vulnerability analyses, as assumed for HAZUS, the bias in the LEC (made up of the stochastic portfolios) decreases as the level of knowledge of the exposure composition increases (with increasing \(\alpha_{0}\)). Conversely, the SARA LEC never reach this point of convergence with respect to the composition of each posterior distribution.

Considering that PGA alone is not a sufficient IM to model the various structural fragility functions that a real heterogeneous building portfolio requires (Luco and Cornell 2007), when more realistic fragility functions are used for the heterogeneous portfolio, the spatial variation in the ground motion places a lower limit on the uncertainty in the loss estimates that cannot be entirely reduced (Bal et al. 2010; Michel et al. 2017). This residual uncertainty in the loss estimates remains even when the composition of the building portfolio is well known (i.e., \(\alpha_{{0_{3} }} = 50\)). We note that in this study, the variability in the GMF arises from the choice of using (or not) a correlation model, and not from employing various GMPE, as is done in more conventional sensitivity analyses. A note on this aspect is presented in the discussion section.

Furthermore, Fig. 14 presents normalized loss curves for each scheme, using as a benchmark the results obtained from the top–down assumption (single exposure composition) without correlation. This benchmark (black dashed line) is used not because it is the most acceptable model, but because it is the most commonly used in standard approaches (see Sect. 2.2.1). Only posterior distributions designed with informative priors and with W.A-2 (for ductile structures in the surveys) are used; they are shown by purple lines. Blue lines represent the stochastic portfolios with either \(\alpha_{{0_{2} }} = 15\) or \(\alpha_{{0_{3} }} = 50\). As expected, the biases decrease continuously as \(\alpha_{0}\) increases. For similar \(\alpha_{0}\), the posterior proportions of SARA lead to a larger variability in the normalized loss than those of HAZUS. This once again shows the impact of the cross-correlated GMF.

Figure 15a displays the sensitivity to the selection of the weighting arrangement (\(w_{j}\)) for both schemes. Its benchmark is the HAZUS loss curve obtained by assuming the portfolio composition given by the posterior proportions for W.A-1 (Fig. 6) and spatially cross-correlated GMF. Consistent with Fig. 11, the largest values are obtained for HAZUS with W.A-2. Figure 15b shows the impact of defining the portfolio composition either exclusively from a top–down vision (Prior) or by including the evidence from surveys (Post.). Its benchmark is the HAZUS loss curve assuming a portfolio composed entirely according to the prior proportions for W.A-1 (Table 4) and spatially cross-correlated GMF. Although the posterior always led to larger losses, these differences are comparatively lower for SARA than for HAZUS. Interestingly, in both panels, the spatially correlated PGA fields used by the HAZUS fragility functions led to some variations around the benchmark value, but these are still considerably lower than those obtained with uncorrelated random residuals.

Normalized losses for the earthquake scenario in Valparaíso for the SARA and HAZUS schemes. The plots show the sensitivity to a the selection of the weighting arrangement (\({w}_{j}\)) and b the portfolio composition, either from a top–down vision (Prior) or including surveyed evidence (Post.). The normalization notation (i.e., || ||) is used to distinguish the benchmarks

5 Discussion and future outlook

In this study, we have considered that, within a Bayesian approach to exposure modelling, the Dirichlet distribution is suitable for representing the composition (i.e., the relative proportions) of spatially distributed residential building portfolios. To integrate empirical (e.g., field-based) observations into the model, we have assumed the likelihood term to follow a multinomial distribution. Owing to the conjugacy of the multinomial and Dirichlet distributions, the prior and posterior are both Dirichlet distributions that differ only by the empirical contribution inferred from the frequencies observed in the field. It is also worth pointing out that these data need not be collected exclusively through surveys (as exemplified herein); they can also come from emerging technologies such as remote sensing and image reconnaissance for exposure modelling (e.g., Liuzzi et al. 2019; Torres et al. 2019). Furthermore, we have proposed to factorize the Dirichlet hyperparameters \(\alpha_{i}\) through the so-called concentration factor, \(\alpha_{0}\), which “weights” and adjusts the prior proportions relative to the observed frequencies. This parameter is useful for describing the degree of confidence in the initial assumptions about the building portfolio when a sufficient number of empirical observations is still lacking. As the number of observations increases, the influence of the concentration factor on the posterior distribution rapidly decreases.
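The decreasing influence of the concentration factor can be made explicit through the posterior mean of the conjugate update. Assuming the factorization \(\alpha_{i} = \alpha_{0} p_{i}\) with \(\sum_{i} p_{i} = 1\), observed class counts \(n_{i}\), and \(N = \sum_{i} n_{i}\), a standard Dirichlet-multinomial result gives:

```latex
\theta \mid \mathbf{n} \sim \mathrm{Dir}\left(\alpha_0 p_1 + n_1, \ldots, \alpha_0 p_K + n_K\right),
\qquad
\mathbb{E}\left[\theta_i \mid \mathbf{n}\right]
  = \frac{\alpha_0 p_i + n_i}{\alpha_0 + N}
  = \underbrace{\frac{\alpha_0}{\alpha_0 + N}}_{\text{prior weight}} \, p_i
  + \underbrace{\frac{N}{\alpha_0 + N}}_{\text{data weight}} \, \frac{n_i}{N}
```

Purely as an arithmetic illustration, if \(\alpha_{0} = 50\) were applied in this way to all \(N = 604\) surveyed buildings, the prior proportions would carry a weight of 50/654 ≈ 0.08, and this weight tends to zero as \(N\) grows.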

Within this probabilistic framework, we have proposed to arrange the components involved in a logic tree (Fig. 7). This has allowed us to explore individually how the uncertainty related to each component propagates through scenario-based seismic risk and is ultimately reflected in the direct loss estimates (replacement cost). For the sake of simplicity in illustrating the proposed approach, we have considered only some of the uncertainties in the parameters involved. Thus, the application presented in this work has several limitations whose treatment is beyond its scope but could be addressed in future research. We list some of them hereafter.

-

The fixed population count projected for 2020 by GPWv4 (CIESIN 2018) was spatially disaggregated to estimate the distribution of total building counts for each stochastic exposure model (Fig. 8). This disaggregation was based on the average number of night-time residents for each building class, which we treated as fixed values. However, treating population projections, as well as the number of inhabitants per building, as random variables that may follow local distributions (e.g., Calderón and Silva 2021) would contribute to more comprehensive probabilistic exposure models.

-

The assessment of the replacement cost for each building class, as well as the selection of cost ratios per damage state (within the economic consequence model used for the vulnerability assessment), are important sources of uncertainty in seismic risk assessment that would benefit from further study (Martins et al. 2016; Michel et al. 2017). In this sense, the relative importance of selecting loss ratios as a function of the building classes, as investigated by Kalakonas et al. (2020), could be further explored.

-

In future studies, a larger set of prior distributions could also be obtained by eliciting knowledge from a pool of domain experts. Such an elicitation could be carried out more rigorously to constrain the prior assumptions for smaller sectors within a large study area.

-

The choice of GMPE(s) influences the resulting cross-correlated ground motion fields for earthquake scenarios, owing to the manner in which the residuals and soil nonlinearity are accounted for in the functional form of the selected attenuation model (Weatherill et al. 2015). Hence, future sensitivity analyses would provide a more complete picture of their differential impact within the proposed method (e.g., Kotha et al. 2018). However, it is worth noting that, for the subduction regime in which Valparaíso is located, only a few suitable GMPEs are available, and they follow similar functional forms (i.e., Abrahamson et al. 2016; Montalva et al. 2017). In fact, Hussain et al. (2020) found negligible differences in the direct loss estimates for the residential building stock of another Chilean city when using these two GMPEs to simulate the GMF associated with a set of subduction earthquake scenarios.

-

The subjective selection of the spatial cross-correlation model used to generate the GMF carries epistemic uncertainties (Weatherill et al. 2015). Although the selected model proposed by Markhvida et al. (2018) has already been implemented for subduction earthquakes in the South American context by Markhvida et al. (2017), more rigorous practices could include a locally constrained spatial correlation model (Candia et al. 2020, a study published after our work was completed). Furthermore, it is important to bear in mind the simplifications introduced by applying generic correlation models without local waveform analyses (Stafford et al. 2019; Schiappapietra and Smerzini 2021). These assumptions might lead to overestimated risk estimates, as observed in Abbasnejadfard et al. (2021).
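The first limitation above can be made concrete with a minimal sketch. It assumes (a simplification for illustration, not necessarily the exact procedure used in the study) that the building count \(N\) of a cell satisfies \(P = N\sum_{k} p_{k} r_{k}\), where \(P\) is the cell population, \(p_{k}\) the proportion of buildings of class \(k\), and \(r_{k}\) the average number of night-time residents per building of that class. The second function treats \(r_{k}\) as lognormal random variables with a hypothetical coefficient of variation, turning a single building count into a distribution.

```python
import numpy as np

def building_counts(population, proportions, residents_per_bldg):
    """Buildings per class from a fixed population and fixed residents per building."""
    p = np.asarray(proportions, dtype=float)
    r = np.asarray(residents_per_bldg, dtype=float)
    total = population / np.dot(p, r)  # N such that N * sum_k p_k r_k = population
    return total * p


def sampled_total_buildings(population, proportions, mean_residents, cov,
                            n_samples, seed=0):
    """Total building counts when residents per building are lognormal (assumed)."""
    rng = np.random.default_rng(seed)
    m = np.asarray(mean_residents, dtype=float)
    sigma = np.sqrt(np.log(1.0 + cov ** 2))
    mu = np.log(m) - 0.5 * sigma ** 2  # keeps the lognormal mean equal to m
    r = rng.lognormal(mean=mu, sigma=sigma, size=(n_samples, m.size))
    return population / (r @ np.asarray(proportions, dtype=float))


props = [0.5, 0.3, 0.2]     # hypothetical class proportions
residents = [4, 10, 30]     # hypothetical mean night-time residents per building
counts = building_counts(12000, props, residents)
totals = sampled_total_buildings(12000, props, residents, cov=0.3, n_samples=5000)
print(counts.round(1), np.percentile(totals, [5, 50, 95]).round(0))
```

The deterministic version reproduces the population exactly; the sampled version yields a range of plausible building totals for the same population, which could then feed the stochastic exposure models.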

To analyse the contribution of the aforementioned components more rigorously, the logic tree proposed in this work could be extended. To improve the validation of the proposed theoretical framework, a larger sample of inspected building attributes would be beneficial. Moreover, post-event damage surveys (following taxonomic data standards) should be carried out in the aftermath of damaging events, together with suitable recordings or observations of the macroseismic or instrumental intensity, in order to analyse the differences between the observed levels of damage and those predicted by theoretical earthquake loss models. In fact, such validation issues have only recently started to be investigated comprehensively (Riga et al. 2021).

We emphasize that the selected test site (Valparaíso, Chile) has been presented only to discuss the role of uncertainty in the exposure model definition on scenario-based earthquake loss models using the methodology presented herein. Likewise, it should be noted that the direct loss estimates obtained from the flat prior, as well as those obtained with spatially uncorrelated GMF, are unrealistic and have been presented only as part of the sensitivity analyses included in the methodology. This study does not argue that one scheme (SARA or HAZUS) is better suited than the other for representing the residential building stock of the city. Although the set of SARA building classes is not completely validated by the surveyed data (Fig. 3) and is more sensitive to the non-identification of certain attributes (Fig. 4), its associated fragility functions (Villar-Vega et al. 2017), unlike those of HAZUS (FEMA 2012), provide some advantages for risk assessment. For instance, they were derived from the analysis of regional records within the tectonic setting of the study area, and they provide a clearer link to GMF with spatially and inter-period cross-correlated IMs, which are required to model the seismic vulnerability of heterogeneous building stocks. However, since these fragility functions were derived from a regional database of records, they lack hazard-consistent ground motion records, as recently discussed by Hoyos and Hernández (2021). Interestingly, these authors also supported the possibility of implementing logic trees at various stages of the derivation of vulnerability functions. This recommendation followed their finding of large differences in the risk metrics for a local (city-scale) building portfolio between the regionally derived SARA fragility functions and their subsequent parametrisation (i.e., accounting for local structural characteristics and a locally consistent record selection).
Similar findings, along with the importance of using sufficient IMs, were also recently reported by Kohrangi et al. (2021). Although these kinds of approaches are beyond the scope of our study, drawing attention to this topic adds another layer to the exploration of epistemic uncertainties in earthquake loss models for local building portfolios. Integrating the aforementioned optimisation procedures for the vulnerability component with probabilistically modelled building exposure is worth exploring in future risk assessment studies.

It is worth noting that, unlike the two studies cited above, our Bayesian exposure modelling approach has been tested here only for scenario-based risk calculations, not for probabilistic risk assessment. For the latter, future studies could explore how metrics such as the Average Annual Loss (AAL, which is particularly relevant to the insurance industry) vary when Bayesian-derived exposure models are used to represent specific portfolios of assets to be insured. Nevertheless, since we were able to propagate some of the uncertainties implicit in the exposure modelling process along the risk chain, the range of variation obtained for the most suitable combination (i.e., cross-correlated GMF together with a high level of knowledge of the portfolio in terms of the SARA scheme and its vulnerability functions) could provide useful input for risk communication strategies. For instance, within the RIESGOS project (https://www.riesgos.de/en/), the assumptions involved in our modular approach have already been discussed with local scientists and end-users. Furthermore, as discussed by Beven et al. (2018), branched exploratory frameworks such as the one proposed here for probabilistic exposure modelling (not necessarily identical to that in Fig. 7) provide a structured summary of the underlying assumptions. This simplifies the communication of the associated uncertainties to local experts, who can then evaluate and compare them with continuously evolving exposure models for seismic risk.

6 Conclusions and recommendations

This study argues that most large-area portfolios within an exposure model are affected by epistemic uncertainties, resulting in a range of possible total building counts and class compositions. Expert-elicited models used in top–down approaches, even when carefully crafted (e.g., Ma et al. 2021), may often provide only a partial perspective of the real composition of the building stock, whereas bottom–up approaches based on field surveys are usually resource-intensive and seldom carried out systematically.

To tackle these limitations, we have presented an exploratory Bayesian framework to study the epistemic uncertainty in seismic loss estimates associated with the probabilistic nature of the building exposure model. This approach allows the seamless integration of desktop-based and expert-elicited approaches (Sect. 2.2.1) with empirical field-based (and remote) observations (Sect. 2.2.2). In the proposed Bayesian formulation, these uncertainties can be reduced by increasing the number of observations and improving the quality of the prior assumptions. The influence of the epistemic uncertainties on the resulting loss estimates has been explored through the systematic simulation of the individual parameters within a logic tree approach, for which we have considered four hierarchical components:

-

(1)

The selection of the risk-oriented building class schemes, each of which requires an associated set of fragility functions.

-

(2)

The selection of the weighting arrangement (\(w_{j}\)), which ranks the relevance of the taxonomic building attributes for vulnerability assessment as well as how readily the surveyor can identify them. These weights are used not only to probabilistically assign the building classes of the observed sample (Fig. 4) and to configure the likelihood terms that update the proportions of the building composition, but also to obtain inter-scheme compatibility matrices (Fig. 5) when two schemes are intended to represent the same building stock.

-

(3)

The selection of prior distributions for the composition of the building portfolio from expert-based knowledge (e.g., conventional top–down desktop studies). These priors are assumed to follow a Dirichlet distribution.

-

(4)

The selection of the concentration factor \(\alpha_{0}\) of the resultant posterior Dirichlet distribution. This parameter represents the degree of confidence in the underlying assumptions when little empirical evidence is available.
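The four hierarchical components listed above can be enumerated as the end branches of a logic tree. The minimal sketch below uses hypothetical options and equal branch weights at every node: the scheme and weighting labels echo those discussed in this work, but the \(\alpha_{0}\) values and all weights are placeholders. Each end branch would define one stochastic exposure model to be propagated through the loss calculation, and its weight is the product of the weights along its path.

```python
import math
from itertools import product

def enumerate_branches(components):
    """Return (labels, weight) for every end branch of a logic tree.

    components maps each node name to {option: weight}; weights at each
    node must sum to 1, so the end-branch weights also sum to 1.
    """
    for name, options in components.items():
        if not math.isclose(sum(options.values()), 1.0):
            raise ValueError(f"weights at node {name!r} must sum to 1")
    names = list(components)
    branches = []
    for combo in product(*(components[n].items() for n in names)):
        labels = {n: option for n, (option, _) in zip(names, combo)}
        weight = math.prod(w for _, w in combo)
        branches.append((labels, weight))
    return branches


def weighted_mean(branches, metric):
    """Weighted mean of a per-branch metric (e.g., a loss computed elsewhere)."""
    return sum(w * metric(labels) for labels, w in branches)


# Hypothetical options and equal weights for the four components
components = {
    "scheme": {"SARA": 0.5, "HAZUS": 0.5},
    "weighting": {"W.A-1": 0.5, "W.A-2": 0.5},
    "prior": {"expert-based": 0.5, "flat": 0.5},
    "alpha0": {10: 0.5, 100: 0.5},
}
branches = enumerate_branches(components)
print(len(branches), sum(w for _, w in branches))
```

Keeping the per-branch labels makes it straightforward to isolate the contribution of a single component, for example by comparing losses across branches that differ only in \(\alpha_{0}\).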

While valuable information can be retrieved from aggregated data sources (e.g., census data) and mapping schemes to construct expert-based priors, these should not be used to represent a given building stock (Sect. 2.2.1) without exploring the underlying uncertainties. Moreover, such top–down approaches, including the expert-based elicitation of prior distributions, should be complemented with empirical observations whenever possible. Within this framework, the expert-based priors will be increasingly superseded by real data in the statistical exposure models. An iterative process that continuously updates the model, rather than relying on static modelling, can thus be envisaged. This opens new avenues of research in exposure modelling for seismic risk, as recently discussed by other authors (Calderón and Silva 2021). Hence, collected evidence (e.g., surveys) supporting these assumptions is needed, as it provides a clearer picture of the uncertainties in loss estimates (Kechidi et al. 2021).

The description of building classes in terms of taxonomic attribute types has proven instrumental in identifying the most likely classes of a selected observed sample (within a predefined scheme). Treating the presence of certain taxonomic attributes within a probabilistic exposure model as part of a reducible epistemic uncertainty framework establishes better links between the observed structural features of buildings and their most likely vulnerability classes. Collecting such data in terms of a faceted taxonomy allows us to assess the degree of compatibility of each surveyed building with respect to a set of risk-oriented building classes (Sect. 3.1.1). Furthermore, this description is also an input for a novel probabilistic inter-scheme conversion (Sect. 3.2). That approach is useful for obtaining exposure descriptors (i.e., number of buildings belonging to a certain class, night-time residents, and replacement costs) under another reference (target) scheme in large-area exposure modelling applications. The conversion can also be carried out in terms of vulnerability descriptors for schemes referring to other hazards (e.g., Gomez-Zapata et al. 2021a), thus extending its application beyond the field of seismic risk.
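The inter-scheme conversion can be sketched as a linear mapping, assuming a row-stochastic compatibility matrix \(C\) whose entry \(C_{ij}\) is read as the probability that a building of source class \(i\) belongs to target class \(j\). The matrix values and counts below are hypothetical, not those of Fig. 5. Expected target counts follow from \(\mathbf{n}^{\mathrm{T}} C\), and one stochastic realization can be drawn by splitting each source-class count multinomially.

```python
import numpy as np

def expected_target_counts(source_counts, compat):
    """Expected building counts in the target scheme: n^T C."""
    compat = np.asarray(compat, dtype=float)
    if not np.allclose(compat.sum(axis=1), 1.0):
        raise ValueError("each row of the compatibility matrix must sum to 1")
    return np.asarray(source_counts, dtype=float) @ compat


def sample_target_counts(source_counts, compat, seed=0):
    """One realization: split each source-class count multinomially."""
    rng = np.random.default_rng(seed)
    compat = np.asarray(compat, dtype=float)
    out = np.zeros(compat.shape[1], dtype=np.int64)
    for n_i, row in zip(source_counts, compat):
        out += rng.multinomial(int(n_i), row)
    return out


source = [100, 50, 30]             # hypothetical counts for three source classes
C = [[0.8, 0.2],                   # hypothetical compatibility matrix
     [0.1, 0.9],                   # (rows: source classes, columns: target classes)
     [0.5, 0.5]]
print(expected_target_counts(source, C))
print(sample_target_counts(source, C))
```

Both functions preserve the total number of buildings; the sampled version additionally conveys the uncertainty introduced by the conversion itself.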