Abstract

Recent work has demonstrated how data-driven AI methods can leverage consumer protection by supporting the automated analysis of legal documents. However, a shortcoming of data-driven approaches is poor explainability. We posit that in this domain useful explanations of classifier outcomes can be provided by resorting to legal rationales. We thus consider several configurations of memory-augmented neural networks where rationales are given a special role in the modeling of context knowledge. Our results show that rationales not only help improve classification accuracy, but can also offer meaningful, natural language explanations of otherwise opaque classifier outcomes.

1 Introduction

Terms of service (ToS), also known as terms and conditions or simply terms, are consumer contracts governing the relation between providers and users. Terms that cause a significant imbalance in the parties’ rights and obligations, to the detriment of the consumer, are deemed unfair by Consumer Law. Despite substantive law in place, and despite the competence of enforcers for abstract control, providers of online services still tend to use unfair and unlawful clauses in these documents (Loos and Luzak 2016; Micklitz et al. 2017).

Consumers often cannot do anything about it. To begin with, they rarely read the contracts they are required to accept (Obar and Oeldorf-Hirsch 2016). Even if they did, a seemingly insurmountable knowledge barrier creates a clear imbalance. Legal knowledge is difficult, if not impossible, for individual consumers to access, and it is equally difficult for them to know what data practices companies implement and, therefore, to pinpoint unfair or unlawful conduct (Lippi et al. 2020). Finally, even if consumers had sufficient knowledge and awareness to take legal action, there would still be a vast difference between the financial resources of the average customer and those of the average provider. To help mitigate such an imbalance, consumer protection organizations have the competence to initiate judicial or administrative proceedings. However, they do not have the resources to fight against each unlawful practice.

It was thus suggested that Artificial Intelligence (AI) and AI-based tools can aid consumer protection organizations and leverage consumer empowerment, for example by supporting the automatic analysis and exposure of unfair ToS clauses (Lippi et al. 2019a).

Among other initiatives, the CLAUDETTE project [footnote 1] undertook the challenge of consumer empowerment via AI, by investigating ways to automate reading and legal assessment of online consumer contracts and privacy policies with natural language processing techniques, so as to evaluate their compliance with EU consumer and data protection law.

A web service developed and maintained by the project automatically analyzes any ToS a user may feed it, and returns an annotated version of the same document, which highlights the potentially unfair clauses it contains (Lippi et al. 2019b). [footnote 2]

While this constitutes a noteworthy first step, it suffers from poor transparency. In other words, however accurate a system like CLAUDETTE may be, it can hardly explain its output. This shortcoming is not specific to this particular system. Indeed, in recent years a rich debate has flourished around the opacity of AI systems that, in terms of accuracy, offer unprecedented results, but at the same time cannot be easily inspected in order to find reasons behind blatant and even possibly dangerous mistakes. This adds to the growing concern that data-driven machine-learning systems may exacerbate existing biases and social inequalities (O’Neil 2016; Lippi et al. 2020). The debate is very lively as it involves thinkers with all sorts of backgrounds and complementary perspectives, governments, and, to some extent, the entire civil society.

There are good reasons for such great interest. Research in social science suggests that providing explanations for recommended actions deeply influences users’ confidence in, and acceptance of, AI-based decisions and recommendations (Cramer et al. 2008). From this viewpoint, consumers, their organizations, and legal experts want to understand why a certain conclusion was reached before accepting an AI response.

In our opinion, a promising approach to associating explanations to the outcomes of neural-network classifiers could be enabled by Memory-Augmented Neural Networks or MANNs (Sukhbaatar et al. 2015). The basic idea behind MANNs is to combine the successful learning strategies developed in the machine learning literature for inference with a memory component that can be read and written to.

Consider for instance the following story:

Joe went to the kitchen. Fred went to the kitchen. Joe picked up the milk. Joe travelled to the office. Joe left the milk. Joe went to the bathroom. Where is the milk now?

Answering the question requires comprehension of the actions “picked up” and “left” as well as of the time elements of the story (Weston et al. 2014). A MANN can answer these questions by storing in dedicated parts of the network, called memories, all previously seen sentences, so as to retrieve the most relevant facts to a given query. The list of memories used to answer a given query, for example “Joe travelled to the office” and “Joe left the milk”, constitutes, in a way, an explanation for the answer “The milk is in the office”.
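The retrieval step described above can be illustrated with a minimal sketch. It is not the architecture used in this paper: the bag-of-words `embed` function, the dot-product `score`, and the `read_memory` helper are hypothetical stand-ins for learned encoders and attention, chosen only to show how a query is compared against every memory slot and how the highest-weighted slots can be read off as an explanation.

```python
import math
from collections import Counter
from typing import List, Tuple

def embed(sentence: str) -> Counter:
    """Toy bag-of-words embedding (an assumption; a real MANN learns its encoder)."""
    cleaned = sentence.lower().replace(".", "").replace("?", "")
    return Counter(cleaned.split())

def score(query: Counter, memory: Counter) -> float:
    """Dot product between two sparse word-count vectors."""
    return float(sum(query[w] * memory[w] for w in query))

def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax, turning similarity scores into attention weights."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def read_memory(query: str, memories: List[str], top_k: int = 2) -> List[Tuple[str, float]]:
    """Single-hop read: attend over all memories and return the top_k slots."""
    q = embed(query)
    weights = softmax([score(q, embed(m)) for m in memories])
    ranked = sorted(zip(memories, weights), key=lambda pair: pair[1], reverse=True)
    return ranked[:top_k]

story = [
    "Joe went to the kitchen.",
    "Fred went to the kitchen.",
    "Joe picked up the milk.",
    "Joe travelled to the office.",
    "Joe left the milk.",
    "Joe went to the bathroom.",
]
question = "Where is the milk now?"
top = read_memory(question, story)
for sentence, weight in top:
    print(f"{weight:.3f}  {sentence}")
```

With word overlap as the only signal, a single read surfaces the two sentences that mention the milk. Linking them to “Joe travelled to the office” would require learned representations or a further reasoning hop, which this toy sketch deliberately leaves out.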

We believe that these tasks present similarities with the problem we are tackling, of providing an explanation to why a given clause has been labeled as potentially unfair.

In particular, our hypothesis is that useful explanations may be given in terms of rationales, i.e. ad-hoc justifications provided by legal experts motivating their conclusion to consider a given clause as unfair. Accordingly, if we train a MANN classifier to identify unfair clauses by using as facts the rationales behind unfairness labels, then a possible explanation of an unfairness prediction could be constructed based on the list of memories, i.e., the rationales, used by the MANN.

Such explanations could be especially useful to legal experts and consumers because, rather than aiming to explain an underlying logical model or uncover the role of particular neural network connections, they would be more in line with a dialectical and communicative viewpoint, as advocated by Miller (2019).

Consider for example a unilateral termination clause, giving the provider the right to suspend or terminate the service and/or the contract. In general, this provision could be unfair because, from the consumer’s perspective, it could undermine the whole purpose of entering into the contract, and it may skew the balance of power between the parties involved. Indeed, the detection of a “unilateral termination” clause “with 98.8 percent confidence” could be a useful piece of information. However, the reason why a specific unilateral termination clause would be potentially unfair may not be self-evident. Instead, a more specific rationale such as “the clause mentions the contract or access may be terminated but does not state the grounds for termination” could provide a more compelling argument in that regard. It would explain why a clause has been labeled as unfair, and would go in the direction of causal explanations, which are arguably more effective, in this context, than “opaque” confidence measures.

This paper describes our approach to exposing unfairness by providing rationales using a MANN trained on a large corpus of online ToS. Since MANNs enable us to accommodate unstructured knowledge and easily embed raw text, we envision arbitrary knowledge integration as a middle ground between traditional structured information injection and natural language comprehension tasks. Thus, we find this type of memory-augmented architecture quite suitable for our purposes.

The system we built relies on an extensive study made on all the possible rationales associated with 5 major categories of unfair clauses, which we explicitly stated in the form of self-contained English sentences. This exercise served two purposes. The first one was to build a knowledge base that could help the laymen understand the possible motivations behind unfairness in the general case, and hopefully, to a broader extent, also guide service providers in defining fair terms of services. The other purpose was to be able to train MANN classifiers in detecting unfair clauses by encoding legal rationales in the memories.

The knowledge base of rationales constituted the basis for creating a corpus of 100 annotated ToS, which we used to train different MANN architecture configurations. We evaluated their performance with respect to relevant baselines, including support vector machine classifiers, convolutional neural networks and long short-term memory networks. We also ran an initial qualitative evaluation with domain experts in order to understand the explanatory efficacy of rationales in this context.

The novel corpus, together with all the code needed to reproduce our experiments, is made available for research purposes. [footnote 3]

The results on the new corpus are encouraging. The MANN architectures were able to match or outperform the baselines on all categories of unfair clauses, in some cases by a significant margin. Moreover, unlike all other baselines, the MANN could provide meaningful references to the relevant rationales, especially if during training the MANN is fed with the information of which rationales are related to which clause, a technique known as strong supervision. These results suggest that MANNs are a promising way to address the problem of explaining unfairness in consumer contracts and pave the way to their extensive use in other areas of automated legal text analytics.
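To make the idea of strong supervision more concrete, the following sketch shows one possible way of turning the clause-to-rationale links into an auxiliary training signal. It is an illustrative assumption, not necessarily the formulation adopted in this work: the function name `strong_supervision_penalty`, the pairwise hinge form, and the `margin` value are all hypothetical. The penalty is zero when every rationale linked to the clause receives an attention weight that exceeds that of every unrelated rationale by at least the margin.

```python
from typing import List, Set

def strong_supervision_penalty(
    attention: List[float],
    target_idx: Set[int],
    margin: float = 0.5,
) -> float:
    """Average hinge penalty over (linked, unlinked) memory pairs.

    attention  : one weight per memory slot (i.e., per rationale)
    target_idx : indices of the rationales annotated for this clause
    margin     : hypothetical hyper-parameter, not taken from the paper
    """
    targets = [i for i in range(len(attention)) if i in target_idx]
    others = [i for i in range(len(attention)) if i not in target_idx]
    if not targets or not others:
        return 0.0  # nothing to contrast
    total = 0.0
    for t in targets:
        for o in others:
            # Penalize an unlinked rationale that comes within `margin` of a linked one
            total += max(0.0, margin - attention[t] + attention[o])
    return total / (len(targets) * len(others))

# Attention focused on the annotated rationale: no penalty
good = strong_supervision_penalty([0.9, 0.1, 0.1], {0})
# Attention focused on an unrelated rationale: positive penalty
bad = strong_supervision_penalty([0.2, 0.7, 0.1], {0})
print(good, bad)
```

In such a setup the penalty would typically be added to the classification loss, so that the network is rewarded both for predicting the right label and for attending to the rationales that experts associated with the clause.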

A pilot study on the use of MANN for detecting and explaining unfair clauses in consumer contracts was recently presented by Lagioia et al. (2019), and it gave promising results. Compared to it, the present study relies on a significantly extended dataset, with several unfairness categories and related rationales. Additionally, we evaluate multiple MANN configurations, limited to single-hop reasoning regarding task-related assumptions, and explore the benefits of strong supervision (see Sect. 4), from both the classification performance and the model explainability perspectives.

The rest of this paper is organized as follows. In Sect. 2 we briefly discuss other machine-learning approaches in the consumer law domain, and the state of the art of machine-learning techniques used to address related problems. In Sect. 3 we describe our corpus and the rationales used to annotate it. In Sect. 4 we introduce the MANN architectures used in our study and the experimental methodology we adopted. Results are discussed in Sect. 5. Section 6 concludes.

2 Background

Ashley (2017) discusses a recent trend, in the legal domain, of using machine learning methods for the analysis and classification of legal documents. Popular solutions widely adopt traditional data-driven methods following standard supervised learning, such as support vector machines (SVM) (Biagioli et al. 2005; Lippi et al. 2019b; Moens et al. 2007). While such approaches mainly consider detection tasks, such as argument detection (Lippi et al. 2015), cited facts and principles in legal judgements (Shulayeva et al. 2017), and prediction problems like forecasting judicial decisions (Aletras et al. 2016), work on consumer law is still limited, and it is mainly about consumer contracts and privacy policies (Fabian et al. 2017; Harkous et al. 2018; Sadeh et al. 2013; Braun 2018; Lippi et al. 2019b). In particular, Lippi et al. (2019b) describe an SVM classifier trained on a set of consumer contracts annotated by domain experts for clause unfairness detection. However, in all these works legal knowledge is mostly restricted to labeling information, and thus does not point to any kind of external, accessible resource, such as legal rationales.

A topic of growing interest, not only in legal applications but in data-driven AI in general, is explainability.

Social scientists address explanation as a communication problem (Miller 2019), concentrating on whom the explanation is provided for and thus on the interaction between explainer and explainee, as well as on the ‘rules’ that govern such an interaction. Many believe that explainability (or explicability) should be an inspiring principle for the development of AI, along with beneficence, non-maleficence, autonomy and justice (Selbst and Barocas 2018; Floridi et al. 2018; Jobin et al. 2019).

The communicative and dialectical dimensions of explanation are particularly relevant, even crucial, to legal experts, consumers and their organizations. In that regard, it has been argued (Miller 2019; Wachter and Mittelstadt 2019) that the following approaches are needed: (i) contrastive explanation; (ii) selective explanation; (iii) causal explanation; and (iv) social explanation. While contrastive explanation is used to specify what input values determined the adoption of a certain decision (e.g., the unfairness of a clause under a certain category) rather than possible alternatives (e.g., the fairness of that specific clause), selective explanation is based on those factors that are most relevant according to human judgments. Indeed, causal chains are often too large to comprehend, especially for those who lack the specific domain competence, such as lay consumers. Causal explanation focuses on causes, rather than on merely statistical correlations. If we consider consumers, NGOs and legal experts as addressees, referring to probabilities and statistical generalizations is not as effective and meaningful as referring to causes. Finally, the explanation has a social nature. It is useful to adopt a conversational approach in which information is tailored to the recipient’s beliefs and way of understanding.

Computer scientists have focused on the technical possibility of providing understandable models of opaque AI systems (and, in particular, of deep neural networks), i.e., models of the functioning of such systems that can be mastered by human experts (Doran et al. 2017; Guidotti et al. 2018).

In particular, from a computer science perspective the explanation needs to include three components. The first one is a model explanation, i.e., an interpretable and transparent model, capturing the whole logic of the obscure system. The second is a model inspection, i.e., a human-comprehensible representation of the specific properties of an opaque system and its prediction, making it possible to understand how the black box behaves internally depending on the input values, namely its sensitivity to certain attributes (e.g., how a change in the consumer’s age, gender, location, educational level or search history makes a difference in delivering online behavioural advertising), up to and including, for instance, the connections in a neural network. The third one is the outcome explanation, making it possible to understand the reasons for certain decisions, i.e., the causal chains leading to a certain outcome in a particular instance (Guidotti et al. 2018; Arrieta et al. 2020; Biran and Cotton 2017).

Our approach gives special emphasis to the third component, and it consists in explaining the outcome of a classifier by integrating external knowledge expressed in natural language, in the form of rationales.

External knowledge integration in natural language processing has been widely explored in several different tasks, spanning from traditional classification (Wang et al. 2017; Sun et al. 2018) to language representation (Mikolov et al. 2013; Bian et al. 2014; Zhang et al. 2019b; Devlin et al. 2018) and generation (Zhou et al. 2018; Chen et al. 2019; Guan et al. 2019). One of the most common approaches to encode information is by means of knowledge graphs. Specifically, task-specific entities as well as their relations are encoded into nodes and edges in an entity-based graph, respectively. Such structured knowledge aids the detection of non-trivial connections between named entities, highlighting implicit groupings that can be used to achieve improved performance in named entity recognition (Callan and Mitamura 2002; Dekhili et al. 2019; Torisawa et al. 2007; Dadas 2019), sentiment analysis (Cambria et al. 2014; Ma et al. 2018; Bohlouli et al. 2015; Kontopoulos et al. 2013; Schmunk et al. 2013) and text classification (Wang et al. 2017; Zelikovitz and Hirsh 2003; Zhang et al. 2019a; Choi et al. 2017). Notable examples propose to enrich entity information by exploring structured ontologies, such as WordNet (Miller 1995), FreeBase (Bollacker et al. 2008), DBPedia (Auer et al. 2007) and ConceptNet (Speer and Havasi 2013), unstructured text sources like Wikipedia as external knowledge (Torisawa et al. 2007; Dekhili et al. 2019), or other task-specific ontologies, such as biomedical databases (Amith et al. 2017).

Alternatively to knowledge graphs, prior information can be formulated as a set of constraints in the form of first-order logic (FOL) rules. Formal restrictions come as a natural representation of task specific requirements, such as structural conditions for sequential prediction tasks (Hu et al. 2016). Similarly to knowledge graphs, logic rules are also employed for learning rich language representations by incorporating commonsense knowledge (Rocktäschel et al. 2014; Wang et al. 2014; Bowman et al. 2014; Rocktäschel et al. 2015). Lastly, an interesting yet different task, termed knowledge graph embedding, tackles the problem of encoding entire knowledge graphs into continuous vector spaces in order to convey rich external knowledge into machine learning models in an intuitive and compliant way, without losing structural properties. In this context, logic rules are employed to guide the distillation process so as to consider entity-specific relations (Guo et al. 2018).

Differently from the above proposals, we relied on MANNs. These have been extensively employed in complex reasoning tasks, such as reading comprehension (Miller et al. 2016; Hill et al. 2015; Cheng et al. 2016), dialogue systems (Bordes and Weston 2016) and general question answering (Sukhbaatar et al. 2015; Kumar et al. 2016; Bordes et al. 2015, 2016). Their novelty and main contribution is the introduction of an external memory block as support for reasoning. MANNs have also been widely applied to classification tasks, mainly sentiment analysis (Tang et al. 2016) and document tagging (Prakash et al. 2016), as well as graph analysis and path finding (Graves et al. 2016; Zaremba and Sutskever 2015).

To illustrate, consider the challenging activity of question answering, where the input is usually a question concerning a single element of a corresponding text document. In this scenario, a MANN can efficiently tackle the task by encoding the question as the network input and storing the context document within the memory block. By doing so, the neural architecture can easily extract non-sequential information conditioned on the query, and potentially modify or filter the content of the memory so as to ease task resolution. Enhancing the capability of a recurrent neural network by adding the possibility of processing unordered information is an effective solution to the well-known problem of learning long-term dependencies.

3 Data

Our starting point is the dataset produced by Lippi et al. (2019b), consisting of 50 relevant online consumer contracts, i.e., Terms of Service (ToS) of online platforms, analyzed by legal experts and marked in XML. The existing annotations identify eight different categories of unfair clauses. We doubled the size of the dataset, which now includes 100 ToS. At the same time, we narrowed down our focus to five categories. In particular, we selected the five most challenging categories and excluded from this study the remaining three, which were too easy and not particularly interesting, as they were almost always associated with the same rationale. We selected the new contracts among those offered by some of the major players in terms of global relevance, number of users, and time the service was established. [footnote 4]

All the ToS we analyzed are standard terms available on the provider’s website for review by potential and current consumers. Indeed, as will be noted below, many providers assert that, rather than the service provider being obliged to notify users of changes to the ToS and even to the service, consumers are required to review the ToS from time to time, visiting the website on a regular basis to check for any changes. The ToS collected in the dataset were gradually downloaded and analyzed over a period of eighteen months by four legal experts, with some follow-up review for the same services. Potentially unfair clauses were tagged using the guidelines described in Lippi et al. (2019b).

For the purpose of this study, we have conducted an in-depth analysis of the data set and we have created a novel structured corpus of different legal rationales, with regard to the following clauses:

(i) liability exclusions and limitations (ltd);

(ii) the provider’s right to unilaterally remove consumer content from the service, including in-app purchases (cr);

(iii) the provider’s right to unilaterally terminate the contract (ter);

(iv) the provider’s right to unilaterally modify the contract and/or the service (ch); and

(v) arbitration on disputes arising from the contract (a).

Interestingly, out of the 21,063 sentences in the corpus, 2346 sentences (11.1%) were labeled as containing a potentially or clearly unfair clause. We take it as a confirmation of the importance and potential impact of the analysis work we carried out.

The distribution of the different categories across the 100 documents is reported in Table 1. Note the high frequency of some of the chosen categories within the dataset. Arbitration clauses are the least common, and are found in only 43 documents. All other categories appear in at least 83 out of 100 documents. Limitation of liability and unilateral termination together represent more than half of all potentially unfair clauses.

We expected that detecting unfair clauses under these categories would be especially challenging. Not only did the state-of-the-art classifier show lower performance on such clauses in comparison to other categories (Lippi et al. 2019b), but it also turns out that each of these potentially unfair clause categories could be matched with several potentially unfair practices/legal rationales. The resulting one-to-many mapping of clauses to legal rationales will be detailed in the following sections. The link between rationales and clauses can be used to instruct the system so that it can provide an explanation for the unfairness of particular clauses.

3.1 Limitation of liability clauses

Service providers often dedicate a considerable portion of their ToS to disclaiming and limiting liabilities. Clauses falling under this category stipulate that the duty to pay damages is limited or excluded for certain kinds of losses and under certain conditions. Most of the circumstances under which these limitations are declared significantly affect the balance between the parties’ rights and obligations, and are unlikely to pass the Directive’s unfairness test. In particular, clauses excluding liability for broad categories of losses or causes of them were marked as potentially unfair, including those containing blanket phrases like “to the fullest extent permissible by law”. Conversely, clauses meant to reduce, limit, or exclude the liability for physical injuries, intentional harm, or gross negligence were marked as clearly unfair (Lippi et al. 2019b; Micklitz et al. 2017).

The analysis of the dataset enabled us to identify 19 legal rationales for (potentially) unfair limitation of liability, which map different questionable circumstances under which the ToS reduce or exclude liability for losses or injuries. For each rationale we defined a corresponding identifier [ID], as shown in Table 2.

As noted above, each (potentially) unfair ltd clause within the data set may be relevant under more than one legal rationale, and is thus indexed with all the corresponding IDs. As an example, consider the following clause taken from the Box terms of service (retrieved in August 2017) and classified as potentially unfair:

To the extent not prohibited by law, in no event will Box, its affiliates resellers, officers, employees, agents, suppliers or licensors be liable for: any direct, incidental, special, punitive, cover or consequential damages (including, without limitation, damages for lost profits, revenue, goodwill, use or content) however caused, under any theory of liability, including, without limitation, contract, tort, warranty, negligence or otherwise, even if Box has been advised as to the possibility of such damages.

The clause above, to the extent not prohibited by law, limits the provider’s liability by kind of damages, i.e., broad category of losses (e.g. loss of data, economic loss and loss of reputation); by standard of care, since it states that the provider will never be liable even in case of negligence and awareness of the possibility of damages; by causal link (e.g. special, incidental and consequential damages) as well as by any liability theory. As a consequence, the clause has been linked to the following identifiers: extent, anydamage, reputation, dataloss, ecoloss, awareness, liabtheories.

As a further example, consider the following clause taken from the Endomondo terms of service (retrieved in May 2018) and classified as clearly unfair:

Except as otherwise set out in these Terms, and to the maximum extent permitted by applicable law, we are not responsible or liable, either directly or indirectly, for any injuries or damages that are sustained from your physical activities or your use of, or inability to use, any Services or features of the Services, including any Content or activities that you access or learn about through our Services (e.g., a Third-Party Activity such as a yoga class), even if caused in whole or part by the action, inaction or negligence of Under Armour or by the action, inaction or negligence of others.

To the maximum extent permitted by the law, the clause above limits the service provider’s liability by the causal link with broad categories of potential damages (i.e., to encompass direct and/or indirect damages), by cause (i.e., service interruption and/or unavailability, third party actions and content published, stored, and processed within the service), and by kind, in particular for personal injury, resulting from an act or an omission of the supplier. Thus, it has been linked to the following identifiers: extent, anydamage, injury, thirdparty, content, discontinuance.
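The one-to-many link between a clause and its rationales can be pictured as a simple data structure. The sketch below is only an illustration under stated assumptions: the `AnnotatedClause` class, its field names, and the `memory_targets` helper are hypothetical and do not reflect the format of the released corpus, and `LTD_IDS` lists only the identifiers named in the two examples above rather than the full set in Table 2.

```python
from dataclasses import dataclass, field
from typing import List

# Only the ltd identifiers mentioned in the two examples; Table 2 holds the full list.
LTD_IDS = [
    "extent", "anydamage", "reputation", "dataloss", "ecoloss",
    "awareness", "liabtheories", "injury", "thirdparty", "content",
    "discontinuance",
]

@dataclass
class AnnotatedClause:
    """Hypothetical record for one annotated clause."""
    source: str                      # service the ToS belongs to
    category: str                    # e.g. "ltd"
    unfairness: str                  # "potentially_unfair" or "clearly_unfair"
    rationale_ids: List[str] = field(default_factory=list)

def memory_targets(clause: AnnotatedClause, memory_ids: List[str]) -> List[int]:
    """Multi-hot vector marking which memory slots (rationales) apply to the clause."""
    linked = set(clause.rationale_ids)
    return [1 if rid in linked else 0 for rid in memory_ids]

box = AnnotatedClause(
    source="Box",
    category="ltd",
    unfairness="potentially_unfair",
    rationale_ids=["extent", "anydamage", "reputation", "dataloss",
                   "ecoloss", "awareness", "liabtheories"],
)
endomondo = AnnotatedClause(
    source="Endomondo",
    category="ltd",
    unfairness="clearly_unfair",
    rationale_ids=["extent", "anydamage", "injury", "thirdparty",
                   "content", "discontinuance"],
)

print(memory_targets(box, LTD_IDS))
print(memory_targets(endomondo, LTD_IDS))
```

A multi-hot vector of this kind is one natural way to tell a memory-based classifier which rationales an expert associated with a clause, while still allowing several rationales per clause.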

3.2 Content removal

Content removal clauses give the provider a right to modify and/or delete users’ content, including in-app purchases, and sometimes specify the conditions under which the service provider may do so. As in the case of unilateral termination, clauses that indicate conditions for content removal were marked as potentially unfair, whereas clauses stipulating that the service provider may remove content at its full discretion, and/or at any time for any or no reason, and/or without notice or the possibility to retrieve the content, were marked as clearly unfair.

Under this category, we identified 17 legal rationales, shown in Table 3.

Each (potentially) unfair clause falling under the content removal category has been indexed with one or more identifiers of rationales. Consider, for instance, the following examples taken from the terms of service of TikTok (retrieved in October 2018) and Pokemon GO (retrieved in July 2016):

In addition, we have the right - but not the obligation - in our sole discretion to remove, disallow, block or delete any User Content (i) that we consider to violate these Terms, or (ii) in response to complaints from other users or third parties, with or without notice and without any liability to you.

Niantic further reserves the right to remove any User Content from the Services at any time and without notice and for any reason.

The first clause above, previously classified as potentially unfair, has been linked to the following IDs: termviolation, complaint, nonotice, tpright. Conversely, the second clause, previously classified as clearly unfair, has been linked to the IDs nonotice, fulldiscretion.

Under such practices, consumers would have no means to influence what happens to their content.

3.3 Unilateral termination

The unilateral termination clause gives the provider the right to suspend and/or terminate the service and/or the contract, and sometimes details the circumstances under which the provider claims to have a right to do so. From the consumer’s perspective, a situation where the agreement may be dissolved at any time and for any reason could seriously undermine the whole purpose of entering into the contract. These clauses may skew the balance of power between the parties and be considered (potentially) unfair whenever the consumer has a reasonable interest in preserving the contract’s longevity, given the foreseeably invested time and effort in the services, e.g., by importing and storing digital content. This is all the more true if the trader does not provide a reasonably long notice period allowing the consumer to migrate to another service (e.g., withdrawing and transferring all the digital content elsewhere).

Unilateral termination clauses that specify reasons for termination were marked as potentially unfair, whereas clauses stipulating that the service provider may suspend or terminate the service at any time for any or no reasons and/or without notice were marked as clearly unfair. Under this (potentially) unfair clause category, we identified 28 different legal rationales (Table 4).

As examples, consider the following clauses, taken from the DeviantArt (effective date not available) and Academia (retrieved in May 2017) terms of service:

Furthermore, you acknowledge that DeviantArt reserves the right to terminate or suspend accounts that are inactive, in DeviantArt’s sole discretion, for an extended period of time (thus deleting or suspending access to your Content)

Academia.edu reserves the right, at its sole discretion, to discontinue or terminate the Site and Services and to terminate these Terms, at any time and without prior notice.

The first clause above, previously classified as potentially unfair, has been linked to the dormant ID, since it states that the service provider will suspend or terminate apparently dormant accounts, also deleting or suspending access to consumers’ digital content. Conversely, the second clause was previously classified as clearly unfair and has been linked to the IDs no_grounds and no_notice, since the service provider claims the right to unilaterally terminate both the contract and the service without prior notice, and grounds for termination are completely missing.

3.4 Unilateral changes

Under this category, we identified 7 different legal rationales, as reported in Table 5, mapping the different circumstances under which service providers claim their right to unilaterally amend and modify the terms of service and/or the service. Unilateral change clauses were always considered as potentially unfair, since the ECJ has not yet issued a judgment in this regard, though the Annex to the Directive contains several examples supporting such a qualification. Unilateral change clauses are particularly worrisome in cases where the proposed amendments significantly impact the consumers’ rights, thus creating an imbalance between the parties. This is particularly true whenever consumers are not given any opt-out option, no consent to the new terms is requested, or no notification is given to the consumers.

As relevant examples, consider the following clauses taken from the Academia (retrieved on May 2017) and Endomondo (retrieved on January 2016) terms of service:

Academia.edu reserves the right, at its sole discretion, to modify the Site, Services and these Terms, at any time and without prior notice.

With new products, services, and features launching all the time, we need the flexibility to make changes, impose limits, and occasionally suspend or terminate certain offerings.

The first clause above is representative of the largest group of unilateral change clauses, stating that the provider has the right to unilaterally change the contract, the services, the provided goods and/or features, for any reason, at its full discretion, at any time and without notice. Thus, it has been linked to the following IDs: anyreason, nowarning. The second clause, stating that the service provider has the right to make generic unilateral changes to maintain a level of flexibility, has been linked to the ID update.

3.5 Arbitration

The arbitration clause can be considered as a kind of forum selection clause, since it requires or allows the parties to resolve their disputes through an arbitration process before the case can go to court. However, such a clause may or may not specify that arbitration should occur within a specific jurisdiction. While clauses defining arbitration as fully optional have been marked as clearly fair, those stipulating that the arbitration should (1) take place in a state other than the state of the consumer’s residence and/or (2) be based not on law but on the arbiter’s discretion were marked as clearly unfair. In every other case, the arbitration clause was considered as potentially unfair.

Under this category, we identified 8 legal rationales, as reported in Table 6.

As examples, consider the following clauses taken from the Airbnb (retrieved in November 2019) and Grindr (retrieved in July 2018) terms of service:

By accepting these Terms of Service, you agree to be bound by this arbitration clause and class action waiver.

Any Covered Dispute Matter must be asserted individually in binding arbitration administered by the American Arbitration Association (AAA) in accordance with its Consumer Arbitration Rules (including utilizing desk, phone or video conference proceedings where appropriate and permitted to mitigate costs of travel).

The first clause above, previously classified as potentially unfair, has been linked to the consent_tos identifier, since it states that the agreement to the Terms of Service is specifically treated as consent to the arbitration clause. Conversely, the second clause was previously classified as clearly unfair and has been linked to the following IDs: arb_obligatory, extralegal_rules, since it states that all disputes must be resolved through arbitration, and the consumer is mandatorily subject to rules on dispute resolution not covered by law, i.e. the Consumer Arbitration Rules of the American Arbitration Association (AAA).

4 Methodology

From a machine learning perspective, it is usually hard to endow classification models with the ability to produce interpretable results, resembling justifications such as those provided by experts. With deep neural models, interpretability becomes even harder. Taking inspiration from experts’ behaviour, we aim to enrich general text classification by emulating the aforementioned perspective on rationales. Specifically, implicit evaluation is addressed by data-driven learning, whereas explicit comparison with available external knowledge is modelled at architectural level. In particular, we view legal rationales as our knowledge base (KB) and we focus on their linking to given statements that have to be classified, as a way to explicitly address model interpretability.

Differently from common knowledge integration techniques in natural language processing (Hu et al. 2016; Dhingra et al. 2016), justifications given by experts cannot be easily formalized as structured information, such as knowledge graphs or logic rules, unless a high pre-processing effort is carried out. This is mainly due to the presence of abstract concepts, implicit references to several external sources, and common sense motivations. Additionally, such aspects may also be deeply intertwined and, thus, hard to properly isolate. Nonetheless, word-level information, e.g. available in ontologies (Miller 1995), and context-grounded statements, such as lists of unfair examples, can be easily formalized and integrated into the learning phase. Intuitively, the former unstructured knowledge representation, in the form of raw text, is a generalization of the latter structured formalization, achievable via ad-hoc distillation techniques, either manually or, more ambitiously, automatically. Therefore, we focus on integrating external information, i.e., legal rationales, in order both to convey rich contextual reasoning and to provide a simple interface to account for model interpretability.

4.1 Memory-augmented neural networks

As a first building block, our choice fell on MANNs due to their extensive usage in tasks that present similarities with our approach, such as machine reading comprehension and question answering. We could choose among several MANN architectures of various levels of sophistication. However, since our main motivation concerns correct knowledge usage without negatively affecting the overall classification performance, it turned out that the simplest MANN architecture available (Sukhbaatar et al. 2015) met our needs. We may explore alternative, more advanced and typically much more computationally expensive architectures in future developments.

We formulate the task of potentially unfair clause detection as a standard classification problem, enhanced by introducing external knowledge containing possible explanations for the labeled unfairness types.

Specifically, we extend the setup described by Lagioia et al. (2019), in which statements to be classified are explicitly compared with the given knowledge. In particular, following the technical formulation of MANNs, we consider input clauses as a query to the memory, which contains a collection of legal rationales.

When a clause q has to be classified, the system attempts to retrieve from memory M those rationales (possibly more than one) that best match that clause. This match can be computed by exploiting a similarity metric \(s(q,m_i)\) between the input clause and each memory slot \(m_i\). Then, the MANN extracts content from memory M, by computing a weighted combination of all the memory slots, where the weight \(w_i\) of the i-th slot is proportional to the similarity metric: \(c = \sum _{i=1}^{|M|} w_i \cdot m_i\). It is worth noting that, differently from Lagioia et al. (2019), here we compute \(w_i\) by applying a sigmoid function \(\sigma\) to the output of the similarity function: \(w_i = \sigma (s(q,m_i))\). Compared to softmax, a sigmoid activation allows us both to view the external memory as an optional source of information, promoting the idea that rationales may not be required for all clauses, and to enable the activation of multiple memory slots.

Content c is then combined with clause q to build the final representation \({\tilde{q}}\) to be used to classify the clause. In our experimental setting this query update consists of a simple concatenation of the two vectors (Footnote 5).

The MANN finally considers \({\tilde{q}}\) to predict whether the input clause is potentially unfair.
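The memory read described above can be illustrated with a minimal sketch in plain Python. It is not the authors' implementation: the dot-product similarity, the toy vectors and the function names (`memory_read`, `dot`) are assumptions made only for illustration. The sketch shows how sigmoid gating lets several slots be active at once, or none at all.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(u, v):
    # Placeholder similarity s(q, m_i); an assumption for this sketch.
    return sum(a * b for a, b in zip(u, v))


def memory_read(q, memory, similarity=dot):
    """Return (weights, content, q_tilde) for a clause q and memory slots."""
    # w_i = sigmoid(s(q, m_i)): every slot is gated independently,
    # so the weights do not have to sum to one as they would with softmax.
    weights = [sigmoid(similarity(q, m)) for m in memory]
    # c = sum_i w_i * m_i
    dim = len(memory[0])
    content = [sum(w * m[d] for w, m in zip(weights, memory)) for d in range(dim)]
    # Query update by concatenation: q_tilde = [q ; c]
    q_tilde = list(q) + content
    return weights, content, q_tilde


# Toy example: slots 0 and 2 match the clause, slot 1 does not.
q = [1.0, 0.0]
memory = [[4.0, 0.0], [-4.0, 0.0], [4.0, 1.0]]
weights, content, q_tilde = memory_read(q, memory)

# A clause that matches no slot leaves the memory essentially unused.
idle_weights, _, _ = memory_read(q, [[-6.0, 0.0], [-5.0, 0.0]])
```

In the toy example two slots receive weights close to one at the same time, which a softmax over the three slots could not produce.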

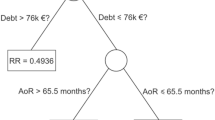

The whole architecture of the system is depicted in Fig. 1a.

Fig. 1 (a) MANN general architecture for unfair clause detection. Given a clause q to classify and a set of legal rationales, i.e., memory M, the model first evaluates the similarity between q and each memory slot \(m_i\). Subsequently, proportionally to their similarity with q, memory components \(m_i\) are aggregated into a single vector representation. Intuitively, if the similarity metric is non-zero only for a single \(m_i\), the aggregated vector is a weighted representation of \(m_i\). Lastly, the aggregated vector is used to update q so as to enrich input information for the final classification step. (b) Weak and strong supervision. The parametric similarity step can be guided by specifying which memory content \(m_i\) should have high similarity with q. This process is formally known as strong supervision (SS) in the MANN literature. In particular, the model is instructed to shift from its current similarity behaviour (black bars) to the one desired by SS (red bars).

4.2 Weak and strong supervision

We consider two different kinds of supervision for the use of memory, usually named weak and strong supervision (Sukhbaatar et al. 2015).

Under weak supervision we feed the MANN with the whole collection of rationales (the KB), without indicating during training which rationales should be used for which clause. Under strong supervision, instead, we provide the MANN with the explanation(s) used for every potentially unfair clause.

Strong supervision of legal rationales encoded in the memory is technically implemented as a max margin loss at extraction level, and it can be informally envisioned as suggesting higher preference for memory elements, i.e., legal rationales, that are labeled by experts as being the true motivation of a given clause’s unfairness.

This added penalty term, to be minimized, gives a high cost to examples (unfair clauses) that are not assigned to the corresponding true explanation(s) in the memory (Footnote 6):

\[\mathcal{L}_{SS} = \frac{1}{N} \sum_{n=1}^{N} \frac{1}{|M^n_+||M^n_-|} \sum_{m_+ \in M^n_+} \sum_{m_- \in M^n_-} \mathcal{L}(m_+, m_-)\]

\[\mathcal{L}(m_+, m_-) = \max \left( 0, \gamma - \sigma (s(q^n, m_+)) + \sigma (s(q^n, m_-)) \right)\]

where N is the number of training examples, \(M^n_+\) is the set of target explanations for a given example \(q^n\), \(M^n_-\) is the set of non-target explanations for \(q^n\), \((m_+, m_-)\) is a target/non-target explanation pair for a given sample n, and \(\gamma\) is the margin hyper-parameter. Intuitively, this loss function pushes the MANN to compute scores for the target explanations that are larger than those for non-target explanations by at least a margin \(\gamma\).

By controlling the intensity of this preference via a sort of regularization coefficient, we can trade off classification performance against model output interpretability (see Fig. 1b).

In fact, in our scenario, being able to properly motivate the proposed model predictions is as crucial as evaluating its performance by standard classification metrics. As already mentioned, the introduction of a KB composed of expert rationales allows us to better interpret the model output by evaluating its linkage to the KB.
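As an illustration of the strong supervision penalty, the following sketch computes a max-margin term for a single clause. It assumes a pairwise hinge on sigmoid-activated scores, \(\max (0, \gamma - \sigma (s(q,m_+)) + \sigma (s(q,m_-)))\), averaged over all target/non-target pairs, and an additive combination with the classification loss. The function names and the additive form are assumptions, not taken from the released code.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def strong_supervision_loss(scores, target_idx, gamma=0.5):
    """Max-margin penalty for one clause.

    scores:     raw similarities s(q, m_i), one per memory slot
    target_idx: indices of the rationales labeled by experts for this clause
    gamma:      margin hyper-parameter
    """
    targets = [i for i in range(len(scores)) if i in target_idx]
    non_targets = [i for i in range(len(scores)) if i not in target_idx]
    if not targets or not non_targets:
        return 0.0  # no pair to compare, e.g. a clause without rationales
    total = 0.0
    for i in targets:
        for j in non_targets:
            # Penalize pairs where the target does not beat the
            # non-target by at least the margin gamma.
            total += max(0.0, gamma - sigmoid(scores[i]) + sigmoid(scores[j]))
    return total / (len(targets) * len(non_targets))


def total_loss(classification_loss, ss_loss, coefficient):
    # Assumed additive combination; `coefficient` controls the trade-off.
    return classification_loss + coefficient * ss_loss


# Slot 0 is the expert rationale in both cases.
good = strong_supervision_loss([5.0, -5.0, -5.0], {0})  # target clearly preferred
bad = strong_supervision_loss([-5.0, 5.0, -5.0], {0})   # wrong slot preferred
```

Setting the coefficient to zero recovers weak supervision in this sketch, since the memory is then trained only through the classification loss.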

5 Results

For each unfairness category introduced in Sect. 3 we employed sets of expert justifications, each of a different size. In particular, we consider each category as a standalone binary classification task, where model evaluation is defined as a standard 10-fold cross-validation.

Since standard MANN architectures are known to be affected by high variance (Weston et al. 2014), for each fold in the cross-validation three networks are trained with different random seed initializations, and the best one is picked according to the performance on the validation set, as customary in many applications (Bengio 2012).

All models are implemented in TensorFlow 2 and are available for reproducibility (Footnote 7).

As for the deep architecture, we employ a simple variant of the traditional end-to-end MANN (Sukhbaatar et al. 2015), with the following modifications, already introduced in Sect. 4:

1. attention operation via sigmoid activation function instead of softmax, in order to enable the selection of multiple (possibly none) memory slots;

2. number of reading iterations over memory limited to just one, which also simplifies training;

3. query update via concatenation instead of embedding sum;

4. clause-justification similarity operation via a two-layer MLP, to further take into account strong supervision as an additional penalty term.

The last two architectural choices follow a preliminary experimental evaluation and make the model more expressive. In particular, we have defined a hyper-parameter calibration step on the validation set via Bayesian optimization (with the hyperopt package). Different query update variants were tested: sum, concatenation, computation via an MLP or an RNN. For the similarity operation, we have tested the following configurations: dot product, scaled dot-product, from 1 up to 3 dense layers with units belonging to the set [64, 128, 256, 512].
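The similarity configurations listed above can be sketched as interchangeable scoring functions. The snippet below is only an illustration: the way clause and rationale vectors are fed to the MLP (concatenation), the ReLU activation, the random initialization and all function names are assumptions, and the weights are untrained.

```python
import math
import random


def dot(q, m):
    return sum(a * b for a, b in zip(q, m))


def scaled_dot(q, m):
    # Dot product divided by the square root of the vector size.
    return dot(q, m) / math.sqrt(len(q))


def make_mlp_similarity(dim, hidden=32, seed=0):
    """Build a two-layer MLP scorer: [q ; m] -> hidden (ReLU) -> scalar."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-0.1, 0.1) for _ in range(2 * dim)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-0.1, 0.1) for _ in range(hidden)]
    b2 = 0.0

    def similarity(q, m):
        x = list(q) + list(m)  # assumed input: concatenated clause and rationale
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(w1, b1)]
        return sum(w * hi for w, hi in zip(w2, h)) + b2

    return similarity


q = [1.0, 1.0, 1.0, 1.0]
m = [2.0, 2.0, 2.0, 2.0]
mlp_similarity = make_mlp_similarity(dim=4)
scores = {
    "dot": dot(q, m),
    "scaled_dot": scaled_dot(q, m),
    "mlp": mlp_similarity(q, m),
}
```

Unlike the two dot-product variants, the MLP scorer has its own parameters, which is what allows a penalty on its outputs to reshape the similarity itself.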

For each test fold in the cross-validation, the remaining data are split between 80% for training and 20% for validation. Training is then regularized by early stopping on validation \(F_1\), with a patience equal to 40 epochs.
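The evaluation protocol (10-fold cross-validation, an 80/20 split of the remaining data, early stopping with patience 40, and the best of three seeds) can be outlined as follows. This is a hedged sketch in plain Python: the helper names are hypothetical, the folds are not stratified, and the validation \(F_1\) values are made-up placeholders standing in for real training runs.

```python
import random


def kfold_indices(n_examples, n_folds=10, seed=0):
    """Shuffle example indices and deal them into n_folds test folds."""
    idx = list(range(n_examples))
    random.Random(seed).shuffle(idx)
    return [idx[f::n_folds] for f in range(n_folds)]


def split_train_val(indices, val_fraction=0.2, seed=0):
    """Split the non-test data into training and validation parts."""
    idx = list(indices)
    random.Random(seed).shuffle(idx)
    n_val = int(round(len(idx) * val_fraction))
    return idx[n_val:], idx[:n_val]


def early_stopping_epoch(val_f1_per_epoch, patience=40):
    """Return the best epoch, stopping after `patience` epochs without gain."""
    best_epoch, best_f1 = -1, float("-inf")
    for epoch, f1 in enumerate(val_f1_per_epoch):
        if f1 > best_f1:
            best_epoch, best_f1 = epoch, f1
        elif epoch - best_epoch >= patience:
            break
    return best_epoch


def pick_best_seed(val_f1_by_seed):
    """Keep the seed whose network scored best on the validation set."""
    return max(val_f1_by_seed, key=val_f1_by_seed.get)


folds = kfold_indices(100)
test_fold = folds[0]
remaining = [i for fold in folds[1:] for i in fold]
train_idx, val_idx = split_train_val(remaining)

# Placeholder validation scores, for illustration only.
best_epoch = early_stopping_epoch([0.1, 0.5, 0.4, 0.3], patience=2)
best_seed = pick_best_seed({0: 0.60, 1: 0.70, 2: 0.65})
```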

As for performance comparison, we consider the current state-of-the-art solution implemented in the CLAUDETTE system (Lippi et al. 2019b), based on support vector machines (SVM), as well as a set of deep neural networks that do not leverage any kind of external information: (i) a simple set of stacked convolutional neural networks (CNNs), on the top of which a 2-layer MLP classifier is added for binary classification; (ii) a set of stacked recurrent neural networks along with a 2-layer MLP for classification.

We set the hyper-parameters of each model, including MANNs, by selecting the architecture with the best performance on the validation set. In particular, for MANNs we chose a word embedding size of 256, an L2-regularization weight of \(10^{-3}\), a dropout equal to 0.7, an MLP with a hidden layer of 32 neurons for the similarity score between clause and memory, and 64 neurons for the final classification layer. For strong supervision, we set the margin \(\gamma = 0.5\) to ensure that uncorrelated legal explanations are irrelevant for classification, while we tuned the penalty coefficient separately for each unfairness category.

We present two different tables for results. In Table 7 we report the standard classification metrics, namely the macro-average \(F_1\) score computed on the cross-validation procedure, evaluated on the five unfairness categories of interest. Table 8 instead reports several statistics about memory usage and related classification performance when considering both weak and strong supervision. In particular, we compute the following metrics:

- memory usage (U): the percentage of input examples (clauses) for which memory is used;

- coverage (C): the percentage of correct memory slot selection over all unfair examples;

- coverage precision (CP): the percentage of correct memory slot selection over examples where memory is used.

These metrics allow us to ascertain whether knowledge integration is effective, and to what extent. Moreover, we report a classification-oriented score concerning prediction accuracy with non-zero memory usage, namely memory precision (MP).

However, more detailed information about memory selection is required so as to exclude ill-behaved scenarios, such as where the model uses all available memory, and to avoid rushed positive conclusions about memory usage when the model mostly prefers non-target justifications. To this end, we also report the CP metric when only the memory slot with the highest score (that is, the most preferred explanation) is considered (CP@1), and when the three slots with the highest scores are considered (CP@3). Finally, we report the average percentage of used memory, that is, the average number of memory elements selected with respect to total memory size (APM).

The results in Table 7 show that even a naive combination of a simple MANN architecture and raw knowledge representation is sufficient to improve performance over traditional knowledge-agnostic models, such as the proposed neural baselines and the current state-of-the-art SVM solution. However, classification performance alone is not an exhaustive criterion for assessing whether one model is better than another. Weak supervision is already sufficient to slightly enhance performance, whereas strong supervision adds a further margin of improvement. More specifically, harder unfairness detection scenarios, such as A and CR, show a large benefit from added knowledge, which compensates for the high class imbalance of these settings. However, a mixture of different factors, such as noisy knowledge representation, a large number of possible unfairness explanations and the increase of available data, poses an important challenge for knowledge integration; hence the performance improvement over the other categories of interest appears to be only moderate. In particular, the LTD and TER categories present the highest amount of explanation variation, which increases the complexity of formulating knowledge that yields a performance boost.
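One possible reading of these memory metrics is sketched below. Several details are assumptions rather than definitions from the text: a slot counts as selected when its weight is at least 0.5, a selection is correct when at least one target rationale is selected, CP and CP@k are restricted to unfair clauses for which memory is used, and APM is averaged over clauses for which memory is used. The example records are invented.

```python
def selected_slots(weights, threshold=0.5):
    # Assumed selection rule: a slot is used when its weight >= threshold.
    return {i for i, w in enumerate(weights) if w >= threshold}


def top_k(weights, k):
    order = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    return set(order[:k])


def ratio(num, den):
    return num / den if den else 0.0


def memory_metrics(examples, threshold=0.5):
    """Each example has: weights, targets (empty if fair), y_true, y_pred."""
    def sel(e):
        return selected_slots(e["weights"], threshold)

    used = [e for e in examples if sel(e)]
    unfair = [e for e in examples if e["targets"]]
    unfair_used = [e for e in used if e["targets"]]

    def hit(e):
        return bool(sel(e) & e["targets"])

    def hit_at(e, k):
        return bool(top_k(e["weights"], k) & sel(e) & e["targets"])

    hits = sum(hit(e) for e in unfair_used)
    return {
        "U": ratio(len(used), len(examples)),
        "C": ratio(hits, len(unfair)),
        "CP": ratio(hits, len(unfair_used)),
        "CP@1": ratio(sum(hit_at(e, 1) for e in unfair_used), len(unfair_used)),
        "CP@3": ratio(sum(hit_at(e, 3) for e in unfair_used), len(unfair_used)),
        "APM": ratio(sum(len(sel(e)) / len(e["weights"]) for e in used), len(used)),
        "MP": ratio(sum(e["y_pred"] == e["y_true"] for e in used), len(used)),
    }


examples = [
    {"weights": [0.9, 0.1, 0.2, 0.1], "targets": {0}, "y_true": 1, "y_pred": 1},
    {"weights": [0.8, 0.1, 0.6, 0.1], "targets": {2}, "y_true": 1, "y_pred": 1},
    {"weights": [0.1, 0.2, 0.1, 0.1], "targets": {1}, "y_true": 1, "y_pred": 0},
    {"weights": [0.7, 0.1, 0.1, 0.1], "targets": set(), "y_true": 0, "y_pred": 1},
    {"weights": [0.1, 0.1, 0.1, 0.1], "targets": set(), "y_true": 0, "y_pred": 0},
]
metrics = memory_metrics(examples)
```

In this toy data the second clause selects its target rationale but ranks another slot first, so it counts towards CP and CP@3 but not CP@1.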

As for the accuracy in providing explanations, which is the main point of adopting a MANN architecture, Table 8 confirms our intuition that strong supervision provides a crucial element in correctly linking potentially unfair clauses to their correct rationales.

Table 8 clearly shows that, when strong supervision is applied, the CP metric is very high, ranging from 0.63 (CR and TER) up to 0.8 and 0.9 (A, CH, LTD). In fact, the top-scoring rationale is very often correct for A and CH (above 0.7 and 0.8, respectively). For all the categories, the correct explanation is among the top-3 scoring in over 50% of the cases (around 0.9 for A and CH). The APM metric also shows that the MANN is quite selective in choosing the memory: in fact, the percentage of used explanations never exceeds 50% of the memory size, on average.

Lastly, to further illustrate the benefits introduced by strong supervision, Table 9 shows two examples of proper and selective memory usage that lead to correct unfairness detection. In particular, not only is the model encouraged to use the target legal rationales for a given example, but it also learns to use such added knowledge attentively. On the other hand, a model without such direct supervision may fail to filter out irrelevant information or to select relevant information for classification.

6 Conclusions

A recent trend in legal informatics is the use of machine learning methods for document analysis and classification. Yet, especially in this domain, before the results of automated classifiers can be trusted, they need to be explainable.

A pilot study on the use of MANN for detecting and explaining unfair clauses in consumer contracts was recently presented by Lagioia et al. (2019), with promising results. We have extended that study by considering methods for better incorporating domain expertise into the explanations (rationales) produced by the MANN. Our results indicate that strong supervision could lead to more content-aware and selective classification of rationales.

Our work also confirms that the problem of incorporating external knowledge into data-driven methodologies is a challenging one, particularly in the domain of legal analysis where context knowledge is significant, broad and noisy. An additional issue is scalability. Being able to rely on several sources of explanation may provide high flexibility, easing cross-domain adaptation. However, incorporating large sets of legal rationales certainly requires approximation methods at memory addressing level (Chandar et al. 2016; Munkhdalai et al. 2019), depending on the given model architecture.

In the future we plan to consider other MANN architectures (Xiong et al. 2016; Miller et al. 2016) and more advanced language models such as BERT (Devlin et al. 2018), and to investigate the applicability of our methodology to other areas of legal analytics, for example legal argument analysis (Lippi et al. 2015) and privacy policies (Contissa et al. 2018). In particular, in the latter scenario, the high structural complexity demands richer task formulations rather than just sentence-level information: intra-document contextual information is crucial to assess the correctness of a legal statement and, thus, will represent one of the major leading research directions in the future.

Notes

The CLAUDETTE web service has been updated with current work and now provides explanations in addition to its predictions. The web service is accessible at: http://claudette.eui.eu/demo/.

The whole corpus consists of the ToS offered by: 9gag.com, Academia.edu, Airbnb, Alibaba, Amazon, Atlas Solutions, Badoo, Betterpoints, Blablacar, Booking.com, Box, Couchsurfing, Crowdtangle, Dailymotion, Deliveroo, Deviantart, Diply, Dropbox, Duolingo, eBay, eDreams, Electronic Arts, Endomondo, EpicGames, Evernote, Expedia, Facebook, Fitbit, Foursquare, Garmin, Goodreads, Google, Grammarly, Grindr, Groupon, Habbo, Happn, Headspace, HeySuccess, Imgur, Instagram, Lastfm, Linden Lab, LinkedIn, Masquerade, Match, Microsoft, MyHeritage, MySpace, Moves-app, Mozilla, Musically, Netflix, Nintendo, Oculus, Onavo, Opera, Paradox, Pinterest, Pokemon GO, Quora, Ryanair, Reddit, Rovio, Shazam, Skype, Skyscanner, Slack, Snapchat, Sporcle, Spotify, Steam, Supercell, SyncMe, Tagged, Terravision, TikTok, Tinder, TripAdvisor, TrueCaller, Tumblr, Twitch, Twitter, Uber, Ubisoft, VeryChic, Viber, Vimeo, Vivino, Wechat, Weebly, WeTransfer, WhatsApp, World of Warcraft, Yahoo, Yelp, YouTube, Zalando, Zara, Zoho and Zynga.

The general MANN architecture would also allow for multiple iterations of memory reading operations. We leave to future research a deep investigation of this possibility.

It is worth noting that each clause in the training set could be associated with multiple justifications. We consider the memory to be correctly used if at least one of the supervision rationales is selected.

References

Aletras N, Tsarapatsanis D, Preoţiuc-Pietro D, Lampos V (2016) Predicting judicial decisions of the European court of human rights: a natural language processing perspective. PeerJ Comput Sci 2:e93

Amith M, Zhang Y, Xu H, Tao C (2017) Knowledge-based approach for named entity recognition in biomedical literature: a use case in biomedical software identification. In: International conference on industrial, engineering and other applications of applied intelligent systems. Springer, pp 386–395

Arrieta AB, Díaz-Rodríguez N, Del Ser J, Bennetot A, Tabik S, Barbado A, García S, Gil-López S, Molina D, Benjamins R et al (2020) Explainable artificial intelligence (xai): concepts, taxonomies, opportunities and challenges toward responsible ai. Inf Fusion 58:82–115

Ashley KD (2017) Artificial intelligence and legal analytics: new tools for law practice in the digital age. Cambridge University Press. https://doi.org/10.1017/9781316761380

Auer S, Bizer C, Kobilarov G, Lehmann J, Cyganiak R, Ives Z (2007) Dbpedia: a nucleus for a web of open data. In: The semantic web. Springer, pp 722–735

Bengio Y (2012) Practical recommendations for gradient-based training of deep architectures. In: Neural networks: tricks of the trade. Springer, pp 437–478

Biagioli C, Francesconi E, Passerini A, Montemagni S, Soria C (2005) Automatic semantics extraction in law documents. In: Proceedings of the 10th international conference on Artificial intelligence and law (ICAIL '05). Association for Computing Machinery, New York, NY, USA, pp 133–140. https://doi.org/10.1145/1165485.1165506

Bian J, Gao B, Liu TY (2014) Knowledge-powered deep learning for word embedding. In: Joint European conference on machine learning and knowledge discovery in databases. Springer, pp 132–148

Biran O, Cotton C (2017) Explanation and justification in machine learning: A survey. In: IJCAI-17 workshop on explainable AI (XAI), vol 8, no 1, pp 8–13

Bohlouli M, Dalter J, Dornhöfer M, Zenkert J, Fathi M (2015) Knowledge discovery from social media using big data-provided sentiment analysis (somabit). J Inf Sci 41(6):779–798

Bollacker K, Evans C, Paritosh P, Sturge T, Taylor J (2008) Freebase: a collaboratively created graph database for structuring human knowledge. In: Proceedings of the 2008 ACM SIGMOD international conference on management of data, pp 1247–1250

Bordes A, Weston J (2016) Learning end-to-end goal-oriented dialog. CoRR. arXiv:1605.07683

Bordes A, Usunier N, Chopra S, Weston J (2015) Large-scale simple question answering with memory networks. ArXiv preprint arXiv:1506.02075

Bordes A, Boureau YL, Weston J (2016) Learning end-to-end goal-oriented dialog. ArXiv preprint arXiv:1605.07683

Bowman SR, Potts C, Manning CD (2014) Recursive neural networks can learn logical semantics. ArXiv preprint arXiv:1406.1827

Braun D (2018) Customer-centered LegalTech: automated analysis of standard form contracts

Callan J, Mitamura T (2002) Knowledge-based extraction of named entities. In: Proceedings of the 11th international conference on information and knowledge management, pp 532–537

Cambria E, Olsher D, Rajagopal D (2014) Senticnet 3: a common and common-sense knowledge base for cognition-driven sentiment analysis. In: 28th AAAI conference on artificial intelligence

Chandar S, Ahn S, Larochelle H, Vincent P, Tesauro G, Bengio Y (2016) Hierarchical memory networks. ArXiv preprint arXiv:1605.07427

Chen J, Chen J, Yu Z (2019) Incorporating structured commonsense knowledge in story completion. Proc AAAI Conf Artif Intell 33:6244–6251

Cheng J, Dong L, Lapata M (2016) Long short-term memory-networks for machine reading. ArXiv preprint arXiv:1601.06733

Choi E, Bahadori MT, Song L, Stewart WF, Sun J (2017) Gram: graph-based attention model for healthcare representation learning. In: Proceedings of the 23rd ACM SIGKDD international conference on knowledge discovery and data mining, pp 787–795

Contissa G, Docter K, Lagioia F, Lippi M, Micklitz HW, Palka P, Sartor G, Torroni P (2018) Automated processing of privacy policies under the EU general data protection regulation. In: Legal knowledge and information systems: JURIX 2018: the 31st annual conference, frontiers in artificial intelligence and applications, vol 313. IOS Press, pp 51–60. https://doi.org/10.3233/978-1-61499-935-5-51

Cramer H, Evers V, Ramlal S, Van Someren M, Rutledge L, Stash N, Aroyo L, Wielinga B (2008) The effects of transparency on trust in and acceptance of a content-based art recommender. User Model User-Adapted Interact 18(5):455

Dadas S (2019) Combining neural and knowledge-based approaches to named entity recognition in polish. In: International conference on artificial intelligence and soft computing. Springer, pp 39–50

Dekhili G, Le NT, Sadat F (2019) Augmenting named entity recognition with commonsense knowledge. In: Proceedings of the 2019 workshop on widening NLP

Devlin J, Chang MW, Lee K, Toutanova K (2018) Bert: pre-training of deep bidirectional transformers for language understanding. ArXiv preprint arXiv:1810.04805

Dhingra B, Liu H, Yang Z, Cohen WW, Salakhutdinov R (2016) Gated-attention readers for text comprehension. ArXiv preprint arXiv:1606.01549

Doran D, Schulz S, Besold TR (2017) What does explainable ai really mean? A new conceptualization of perspectives. ArXiv preprint arXiv:1710.00794

Fabian B, Ermakova T, Lentz T (2017) Large-scale readability analysis of privacy policies. In: Proceedings of the international conference on web intelligence, pp 18–25

Floridi L, Cowls J, Beltrametti M, Chatila R, Chazerand P, Dignum V, Luetge C, Madelin R, Pagallo U, Rossi F et al (2018) Ai4people-an ethical framework for a good AI society: opportunities, risks, principles, and recommendations. Minds Mach 28(4):689–707

Graves A, Wayne G, Reynolds M, Harley T, Danihelka I, Grabska-Barwińska A, Colmenarejo SG, Grefenstette E, Ramalho T et al (2016) Hybrid computing using a neural network with dynamic external memory. Nature 538(7626):471

Guan J, Wang Y, Huang M (2019) Story ending generation with incremental encoding and commonsense knowledge. Proc AAAI Conf Artif Intell 33:6473–6480

Guidotti R, Monreale A, Ruggieri S, Turini F, Giannotti F, Pedreschi D (2018) A survey of methods for explaining black box models. ACM Comput Surv (CSUR) 51(5):1–42

Guo S, Wang Q, Wang L, Wang B, Guo L (2018) Knowledge graph embedding with iterative guidance from soft rules. In: 32nd AAAI conference on artificial intelligence

Harkous H, Fawaz K, Lebret R, Schaub F, Shin KG, Aberer K (2018) Polisis: automated analysis and presentation of privacy policies using deep learning. In: 27th USENIX security symposium (USENIX security 18), pp 531–548

Hill F, Bordes A, Chopra S, Weston J (2015) The Goldilocks principle: reading children’s books with explicit memory representations. ArXiv preprint arXiv:1511.02301

Hu Z, Ma X, Liu Z, Hovy E, Xing E (2016) Harnessing deep neural networks with logic rules. ArXiv preprint arXiv:1603.06318

Jobin A, Ienca M, Vayena E (2019) Artificial intelligence: the global landscape of ethics guidelines. ArXiv preprint arXiv:1906.11668

Kontopoulos E, Berberidis C, Dergiades T, Bassiliades N (2013) Ontology-based sentiment analysis of twitter posts. Expert Syst Appl 40(10):4065–4074

Kumar A, Irsoy O, Ondruska P, Iyyer M, Bradbury J, Gulrajani I, Zhong V, Paulus R, Socher R (2016) Ask me anything: dynamic memory networks for natural language processing. In: International conference on machine learning, pp 1378–1387

Lagioia F, Ruggeri F, Drazewski K, Lippi M, Micklitz HW, Torroni P, Sartor G (2019) Deep learning for detecting and explaining unfairness in consumer contracts. In: Legal knowledge and information systems: JURIX 2019: the 32nd annual conference, frontiers in artificial intelligence and applications, vol 322. IOS Press, pp 43–52

Lippi M, Lagioia F, Contissa G, Sartor G, Torroni P (2015) Claim detection in judgments of the eu court of justice. In: AI approaches to the complexity of legal systems. Springer, pp 513–527

Lippi M, Contissa G, Lagioia F, Micklitz HW, Pałka P, Sartor G, Torroni P (2019a) Consumer protection requires artificial intelligence. Nat Mach Intell 1(4):168–169. https://doi.org/10.1038/s42256-019-0042-3

Lippi M, Pałka P, Contissa G, Lagioia F, Micklitz HW, Sartor G, Torroni P (2019b) CLAUDETTE: an automated detector of potentially unfair clauses in online terms of service. Artif Intell Law 27(2):117–139

Lippi M, Contissa G, Jablonowska A, Lagioia F, Micklitz H, Palka P, Sartor G, Torroni P (2020) The force awakens: artificial intelligence for consumer law. J Artif Intell Res 67:169–190. https://doi.org/10.1613/jair.1.11519

Loos M, Luzak J (2016) Wanted: a bigger stick. On unfair terms in consumer contracts with online service providers. J Consum Policy 39(1):63–90

Ma Y, Peng H, Cambria E (2018) Targeted aspect-based sentiment analysis via embedding commonsense knowledge into an attentive LSTM. In: 32nd AAAI conference on artificial intelligence

Micklitz HW, Pałka P, Panagis Y (2017) The empire strikes back: digital control of unfair terms of online services. J Consum Policy 40(3):367–388

Mikolov T, Chen K, Corrado G, Dean J (2013) Efficient estimation of word representations in vector space. ArXiv preprint arXiv:1301.3781

Miller A, Fisch A, Dodge J, Karimi AH, Bordes A, Weston J (2016) Key-value memory networks for directly reading documents. ArXiv preprint arXiv:1606.03126

Miller GA (1995) Wordnet: a lexical database for English. Commun ACM 38(11):39–41

Miller T (2019) Explanation in artificial intelligence: insights from the social sciences. Artif Intell 267:1–38

Moens MF, Boiy E, Palau RM, Reed C (2007) Automatic detection of arguments in legal texts. In: Proceedings of the 11th international conference on artificial intelligence and law, pp 225–230

Munkhdalai T, Sordoni A, Wang T, Trischler A (2019) Metalearned neural memory. In: Wallach H, Larochelle H, Beygelzimer A, d'Alché-Buc F, Fox E, Garnett R (eds) Advances in neural information processing systems, Curran Associates, Inc., vol 32. https://proceedings.neurips.cc/paper/2019/file/182bd81ea25270b7d1c2fe8353d17fe6-Paper.pdf

Obar JA, Oeldorf-Hirsch A (2016) The biggest lie on the internet: Ignoring the privacy policies and terms of service policies of social networking services. In: TPRC 44: The 44th research conference on communication, information and internet policy

O’Neil C (2016) Weapons of math destruction: how big data increases inequality and threatens democracy. Crown Publishing Group, New York

Prakash A, Zhao S, Hasan SA, Datla VV, Lee K, Qadir A, Liu J, Farri O (2016) Condensed memory networks for clinical diagnostic inferencing. ArXiv preprint arXiv:1612.01848

Rocktäschel T, Bosnjak M, Singh S, Riedel S (2014) Low-dimensional embeddings of logic. In: Proceedings of the ACL 2014 workshop on semantic parsing, pp 45–49

Rocktäschel T, Singh S, Riedel S (2015) Injecting logical background knowledge into embeddings for relation extraction. In: Proceedings of the 2015 conference of the North American chapter of the association for computational linguistics: human language technologies, pp 1119–1129

Sadeh N, Acquisti A, Breaux TD, Cranor LF, McDonald AM, Reidenberg JR, Smith NA, Liu F, Russell NC, Schaub F, et al (2013) The usable privacy policy project: combining crowdsourcing, machine learning and natural language processing to semi-automatically answer those privacy questions users care about. Carnegie Mellon University technical report CMU-ISR-13-119, pp 1–24

Schmunk S, Höpken W, Fuchs M, Lexhagen M (2013) Sentiment analysis: extracting decision-relevant knowledge from UGC. In: Information and communication technologies in tourism 2014. Springer, pp 253–265

Selbst AD, Barocas S (2018) The intuitive appeal of explainable machines. Fordham L Rev 87:1085

Shulayeva O, Siddharthan A, Wyner A (2017) Recognizing cited facts and principles in legal judgements. Artif Intell Law 25(1):107–126

Speer R, Havasi C (2013) ConceptNet 5: a large semantic network for relational knowledge. In: The people’s web meets NLP. Springer, pp 161–176

Sukhbaatar S, Weston J, Fergus R, et al (2015) End-to-end memory networks. In: Advances in neural information processing systems, pp 2440–2448

Sun H, Dhingra B, Zaheer M, Mazaitis K, Salakhutdinov R, Cohen WW (2018) Open domain question answering using early fusion of knowledge bases and text. ArXiv preprint arXiv:1809.00782

Tang D, Qin B, Liu T (2016) Aspect level sentiment classification with deep memory network. ArXiv preprint arXiv:1605.08900

Torisawa K, et al (2007) Exploiting Wikipedia as external knowledge for named entity recognition. In: Proceedings of the 2007 joint conference on empirical methods in natural language processing and computational natural language learning (EMNLP-CoNLL), pp 698–707

Wachter S, Mittelstadt B (2019) A right to reasonable inferences: re-thinking data protection law in the age of big data and AI. Colum Bus L Rev 494

Wang J, Wang Z, Zhang D, Yan J (2017) Combining knowledge with deep convolutional neural networks for short text classification. In: IJCAI, pp 2915–2921

Wang WY, Mazaitis K, Cohen WW (2014) Structure learning via parameter learning. In: Proceedings of the 23rd ACM international conference on conference on information and knowledge management, pp 1199–1208

Weston J, Chopra S, Bordes A (2014) Memory networks. ArXiv preprint arXiv:1410.3916

Xiong C, Merity S, Socher R (2016) Dynamic memory networks for visual and textual question answering. In: International conference on machine learning, pp 2397–2406

Zaremba W, Sutskever I (2015) Reinforcement learning neural turing machines-revised. ArXiv preprint arXiv:1505.00521

Zelikovitz S, Hirsh H (2003) Integrating background knowledge into text classification. In: IJCAI, pp 1448–1449

Zhang J, Lertvittayakumjorn P, Guo Y (2019a) Integrating semantic knowledge to tackle zero-shot text classification. ArXiv preprint arXiv:1903.12626

Zhang Z, Han X, Liu Z, Jiang X, Sun M, Liu Q (2019b) ERNIE: enhanced language representation with informative entities. ArXiv preprint arXiv:1905.07129

Zhou H, Young T, Huang M, Zhao H, Xu J, Zhu X (2018) Commonsense knowledge aware conversation generation with graph attention. In: IJCAI, pp 4623–4629

Funding

Open access funding provided by Alma Mater Studiorum - Università di Bologna within the CRUI-CARE Agreement.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Francesca Lagioia has been supported by the European Research Council (ERC) Project “CompuLaw” (Grant Agreement No. 833647) under the European Union’s Horizon 2020 research and innovation programme. Paolo Torroni has been partially supported by the H2020 Project AI4EU (Grant Agreement No. 825619). Marco Lippi would like to thank NVIDIA Corporation for the donation of the Titan X Pascal GPU used for this research.

Supplementary experimental details

To further assess the contribution of direct supervision on rationales, i.e., strong supervision, Figs. 2, 3, 4, 5 and 6 compare the total rationale usage in the ground truth with that of the MANN models. Categories are sorted in descending order of their ground-truth values. In some cases (see Figs. 2 and 3), strong supervision drives the MANN models significantly closer to the ground-truth usage without degrading performance. In other cases, for instance CR and LTD (Figs. 4 and 5), it does not appear to have any significant effect. The finer-grained results in Table 8 confirm these observations. These empirical results could be due to the way rationales are formulated and distributed across unfair examples and/or to the strength of the strong supervision regularization during training. Regarding the latter, Fig. 6 shows an additional example in which strong supervision affects the training phase excessively, causing memory over-usage. We plan to investigate this issue further in future extensions.
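As a rough illustration of the kind of comparison described above, the following minimal sketch counts how often each rationale is used in the ground truth and by a model, and sorts the result in descending order of ground-truth usage. It is not the code used for the reported figures: the function names, the rationale identifiers (`r1`, `r2`, `r3`) and the toy data are all hypothetical, and it assumes that a model's memory selections have already been reduced to a list of rationale identifiers per example.

```python
from collections import Counter


def rationale_usage(selections):
    """Count in how many examples each rationale id appears (hypothetical helper)."""
    counts = Counter()
    for selected in selections:
        # Use a set so a rationale is counted at most once per example.
        counts.update(set(selected))
    return counts


def compare_usage(ground_truth, predicted):
    """Return (rationale, ground-truth count, model count) rows,
    sorted by descending ground-truth usage, ties broken by id."""
    gt = rationale_usage(ground_truth)
    pred = rationale_usage(predicted)
    ids = sorted(set(gt) | set(pred), key=lambda r: (-gt[r], r))
    return [(r, gt[r], pred[r]) for r in ids]


# Toy data, invented for illustration only: one list of rationale ids per example.
ground_truth = [["r1", "r2"], ["r1"], ["r3"], ["r1", "r3"]]
predicted = [["r1"], ["r1", "r2"], [], ["r1", "r2", "r3"]]

table = compare_usage(ground_truth, predicted)
for rationale, gt_count, model_count in table:
    print(f"{rationale}: ground truth={gt_count}, model={model_count}")
```

In this toy setting, a model count well above the ground-truth count for many rationales would correspond to the memory over-usage mentioned above, while counts close to the ground truth would indicate that supervision is steering memory selection as intended.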

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ruggeri, F., Lagioia, F., Lippi, M. et al. Detecting and explaining unfairness in consumer contracts through memory networks. Artif Intell Law 30, 59–92 (2022). https://doi.org/10.1007/s10506-021-09288-2

DOI: https://doi.org/10.1007/s10506-021-09288-2