Abstract

We provide a detailed importance sampling analysis for variance reduction in stochastic volatility models. The optimal change of measure is obtained using a variety of results from large and moderate deviations: small-time, large-time, small-noise. Specialising the results to the Heston model, we derive many closed-form solutions, making the whole approach easy to implement. We support our theoretical results with a detailed numerical analysis of the variance reduction gains.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction and general overview

1.1 Introduction

Monte Carlo simulation is the standard (if not the only) technique for most numerical problems in stochastic modelling. It has a long history and has been successfully applied in many fields, such as biology (Manly, 2018), statistical Physics (Binder et al., 2012), Finance (Glasserman, 2004) among others. The default order of magnitude for the variance of the estimator is \(\mathcal {O}(N^{-1/2})\) with N the number of sample paths. It has long been recognised though that several tricks achieve lower variance with equivalent (hopefully zero) bias; among those antithetic variables and importance sampling have become ubiquitous. We focus on the latter, for which large and moderate deviations (LDP and MDP) provide closed-form formulae, making their applications pain-free and without additional computer costs.

The first attempt to reduce the variance of a Monte Carlo estimator based on asymptotics originated, rather heuristically, in Siegmund (1976). This was then made rigorous by Glasserman and Wang (1997), who also highlighted pitfalls of the method and by Dupuis and Wang (2004), who provided clear explanations on the trade-off between asymptotic approximations and the restrictions they entail on the induced change of measure. Guasoni and Robertson (2007) put this into practice for out-the-money path-dependent options in the Black–Scholes model, and Robertson (2010) developed a thorough analysis for the Heston model using sample path large deviations. This is our starting point, and the goal of our current enterprise is to analyse different asymptotic regimes (small-time, large-time, small-noise), both in the large deviations and in the moderate deviations regimes, in the Heston model and to show how these yield closed-form formulae for an optimal change of measure for importance sampling purposes.

We propose, in particular, a specific form of adaptive drift, allowing for fast computation and increase in variance reduction. For geometric Asian Call options in the Heston model, MDP-based estimators with deterministic changes of drift turn out to be no better than those computed with deterministic volatility approximation in the LDP approach. However, MDP-based estimators with adaptive changes of drift perform much better than their LDP counterparts with deterministic volatility approximation, and in fact show a performance very close to the LDP-based estimators in Heston. These adaptive MDP-based estimators therefore provide an efficient alternative in models where LDP is difficult to compute.

Setting and notations Throughout this paper we work on a filtered probability space \((\Omega , \mathcal {F}, \mathbb {P}, \mathbb {F})\) with a finite time horizon \(T>0\), where \(\Omega = \mathcal {C}([0,T]\rightarrow \mathbb {R}^2)\) is the space of all continuous functions, \(\mathcal {F}\) is the Borel-\(\sigma \)-algebra on \(\Omega \) and \(\mathbb {F}:=(\mathcal {F}_t)_{t\in [0,T]}\) is the natural filtration of a given two-dimensional standard Brownian motion \(\varvec{\textrm{W}}:=(W, W^{\perp })\). For a pair of (possibly deterministic) process (X, Y), with X predictable and Y a semi-martingale, we write the stochastic integral \(X\circ Y :=\int _{0}^{\cdot } X_s \textrm{d}Y_s\) and \(X\circ _{t}W :=(X\circ W)_t\) for any \(t\in [0,T]\). We denote any d-dimensional path by \(\varvec{\textrm{h}}:=(h_1,\dots ,h_d)\) for \(d\in \mathbb {N}\), and for such a path, \(\Vert \varvec{\textrm{h}}\Vert _T^2 :=\int _{0}^{T}\left( |h_1(t)|^2+\dots + |h_d(t)|^2\right) \textrm{d}t\). The Cameron-Martin space \(\mathbb {H}^0_T\) of Brownian motion is isomorphic to the space of absolutely continuous functions \(\mathcal{A}\mathcal{C}([0,T])\) starting at zero and with square integrable first derivatives. We define a similar space \(\smash {\mathbb {H}^{x}_T :=\{\varphi \in \mathcal {C}([0,T]\rightarrow \mathbb {R}^d): \varphi _{t}=x+\int _{0}^{t} \dot{\varphi }_{s} \textrm{d}s, \; \dot{\varphi } \in L^{2}\left( [0, T]; \mathbb {R}^{d}\right) \} }\) for processes starting at \(x\in \mathbb {R}^d\) and a subspace \(\mathbb {H}_T^{x,+}\subset \mathbb {H}_T^x\) where functions map to \((\mathbb {R}^{+})^{d}\) instead of \(\mathbb {R}^d\). Whenever a variable has an obvious time-dependence, we drop the explicit reference in the notation. We also write \(\mathcal {C}_T:=\mathcal {C}([0,T]\rightarrow \mathbb {R})\) to simplify statements. Finally \(\{X^\varepsilon \}\sim \textrm{LDP}(\textrm{I}^{X}, \mathcal {C}_T)\) means that the sequence \(\{X^\varepsilon \}\) satisfies a large deviations principle as \(\varepsilon \) tends to zero on \(\mathcal {C}_T\) with good rate function \(\textrm{I}^{X}\). For a given function f, we denote by \(\mathcal {D}(f)\) its effective domain. We finally write \(\mathbb {R}^+:=[0,\infty )\) and \(\mathcal{B}\mathcal{V}\) for the space of paths with bounded variation.

1.2 Overview of the importance sampling methodology

We consider a given risk-neutral probability measure \(\mathbb {P}\), so that the fundamental theorem of asset pricing implies that the price of an option with attainable payoff \(G\in L^2(\Omega ;\mathbb {R})\) is equal to \(\mathbb {E}^{\mathbb {P}}[G]\). While, strictly speaking, we do not need \(L^2(\Omega ;\mathbb {R})\) for pricing purposes, we require it to estimate the variance of payoff estimators. Monte–Carlo estimators rely on the (strong) law of large number, whereby for iid samples \(\{G_{i}\}_{1\le i \le n}\) from \(\mathbb {P}\circ G^{-1}\), the empirical mean \(\widehat{G}_n :=\frac{1}{n}\sum _{i=1}^{n}G_i\) converges to the true expectation \(\mathbb {P}\)-almost surely:

Importance sampling is a method to reduce the variance of the estimator \(\widehat{G}_n\), yielding a new law \(\mathbb {Q}\) such that \(\mathbb {E}^{\mathbb {Q}}[G] = \mathbb {E}^{\mathbb {P}}[G]\) and \(\mathbb {V}^{\mathbb {Q}}[G] < \mathbb {V}^{\mathbb {P}}[G]\) (and of course both the equality and inequality remain true with G replaced by \(\widehat{G}_n\)). Let for example \(Z :=\frac{\textrm{d}\mathbb {Q}}{\textrm{d}\mathbb {P}}\) denote the Radon-Nikodym derivative of the change of measure, so that \(\mathbb {E}^{\mathbb {P}}[G] = \mathbb {E}^{\mathbb {Q}}[G Z^{-1}]\). The variance of the Monte–Carlo estimator based on iid samples of \(\widehat{G}_n Z^{-1}\) under \(\mathbb {Q}\) is then

If Z is chosen such that \(\mathbb {E}^{\mathbb {P}}[\widehat{G}_n^2 Z^{-1}] < \mathbb {E}^{\mathbb {P}}[\widehat{G}_n^2]\), the variance is thus reduced. Finding such Z however is usually hard, and we shall instead consider the approximation

for small \(\varepsilon >0\), for two random variables \(G_{\varepsilon }\) and \(Z_{\varepsilon }\) whose choices will be discussed later. The computation of this expression is then further simplified by the use of the Varadhan’s lemma (Theorem B.0.1), which casts the problem into a deterministic optimisation over the appropriate Cameron-Martin space.

1.3 Choosing an approximated random variable \(G_{\varepsilon }\)

Consider an attainable payoff G(X), where X is a unique strong solution to the stochastic differential equation

where \(b,\sigma :\mathbb {R}\rightarrow \mathbb {R}\) are sufficiently well-behaved deterministic functions and W is a standard Brownian motion. The approximation of G is then defined as \(G_{\varepsilon }:=G(X^{\varepsilon })\), where the following are possible approximations of X:

Definition 1.3.1

Let X be a unique strong solution to (1.2). The process \(X^\varepsilon \) is called

-

(i)

Small-noise approximation if

$$\begin{aligned} \textrm{d}X_t^\varepsilon = b(X_t^\varepsilon ) \textrm{d}t + \sqrt{\varepsilon } \sigma (X_t^\varepsilon )\textrm{d}W_t, \quad X_0^\varepsilon = x_0. \end{aligned}$$(1.3) -

(ii)

Small-time approximation if

$$\begin{aligned} \textrm{d}X_t^\varepsilon = \varepsilon b(X_t^\varepsilon ) \textrm{d}t + \sqrt{\varepsilon } \sigma (X_t^\varepsilon ) \textrm{d}W_t, \quad X_0^\varepsilon = x_0 \end{aligned}$$(1.4) -

(iii)

Large-time approximation if

$$\begin{aligned} \textrm{d}X_t^\varepsilon = \frac{1}{\varepsilon } b(X_t^\varepsilon ) \textrm{d}t + \frac{1}{\sqrt{\varepsilon }} \sigma (X_t^\varepsilon ) \textrm{d}W_t, \quad X_0^\varepsilon = x_0. \end{aligned}$$(1.5)

The terminology here is straightforward since (1.4) follows from (1.2) via the mapping \(t \mapsto \varepsilon t\) and (1.5) follows from (1.2) via the mapping \(t\mapsto t/\varepsilon \). The small-noise (1.3) comes from the early works on random perturbations of deterministic systems by Varadhan (1967) and Freidlin and Wentzell (2012).

1.4 General approach

We consider an asset price \(S:=\{S_t\}_{t\in [0,T]}\) and the corresponding log-price process \(X:=\log (S) :=\{X_t\}_{t\in [0,T]}\), with dynamics

where \(\varvec{\textrm{W}}= (W,W^{\perp })\) is a standard two-dimensional Brownian motion and \(B:=\rho W + \overline{\rho }W^{\perp }\) with correlation coefficient \(\rho \in (-1,1)\) and \(\overline{\rho }:=\sqrt{1-\rho ^2}\). The drift and diffusion coefficients of the volatility process satisfy \(f:\mathbb {R}^+\rightarrow \mathbb {R}\) and \(g:\mathbb {R}^+\rightarrow \mathbb {R}^+\) and Assumption 1.4.1 if not stated otherwise (e.g. in the case of large-time approximation in Sect. 3.3 additional assumptions are required for ergodicity purposes).

Assumption 1.4.1

-

(i)

The function \(f:\mathbb {R}^+\rightarrow \mathbb {R}\) is globally Lipschitz continuous;

-

(ii)

The function \(g:\mathbb {R}^+\rightarrow \mathbb {R}^+\) is increasing, strictly positive outside origin. Furthermore, there exist \(K>0\) and \(p\ge \frac{1}{2}\) such that, for all \(x,y\in \mathbb {R}^+\),

$$\begin{aligned} \left| g(x)-g(y)\right| \le K |x-y|^p. \end{aligned}$$

Remark 1.4.1

Condition (ii) in fact implies p-polynomial growth condition for \(p\ge \frac{1}{2}\), i.e., \(|g(x)|\le C(1 + |x|^p)\) for all \(x\in \mathbb {R}^+\). Indeed, let \(y=0\) and \(x\in \mathbb {R}^+\), then \(|g(x)-g(0)|\le K|x|^p\) and by the triangle inequality \(|g(x)|\le |g(0)|+K|x|^p\).

Under this assumption, Yamada-Watanabe’s theorem (Yamada & Watanabe, 1971, Theorem 1) ensures the existence of a unique strong solution to (1.6). Consider now the continuous map \(G\in \mathcal {C}_T\), yielding the option price \(\mathbb {E}[G(X)]\). Finding the optimal Radon–Nikodym derivative encoding the change of measure from \(\mathbb {P}\) to \(\mathbb {Q}\) is hard in general and we instead consider the particular class of change of measure

which is well defined and satisfies \(\mathbb {E}^{\mathbb {P}}[Z_T]=1\) whenever \(\varvec{\textrm{h}}\in \mathbb {H}^0_T\). Now let \(F :=\log |G|\), so that \(\mathbb {E}^{\mathbb {P}}[Z_T^{-1} G(X)^2] = \mathbb {E}^{\mathbb {P}}[\textrm{e}^{2F(X) - \log (Z_T)}]\); the approximation (1.1) then yields for \(\varvec{\textrm{W}}^\varepsilon :=\sqrt{\varepsilon }\,\varvec{\textrm{W}}\) the estimate

to compute, for some proxies \(X^\varepsilon \).

Definition 1.4.1

A path \(\varvec{\textrm{h}}\in \mathbb {H}_T^0\) minimising \(L(\varvec{\textrm{h}})\) is called an asymptotically optimal change of drift.

From this point onward, several approaches exist in the literature. In Dupuis et al. (2012), Hartmann et al. (2018), Dupuis and Johnson (2017) fully adaptive schemes are considered, where \(\varvec{\textrm{h}}\) is function of \((t, X_t, V_t)\). These schemes effectively reduce variance, but are expensive to compute. For that reason, we look at the case where \(\varvec{\textrm{h}}\) is an absolutely continuous function with derivatives in \(L^2([0,T]; \mathbb {R})\). The main advantage is the fast computation in comparison to the fully adaptive schemes. Conserving this advantage, we also look at paths \(\varvec{\textrm{h}}\) of the form \(\int _0^.\dot{\varvec{\textrm{h}}}(t)\sqrt{V_t}\textrm{d}t\) (yielding a stochastic change of measure) for which computations are usually just as fast and variance reduction is higher. In the case where \(\varvec{\textrm{h}}\) is a deterministic function (the approach is similar in other settings), the main methodology we shall develop below then goes through the following steps:

-

(i)

We choose appropriate approximations \(X^{\varepsilon }\) as in Sect. 1.3;

-

(ii)

We prove an LDP (MDP) with good rate function \(\textrm{I}^{X}\) for \(\{X^\varepsilon \}_{\varepsilon >0}\);

-

(iii)

We show that Varadhan’s Lemma applies, so that the function \(L(\varvec{\textrm{h}})\) in (1.8) reads

$$\begin{aligned} L(\varvec{\textrm{h}}) = \sup _{X\in \mathcal {D}(\textrm{I}^{X})} \mathfrak {L}(X;\varvec{\textrm{h}}), \end{aligned}$$(1.9)where

$$\begin{aligned} \mathfrak {L}(X;\varvec{\textrm{h}}) :=2F(X) - \left( -\frac{1}{2}\Vert \dot{\varvec{\textrm{h}}}\Vert ^2 + \dot{\varvec{\textrm{h}}}\circ _T\varvec{\textrm{W}}^{\varepsilon \top }\right) - \textrm{I}^{X}(X). \end{aligned}$$(1.10) -

(iv)

We consider the dual problem of (1.9) in the sense of Definition 1.4.2. For more details see the remark below.

Definition 1.4.2

The primal problem is defined as

while the dual consists in

Remark 1.4.2

In many cases the primal optimisation problem (1.11) may be difficult to solve analytically, so we deal with the dual problem which turns out to be much simpler. With further assumptions, it may be possible to prove strong duality, however this is outside the scope of this paper. Note in particular that the functional \(\mathfrak {L}\) does not admit any obvious convexity property, making classical duality results unusable (see for example Rockafellar 1974, Sect. 4). Recent results about duality and optimality conditions in stochastic optimisation (Biagini et al., 2018; Pennanen, 2011) could provide some hints though.

Remark 1.4.3

Small-time approximations may induce important loss of information because the drift term in \(H^\varepsilon \) (the quadratic part in \(\dot{h}\)) can be negligible and can thus lead to a trivial dual problem. In the Black–Scholes setting (Sect. 4.1), a small-time approximation leads to the following problem: let \(F:\mathcal {C}_T\rightarrow \mathbb {R}^+\) be a smooth enough function so that Varadhan’s Lemma (Theorem B.0.1) holds, then the small-noise approximation problem reads

However, in the small-time case we actually have

In this small-time setting, the dual problem (1.12) then reads

Clearly the path \(\textrm{h}\) can be multiplied by any arbitrarily large positive constant to increase the inner supremum, and therefore the optimisation does not admit a maximiser. In these cases, we thus do not consider the small-time approximation.

Remark 1.4.4

Another drawback of the outlined method exists. In most cases, solving the dual problem in step iv) involves numerical optimisation, which in turn means that the Novikov conditions of the resulting optimal change of drift \(\varvec{\textrm{h}}\) cannot be rigorously verified. However, practically we see notable reduction in variance, implying that the obtained Radon-Nikodym derivative induces a change of measure and is indeed a martingale.

The paper’s structure is as follows. In Sect. 2 we look at stochastic volatility models satisfying Assumption 1.4.1 and derive explicit solutions for large deviations approximations for path-dependent payoffs of the form \(F\big (\smallint _0^T\vartheta _t\textrm{d}X_t\big )\) for general deterministic paths \(\vartheta \in \mathcal {C}_T\). This includes state-dependent payoffs of European type, i.e., functions of \(X_T\) (for the choice of \(\vartheta = 1\)) and of Asian type \(\frac{1}{T}\smallint _0^TX_t\textrm{d}t\) (for \(\vartheta _t = \frac{T-t}{T}\)). In contrast to Robertson (2010) we consider a more general approach using Garcia’s theorem (Garcia, 2007), which includes small-time approximations and studies stochastic changes of measure. Later in Sect. 3 we study moderate deviations, where we derive small-noise, small-time and large-time MDPs, whose advantages, compared to LDP, are simpler forms of rate functions. In Sect. 4, we provide examples of models used in mathematical finance, which satisfy our setup, with a particular emphasis on the Heston model. Finally, in Sect. 5 we highlight numerical results for the Heston model and compare variance reduction results for different approximation types. Some of the technical proofs are relegated to the Appendix A.

2 Importance sampling via large deviations

2.1 Small-noise LDP

We start with the small-noise approximation of (1.6):

2.1.1 Large deviations

In the spirit of Freidlin and Wentzell (2012), we provide an LDP in \({\mathcal {C}([0,T]\rightarrow \mathbb {R}^2)}\) for \(\{X^\varepsilon , V^\varepsilon \}\); usual assumptions involve non-degenerate and locally-Lipschitz diffusion though, which clearly fails for square-root type stochastic volatility models. Hence, to derive LDP for the (log-)price process, it is first necessary to derive LDP for the pair \(\{V^\varepsilon , Y^\varepsilon \}\) given by

assuming an LDP for V. To be more exact we follow (Robertson, 2010) and assume the following:

Assumption 2.1.1

\(\{V^{\varepsilon }\}_{\varepsilon >0}\sim \textrm{LDP}(\textrm{I}^{V}, \mathcal {C}_T)\) with a good rate function \(\textrm{I}^{V}\) with \({\mathcal {D}(\textrm{I}^{V})\subset \mathbb {H}_{T}^{v_0}}\).

Thus, to continue, we first need to show that Assumption 2.1.1 holds in our setting and check whether any further assumption on the coefficients are necessary. Many processes arising from volatility models, where the classical Freidlin-Wentzell does not apply, have been studied in the literature. For example Donati-Martin et al. (2004) show that \(V^{\varepsilon }\) satisfies an LDP in the case of the Heston model (4.4), then Chiarini and Fischer (2014) show existence of an LDP for class of models with uniformly continuous coefficients on compacts, Conforti et al. (2015) show an LDP for non-Lipschitz diffusion coefficient of CEV type. Most notably, Baldi and Caramellino (2011) cover the case of Assumption 1.4.1 with \(f(0)>0\) and sub-linear growth when \(f,g\rightarrow \infty \). We now state their main result, which requires a small additional assumption.

Assumption 2.1.2

Both \(f,g:\mathbb {R}\rightarrow \mathbb {R}\) have at most linear growth at infinity and \(f(0)>0\).

Theorem 2.1.1

(Theorem 1.2 in Baldi and Caramellino 2011) Let \(V^{\varepsilon }\) solve (2.1) on [0, T] with \(v_0>0\). Then under Assumptions 1.4.1 and 2.1.2 the process \(V^\varepsilon \) satisfies an LDP with good rate function

The process \(Y^\varepsilon =\int _0^\cdot \sqrt{V^\varepsilon _{t}} \textrm{d}W^{\varepsilon ,\perp }_{t}\) is simply the Itô integral of a square root diffusion against a Brownian motion, which falls exactly into the setting of Garcia’s Theorem (Garcia, 2007). Before stating it (Theorem 2.1.2) though, we require the notion of uniform exponential tightness.

Definition 2.1.1

(Definition 1.2 in Garcia 2007) Let \(\mathcal {U}\) denote the space of simple, real-valued, adapted processes H such that \(\sup _{t \ge 0}\left| H_{t}\right| \le 1\). A sequence of semi-martingales \(\left\{ M^{\varepsilon }\right\} _{\varepsilon > 0}\) is uniformly exponentially tight (UET) if, for every \(c, t>0\), there exists \(K_{c, t}>0\) such that

Theorem 2.1.2

(Theorem 1.2 in Garcia 2007) Let \(\left\{ Z^{\varepsilon }\right\} _{\varepsilon > 0}\) be a sequence of adapted, càdlàg stochastic processes, and \(\left\{ M^{\varepsilon }\right\} _{\varepsilon > 0}\) a sequence of uniformly exponentially tight semi-martingales. If the sequence \(\left\{ Z^{\varepsilon }, M^{\varepsilon }\right\} _{\varepsilon > 0} \) satisfies an LDP with rate function \(\widehat{\textrm{I}}\), then the sequence of triples \(\left\{ Z^{\varepsilon }, M^{\varepsilon }, Z^\varepsilon \circ M^\varepsilon \right\} _{\varepsilon > 0} \) satisfies an LDP with the good rate function

In particular, the sequence of stochastic integrals \(\left\{ Z^{\varepsilon } \circ M^{\varepsilon }\right\} _{\varepsilon > 0}\) satisfies an LDP with rate function

Theorem 2.1.3

Under Assumption 2.1.1, \(\{V, W^\perp , Y^{\varepsilon }\}\sim \textrm{LDP}(\textrm{I}^{V, W, Y}, \mathcal {C}([0,T]\rightarrow \mathbb {R}^3)\) with

Moreover, we also have \(\{V^{\varepsilon }, Y^{\varepsilon }\}\sim \textrm{LDP}\left( \textrm{I}^{V,Y},\mathcal {C}\left( [0,T]\rightarrow \mathbb {R}^2\right) \right) \), where

with \(\textrm{I}^{W}(w) = \frac{1}{2} \int _{0}^{T} \dot{w}_{t}^{2} \textrm{d}t\) if \(w \in \mathbb {H}_{T}^{0}\) and infinite otherwise.

Proof

Since the scaled Brownian motion \(W^{\varepsilon ,\perp }\) is a uniformly exponentially tight martingale (Garcia, 2007, Example 2.1), we only need the LDP for the pair \(\{\sqrt{V^\varepsilon }, W^{\varepsilon , \perp }\}\) to apply Theorem 2.1.2 in order to obtain an LDP for the stochastic integral \(Y^\varepsilon \). The LDP for \(\sqrt{V^\varepsilon }\) is immediate from the Contraction principle (Dembo & Zeitouni, 2010, Theorem 4.2.1), where the corresponding rate function reads \(\textrm{I}^{\sqrt{V}}(\psi ) = \textrm{I}^{V}\left( \psi ^2\right) \) and the joint \(\{\sqrt{V^{\varepsilon }}, W^{\varepsilon ,\perp }\}\sim \textrm{LDP}\left( \textrm{I}^{\sqrt{V}, W}, \mathcal {C}([0,T]\rightarrow \mathbb {R}^2)\right) \) is thus a classical result, where the rate function is simply a sum of corresponding rate functions: \(\textrm{I}^{\sqrt{V}, W}(\psi , w)=\textrm{I}^{V}(\psi ^2) + \textrm{I}^{W}(w)\). Here, \(\textrm{I}^{W}\) is nothing else than the usual energy function for the Brownian motion from Schilder’s theorem (Schilder, 1966). This allows us to apply Theorem 2.1.2 to obtain \(\{\sqrt{V}, W^\perp , Y^{\varepsilon }\}\sim \textrm{LDP}\left( \textrm{I}^{\sqrt{V}, W, Y}, \mathcal {C}([0,T]\rightarrow \mathbb {R}^3)\right) \) with \(\textrm{I}^{\sqrt{V}, W, Y}(\psi , w, \phi ) =\textrm{I}^{\sqrt{V}, W}(\psi , w)\) if \(\phi =\psi \circ w\) for \(w\in \mathcal{B}\mathcal{V}\) and infinite otherwise. Let us now consider a continuous map \((x,y,z) \mapsto (x^2, y, z)\) through which we recover the LDP for \(\left\{ V^\varepsilon , W^{\varepsilon ,\perp }, Y^\varepsilon \right\} \), where the rate function \(\textrm{I}^{V,W,Y}(\psi , w, \phi ) =\textrm{I}^{\sqrt{V},W,Y}(\sqrt{\psi }, w, \phi )\) is granted by the Contraction principle. Finally, by projection, we have the LDP for the pair \(\{V^\varepsilon ,Y^\varepsilon \}\) with the rate function

where in the second line, the fact that absolute continuity implies bounded variation was used after application of Theorem 2.1.2. \(\square \)

Remark 2.1.1

The form of the rate function is the same as in Robertson (2010, Lemma 3.1), but now holds for a general volatility process adhering to aforementioned Assumption 2.1.1.

We now continue similarly to Robertson (2010, Sect. 3): the LDP for \(X^\varepsilon \) and its corresponding price process \(S^\varepsilon \) are obtained from Theorem 2.1.3 using the Contraction principle (Dembo & Zeitouni, 2010, Theorem 4.2.1), since both maps \(X^\varepsilon =X^\varepsilon (V^\varepsilon ,Y^\varepsilon )\) and \(S^\varepsilon =S^\varepsilon (V^\varepsilon ,Y^\varepsilon )\) are continuous.

2.1.2 LDP-based importance sampling

We consider two changes of measure, with a deterministic and a stochastic change of drift, and start with the former.

Deterministic change of drift The drift is of the form

with \(\varvec{\textrm{h}}\in \mathbb {H}_T^0\). The limit (1.8) then reads

We now follow the same approach as in the case of deterministic volatility in Sect. 4.1. Let \(F:\mathcal {C}_T\rightarrow \mathbb {R}^+\) be continuous and bounded from above and \(\dot{\varvec{\textrm{h}}}\) be of finite variation, then the tail condition of Varadhan’s Lemma in Sect. B.0.1 is immediate from Lemma 4.1.1 and the functional L in (1.9) reads

where \(\varphi (\varvec{\textrm{x}})\) is the unique solution on [0, T] to

with initial conditions \(\varphi _0(\varvec{\textrm{x}}) = 0\) and \(\psi _0 = v_0\), and \(\varvec{\mathrm {\varrho }}:=(\rho , \overline{\rho })\). To solve the dual problem (1.12), the inner optimisation reads

and can then be solved as

which is an asymptotically optimal change of drift in the sense of Definition 1.4.1.

Stochastic change of drift We now consider the stochastic change of measure

with \(\dot{\varvec{\textrm{h}}}\) a deterministic function of finite variation such that \(\mathbb {E}[\frac{\textrm{d}\mathbb {Q}}{\textrm{d}\mathbb {P}}]=1\); this holds for example under the Novikov condition \(\mathbb {E}\left[ \exp (\frac{1}{2}\int _0^T \Vert \dot{\varvec{\textrm{h}}}(t)\Vert ^2 V_t \textrm{d}t)\right] <\infty \). We again consider \(G:\mathcal {C}_T\rightarrow \mathbb {R}^+\) and let \(F:=\log |G|\). The minimisation problem (1.8) now reads

Since F is continuous, the term inside the exponential is a continuous function of \(\{V^{\varepsilon },\varvec{\textrm{Y}}^{\varepsilon }\}\). Varadhan’s Lemma then yields \(L(\varvec{\textrm{h}}) = \sup _{\varvec{\textrm{x}}\in \mathbb {H}_T^0}\mathfrak {L}(\varvec{\textrm{x}},\varvec{\textrm{h}})\), with

where \(\varvec{\textrm{x}}= (x_1 \; x_2)^\top \) and \(\{\varphi (\varvec{\textrm{x}}),\psi (\varvec{\textrm{x}})\}\) are unique solutions on [0, T] to

with initial conditions \((\varphi _0,\psi _0) = (0,v_0)\). For the dual problem, we search for a change of measure with \(\varvec{\textrm{h}}\) such that:

The maximisation problem is very similar to the one with deterministic change of drift (2.2). However, as we will see in Sect. 5, the stochastic version usually gives better results.

2.1.3 Application to options with path-dependent payoff

Consider a payoff \(G(\vartheta \circ _{T}X)\) with \({G:\mathbb {R}^{+} \rightarrow \mathbb {R}^{+}}\) differentiable, \(\vartheta \) a positive function of class \(\mathcal {C}^1\) and \(F :=\log |G|\). We only look at the deterministic case, namely the optimisation problem (2.2) since the solutions to (2.3) can be easily deduced from it. It reads

The following lemma helps transforming the above optimisation problem.

Lemma 2.1.1

Let \(\varvec{\textrm{x}}\in \mathbb {H}_T^0 \times \mathbb {H}_T^0\). The function \(\mathfrak {K}:\mathbb {H}_T^0 \times \mathbb {H}_T^0 \rightarrow \mathbb {H}_T^0\times \mathbb {H}_T^{v_0,+}\) such that \(\mathfrak {K}(\varvec{\textrm{x}})=(\varphi ,\psi )\) is solution to

with initial conditions \(\varphi _0 = 0\) and \(\psi _0 = v_0\), is well defined and is a bijection.

Proof

Clearly \(\dot{\psi }\in L^{2}\left( [0, T]; \mathbb {R}\right) \) and the unique solution \(\psi \) to the ODE \(\dot{\psi _t}=f(\psi _t)+g(\psi _t)\dot{w}_t\) with \(\psi _0 = v_0 > 0\) is strictly positive under Assumption 1.4.1 by Baldi and Caramellino (2011, Proposition 3.11). Therefore \(\psi \in \mathbb {H}_T^{v_0, +}\) and \(\mathfrak {K}(\varvec{\textrm{x}})=(\varphi , \psi )\) is well defined. Finally, \(\mathfrak {K}\) is clearly a bijection and its inverse can be computed explicitly as

\(\square \)

Using Lemma 2.1.1, we can substantially simplify the optimisation problem by writing it in terms of \(\mathfrak {K}(\varvec{\textrm{x}})\). To be more precise, we will make use of the following transformation:

which stems from the two components of \(\mathfrak {K}(\varvec{\textrm{x}})\). The optimisation problem becomes

This allows us to apply Euler-Lagrange to the problem seen as an optimisation over \(\left\{ \int _0^\cdot \vartheta _t \dot{\varphi }_t \textrm{d}t,\,\psi \right\} \):

where \(\zeta :=f'(\psi )g(\psi ) + (\dot{\psi }-f(\psi ))g'(\psi )\). This system of equations is still hard to solve for general f and g, but can be solved for the Heston model, as done in Sect. 4.4 below.

2.2 Small-time LDP

Applying the mapping \(t\mapsto \varepsilon t\) to (1.6) yields

Robertson (2010, Proposition 3.2) showed that \(\varepsilon \int _0^\cdot V_t^\varepsilon \textrm{d}t\) is in fact exponentially equivalent to zero (Definition B.0.1), so that the drift of \(V^\varepsilon \) can be ignored at the large deviations level. In the case of a general drift f, the following lemma provides a similar statement:

Lemma 2.2.1

The process \(\varepsilon \int _0^\cdot f(V_t^\varepsilon ) \textrm{d}t\) is exponentially equivalent to zero.

Proof

Markov’s inequality implies that, for any \(\delta >0\),

and we are therefore left to show that \(\limsup _{\varepsilon \downarrow 0}\varepsilon \log \mathbb {E}\left[ \exp \left\{ \frac{1}{\varepsilon }\int _0^T f(V_s^\varepsilon )\textrm{d}s \right\} \right] \) is finite. To that end we apply the integral Jensen inequality

and the linear growth condition from the global Lipschitz condition in Assumption 1.4.1:

Next, by the properties of the logarithm and the supremum

We can now apply Gronwall’s Lemma to the last term, which yields for some \(C>0\),

which is finite. \(\square \)

Following this lemma, the results from the previous section could simply be adapted so that \(\{V^\varepsilon ,W^{\varepsilon }\}\) satisfy the same LDP by simply setting \(f=0\) (or equivalently \(\kappa = 0\) in the case of Heston (4.4)). However, this violates the condition \(f(0)>0\) in Baldi and Caramellino (2011). Fortunately, Conforti et al. (2015) removed the need for strict positivity on the drift at the initial time by imposing more stringent conditions on the diffusion.

Assumption 2.2.1

-

(i)

There exists \(\xi >0\) such that \(g(y)=\xi |y|^\gamma \) for \(\gamma \in [1 / 2,1)\) for all \(y\ge 0\);

-

(ii)

The equality \(f(y)=\tau (y)+ K y\) holds for all \(y\ge 0\), where \(\tau \) is a Lipschitz continuous and bounded function, and \(\tau (y) \ge 0\) in a neighbourhood of the origin.

Theorem 2.2.1

(Theorem 1.1 in Conforti et al. 2015) Under Assumption 2.2.1, the solution \(V^\varepsilon \) to (2.1) satisfies \(\left\{ V^{\varepsilon }\right\} \sim \textrm{LDP}(\textrm{I}^{V}, \mathcal {C}([0, T]\rightarrow \mathbb {R}^{+}))\) with

Therefore by setting \(K=0\) we can use the methodology form the previous section since the LDPs are the same. Similarly as before, we only consider the deterministic change of drift, since the stochastic case is very similar.

We therefore search for \(\varvec{\textrm{h}}\) such that

where \(\varphi _t(\varvec{\textrm{x}})\) is the unique solution on [0, T] to

2.3 Large-time LDP

Variance reduction for affine stochastic volatility processes via importance sampling through the large-time approximation is extensively covered in Grbac et al. (2021), so we do not repeat the study and refer the reader to the aforementioned work.

3 Importance sampling via moderate deviations

In the previous sections, large deviations provided us with a way of computing the asymptotic change of measure for importance sampling, via an \(\varepsilon \)-approximation of the log-price \(X^{\varepsilon }\). While the large deviation rate function is a convenient quadratic function in the deterministic volatility setting, it is in general rather cumbersome to compute numerically, unfortunately offsetting any importance sampling gain. Moderate deviations act on a cruder scale, but provide quadratic rate functions, easier to compute. Suppose that the sequence \(\{X^{\varepsilon }\}_{\varepsilon >0}\) converges in probability to \(\overline{X}\). Moderate deviations for \(\{X^{\varepsilon }\}_{\varepsilon >0}\) are defined as large deviations for the rescaled sequence

where \(h(\varepsilon )\) tends to infinity and \(\sqrt{\varepsilon }h(\varepsilon )\) to zero as \(\varepsilon \) tends to zero. A typical choice is \(h(\varepsilon ) = \varepsilon ^{-\tilde{\alpha }}\) for \(\tilde{\alpha } \in (0,\frac{1}{2})\), equivalently \(\frac{1}{\sqrt{\varepsilon }h(\varepsilon )} = \varepsilon ^{-\alpha }\) for \(\alpha \in (0,\frac{1}{2})\). We shall stick to this choice of h here in order to highlight rates of convergence. We now introduce the approximation

This process is centered around \(\overline{X}\) and is a simple candidate. Furthermore, in stochastic volatility models, and particularly in large-time setting, the moderate deviations rate function is simply the second-order Taylor expansion of the large deviations rate function around its minimum \(\overline{X}\) (Jacquier & Spiliopoulos, 2019, Remark 3.5). We again consider the dynamics (1.6) with Assumption 1.4.1 for the coefficients. We further assume the following conditions:

Assumption 3.0.1

-

(i)

For \(f\in \mathcal {C}^2(\mathbb {R}^{+}\rightarrow \mathbb {R})\), the equation \(\bar{\psi }_t = v_0 + \int _0^t f\left( \bar{\psi }_s\right) \textrm{d}s\) admits a unique strictly positive solution \(\bar{\psi }\in \mathcal {C}^2([0,T]\rightarrow \mathbb {R})\);

-

(ii)

The small-noise approximation (2.1) of V satisfies an LDP with the good rate function \(\textrm{I}^{V}\) and speed \(\varepsilon \) such that \(\textrm{I}^{V}\) admits a unique minimum and is null there.

Remark 3.0.1

When a large deviations principle holds, then the infimum of the rate function has to be zero, indeed, since \(\mathbb {P}(X^\varepsilon \in B)\sim \exp \{-\frac{1}{\varepsilon }\inf _{x\in B} I(x)\}\), then taking B to be the whole space, the probability on the left-hand side has to be equal to one, and the infimum is thus null. It may not be attained though. However it is in the case of good rate functions (see for example Dembo-Zeitouni’s book, bottom of Page 5). As stated at the end of Sect. 1.1, we are only concerned here with large deviations with good rate functions. The only gap that could appear is the case of several points where zero is attained, but this does not seem restrictive in our setup.

As it will be shown in Lemma 3.1.3, the sequence \(\{V^\varepsilon \}_{\varepsilon >0}\) converges in probability to the function \(\bar{\psi }\) as a consequence of Assumption 3.0.1. This provides a natural choice for the centered process \({\overline{X}_t = -\frac{1}{2}\int _0^t\bar{\psi }_s\textrm{d}s}\), so that the approximation (3.1) reads, for any \(t\in [0,T]\),

3.1 Small-noise moderate deviations

For the small-noise approximation (2.1), the process \(\widetilde{X}^\varepsilon \) in (3.2) satisfies the SDE

starting at \(\widetilde{X}^{\varepsilon }_0 = 0\), where the small-noise approximation (2.1) of the variance reads

This transformation creates a discrepancy between the decreasing speeds of \(\widetilde{X}^{\varepsilon }\) and that of \(V^{\varepsilon }\) (speed of convergence to zero of the diffusion part of the volatility process i.e. \(\varepsilon ^{\frac{1}{2} - \alpha }\) versus \(\varepsilon ^{\frac{1}{2}}\)). Since \(\widetilde{X}^{\varepsilon }\) is our reference, we adjust the speed of the LDP via \(\varepsilon ^{\frac{1 - 2\alpha }{2}}\mapsto \sqrt{\varepsilon }\). With

we obtain the system

where the notation \(V^{\varepsilon ,\beta }\) was used to emphasise the change of speed to \(\varepsilon ^{\frac{1}{2}+\beta }\). In the following, we provide an LDP for \(\{\eta ^\varepsilon \}\) (equivalently an MDP for \(\{V^{\varepsilon ,\beta }\}\)). We relegate more technical proofs to Appendix A.

3.1.1 Theoretical results

The main moderate deviations result of this section is Theorem 3.1.1, but we first start with the following three technical lemmata, useful for the theorem but also of independent interest, proved in Appendices A.1, A.2 and A.3:

Lemma 3.1.1

Let \(\{Z^{\varepsilon }\}_{\varepsilon >0}\) be a family of random variables mapping to any metrisable space \(\mathcal {X}\) and satisfying an LDP with good rate function \(\tilde{\textrm{I}}\). If there exists a unique \(x_0\) such that \(\tilde{\textrm{I}}(x_0) = 0\), then for all \(\beta > 1\), \(Z^{\varepsilon ^\beta }\) satisfies an LDP with the good rate function

If for \(\beta > 1\), \(Z^{\varepsilon }\) satisfies an LDP with speed \(\varepsilon ^{\beta }\) and the good rate function \(\textrm{I}\), then \(Z^{\varepsilon }\) is exponentially equivalent to \(x_0\) with speed \(\varepsilon \).

As a consequence of this lemma, the sequence \(\{Z^{\varepsilon }\}\) converges in probability to \(x_0\).

Lemma 3.1.2

Let \(\{Z^{\varepsilon }\}_{\varepsilon >0}\) be a sequence of random variables mapping to any metrisable space \(\mathcal {X}\) and satisfying an LDP with good rate function \(\textrm{I}\) such that \(\textrm{I}(x)=0\) if and only if \(x = x_0\) for some \(x_0 \in \mathcal {X}\). If \(Z^{\varepsilon }\) is uniformly integrable, then \(\lim _{\varepsilon \downarrow 0}\mathbb {E}[Z^{\varepsilon }] = x_0\).

Lemma 3.1.3

Let \(V^{\varepsilon ,\beta }\) be given by (3.3) such that \(f, g\in \mathcal {C}(\mathbb {R}^+\rightarrow \mathbb {R})\) satisfy Assumption 1.4.1. Then \(\{V^{\varepsilon ,\beta }\}\) converges almost surely to the unique solution of \(\dot{\bar{\psi }}_t =f(\bar{\psi }_t)\) on [0, T] with boundary condition \(\bar{\psi }(0) = v_0\).

Theorem 3.1.1

Let \(\beta , v_0>0\) and let \(f, g \in \mathcal {C}(\mathbb {R}^+\rightarrow \mathbb {R})\) be such that Assumption 1.4.1 is satisfied and let \(W^{\varepsilon } :=\sqrt{\varepsilon }W\) and \(V^{\varepsilon ,\beta }, \eta ^{\varepsilon }\) as defined in (3.3), then under Assumption 3.0.1, the triple \(\{V^{\varepsilon ,\beta }, \eta ^\varepsilon ,W^\varepsilon \}\) satisfies an LDP with speed \(\varepsilon \) and the good rate function

3.1.2 MDP-based importance sampling

Consider the system (3.3), and let \(\varvec{\textrm{W}}^\varepsilon :=\sqrt{\varepsilon }\varvec{\textrm{W}}\) and \(\varvec{\textrm{Y}}^\varepsilon :=\sqrt{V^{\varepsilon ,\beta }}\circ \varvec{\textrm{W}}^\varepsilon \). First we apply Theorem 3.1.1, which in turn allows us the use of Theorem 2.1.3. Next, the Contraction principle and Dembo and Zeitouni (2010, Exercise 4.2.7) imply that the triple \(\{\eta ^\varepsilon ,\varvec{\textrm{W}}^\varepsilon , \varvec{\textrm{Y}}^\varepsilon \}\) satisfies an LDP with speed \(\varepsilon \) and good rate function

Remember that the exponential tightness condition necessary for Dembo and Zeitouni (2010, Exercise 4.2.7) is automatically granted, given that the space we work on is Polish (Lynch & Sethuraman, 1987, Lemma 2.6).

Consider a payoff \(G\in \mathcal {C}_T\rightarrow \mathbb {R}^{+}\) and let \(F:=\log |G|\). Recall that we are interested in finding a measure change minimising \(\mathbb {E}\left[ \textrm{e}^{2F}\frac{\textrm{d}\mathbb {P}}{\textrm{d}\mathbb {Q}}\right] \).

Deterministic change of drift We first consider a deterministic change of drift, via

for \(\varvec{\textrm{h}}\in \mathbb {H}_T^0\) with \(\dot{\varvec{\textrm{h}}}\) of finite variation. In the spirit of moderate deviations, we use the approximation

and thus aim at minimising

Under the conditions of Varadhan’s lemma B.0.2 (for example if F is bounded), then \(L(\varvec{\textrm{h}}) =\sup _{\varvec{\textrm{x}}\in \mathbb {H}_T^0} \mathfrak {L}(\varvec{\textrm{x}},\varvec{\textrm{h}})\) where

with \(\varvec{\mathrm {\varrho }}:=\left( \rho \;\; \overline{\rho }\right) ^\top \),

Minimising \(L(\varvec{\textrm{h}})\) is far from trivial, and hence, as before, we define the optimal change of drift \(\varvec{\textrm{h}}^{*}\) as a solution to the dual problem \(\inf \limits _{\varvec{\textrm{h}}\in \mathbb {H}_T^0}\mathfrak {L}(\varvec{\textrm{x}},\varvec{\textrm{h}})\), so that, with \(\eta \) as in (3.4),

Stochastic change of drift We now consider instead a stochastic change of drift in the form \( \left. \frac{\textrm{d}\mathbb {Q}}{\textrm{d}\mathbb {P}}\right| _{\mathcal {F}_T} =\exp \left\{ -\frac{1}{2}\Vert \dot{\varvec{\textrm{h}}}\sqrt{V}\Vert _{T}^2 + (\dot{\varvec{\textrm{h}}}\sqrt{V})\circ _{T}\varvec{\textrm{W}}^\top \right\} , \) for \(\varvec{\textrm{h}}\in \mathbb {H}_T^0\), \(\dot{\varvec{\textrm{h}}}\) of finite variation and with \(\mathbb {E}\left[ \frac{\textrm{d}\mathbb {Q}}{\textrm{d}\mathbb {P}}\right] =1\). Again, we use the approximation

and aim at minimising

If Varadhan’s lemma conditions hold, then again \(L(\varvec{\textrm{h}}) = \sup _{\varvec{\textrm{x}}\in \mathbb {H}_T^0}\mathfrak {L}(\varvec{\textrm{x}},\varvec{\textrm{h}})\) where

with \(\eta \) defined as in (3.4). As minimising \(L(\varvec{\textrm{h}})\) is a priori complicated, we define our optimal change of drift \(\varvec{\textrm{h}}^{*}\) as a solution to the dual problem

3.1.3 Application to options with path-dependent payoff

Consider an option with payoff \(G(\vartheta \circ _{T}X)\), where \(G:\mathbb {R}\rightarrow \mathbb {R}^{+}\) is a differentiable function and \(\vartheta \) a positive (almost everywhere) function of class \(\mathcal {C}^1([0,T]\rightarrow \mathbb {R}^{+})\). The payoff is then a continuous function of the path. Now let \(F = \log |G|\) and \(\overline{F}(x) = F(x-\frac{1}{2}\int _0^T\bar{\psi }_s\vartheta _s \textrm{d}s)\) and suppose that Assumptions 1.4.1 and 3.0.1 hold.

Deterministic change of drift We proceed in a similar fashion as in Sect. 2 and consider the transformations

so that the optimisation problem (3.5) for a path-dependent payoff can then be written as

with

Then, by applying Euler-Lagrange to the problem seen as an optimisation over \(\left\{ \int _0^\cdot \vartheta _t\dot{\varphi }_t\textrm{d}t,\eta \right\} \), we obtain the system of ODEs

with boundary conditions \(\lambda = - 2\overline{F}_T'\) and \(U_T = -\rho \vartheta _T\sqrt{\bar{\psi }_T}\overline{F}_T'\), where \(\overline{F}_T':=\overline{F}'\left( \int _0^T\vartheta _t\dot{\varphi }_t\textrm{d}t\right) \). Introducing \(A :=\frac{2U}{g(\bar{\psi })} - \lambda \rho \vartheta \frac{\sqrt{\bar{\psi }}}{g(\bar{\psi })}\) simplifies the problem to the linear ODE: \(\dot{A}- \dot{f}A = \frac{1}{2}\lambda \vartheta \) with \(A_T = 0\), with solution

where \(B:=\int _0^\cdot f(\bar{\psi }_t)\textrm{d}t\) and \(\gamma :=\int _0^\cdot \textrm{e}^{B_t}\vartheta _t \textrm{d}t\). We can now solve for U and Z:

with \(u = \frac{1}{4}g(\bar{\psi })\textrm{e}^{-B}(\gamma -\gamma _T)\). Our optimisation problem was posed over \(\left\{ \int _0^\cdot \vartheta _t \dot{\varphi }_t \textrm{d}t, \eta \right\} \) so we require the solution in terms of this couple, and therefore

-

(i)

\(\displaystyle \int _0^t \vartheta _s \dot{\varphi }_s \textrm{d}s = \lambda \int _0^t \vartheta _s \left\{ \dot{\varphi }^1_s - \frac{1}{2}\dot{\varphi }^2_s \right\} \textrm{d}s + \eta _0 T \textrm{e}^{B_T}\), where

$$\begin{aligned} \dot{\varphi }^1 = \left( u+ \frac{1}{2}\vartheta \sqrt{\bar{\psi }}\right) \sqrt{\bar{\psi }} \qquad \text {and}\qquad \dot{\varphi }^2 = \textrm{e}^B \int _0^\cdot \textrm{e}^{-B_s}\left( u_s + \frac{1}{2}\vartheta _s\sqrt{\bar{\psi }_s} \right) g(\bar{\psi }_s)\textrm{d}s; \end{aligned}$$ -

(ii)

\(\displaystyle \eta _t = \lambda \textrm{e}^{B_t} \int _0^t \textrm{e}^{-B_s}\left( u_s + \frac{1}{2}\rho \vartheta _s\sqrt{\bar{\psi }_s} \right) g(\bar{\psi }_s)\textrm{d}s + \eta _0 \textrm{e}^{B_t}\).

Here, \(\lambda ,\eta _0\in \mathbb {R}\) are parameters over which we perform our optimisation. Thus the original optimisation objective (3.6) becomes

Stochastic change of drift

The stochastic change of drift objective is equivalent to the one with deterministic drift, the difference being how \(\varvec{\textrm{h}}\) is calculated.

3.2 Small-time moderate deviations

We now mimic the results of the previous section, but in the context of small-time moderate deviations. We again consider the log-price dynamics (1.6) under Assumption 1.4.1 and Assumption 3.0.1. Let \(\alpha \in (0,\frac{1}{2})\) and define \({\widetilde{X}^\varepsilon :=\varepsilon ^{-\alpha } X^{\varepsilon }}\), so that

As a consequence of Theorem 3.2.1 the results remain the same as in the case of small-noise moderate deviations of the previous section with \(f = 0\) and \(\bar{\psi }= v_0\). This being the case, we do not repeat them here. Let us nevertheless note that in the case of change of measure with deterministic drift, the problem is similar to the one, where V is constant equal to \(v_0\).

3.2.1 Theoretical results

Analogously to Lemma 3.1.3 and Theorem 3.1.1, we have

Lemma 3.2.1

Let \(V^{\varepsilon }\) be given by (3.3) such that \(f,g\in \mathcal {C}(\mathbb {R}^+\rightarrow \mathbb {R})\) satisfy Assumption 1.4.1. Then \(\{V^{\varepsilon }\}\) converges in probability to \(v_0\).

Theorem 3.2.1

Let \(v_0>0\) and let \(f, g \in \mathcal {C}(\mathbb {R}^+\rightarrow \mathbb {R})\) be such that Assumption 1.4.1 is satisfied and let \(W^{\varepsilon } :=\sqrt{\varepsilon }W\) and \(V^{\varepsilon ,\beta }, \eta ^{\varepsilon }\) defined as in (3.3), then, under Assumption 3.0.1, the triple \(\{V^{\varepsilon ,\beta }, \eta ^\varepsilon ,W^\varepsilon \}\) satisfies an LDP with speed \(\varepsilon \) and the good rate function

3.3 Large-time moderate deviations

We now consider a rescaling of (1.6) defined as \(V_t^\varepsilon = V_{\frac{t}{\varepsilon }}\) and \(X_t^\varepsilon = \varepsilon X_{\frac{t}{\varepsilon }}\), so that under Assumption 1.4.1 and Assumption 3.3.1,

which leads to Regime 2 in the slow-fast setting of Morse and Spiliopoulos (2017, Theorem 2.1), by choosing the time-scale separation parameter equal to \(\varepsilon \). The following assumption is needed in order to conform to the conditions in Morse and Spiliopoulos (2017).

Assumption 3.3.1

-

(i)

f is locally bounded and of the form \(f(y) = -\kappa y +\tau (y)\) with \(\tau \) globally Lipschitz with Lipschitz constant \(L_{\tau }<\kappa \). In addition, the tail condition \(\lim _{|y| \uparrow \infty }\frac{\tau (y)y}{|y|^2} = \kappa \) holds.

-

(ii)

The function g is either uniformly continuous and bounded from above and away from zero or takes the form \(g(y) = \xi |y|^{q_g}\) for \(q_g \in [\frac{1}{2},1)\) with \(\xi \ne 0\).

Remark 3.3.1

Together with Assumption 1.4.1, Condition (ii), necessary to ensure ergodicity of the volatility process, collapses g to the form \(g(y)=\xi |y|^\frac{1}{2}\,\) (for details we refer to Jacquier and Spiliopoulos 2019, Morse and Spiliopoulos 2017).

3.3.1 Theoretical results

In order to apply the methodology from the previous sections to derive the desired changes of measure, we need a large-time MDP. More precisely, we need an MDP for \(\{X^{\varepsilon },\sqrt{\varepsilon }W,\sqrt{\varepsilon }W^{\perp }\}\) in the case of deterministic drift. We do not consider the stochastic drift change here, since a rigorous treatment is out of scope of this paper. Similar problem has been studied in Morse and Spiliopoulos (2017, Theorem 2.1) and Jacquier and Spiliopoulos (2019, Theorem 3.3), where authors propose fewer conditions, although in a simpler setting (which happens to include the Heston model as well). We now introduce a theorem, which is a direct application of Jacquier and Spiliopoulos (2019, Theorem 3.3) and Morse and Spiliopoulos (2017, Theorem 2.1), that provides the desired MDP.

Theorem 3.3.1

Let \(\mathcal {L}_{V}\) denote the infinitesimal generator of V before rescaling, i.e.

Under Assumptions 1.4.1 and 3.3.1 the following hold:

-

(i)

There exists a unique invariant measure \(\mu \) corresponding to \(\mathcal {L}_V\);

-

(ii)

The process \(X^{\varepsilon }\) converges in probability to \(-\frac{1}{2}\overline{v}t\), where \(\overline{v} = \int _0^\infty y \, \mu (dy)\);

-

(iii)

There exists a unique solution \(\varsigma \) with at most polynomial growth to the Poisson equation

$$\begin{aligned} \mathcal {L}_V(\varsigma )(y) = \frac{y - \bar{v}}{2}, \qquad \text {with} \qquad \int _0^\infty \varsigma (y)\mu (dy)=0. \end{aligned}$$

Furthermore, denote \(\varvec{\textrm{Q}}:=\int _0^\infty \varvec{q}(y)\varvec{q}^\top (y)\mu (\textrm{d}y)\), where

Then the triple \(\{X^{\varepsilon },\sqrt{\varepsilon }W,\sqrt{\varepsilon }W^{\perp }\}\) follows an MDP with good rate function \(I_{\varvec{\textrm{Q}}}\), where

if \(\phi \in \mathcal{A}\mathcal{C}\) and infinite otherwise.

Lemma 3.3.1

The matrix \(\varvec{\textrm{Q}}\) is invertible.

Proof

Let Y a random variable with distribution \(\mu \), the invariant measure. Then

by Cauchy-Schwarz, where an equality would imply that V is constant \(\mu \)-almost surely. This implies \(f(\overline{v})=g(v)=0\), which is not possible due to Assumption 1.4.1 on f and g. \(\square \)

3.3.2 Application to options with path-dependent payoff

Let us again consider an option with a continuous payoff function G and let \(F=\log |G|\). Following the approach of the previous sections and with similar notations, we search solutions to the dual problems for deterministic change of drift. Let \(\varvec{\textrm{x}}:=\begin{pmatrix}x_1&x_2&x_3\end{pmatrix}^\top \) and \(\underline{\varvec{\textrm{x}}}:=\begin{pmatrix}x_2&x_3\end{pmatrix}^\top \). By considering a deterministic change of drift similarly as before our problem becomes

Applying the modified Varadhan lemma gives us the target functional

Using the diagonal operator \({\text {diag}}(x_1,\dots ,x_n)=\sum _{i=1}^n x_i e_i e_i^\top \), where \(n\in \mathbb {N}\) and \(\{e_i\}_{i=1}^n\) canonical basis of \(\mathbb {R}^n\), we now write the problem in terms of \(\widetilde{\varvec{\textrm{x}}}:=\dot{\varvec{\textrm{x}}}\,\),

where \(\varvec{\textrm{A}}:= \varvec{\textrm{Q}}^{-1} + {\text {diag}}(0,1,1)\), which is equivalent to

This substantially simplifies the main optimisation problem, which can now be solved explicitly, since \( \varvec{\textrm{A}}= \begin{pmatrix} a_{11} &{} \varvec{\textrm{a}}_{21}^\top \\ \varvec{\textrm{a}}_{21} &{}\varvec{\textrm{A}}_{22}\\ \end{pmatrix}\), with \(a_{11} \in \mathbb {R}\), \(\varvec{\textrm{a}}_{21}\in \mathbb {R}^{2}\), \(\varvec{\textrm{A}}_{22}\in \mathbb {R}^{2\times 2}\), so that the infimum in (3.8) yieldsFootnote 1\({\underline{\varvec{\textrm{x}}}^{*} = - \varvec{\textrm{A}}_{22}^{1} \varvec{\textrm{a}}_{21} x_1 =:\varvec{\textrm{B}}x_1}\). The quadratic form in fact reduces to \({\varvec{\textrm{x}}^\top \varvec{\textrm{A}}\varvec{\textrm{x}}= \left( a_{11} - \varvec{\textrm{a}}_{21}^\top \varvec{\textrm{A}}_{22}^{-1} a_{21} \right) x_1^2 :=\nu \, x_1^2}\), where both constants

are obtained from the definition of \(\varvec{\textrm{Q}}\). Finally, we have

Remark 3.3.2

This problem is similar to the problem in Sect. 4.1, with deterministic volatility.

Moderate deviations, though less studied than large deviations in mathematical finance, have been applied to a wide variety of settings beyond Heston, predominantly in multiscale systems (Morse & Spiliopoulos, 2017, 2020). A unifying treatment of pathwise moderate deviations for Markovian models commonly used in financial applications can be consulted in Jacquier and Spiliopoulos (2019), and further developments in the context of (rough) fractional volatility models were studied in Bayer et al. (2018) and in Jacquier and Pannier (2022).

4 Examples

Our setting allows for a variety of stochastic volatility models. We show some examples below, and give special attention to the Heston model (Heston, 1993), the focus of our numerical experiments.

4.1 Black–Scholes with deterministic volatility

We first consider the Black–Scholes model with time-dependent deterministic volatility, namely

where \(\sigma \in \mathcal {C}_T\) is a deterministic function adhering to Assumption 1.4.1. We provide full details of this specific case, which we will often refer to later on. We consider options with payoffs that are continuous functions of X. Let \(G:\mathcal {C}_{T}\rightarrow \mathbb {R}^+\) and \(F :=\log |G|\), which is then a continuous function with values in \([-\infty ,+\infty )\). Our objective is to find an almost surely positive random variable Z with \(\mathbb {E}[Z] = 1\) minimising \(\mathbb {E}[G^2(X)Z^{-1}] = \mathbb {E}\left[ \textrm{e}^{2F(X)}Z^{-1}\right] \).

4.1.1 Small-noise

The small-noise approximation (1.3) reads

Deterministic change of drift With the change of measure

with \(h\in \mathbb {H}_T^0\), we can write

To apply Varadhan’s lemma and estimate L(h), we assume there exists \(\gamma >1\) such that

This condition is satisfied if, for example, the following assumption holds (for details see the proof of Guasoni and Robertson 2007, Theorem 3.6):

Assumption 4.1.1

Let \(F:\mathcal {C}_T\rightarrow \mathbb {R}\) such that \(F(x)\le K_{1} + K_{2} \sup _{t\in [0,T]}|x_t|^{\alpha }\) for some \(\alpha \in (0,2)\) and \(K_{1}, K_{2}>0\).

Lemma 4.1.1

If \(\dot{h}\) is of finite variation, the function

is well-defined and continuous and for every \(\gamma >0\),

Proof

If \(\dot{h}\) is of finite variation and x is continuous with \(x(0)=0\), then \(\int _0^\cdot \dot{h}_t\textrm{d}x_t\) is well defined as a Riemann-Stieltjes integral and

where \(\textrm{TV}(\cdot )\) denotes the total variation. Similarly, \(\int _0^\cdot \sigma (t)\textrm{d}x_t\) is well defined and

Since F is continuous, the first statement on existence and continuity holds. The second one follows from the computation of exponential Gaussian moments: for every \(\gamma >0\),

\(\square \)

Lemma 4.1.2

If \(\dot{h}\) is of finite variation, then \(L(h) = \sup _{x \in \mathbb {H}_T^0}\mathfrak {L}(x;h)\).

Proof

Let \(\gamma >1\) such that (4.1) holds. Then by Cauchy-Schwarz and Lemma 4.1.1,

so that the conditions of Theorem B.0.1 are verified. The continuity has already been shown. \(\square \)

Since \(\mathcal {G}: W\mapsto X\) is continuous by continuity of the Itô integral, we can introduce \(\widetilde{F} =F\circ \mathcal {G}\) and the existence of a minimum to the dual version of \(\inf \limits _{h \in \mathbb {H}_T^0} \sup \limits _{x \in \mathbb {H}_T^0} \mathfrak {L}(x;h)\), namely \(\sup \limits _{x \in \mathbb {H}_T^0} \inf \limits _{h \in \mathbb {H}_T^0}\mathfrak {L}(x;h)\), can be proved as in Guasoni and Robertson (2007) under Assumption 4.1.1 by choosing \(M=0\) in Guasoni and Robertson (2007, Lemma 7.1). The minimum is then attained for \(h=x\) and equal to

Furthermore, it immediately follows from Guasoni and Robertson (2007, Theorem 3.6) that, if \(h^*\in \mathbb {H}_T^0\) is of finite variation and is a solution to (4.2), then it is asymptotically optimal if

Therefore, in order to derive a change of measure, we search for \(h^{*} \in \mathbb {H}_T^0\) such that

Simplified deterministic change of drift We consider a simplified version of the problem: since \(\varepsilon \int _0^\cdot \sigma ^2(t)\textrm{d}t\) is exponentially equivalent to 0, the previous results remain valid when replacing \(F(-\frac{1}{2}\int _0^\cdot \sigma ^2(t)\textrm{d}t + \int _0^\cdot \sigma (t) \dot{x}_t \textrm{d}t)\) with \(F(\int _0^\cdot \sigma (t) \dot{x}_t \textrm{d}t)\). The problem then becomes

4.1.2 Application to options with path-dependent payoff

Consider the payoff \(G(\vartheta \circ _{T}X)\) with \(G:\mathbb {R}^+ \rightarrow \mathbb {R}^+\) differentiable and \(\vartheta \) a positive continuous function of finite variation. The optimisation problem reads

with \(F = \log |G(\cdot - \frac{1}{2}\int _0^T\vartheta _t \sigma ^2(t) \textrm{d}t)|\) in the log-price small-noise approximation. The mapping \(\dot{y}_t = \vartheta _t\sigma (t)\dot{x}_t\) yields the Euler-Lagrange equation \( \frac{\textrm{d}}{\textrm{d}t}\frac{\dot{y}_t}{(\vartheta _t\sigma (t))^2} = 0\), or in terms of the original problem \(\frac{\textrm{d}}{\textrm{d}t}\frac{\dot{x}_t}{\vartheta _t\sigma (t)} = 0\). We thus search for a solution \(\dot{x}_t = \lambda \vartheta _t\sigma (t)\) for some \(\lambda \in \mathbb {R}\). The optimisation problem becomes

For example, if \(F(x) = \log (\textrm{e}^x - \textrm{e}^c)^+\) for \(x,c \in \mathbb {R}\), \(\widetilde{\lambda }\) will be the unique solution on \((1,\infty )\) of \(v\lambda + \log (\lambda - 1) - \log (\lambda ) - c = 0\). The following payoffs are standard and satisfy this setup:

-

(European Call option): \(G(x) = (\textrm{e}^{x} - K)^+\) and \(\vartheta _t=1\) with \(x^+ = \max (0,x)\).

-

(Geometric Asian Call option): \(G(x) = (\textrm{e}^{x} - K)^+\) and \(\vartheta _t=1 - t/T\).

4.1.3 Small-time

The small-time approximation (1.4) reads

with \(X_0 ^{\varepsilon } = 0\) and \(W^{\varepsilon } = \sqrt{\varepsilon }W\). The couple \(\{\sigma (t\varepsilon ), W_t^\varepsilon \}\) is exponentially equivalent to \(\{\sigma (0),W_t^\varepsilon \}\). Since \(\textrm{I}^{W}\) is the good rate function of the LDP verified by \(\{W^\varepsilon \}\) by Schilder’s Theorem (Dembo & Zeitouni, 2010, Theorem 5.2.3), \(\{\sigma (t\varepsilon ), W_t^\varepsilon \}\) verifies an LDP with good rate function \(\textrm{I}(s,w) = \textrm{I}^{W}(w)\) if \(s = \sigma (0)\) and is infinite otherwise. By Theorem 2.1.3, \(\{\int _0^\cdot \sigma (t\varepsilon )\textrm{d}W_t^\varepsilon ,W^\varepsilon \}\) satisfies an LDP with the same good rate function as \(\{\int _0^\cdot \sigma (0)\textrm{d}W_t^\varepsilon ,W^\varepsilon \}\). Noticing that \(\int _0^\cdot \varepsilon \sigma (t\varepsilon )^2\textrm{d}t\) is exponentially equivalent to zero, our method then leads to the same solution as the problem for \(\textrm{d}X_t^{\varepsilon } = -\frac{1}{2}\varepsilon \sigma ^2(0)\textrm{d}t + \sigma (0) \textrm{d}W_t^{\varepsilon }\), which was treated above. In this small-time setting, we lose all information on the path of \(\sigma \), except for its initial value.

4.2 Ornstein–Uhlenbeck

Lognormal-based stochastic volatility models, such as Sepp and Karasinski (2012), read

This model clearly satisfies all the assumptions in the previous sections (linear drift and diffusion) and therefore the importance sampling results can be applied.

4.3 Rough volatility

Our setup above clearly does not include rough volatility models (Gatheral et al., 2018), where the instantaneous variance process is driven by a continuous Gaussian process with Hurst index in \((0,\frac{1}{2})\). However, recent developments have proved, with various degrees of generality, pathwise large and moderate deviations for this class of models (Gerhold et al., 2021; Gulisashvili, 2018, 2021; Jacquier et al., 2018; Jacquier & Pannier, 2022), and our general results above can definitely be tailored to them. We however leave this model-specific analysis to future endeavours.

4.4 The Heston model

The Heston model is one of the most widely used stochastic volatility model in Finance, first proposed in Heston (1993), in which the log stock price process satisfies

for two Brownian motions B and W such that \(\textrm{d}\langle B, W\rangle _{t} = \rho \ \textrm{d}t\). The coefficient \(\kappa >0\) is the speed of mean reversion of the volatility process, while \(\theta >0\) is the long-term mean and \(\xi >0\) is the volatility of the volatility process. The variance process V admits a unique strong solution by Yamada–Watanabe’s conditions (Karatzas & Shreve, 1998, Proposition 2.13), and so does X. In the setting of (1.6) the functions f and g take the form

and therefore Assumption 1.4.1 clearly holds.

4.4.1 Small-noise LDP

From Sect. 2.1, Assumption 2.1.2 is clearly satisfied, and thus Theorem 1.4.1 holds. In the Heston model, the system (2.5) becomes

Solving the first ODE and plugging it into the second one gives for all \(t\in [0,T]\)

where \(\lambda >0\) is an arbitrary constant and \(A_t = \frac{U_t}{\sqrt{\psi _t}}\) is the solution to the Riccati equation. The option payoff at the terminal time determines the boundary conditions through the Euler-Lagrange equations so that

with \(F_T=F\left( \smallint _0^T\vartheta _t\left( Z\sqrt{\psi }-\frac{1}{2}\psi \right) \textrm{d}t\right) \). Since both conditions include the same optimising variable, the resulting problem becomes an optimisation in \(\mathbb {R}^2\) over \(\lambda \) and \(A_T\) (or equivalently \(A_0\)) and is thus much simpler than the original optimisation. After solving for \(A_t\) for all \(t\in [0,T]\), we then solve for \((\varphi ,\psi )\) by writing U and Z in terms of it using (2.4).

Example 4.4.1

In the case \(\vartheta _t=\vartheta >0\) constant, let \(C(\lambda ):=\frac{1}{2}\xi \lambda \vartheta \left( \frac{1}{2}-\frac{1}{4}\overline{\rho }^2\lambda \vartheta -\rho \frac{\kappa }{\xi }\right) \) so that the Riccati equation (4.5) reads

the solution to which is

where \(D\in \mathbb {R}\) is determined from the initial condition on A. Since \(A_t=\tfrac{U_t}{\sqrt{\psi _t}}=\tfrac{\dot{\psi }_t-\kappa (\theta -\psi _t)}{\psi _t}\), we are to solve \(\dot{\psi }_t + (\kappa - A_t)\psi _t = \kappa \theta \), for \(t \in [0,T]\), which is just a non-homogeneous linear ODE with the solution

Then the optimisation problem for \(\varvec{\textrm{h}}\) reduces to

which is an optimisation over \((A_0,\lambda )\in \mathbb {R}^2\).

4.4.2 Small-time LDP

In the context of the small-time LDP, Assumption 2.2.1 is clearly satisfied and therefore Theorem 2.2.1 applies immediately. Consider for example an option with path-dependent payoff \(G(\vartheta \circ _{T}X)\) as in Sect. 2.1.3. In the deterministic case, we have

where \(U(\psi ) = \frac{\dot{\psi }}{\xi \sqrt{\psi }}\) and \(Z(\varphi ,\psi ) = \frac{\dot{\varphi }}{\sqrt{\psi }}\). Similarly to Example 4.4.1, with \(A_t = \frac{U_t}{\sqrt{\psi _t}}\),

for all \(t\in [0,T]\), which is very similar to (4.5), but for the \(\kappa \) term.

4.4.3 Large-time moderate deviations

In the context of Sect. 3.3, the conditions of Assumption 3.0.1 are clearly satisfied. In this case, the invariant measure \(\mu \) is a Gamma distribution \(\Gamma \big (\frac{2\kappa \theta }{\xi ^2}, \frac{2\kappa }{\xi ^2}\big )\) as shown in Cox et al. (1985, Sect. 3). Therefore, in light of Theorem 3.3.1, \(\overline{v} = \theta \) and the solution of the Poisson equation \(\dot{\varsigma }(y)=-\frac{1}{2\kappa }\) is constant, which means that \(\varsigma (y) = (\theta -y)/(2\kappa )\) because of the constraint \(\int _{0}^{\infty }\varsigma (y)\mu (\textrm{d}y)=0\). Therefore

From this, noting that \(\dot{\varsigma }(y)g(y) = -\xi \sqrt{y}/(2\kappa )\) we can calculate the constants (3.9):

4.4.4 Other

Results in Heston which are not mentioned in this section follow directly from their corresponding optimisation problems in Sects. 2 and 3 by choosing f and g as in (4.4).

5 Numerical results

In all the different settings we studied, the final form of the optimisation problem is

where F was linked to the payoff, \(\ell \) to the rate function of the (log-)price process and \(\varphi \) and \(\phi \) are absolutely continuous paths that arise from Varadhan’s lemma of the (log-)price and volatility processes respectively. In the tables below, we summarise all problems considered so far. As one can see, in the deterministic drift setting, the MDP problem is usually as simple as solving the problem under the Black–Scholes (BS) model or at least by approximating the model with a Black–Scholes model. Furthermore, the variance reduction for geometric Asian options are also similar under the MDP with deterministic drift change, meaning advantage over a simple BS model is not significant. However, when it comes to the stochastic change of drift of the form \(\int _0^\cdot \dot{h}_t\sqrt{V_t}\textrm{d}t\), the MDP problems are slightly harder than in BS approximation and the variance reduction results are in fact significantly better. We study stochastic change of drift in all cases except in the MDP large-time case, where the invariant distribution of \(\int _0^\cdot \dot{h}_{t}\sqrt{V_t}dt\) is not immediate and would require a careful study, which is outside the scope of this paper.

5.1 Numerical results for Black–Scholes

We first provide numerical evidence in the Black–Scholes model (Sect. 4.1) with \(S_0=50\) in the log-price small-noise approximation. In order to compare estimators, we look at Asian Arithmetic Call options, with payoff \((\frac{1}{T}\int _0^T \exp \{X_t\} \textrm{d}t - K)^+\). The form of the solution of the optimisation problem studied previously can be found in Guasoni and Robertson (2007). We compare the naive Monte–Carlo estimator to:

-

antithetic Monte–Carlo estimator;

-

control estimator based on the price of Geometric Asian options with payoff \(\left( \exp \left\{ \frac{1}{T}\int _0^T X_t \textrm{d}t\right\} - K\right) ^+\), that can be computed explicitly;

-

LDP-based importance sampling estimator above.

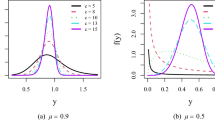

Instead of simulating \(\int _0^T \exp (X_t)\textrm{d}t\), we consider a discretised payoff on \(n=252\) dates and draw \(10^5\) paths. For the LDP-based estimator, the law of W after the change of measure is given by Girsanov theorem. In what follows, when we refer to variance reduction we mean the ratio of variance of the classical Monte–Carlo estimator over the variance of estimator in question. As we can see in Table 3 and in Fig. 2, even in non-rare events, the estimator derived using LDP provides good variance reduction. However, it is mainly in the context of rare events that it performs best and outperforms the other estimators (Fig. 1 and Table 3), revealing the true power of LDP-based importance sampling estimators.

Left: Estimator with with the highest variance reduction among antithetic, control and LDP estimators (Sect. 5.1) for different values of \(\sigma \), K, T. Right: Best performing estimator in terms of variance reduction among antithetic, control and LDP estimators (Sect. 5.1) as a function of probability of a positive payoff (estimated using Monte–Carlo). Clearly, the LDP estimator performs best when probability of exercising an option is low

5.2 Numerical results for the Heston model

5.2.1 Asian option in Heston

To compare variance reduction results in the Heston model, we look at Asian Geometric Call options, with payoffs of the form

where \(x^+ = \max \{x,0\}\). We consider the following model parameters (realistic on Equity markets) for the Heston model recalled in Sect. 4.4:

To simulate the paths (X, V) on [0, T], we use a standard Euler-Maruyama scheme for X, but use the scheme (Lord et al., 2009) for the CIR process in the volatility, which is upward biased,Footnote 2 however nevertheless converges strongly in \(L^1\) to the true process V, which is enough for the purpose of pricing. For \(n\in \mathbb {N}\), \(\Delta =\frac{T}{n}\) and the increments of the Brownian motion \(\{\Delta W_{i}^n\}_{i=0}^{n-1}\) the discretisation scheme over [0, T] for the variance process \(\{V_i^n\}_{i=0}^n\) reads:

In what follows, we compare different LDP and MDP, with \(n=252\) trading days per year. All the results are computed for \(T=1\) using \(N_{\text {MC}}=500,000\) Monte–Carlo samples. We also consider an antithetic estimator and an LDP estimator derived assuming a deterministic volatility (denoted by BS). Furthermore, since LDP-based deterministic changes of drift in the BS setting (or in cases where the final form of the optimisation problem is similar) are easy to compute, we also propose a fully adaptive scheme based on the BS estimator:

where \(h^i_t\) is the best deterministic change of drift up to the i-th discretisation step.Footnote 3 We shall refer to deterministic schemes to mean changes of law with deterministic changes of drift and to adaptive changes of drift for changes of law with drift of the form \(\int _0^\cdot \dot{h}_t\sqrt{V_t}\textrm{d}t\).

5.2.2 LDP results in different settings

We now look at the results of LDP based estimators in small-noise, small-time and large-time setting. Figure 3 indicates that the estimators derived in small-time setting provide good results, but are outperformed by small-noise estimators. Although not apparent in the figure, looking at Table 4, the adaptive estimators provide slightly better results, as a matter of fact, they are notably better for small strikes. However, the computation time is also higher for adaptive estimators, which balances out the slight increase in variance reduction for higher strikes. It thus seems that LDP adaptive estimators (in this case) imply a higher computational cost (given in Table 6) which is not justified by the variance reduction they can provide. In Tables 4, 5, 7, we indicate in bold the methods that provide the largest variance reduction.

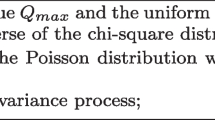

5.2.3 MDP results in different settings

In the deterministic case, all considered estimators have similar variance reduction (see Fig. 4). To be more precise, the BS estimator has a very similar variance reduction or even even slightly outperforms the MDP based estimators (left plot of Fig. 4, where the lines are almost indistinguishable except for very high strikes, outside the ‘moderate’ domain, which hence cause numerical problems). Therefore, in that aspect, MDP based estimators do not justify their higher computational cost compared to the simple LDP-BS estimator. In the adaptive case, the BS estimator performs slightly better than before, whereas the MDP based estimators significantly outperform their results from the deterministic case and those of the BS estimator. Moreover, as it will be discussed in the next section, their variance reduction is in fact even close to that of the LDP based estimators.

Variance reduction for MDP based estimators for in log-scale. Left: deterministic change of drift. Right: adaptive changes of drift. Because of computational problems, the adaptive small-time MDP estimator was not computed correctly for a strikes greater than 75. Nevertheless, it looks to be outperformed significantly by other MDP estimators

5.2.4 Overall comparison

Looking at Fig. 5, as expected the LDP small-noise adaptive estimators perform best, although MDP small-noise and large-time adaptive estimators are not far behind. As for the computation times, Table 6 indicates MDP estimators are on average about \(10\%\) and LDP estimators approximately \(15\%\) slower than the corresponding standard BS estimators. The fully adaptive BS estimator provides interesting results, especially for near-the-money strikes, where it performs much better than MDP and LDP estimators. Although the estimator is time-consuming, it can still provide a good balance between variance reduction and computation time for certain strikes (Tables 4, 5 and 6 and Fig. 6). In the following three tables and plots, we use the following short notations (with in brackets their precise meanings, used in the graphs):

-

Prob: Probability of having a positive payoff.

-

LDPsn (LDP small-noise): Deterministic estimator based on LDP in small-noise log-price setting.

-

LDPsn A (LDP small-noise adaptive): Adaptive estimator based on LDP in small-noise log-price setting.

-

BS (BS approximation): Deterministic BS estimator.

-

BS A (BS approximation adaptive): Adaptive BS estimator.

-

MDPsn A (MDP small-noise adaptive): Adaptive estimator based on MDP in small-noise log-price setting.

-

BS A2 (BS fully adaptive): Fully adaptive BS estimator.

-

Ant (antithetic): Antithetic estimator.

-

Classic: Classic Monte–Carlo estimator.

5.2.5 Variance swaps in Heston

The methodology can also be applied to options with payoffs depending on volatility, for example for options with payoffs of the form \(\int _0^T V_t 1\hspace{-2.1mm}{1}_{\{S_t\ge K\}}\textrm{d}t\). Consider \(T = 1\) and different strikes \(K>0\) under the Heston model with the same parameters as above. The results for different estimators are summarised in Table 7. For small strikes, the LDP estimators based on the BS approximation are not performing well, which is not surprising since in the BS approximation the payoff of the option in question is almost constant for small strikes. On the other hand, LDP and MDP give good results. We also notice a clear difference in favour of non-adaptive changes of drift. For high strikes, we have the same behaviour as before: adaptive MDP estimators give intermediate results between LDP (performing best) and BS. At this stage though, we do not have a clear explanation for the observed differences in the performance of non-adaptive and adaptive changes of drift for different strikes. Further research is required to understand the underlying dynamics better.

Notes

Since \(\varvec{\textrm{Q}}\) is positive-definite, both \(\varvec{\textrm{A}}\) and \(\varvec{\textrm{A}}_{22}\) are positive-definite and thus invertible.

There are many discretisation schemes for the Heston model. Since the objective of this paper is not to study the effects of different schemes we satisfy ourselves with Lord et al. (2009).

Fully adaptive schemes are computationally very heavy, therefore we only consider it in the Black–Scholes setting. The change of law is computed \(n \times N_{\text {MC}}\) times (\(252 \times 5\textrm{E}5 = 1.26\textrm{E}8\) in our case).

It is easy to show that \(\eta ^{\varepsilon } =\textrm{e}^{\int _0^\cdot f(\psi _s)\textrm{d}s}\int _0^\cdot \textrm{e}^{-\int _0^t f(\psi _s)\textrm{d}s}\widetilde{\eta }_\varepsilon (t) \textrm{d}t \) solves the ODE in (A.2).

References

Baldi, P., & Caramellino, L. (2011). General Freidlin–Wentzell large deviations and positive diffusions. Statistics & Probability Letters, 81, 1218–1229.

Bayer, C., Friz, P. K., Gulisashvili, A., Horvath, B., & Stemper, B. (2018). Short-time near-the-money skew in rough fractional volatility models. Quantitative Finance, 19, 779–798.

Biagini, S., Pennanen, T., & Perkkiö, A.-P. (2018). Duality and optimality conditions in stochastic optimization and mathematical finance. Journal of Convex Analysis, 25, 403–420.

Binder, K., Ceperley, D. M., Hansen, J.-P., Kalos, M., Landau, D., Levesque, D., Mueller-Krumbhaar, H., Stauffer, D., & Weis, J.-J. (2012). Monte Carlo methods in statistical physics (Vol. 7). Springer Science & Business Media.

Chiarini, A., & Fischer, M. (2014). On large deviations for small-noise Itô processes. Advances in Applied Probability, 46, 1126–1147.

Conforti, G., De Marco, S., & Deuschel, J.-D. (2015). On small-noise equations with degenerate limiting system arising from volatility models. In P. K. Friz, J. Gatheral, A. Gulisashvili, A. Jacquier, J. Teichmann (Eds.), Large deviations and asymptotic methods in finance (pp. 473–505). Springer.

Cox, J., Ingersoll, J., & Ross, S. (1985). A theory of the term structure of interest rates. Econometrica, 53, 385–407.

Dembo, A., & Zeitouni, O. (2010). Large deviations techniques and applications. Springer.

Donati-Martin, C., Rouault, A., Yor, M., & Zani, M. (2004). Large deviations for squares of Bessel and Ornstein–Uhlenbeck processes. Probability Theory and Related Fields, 129, 261–289.

Dupuis, P., & Johnson, D. (2017). Moderate deviations-based importance sampling for stochastic recursive equations. Advances in Applied Probability, 49, 981–1010.

Dupuis, P., Spiliopoulos, K., & Wang, H. (2012). Importance sampling for multiscale diffusions. Multiscale Modeling & Simulation, 10, 1–27.

Dupuis, P., & Wang, H. (2004). Importance sampling, large deviations, and differential games. Stochastics: An International Journal of Probability and Stochastic Processes, 76, 481–508.

Freidlin, M. I., & Wentzell, A. D. (2012). Random perturbations of dynamical systems. Springer.

Garcia, J. (2007). A large deviation principle for stochastic integrals. Journal of Theoretical Probability, 21, 476–501.

Gatheral, J., Jaisson, T., & Rosenbaum, M. (2018). Volatility is rough. Quantitative Finance, 18, 933–949.

Gerhold, S., Jacquier, A., Pakkanen, M., Stone, H., & Wagenhofer, T. (2021). Pathwise large deviations for the rough Bergomi model: Corrigendum. Journal of Applied Probability, 58, 849–850.

Glasserman, P. (2004). Monte Carlo methods in financial engineering (Vol. 53). Springer.

Glasserman, P., & Wang, Y. (1997). Counterexamples in importance sampling for large deviations probabilities. The Annals of Applied Probability, 7, 731–746.

Grbac, Z., Krief, D., & Tankov, P. (2021). Long-time trajectorial large deviations and importance sampling for affine stochastic volatility models. Advances in Applied Probability, 53, 220–250.

Guasoni, P., & Robertson, S. (2007). Optimal importance sampling with explicit formulas in continuous time. Finance and Stochastics, 12, 1–19.

Gulisashvili, A. (2018). Large deviations principle for Volterra type fractional stochastic volatility models. SIAM Journal on Financial Mathematics, 9, 1102–1136.

Gulisashvili, A. (2021). Time-inhomogeneous Gaussian stochastic volatility models: Large deviations and super roughness. Stochastic Processes and Their Applications, 139, 37–79.

Hartmann, C., Schütte, C., Weber, M., & Zhang, W. (2018). Importance sampling in path space for diffusion processes with slow-fast variables. Probability Theory and Related Fields, 170, 177–228.

Heston, S. L. (1993). A closed-form solution for options with stochastic volatility with applications to bond and currency options. Review of Financial Studies, 6, 327–343.

Jacquier, A., Pakkanen, M. S., & Stone, H. (2018). Pathwise large deviations for the rough Bergomi model. Journal of Applied Probability, 55, 1078–1092.

Jacquier, A., & Pannier, A. (2022). Large and moderate deviations for stochastic Volterra systems. Stochastic Processes and Their Applications, 149, 142–187.

Jacquier, A., & Spiliopoulos, K. (2019). Pathwise moderate deviations for option pricing. Mathematical Finance, 30, 426–463.

Karatzas, I., & Shreve, S. E. (1998). Brownian motion and stochastic calculus. Springer.

Klenke, A. (2014). Probability theory. Springer.

Lord, R., Koekkoek, R., & Van Dijk, D. (2009). A comparison of biased simulation schemes for stochastic volatility models. Quantitative Finance, 10, 177–194.