Abstract

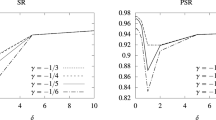

We discuss the problem of constructing information criteria by applying the bootstrap method. Various bias and variance reduction methods are presented for improving the bootstrap bias correction term in computing the bootstrap information criterion. The properties of these methods are investigated both theoretically and numerically, using a statistical functional approach. It is shown that the bootstrap method automatically achieves second-order bias correction if the bias of the first-order bias correction term is properly removed. We also show that the variance associated with bootstrapping can be considerably reduced for various model estimation procedures without requiring any analytical derivation. Monte Carlo experiments are conducted to investigate the performance of the bootstrap bias and variance reduction techniques.
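The following Python sketch illustrates the bootstrap bias correction term described above. It fits an i.i.d. normal model by maximum likelihood, computes a bootstrap bias estimate, and forms the resulting criterion. The normal model, the function names (`normal_loglik`, `normal_mle`, `bootstrap_bias_terms`, `eic`) and the default settings are illustrative assumptions, not the authors' code.

The naive estimator averages log f(X*|θ*) − log f(X|θ*) over bootstrap samples X*. The variance-reduced version splits this difference into three terms:

- D1 = log f(X*|θ*) − log f(X*|θ̂)
- D2 = log f(X*|θ̂) − log f(X|θ̂)
- D3 = log f(X|θ̂) − log f(X|θ*)

It then drops D2, whose expectation under the empirical distribution is exactly zero. We assume this decomposition as one example of the kind of variance reduction the abstract refers to.

```python
import math
import random


def normal_loglik(data, mu, var):
    """Log-likelihood of i.i.d. N(mu, var) observations."""
    n = len(data)
    ss = sum((x - mu) ** 2 for x in data)
    return -0.5 * n * math.log(2.0 * math.pi * var) - ss / (2.0 * var)


def normal_mle(data):
    """Maximum likelihood estimates: sample mean and variance with divisor n."""
    n = len(data)
    mu = sum(data) / n
    var = sum((x - mu) ** 2 for x in data) / n
    return mu, var


def bootstrap_bias_terms(data, n_boot=1000, variance_reduced=False, seed=0):
    """Per-replicate bootstrap estimates of the log-likelihood bias (hypothetical helper)."""
    rng = random.Random(seed)
    n = len(data)
    theta_hat = normal_mle(data)
    ll_data_hat = normal_loglik(data, *theta_hat)
    terms = []
    for _ in range(n_boot):
        boot = [rng.choice(data) for _ in range(n)]  # resample with replacement
        theta_star = normal_mle(boot)
        ll_boot_star = normal_loglik(boot, *theta_star)
        ll_data_star = normal_loglik(data, *theta_star)
        if variance_reduced:
            d1 = ll_boot_star - normal_loglik(boot, *theta_hat)
            d3 = ll_data_hat - ll_data_star
            terms.append(d1 + d3)  # D2 omitted: its bootstrap expectation is zero
        else:
            # Naive term D1 + D2 + D3, which telescopes to this difference
            terms.append(ll_boot_star - ll_data_star)
    return terms


def eic(data, n_boot=1000, variance_reduced=True, seed=0):
    """Bootstrap information criterion: -2 log L(theta_hat) + 2 * (mean bias estimate)."""
    theta_hat = normal_mle(data)
    terms = bootstrap_bias_terms(data, n_boot, variance_reduced, seed)
    bias = sum(terms) / len(terms)
    return -2.0 * normal_loglik(data, *theta_hat) + 2.0 * bias


if __name__ == "__main__":
    gen = random.Random(1)
    sample = [gen.gauss(0.0, 1.0) for _ in range(100)]
    print(eic(sample, variance_reduced=False), eic(sample, variance_reduced=True))
```

Both versions use the same resamples, so they target the same quantity. The variance-reduced replicates typically scatter far less, because D2 is a sum of n resampled terms whose variance grows with the sample size. The second-order correction mentioned in the abstract (removing the bias of the bias estimate itself) is not attempted in this sketch.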

About this article

Cite this article

Kitagawa, G., Konishi, S. Bias and variance reduction techniques for bootstrap information criteria. Ann Inst Stat Math 62, 209–234 (2010). https://doi.org/10.1007/s10463-009-0237-1
