Abstract

Cancer screening and diagnosis using innovative artificial intelligence (AI) tools have improved treatment strategies and patient survival. With the rapid development of imaging technologies and the rise of AI, there is a significant opportunity to improve cancer diagnostics by combining image analysis with AI algorithms. This article provides a comprehensive review of studies that have investigated the application of AI-assisted image processing in cancer diagnosis. We searched the Web of Science and Scopus databases to identify relevant studies published between 2014 and January 2024. The search strategy used targeted keywords such as cancer diagnostics, image analysis, artificial intelligence, and advanced imaging techniques. We limited the review to articles written in English that used AI-assisted image processing in cancer diagnosis. The results show that machine learning algorithms, including deep learning, have enabled computer-aided diagnosis systems that detect tumors efficiently, thereby facilitating early cancer detection. Additionally, various authors have explored the integration of personalized treatment approaches and precision medicine, which allows treatment plans to be tailored to individual patient characteristics and needs. The review emphasizes the potential of AI-assisted image processing to revolutionize cancer diagnostics. The insights gained from this study contribute to the current understanding of the field and pave the way for future research and development aimed at advancing cancer diagnostics using image analysis and artificial intelligence.


1 Introduction

Cancer is a complex and devastating disease characterized by the uncontrolled growth and spread of abnormal cells within the body (Bethesda 2007). Early and accurate diagnosis is essential for determining the cancer type, stage, and most appropriate treatment, which in turn improves survival and reduces morbidity and mortality (Pulumati et al. 2023). Delays in diagnosis can have severe consequences and may compromise treatment effectiveness and patient outcomes (Al-Azri 2016; Pulumati et al. 2023). In recent years, medical imaging techniques have emerged as invaluable tools for diagnosing various cancers, including breast, lung, prostate, and brain cancers (Hollings and Shaw 2002; Fass 2008). These advanced imaging techniques, such as X-ray imaging, computed tomography (CT), magnetic resonance imaging (MRI), and positron emission tomography (PET), visualize internal structures and enable clinicians to detect and characterize cancerous lesions (Arabahmadi et al. 2022; Abhisheka et al. 2023). For example, in lung nodule detection, incidence screening with CT performed 1–2 years after a baseline CT scan achieved a sensitivity of 94.4–96.4% and a negative predictive value of 99.9% in the NLST (National Lung Screening Trial) and NELSON (Nederlands-Leuvens Longkanker Screenings Onderzoek) trials (Rubin 2015).
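Sensitivity and negative predictive value (NPV), the two figures quoted above, are both derived from the confusion matrix of a screening test. The following minimal sketch uses purely hypothetical counts, not NLST or NELSON data, to show how each metric is computed:

```python
def screening_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Compute standard diagnostic metrics from confusion-matrix counts."""
    return {
        # Sensitivity: share of true cancers that the test flags as positive.
        "sensitivity": tp / (tp + fn),
        # Specificity: share of cancer-free cases that the test calls negative.
        "specificity": tn / (tn + fp),
        # Positive predictive value: share of positive calls that are true cancers.
        "ppv": tp / (tp + fp),
        # Negative predictive value: share of negative calls that are truly cancer-free.
        "npv": tn / (tn + fn),
    }


# Hypothetical counts, for illustration only.
metrics = screening_metrics(tp=95, fp=400, tn=9_500, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these made-up counts, sensitivity is 0.95 while NPV is about 0.999. In low-prevalence screening, negatives vastly outnumber missed cancers, so NPV tends to stay high even when the positive predictive value is modest.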

Such medical images can be difficult to interpret, however, and skilled radiologists and oncologists are required to analyze them accurately and identify cancerous regions (Schlemmer et al. 2018). Even for experienced professionals, challenges persist (Limkin et al. 2017; Schlemmer et al. 2018). Among these challenges is the inherent risk of misdiagnosis in image-based cancer detection (Huang et al. 2020; Mu et al. 2022). This highlights a crucial point: delayed or inaccurate diagnoses can significantly affect patient well-being (Al-Azri 2016). Detecting cancer only at an advanced stage introduces a host of complications that ripple through the entire treatment trajectory (Al-Azri 2016; Schlemmer et al. 2018). Conversely, early detection improves patients’ overall survival. For breast cancer, it is estimated that women who have had symptoms for 3 to 6 months have a 7% lower five-year survival rate than those with a shorter symptom duration (Richards 2009). Research also indicates that early diagnosis can substantially reduce cancer mortality. For example, a modeling study found that multi-cancer early detection (MCED) screening could potentially reduce late-stage cancer diagnoses by 78% and yield an absolute reduction of 104 deaths per 100,000 individuals screened (Hubbell et al. 2021).

Late-stage detection not only compromises the efficacy of treatment interventions but also lowers survival rates (Schlemmer et al. 2018; Crosby et al. 2022). The consequences extend beyond the immediate diagnostic phase. They influence the overall effectiveness of therapeutic measures and directly affect the patient’s chances of survival, as well as morbidity and mortality. In light of these implications, the call to action is clear: we must not only navigate the challenges of image interpretation but also refine and expedite diagnostic processes. The COVID-19 pandemic illustrates the importance of early diagnosis in cancer. Limited access to medical care during the pandemic disrupted cancer diagnosis and treatment. This disruption could lead to a temporary decrease in cancer incidence, followed by a rise in cases diagnosed at more advanced stages and, ultimately, a higher mortality rate (Siegel et al. 2022).

Addressing the complexities of interpreting medical images is a crucial step in enhancing the overall efficacy of cancer diagnosis and treatment. By acknowledging and surmounting these challenges, the medical community can pave the way for swifter, more accurate diagnoses that hold the potential to transform the landscape of cancer care and ultimately improve patient outcomes.

Implementing advanced imaging techniques and AI in cancer diagnosis presents significant challenges, particularly in resource-constrained settings (Bi et al. 2019). Financial limitations often hinder access to modern equipment and reliable internet connectivity, which are crucial for the effective use of these technologies (Bi et al. 2019; Chen et al. 2022). Additionally, a shortage of trained healthcare professionals skilled in advanced diagnostics can lead to underutilization of available tools (Jenny et al. 2021). Cultural barriers and social stigmas surrounding cancer further complicate timely diagnosis and treatment (Oystacher et al. 2018). Addressing these challenges is essential for advancing global healthcare equity, as disparities in cancer care between high-income and low-income countries persist. Efforts must focus on developing cost-effective solutions tailored to local contexts, ensuring that all populations benefit from advancements in cancer diagnostics.

This is where the integration of image analysis and artificial intelligence (AI) techniques comes into play (Huang et al. 2020). Image-processing algorithms and modern AI can improve the accuracy and efficiency of cancer diagnostics (Hunter et al. 2022). The urgency to advance cancer diagnosis has spurred remarkable progress, particularly in image-based identification (Savage 2020; Hunter et al. 2022). Modern tools for imaging medical data have triggered intensive research into the intricate nuances of various cancers (Kumar et al. 2022). Image-based diagnosis, propelled by advances in medical imaging, has emerged as a critical frontier in the pursuit of early and precise cancer detection (Jiang et al. 2023). AI algorithms can analyze vast datasets quickly and accurately, which makes them promising tools for uncovering intricate patterns and anomalies within medical images (Pinto-Coelho 2023; Abdollahzadeh et al. 2024; El-kenawy et al. 2024). This synergy of AI and image processing not only improves diagnostic accuracy but also paves the way for personalized medicine and proactive interventions (Najjar 2023; Pinto-Coelho 2023). As an example of personalized treatment, a precision medicine company has developed the ArteraAI Prostate Test. The test predicts treatment benefit for patients with localized prostate cancer by analyzing multiple patient characteristics from digital pathology images and clinical data, which helps healthcare providers make treatment decisions (2024).

For these reasons, this study first endeavors to explore and provide insights into the effective integration of image analysis and AI algorithms within existing cancer diagnostic workflows. By analyzing methodologies and considerations, the study seeks to offer valuable guidance on seamlessly incorporating these advanced technologies into clinical settings. Secondly, the research addresses the specific challenges and limitations associated with applying image processing and AI techniques to different types of cancers.

2 Methodology

The study aims to conduct a systematic review of the integration of image analysis and AI algorithms to improve clinical practice in cancer diagnosis and treatment. In preparing this manuscript, we strictly adhered to the guidelines of Smith et al. (2011) and, in particular, to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (Page et al. 2021).

2.1 Research questions

In this study, we conducted a systematic review by initially screening titles, abstracts, and full texts of related scientific articles. We selected articles that address at least one of our research questions, namely:

- RQ1: How can image analysis and AI algorithms be effectively integrated into existing cancer diagnostic workflows?
- RQ2: What specific challenges and limitations are associated with applying image processing and AI techniques to different types of cancers?
- RQ3: What are the potential benefits and drawbacks of incorporating these advanced technologies into clinical practice?

By investigating these research questions, we aim to gain insights into the effective integration, challenges, limitations, and potential advantages and disadvantages of utilizing image analysis and AI algorithms in cancer diagnosis and treatment workflows.

2.2 Selection criteria

We included articles written in English and published between 2014 and 2024. This timeframe ensures the inclusion of recent developments, methodologies, and studies that reflect the current state of medical imaging and AI applications in oncology. Because AI is a new and emerging topic in medicine, we decided not to prioritize any type of article (e.g., review, original article, short communication, or letter to the editor), as even a letter to the editor can offer a fresh perspective. We therefore assessed the significance of each retrieved article based on its relevance to our research questions, irrespective of its type.

2.3 Search strategy

For this study, we used the Web of Science database to identify relevant articles. The search keywords were selected based on the study’s objectives and research questions. To ensure accuracy and relevance, we refined them using MeSH (Medical Subject Headings) (Lipscomb 2000). This process yielded the following keywords:

- Cancer
- Cancer diagnostic
- Image processing
- Image analysis
- AI algorithms
- AI techniques
- Challenges and limitations
- Benefits and drawbacks
- Advantages and disadvantages

By using these keywords, we aimed to capture and explore the intersection of image analysis, AI algorithms, cancer diagnosis, challenges, limitations, image processing, AI techniques, different cancer types, benefits, drawbacks, and advanced technologies. To achieve this and to optimize the search strategy for each question and keyword, we developed specific search strings to be used in the Web of Science database, presented in Table 1.
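Search strings of this kind usually follow a common pattern: synonyms within a concept are combined with OR, and concepts are combined with AND. The following sketch assumes that pattern and uses the Web of Science topic field tag `TS=`. The grouping of keywords and the helper names `quote` and `build_query` are hypothetical and do not reproduce the strings in Table 1.

```python
def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(concept_groups: list[list[str]], field_tag: str = "TS") -> str:
    """OR the synonyms within each concept group, then AND the groups together."""
    clauses = ["(" + " OR ".join(quote(t) for t in group) + ")" for group in concept_groups]
    return f"{field_tag}=(" + " AND ".join(clauses) + ")"


# Hypothetical grouping of the keywords listed above (not the strings in Table 1).
rq1_groups = [
    ["cancer", "cancer diagnostic"],
    ["image processing", "image analysis"],
    ["AI algorithms", "AI techniques"],
]
print(build_query(rq1_groups))
```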

In addition, we implemented citation chaining to further enhance the literature review process (Hirt et al. 2020). This involved examining the references cited in the articles retrieved from the search strategies listed in Table 1. By doing so, we aimed to ensure that no relevant topics or studies mentioned in these articles were overlooked.
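One round of backward citation chaining amounts to a set operation: collect everything the retrieved articles cite, then keep only the works the database search did not already return. The following sketch uses hypothetical article IDs and a hypothetical `backward_chain` helper. Any candidates it returns would still have to pass the same screening criteria.

```python
def backward_chain(seed_ids: set[str], references: dict[str, list[str]]) -> set[str]:
    """Return works cited by the seed articles that the database search missed.

    `references` maps an article ID to the IDs of the works it cites.
    Only one round of backward chaining is performed.
    """
    cited: set[str] = set()
    for article_id in seed_ids:
        cited.update(references.get(article_id, []))
    return cited - seed_ids


# Hypothetical article IDs, for illustration only.
search_results = {"A1", "A2", "A3"}
reference_lists = {
    "A1": ["A2", "B7"],
    "A2": ["B7", "B9"],
    "A3": [],
}
print(sorted(backward_chain(search_results, reference_lists)))
```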

2.4 Study selection process

The article selection process for our systematic review on advancing cancer diagnosis and treatment through the integration of image analysis and AI algorithms was conducted with careful attention to detail and rigor. Our approach aimed to ensure that only relevant, high-quality studies were included in our review.

After compiling a list of potential articles, each member of our research team independently reviewed the titles and abstracts to assess their relevance based on pre-defined inclusion and exclusion criteria. These criteria focused on studies that specifically addressed the application of AI in cancer diagnosis and treatment, particularly those utilizing image analysis techniques. The expertise of our team—comprising specialists in AI and oncology—was crucial in evaluating the methodological rigor of each study. We aimed to ensure that only studies employing sound methodologies relevant to our research questions were included. Each article was evaluated by at least two independent reviewers. In cases where reviewers disagreed about the relevance or quality of a study, we engaged in discussions to reach a consensus. If consensus could not be achieved, we consulted the fourth author—a specialist in oncology—to provide additional insights. To maintain objectivity throughout the selection process, we implemented a strict conflict of interest protocol. Researchers who had prior involvement with a study (e.g., as co-authors) recused themselves from evaluating those specific articles to prevent any potential bias.

By following this rigorous selection process, we aimed to ensure that our systematic review accurately reflects the current landscape of AI applications in cancer diagnosis and treatment.

2.5 Data extraction and synthesis

We used structured data extraction to capture essential information from the selected studies, including study design, methodologies for integrating image analysis and AI algorithms, types of cancers studied, outcomes measured, and challenges encountered. This systematic data extraction process was implemented to maintain consistency and accuracy in capturing relevant information. The synthesized data were then interpreted in alignment with the research questions and objectives. The resulting synthesis of data from the included studies provided valuable insights into the successful integration of image analysis and AI algorithms within cancer diagnostic workflows.
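A structured extraction sheet can be modeled as one typed record per study, with the fields listed above. The following sketch assumes such a layout. The class `ExtractionRecord`, its `research_questions` field, the helper `studies_for`, and the example records are all hypothetical illustrations, not the authors' actual extraction form (which is summarized in Supplementary File 2).

```python
from dataclasses import dataclass, field


@dataclass
class ExtractionRecord:
    """One row of a structured data-extraction sheet (illustrative fields)."""
    study_id: str
    study_design: str
    integration_method: str  # how image analysis and AI were integrated
    cancer_types: list[str] = field(default_factory=list)
    outcomes_measured: list[str] = field(default_factory=list)
    challenges: list[str] = field(default_factory=list)
    research_questions: set[str] = field(default_factory=set)  # e.g. {"RQ1", "RQ2"}


def studies_for(records: list[ExtractionRecord], rq: str) -> list[str]:
    """Return the IDs of studies mapped to one research question, in input order."""
    return [r.study_id for r in records if rq in r.research_questions]


# Hypothetical records, for illustration only.
records = [
    ExtractionRecord("S01", "retrospective", "CNN added to pathology viewer",
                     ["prostate"], ["AUC"], ["annotation effort"], {"RQ1", "RQ2"}),
    ExtractionRecord("S02", "review", "none (narrative synthesis)",
                     ["lung", "breast"], [], ["data heterogeneity"], {"RQ2"}),
]
print(studies_for(records, "RQ2"))
```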

2.6 Study quality appraisal

The Critical Appraisal Skills Programme (CASP) Systematic Review tool, a standard in scientific study evaluation, was used for study quality appraisal (2018). This tool, widely used in medicine and other scientific disciplines, evaluates research articles in three main areas: research questions, design and methods, and interpretation of results (Zeng et al. 2015). Each section is scored according to the quality and reliability of the information. The tool comprises 10 questions with possible answers of “Yes”, “No” and “Can’t Tell”, with a scoring system ranging from 1 to 10. Articles scoring 8–10 were considered highly relevant and those scoring 7 moderately relevant, while those scoring 6 or below were deemed irrelevant (Supplementary File 1). Two researchers carried out the appraisal, and discrepancies were resolved through consultation with a third author.

3 Results

3.1 Article selection

Based on our search strategies conducted in the Web of Science database, we identified a total of 1,783 articles. These articles were categorized according to their relevance to our research questions: 452 articles were pertinent to the first question, 1,191 to the second question, and 140 to the third question. However, among these initial findings, we discovered that 845 articles were duplicates. After removing these duplicates, we were left with 938 unique articles.

From this refined list, we applied several exclusion criteria. We excluded 35 articles because they were not written in English and 520 articles because they fell outside our specified disciplines. This reduced the count to 383 articles. Upon further review of the titles and abstracts, we identified an additional 186 articles that did not meet our study design criteria, which left 197 articles for full-text review.

After thoroughly examining the full texts of these 197 articles, we excluded another 144 due to inappropriate study designs. Consequently, we arrived at a final selection of 53 articles for comprehensive analysis: 14 related to the first question, 23 to the second question, and 16 to the third question.

In addition to our initial search, we conducted a citation-chaining search that yielded further relevant findings. This approach led us to discover one additional article relevant to the first question, two additional articles for the second question, and one for the third question. Ultimately, this expanded our final count to 57 articles for full-text analysis: 15 addressing the first question, 25 addressing the second question, and 17 addressing the third question. The entire selection process is visually summarized in Fig. 1.
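Each stage of the selection flow is a subtraction from the previous one, so the reported counts can be checked mechanically. The following script re-derives the totals from the numbers given in this section and adds no new data. The variable names are illustrative.

```python
# Counts reported above, per research question (RQ1, RQ2, RQ3).
retrieved = {"RQ1": 452, "RQ2": 1_191, "RQ3": 140}
duplicates = 845
excluded_language = 35
excluded_discipline = 520
excluded_title_abstract = 186
excluded_full_text = 144
included_search = {"RQ1": 14, "RQ2": 23, "RQ3": 16}
added_by_chaining = {"RQ1": 1, "RQ2": 2, "RQ3": 1}

total = sum(retrieved.values())                    # records identified
unique = total - duplicates                        # after de-duplication
after_screening = unique - excluded_language - excluded_discipline
full_text = after_screening - excluded_title_abstract
final_search = full_text - excluded_full_text      # included from the database search
final = {rq: included_search[rq] + added_by_chaining[rq] for rq in included_search}

# The per-question inclusions must add up to the overall count.
assert sum(included_search.values()) == final_search
print(total, unique, after_screening, full_text, final_search, sum(final.values()))
```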

3.2 Data extraction

In our analysis of 57 studies, we aimed to investigate various aspects including the type of study, types of cancers examined, research purposes, methodologies, results and outcomes measured, conclusions drawn, and challenges faced. The studies encompassed a range of cancer types, with detailed information available in Supplementary File 2.

Study quality appraisal

The quality appraisal of the studies, conducted using the CASP tool, yielded the following results:

- No study had a quality score of 10.
- Two studies had a score of 9 (3.5% of the analyzed articles) and 21 studies a score of 8 (36.8%), so 23 articles (40.4%) were considered highly relevant.
- 24 studies had a score of 7 (42.1%); they were considered moderately relevant.
- 10 studies (17.5%) had a score of 6; they were considered irrelevant.

These findings indicate that most of the reviewed studies (47 of 57, 82.5%) were considered relevant, either highly or moderately. For more detailed information on the quality appraisal and selection of the studies, please consult Supplementary File 3.
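Pooled shares should be computed from pooled counts rather than by adding rounded per-score percentages, because the two approaches can differ by 0.1 percentage points. The following sketch recomputes each relevance group from the per-score counts listed above. It uses the grouping stated in that list: scores of 8 or more are highly relevant, 7 is moderately relevant, and 6 is irrelevant. The helper name `group_shares` is hypothetical.

```python
def group_shares(score_counts: dict[int, int], groups: dict[str, tuple[int, ...]]) -> dict[str, tuple[int, float]]:
    """Return (count, percentage rounded to 0.1) for each group of CASP scores."""
    n = sum(score_counts.values())
    shares = {}
    for name, scores in groups.items():
        count = sum(score_counts.get(s, 0) for s in scores)
        shares[name] = (count, round(100 * count / n, 1))
    return shares


# Number of studies at each CASP score, as reported above.
score_counts = {9: 2, 8: 21, 7: 24, 6: 10}
groups = {"high": (8, 9, 10), "moderate": (7,), "irrelevant": (6,)}
print(group_shares(score_counts, groups))
```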

3.3 Research questions

3.3.1 Integrating image analysis and AI algorithms into cancer diagnostic workflows

We followed the process shown in Fig. 2. The figure illustrates how the effective integration of image analysis and AI algorithms into existing cancer diagnostic workflows can enhance accuracy, efficiency, and overall patient care.

Numerous studies on the integration of image analysis and artificial intelligence algorithms into existing cancer diagnosis workflows have revealed several key findings (Araújo et al. 2017; Hart et al. 2019; Martín Noguerol et al. 2019; Haggenmüller et al. 2021; Jenny et al. 2021; Brancati et al. 2022; Chen and Novoa 2022; Cifci et al. 2022; Schuurmans et al. 2022; Suarez-Ibarrola, Sigle et al. 2022; Baydoun et al. 2023; Mosquera-Zamudio et al. 2023). First, image analysis and AI algorithms show promising results in improving the accuracy and efficiency of cancer diagnosis (Jenny et al. 2021; Brancati et al. 2022; Chen and Novoa 2022; Cifci et al. 2022; Baydoun et al. 2023). These algorithms can help analyze large amounts of data and identify patterns or anomalies that human observers, even qualified ones, may overlook (Goldenberg et al. 2019; Chen and Novoa 2022; Cifci et al. 2022; Suarez-Ibarrola, Sigle et al. 2022; Baydoun et al. 2023). Subtle patterns and anomalies can be difficult for the human eye to spot, especially in the early stages of disease. A clinical trial involving 80,020 women reported that AI outperformed traditional radiologist readings, identifying 20% more breast cancer cases. This result indicates AI’s potential to increase cancer detection rates without increasing false positives (Timsit 2023). Two studies emphasized the importance of data quality and preprocessing (Martín Noguerol et al. 2019; Schuurmans et al. 2022). Collecting large and diverse datasets of cancer images, along with relevant clinical information, is crucial for training AI algorithms effectively (Araújo et al. 2017; Hart et al. 2019; Haggenmüller et al. 2021; Schuurmans et al. 2022).

An important step in integrating artificial intelligence algorithms is image annotation and labeling (Brancati et al. 2022; Suarez-Ibarrola, Sigle et al. 2022). Annotating images with relevant information, such as tumor boundaries or specific features, enables algorithms to learn and make accurate predictions (Liu et al. 2021; Chen and Novoa 2022).
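A tumor boundary is often annotated as a polygon, but segmentation models are usually trained on pixel-level masks. The following sketch converts a polygon annotation into a binary mask using the even-odd (ray-casting) rule. The function `polygon_to_mask` is hypothetical. It assumes vertices are given as (x, y) = (column, row) and evaluates integer pixel coordinates, so pixels on the right and bottom edges fall outside the mask. Production code would more likely use an established rasterizer such as `skimage.draw.polygon`.

```python
import numpy as np


def polygon_to_mask(vertices, shape):
    """Rasterize a polygon annotation (list of (x, y) vertices) into a binary mask.

    Uses the even-odd (ray-casting) rule, evaluated at integer pixel coordinates.
    """
    rows, cols = shape
    ys, xs = np.mgrid[0:rows, 0:cols]
    inside = np.zeros(shape, dtype=bool)
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        if y1 == y2:
            continue  # Horizontal edges never cross a horizontal ray.
        # Pixels whose row lies between the edge endpoints (half-open interval).
        crosses = (y1 > ys) != (y2 > ys)
        # Column at which the edge crosses each pixel's row.
        x_cross = x1 + (ys - y1) * (x2 - x1) / (y2 - y1)
        # Each crossing to the right of a pixel toggles its inside/outside state.
        inside ^= crosses & (xs < x_cross)
    return inside


# Hypothetical square annotation drawn on an 8x8 image.
mask = polygon_to_mask([(1, 1), (5, 1), (5, 5), (1, 5)], shape=(8, 8))
print(int(mask.sum()))
```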

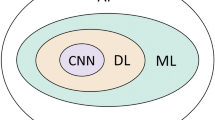

Algorithm development and training are key components of the integration process (Araújo et al. 2017; Mosquera-Zamudio et al. 2023). Convolutional Neural Networks (CNNs) have been widely used to analyze cancer images because they can learn complex features directly from image data (Araújo et al. 2017; Hart et al. 2019; Haggenmüller et al. 2021; Mosquera-Zamudio et al. 2023). CNNs are the most widely employed deep-learning models for tumor detection in the pathology of breast cancer, renal cell carcinoma, and prostate cancer (Folmsbee, Liu et al. 2018; Tabibu et al. 2019).
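A CNN is built from a few basic operations: convolution (in practice, cross-correlation), a ReLU nonlinearity, and pooling. The following NumPy sketch shows what each operation does to an image. In a real CNN the kernels are learned from labeled images; here a fixed vertical-edge kernel and a toy 6x6 image are assumed purely for illustration.

```python
import numpy as np


def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation, the operation a CNN convolutional layer applies."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    """Keep positive responses and set negative ones to zero."""
    return np.maximum(x, 0.0)


def max_pool2x2(x):
    """Downsample by taking the maximum of each non-overlapping 2x2 block."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    x = x[:h, :w]  # drop an odd trailing row/column, if any
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


# Toy 6x6 "image": dark on the left, bright on the right.
image = np.zeros((6, 6))
image[:, 3:] = 1.0
kernel = np.array([[-1.0, 1.0], [-1.0, 1.0]])  # responds to dark-to-bright transitions
features = max_pool2x2(relu(conv2d(image, kernel)))
print(features)
```

The pooled feature map responds only in the column that contains the edge. Stacking many learned kernels and layers lets a CNN build up progressively more complex features from such simple responses.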

To ensure a model’s effectiveness and reliability, trained machine-learning (ML) algorithms must be validated (Hart et al. 2019; Jenny et al. 2021). For this, separate datasets should be used for model training, for validation and tuning, and for final testing (Haggenmüller et al. 2021; Jenny et al. 2021).
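In medical imaging, one patient often contributes several images. If those images are spread across subsets, the test set partly measures recall of already-seen patients. The following sketch assumes a patient-level split (a common practice, not something the reviewed studies are claimed to prescribe). The function `split_by_patient`, the 70/15/15 fractions, and the toy cohort are hypothetical.

```python
import random


def split_by_patient(image_to_patient, fractions=(0.7, 0.15, 0.15), seed=0):
    """Assign whole patients to train/validation/test subsets.

    Keeping all images of a patient in one subset prevents the model from being
    evaluated on a patient it has already seen during training.
    """
    patients = sorted(set(image_to_patient.values()))
    random.Random(seed).shuffle(patients)  # reproducible shuffle
    n_train = round(fractions[0] * len(patients))
    n_val = round(fractions[1] * len(patients))
    subset_of = {}
    for k, patient in enumerate(patients):
        if k < n_train:
            subset_of[patient] = "train"
        elif k < n_train + n_val:
            subset_of[patient] = "val"
        else:
            subset_of[patient] = "test"
    splits = {"train": [], "val": [], "test": []}
    for image_id, patient in image_to_patient.items():
        splits[subset_of[patient]].append(image_id)
    return splits


# Hypothetical cohort: 20 patients with 3 images each.
image_to_patient = {f"img_{p}_{i}": f"P{p:02d}" for p in range(20) for i in range(3)}
splits = split_by_patient(image_to_patient)
print({name: len(ids) for name, ids in splits.items()})
```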

In practice, however, ML models are sometimes built with only two datasets: a training dataset and a test dataset. No separate validation dataset is used, and validation is performed on either the training or the test data. Validating on the training data yields overly optimistic estimates. Tuning on the test data means the test set no longer gives an unbiased estimate of performance on unseen data. Although this approach is sometimes used in the medical and pharmaceutical industry, it should be avoided whenever the goal is a high-quality model (which should mean “always”). When the available data are limited, however, splitting them into three datasets (training, validation, and test) may be ineffective or even impossible. In such cases, data scientists sometimes perform validation on either the training or the test data, but neither choice is a good modeling design.
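One widely used option when data are scarce, which the paragraph above does not discuss, is k-fold cross-validation on the development data, with the test set still held out untouched. The following sketch assumes the samples have already been shuffled (and, for imaging data, grouped by patient). The generator `k_fold_indices` is a hypothetical helper; scikit-learn's `KFold` and `GroupKFold` provide tested equivalents.

```python
def k_fold_indices(n_samples, k=5):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation.

    Every sample is used for validation exactly once, so no separate validation
    set has to be carved out of a small development set.
    """
    # Distribute any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


for fold, (train_idx, val_idx) in enumerate(k_fold_indices(10, k=3)):
    print(fold, val_idx)
```

A model is trained k times, and the k validation scores are averaged to guide tuning. Only the final, tuned model is then evaluated once on the held-out test set.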

Integrating AI algorithms into existing cancer diagnosis workflows requires seamless integration with other diagnostic tools (Cifci et al. 2022; Baydoun et al. 2023). This can be achieved by incorporating algorithms into existing image analysis software or developing new software interfaces (Suarez-Ibarrola et al. 2020; Cifci et al. 2022; Baydoun et al. 2023).

Automatic validation and tuning of models is seldom optimal. Clinical validation and tuning, an important step in the integration process, can often improve models significantly (Goldenberg et al. 2019; Chen and Novoa 2022; Cifci et al. 2022). The best ML models are those that are continuously improved. Improvement typically comes from adding new training data or from continually validating the model’s output against feedback from patients, doctors, or other experts (Goldenberg et al. 2019; Chen and Novoa 2022).
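Continual validation against expert feedback can start as simply as tracking how often the model agrees with confirmed diagnoses over a sliding window. The following sketch flags the model for review when agreement drops below a threshold. The class `FeedbackMonitor`, the window size, the 0.9 threshold, and the labels are all hypothetical choices, not a method described in the cited studies.

```python
from collections import deque


class FeedbackMonitor:
    """Track agreement between model outputs and expert-confirmed diagnoses.

    Hypothetical helper: flags the model for review or retraining when agreement
    over the most recent `window` confirmed cases drops below `threshold`.
    """

    def __init__(self, window=100, threshold=0.9):
        self.outcomes = deque(maxlen=window)  # oldest cases drop out automatically
        self.threshold = threshold

    def record(self, model_label, confirmed_label):
        self.outcomes.append(model_label == confirmed_label)

    def agreement(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def needs_review(self):
        # Only raise a flag once a full window of feedback is available.
        full = len(self.outcomes) == self.outcomes.maxlen
        rate = self.agreement()
        return full and rate is not None and rate < self.threshold


monitor = FeedbackMonitor(window=10, threshold=0.9)
for _ in range(10):
    monitor.record("malignant", "malignant")  # model agreed with the confirmed diagnosis
for _ in range(2):
    monitor.record("benign", "malignant")     # two misses later confirmed by experts
print(monitor.agreement(), monitor.needs_review())
```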

Some of the analyzed articles highlighted the importance of regulatory compliance (Chen and Novoa 2022; Cifci et al. 2022; Suarez-Ibarrola, Sigle et al. 2022; Baydoun et al. 2023). Medical devices or software that integrate AI algorithms must meet legal requirements. This is particularly important in domains that use sensitive data, and medicine is among the most prominent examples of such domains (Goldenberg et al. 2019; Hart et al. 2019; Martín Noguerol et al. 2019; Jenny et al. 2021; Schuurmans et al. 2022). Regulatory frameworks and agencies have established new guidelines to ensure the responsible adoption of AI/ML in medical devices (Pesapane et al. 2018; Gilbert et al. 2021). Examples include the European Union’s Medical Device Regulation (MDR) and In Vitro Diagnostic Medical Devices Regulation (IVDR), as well as the US Food and Drug Administration (FDA).

Machine learning is a complex methodology that produces models and tools of high complexity. Such models are usually created and maintained by expert data scientists, but once they are ready to use, they are usually operated by medical professionals. These professionals therefore need to be trained to use AI algorithms effectively in their diagnostic workflows (Jenny et al. 2021; Brancati et al. 2022; Chen and Novoa 2022; Cifci et al. 2022; Schuurmans et al. 2022; Baydoun et al. 2023). Otherwise, they may misunderstand these tools, which can lead to serious mistakes and consequences. Today, it is much easier to convince medical professionals that AI tools offer enough benefits to justify spending a significant amount of time learning to use them. This was harder a decade ago, when AI was much less developed. Accordingly, various authors have emphasized the value of providing essential support to medical professionals and demonstrating the benefits of this highly complex technology. Such support can facilitate adoption of AI tools, overcome potential resistance, and encourage self-development and commitment (Goldenberg et al. 2019; Chen and Novoa 2022; Cifci et al. 2022; Suarez-Ibarrola, Sigle et al. 2022).

3.3.2 Challenges and limitations in applying image processing and AI techniques to different types of cancers

Figure 3 highlights a range of challenges and limitations when it comes to applying image processing and AI techniques in the context of various cancer types.

Many studies on the challenges and limitations of using image processing and artificial intelligence techniques in different types of cancers have identified important findings (Azuaje 2019; Higaki et al. 2019; Nakaura et al. 2020; Chelebian et al. 2021; Cheung and Rubin 2021; Cirillo et al. 2021; Kriti et al. 2022; Sheikh et al. 2022; Zhou et al. 2022; Dixit et al. 2023; Glučina et al. 2023; Illimoottil and Ginat 2023; Olatunji and Cui 2023; Patel et al. 2023; Steele et al. 2023; Tabari et al. 2023; Tamoor et al. 2023; Yoon et al. 2023; Zhang et al. 2023; Fallahpoor et al. 2024). First, the availability of high-quality and annotated data for training AI models has been identified as a major challenge (Kriti et al. 2022; Sheikh et al. 2022; Zhou et al. 2022; Dixit et al. 2023; Tamoor et al. 2023).

Several studies identified variability in image quality as another significant challenge. Medical images can vary owing to differences in imaging methods, equipment, and patient-specific factors (Higaki et al. 2019; Cheung and Rubin 2021; Dixit et al. 2023; Patel et al. 2023; Tabari et al. 2023; Zhang et al. 2023). Each modality has its own strengths and limitations, which can affect the clarity and diagnostic utility of the images produced (Khayyam et al. 2023). A popular saying in the data science community, “Garbage in, garbage out”, aptly captures this problem. If you train an ML model on low-quality data, you are unlikely to obtain a high-quality model, and its predictions will be at best confusing and more likely incorrect. The quality issues in medical images mentioned above lower the quality of the data and, in turn, the quality of the models trained on them. Likewise, if one feeds a low-quality image to a model, even a very good one, the prediction may be incorrect. Data quality therefore plays a significant role at every step of building and using models. In this respect, as in many others, ML models behave much like humans. Imagine a medical imaging expert who learned the craft from blurry X-ray images, or who is asked to interpret a blurry image. Even a highly qualified person in that position is unlikely to reach a correct diagnosis with high accuracy.
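One simple way to act on “garbage in, garbage out” is to screen incoming images for blur before they reach a model. The following sketch assumes the variance of the Laplacian as the sharpness score. This is a widely used heuristic, not a method taken from the reviewed studies. The functions `laplacian_variance` and `passes_quality_gate` are hypothetical, and any real threshold would have to be tuned per modality and scanner.

```python
import numpy as np

# 4-neighbour discrete Laplacian kernel.
LAPLACIAN = np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=float)


def laplacian_variance(image):
    """Variance of the Laplacian response; low values suggest a blurry image."""
    img = np.asarray(image, dtype=float)
    h, w = img.shape
    response = np.zeros((h - 2, w - 2))
    # Accumulate each kernel weight times the correspondingly shifted image.
    for di in range(3):
        for dj in range(3):
            response += LAPLACIAN[di, dj] * img[di:di + h - 2, dj:dj + w - 2]
    return float(response.var())


def passes_quality_gate(image, min_sharpness):
    """Hypothetical gate: reject images whose sharpness score is below a tuned threshold."""
    return laplacian_variance(image) >= min_sharpness


sharp = (np.indices((16, 16)).sum(axis=0) % 2).astype(float)  # fine checkerboard detail
smooth = np.tile(np.arange(16.0), (16, 1))                     # linear ramp, no fine detail
print(laplacian_variance(sharp), laplacian_variance(smooth))
```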

Low-quality training data can arise for various reasons. Researchers stressed the importance of inter-observer variability, that is, differences in the interpretations and annotations made by different radiologists or pathologists. Such human-based variation has been identified as a major challenge in creating ground-truth labels for training AI models (Azuaje 2019; Nakaura et al. 2020; Chelebian et al. 2021; Cheung and Rubin 2021; Illimoottil and Ginat 2023; Fallahpoor et al. 2024). Here, ground truth is the definitive set of true labels or data used to train and evaluate machine learning models.
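Inter-observer variability in segmentation is commonly quantified with the Dice similarity coefficient, 2|A∩B| / (|A| + |B|), where A and B are the regions marked by two readers. The following sketch computes it for two hypothetical readers' masks. Treating two empty masks as perfect agreement is an assumed convention.

```python
import numpy as np


def dice(mask_a, mask_b):
    """Dice similarity coefficient between two binary masks (1 = identical, 0 = disjoint)."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # Both readers marked nothing: treated as perfect agreement.
    return float(2.0 * np.logical_and(a, b).sum() / total)


# Hypothetical tumor outlines from two readers on a 10x10 image.
reader_a = np.zeros((10, 10), dtype=bool)
reader_a[2:7, 2:7] = True   # 25 pixels
reader_b = np.zeros((10, 10), dtype=bool)
reader_b[3:8, 3:8] = True   # 25 pixels, shifted by one pixel
print(round(dice(reader_a, reader_b), 2))
```

Even this one-pixel shift lowers agreement well below 1, which shows how reader-to-reader differences translate directly into noisy ground-truth labels.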

Nevertheless, low-quality data do not result solely from human mistakes and variability in human judgement. They can also stem from heterogeneity across cancer types and among individual tumors of the same type. Different types of cancer have distinct morphological and phenotypic characteristics, which makes it challenging to develop AI models that can accurately detect and differentiate cancer types (Chelebian et al. 2021; Su and Li 2021; Pang et al. 2022; Sheikh et al. 2022; Tabari et al. 2023; Zhang et al. 2023). Moreover, some cancers, such as colorectal and gastric cancer, can have overlapping imaging features, which makes differentiation more difficult for AI algorithms (Khayyam et al. 2023; Sufyan et al. 2023).

Overfitting and limited generalizability have been identified as challenges in developing robust AI models (Takiddin et al. 2021; Boyanapalli and Shanthini 2023; Illimoottil and Ginat 2023; Fallahpoor et al. 2024). For an AI model to generalize to various patient populations, it must be trained on diverse and representative datasets that capture the natural variability and heterogeneity of the general population (Takiddin et al. 2021; Boyanapalli and Shanthini 2023; Zhang et al. 2023; Fallahpoor et al. 2024). Otherwise, a model trained on a particular dataset may not generalize well to different data. For example, a model built using data on brain cancer will not necessarily work well for patients with breast cancer. To avoid problems with generalizability, a model should be applied to data similar to those on which it was built. Reusing such a model for a different type of cancer is a form of transfer learning; more specifically, it can be considered domain adaptation within transfer learning. However, the model would likely need some adaptation to perform well on the new cancer data, most likely fine-tuning on a small dataset specific to the new cancer type.
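A model that looks accurate overall can still fail on a population that was under-represented in its training data. Reporting performance separately for each subgroup, such as hospital, scanner, or demographic group, exposes this. The following sketch uses hypothetical predictions from two sites, and `accuracy_by_group` is a hypothetical helper.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each subgroup (e.g. site, scanner, population)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


# Hypothetical predictions from two hospitals (sites A and B).
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 1, 0, 1, 0]
sites = ["A"] * 4 + ["B"] * 4
overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(overall, accuracy_by_group(y_true, y_pred, sites))
```

Here the overall accuracy of 0.625 hides a model that is perfect at site A and mostly wrong at site B. A pooled metric alone would not reveal that gap.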

We are discussing medicine, so ethical considerations should not be ignored. Indeed, they have been considered in many studies. In particular, the use of AI in cancer diagnosis and treatment raises concerns about privacy, bias, and potential effects on the patient-physician relationship (Cheung and Rubin 2021; Cirillo et al. 2021; Frazer et al. 2021; Illimoottil and Ginat 2023; Patel et al. 2023).

Various studies have emphasized the importance of clinical validation and integration of AI models. Rigorous validation in clinical settings is essential to ensure the safety, efficacy, and relevance of AI models in real-world applications (Cirillo et al. 2021; Dixit et al. 2023; Glučina et al. 2023; Illimoottil and Ginat 2023; Olatunji and Cui 2023; Steele et al. 2023; Yoon et al. 2023). The more thoroughly a model is validated, the more confidence one can have in its performance on real-life patient data. It is therefore important to build a model on a large dataset that is properly split into training, validation, and test datasets, each of adequate size. Neglecting any of them may lead to poor models. For example, using almost all the data for training and only a small fraction for testing gives an unreliable estimate of performance. An overfitted model may appear to perform very well on the small test set yet eventually prove to fit the wider population poorly.
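The size of the test set limits how precisely performance can be estimated. The following sketch makes this concrete with a 95% Wilson score confidence interval for an observed accuracy of 90% at several test-set sizes. The sizes are hypothetical, and the Wilson interval is one standard choice for a proportion, not a method taken from the reviewed studies.

```python
from math import sqrt


def wilson_interval(correct, n, z=1.96):
    """Approximate 95% Wilson score confidence interval for an accuracy estimate."""
    p = correct / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half


# Same observed accuracy (90%) on test sets of different sizes.
for n in (20, 200, 2000):
    low, high = wilson_interval(round(0.9 * n), n)
    print(f"n={n}: 95% CI {low:.3f} to {high:.3f}")
```

With only 20 test cases, the plausible accuracy range spans roughly 0.7 to 0.97. Such a wide range is compatible with both a useful model and a poorly generalizing one.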

3.3.3 Potential benefits and drawbacks of incorporating advanced technologies into clinical practice

The integration of advanced technologies such as image processing and AI into clinical practice offers significant potential benefits but also has drawbacks, as depicted in Fig. 4.

Incorporating advanced technologies like image processing and AI into clinical practice can have several potential benefits. One of the main advantages is improved accuracy and efficiency. AI algorithms can quickly and accurately analyze large amounts of medical data, aiding in the detection and diagnosis of cancer (Litjens et al. 2016; Serag et al. 2019; Steiner et al. 2021; Chen and Novoa 2022; Geaney et al. 2023). For example, a recent study developed an AI model called Lars that could successfully find lymph node cancer in 90% of cases by analyzing over 17,000 PET and CT images from 5,000 patients. This level of accuracy can aid in early detection and diagnosis (Hosny et al. 2018).

Another benefit is enhanced decision support. AI can provide clinicians with evidence-based recommendations and decision support tools, helping them make more informed and personalized treatment plans for individual patients (Echle et al. 2020, 2021; Unternaehrer et al. 2020; Steiner et al. 2021; Chen and Novoa 2022). Without AI, clinicians rely on conventional decision tools: their own knowledge, the relevant literature, and the non-AI tools traditionally used in cancer detection and diagnosis, such as pathology reports, medical imaging, biopsies, blood tests, and genetic testing. For example, the xDECIDE system is a web-based clinical decision support tool powered by a “Human-AI Team” that offers oncology healthcare providers ranked treatment options for their patients. It uses natural language processing and machine learning to aggregate patient medical records and process them into a structured format, which feeds into an ensemble of AI models called xCORE that ranks potential treatment options based on clinical evidence, expert insights, and real-world outcomes (Shapiro et al. 2024).

AI models can also assist in the early detection and prevention of cancer, even before expensive and time-consuming tests are applied. By analyzing patient data, AI can identify early signs of the disease, allowing for interventions at a stage when treatment outcomes are more favorable (Ehteshami Bejnordi et al. 2017; Liu et al. 2018; Echle et al. 2020; Unternaehrer et al. 2020; Steiner et al. 2021; Geaney et al. 2023).

Additionally, AI can facilitate personalized medicine by analyzing complex patient data, such as genetic profiles and imaging data (Pell et al. 2019; Gonçalves et al. 2022; Geaney et al. 2023). By integrating these diverse data sources, AI can help identify unique patterns and biomarkers that may not be apparent, or are particularly difficult to notice, through traditional methods. This can improve diagnostic accuracy and support the creation of treatment plans tailored to the individual characteristics of a patient. Researchers in Ontario have developed a system known as PMATCH, which uses machine learning to match cancer patients with precision medicine clinical trials in near real time. It analyzes comprehensive genomic and health data against the eligibility criteria of various clinical trials, thereby streamlining the process of connecting patients with suitable trials. PMATCH aims to increase the number of cancer patients matched to clinical trials by as much as 50% by automating the identification of eligible patients based on the most recent cancer research data (Atkinson et al. 2007; Bhimwal and Mishra 2023).

AI tools can also streamline clinical workflows by automating repetitive tasks, such as image analysis and report generation, which frees up clinicians’ time for more complex and critical tasks (Ehteshami Bejnordi et al. 2017; Liu et al. 2018; Echle et al. 2020, 2021).

So far, we have discussed the advantages of employing AI to enhance cancer detection and diagnosis. This does not mean that incorporating advanced technologies into clinical practice has no drawbacks. One major concern is the limited interpretability of AI models. These models often work as “black boxes,” which makes their decision-making process difficult to understand and interpret (Liu et al. 2018; Rudin 2019; Ghassemi et al. 2021; Chen and Novoa 2022). Even when a model helps a clinician reach a correct conclusion (e.g., diagnose a particular cancer), it may not reveal additional information, such as the reasoning behind the diagnosis or the specific contributing factors it identified. This lack of transparency can hinder the clinician’s ability to explain the diagnosis to patients or colleagues.

Another concern is the quality and bias of the data used to train AI models. These models heavily rely on the quality and representativeness of the data (Eklund et al. 2019; Kiani et al. 2020; Unternaehrer et al. 2020; Cheng et al. 2021; Ghassemi et al. 2021). High-quality data is fundamental for the effectiveness of AI systems. If the data is incomplete, inaccurate, or outdated, it can skew the AI’s learning process, resulting in flawed predictions and decisions. For example, a lack of diverse data can lead to biased AI models that fail to generalize across different demographics, perpetuating existing societal biases.

Ethical considerations are also important when incorporating AI into clinical practice. Patient privacy, consent, and the responsible use of data are all ethical concerns that need to be addressed (Pell et al. 2019; Echle et al. 2021; Thapa and Camtepe 2021; Chen and Novoa 2022; Gonçalves et al. 2022).

Implementing and maintaining AI technologies can be costly. Training, deploying, maintaining, and updating AI models require significant computational resources and expertise, which may not be readily available in all healthcare settings (Serag et al. 2019; Unternaehrer et al. 2020; Cheng et al. 2021). Additional costs arise from training clinicians to use such tools properly.

The use of AI in clinical practice raises legal and regulatory challenges, such as liability and accountability (Echle et al. 2021; Thapa and Camtepe 2021; Chen and Novoa 2022).

4 Discussion

This systematic review provides a comprehensive evaluation of studies applying image analysis and AI algorithms to cancer diagnosis and treatment in clinical practice. The integration of image analysis and artificial intelligence algorithms in cancer diagnosis presents a significant advancement in clinical practice. Our findings underscore the promising impact of these algorithms on accuracy and efficiency, including their ability to discern patterns and anomalies overlooked by human observers (Goldenberg et al. 2019; Chen and Novoa 2022; Cifci et al. 2022; Suarez-Ibarrola et al. 2022; Baydoun et al. 2023). Data quality and preprocessing emerge as crucial factors, emphasizing the necessity of diverse datasets for effective algorithm training (Araújo et al. 2017; Hart et al. 2019; Haggenmüller et al. 2021; Schuurmans et al. 2022). Image annotation, particularly delineating tumor boundaries, plays a pivotal role in enhancing algorithmic learning and prediction accuracy (Brancati et al. 2022; Suarez-Ibarrola et al. 2022; Thukral et al. 2023).

The use of Convolutional Neural Networks (CNNs) in analyzing cancer images is noteworthy because of their capacity to learn complex features (Araújo et al. 2017; Hart et al. 2019; Haggenmüller et al. 2021; Kaur et al. 2021; Mosquera-Zamudio et al. 2023). CNNs are a specialized type of deep learning model that is particularly effective for processing grid-structured data, such as images (Wu 2017). They have revolutionized various fields, especially computer vision, by enabling machines to recognize and classify visual patterns with high accuracy (Li et al. 2021).
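
The central operation of a CNN layer can be sketched in a few lines of plain Python. The illustrative example below slides a 3x3 kernel over a toy 5x5 image (a “valid” cross-correlation, which is what most deep learning libraries compute under the name convolution) and then applies a ReLU activation. In a real CNN the kernel values are learned from data; here they are hand-set to respond to a vertical edge, and the function names are hypothetical.

```python
def conv2d(image, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation of `image` with `kernel`."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel.
            s = 0.0
            for a in range(kh):
                for b in range(kw):
                    s += image[i + a][j + b] * kernel[a][b]
            row.append(s)
        out.append(row)
    return out


def relu(feature_map):
    """Element-wise ReLU: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 5x5 "image" with a bright vertical stripe in the middle column.
image = [[0, 0, 1, 0, 0] for _ in range(5)]
# Hand-set kernel that responds to a dark-to-bright vertical edge.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]
fmap = relu(conv2d(image, kernel))
for row in fmap:
    print(row)
```

Each row of the resulting 3x3 feature map is [3.0, 0.0, 0.0]: the kernel responds only where the image changes from dark to bright. Stacked convolutional layers combine such local responses into the more complex features mentioned above.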

To ensure effectiveness and reliability, algorithm development, training, and validation with separate datasets are imperative (Haggenmüller et al. 2021; Jenny et al. 2021). Seamless integration with existing diagnostic tools, achieved through software interfaces or incorporation into image analysis software, is vital for practical implementation. Practical strategies for integration include adopting a modular design that allows incremental implementation without disrupting current workflows (Juluru et al. 2021). Engaging end-users during the development phase ensures that AI tools meet the specific needs of healthcare professionals (Juluru et al. 2021). Additionally, providing comprehensive training on the use of these tools and ensuring interoperability with electronic health records can facilitate smoother transitions (Gorrepati 2024). Addressing organizational barriers through stakeholder engagement and demonstrating the benefits of AI in enhancing diagnostic accuracy will further support successful integration into clinical practice (Alowais et al. 2023).

Clinical validation and optimization, coupled with continuous feedback-driven improvements, contribute to algorithmic performance and usability (Goldenberg et al. 2019; Chen and Novoa 2022; Cifci et al. 2022). Compliance with regulatory standards becomes paramount, as medical devices integrating AI algorithms must meet legal requirements (Goldenberg et al. 2019; Hart et al. 2019; Martín Noguerol et al. 2019; Jenny et al. 2021; Schuurmans et al. 2022). Furthermore, educating medical professionals in the use of AI algorithms for diagnostics, demonstrating the benefits of the technology, and providing support emerge as pivotal strategies to foster adoption and overcome potential resistance (Goldenberg et al. 2019; Chen and Novoa 2022; Cifci et al. 2022; Suarez-Ibarrola et al. 2022).

To validate proposed AI techniques in real-world clinical settings, a structured approach is essential (Hosny et al. 2022). This involves rigorous testing against large, diverse datasets to assess accuracy, sensitivity, and specificity compared to existing diagnostic standards (Hosny et al. 2022; Sandbank et al. 2022). Primary validation should include external testing in clinical scenarios that reflect the target patient population to ensure generalizability (Raciti et al. 2023). However, several challenges can impede this process. High-quality, annotated data is crucial, yet often difficult to obtain due to variability in imaging quality and interpretation (Raciti et al. 2023; Pulaski et al. 2024). Ethical concerns regarding data privacy and the “black-box” nature of AI algorithms can also hinder clinician trust and acceptance (Von Eschenbach 2021). Additionally, resource limitations in healthcare settings may restrict access to necessary infrastructure for implementing these technologies (Ahmed et al. 2023). Addressing these challenges through comprehensive validation processes and stakeholder engagement will be critical for the successful integration of AI into clinical practice.
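
The metrics named above can be computed from a model’s binary calls and a reference standard. The sketch below assumes labels coded as 1 (cancer) and 0 (no cancer) and uses hypothetical data for ten cases; the same function could be applied to an existing diagnostic standard to compare both on the same patients.

```python
def diagnostic_metrics(y_true, y_pred):
    """Accuracy, sensitivity and specificity for binary labels (1 = cancer)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # cancers detected
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # non-cancers cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed cancers

    def ratio(num, den):
        # Undefined (NaN) when the denominator is zero, e.g. no cancers present.
        return num / den if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }


# Hypothetical reference standard (e.g. biopsy) vs. model calls for 10 cases.
reference = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
print(diagnostic_metrics(reference, predicted))
```

For these hypothetical data the model detects 3 of 4 cancers (sensitivity 0.75) and correctly clears 5 of 6 non-cancers (specificity about 0.83), giving an accuracy of 0.8.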

The exploration of challenges and limitations in employing image processing and artificial intelligence techniques across diverse cancer types reveals critical insights (Aghamohammadi et al. 2024). Foremost among these challenges is the imperative need for high-quality, annotated data for robust AI model training. Studies underscore the significant challenge posed by variability in image quality, stemming from differences in imaging methods, equipment, and patient-specific factors (Kriti et al. 2022; Sheikh et al. 2022; Zhou et al. 2022; Dixit et al. 2023; Tamoor et al. 2023).

Inter-observer variability emerges as a substantial hurdle, with differences in interpretations and annotations by various radiologists or pathologists complicating the establishment of ground truth labels for AI model training (Azuaje 2019; Nakaura et al. 2020; Chelebian et al. 2021; Cheung and Rubin 2021; Illimoottil and Ginat 2023; Fallahpoor et al. 2024). The intricate heterogeneity among cancer types further compounds these challenges, given the distinct morphological and phenotypic characteristics that necessitate nuanced AI models for accurate detection and differentiation (Chelebian et al. 2021; Su and Li 2021; Pang et al. 2022; Sheikh et al. 2022; Tabari et al. 2023; Zhang et al. 2023).
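
One common way to quantify inter-observer variability, not taken from the cited studies, is Cohen’s kappa, which measures agreement between two raters after correcting for the agreement expected by chance. A minimal sketch with hypothetical slide labels from two pathologists:

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Proportion of items on which the raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected if each rater labelled at random with their own label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        return float("nan")  # kappa is undefined when chance agreement is 1
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two pathologists for the same 10 slides.
path_1 = ["tumor", "tumor", "benign", "benign", "tumor",
          "benign", "tumor", "benign", "benign", "tumor"]
path_2 = ["tumor", "benign", "benign", "benign", "tumor",
          "benign", "tumor", "tumor", "benign", "tumor"]
print(round(cohens_kappa(path_1, path_2), 3))
```

Here the two hypothetical pathologists agree on 8 of 10 slides (observed agreement 0.8), but because chance agreement is 0.5, kappa is only 0.6. Low kappa values suggest that a dataset’s ground truth labels may need consensus review before being used for training.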

Addressing overfitting and ensuring generalizability stand out as paramount concerns in developing resilient AI models (Takiddin et al. 2021; Boyanapalli and Shanthini 2023; Illimoottil and Ginat 2023; Fallahpoor et al. 2024). Diverse and representative datasets, coupled with the ability to generalize across different patient populations, are identified as crucial factors for real-world effectiveness (Takiddin et al. 2021; Boyanapalli and Shanthini 2023; Zhang et al. 2023; Fallahpoor et al. 2024). Ethical considerations come to the forefront, with privacy, bias, and potential impacts on the patient-physician relationship drawing attention (Cheung and Rubin 2021; Cirillo et al. 2021; Frazer et al. 2021; Illimoottil and Ginat 2023; Patel et al. 2023).

Multiple studies stress the significance of clinical validation and seamless integration of artificial intelligence models (Cirillo et al. 2021; Dixit et al. 2023; Glučina et al. 2023; Illimoottil and Ginat 2023; Olatunji and Cui 2023; Steele et al. 2023; Yoon et al. 2023). Rigorous validation in clinical settings emerges as indispensable, ensuring the safety, efficacy, and relevance of AI models in real-world applications. As the field advances, attention to these challenges becomes pivotal for the responsible and effective incorporation of AI in cancer diagnosis and treatment (Cirillo et al. 2021; Olatunji and Cui 2023).

The integration of advanced technologies like image processing and AI into clinical practice holds substantial promise with a range of potential benefits. Foremost among these is the marked improvement in accuracy and efficiency, as AI algorithms demonstrate the capability to swiftly and precisely analyze extensive medical data for enhanced cancer detection and diagnosis (Litjens et al. 2016; Serag et al. 2019; Steiner et al. 2021; Chen and Novoa 2022; Geaney et al. 2023). Decision support is notably augmented, empowering clinicians with evidence-based recommendations for personalized treatment plans (Echle et al. 2020, 2021; Unternaehrer et al. 2020; Steiner et al. 2021; Chen and Novoa 2022).

AI’s role in early detection and prevention emerges as a pivotal advantage, enabling the identification of early signs and timely interventions with more favorable treatment outcomes (Ehteshami Bejnordi et al. 2017; Liu et al. 2018; Echle et al. 2020; Unternaehrer et al. 2020; Steiner et al. 2021; Geaney et al. 2023). The prospect of personalized medicine is further realized through the analysis of complex patient data, including genetic profiles and imaging information (Pell et al. 2019; Gonçalves et al. 2022; Geaney et al. 2023). The optimization of workflow is evident as AI automates repetitive tasks, freeing clinicians to focus on more intricate and critical responsibilities (Ehteshami Bejnordi et al. 2017; Liu et al. 2018; Echle et al. 2020, 2021).

However, the incorporation of advanced technologies brings forth certain drawbacks. The lack of interpretability in AI models, often operating as “black boxes,” poses a notable concern, making it challenging to comprehend their decision-making processes (Liu et al. 2018; Rudin 2019; Ghassemi et al. 2021; Chen and Novoa 2022). Black box AI refers to artificial intelligence systems whose internal workings and decision-making processes are not transparent to users (Von Eschenbach 2021). While users can see the inputs and outputs, the logic behind how decisions are made remains hidden, making it difficult to understand or trust the model’s conclusions (Wischmeyer 2020; Muhammad and Bendechache 2024).
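
One widely used way to probe a black-box model from the outside is occlusion sensitivity: parts of the input are masked one at a time and the drop in the model’s score is recorded. The sketch below is a simplified illustration, not a method from the cited studies; `toy_model` is a hypothetical stand-in for a trained classifier, and the patch size and fill value are arbitrary choices.

```python
def occlusion_map(image, score_fn, patch=2, fill=0.0):
    """Occlusion sensitivity: score drop when each patch of the image is masked.

    `score_fn` is treated as a black box mapping a 2D image (list of lists)
    to a scalar score; only its inputs and outputs are used.
    """
    h, w = len(image), len(image[0])
    base = score_fn(image)
    heat = [[0.0] * w for _ in range(h)]
    for i in range(0, h, patch):
        for j in range(0, w, patch):
            occluded = [row[:] for row in image]  # copy so the input is untouched
            for a in range(i, min(i + patch, h)):
                for b in range(j, min(j + patch, w)):
                    occluded[a][b] = fill
            drop = base - score_fn(occluded)
            # Every pixel of the patch receives the same importance value.
            for a in range(i, min(i + patch, h)):
                for b in range(j, min(j + patch, w)):
                    heat[a][b] = drop
    return heat


# Hypothetical "black box": scores an image by the brightness of its top-left quadrant.
def toy_model(img):
    return sum(img[r][c] for r in range(2) for c in range(2))


image = [[1.0, 1.0, 1.0, 1.0] for _ in range(4)]
heat = occlusion_map(image, toy_model)
for row in heat:
    print(row)
```

The resulting map highlights the top-left quadrant, the only region the toy model uses. Such post hoc maps indicate where a model is sensitive, not why it decides as it does, a limitation of post hoc explanation discussed by Rudin (2019) and Ghassemi et al. (2021).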

Quality and bias in the training data also loom large, emphasizing the critical reliance on data quality and representativeness (Eklund et al. 2019; Kiani et al. 2020; Unternaehrer et al. 2020; Cheng et al. 2021; Ghassemi et al. 2021). Ethical considerations, encompassing patient privacy, consent, and responsible data use, demand careful attention (Pell et al. 2019; Echle et al. 2021; Thapa and Camtepe 2021; Chen and Novoa 2022; Gonçalves et al. 2022). The deployment of AI in cancer diagnosis raises significant concerns regarding data privacy, as sensitive patient information is utilized to train algorithms (Farasati Far 2023). Robust data protection measures must be implemented to prevent unauthorized access and ensure compliance with regulations (Govind Persad). Informed consent is also crucial; patients should be clearly informed about how their data will be used and the role of AI in their diagnosis (Hashemi Fotemi et al. 2024; Saeidnia et al. 2024). Additionally, the interpretability of AI algorithms is vital for clinical decision-making (Khosravi et al. 2024). Clinicians need to understand AI-generated recommendations to effectively communicate these decisions to patients and maintain accountability (Alanazi 2023; Abgrall et al. 2024). Addressing these ethical implications is essential for fostering trust and ensuring responsible integration of AI into cancer care.

While the benefits are evident, the challenges include the significant costs associated with implementing and maintaining AI technologies (Serag et al. 2019; Unternaehrer et al. 2020; Cheng et al. 2021). The demand for substantial computational resources and expertise, not universally available in all healthcare settings, adds complexity (Serag et al. 2019; Unternaehrer et al. 2020; Cheng et al. 2021). Legal and regulatory challenges, including issues of liability and accountability, underscore the need for a comprehensive and thoughtful approach to the integration of AI into clinical practice (Echle et al. 2021; Thapa and Camtepe 2021; Chen and Novoa 2022).

The diversity of datasets used in training AI models for cancer diagnosis is crucial for ensuring their effectiveness and minimizing biases (Hanna et al. 2024). Acknowledging that historical data often lacks representation from various demographic groups, efforts have been made to include diverse patient populations in training datasets (Arora et al. 2023). For instance, studies have shown that AI models trained on large and varied datasets, such as those encompassing different races, ethnicities, and imaging qualities, can significantly improve diagnostic accuracy across diverse patient groups (Dankwa-Mullan and Weeraratne 2022).

To address potential biases, researchers are implementing strategies such as optimizing patient cohort selection to ensure that underrepresented groups are included in clinical trials and training datasets (Dankwa-Mullan and Weeraratne 2022). Additionally, generating synthetic data to enhance representation of darker skin tones in dermatology has been explored as a method to improve model performance and reduce bias (Rezk et al. 2022). By focusing on data diversity and actively mitigating biases in AI models, we can promote equity in cancer care and ensure that AI tools are effective for all patients.
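
Detecting such biases requires reporting performance separately for each patient subgroup rather than only in aggregate. A minimal sketch, assuming hypothetical evaluation records of the form (subgroup, true label, predicted label) with 1 denoting cancer:

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Per-subgroup sensitivity from (group, true_label, predicted_label) triples."""
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for group, truth, pred in records:
        if truth == 1:  # only actual cancers count toward sensitivity
            positives[group] += 1
            if pred == 1:
                true_pos[group] += 1
    return {g: true_pos[g] / positives[g] for g in positives}


# Hypothetical evaluation results; "A" and "B" stand for any demographic subgroups.
results = (
    [("A", 1, 1)] * 18 + [("A", 1, 0)] * 2 +
    [("B", 1, 1)] * 6 + [("B", 1, 0)] * 4 +
    [("A", 0, 0)] * 30 + [("B", 0, 0)] * 10
)
per_group = sensitivity_by_group(results)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, "gap:", round(gap, 2))
```

Pooled over both groups, sensitivity would be 24/30 = 0.8, which hides the fact that the model misses 40% of cancers in group B. A gap of this size would suggest collecting more data from the underrepresented group or applying other bias-mitigation strategies such as those described above.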

4.1 Limitations

The study has certain limitations that warrant acknowledgment. First, the review was not registered in PROSPERO or any analogous database. Although PROSPERO registration is typically associated with systematic reviews, we intentionally opted not to register this review, because its scope does not strictly align with PROSPERO’s eligibility criteria and because of the practical constraints of our project. Despite the absence of registration, our literature search and selection process followed a rigorous methodology, and our findings are reported transparently to address concerns about credibility.

Second, the search considered only the Web of Science database, which, although it contains high-quality studies, may not capture studies indexed only in other scientific databases. Gray literature and manual searches were not included; this was a deliberate choice to rely on quality studies within a reliable scientific database. The rationale was that a systematic and thorough review of one high-quality database would yield better results than a broader search across several databases, by focusing on a smaller volume of higher-quality material. Despite these limitations, the study comprehensively reviewed 57 articles, a number deemed satisfactory within the study’s defined parameters.

5 Conclusions

This systematic review underscores the significant role of image analysis and artificial intelligence (AI) in improving cancer diagnosis and treatment. Our findings show that integrating these technologies can enhance accuracy and efficiency by identifying patterns that may be missed by human observers. However, several challenges remain.

A key issue is the need for high-quality, annotated data to train effective AI models. Variability in imaging quality and differences in how radiologists interpret images complicate this process. To overcome these hurdles, we need standardized data collection and collaboration among healthcare professionals to create reliable training datasets.

Ethical considerations, such as data privacy and the transparency of AI systems, are also crucial. The “black-box” nature of many AI models can make it difficult for clinicians to trust their recommendations. Therefore, improving the interpretability of these systems is essential for wider acceptance.

As we move forward, rigorous clinical validation and seamless integration of AI into existing workflows are vital. Training healthcare providers on how to use these tools will help foster their adoption.

Finally, we must consider how AI can be adapted for different healthcare settings, especially in areas with limited resources. Many regions face significant barriers, including inadequate infrastructure and a shortage of trained personnel, which can hinder the effective implementation of advanced imaging and AI solutions. Developing portable and cost-effective AI-based diagnostic tools is essential to ensure accessibility and affordability for underserved populations. Collaborations with local healthcare providers can facilitate the creation of solutions that are not only technologically advanced but also culturally appropriate and feasible within existing healthcare frameworks. By prioritizing these adaptations, we can enhance the reach and impact of AI in cancer care, making it a valuable resource for all patients, regardless of their geographic or economic circumstances.

By addressing these challenges, we can work toward making AI a valuable part of cancer care that benefits all patients. In summary, while there are obstacles to integrating AI in cancer diagnostics, with continued research and collaboration, we can unlock its full potential to improve patient outcomes.

Data availability

No datasets were generated or analysed during the current study.

References

Abdollahzadeh B, Khodadadi N, Barshandeh S, Trojovský P, Gharehchopogh FS, El-kenawy E-SM, Abualigah L, Mirjalili S (2024) Puma optimizer (PO): A novel metaheuristic optimization algorithm and its application in machine learning. Cluster Computing: 1–49

Abgrall G, Holder AL, Chelly Dagdia Z, Zeitouni K, Monnet X (2024) Should AI models be explainable to clinicians? Crit Care 28(1):301

Abhisheka B, Biswas SK, Purkayastha B, Das D, Escargueil A (2023) Recent trend in medical imaging modalities and their applications in disease diagnosis: a review. Multimedia Tools Appl 83(14):43035–43070

Aghamohammadi A, Beheshti Shirazi SA, Banihashem SY, Shishechi S, Ranjbarzadeh R, Jafarzadeh Ghoushchi S, Bendechache M (2024) A deep learning model for ergonomics risk assessment and sports and health monitoring in self-occluded images. SIViP 18(2):1161–1173

Ahmed MI, Spooner B, Isherwood J, Lane M, Orrock E, Dennison A (2023) A systematic review of the barriers to the implementation of artificial intelligence in healthcare. Cureus 15(10):e46454

Al-Azri MH (2016) Delay in Cancer diagnosis: causes and possible solutions. Oman Med J 31(5):325–326

Alanazi A (2023) Clinicians’ views on using artificial intelligence in healthcare: opportunities, challenges, and beyond. Cureus 15(9):e45255

Alowais SA, Alghamdi SS, Alsuhebany N, Alqahtani T, Alshaya AI, Almohareb SN, Aldairem A, Alrashed M, Bin Saleh K, Badreldin HA, Al Yami MS, Al Harbi S, Albekairy AM (2023) Revolutionizing healthcare: the role of artificial intelligence in clinical practice. BMC Med Educ 23(1):689

Arabahmadi M, Farahbakhsh R, Rezazadeh J (2022) Deep learning for smart Healthcare—A survey on brain tumor detection from medical imaging. Sensors 22(5): 1960

Araújo T, Aresta G, Castro E, Rouco J, Aguiar P, Eloy C, Polónia A, Campilho A (2017) Classification of breast cancer histology images using convolutional neural networks. PLOS ONE 12(6):e0177544

Arora A, Alderman JE, Palmer J, Ganapathi S, Laws E, McCradden MD, Oakden-Rayner L, Pfohl SR, Ghassemi M, McKay F, Treanor D, Rostamzadeh N, Mateen B, Gath J, Adebajo AO, Kuku S, Matin R, Heller K, Sapey E, Sebire NJ, Cole-Lewis H, Calvert M, Denniston A, Liu X (2023) The value of standards for health datasets in artificial intelligence-based applications. Nat Med 29(11):2929–2938

ArteraAI (2024) Personalized Precision Medicine & AI Cancer Therapy. from https://artera.ai/

Atkinson N, Massett H, Mylks C, Hanna B, Deering M, Hesse B (2007) User-centered research on breast cancer patient needs and preferences of an internet-based clinical trial matching system. J Med Internet Res 9(2):e621

Azuaje F (2019) Artificial intelligence for precision oncology: beyond patient stratification. Npj Precision Oncol 3(1):6

Baydoun A, Jia AY, Zaorsky NG, Kashani R, Rao S, Shoag JE, Vince RA, Bittencourt LK, Zuhour R, Price AT, Arsenault TH, Spratt DE (2023) Artificial intelligence applications in prostate cancer. Prostate Cancer and Prostatic Diseases

Bethesda (2007) Understanding Cancer. Retrieved 11– 1, 2024, from https://www.ncbi.nlm.nih.gov/books/NBK20362/

Bhimwal MK, Mishra RK (2023) Modern Scientific and Technological discoveries: a new era of possibilities. Contemp Adv Sci Technol 6:37

Bi WL, Hosny A, Schabath MB, Giger ML, Birkbak NJ, Mehrtash A, Allison T, Arnaout O, Abbosh C, Dunn IF, Mak RH, Tamimi RM, Tempany CM, Swanton C, Hoffmann U, Schwartz LH, Gillies RJ, Huang RY, Aerts H (2019) Artificial intelligence in cancer imaging: clinical challenges and applications. CA Cancer J Clin 69(2):127–157

Boyanapalli A, Shanthini A (2023) Ovarian cancer detection in computed tomography images using ensembled deep optimized learning classifier. Concurrency Computation: Pract Experience 35(22):e7716

Brancati N, Anniciello AM, Pati P, Riccio D, Scognamiglio G, Jaume G, De Pietro G, Di Bonito M, Foncubierta A, Botti G, Gabrani M, Feroce F, Frucci M (2022) BRACS: A Dataset for BReAst Carcinoma Subtyping in H&E Histology Images. Database 2022.

Chelebian E, Avenel C, Kartasalo K, Marklund M, Tanoglidi A, Mirtti T, Colling R, Erickson A, Lamb AD, Lundeberg J, Wählby C (2021) Morphological features extracted by AI associated with spatial transcriptomics in prostate cancer. Cancers 13(19):4837

Chen SB, Novoa RA (2022) Artificial intelligence for dermatopathology: current trends and the road ahead. Semin Diagn Pathol 39(4):298–304

Chen MM, Terzic A, Becker AS, Johnson JM, Wu CC, Wintermark M, Wald C, Wu J (2022) Artificial intelligence in oncologic imaging. Eur J Radiol Open 9:100441

Cheng JY, Abel JT, Balis UGJ, McClintock DS, Pantanowitz L (2021) Challenges in the Development, Deployment, and regulation of Artificial Intelligence in Anatomic Pathology. Am J Pathol 191(10):1684–1692

Cheung HMC, Rubin D (2021) Challenges and opportunities for artificial intelligence in oncological imaging. Clin Radiol 76(10):728–736

Cifci D, Foersch S, Kather JN (2022) Artificial intelligence to identify genetic alterations in conventional histopathology. J Pathol 257(4):430–444

Cirillo D, Núñez-Carpintero I, Valencia A (2021) Artificial intelligence in cancer research: learning at different levels of data granularity. Mol Oncol 15(4):817–829

Crosby D, Bhatia S, Brindle KM, Coussens LM, Dive C, Emberton M, Esener S, Fitzgerald RC, Gambhir SS, Kuhn P, Rebbeck TR, Balasubramanian S (2022) Early detection of cancer. Science 375(6586):eaay9040

Dankwa-Mullan I, Weeraratne D (2022) Artificial intelligence and machine learning technologies in cancer care: addressing disparities, bias, and data diversity. Cancer Discov 12(6):1423–1427

Dixit S, Kumar A, Srinivasan K (2023) A Current Review of Machine Learning and Deep Learning Models in Oral Cancer Diagnosis: Recent Technologies, Open Challenges, and Future Research Directions. Diagnostics 13(7): 1353

Echle A, Grabsch HI, Quirke P, van den Brandt PA, West NP, Hutchins GGA, Heij LR, Tan X, Richman SD, Krause J, Alwers E, Jenniskens J, Offermans K, Gray R, Brenner H, Chang-Claude J, Trautwein C, Pearson AT, Boor P, Luedde T, Gaisa NT, Hoffmeister M, Kather JN (2020) Clinical-grade detection of microsatellite instability in colorectal tumors by deep learning. Gastroenterology 159(4):1406–1416.e11

Echle A, Rindtorff NT, Brinker TJ, Luedde T, Pearson AT, Kather JN (2021) Deep learning in cancer pathology: a new generation of clinical biomarkers. Br J Cancer 124(4):686–696

Ehteshami Bejnordi B, Veta M, Johannes van Diest P, van Ginneken B, Karssemeijer N, Litjens G, van der Laak JAWM, the CAMELYON16 Consortium (2017) Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA 318(22):2199–2210

Eklund M, Kartasalo K, Olsson H, Ström P (2019) The importance of study design in the application of artificial intelligence methods in medicine. Npj Digit Med 2(1):101

El-kenawy E-SM, Khodadadi N, Mirjalili S, Abdelhamid AA, Eid MM, Ibrahim A (2024) Greylag Goose optimization: nature-inspired optimization algorithm. Expert Syst Appl 238:122147

Fallahpoor M, Chakraborty S, Pradhan B, Faust O, Barua PD, Chegeni H, Acharya R (2024) Deep learning techniques in PET/CT imaging: a comprehensive review from sinogram to image space. Comput Methods Programs Biomed 243:107880

Farasati Far B (2023) Artificial intelligence ethics in precision oncology: balancing advancements in technology with patient privacy and autonomy. Explor Target Antitumor Ther 4(4):685–689

Fass L (2008) Imaging and cancer: a review. Mol Oncol 2(2):115–152

Folmsbee J, Liu X, Brandwein-Weber M, Doyle S (2018) Active deep learning: improved training efficiency of convolutional neural networks for tissue classification in oral cavity cancer. In: 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018). IEEE

Frazer HM, Qin AK, Pan H, Brotchie P (2021) Evaluation of deep learning-based artificial intelligence techniques for breast cancer detection on mammograms: results from a retrospective study using a BreastScreen Victoria dataset. J Med Imaging Radiat Oncol 65(5):529–537

Geaney A, O’Reilly P, Maxwell P, James JA, McArt D, Salto-Tellez M (2023) Translation of tissue-based artificial intelligence into clinical practice: from discovery to adoption. Oncogene 42(48):3545–3555

Ghassemi M, Oakden-Rayner L, Beam AL (2021) The false hope of current approaches to explainable artificial intelligence in health care. Lancet Digit Health 3(11):e745–e750

Gilbert S, Fenech M, Hirsch M, Upadhyay S, Biasiucci A, Starlinger J (2021) Algorithm change protocols in the regulation of adaptive machine learning–based medical devices. J Med Internet Res 23(10):e30545

Glučina M, Lorencin A, Anđelić N, Lorencin I (2023) Cervical Cancer Diagnostics using machine learning algorithms and class balancing techniques. Appl Sci 13(2):1061

Goldenberg SL, Nir G, Salcudean SE (2019) A new era: artificial intelligence and machine learning in prostate cancer. Nat Rev Urol 16(7):391–403

Gonçalves JPL, Bollwein C, Schwamborn K (2022) Mass spectrometry imaging spatial tissue analysis toward personalized medicine. Life 12(7):1037

Gorrepati L (2024) Integrating AI with Electronic Health Records (EHRs) to Enhance Patient Care. Int J Health Sci 7:38–50

Govind Persad J Understanding the Legal and Ethical Challenges AI Poses in Oncology

Haggenmüller S, Maron RC, Hekler A, Utikal JS, Barata C, Barnhill RL, Beltraminelli H, Berking C, Betz-Stablein B, Blum A, Braun SA, Carr R, Combalia M, Fernandez-Figueras M-T, Ferrara G, Fraitag S, French LE, Gellrich FF, Ghoreschi K, Goebeler M, Guitera P, Haenssle HA, Haferkamp S, Heinzerling L, Heppt MV, Hilke FJ, Hobelsberger S, Krahl D, Kutzner H, Lallas A, Liopyris K, Llamas-Velasco M, Malvehy J, Meier F, Müller CSL, Navarini AA, Navarrete-Dechent C, Perasole A, Poch G, Podlipnik S, Requena L, Rotemberg VM, Saggini A, Sangueza OP, Santonja C, Schadendorf D, Schilling B, Schlaak M, Schlager JG, Sergon M, Sondermann W, Soyer HP, Starz H, Stolz W, Vale E, Weyers W, Zink A, Krieghoff-Henning E, Kather JN, von Kalle C, Lipka DB, Fröhling S, Hauschild A, Brinker TJ (2021) Skin cancer classification via convolutional neural networks: systematic review of studies involving human experts. Eur J Cancer 156:202–216

Hanna M, Pantanowitz L, Jackson B, Palmer O, Visweswaran S, Pantanowitz J, Deebajah M, Rashidi H (2024) Ethical and bias considerations in artificial intelligence (AI)/machine learning. Mod Pathol 100686

Hart SN, Flotte W, Norgan AF, Shah KK, Buchan ZR, Mounajjed T, Flotte TJ (2019) Classification of melanocytic lesions in selected and whole-slide images via convolutional neural networks. J Pathol Inform 10(1):5

Hashemi Fotemi SG, Mannuru NR, Kumar Bevara RV, Mannuru A (2024) A systematic review of the Integration of Information Science, Artificial Intelligence, and Medical Engineering in Healthcare: current trends and future directions. Infosci Trends 1(2):29–42

Higaki T, Nakamura Y, Tatsugami F, Nakaura T, Awai K (2019) Improvement of image quality at CT and MRI using deep learning. Jpn J Radiol 37(1):73–80

Hirt J, Nordhausen T, Appenzeller-Herzog C, Ewald H (2020) Using citation tracking for systematic literature searching: study protocol for a scoping review of methodological studies and an expert survey. F1000Research 9

Hollings N, Shaw P (2002) Diagnostic imaging of lung cancer. Eur Respir J 19(4):722–742

Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJ (2018) Artificial intelligence in radiology. Nat Rev Cancer 18(8):500–510

Hosny A, Bitterman DS, Guthier CV, Qian JM, Roberts H, Perni S, Saraf A, Peng LC, Pashtan I, Ye Z, Kann BH, Kozono DE, Christiani D, Catalano PJ, Aerts HJWL, Mak RH (2022) Clinical validation of deep learning algorithms for radiotherapy targeting of non-small-cell lung cancer: an observational study. Lancet Digit Health 4(9):e657–e666

Huang S, Yang J, Fong S, Zhao Q (2020) Artificial intelligence in cancer diagnosis and prognosis: opportunities and challenges. Cancer Lett 471:61–71

Hubbell E, Clarke CA, Aravanis AM, Berg CD (2021) Modeled reductions in late-stage cancer with a multi-cancer early detection test. Cancer Epidemiol Biomarkers Prev 30(3):460–468

Hunter B, Hindocha S, Lee RW (2022) The role of artificial intelligence in early cancer diagnosis. Cancers 14(6):1524

Illimoottil M, Ginat D (2023) Recent advances in Deep Learning and Medical Imaging for Head and Neck Cancer Treatment: MRI, CT, and PET scans. Cancers 15(13):3267

Jenny F, Debra H, Claudia Mazo V, William W, Catherine M, Arman R, Niamh A, Amy C, Aura GC, William G (2021) Future of biomarker evaluation in the realm of artificial intelligence algorithms: application in improved therapeutic stratification of patients with breast and prostate cancer. J Clin Pathol 74(7):429

Jiang X, Hu Z, Wang S, Zhang Y (2023) Deep learning for medical image-based cancer diagnosis. Cancers 15(14):3608

Juluru K, Shih H-H, Keshava Murthy KN, Elnajjar P, El-Rowmeim A, Roth C, Genereaux B, Fox J, Siegel E, Rubin DL (2021a) Integrating AI algorithms into the clinical workflow. Radiol Artif Intell 3(6):e210013

Kaur A, Chauhan APS, Aggarwal AK (2021) An automated slice sorting technique for multi-slice computed tomography liver cancer images using convolutional network. Expert Syst Appl 186:115686

Khayyam H, Madani A, Kafieh R, Hekmatnia A (2023) Artificial intelligence in cancer diagnosis and therapy. MDPI (Multidisciplinary Digital Publishing Institute)

Khosravi M, Zare Z, Mojtabaeian SM, Izadi R (2024) Artificial Intelligence and decision-making in Healthcare: a thematic analysis of a systematic review of reviews. Health Serv Res Manag Epidemiol 11:23333928241234863

Kiani A, Uyumazturk B, Rajpurkar P, Wang A, Gao R, Jones E, Yu Y, Langlotz CP, Ball RL, Montine TJ, Martin BA, Berry GJ, Ozawa MG, Hazard FK, Brown RA, Chen SB, Wood M, Allard LS, Ylagan L, Ng AY, Shen J (2020) Impact of a deep learning assistant on the histopathologic classification of liver cancer. Npj Digit Med 3(1):23

Kriti, Virmani J, Agarwal R (2022) A characterization approach for the review of CAD systems designed for breast tumor classification using B-mode ultrasound images. Arch Comput Methods Eng 29(3):1485–1523

Kumar Y, Gupta S, Singla R, Hu Y-C (2022) A systematic review of artificial intelligence techniques in cancer prediction and diagnosis. Arch Comput Methods Eng 29(4):2043–2070

Li Z, Liu F, Yang W, Peng S, Zhou J (2021) A survey of convolutional neural networks: analysis, applications, and prospects. IEEE Trans Neural Networks Learn Syst 33(12):6999–7019

Limkin EJ, Sun R, Dercle L, Zacharaki EI, Robert C, Reuzé S, Schernberg A, Paragios N, Deutsch E, Ferté C (2017) Promises and challenges for the implementation of computational medical imaging (radiomics) in oncology. Ann Oncol 28(6):1191–1206

Lipscomb CE (2000) Medical subject headings (MeSH). Bull Med Libr Assoc 88(3):265

Litjens G, Sánchez CI, Timofeeva N, Hermsen M, Nagtegaal I, Kovacs I, Hulsbergen-van de Kaa C, Bult P, van Ginneken B, van der Laak J (2016) Deep learning as a tool for increased accuracy and efficiency of histopathological diagnosis. Sci Rep 6(1):26286

Liu Y, Kohlberger T, Norouzi M, Dahl GE, Smith JL, Mohtashamian A, Olson N, Peng LH, Hipp JD, Stumpe MC (2018) Artificial intelligence–based breast Cancer nodal metastasis detection: insights into the Black Box for pathologists. Arch Pathol Lab Med 143(7):859–868

Liu K, Mokhtari M, Li B, Nofallah S, May C, Chang O, Knezevich S, Elmore J, Shapiro L (2021) Learning melanocytic proliferation segmentation in histopathology images from imperfect annotations. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition

Martín Noguerol T, Paulano-Godino F, Martín-Valdivia MT, Menias CO, Luna A (2019) Strengths, weaknesses, opportunities, and Threats Analysis of Artificial Intelligence and Machine Learning Applications in Radiology. J Am Coll Radiol 16(9 Pt B):1239–1247

Mosquera-Zamudio A, Launet L, Tabatabaei Z, Parra-Medina R, Colomer A, Oliver Moll J, Monteagudo C, Janssen E, Naranjo V (2023) Deep learning for skin melanocytic tumors in whole-slide images: a systematic review. Cancers 15(1):42

Mu W, Schabath MB, Gillies RJ (2022) Images are data: challenges and opportunities in the clinical translation of Radiomics. Cancer Res 82(11):2066–2068

Muhammad D, Bendechache M (2024) Unveiling the black box: a systematic review of Explainable Artificial Intelligence in medical image analysis. Comput Struct Biotechnol J 24:542–560

Najjar R (2023) Redefining radiology: a review of artificial intelligence integration in medical imaging. Diagnostics 13(17):2760

Nakaura T, Higaki T, Awai K, Ikeda O, Yamashita Y (2020) A primer for understanding radiology articles about machine learning and deep learning. Diagn Interv Imaging 101(12):765–770

Olatunji I, Cui F (2023) Multimodal AI for prediction of distant metastasis in carcinoma patients. Front Bioinf 3:1131021

Oystacher T, Blasco D, He E, Huang D, Schear R, McGoldrick D, Link B, Yang LH (2018) Understanding stigma as a barrier to accessing cancer treatment in South Africa: implications for public health campaigns. Pan Afr Med J 29(1):1–12

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, Shamseer L, Tetzlaff JM, Akl EA, Brennan SE, Chou R, Glanville J, Grimshaw JM, Hróbjartsson A, Lalu MM, Li T, Loder EW, Mayo-Wilson E, McDonald S, McGuinness LA, Stewart LA, Thomas J, Tricco AC, Welch VA, Whiting P, Moher D (2021) The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 372: n71

Pang Y, Wang H, Li H (2022) Medical imaging biomarker discovery and integration towards AI-based personalized radiotherapy. Front Oncol 11

Patel RH, Foltz EA, Witkowski A, Ludzik J (2023) Analysis of artificial intelligence-based approaches applied to non-invasive imaging for early detection of melanoma: a systematic review. Cancers 15(19):4694

Pell R, Oien K, Robinson M, Pitman H, Rajpoot N, Rittscher J, Snead D, Verrill C, on behalf of the UK National Cancer Research Institute (NCRI) Cellular-Molecular Pathology (CM-Path) quality assurance working group (2019) The use of digital pathology and image analysis in clinical trials. J Pathol Clin Res 5(2):81–90

Pesapane F, Volonté C, Codari M, Sardanelli F (2018) Artificial intelligence as a medical device in radiology: ethical and regulatory issues in Europe and the United States. Insights Imaging 9:745–753

Pinto-Coelho L (2023) How artificial intelligence is shaping medical imaging technology: a survey of innovations and applications. Bioengineering (Basel) 10(12):1435

Pulaski H, Harrison SA, Mehta SS, Sanyal AJ, Vitali MC, Manigat LC, Hou H, Madasu Christudoss SP, Hoffman SM, Stanford-Moore A, Egger R, Glickman J, Resnick M, Patel N, Taylor CE, Myers RP, Chung C, Patterson SD, Sejling A-S, Minnich A, Baxi V, Subramaniam GM, Anstee QM, Loomba R, Ratziu V, Montalto MC, Anderson NP, Beck AH, Wack K (2024) Clinical validation of an AI-based pathology tool for scoring of metabolic dysfunction-associated steatohepatitis. Nat Med

Pulumati A, Pulumati A, Dwarakanath BS, Verma A, Papineni RVL (2023) Technological advancements in cancer diagnostics: improvements and limitations. Cancer Rep (Hoboken) 6(2):e1764

Raciti P, Sue J, Retamero JA, Ceballos R, Godrich R, Kunz JD, Casson A, Thiagarajan D, Ebrahimzadeh Z, Viret J, Lee D, Schüffler PJ, DeMuth G, Gulturk E, Kanan C, Rothrock B, Reis-Filho J, Klimstra DS, Reuter V, Fuchs TJ (2023) Clinical validation of Artificial Intelligence-Augmented Pathology diagnosis demonstrates significant gains in diagnostic accuracy in prostate Cancer detection. Arch Pathol Lab Med 147(10):1178–1185

Rezk E, Eltorki M, El-Dakhakhni W (2022) Improving skin Color Diversity in Cancer Detection: Deep Learning Approach. JMIR Dermatol 5(3):e39143

Richards M (2009) The size of the prize for earlier diagnosis of cancer in England. Br J Cancer 101(2):S125–S129

Rubin GD (2015) Lung nodule and cancer detection in computed tomography screening. J Thorac Imaging 30(2):130–138

Rudin C (2019) Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat Mach Intell 1(5):206–215

Saeidnia HR, Hashemi Fotami SG, Lund B, Ghiasi N (2024) Ethical considerations in artificial intelligence interventions for mental health and well-being: ensuring responsible implementation and impact. Social Sci 13(7):381

Sandbank J, Bataillon G, Nudelman A, Krasnitsky I, Mikulinsky R, Bien L, Thibault L, Albrecht Shach A, Sebag G, Clark DP, Laifenfeld D, Schnitt SJ, Linhart C, Vecsler M, Vincent-Salomon A (2022) Validation and real-world clinical application of an artificial intelligence algorithm for breast cancer detection in biopsies. npj Breast Cancer 8(1):129

Savage N (2020) How AI is improving cancer diagnostics. Nature 579(7800):S14–S14

Schlemmer H-P, Bittencourt LK, D’Anastasi M, Domingues R, Khong P-L, Lockhat Z, Muellner A, Reiser MF, Schilsky RL, Hricak H (2018) Global challenges for cancer imaging. J Glob Oncol 4:1–10

Schuurmans M, Alves N, Vendittelli P, Huisman H, Hermans J (2022) Setting the Research Agenda for Clinical Artificial Intelligence in pancreatic adenocarcinoma imaging. Cancers 14(14):3498

Serag A, Ion-Margineanu A, Qureshi H, McMillan R, Saint Martin M-J, Diamond J, O’Reilly P, Hamilton P (2019) Translational AI and deep learning in diagnostic pathology. Front Med 6

Shapiro MA, Stuhlmiller TJ, Wasserman A, Kramer GA, Federowicz B, Hoos W, Kaufman Z, Chuyka D, Mahoney W, Newton ME (2024) AI-augmented clinical decision support in a patient-centric precision oncology registry. AI Precision Oncol 1(1):58–68

Sheikh TS, Kim J-Y, Shim J, Cho M (2022) Unsupervised learning based on multiple descriptors for WSIs diagnosis. Diagnostics 12(6):1480

Siegel RL, Miller KD, Fuchs HE, Jemal A (2022) Cancer statistics, 2022. CA Cancer J Clin 72(1):7–33

Smith V, Devane D, Begley CM, Clarke M (2011) Methodology in conducting a systematic review of systematic reviews of healthcare interventions. BMC Med Res Methodol 11(1):15

Steele L, Tan XL, Olabi B, Gao JM, Tanaka RJ, Williams HC (2023) Determining the clinical applicability of machine learning models through assessment of reporting across skin phototypes and rarer skin cancer types: a systematic review. J Eur Acad Dermatol Venereol 37(4):657–665

Steiner DF, Chen P-HC, Mermel CH (2021) Closing the translation gap: AI applications in digital pathology. Biochim Biophys Acta Rev Cancer 1875(1):188452

Su S, Li X (2021) Dive into single, seek out multiple: probing Cancer metastases via single-cell sequencing and imaging techniques. Cancers 13(5):1067

Suarez-Ibarrola R, Sigle A, Eklund M, Eberli D, Miernik A, Benndorf M, Bamberg F, Gratzke C (2022) Artificial intelligence in magnetic resonance imaging–based prostate cancer diagnosis: where do we stand in 2021? Eur Urol Focus 8(2):409–417