Abstract

In this paper we introduce and develop the notion of minimal subspaces in the framework of algebraic and topological tensor product spaces. This mathematical structure arises in a natural way in the study of tensor representations. We use minimal subspaces to prove the existence of a best approximation, for any element in a Banach tensor space, by means of a tensor given in a typical representation format (Tucker, hierarchical, or tensor train). We show that this result holds in a tensor Banach space with a norm stronger than the injective norm and in an intersection of finitely many Banach tensor spaces satisfying some additional conditions. Examples using topological tensor products of standard Sobolev spaces are given.

1 Introduction

Recently, there has been an increased interest in numerical methods which make use of tensors. In particular, for high spatial dimensions one must take care that the numerical cost (in time and storage) is linear in the space dimension and does not increase exponentially. For three spatial dimensions, these methods can be applied with great success.

A first family of applications using tensor decompositions concerns the extraction of information from complex data. It has been used in many areas such as psychometrics [6, 26], chemometrics [2], analysis of turbulent flows [3], image analysis and pattern recognition [28], and data mining. Another family of applications concerns the compression of complex data (for storage or transmission), also introduced in many areas such as signal processing [19] or computer vision [30]. A survey of tensor decompositions in multilinear algebra and an overview of possible applications can be found in the review paper [18]. In these applications, the aim is to compress the information as much as possible or to extract a few modes representing some features to be analysed. The use of tensor product approximations is also of growing interest in numerical analysis for the solution of problems defined in high-dimensional tensor spaces, such as partial differential equations (PDEs) arising in stochastic calculus [1, 5, 11] (e.g., the Fokker-Planck equation), stochastic parametric PDEs arising in uncertainty quantification with spectral approaches [9, 22, 23], and quantum chemistry (cf., e.g., [29]). For details, we refer to [14].

Let d vector spaces V j be given (assume, e.g., that \(V_{j} =\mathbb{R}^{n_{j}}\)). The generated tensor space is denoted by \(\mathbf{V} ={ }_{a}\bigotimes_{j=1}^{d}V_{j}\), where \({}_{a}\bigotimes_{j=1}^{d}V_{j} = \operatorname{span} \{ \bigotimes_{j=1}^{d} v_{j}: v_{j} \in V_{j} \text{ and } 1 \le j \le d\ \} \) (assume, e.g., that ⨂ represents the Kronecker product). A typical representation format is the tensor subspace or Tucker format

$$\mathbf{v}=\sum_{\mathbf{i}\in\mathbf{I}}a_{\mathbf{i}}\bigotimes_{j=1}^{d}b_{i_{j}}^{(j)}, \tag{1.1}$$

where I=I 1×⋯×I d is a multi-index set with I j ={1,…,r j }, r j ≤dim(V j ), \(b_{i_{j}}^{(j)}\in V_{j}\) (i j ∈I j ) are basis vectors, and a i ∈ℝ. Here, i j are the components of i=(i 1,…,i d ). The data size is determined by the numbers r j collected in the tuple r:=(r 1,…,r d ). The set of all tensors representable by (1.1) with fixed r is

$$\mathcal{T}_{\mathbf{r}}:=\Biggl\{\mathbf{v}\in\mathbf{V}:\text{there are subspaces }U_{j}\subset V_{j}\text{ with }\dim(U_{j})=r_{j}\text{ and }\mathbf{v}\in{}_{a}\bigotimes_{j=1}^{d}U_{j}\Biggr\}. \tag{1.2}$$

Here, it is important that the description (1.1) with the vectors \(b_{i}^{(j)}\) can be replaced by the generated subspace \(U_{j}=\operatorname*{span}\{b_{i}^{(j)}:i\in I_{j}\}\). Note that \(\mathcal{T}_{\mathbf{r}}\) is neither a subspace of V nor a convex set.
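
The Tucker representation, a core coefficient a_i for each multi-index i together with r_j basis vectors per direction, can be illustrated by a minimal sketch. It assumes \(V_{j}=\mathbb{R}^{n_{j}}\); the names `tucker_entry` and `storage_counts`, the dictionary layout of `core`, and the nested-list layout of `bases` are hypothetical choices made only for this illustration.

```python
from itertools import product

def tucker_entry(core, bases, idx):
    """Entry v[idx] of v = sum_i a_i * b^(1)_{i_1} (x) ... (x) b^(d)_{i_d}.

    core  : dict mapping a multi-index (i_1, ..., i_d) to the coefficient a_i
    bases : bases[j][i] is the i-th basis vector of direction j (a list)
    """
    ranks = [len(b) for b in bases]              # r_j = number of basis vectors
    total = 0.0
    for i in product(*(range(r) for r in ranks)):
        term = core.get(i, 0.0)
        for j, ij in enumerate(i):
            term *= bases[j][ij][idx[j]]         # component idx[j] of b^(j)_{i_j}
        total += term
    return total

def storage_counts(ranks, dims):
    """(numbers stored by the Tucker data, numbers stored by the full tensor)."""
    core_size, full_size = 1, 1
    for r in ranks:
        core_size *= r
    for n in dims:
        full_size *= n
    basis_size = sum(r * n for r, n in zip(ranks, dims))
    return core_size + basis_size, full_size

# d = 2, r = (2, 1): v = 2 * e1 (x) b - e2 (x) b with b = (1, 1, 0).
bases = [[[1.0, 0.0], [0.0, 1.0]], [[1.0, 1.0, 0.0]]]
core = {(0, 0): 2.0, (1, 0): -1.0}
v = [[tucker_entry(core, bases, (i1, i2)) for i2 in range(3)] for i1 in range(2)]
```

For instance, `storage_counts((2, 2, 2), (100, 100, 100))` compares the 8 core coefficients plus 600 basis components with the 10^6 entries of the full tensor.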

A question about minimal subspaces arises naturally from (1.2): Given a tensor v∈V, what are the subspaces U j ⊂V j with minimal dimension r j such that \(\mathbf{v}\in\bigotimes_{j=1}^{d}U_{j}\)?

Another natural question is the approximation of some v∈V by \(\mathbf{u}\in\mathcal{T}_{\mathbf{r}}\) for a fixed r: Find \(\mathbf{u}_{\mathrm{best}}\in\mathcal{T}_{\mathbf{r}}\) such that ∥v−u best∥ equals

$$\inf \bigl\{ \Vert \mathbf{v}-\mathbf{u}\Vert :\mathbf{u}\in\mathcal{T}_{\mathbf{r}} \bigr\} $$

for a suitable norm. In the finite-dimensional case, compactness arguments show the existence of a best approximation. In this paper we discuss this question in the infinite-dimensional case (i.e., dim(V j )=∞, while still dim(U j )=r j <∞).

Here, one should note that tensors have properties which are unexpected compared with matrix theory. For instance, one can define another tensor format (r-term or canonical format) as follows. Fix an integer r∈ℕ0 and set

$$\mathcal{R}_{r}:=\Biggl\{\sum_{i=1}^{r}\bigotimes_{j=1}^{d}v_{i}^{(j)}:v_{i}^{(j)}\in V_{j}\Biggr\}.$$

For d=2, \(\mathcal{R}_{r}\) corresponds to matrices of \(\operatorname{rank}\leq r\). Seeking a solution of \(\inf \{ \Vert \mathbf{v}-\mathbf{u}\Vert :\mathbf{u}\in\mathcal{R}_{r} \} \), one finds examples of v∈V even for finite-dimensional V, but d≥3, such that there is no minimiser \(\mathbf{u}_{\mathrm{best}}\in\mathcal{R}_{r}\) (cf. [7]).
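
The missing minimiser can be made concrete with a standard example for d=3, sketched here under the assumption that \(V_{j}=\mathbb{R}^{2}\) with the Euclidean (Frobenius) norm. For linearly independent x, y the tensor v=x⊗x⊗y+x⊗y⊗x+y⊗x⊗x is known to have rank 3, yet the tensors \(\mathbf{u}_{n}=n(x+y/n)^{\otimes 3}-n\,x^{\otimes 3}\in\mathcal{R}_{2}\) approach it. The helper names below are hypothetical.

```python
from itertools import product

def outer3(a, b, c):
    """Elementary tensor a (x) b (x) c as a dict over index triples."""
    return {(i, j, k): a[i] * b[j] * c[k]
            for i, j, k in product(range(len(a)), range(len(b)), range(len(c)))}

def add(s, t, alpha=1.0):
    """Return s + alpha * t for tensors stored as dicts with equal keys."""
    return {key: s[key] + alpha * t[key] for key in s}

def frobenius(t):
    return sum(val * val for val in t.values()) ** 0.5

x, y = [1.0, 0.0], [0.0, 1.0]
# Target v = x(x)x(x)y + x(x)y(x)x + y(x)x(x)x.
v = add(add(outer3(x, x, y), outer3(x, y, x)), outer3(y, x, x))

def rank_two_approximation(n):
    """u_n = n (x + y/n)^{(x)3} - n x^{(x)3}, a sum of two elementary tensors."""
    z = [xi + yi / n for xi, yi in zip(x, y)]
    first = {key: n * val for key, val in outer3(z, z, z).items()}
    return add(first, outer3(x, x, x), alpha=-float(n))

# ||u_n - v|| for growing n; expanding u_n gives sqrt(3/n^2 + 1/n^4).
errors = [frobenius(add(rank_two_approximation(n), v, alpha=-1.0))
          for n in (1, 10, 100, 1000)]
```

The errors tend to zero, so the infimum over \(\mathcal{R}_{2}\) is zero, but it is not attained, since v itself is not in \(\mathcal{R}_{2}\). The two terms of u_n grow like n, which reflects the numerical instability mentioned in the text.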

There are other formats with even better properties than (1.2) (cf. [15, 24]), which are again related to subspaces. In these cases, further subspaces like, e.g., U 12⊂U 1⊗U 2 appear. The representation using the hierarchical format from [15] uses subspaces U 12 with dimension not exceeding a given bound. For these formats, the results of this paper also apply, e.g., they ensure the existence of best approximations.

There are practical reasons for the interest in the existence of a best approximation. Truncation of a tensor v to a certain format tries to minimise ∥v−u∥. If a best approximation does not exist, one has to expect a numerical instability as ∥v−u∥ approaches the infimum. Even if V is finite dimensional, it is often a discrete version of a function space. If the infinite-dimensional function space allows a best approximation, one can expect uniform stability (i.e., independent of the discretisation parameters).

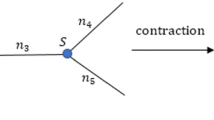

The hierarchical format of [15] is connected with a certain dimension partition tree. In particular, the approach in [24] using a linear tree corresponding to the matrix product systems is applied in quantum chemistry (cf. [29]). There are approaches using a general graph structure (cf. [17]); however, as soon as loops are contained in a graph, the parameters of its representation cannot be described by dimensions of certain subspaces, and the results of this paper do not apply.

In the sequel, we define minimal subspaces \(U_{j}^{\min}(\mathbf{v})\) for algebraic tensors \(\mathbf{v}\in{}_{a}\bigotimes_{j=1}^{d}V_{j}\) (cf. Theorem 2.17) as well as for topological tensors \(\mathbf{v}\in{}_{\Vert \cdot \Vert }\bigotimes_{j=1}^{d}V_{j}\) (cf. Definition 3.11). The main result is given in Theorem 3.15, where we show that for weakly convergent sequences v n ⇀v (see Definition 3.12), the dimension of the limiting minimal subspace is bounded by

$$\dim U_{j}^{\min}(\mathbf{v})\leq\liminf_{n\rightarrow\infty}\dim U_{j}^{\min}(\mathbf{v}_{n}).$$

This is the key property which allows us to derive the desired properties.

Finally, we discuss the nature of the closed subspace \({}_{\Vert \cdot \Vert }\bigotimes_{j=1}^{d}\overline{U_{j}^{\min}(\mathbf{v})}^{\Vert \cdot \Vert _{j}}\). In the algebraic case, we have by definition that \(\mathbf{v}\in{}_{a}\bigotimes_{j=1}^{d}U_{j}^{\min}(\mathbf{v})\). This property does not seem obvious for a general topological tensor \(\mathbf{v}\in{}_{\Vert \cdot \Vert }\bigotimes_{j=1}^{d}V_{j}\), but we give sufficient conditions for this property. In particular, it holds for Hilbert tensor spaces.

The paper is organised as follows. In Sect. 2, we introduce the concept of minimal subspaces of an algebraic tensor and describe a characterisation. In Sect. 3, minimal subspaces are defined and characterised for Banach tensor spaces. Finally, Sect. 4 is devoted to the proof of the existence of best approximation tensors in \(\mathcal{T}_{\mathbf{r}}\) in a Banach tensor space.

2 Minimal Subspaces in an Algebraic Tensor Space

In the following, X is a Banach space with norm ∥⋅∥=∥⋅∥ X . While X′ denotes the algebraic dual, X ∗ is the dual space of functionals with bounded dual norm \(\Vert \cdot \Vert ^{\ast}=\Vert \cdot \Vert _{X^{\ast}}\):

$$\Vert \varphi\Vert ^{\ast}:=\sup \bigl\{ \vert \varphi(x)\vert :x\in X\text{ with }\Vert x\Vert \leq1 \bigr\} =\sup \bigl\{ \vert \varphi(x)\vert /\Vert x\Vert :0\neq x\in X \bigr\} .$$

This implies that we recover the ∥⋅∥ X norm from the dual norm via

$$\Vert x\Vert =\max \bigl\{ \vert \varphi(x)\vert :\varphi\in X^{\ast},\ \Vert \varphi\Vert ^{\ast}=1 \bigr\} =\max \bigl\{ \vert \varphi(x)\vert /\Vert \varphi\Vert ^{\ast}:0\neq\varphi\in X^{\ast} \bigr\} .$$

By \(\mathcal{L}(X,Y)\) we denote the space of continuous linear mappings from X into Y. The corresponding operator norm is written as ∥⋅∥ Y←X . \(\mathcal{L}(X,Y)\) is a subspace of the space L(X,Y) of all linear mappings (without topology).

Remark 2.1

Let {x ν ∈X:1≤ν≤n} be linearly independent. Then there are functionals φ ν ∈X ∗ such that φ ν (x μ )=δ νμ . The functionals \(( \varphi_{\nu} )_{\nu=1}^{n}\) are called dual to \(( x_{\nu} )_{\nu=1}^{n}\).

The following result is known as the Lemma of Auerbach and is proved, e.g., in Meise–Vogt [21, Lemma 10.5].

Lemma 2.2

For any n-dimensional subspace of a Banach space X, there exists a basis {x ν :1≤ν≤n} and a corresponding dual basis {φ ν :1≤ν≤n}⊂X ∗ such that ∥x ν ∥=∥φ ν ∥∗=1 (1≤ν≤n).

2.1 Algebraic Tensor Spaces

2.1.1 Definitions and Elementary Facts

Concerning the definition of the algebraic tensor space \({}_{a}\bigotimes_{j=1}^{d}V_{j}\) generated from vector spaces V j (1≤j≤d), we refer to Greub [12]. As the underlying field we choose ℝ, but the results hold also for ℂ. The suffix ‘a’ in \({}_{a}\bigotimes_{j=1}^{d}V_{j}\) refers to the ‘algebraic’ nature. By definition, all elements of

$$\mathbf{V}:={}_{a}\bigotimes_{j=1}^{d}V_{j}$$

are finite linear combinations of elementary tensors \(\mathbf{v}=\bigotimes_{j=1}^{d}v_{j}\) (v j ∈V j ). In Sect. 3, we shall discuss the Banach space obtained as the completion of \({}_{a}\bigotimes_{j=1}^{d}V_{j}\).

Consider a tensor product \(\mathbf{V}={}_{a}\bigotimes_{j=1}^{d}V_{j}\) of vector spaces and a fixed tensor v∈V. Among the subspaces U j ⊂V j with

$$\mathbf{v}\in\mathbf{U}:={}_{a}\bigotimes_{j=1}^{d}U_{j}, \tag{2.3}$$

we are looking for the smallest ones. We have to show that a minimal subspace U j exists and that these minimal subspaces can be obtained simultaneously in (2.3) for all 1≤j≤d. We approach the problem in Sect. 2.2.1 for the matrix case d=2. In Sect. 3.1 we replace the tensor product of vector spaces by a tensor product of Banach spaces. The interesting question is how these minimal subspaces behave as a function of v.

An obvious advantage of the formulation (2.3) is the fact that the U j are of finite dimension even if dim(V j )=∞, as stated below.

Lemma 2.3

For \(\mathbf{v}\in{}_{a}\bigotimes_{j=1}^{d}V_{j}\) there are always finite-dimensional subspaces U j ⊂V j satisfying (2.3).

Proof

By definition of the algebraic tensor space, \(\mathbf{v}\in{}_{a}\bigotimes_{j=1}^{d}V_{j}\) means that there is a finite linear combination

$$\mathbf{v}=\sum_{\nu=1}^{n}\bigotimes_{j=1}^{d}v_{j}^{(\nu)}$$

for some n∈ℕ0 and \(v_{j}^{(\nu)}\in V_{j}\). Define

$$U_{j}:=\operatorname{span}\bigl\{v_{j}^{(\nu)}:1\leq\nu\leq n\bigr\}\subset V_{j}\quad(1\leq j\leq d).$$

Then \(\mathbf{v}\in\mathbf{U}:={}_{a}\bigotimes_{j=1}^{d}U_{j}\) proves (2.3) with subspaces of dimension dim(U j )≤n. □

The following well-known result is formulated for d=2.

Lemma 2.4

For any tensor v∈V⊗ a W there is an r∈ℕ0 and a representation

$$\mathbf{v}=\sum_{i=1}^{r}v_{i}\otimes w_{i} \tag{2.4}$$

with linearly independent vectors {v i :1≤i≤r}⊂V and {w i :1≤i≤r}⊂W.

Proof

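Take any representation \(\mathbf{v}=\sum_{i=1}^{n}v_{i}\otimes w_{i}\). If, e.g., the {v i :1≤i≤n} are not linearly independent, one v i can be expressed by the others. Without loss of generality assume \(v_{n}=\sum_{i=1}^{n-1}\alpha_{i}v_{i}\). Then

$$\mathbf{v}=\sum_{i=1}^{n-1}v_{i}\otimes w_{i}+\Biggl(\sum_{i=1}^{n-1}\alpha_{i}v_{i}\Biggr)\otimes w_{n}=\sum_{i=1}^{n-1}v_{i}\otimes(w_{i}+\alpha_{i}w_{n})$$

shows that v possesses a representation with only n−1 terms:

$$\mathbf{v}=\sum_{i=1}^{n-1}v_{i}\otimes w_{i}^{\prime}\quad\text{with }w_{i}^{\prime}:=w_{i}+\alpha_{i}w_{n}.$$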

Since each reduction step decreases the number of terms by one, this process terminates; i.e., we obtain a representation with linearly independent v i and w i . □
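
As a small worked instance of one reduction step (an illustration with hypothetical vectors, not taken from the source), let \(v_{1},v_{2}\in V\) and \(w_{1},w_{2},w_{3}\in W\) each be linearly independent, and consider a representation with n=3 terms in which \(v_{3}=v_{1}+v_{2}\):

$$\mathbf{v}=v_{1}\otimes w_{1}+v_{2}\otimes w_{2}+(v_{1}+v_{2})\otimes w_{3}=v_{1}\otimes(w_{1}+w_{3})+v_{2}\otimes(w_{2}+w_{3}).$$

Here \(\{v_{1},v_{2}\}\) and \(\{w_{1}+w_{3},w_{2}+w_{3}\}\) are linearly independent, so the process stops after one step with r=2.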

In accordance with the usual matrix rank we introduce the following definition.

Definition 2.5

The number r appearing in Lemma 2.4 will be called the rank of the tensor v and denoted by \(\operatorname{rank}(\mathbf{v})\).

The following notation and definitions will be useful. We recall that L(V,W) is the space of linear maps from V into W, while V′=L(V,ℝ) is the algebraic dual. For metric spaces, \(\mathcal{L}(V,W)\) denotes the continuous linear maps, while V ∗ is the topological dual.

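Let \(\mathcal{I}:=\{1,\ldots,d\}\) be the index set of the ‘spatial directions’. In the sequel, the index sets \(\mathcal{I}\backslash\{j\}\) will appear. Here, we use the abbreviations

$$\mathbf{V}_{[j]}:={}_{a}\bigotimes_{k\neq j}V_{k},\qquad\text{where }\bigotimes_{k\neq j}\text{ means }\bigotimes_{k\in\mathcal{I}\backslash\{j\}}.$$

Similarly, elementary tensors \(\bigotimes_{k\neq j}v^{(k)}\) are denoted by \(\mathbf{v}^{[j]}\).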

For vector spaces V j and W j over ℝ, let linear mappings A j :V j →W j (1≤j≤d) be given. Then the definition of the elementary tensor

$$\mathbf{A}:=\bigotimes_{j=1}^{d}A_{j}:\ \mathbf{V}={}_{a}\bigotimes_{j=1}^{d}V_{j}\longrightarrow\mathbf{W}={}_{a}\bigotimes_{j=1}^{d}W_{j}$$

is given by

$$\mathbf{A} \Biggl( \bigotimes_{j=1}^{d}v^{(j)} \Biggr) :=\bigotimes_{j=1}^{d} \bigl( A_{j}v^{(j)} \bigr) . \tag{2.6}$$

Note that (2.6) extends uniquely to a linear mapping A:V→W.

Remark 2.6

(a) Let \(\mathbf{V}:={}_{a}\bigotimes_{j=1}^{d}V_{j}\) and \(\mathbf{W}:= {}_{a}\bigotimes_{j=1}^{d}W_{j}\). Then the linear combinations of tensor products of linear mappings \(\mathbf{A}=\bigotimes_{j=1}^{d}A_{j}\) defined by means of (2.6) form a subspace of L(V,W):

$${}_{a}\bigotimes_{j=1}^{d}L(V_{j},W_{j}) \subset L(\mathbf{V},\mathbf{W}). $$

(b) The special case of W j =ℝ for all j (implying W=ℝ) reads as \({}_{a\!}\bigotimes_{j=1}^{d}V_{j}^{\prime}\subset\mathbf{V}^{\prime}\).

(c) If dim(V j )<∞ and dim(W j )<∞ for all j, the inclusion ‘⊂’ in (a) and (b) may be replaced by ‘=’. This can be easily verified by just checking the dimensions of the spaces involved.

Often, mappings \(\mathbf{A}=\bigotimes_{j=1}^{d}A_{j}\) will appear, where most of the A k are the identity (and therefore V k =W k ). If A j ∈L(V j ,W j ) for one j, we use the following notation:

provided that it is obvious what component j is meant. By the multiplication rule \(( \bigotimes_{j=1}^{d}A_{j} ) \circ ( \bigotimes_{j=1}^{d}B_{j} ) =\bigotimes_{j=1}^{d} ( A_{j}\circ B_{j} ) \) and since id∘A j =A j ∘id, the following identity (cf. Footnote 1) holds for j≠k:

(in the first line we assume j<k). Proceeding inductively with this argument over all indices, we obtain

If W j =ℝ, i.e., if \(A_{j}=\varphi_{j}\in V_{j}^{\prime}\) is a linear form, then id [j]⊗φ j ∈L(V,V [j]) is used to denote id⊗⋯⊗id⊗φ j ⊗id⊗⋯⊗id defined by

Thus, if \(\boldsymbol{\varphi}=\otimes_{j=1}^{d}\varphi_{j}\in\bigotimes_{j=1}^{d}V_{j}^{\prime}\), we can also write

Consider again the splitting of \(\mathbf{V}={}_{a}\bigotimes_{j=1}^{d}V_{j}\) into V=V j ⊗ a V [j] with V [j]:= a ⨂ k≠j V k . For a linear form \(\boldsymbol{\varphi}_{[j]}\in\mathbf{V}_{[j]}^{\prime}\), the notation id j ⊗φ [j]∈L(V,V j ) is used for the mapping

If \(\boldsymbol{\varphi}_{[j]}=\bigotimes_{k\neq j}\varphi_{k}\in{}_{a}\bigotimes_{k\neq j}V_{k}^{\prime}\) is an elementary tensor,Footnote 2 \(\boldsymbol{\varphi}_{[j]} ( \bigotimes_{k=1}^{d}v^{(k)} ) =\prod_{k\neq j}\varphi_{k} ( v^{(k)} ) \) holds in (2.7e). Finally, we can write (2.7d) as

2.1.2 Matricisation

Definition 2.7

For \(j\in\mathcal{I}=\{1,\ldots,d\}\), the map \(\mathcal{M}_{j}\) is defined as the isomorphism

$$\mathcal{M}_{j}:{}_{a}\bigotimes_{k\in\mathcal{I}}V_{k}\rightarrow V_{j}\otimes_{a}\mathbf{V}_{[j]},\qquad\bigotimes_{k\in\mathcal{I}}v^{(k)}\mapsto v^{(j)}\otimes\mathbf{v}^{[j]}\quad\text{with }\mathbf{v}^{[j]}:=\bigotimes_{k\neq j}v^{(k)}.$$

In the finite-dimensional case of \(V_{k}=\mathbb{R}^{n_{k}}\), the tensor space V j ⊗ a V [j] of order 2 may be considered as a matrix from \(\mathbb{R}^{n_{j}\times n_{[j]}}\), where n [j]=∏ k≠j n k . Then, \(\mathcal{M}_{j}\) maps a tensor entry v[i 1,…,i j ,…,i d ] into the matrix entry \(( \mathcal{M}_{j}(\mathbf{v}) ) [i_{j}, ( i_{1},\ldots,\allowbreak i_{j-1},i_{j+1},\ldots,i_{d} ) ]\). As long as we do not consider matrix properties which depend on the ordering of the index set, we need not introduce an ordering of the (d−1)-tuple (i 1,…,i j−1,i j+1,…,i d ).

Example 2.8

Consider a tensor \(\mathbf{v}=\sum_{i=1}^{3}\sum_{j=1}^{2}\sum_{k=1}^{3}a_{ijk}v_{i}\otimes w_{j}\otimes v_{k}\in\mathbb{R}^{3}\otimes \mathbb{R}^{2}\otimes\mathbb{R}^{3}\), where {v 1,v 2,v 3} is a basis of ℝ3 and {w 1,w 2} a basis of ℝ2. Then \(\mathcal{M}_{2}(v_{i}\otimes w_{j}\otimes v_{k}) =w_{j}\otimes(v_{i}\otimes v_{k})\in\mathbb{R}^{2}\otimes\mathbb{R}^{9}\). The lexicographical ordering of (i,k) leads to the matrix

$$\mathcal{M}_{2}(\mathbf{v})= \begin{pmatrix} a_{111} & a_{112} & a_{113} & a_{211} & a_{212} & a_{213} & a_{311} & a_{312} & a_{313}\\ a_{121} & a_{122} & a_{123} & a_{221} & a_{222} & a_{223} & a_{321} & a_{322} & a_{323} \end{pmatrix} .$$

Next, we restrict the considerations to finite-dimensional V k . Since tensor products of two vectors can be interpreted as matrices, the mapping \(\mathcal{M}_{j}\) is named ‘matricisation’ (or ‘unfolding’). The interpretation of tensors v as matrices enables us to transfer the matrix terminology to v. In particular, we may define the rank of \(\mathcal{M}_{j}(\mathbf{v})\) as a property of v.
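
The index bookkeeping of the matricisation can be sketched as follows, assuming \(V_{k}=\mathbb{R}^{n_{k}}\), 0-based indices, and a lexicographical ordering of the remaining indices. The function name `matricise` and the callable `entry` are hypothetical. The sample tensor has the shape of Example 2.8 and encodes each index triple in its value, so the column ordering is visible directly.

```python
from itertools import product

def matricise(entry, dims, j):
    """Unfold a tensor of shape `dims` along direction j (0-based).

    `entry` maps an index tuple (i_1, ..., i_d) to a number. Row i_j of the
    result collects all entries with that j-th index; the remaining indices
    label the columns in lexicographical order.
    """
    other = [range(n) for k, n in enumerate(dims) if k != j]
    rows = []
    for ij in range(dims[j]):
        row = []
        for rest in product(*other):
            idx = rest[:j] + (ij,) + rest[j:]    # re-insert i_j at position j
            row.append(entry(idx))
        rows.append(row)
    return rows

def a(idx):
    """Sample entry: index (i, j, k), counted from 1, is encoded as the number ijk."""
    return 100 * (idx[0] + 1) + 10 * (idx[1] + 1) + (idx[2] + 1)

# Shape (3, 2, 3); unfolding along the second direction gives a 2 x 9 matrix.
M2 = matricise(a, (3, 2, 3), 1)
```

The first row of `M2` starts with 111, 112, 113, 211, which is the ordering (i,k)=(1,1),(1,2),(1,3),(2,1),… of the columns.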

Definition 2.9

Let dim(V k )<∞ \(( k\in\mathcal{I} ) \). For all \(j\in\mathcal{I}\) we define

$$\operatorname{rank}_{j}(\mathbf{v}):=\operatorname{rank} \bigl( \mathcal{M}_{j}(\mathbf{v}) \bigr) . \tag{2.8}$$

Hitchcock [16, p. 170] (1927) introduced \(\operatorname{rank}_{j}(\mathbf{v})\) as ‘the rank on the jth index’. For infinite-dimensional vector spaces V j , the generalisation is given by \(\operatorname{rank}_{j}(\mathbf{v}):=\dim U_{j}^{\min}(\mathbf{v})\), where the minimal subspaces \(U_{j}^{\min}(\mathbf{v})\) will be defined in Sect. 2.2.

The next result extends Lemma 2.4.

Lemma 2.10

Assume that \(\mathbf{v}\in{}_{a}\bigotimes_{j=1}^{d}V_{j}\) with \(\operatorname{rank}(\mathbf{v})=r\) and \(\mathbf{v}=\sum_{\nu=1}^{r}\bigotimes_{j=1}^{d}v_{\nu}^{(j)}\). Then for each 1≤j≤d, the elementary tensors

$$\mathbf{v}_{\nu}^{[j]}:=\bigotimes_{k\neq j}v_{\nu}^{(k)}\quad(1\leq\nu\leq r)$$

are linearly independent in a ⨂ k≠j V k .

Proof

Consider, without loss of generality, the case j=1. If the tensors \(\{\mathbf{v}_{\nu}^{[1]}:1\leq\nu\leq r\}\) are linearly dependent, we also may assume, without loss of generality, that \(\mathbf{v}_{r}^{[1]}\) may be expressed as \(\mathbf{v}_{r}^{[1]}=\sum_{\nu=1}^{r-1}\beta_{\nu}\mathbf{v}_{\nu}^{[1]}\). Then

$$\mathbf{v}=\sum_{\nu=1}^{r}v_{\nu}^{(1)}\otimes\mathbf{v}_{\nu}^{[1]}=\sum_{\nu=1}^{r-1} \bigl( v_{\nu}^{(1)}+\beta_{\nu}v_{r}^{(1)} \bigr) \otimes\mathbf{v}_{\nu}^{[1]}$$

implies that \(\operatorname{rank}(\mathbf{v})<r\) in contradiction to the minimality of r. □

2.2 Minimal Subspaces

2.2.1 Case d=2

The matrix case d=2 will serve as the start of an induction. To ensure the existence of minimal subspaces U 1,U 2 with v∈U 1⊗U 2, we need a lattice structure, which is the subject of the next lemma.

Lemma 2.11

Assume that X i and Y i are subspaces of V i for i=1,2. Then

$$ ( X_{1}\otimes_{a}X_{2} ) \cap ( Y_{1}\otimes_{a}Y_{2} ) = ( X_{1}\cap Y_{1} ) \otimes_{a} ( X_{2}\cap Y_{2} ) .$$

Proof

It is clear that (X 1∩Y 1)⊗ a (X 2∩Y 2)⊂(X 1⊗ a X 2)∩(Y 1⊗ a Y 2). It remains to show that v∈X 1⊗ a X 2 and v∈Y 1⊗ a Y 2 imply that v∈(X 1∩Y 1)⊗ a (X 2∩Y 2). By assumption, v has the two representations

$$\mathbf{v}=\sum_{\nu=1}^{n_{x}}x_{1}^{(\nu)}\otimes x_{2}^{(\nu)}=\sum_{\nu=1}^{n_{y}}y_{1}^{(\nu)}\otimes y_{2}^{(\nu)}\quad\text{with }x_{i}^{(\nu)}\in X_{i},\ y_{i}^{(\nu)}\in Y_{i}.$$

From Lemma 2.4, we may assume that \(\{x_{1}^{(\nu )}\}\), \(\{x_{2}^{(\nu)}\}\), \(\{y_{1}^{(\nu)}\}\), \(\{y_{2}^{(\nu)}\}\) are linearly independent. The dual functionals \(\xi_{2}^{(\nu)}\in X_{2}^{\prime}\) of \(\{x_{2}^{(\nu)}\}\) satisfy \(\xi_{2}^{(\nu)}(x_{2}^{(\mu)})=\delta_{\nu\mu}\) (cf. Remark 2.1). Application of \(\mathit{id}_{1}\otimes\xi_{2}^{(\mu)}\) to the first representation yields \((\mathit{id}_{1}\otimes\xi_{2}^{(\mu )}) (\mathbf{v})=x_{1}^{(\mu)}\), while the second representation leads to \(\sum_{\nu=1}^{n_{y}}\xi_{2}^{(\mu)}(y_{2}^{(\nu)}) y_{1}^{(\nu)}\). The resulting equation \(x_{1}^{(\mu)}=\sum_{\nu=1}^{n_{y}}\xi_{2}^{(\mu)} (y_{2}^{(\nu)}) y_{1}^{(\nu)}\) shows that \(x_{1}^{(\mu)}\in Y_{1}\) for all μ. Using the dual functionals \(\xi_{1}^{(\mu)}\) of \(\{x_{1}^{(\nu)}\}\) and applying \(\xi_{1}^{(\mu)}\otimes \mathit{id}_{2}\) to v prove \(x_{2}^{(\mu)}\in Y_{2}\). Hence \(x_{i}^{(\nu)}\in X_{i}\cap Y_{i}\) is shown, i.e., v∈(X 1∩Y 1)⊗ a (X 2∩Y 2). □

Definition 2.12

For a tensor v∈V 1⊗ a V 2, the minimal subspaces are denoted by \(U_{j}^{\min}(\mathbf{v})\) (j=1,2) defined by the property that v∈U 1⊗ a U 2 implies \(U_{j}^{\min}(\mathbf{v})\subset U_{j}\) (j=1,2), while \(\mathbf{v}\in U_{1}^{\min}(\mathbf{v})\otimes_{a}U_{2}^{\min}(\mathbf{v})\).

For each v, we introduce the family \(\mathcal{F}(\mathbf{v})\) as the set of pairs (U 1,U 2) of subspaces with the property v∈U 1⊗ a U 2⊂V 1⊗ a V 2. By Lemma 2.11, we can write

$$U_{1}^{\min}(\mathbf{v}):=\bigcap_{(U_{1},U_{2})\in\mathcal{F}(\mathbf{v})}U_{1}\quad\text{and}\quad U_{2}^{\min}(\mathbf{v}):=\bigcap_{(U_{1},U_{2})\in\mathcal{F}(\mathbf{v})}U_{2}.$$

Hereby, the existence and uniqueness of minimal subspaces \(U_{j}^{\min}(\mathbf{v})\) are guaranteed.

Lemma 2.13

Assume that \(\mathbf{v}=\sum_{\nu=1}^{r}v_{1}^{(\nu)}\otimes w_{2}^{(\nu)}\) with linearly independent \(\{v_{1}^{(\nu )}:1\leq\nu\leq r\}\) and \(\{w_{2}^{(\nu)}:1\leq\nu\leq r\}\). Then these vectors span the minimal spaces:

$$U_{1}^{\min}(\mathbf{v})=\operatorname{span} \bigl\{ v_{1}^{(\nu)}:1\leq\nu\leq r \bigr\} \quad\text{and}\quad U_{2}^{\min}(\mathbf{v})=\operatorname{span} \bigl\{ w_{2}^{(\nu)}:1\leq\nu\leq r \bigr\} .$$

Proof

Apply the proof of Lemma 2.11 to \(X_{1}=\operatorname*{span}\{v_{1}^{(\nu)}:1\leq\nu\leq r\}\), \(X_{2} =\operatorname*{span}\{w_{2}^{(\nu)}:1\leq\nu\leq r\}\) and \(Y_{j}=U_{j}^{\min }(\mathbf{v})\). It shows that \(X_{j}\subset U_{j}^{\min}(\mathbf{v})\). Since a strict inclusion is excluded by the minimality of \(U_{j}^{\min}(\mathbf{v})\), \(X_{j}=U_{j}^{\min}(\mathbf{v})\) proves the assertion. □

Proposition 2.14

Let v∈V 1⊗ a V 2. Then the minimal subspaces \(U_{1}^{\min}(\mathbf{v})\) and \(U_{2}^{\min}(\mathbf{v})\) are characterised by

$$U_{1}^{\min}(\mathbf{v})= \bigl\{ ( \mathit{id}_{1}\otimes\varphi_{2} ) (\mathbf{v}):\varphi_{2}\in V_{2}^{\prime} \bigr\} , \tag{2.9a}$$

$$U_{2}^{\min}(\mathbf{v})= \bigl\{ ( \varphi_{1}\otimes \mathit{id}_{2} ) (\mathbf{v}):\varphi_{1}\in V_{1}^{\prime} \bigr\} . \tag{2.9b}$$

Proof

We use the characterisation from Lemma 2.13, \(\mathbf{v}=\sum_{\nu=1}^{r}v_{1}^{(\nu)}\otimes w_{2}^{(\nu)}\) with linearly independent \(\{v_{1}^{(\nu)}:1\leq\nu\leq r\}\) and \(\{w_{2}^{(\nu)}:1\leq \nu\leq r\}\) spanning the minimal subspaces. Then for each \(\varphi_{2}\in V_{2}^{\prime}\) we have

$$ ( \mathit{id}_{1}\otimes\varphi_{2} ) (\mathbf{v})=\sum_{\nu=1}^{r}\varphi_{2} \bigl( w_{2}^{(\nu)} \bigr) v_{1}^{(\nu)}\in U_{1}^{\min}(\mathbf{v}).$$

From the proof of Lemma 2.11, there are mappings (id 1⊗φ 2) yielding \(v_{1}^{(\mu)}\) for any 1≤μ≤r; thus,

$$ \bigl\{ ( \mathit{id}_{1}\otimes\varphi_{2} ) (\mathbf{v}):\varphi_{2}\in V_{2}^{\prime} \bigr\} =\operatorname{span} \bigl\{ v_{1}^{(\nu)}:1\leq\nu\leq r \bigr\} =U_{1}^{\min}(\mathbf{v}).$$

Analogously, \(\{ ( \varphi_{1}\otimes \mathit{id}_{2} ) ( \mathbf{v} ) :\varphi_{1}\in V_{1}^{\prime} \} =U_{2}^{\min }(\mathbf{v})\) is shown, proving (2.9a) and (2.9b). □

For \(V_{1}=\mathbb{R}^{n_{1}}\) and \(V_{2}=\mathbb{R}^{n_{2}}\), when V 1⊗ a V 2 is isomorphic to matrices from \(\mathbb{R}^{n_{1}\times n_{2}}\), the characterisation (2.9a) may be interpreted as \(U_{1}^{\min}(\mathbf{v})=\operatorname{Col}\mathcal{M}_{1}(\mathbf{v})= \operatorname{span}\{\mathcal{M}_{1}(\mathbf{v})x:x\in V_{2}\}\) (\(\mathcal{M}_{1}(\mathbf{v})\) is the matrix corresponding to \(\mathbf{v}\), cf. Definition 2.7). Similarly, (2.9b) becomes \(U_{2}^{\min}(\mathbf{v})=\operatorname{Col}\mathcal{M}_{1}(\mathbf{v})^{T}=\operatorname{Col}\mathcal{M}_{2}(\mathbf{v})\).
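
In this matrix setting, applying \(\mathit{id}_{1}\otimes\varphi_{2}\) amounts to a matrix-vector product, which the following sketch illustrates. It assumes that a functional \(\varphi_{2}\) on \(\mathbb{R}^{n_{2}}\) is identified with its coefficient vector; the name `apply_functional` and the sample data are hypothetical.

```python
def apply_functional(matrix, phi):
    """(id_1 (x) phi_2)(v) for the matrix M_1(v): the vector M_1(v) * phi."""
    return [sum(m * p for m, p in zip(row, phi)) for row in matrix]

# v = (1, 2, 3) (x) (1, 2) has rank 1, so U_1^min(v) = span{(1, 2, 3)}.
M = [[1, 2],
     [2, 4],
     [3, 6]]

# Images under three different functionals phi_2.
images = [apply_functional(M, phi) for phi in ([1, 0], [0, 1], [5, -7])]
```

Every image is a multiple of (1,2,3), in line with the description of \(U_{1}^{\min}(\mathbf{v})\) as the column space of \(\mathcal{M}_{1}(\mathbf{v})\).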

Corollary 2.15

The following statements hold.

(a) Once \(U_{1}^{\min}(\mathbf{v})\) and \(U_{2}^{\min}(\mathbf{v})\) are given, one may select any basis {v (ν):1≤ν≤r} of \(U_{1}^{\min}(\mathbf{v})\) [respectively, {w (ν):1≤ν≤r} of \(U_{2}^{\min}(\mathbf{v})\)] and find a representation (2.4) with these v (ν) [respectively, w (ν)] and some other basis of \(U_{2}^{\min}(\mathbf{v})\) [respectively, \(U_{1}^{\min}(\mathbf{v})\)]. Otherwise, if {v (ν):1≤ν≤r} is a basis of a subspace \(U_{1}\supsetneqq U_{1}^{\min}(\mathbf{v})\) [respectively, {w (ν):1≤ν≤r} of \(U_{2}\supsetneqq U_{2}^{\min}(\mathbf{v})\)], a representation of the form (2.4) still exists, but the accompanying vectors w (ν) [respectively, v (ν)] are linearly dependent.

(b) If we fix a basis {w (ν):1≤ν≤r} of a subspace U 2⊂V 2, there are mappings \(\{\varphi^{(\nu)}:1\leq\nu\leq r\}\subset U_{2}^{\prime}\) such that \((\mathit{id}_{1}\otimes\varphi^{(\nu )})(\mathbf{w})\in U_{1}^{\min}(\mathbf{w})\) and

$$\mathbf{w}=\sum_{\nu=1}^{r} \bigl(\mathit{id}_{1}\otimes\varphi^{(\nu)}\bigr) (\mathbf{w})\otimes w^{(\nu)}\quad\text{\textit{for all}}\ \mathbf{w}\in V_{1} \otimes_{a}U_{2}. $$

Proof

For statement (a) consider the representation of v by (2.4) with bases \(\{v_{i}\}_{i=1}^{r}\) and \(\{w_{i}\}_{i=1}^{r}\). Applying a basis transformation \(\{v_{i}\}_{i=1}^{r}\mapsto\{\hat{v}_{i}\}_{i=1}^{r}\), we obtain \(\mathbf{v}=\sum_{i=1}^{r}\hat{v}_{i}\otimes\hat{w}_{i}\) with another basis \(\{\hat{w}_{i}\}_{i=1}^{r}\).

To prove (b) take a basis \(\{\varphi_{2}^{(\nu)}:1\leq\nu\leq r\}\) of \(U_{2}^{\prime}\) dual to \(\{w_{2}^{(\nu)}:1\leq\nu\leq r\}\) and set \(\{\mathit{id}_{1}\otimes\varphi_{2}^{(\nu)}:1\leq\nu\leq r\}\subset L(V_{1}\otimes_{a}U_{2},V_{1})\). By statement (a), any \(\mathbf{w}\in V_{1}\otimes_{a}U_{2}\) has a representation given by \(\mathbf{w}=\sum_{\mu=1}^{r}v_{1}^{(\mu )}\otimes w_{2}^{(\mu)}\), and here the vectors \(v_{1}^{(\mu)}\) (1≤μ≤r) span \(U_{1}^{\min}(\mathbf{w})\). Then

$$ \bigl( \mathit{id}_{1}\otimes\varphi_{2}^{(\nu)} \bigr) (\mathbf{w})=\sum_{\mu=1}^{r}\varphi_{2}^{(\nu)} \bigl( w_{2}^{(\mu)} \bigr) v_{1}^{(\mu)}=v_{1}^{(\nu)}\in U_{1}^{\min}(\mathbf{w})$$

holds, proving the assertion. □

2.2.2 Definition in the General Case

In the following, we assume that d≥3, and generalise some of the features of tensors of second order.

By Lemma 2.3, we may assume \(\mathbf{v}\in\mathbf{U}:={}_{a}\bigotimes_{j=1}^{d}U_{j}\) with finite-dimensional subspaces U j ⊂V j . The lattice structure from Lemma 2.11 generalises to higher order.

Lemma 2.16

Assume that X i and Y i are subspaces of V i for i=1,…,d. Then the identity

$$ \Biggl( {}_{a}\bigotimes_{j=1}^{d}X_{j} \Biggr) \cap \Biggl( {}_{a}\bigotimes_{j=1}^{d}Y_{j} \Biggr) ={}_{a}\bigotimes_{j=1}^{d} ( X_{j}\cap Y_{j} ) $$

holds.

Proof

For the start of the induction at d=2 use Lemma 2.11. Assume that the assertion holds for d−1 and write \({}_{a}\bigotimes_{j=1}^{d}X_{j}\) as X 1⊗ a X [1] with \(X_{[1]}:={}_{a}\bigotimes_{j=2}^{d}X_{j}\). Similarly, use \({}_{a}\bigotimes_{j=1}^{d}Y_{j} =Y_{1}\otimes_{a} Y_{[1]}\). Lemma 2.11 states that v∈(X 1∩Y 1)⊗ a (X [1]∩Y [1]). By the induction hypothesis, \(X_{[1]}\cap Y_{[1]}={}_{a}\bigotimes_{j=2}^{d} ( X_{j}\cap Y_{j} )\) is valid, proving the assertion. □

Again, the minimal subspaces \(U_{j}^{\min}(\mathbf{v})\) are given by the intersection of all U j satisfying \(\mathbf{v}\in{}_{a}\bigotimes_{j=1}^{d}U_{j}\).

The algebraic characterisation of \(U_{j}^{\min}(\mathbf{v})\) is similar to that for d=2. To this end, we introduce the following two subspaces (recall (2.7e)):

$$U_{j}^{\mathrm{I}}(\mathbf{v}):= \Biggl\{ ( \mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]} ) (\mathbf{v}):\boldsymbol{\varphi}_{[j]}\in{}_{a}\bigotimes_{k\neq j}V_{k}^{\prime} \Biggr\} , \tag{2.10a}$$

$$U_{j}^{\mathrm{II}}(\mathbf{v}):= \Biggl\{ ( \mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]} ) (\mathbf{v}):\boldsymbol{\varphi}_{[j]}\in \Biggl( {}_{a}\bigotimes_{k\neq j}V_{k} \Biggr) ^{\prime} \Biggr\} . \tag{2.10b}$$

In the case of a normed space V j , we may consider the subspace

$$U_{j}^{\mathrm{III}}(\mathbf{v}):= \Biggl\{ ( \mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]} ) (\mathbf{v}):\boldsymbol{\varphi}_{[j]}\in{}_{a}\bigotimes_{k\neq j}V_{k}^{\ast} \Biggr\} . \tag{2.10c}$$

Finally, if V [j]= a ⨂ k≠j V k is a normed space, we can define

$$U_{j}^{\mathrm{IV}}(\mathbf{v}):= \bigl\{ ( \mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]} ) (\mathbf{v}):\boldsymbol{\varphi}_{[j]}\in\mathbf{V}_{[j]}^{\ast} \bigr\} . \tag{2.10d}$$

Note that, in general, the four spaces \({}_{a}\bigotimes_{k\neq j}V_{k}^{\prime}\), ( a ⨂ k≠j V k )′, \({}_{a}\bigotimes_{k\neq j}V_{k}^{\ast}\), \(\mathbf{V}_{[j]}^{\ast}\) may differ.

Theorem 2.17

For any \(\mathbf{v}\in\mathbf{V}={}_{a}\bigotimes_{j=1}^{d}V_{j}\), there exist minimal subspaces \(U_{j}^{\min }(\mathbf{v})\) (1≤j≤d), whose algebraic characterisation is given by

$$U_{j}^{\min}(\mathbf{v})=U_{j}^{\mathrm{I}}(\mathbf{v})=U_{j}^{\mathrm{II}}(\mathbf{v}).$$

Furthermore, if V j and V [j]= a ⨂ k≠j V k are normed spaces for 1≤j≤d, then

$$U_{j}^{\min}(\mathbf{v})=U_{j}^{\mathrm{III}}(\mathbf{v})=U_{j}^{\mathrm{IV}}(\mathbf{v}).$$

Moreover, \(\mathbf{v}\in{}_{a}\bigotimes_{j=1}^{d}U_{j}^{\min }(\mathbf{v})\) and \(\dim(U_{j}^{\min}(\mathbf{v}))=\operatorname{rank}_{j}(\mathbf{v})\) hold with \(\operatorname{rank}_{j}\) from (2.8).

Proof

Since the mappings id j ⊗φ [j] are applied to \(\mathbf{v}\in\mathbf{U}:={}_{a}\bigotimes_{j=1}^{d}U_{j}^{\min }(\mathbf{v})\), only the restrictions of φ [j] to \({}_{a}\bigotimes_{k\neq j}U_{k}^{\min}(\mathbf{v})\) are of interest; they belong to \({}_{a}\bigotimes_{k\neq j}U_{k}^{\min}(\mathbf{v})^{\prime}\) and \(({}_{a}\bigotimes_{k\neq j}U_{k}^{\min}(\mathbf{v}))^{\prime}\), respectively. Since the subspace \(U_{k}^{\min}(\mathbf{v})\), for all k, has finite dimension, Remark 2.6c states that \({}_{a}\bigotimes_{k\neq j}U_{k}^{\min}(\mathbf{v})^{\prime} =({}_{a}\bigotimes_{k\neq j}U_{k}^{\min}(\mathbf{v}))^{\prime}\). This proves \(U_{j}^{\mathrm{I}}(\mathbf{v})=U_{j}^{\mathrm{II}}(\mathbf{v})\).

Again, the finite dimension of \(U_{k}^{\min}(\mathbf{v})\) implies \({}_{a}\bigotimes_{k\neq j}U_{k}^{\min}(\mathbf{v})^{\prime} ={}_{a}\bigotimes_{k\neq j}U_{k}^{\min}(\mathbf{v})^{\ast}\). By the Hahn–Banach theorem, every functional in \(U_{k}^{\min }(\mathbf{v})^{\ast}\) can be extended to a functional in \(V_{k}^{\ast}\). This proves \(U_{j}^{\mathrm{III}}(\mathbf{v})\supset U_{j}^{\mathrm{I}}(\mathbf{v})\). The trivial inclusion \(U_{j}^{\mathrm{III}}(\mathbf{v})\subset U_{j}^{\mathrm{I}}(\mathbf{v})\) proves equality.

Next, we show that \(U_{j}^{\mathrm{II}}(\mathbf{v})=U_{j}^{\mathrm{IV}}(\mathbf{v})\). The inclusion ( a ⨂ k≠j V k )∗⊂( a ⨂ k≠j V k )′ implies \(U_{j}^{\mathrm{IV}}(\mathbf{v})\subset U_{j}^{\mathrm{II}}(\mathbf{v})\). Consider \(v_{j}:=(\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]})(\mathbf{v})\in U_{j}^{\mathrm{II}}(\mathbf{v})\) for some φ [j]∈( a ⨂ k≠j V k )′. Since \(\mathbf{v}\in{}_{a}\bigotimes_{j=1}^{d}U_{j}^{\min}(\mathbf{v})\), we may restrict φ [j] to \(\boldsymbol{\varphi}_{[j]}\in ({}_{a}\bigotimes_{k\neq j}U_{k}^{\min}(\mathbf{v}))^{\prime}\). Since \({}_{a}\bigotimes_{k\neq j}U_{k}^{\min}(\mathbf{v})\) is a finite-dimensional subspace of the normed space a ⨂ k≠j V k , by the Hahn–Banach theorem, the algebraic functional φ [j] can be extended to a ⨂ k≠j V k such that \(\overline {\boldsymbol{\varphi}_{[j]}}\in({}_{a}\bigotimes_{k\neq j}V_{k})^{\ast }\), and by \(v_{j}=(\mathit{id}_{j}\otimes\overline{\boldsymbol{\varphi}_{[j]}})(\mathbf{v})\in U_{j}^{\mathrm{IV}}(\mathbf{v})\) the opposite inclusion \(U_{j}^{\mathrm{II}}(\mathbf{v})\subset U_{j}^{\mathrm{IV}}(\mathbf{v})\) follows.

To prove that these spaces coincide with \(U_{j}^{\min}(\mathbf{v})\), we apply the matricisation from Sect. 2.1.2. The isomorphism \(\mathcal{M}_{j}\) from Definition 2.7 maps \({}_{a}\bigotimes_{k=1}^{d}V_{k}\) into V j ⊗ a V [j] (cf. (2.5a)). Proposition 2.14 states that \(U_{j}^{\min}(\mathbf{v})=U_{j}^{\mathrm{II}}(\mathbf{v})\) is the minimal subspace. So far, we have proved \(\mathbf{v}\in U_{j}^{\min}(\mathbf{v})\otimes_{a}V_{[j]}\). From Lemma 2.16, the intersection over all 1≤j≤d yields \(\mathbf{v}\in{}_{a}\bigotimes_{j=1}^{d}U_{j}^{\min}(\mathbf{v})\). □

We remark that for d≥3, in general, the dimensions of \(U_{j}^{\min }(\mathbf{v})\) may be different.
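
A small example with differing dimensions can be checked by computing the ranks of the matricisations. The sketch below assumes \(V_{j}=\mathbb{R}^{2}\), 0-based indices, and a sparse dictionary representation of the tensor; `matricise` and `matrix_rank` are hypothetical helper names, and the rank is obtained by exact Gaussian elimination over the rationals.

```python
from fractions import Fraction
from itertools import product

def matricise(entries, dims, j):
    """Unfold a sparse tensor (dict: index tuple -> value) along direction j."""
    other = [range(n) for k, n in enumerate(dims) if k != j]
    return [[Fraction(entries.get(rest[:j] + (ij,) + rest[j:], 0))
             for rest in product(*other)]
            for ij in range(dims[j])]

def matrix_rank(rows):
    """Rank of a matrix by exact Gaussian elimination over the rationals."""
    rows = [row[:] for row in rows]
    rank = 0
    for col in range(len(rows[0])):
        pivot = next((r for r in range(rank, len(rows)) if rows[r][col] != 0), None)
        if pivot is None:
            continue                              # no pivot in this column
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        for r in range(rank + 1, len(rows)):
            factor = rows[r][col] / rows[rank][col]
            rows[r] = [x - factor * y for x, y in zip(rows[r], rows[rank])]
        rank += 1
        if rank == len(rows):
            break
    return rank

# v = e1 (x) e1 (x) e1 + e1 (x) e2 (x) e2 in R^2 (x) R^2 (x) R^2.
v = {(0, 0, 0): 1, (0, 1, 1): 1}
ranks = [matrix_rank(matricise(v, (2, 2, 2), j)) for j in range(3)]
```

For this tensor the first direction only involves e1, so \(\operatorname{rank}_{1}(\mathbf{v})=1\), whereas the second and third directions both need e1 and e2.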

Since \(U_{j}^{\min}(\mathbf{v})\) is a subspace of V j generated by elementary tensors from

for 1≤j≤d, we can write

and, if V k is a normed space for 1≤k≤d, we can also write it as

for 1≤j≤d.

2.2.3 Hierarchies of Minimal Subspaces

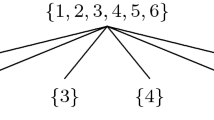

We have introduced the minimal subspace \(U_{j}^{\min}(\mathbf{v})\subset V_{j}\) for a singleton {j}⊂D:={1,2,…,d}. Instead we may consider general pairwise disjoint and non-empty subsets α i ⊂D. For instance, let \(\mathbf{v}\in{}_{a}\bigotimes_{j\in D}V_{j} =\mathbf{V}_{\alpha_{1}}\otimes\mathbf{V}_{\alpha_{2}}\otimes\mathbf{V}_{\alpha_{3}}\), where α 1={1,2}, α 2={3,4}, and α 3={5,6,7}. Then we can conclude that there are minimal subspaces \(\mathbf{U}_{\alpha_{\nu}}^{\min}(\mathbf{v})\) for ν=1,2,3, such that \(\mathbf{v}\in{}_{a}\bigotimes_{\nu=1}^{3}\mathbf{U}_{\alpha_{\nu}}^{\min}(\mathbf{v})\). The relation between \(U_{j}^{\min}(\mathbf{v})\) and \(\mathbf{U}_{\alpha_{\nu}}^{\min}(\mathbf{v})\) is as follows.

Proposition 2.18

Let v∈V= a ⨂ j∈D V j and ∅≠α⊂D. Then the minimal subspaces \(\mathbf{U}_{\alpha}^{\min}(\mathbf{v})\) and \(U_{j}^{\min}(\mathbf{v})\) for j∈α are related by

$$\mathbf{U}_{\alpha}^{\min}(\mathbf{v})\subset{}_{a}\bigotimes_{j\in\alpha}U_{j}^{\min}(\mathbf{v}).$$

Proof

We know that \(\mathbf{v}\in\mathbf{U}={}_{a}\bigotimes_{j\in D}U_{j}^{\min}(\mathbf{v})\). We may write \(\mathbf{U}=\mathbf{U}_{\alpha}\otimes_{a}\mathbf{U}_{\alpha^{c}}\), where α c=D∖α and \(\mathbf{U}_{\beta}:={}_{a}\bigotimes_{j\in\beta}U_{j}^{\min}(\mathbf{v})\) for any subset β⊂D. Thus, \(\mathbf{U}_{\alpha}^{\min}(\mathbf{v})\) must be contained in \(\mathbf{U}_{\alpha}={}_{a}\bigotimes_{j\in\alpha}U_{j}^{\min}(\mathbf{v})\). □
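
The inclusion \(\mathbf{U}_{\alpha}^{\min}(\mathbf{v})\subset{}_{a}\bigotimes_{j\in\alpha}U_{j}^{\min}(\mathbf{v})\) obtained in the proof can be strict. As an illustration (a hypothetical example, not taken from the source), let d=3, \(V_{j}=\mathbb{R}^{2}\) with unit vectors e 1, e 2, α={1,2}, and

$$\mathbf{v}= ( e_{1}\otimes e_{1}+e_{2}\otimes e_{2} ) \otimes e_{1}.$$

Then \(U_{1}^{\min}(\mathbf{v})=U_{2}^{\min}(\mathbf{v})=\mathbb{R}^{2}\), so that \(U_{1}^{\min}(\mathbf{v})\otimes_{a}U_{2}^{\min}(\mathbf{v})\) has dimension 4, whereas \(\mathbf{U}_{\alpha}^{\min}(\mathbf{v})=\operatorname{span}\{e_{1}\otimes e_{1}+e_{2}\otimes e_{2}\}\) has dimension 1.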

An obvious generalisation of the previous results is given below.

Corollary 2.19

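Let \(\mathbf{v}\in\mathbf{V}={}_{a}\bigotimes_{j\in D}V_{j}\). Assume that ∅≠α i ⊂D are pairwise disjoint for i=1,2,…,m. The minimal subspace for \(\alpha:=\bigcup_{i=1}^{m}\alpha_{i}\) satisfies

$$\mathbf{U}_{\alpha}^{\min}(\mathbf{v})\subset{}_{a}\bigotimes_{i=1}^{m}\mathbf{U}_{\alpha_{i}}^{\min}(\mathbf{v}).$$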

The algebraic characterisation of \(\mathbf{U}_{\alpha}^{\min}(\mathbf{v})\) is analogous to that given in Theorem 2.17. Formulae (2.10a), (2.10b) become

$$\mathbf{U}_{\alpha}^{\min}(\mathbf{v})= \Biggl\{ ( \mathit{id}_{\alpha}\otimes\boldsymbol{\varphi}_{\alpha^{c}} ) (\mathbf{v}):\boldsymbol{\varphi}_{\alpha^{c}}\in{}_{a}\bigotimes_{j\in\alpha^{c}}V_{j}^{\prime} \Biggr\} = \Biggl\{ ( \mathit{id}_{\alpha}\otimes\boldsymbol{\varphi}_{\alpha^{c}} ) (\mathbf{v}):\boldsymbol{\varphi}_{\alpha^{c}}\in \Biggl( {}_{a}\bigotimes_{j\in\alpha^{c}}V_{j} \Biggr) ^{\prime} \Biggr\} ,$$

where \(( \mathit{id}_{\alpha}\otimes\boldsymbol{\varphi}_{\alpha^{c}} ) (\otimes_{j=1}^{d}v^{(j)})= ( \boldsymbol{\varphi}_{\alpha^{c}}(\otimes_{j\in\alpha^{c}}v^{(j)}) ) \otimes_{k\in\alpha}v^{(k)}\). The analogues of (2.10c), (2.10d) apply as soon as norms are defined on V j and \({}_{a}\bigotimes_{j\in\alpha^{c}}V_{j}\).

3 Minimal Subspaces in a Banach Tensor Space

In this section we assume the existence of a norm, namely ∥⋅∥, defined on a tensor space V. More precisely, we introduce the following class of Banach spaces.

Definition 3.1

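We say that V ∥⋅∥ is a Banach tensor space if there exist an algebraic tensor space \(\mathbf{V}={}_{a}\bigotimes_{j=1}^{d}V_{j}\) and a norm ∥⋅∥ on V such that V ∥⋅∥ is the completion of V with respect to ∥⋅∥, i.e.,

$$\mathbf{V}_{\Vert \cdot \Vert }:={}_{\Vert \cdot \Vert }\bigotimes_{j=1}^{d}V_{j}=\overline{{}_{a}\bigotimes_{j=1}^{d}V_{j}}^{\Vert \cdot \Vert }.$$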

If V ∥⋅∥ is a Hilbert space, we will say that V ∥⋅∥ is a Hilbert tensor space.

Next, we give some examples of Banach and Hilbert tensor spaces.

Example 3.2

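For I j ⊂ℝ (1≤j≤d) and 1≤p<∞, the Sobolev space H N,p(I j ) consists of all univariate functions f from L p(I j ) with bounded norm (cf. Footnote 3)

$$\Vert f\Vert _{N,p;I_{j}}:= \Biggl( \sum_{n=0}^{N}\int_{I_{j}}\bigl\vert \partial^{n}f\bigr\vert ^{p}\,\mathrm{d}x \Biggr) ^{1/p},$$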

whereas the space H N,p(I) of d-variate functions on I=I 1×I 2×…×I d ⊂ℝd is endowed with the norm

$$\Vert f\Vert _{N,p}:= \Biggl( \sum_{0\leq\vert \mathbf{n}\vert \leq N}\int_{\mathbf{I}}\bigl\vert \partial^{\mathbf{n}}f\bigr\vert ^{p}\,\mathrm{d}\mathbf{x} \Biggr) ^{1/p},$$

where \(\mathbf{n}\in\mathbb{N}_{0}^{d}\) is a multi-index of length \(\vert \mathbf{n}\vert :=\sum_{j=1}^{d}n_{j}\). It is well known that H N,p(I j ) and H N,p(I) are separable Banach spaces, which are reflexive for 1<p<∞. Moreover, for p=2, the Sobolev spaces H N(I j ):=H N,2(I j ) and H N(I):=H N,2(I) are Hilbert spaces. As a first example,

$$H^{N,p}(\mathbf{I})={}_{\Vert \cdot \Vert _{N,p}}\bigotimes_{j=1}^{d}H^{N,p}(I_{j})$$

is a Banach tensor space. Examples of Hilbert tensor spaces are

$$L^{2}(\mathbf{I})={}_{\Vert \cdot \Vert _{0,2}}\bigotimes_{j=1}^{d}L^{2}(I_{j})\quad\text{and}\quad H^{N}(\mathbf{I})={}_{\Vert \cdot \Vert _{N,2}}\bigotimes_{j=1}^{d}H^{N}(I_{j}).$$

We recall that for the set of norms over a given vector space V, we can define a partial ordering ∥⋅∥1≲∥⋅∥2, if there exists a constant C such that ∥v∥1≤C∥v∥2 for all v∈V.

Given a vector space V, its completion with respect to a norm ∥⋅∥ yields a Banach space which we denote by \(V_{\Vert \cdot \Vert }:=\overline{V}^{\Vert \cdot \Vert }\). Note that ∥⋅∥1≲∥⋅∥2 implies that \(V_{\Vert \cdot \Vert _{2}}\subset V_{\Vert \cdot \Vert _{1}}\).

3.1 Tensor Product of Banach Spaces

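Let \(\Vert \cdot \Vert _{j}\), 1≤j≤d, be the norms of the vector spaces \(V_{j}\) appearing in \(\mathbf{V}={}_{a}\bigotimes _{j=1}^{d}V_{j}\). By ∥⋅∥ we denote the norm on the tensor space \(\mathbf{V}\). Note that ∥⋅∥ is not determined by the norms \(\Vert \cdot \Vert _{j}\), but there are relations which are ‘reasonable’.

Any norm ∥⋅∥ on \({}_{a}\bigotimes_{j=1}^{d}V_{j}\) satisfying

$$ \Biggl\Vert \bigotimes_{j=1}^{d}v^{(j)}\Biggr\Vert =\prod_{j=1}^{d}\bigl\Vert v^{(j)}\bigr\Vert _{j}\quad\text{for all }v^{(j)}\in V_{j}\ (1\leq j\leq d) $$ (3.2)

is called a cross norm. As usual, the dual norm to ∥⋅∥ is denoted by \(\Vert \cdot \Vert ^{\ast}\). If ∥⋅∥ is a cross norm and \(\Vert \cdot \Vert ^{\ast}\) is also a cross norm on \({}_{a}\bigotimes_{j=1}^{d}V_{j}^{\ast}\), i.e.,

$$ \Biggl\Vert \bigotimes_{j=1}^{d}\varphi^{(j)}\Biggr\Vert ^{\ast}=\prod_{j=1}^{d}\bigl\Vert \varphi^{(j)}\bigr\Vert _{j}^{\ast}\quad\text{for all }\varphi^{(j)}\in V_{j}^{\ast}\ (1\leq j\leq d), $$ (3.3)

then ∥⋅∥ is called a reasonable cross norm.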
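The cross norm property can be checked numerically in the simplest setting. The following Python sketch is an illustration under our own assumptions and is not part of the original development: we take d=2 with \(V_{1}=\mathbb{R}^{m}\) and \(V_{2}=\mathbb{R}^{n}\), both carrying the Euclidean norm, identify the elementary tensor \(u\otimes v\) with the matrix of entries \(u_{i}v_{j}\), and use the Frobenius norm as the tensor norm. All function names are hypothetical.

```python
import math

def outer(u, v):
    """Elementary tensor of u and v, stored as the matrix with entries u[i] * v[j]."""
    return [[ui * vj for vj in v] for ui in u]

def euclid(u):
    """Euclidean norm of a vector."""
    return math.sqrt(sum(x * x for x in u))

def frobenius(m):
    """Frobenius norm of a matrix (the norm of the induced scalar product)."""
    return math.sqrt(sum(x * x for row in m for x in row))

def cross_norm_defect(u, v):
    """Absolute difference between the norm of the elementary tensor and
    the product of the factor norms; zero up to rounding for a cross norm."""
    return abs(frobenius(outer(u, v)) - euclid(u) * euclid(v))

print(cross_norm_defect([3.0, 4.0], [1.0, 2.0, 2.0]))
```

For this choice of norms the defect vanishes up to rounding for every pair of vectors, since the squared Frobenius norm of the outer product factorises into the two squared Euclidean norms.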

Remark 3.3

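Equation (3.2) implies the inequality \(\Vert\bigotimes_{j=1}^{d}v^{(j)}\Vert\lesssim\prod_{j=1}^{d}\Vert v^{(j)}\Vert_{j}\), which is equivalent to the continuity of the tensor product mapping

$$ \otimes:\mathop{\times}_{j=1}^{d} ( V_{j},\Vert \cdot \Vert _{j} ) \longrightarrow\Biggl( {}_{a}\bigotimes_{j=1}^{d}V_{j},\Vert \cdot \Vert \Biggr) $$ (3.4)

given by \(\otimes ( (v_{1},\ldots,v_{d}) ) =\otimes_{j=1}^{d}v_{j}\).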

By standard arguments, continuity of the tensor product implies the following result.

Lemma 3.4

Let \(V_{j,0}\) be dense in \((V_{j},\Vert \cdot \Vert _{j})\) for 1≤j≤d. Assume (3.4) to be continuous for some norm ∥⋅∥ defined on \(\mathbf{V}={}_{a}\bigotimes_{j=1}^{d}V_{j}\). Then \({}_{a}\bigotimes_{j=1}^{d}V_{j,0}\) is dense in \(\mathbf{V}\), so that \(\overline{_{a}\bigotimes_{j=1}^{d}V_{j,0}}^{\Vert \cdot \Vert }=\mathbf{V}_{\Vert \cdot \Vert }\).

Example 3.5

It is well known that the norm \(\Vert \cdot \Vert _{0,2}\) is a reasonable cross norm on \({}_{a}\bigotimes_{j=1}^{d}L^{2}(I_{j}) \), whereas \(\Vert \cdot \Vert _{N,2}\) for N≥1 is not a reasonable cross norm on \({}_{a}\bigotimes_{j=1}^{d}H^{N}(I_{j}) \) (cf. Example 3.2).

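Note that any functional \(\boldsymbol{\varphi}=\otimes_{j=1}^{d}\varphi_{j}\in{}_{a}\bigotimes_{j=1}^{d}V_{j}^{\ast}\) is also a linear map \({}_{a}\bigotimes_{j=1}^{d}V_{j} \rightarrow\mathbb{R}\), which is defined for elementary tensors by

$$ \boldsymbol{\varphi}\Biggl( \bigotimes_{j=1}^{d}v^{(j)}\Biggr) =\prod_{j=1}^{d}\varphi_{j}\bigl( v^{(j)}\bigr) . $$

Thus, \({}_{a}\bigotimes_{j=1}^{d}V_{j}^{\ast} \subset({}_{a}\bigotimes_{j=1}^{d}V_{j})^{\prime}\). If ∥⋅∥ is a reasonable cross norm, then by (3.3) the map

$$ \otimes:\mathop{\times}_{j=1}^{d} ( V_{j}^{\ast},\Vert \cdot \Vert _{j}^{\ast} ) \longrightarrow\Biggl( {}_{a}\bigotimes_{j=1}^{d}V_{j}^{\ast},\Vert \cdot \Vert ^{\ast}\Biggr) $$

is also continuous. Consequently, \({}_{a}\bigotimes_{j=1}^{d}V_{j}^{\ast}\subset({}_{a}\bigotimes_{j=1}^{d}V_{j} )^{\ast}\).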

Grothendieck [13] named the following norm ∥⋅∥∨ the injective norm.

Definition 3.6

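Let \(V_{i}\) be a Banach space with norm \(\Vert \cdot \Vert _{i}\) for 1≤i≤d. Then for \(\mathbf{v}\in\mathbf{V}={}_{a} \bigotimes_{j=1}^{d}V_{j}\) define \(\Vert \cdot \Vert _{\vee}\) by

$$ \Vert \mathbf{v}\Vert _{\vee}:=\sup \Biggl\{ \frac{\vert ( \varphi_{1}\otimes\varphi_{2}\otimes\cdots\otimes\varphi_{d} ) (\mathbf{v})\vert }{\prod_{j=1}^{d}\Vert \varphi_{j}\Vert _{j}^{\ast}}:0\neq\varphi_{j}\in V_{j}^{\ast},\ 1\leq j\leq d\Biggr\} . $$ (3.5)

It is well known that the injective norm is a reasonable cross norm (see Lemma 1.6 in [20]). Further properties are given by the next proposition.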
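For d=2 and Euclidean factor spaces, the supremum defining the injective norm runs over pairs of unit vectors, so the injective norm of a matrix is its largest singular value. The following Python sketch approximates this supremum by alternating maximisation (a power iteration). It is only an illustration under these assumptions; the function names, the iteration count and the restart strategy (one start per coordinate direction, so that at least one start has a component along a dominant singular vector) are our own choices.

```python
import math

def matvec(m, x):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]

def norm2(x):
    """Euclidean norm of a vector."""
    return math.sqrt(sum(t * t for t in x))

def injective_norm_2d(m, iters=200):
    """Approximate sup |phi1^T m phi2| over Euclidean unit vectors phi1, phi2."""
    mt = [list(col) for col in zip(*m)]       # transpose of m
    ncols = len(m[0])
    best = 0.0
    for k in range(ncols):                    # one start per coordinate direction
        phi2 = [1.0 if i == k else 0.0 for i in range(ncols)]
        value = 0.0
        for _ in range(iters):
            w = matvec(m, phi2)
            nw = norm2(w)
            if nw == 0.0:                     # this start lies in the kernel of m
                break
            phi1 = [t / nw for t in w]        # best phi1 for the current phi2
            z = matvec(mt, phi1)
            value = norm2(z)                  # equals phi1^T m phi2 for the new phi2
            phi2 = [t / value for t in z]     # best phi2 for the current phi1
        best = max(best, value)
    return best

print(injective_norm_2d([[3.0, 0.0], [0.0, 1.0]]))
```

On an elementary tensor the computed value agrees with the product of the Euclidean norms of the two factors, which is the cross norm property of the injective norm in this finite-dimensional model.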

Proposition 3.7

The following statements hold.

(a) The injective norm is the weakest reasonable cross norm on \(\mathbf{V}\); i.e., if ∥⋅∥ is a reasonable cross norm over \(\mathbf{V}\), then \(\Vert \cdot \Vert _{\vee}\lesssim\Vert \cdot \Vert \).

(b) For any norm ∥⋅∥ on \(\mathbf{V}\) satisfying \(\Vert \cdot \Vert _{\vee}\lesssim\Vert \cdot \Vert \), the inclusion \({}_{a}\bigotimes_{j=1}^{d}V_{j}^{\ast} \subset({}_{a}\bigotimes_{j=1}^{d}V_{j})^{\ast}\) holds.

Proof

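Statement (a) is a classical result (cf. [20], [14]). To prove (b), we use the fact that \(\Vert \cdot \Vert _{\vee}\lesssim\Vert \cdot \Vert \) implies \(\Vert \cdot \Vert _{\vee}^{\ast }\gtrsim \Vert \cdot \Vert ^{\ast}\) (see again [20], [14]). Then

$$ \Biggl\Vert \bigotimes_{j=1}^{d}\varphi_{j}\Biggr\Vert ^{\ast}\leq C\Biggl\Vert \bigotimes_{j=1}^{d}\varphi_{j}\Biggr\Vert _{\vee}^{\ast} $$

for some C>0, and the proof ends using the fact that \(\Vert \cdot \Vert _{\vee}^{\ast}\) is also a cross norm, so that the right-hand side equals \(C\prod_{j=1}^{d}\Vert \varphi_{j}\Vert _{j}^{\ast}<\infty\). □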

3.2 Minimal Subspaces in a Banach Tensor Space

Let \(\mathbf{V}\) be a tensor product of Banach spaces \((V_{i},\Vert \cdot \Vert _{i})\) for 1≤i≤d. Then, considering the injective norm on \(\mathbf{V}_{[j]}\) for 1≤j≤d, for each \(\mathbf{v}\in\mathbf{V}\), we conclude from Theorem 2.17 that (see footnote 4)

(cf. (2.10a)–(2.10d)). Assume that the norm ∥⋅∥ on \(\mathbf{V}\) satisfies

$$ \Vert \cdot \Vert _{\vee}\lesssim\Vert \cdot \Vert \quad\text{on }\mathbf{V} $$ (3.6)

(cf. Proposition 3.7a). This assumption ensures that the Banach tensor space \(\mathbf{V}_{\Vert \cdot \Vert }\) is always a Banach subspace of the Banach tensor space \(\mathbf{V}_{\Vert \cdot \Vert _{\vee}}\). This fact allows us to extend the definition of minimal subspaces to a Banach tensor space \(\mathbf{V}_{\Vert \cdot \Vert }\) with a norm ∥⋅∥ satisfying (3.6). To this end, the following lemma will be useful.

Lemma 3.8

For 1≤i≤d, let \((V_{i},\Vert \cdot \Vert _{i})\) be Banach spaces. For fixed j∈{1,…,d} and a given \(\boldsymbol{\varphi}_{[j]}=\bigotimes_{k\neq j}\varphi_{k}\in{}_{a}\bigotimes_{k\neq j}V_{k}^{\ast}\), the map \(\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}\) belongs to \(\mathcal{L}( ( \mathbf{V},\Vert \cdot \Vert _{\vee} ) ,V_{j})\), i.e., \(\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}\) is continuous on \(( \mathbf{V},\Vert \cdot \Vert _{\vee} ) \). Hence, there exists a unique extension \(\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}}\in\mathcal{L}(\mathbf{V}_{\Vert \cdot \Vert _{\vee}},V_{j})\). Moreover, \(\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}}\in \mathcal{L} ( \mathbf{V}_{\Vert \cdot \Vert },V_{j} ) \) holds for any norm ∥⋅∥ on \(\mathbf{V}\) satisfying (3.6) with the operator norm

$$ \bigl\Vert \overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}}\bigr\Vert _{V_{j}\leftarrow\mathbf{V}_{\Vert \cdot \Vert }}\leq C\prod_{k\neq j}\Vert \varphi_{k}\Vert _{k}^{\ast}, $$ (3.7)

where the constant C is determined by the estimate in (3.6).

Proof

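Let \(\varphi_{j}\in V_{j}^{\ast}\) and use \(\varphi_{j}\circ(\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]})=\bigotimes_{k=1}^{d}\varphi_{k}\) (cf. (2.7f)). Hence, continuity follows from

$$ \bigl\vert \varphi_{j}\bigl( (\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]})(\mathbf{v})\bigr) \bigr\vert =\Biggl\vert \Biggl( \bigotimes_{k=1}^{d}\varphi_{k}\Biggr) (\mathbf{v})\Biggr\vert \leq\Biggl( \prod_{k=1}^{d}\Vert \varphi_{k}\Vert _{k}^{\ast}\Biggr) \Vert \mathbf{v}\Vert _{\vee}\leq C\Biggl( \prod_{k=1}^{d}\Vert \varphi_{k}\Vert _{k}^{\ast}\Biggr) \Vert \mathbf{v}\Vert . $$

The first inequality is the definition of the injective norm. The last inequality holds for any norm on \(\mathbf{V}\) satisfying ∥⋅∥≥(1/C)∥⋅∥∨ and proves (3.7) after taking the supremum over all \(\varphi_{j}\) with \(\Vert \varphi_{j}\Vert _{j}^{\ast}=1\). The statement about the extension \(\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}}\) is standard. □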

An immediate consequence of Lemma 3.8 and Theorem 2.17 is the following.

Corollary 3.9

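For 1≤i≤d, let \((V_{i},\Vert \cdot \Vert _{i})\) be a Banach space and assume that ∥⋅∥ is a norm on \(\mathbf{V}\) satisfying (3.6). Then for each algebraic tensor \(\mathbf{v}\in\mathbf{V}\) the representation

$$ U_{j}^{\min}(\mathbf{v})=\Biggl\{ ( \mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]} ) (\mathbf{v}):\boldsymbol{\varphi}_{[j]}\in{}_{a}\bigotimes_{k\neq j}V_{k}^{\ast}\Biggr\} $$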

holds for 1≤j≤d. Moreover, we can write

For the hierarchical format from [15] we need to extend the results to a minimal subspace in the tensor space \(\mathbf{V}_{\alpha}:=\bigotimes_{k\in\alpha}V_{k}\), where \(\alpha\subset D:=\{1,\ldots,d\}\) contains more than one index. Then the splitting \(V_{j}\otimes\mathbf{V}_{[j]}\) from above becomes \(\mathbf{V}_{\alpha}\otimes \mathbf{V}_{\alpha^{c}}\), where \(\mathbf{V}_{\alpha^{c}}:=\bigotimes _{k\in D\backslash\alpha}V_{k}\). The definition of, e.g., \(U_{j}^{\mathrm{I}}(\mathbf{v})\) in (2.10a) becomes

involving the identity \(\mathit{id}_{\alpha}\in L(\mathbf{V}_{\alpha},\mathbf{V}_{\alpha})\).

Remark 3.10

By arguments analogous to those above, we can show that

In particular, \(\overline{\mathit{id}_{\alpha}\otimes\boldsymbol{\varphi}_{\alpha^{c}}}\in\mathcal{L}(\mathbf{V}_{\Vert \cdot \Vert _{\vee}},V_{\alpha })\subset\mathcal{L} ( \mathbf{V}_{\Vert \cdot \Vert },V_{\alpha} ) \) holds.

3.3 Minimal Closed Subspaces in a Banach Tensor Space

3.3.1 Definitions

So far, \(U_{j}^{\min}(\mathbf{v})\) has been defined for algebraic tensors only. From \(\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}}\in\mathcal{L} ( \mathbf{V}_{\Vert \cdot \Vert },V_{j} ) \), we can extend the definition of \(U_{j}^{\min}(\mathbf{v})\) in Corollary 3.9 even to topological tensors \(\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert }\setminus\mathbf{V}\) as follows.

Definition 3.11

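For a given Banach tensor space \(\mathbf{V}_{\Vert \cdot \Vert }\) with a norm ∥⋅∥ satisfying (3.6) we define the set

$$ U_{j}^{\min}(\mathbf{v}):=\Biggl\{ ( \overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}} ) (\mathbf{v}):\boldsymbol{\varphi}_{[j]}\in{}_{a}\bigotimes_{k\neq j}V_{k}^{\ast}\Biggr\} $$

for each \(\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert }\) and 1≤j≤d.

Observe that \((\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}})(\mathbf{v})\) is well defined, because \(\overline{\mathit{id}_{j}\otimes \boldsymbol{\varphi}_{[j]}}\) is continuous and coincides with the standard definition when \(\mathbf{v}\in\mathbf{V}\). Thus, for each \(\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert }\) we can define its ‘minimal subspace’ by

$$ \mathbf{U}(\mathbf{v}):={}_{a}\bigotimes_{j=1}^{d}U_{j}^{\min}(\mathbf{v}). $$ (3.8a)

If we take into account the topological properties of \(\mathbf{V}_{\Vert \cdot \Vert }\), we may consider its closure with respect to the norm ∥⋅∥:

$$ \mathbf{U}_{\Vert \cdot \Vert }(\mathbf{v}):=\overline{\mathbf{U}(\mathbf{v})}^{\Vert \cdot \Vert }={}_{\Vert \cdot \Vert }\bigotimes_{j=1}^{d}\overline{U_{j}^{\min}(\mathbf{v})}^{\Vert \cdot \Vert _{j}}. $$ (3.8b)

The second identity is a consequence of Lemma 3.4. If \(\mathbf{v}\in\mathbf{V}\), the set \(U_{j}^{\min}(\mathbf{v})\) is a finite-dimensional subspace in \(V_{j}\) and therefore closed, i.e., \(\overline {U_{j}^{\min}(\mathbf{v})}^{\Vert \cdot \Vert _{j}}=U_{j}^{\min }(\mathbf{v})\). In the general case of \(\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert }\), the subspace \(U_{j}^{\min}(\mathbf{v})\) may fail to be closed.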
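In the finite-dimensional model case d=2, a tensor is a matrix, the images of the matrix under the maps \(\mathit{id}_{1}\otimes\varphi_{2}\) span its column space, and the images under \(\varphi_{1}\otimes\mathit{id}_{2}\) span its row space. The following Python sketch computes the dimensions of these two minimal subspaces with exact rational arithmetic. It is an illustration under these assumptions only; the function names are hypothetical.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix (list of rows) by exact Gaussian elimination."""
    a = [[Fraction(x) for x in r] for r in rows]
    rk = 0
    for col in range(len(a[0]) if a else 0):
        # first row at or below position rk with a nonzero entry in this column
        pivot = next((i for i in range(rk, len(a)) if a[i][col] != 0), None)
        if pivot is None:
            continue
        a[rk], a[pivot] = a[pivot], a[rk]
        for i in range(rk + 1, len(a)):
            f = a[i][col] / a[rk][col]
            a[i] = [x - f * y for x, y in zip(a[i], a[rk])]
        rk += 1
    return rk

def minimal_subspace_dims(m):
    """Dimensions of the two minimal subspaces of the order-2 tensor m."""
    columns = [list(c) for c in zip(*m)]   # images under id_1 (x) coordinate functional
    rows = [list(r) for r in m]            # images under coordinate functional (x) id_2
    return rank(columns), rank(rows)

print(minimal_subspace_dims([[1, 2], [2, 4], [3, 6]]))
```

Both dimensions coincide with the matrix rank, which is the familiar d=2 instance of the minimal subspace concept.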

Before we discuss the Banach subspace \(\mathbf{U}_{\Vert \cdot \Vert }(\mathbf{v})\) in Sect. 3.3.3, we first analyse the properties of the subspace \(U_{j}^{\min}(\mathbf{v})\). To this end we use the following definition.

Definition 3.12

We say that a sequence \((x_{n})_{n\in\mathbb{N}}\) in a Banach space X converges weakly to x∈X, if \(\lim\varphi(x_{n})=\varphi(x)\) for all \(\varphi\in X^{\ast}\). In this case, we write \(x_{n}\rightharpoonup x\).

3.3.2 Dependence of \(U_{j}^{\min}(\mathbf{v})\) on v

The properties of the maps \(\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}\) involved in the definition of \(U_{j}^{\min}(\mathbf{v})\) are discussed in Lemma 3.13. As a consequence, we shall establish our main result in Theorem 3.15 about the dimensions of \(U_{j}^{\min }(\mathbf{v}_{n})\) and \(U_{j}^{\min}(\mathbf{v})\) for a weakly convergent sequence \(\mathbf{v}_{n}\rightharpoonup\mathbf{v}\).

Lemma 3.13

Assume that the norm of the Banach tensor space \(\mathbf{V}_{\Vert \cdot \Vert }\) satisfies (3.6). Let \(\boldsymbol{\varphi}_{[j]}\in{}_{a}\bigotimes_{k\neq j}V_{k}^{\ast}\) and \(\mathbf{v}_{n},\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert }\) with \(\mathbf{v}_{n}\rightharpoonup\mathbf{v}\). Then weak convergence \((\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}})(\mathbf{v}_{n})\rightharpoonup(\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}})(\mathbf{v})\) holds in \(V_{j}\).

Proof

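Let \(\boldsymbol{\varphi}_{[j]}=\otimes_{k\neq j}\varphi_{k}\in{}_{a}\bigotimes_{k\neq j}V_{k}^{\ast}\) be an elementary tensor. We have to show that

$$ \varphi_{j}\bigl( (\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}})(\mathbf{v}_{n})\bigr) \rightarrow\varphi_{j}\bigl( (\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}})(\mathbf{v})\bigr) $$

holds for all \(\varphi_{j}\in V_{j}^{\ast}\). By Lemma 3.8, \(\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}}:\mathbf{V}_{\Vert \cdot \Vert }\rightarrow V_{j}\) is continuous. Therefore, the composition \(\varphi_{j}\circ(\overline {\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}}):\mathbf{V}_{\Vert \cdot \Vert }\rightarrow\mathbb{R}\) is a continuous functional belonging to \(\mathbf{V}_{\Vert \cdot \Vert }^{\ast}\), and hence \(\mathbf{v}_{n}\rightharpoonup\mathbf{v}\) implies

$$ \bigl( \varphi_{j}\circ(\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}})\bigr) (\mathbf{v}_{n})\rightarrow\bigl( \varphi_{j}\circ(\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}})\bigr) (\mathbf{v}). $$

This proves the lemma for an elementary tensor \(\boldsymbol{\varphi}_{[j]}\). The result extends immediately to finite linear combinations \(\boldsymbol{\varphi}_{[j]}\in{}_{a}\bigotimes_{k\neq j}V_{k}^{\ast}\). □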

Lemma 3.14

Assume N∈ℕ and \(x_{n}^{(i)}\rightharpoonup x_{\infty}^{(i)}\) for 1≤i≤N with linearly independent \(x_{\infty}^{(i)}\in X\). Then there is an \(n_{0}\) such that for all \(n\geq n_{0}\) the N-tuples \((x_{n}^{(i)}:1\leq i\leq N)\) are linearly independent.

Proof

There are functionals \(\varphi^{(j)}\in X^{\ast}\) (1≤j≤N) with \(\varphi^{(j)}(x_{\infty}^{(i)})=\delta_{ij}\) (cf. Remark 2.1). Set

$$ \Delta_{n}:=\det\bigl( \bigl( \varphi^{(j)}(x_{n}^{(i)})\bigr) _{i,j=1}^{N}\bigr) . $$

\(x_{n}^{(i)}\rightharpoonup x_{\infty}^{(i)}\) implies \(\varphi^{(j)}(x_{n}^{(i)})\rightarrow\varphi^{(j)}(x_{\infty}^{(i)})\). Continuity of the determinant proves \(\Delta_{n}\rightarrow\Delta_{\infty}:=\det( ( \delta_{ij} )_{i,j=1}^{N})=1\). Hence, there is an \(n_{0}\) such that \(\Delta_{n}>0\) for all \(n\geq n_{0}\), proving linear independence of \(\{x_{n}^{(i)}:1\leq i\leq N\}\). □
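The determinant argument can be followed on a small example. The Python sketch below is our own illustration, not taken from the paper: we assume \(X=\mathbb{R}^{2}\) (where weak and strong convergence coincide), the vectors \(x_{n}^{(1)}=(1,1/n)\) and \(x_{n}^{(2)}=(1/n,1)\) with limits \(e_{1}\) and \(e_{2}\), and the coordinate functionals as \(\varphi^{(j)}\). The function names are hypothetical.

```python
from fractions import Fraction

def det(a):
    """Determinant by Laplace expansion along the first row (fine for small N)."""
    if len(a) == 1:
        return a[0][0]
    total = 0
    for j, entry in enumerate(a[0]):
        minor = [row[:j] + row[j + 1:] for row in a[1:]]
        total += (-1) ** j * entry * det(minor)
    return total

def delta(n):
    """Determinant of the matrix with entries phi^(j)(x_n^(i))."""
    x = [[Fraction(1), Fraction(1, n)],
         [Fraction(1, n), Fraction(1)]]
    # phi^(j) is the j-th coordinate functional, so phi^(j)(x_n^(i)) = x[i][j].
    return det(x)

for n in (1, 2, 10):
    print(n, delta(n))
```

In this example the two vectors coincide for n=1, so the determinant vanishes there, while it is positive for all n≥2 and tends to 1; the index \(n_{0}\) of the lemma can be chosen as 2.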

Theorem 3.15

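Assume that the norm of the Banach tensor space \(\mathbf{V}_{\Vert \cdot \Vert }\) satisfies (3.6). Let \(\mathbf{v}_{n}\in\mathbf{V}_{\Vert \cdot \Vert }\) be a sequence with \(\mathbf{v}_{n}\rightharpoonup\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert }\). Then (see footnote 5)

$$ \dim\overline{U_{j}^{\min}(\mathbf{v})}^{\Vert \cdot \Vert _{j}}=\dim U_{j}^{\min}(\mathbf{v})\leq\liminf_{n\rightarrow\infty}\dim U_{j}^{\min}(\mathbf{v}_{n})\quad\text{for all }1\leq j\leq d. $$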

Proof

Since \(U_{j}^{\min}(\mathbf{v})\) is dense in \(\overline{U_{j}^{\min }(\mathbf{v})}^{\Vert \cdot \Vert _{j}}\), the dimensions are identical in the sense of footnote 5. We can select a subsequence (again denoted by \(\mathbf{v}_{n}\)) such that \(\dim U_{j}^{\min}(\mathbf{v}_{n})\) is weakly increasing. If \(\dim U_{j}^{\min}(\mathbf{v}_{n})\rightarrow\infty\) holds, nothing is to be proved. Therefore, assume that \(\lim\dim U_{j}^{\min}(\mathbf{v}_{n})=N<\infty\). For an indirect proof assume that \(\dim U_{j}^{\min}(\mathbf{v})>N\). Then, there are N+1 linearly independent vectors

$$ b^{(i)}=(\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}^{(i)}})(\mathbf{v})\quad\text{with }\boldsymbol{\varphi}_{[j]}^{(i)}\in{}_{a}\bigotimes_{k\neq j}V_{k}^{\ast}\quad\text{for }1\leq i\leq N+1. $$

By Lemma 3.13, the sequence \(b_{n}^{(i)}:=(\overline {\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}^{(i)}})(\mathbf{v}_{n})\rightharpoonup b^{(i)}\) converges weakly. By Lemma 3.14, for large enough n, also \(\{b_{n}^{(i)}:1\leq i\leq N+1\}\) is linearly independent. Because \(b_{n}^{(i)}=(\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}^{(i)}})(\mathbf{v}_{n})\in U_{j}^{\min}(\mathbf{v}_{n})\), this contradicts \(\dim U_{j}^{\min}(\mathbf{v}_{n})\leq N\). □
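The inequality between the dimensions can be strict, and the dimension can only drop in the limit, never jump up. A minimal Python sketch for the matrix case d=2, where both minimal subspaces have dimension equal to the matrix rank, illustrates this. The sequence \(\mathbf{v}_{n}=\operatorname{diag}(1,1/n)\) and all function names are our own assumptions chosen for illustration.

```python
from fractions import Fraction

def rank_2x2(m):
    """Exact rank of a 2x2 matrix with rational entries."""
    (a, b), (c, d) = m
    if a * d - b * c != 0:
        return 2
    return 0 if a == b == c == d == 0 else 1

def v_n(n):
    """Rank-2 matrices converging to a rank-1 limit as n grows."""
    return [[Fraction(1), Fraction(0)],
            [Fraction(0), Fraction(1, n)]]

v_limit = [[Fraction(1), Fraction(0)],
           [Fraction(0), Fraction(0)]]

dims = [rank_2x2(v_n(n)) for n in range(1, 20)]
print(rank_2x2(v_limit), min(dims))
```

Every member of the sequence has rank 2, whereas the limit has rank 1, so the dimension of the minimal subspace of the limit is strictly smaller than the limit inferior along the sequence.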

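For the hierarchical format from [15], \(\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}\) must be replaced by \(\mathit{id}_{\alpha}\otimes \boldsymbol{\varphi}_{\alpha^{c}}\) (cf. Corollary 2.19 and Remark 3.10). Similar methods as above show the following generalisations:

$$ \dim\overline{\mathbf{U}_{\alpha}^{\min}(\mathbf{v})}^{\Vert \cdot \Vert _{\vee}}=\dim\mathbf{U}_{\alpha}^{\min}(\mathbf{v})\leq\liminf_{n\rightarrow\infty}\dim\mathbf{U}_{\alpha}^{\min}(\mathbf{v}_{n})\quad\text{for all }\alpha\subset D. $$

Here, we equip the tensor space \(\mathbf{V}_{\alpha}={}_{a}\bigotimes_{j\in\alpha}V_{j}\) with the injective norm \(\Vert \cdot \Vert _{\vee}\) from (3.5).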

3.3.3 \(\dim(U_{j}^{\min}(\mathbf{v}))<\infty\)

Consider \(\mathbf{U}(\mathbf{v})\) and \(\mathbf{U}_{\Vert \cdot \Vert }(\mathbf{v})\) from (3.8a,b). For algebraic tensors \(\mathbf{v}\) we know that \(\mathbf{v}\in\mathbf{U}(\mathbf{v})\). However, the corresponding conjecture \(\mathbf{v}\in\mathbf{U}_{\Vert \cdot \Vert }(\mathbf{v})\) is not obvious in the general case. The statement \(\mathbf{v}\in\mathbf{U}_{\Vert \cdot \Vert }(\mathbf{v})\) requires a sequence of \(\mathbf{v}_{n}\in{}_{a}\bigotimes_{j=1}^{d}\overline{U_{j}^{\min}(\mathbf{v})}^{\Vert \cdot \Vert _{j}}\) with \(\mathbf{v}=\lim\mathbf{v}_{n}\). We do not have a proof that this holds in general. A positive result holds for the Hilbert case (see Sect. 3.4) and if the subspaces \(U_{j}^{\min}(\mathbf{v})\) are finite dimensional (see Theorem 3.16). In the general Banach case, we give a proof of \(\mathbf{v}\in\mathbf{U}_{\Vert \cdot \Vert }(\mathbf{v})\) for \(\mathbf{v}=\lim\mathbf{v}_{n}\), provided that the convergence is fast enough (see Theorem 3.19).

For practical applications, the finite-dimensional case is the most important one, since it follows from Theorem 3.15 with bounded \(\liminf_{n\rightarrow\infty}\dim U_{j}^{\min}(\mathbf{v}_{n})\).

Theorem 3.16

Assume that \(\mathbf{V}_{\Vert \cdot \Vert }\) is a Banach tensor space with ∥⋅∥ satisfying (3.6). For \(\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert }\) and all 1≤j≤d assume that \(\dim(U_{j}^{\min}(\mathbf{v}))<\infty\). Then \(\mathbf{v}\) belongs to the (algebraic) tensor space \({}_{a}\bigotimes_{j=1}^{d}U_{j}^{\min }(\mathbf{v}) =\mathbf{U}_{\Vert \cdot \Vert }(\mathbf{v})\).

Proof

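Let \(\{b_{j}^{(i)}:1\leq i\leq r_{j}\}\) be a basis of \(U_{j}^{\min}(\mathbf{v})\). There are functionals \(\varphi_{j}^{(i)}\in V_{j}^{\ast}\) with the property \(\varphi_{j}^{(i)}(b_{j}^{(k)})=\delta_{ik}\). Define \(\mathbf{a}_{\mathbf{i}}:=\bigotimes_{j=1}^{d}\varphi_{j}^{(i_{j})}\in{}_{a}\bigotimes_{j=1}^{d}V_{j}^{\ast}\) and \(\mathbf{b}_{\mathbf{i}}:=\bigotimes_{j=1}^{d}b_{j}^{(i_{j})}\in{}_{a}\bigotimes_{j=1}^{d}U_{j}^{\min}(\mathbf{v})\) for \(\mathbf{i}=(i_{1},\ldots,i_{d})\) with \(1\leq i_{j}\leq r_{j}\). Any \(\mathbf{u}\in{}_{a}\bigotimes_{j=1}^{d}U_{j}^{\min}(\mathbf{v}) \) is reproduced by

$$ \mathbf{u}=\sum_{\mathbf{i}}\mathbf{a}_{\mathbf{i}}(\mathbf{u})\,\mathbf{b}_{\mathbf{i}}. $$

We set

$$ \mathbf{u}_{\mathbf{v}}:=\sum_{\mathbf{i}}\mathbf{a}_{\mathbf{i}}(\mathbf{v})\,\mathbf{b}_{\mathbf{i}}\in{}_{a}\bigotimes_{j=1}^{d}U_{j}^{\min}(\mathbf{v}). $$

Thus, the theorem follows, if we prove that \(\mathbf{v}=\mathbf{u}_{\mathbf{v}}\in{}_{a}\bigotimes_{j=1}^{d}U_{j}^{\min}(\mathbf{v})\). Observe that the norm \(\Vert \mathbf{v}-\mathbf{u}_{\mathbf{v}}\Vert _{\vee}\) is described by \(\boldsymbol{\alpha}(\mathbf{v}-\mathbf{u}_{\mathbf{v}})\) with a normalised \(\boldsymbol{\alpha }=\bigotimes_{j=1}^{d}\alpha_{j}\in{}_{a}\bigotimes_{j=1}^{d}V_{j}^{\ast}\). If we can show \(\boldsymbol{\alpha}(\mathbf{v}-\mathbf{u}_{\mathbf{v}})=0\) for all \(\boldsymbol{\alpha}\), the norm \(\Vert \mathbf{v}-\mathbf{u}_{\mathbf{v}}\Vert _{\vee}\) vanishes and \(\mathbf{v}=\mathbf{u}_{\mathbf{v}}\) follows. Thus we need to show the following.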

Claim. \(\boldsymbol{\alpha}(\mathbf{v}-\mathbf{u}_{\mathbf{v}})=0\) holds for all \(\boldsymbol{\alpha}=\bigotimes_{j=1}^{d}\alpha_{j}\in{}_{a}\bigotimes_{j=1}^{d}V_{j}^{\ast}\).

To prove the claim, split each \(\alpha_{j}\) into \(\alpha_{j}^{(0)}+\sum_{i}c_{i}\varphi_{j}^{(i)}\) with \(c_{i}:=\alpha_{j}(b_{j}^{(i)})\) and \(\alpha_{j}^{(0)}:=\alpha_{j}-\sum_{i}c_{i}\varphi_{j}^{(i)}\). It follows that \(\alpha_{j}^{(0)}(b_{j}^{(i)})=0\) for all i, i.e.,

$$ \alpha_{j}^{(0)}(u_{j})=0\quad\text{for all }u_{j}\in U_{j}^{\min}(\mathbf{v}). $$ (3.11)

We expand the product

$$ \boldsymbol{\alpha}=\bigotimes_{j=1}^{d}\alpha_{j}=\bigotimes_{j=1}^{d}\Biggl( \alpha_{j}^{(0)}+\sum_{i}c_{i}\varphi_{j}^{(i)}\Biggr) =\bigotimes_{j=1}^{d}\Biggl( \sum_{i}c_{i}\varphi_{j}^{(i)}\Biggr) +A, $$

where all products contained in A have at least one factor \(\alpha_{j}^{(0)}\). Consider such a product in A, where, without loss of generality, we assume j=1, i.e., \(\alpha_{1}^{(0)}\otimes\boldsymbol{\gamma}_{[1]}\) with \(\boldsymbol{\gamma}_{[1]}\in{}_{a}\bigotimes_{k=2}^{d}V_{k}^{\ast}\). We conclude that \((\alpha_{1}^{(0)}\otimes\boldsymbol{\gamma}_{[1]})(\mathbf{u}_{\mathbf{v}})=0\), since \((\mathbf{id}_{[1]}\otimes\alpha_{1}^{(0)})(\mathbf{u}_{\mathbf{v}})=\mathbf{0}\) and \(\alpha_{1}^{(0)}\otimes\boldsymbol{\gamma}_{[1]}=\boldsymbol{\gamma}_{[1]}\circ(\mathbf{id}_{[1]}\otimes\alpha_{1}^{(0)})\). Furthermore,

$$ (\alpha_{1}^{(0)}\otimes\boldsymbol{\gamma}_{[1]})(\mathbf{v})=\alpha_{1}^{(0)}(w)\quad\text{for }w:=(\overline{\mathit{id}_{1}\otimes\boldsymbol{\gamma}_{[1]}})(\mathbf{v}). $$

By definition of \(U_{1}^{\min}(\mathbf{v})\), \(w\in U_{1}^{\min}(\mathbf{v})\) holds and \(\alpha_{1}^{(0)}(w)=(\alpha_{1}^{(0)}\otimes\boldsymbol{\gamma }_{[1]})(\mathbf{v})=0\) follows from (3.11), and thus \((\alpha_{1}^{(0)}\otimes\boldsymbol{\gamma}_{[1]})(\mathbf{v}-\mathbf{u}_{\mathbf{v}})=0\) holds.

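It remains to analyse \(( \bigotimes_{j=1}^{d} ( \sum_{i}c_{i}\varphi_{j}^{(i)} ) ) (\mathbf{v}-\mathbf{u}_{\mathbf{v}})= ( \sum_{\mathbf{i}}\mathbf{c}_{\mathbf{i}}\mathbf{a}_{\mathbf{i}} ) (\mathbf{v}-\mathbf{u}_{\mathbf{v}})\) for \(\mathbf{c} _{\mathbf{i}}:=\prod_{j=1}^{d}c_{i_{j}}\). Application to \(\mathbf{u}_{\mathbf{v}}\) yields

$$ \Biggl( \sum_{\mathbf{i}}\mathbf{c}_{\mathbf{i}}\mathbf{a}_{\mathbf{i}}\Biggr) (\mathbf{u}_{\mathbf{v}})=\sum_{\mathbf{i}}\mathbf{c}_{\mathbf{i}}\,\mathbf{a}_{\mathbf{i}}(\mathbf{u}_{\mathbf{v}})=\sum_{\mathbf{i}}\mathbf{c}_{\mathbf{i}}\,\mathbf{a}_{\mathbf{i}}(\mathbf{v}) $$

(cf. (3.10)), where the last step uses \(\varphi_{j}^{(i)}(b_{j}^{(k)})=\delta_{ik}\). Since this value coincides with \(( \sum_{\mathbf{i}}\mathbf{c}_{\mathbf{i}}\mathbf{a}_{\mathbf{i}} ) (\mathbf{v})=\sum_{\mathbf{i}}\mathbf{c}_{\mathbf{i}}\mathbf{a}_{\mathbf{i}}(\mathbf{v})\), we have proved

$$ \Biggl( \bigotimes_{j=1}^{d}\Biggl( \sum_{i}c_{i}\varphi_{j}^{(i)}\Biggr) \Biggr) (\mathbf{v}-\mathbf{u}_{\mathbf{v}})=0. $$

Thus the claim follows, and thereby \(\mathbf{v}=\mathbf{u}_{\mathbf{v}}\in{}_{a}\bigotimes_{j=1}^{d}U_{j}^{\min}(\mathbf{v}) \). □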
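The reproduction of a tensor from bases of its minimal subspaces and the corresponding dual functionals can be traced on a small matrix. The following Python sketch is an illustration under our own assumptions: d=2, a 3×3 integer matrix of rank 2, bases of its column and row spaces chosen by hand, and functionals represented by coordinate vectors acting through the Euclidean pairing. All names are hypothetical.

```python
def coefficients(m, phi1, phi2):
    """Coefficients a[i][k] = (phi1[i] (x) phi2[k])(m)."""
    return [[sum(p[r] * m[r][c] * q[c]
                 for r in range(len(m)) for c in range(len(m[0])))
             for q in phi2] for p in phi1]

def reproduce(m, b1, phi1, b2, phi2):
    """Sum over i, k of a[i][k] times the elementary tensor of b1[i] and b2[k]."""
    a = coefficients(m, phi1, phi2)
    out = [[0] * len(m[0]) for _ in m]
    for i, u in enumerate(b1):
        for k, w in enumerate(b2):
            for r in range(len(m)):
                for c in range(len(m[0])):
                    out[r][c] += a[i][k] * u[r] * w[c]
    return out

m = [[1, 0, 1], [0, 1, 1], [0, 0, 0]]
b1 = [[1, 0, 0], [0, 1, 0]]      # basis of the column space of m
phi1 = [[1, 0, 0], [0, 1, 0]]    # phi1[i] applied to b1[k] gives delta_ik
b2 = [[1, 0, 1], [0, 1, 1]]      # basis of the row space of m
phi2 = [[1, 0, 0], [0, 1, 0]]    # phi2[i] applied to b2[k] gives delta_ik

print(reproduce(m, b1, phi1, b2, phi2) == m)
```

In this finite-dimensional example the reconstructed tensor coincides with the original matrix, which is the statement \(\mathbf{v}=\mathbf{u}_{\mathbf{v}}\) in the simplest case.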

3.3.4 \(\dim(U_{j}^{\min}(\mathbf{v}))=\infty\)

Recall that if \(\mathbf{v}\in\mathbf{V}\) then \(\dim(U_{j}^{\min}(\mathbf{v}))< \infty\) for 1≤j≤d. Thus, under the assumption \(\dim(U_{j}^{\min}(\mathbf{v}))=\infty\) for some j∈{1,2,…,d} we have \(\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert }\setminus\mathbf{V}\), and then \(\mathbf{v}\) is defined as the limit of some Cauchy sequence in \(\mathbf{V}\).

For the next theorem we need a further assumption on the norm ∥⋅∥. A sufficient condition is that ∥⋅∥ is a uniform cross norm; i.e., it is a cross norm (cf. (3.2)) and satisfies

$$ \Biggl\Vert \Biggl( \bigotimes_{j=1}^{d}A_{j}\Biggr) (\mathbf{v})\Biggr\Vert \leq\Biggl( \prod_{j=1}^{d}\Vert A_{j}\Vert _{V_{j}\leftarrow V_{j}}\Biggr) \Vert \mathbf{v}\Vert $$ (3.12)

for all \(A_{j}\in\mathcal{L}(V_{j},V_{j})\) (1≤j≤d) and all \(\mathbf{v}\in{}_{a}\bigotimes_{j=1}^{d}V_{j}\). The uniform cross norm property implies that ∥⋅∥ is a reasonable cross norm (cf. [25]). Hence, condition (3.6) is ensured (cf. Proposition 3.7a). A further consequence will be needed.

Lemma 3.17

Let ∥⋅∥ be a uniform cross norm on \(\mathbf{V}\). Note that \(\mathbf{V}=\mathbf{V}_{[d]}\otimes_{a}V_{d}\), where \(\mathbf{V}_{[d]}:={}_{a}\bigotimes_{j=1}^{d-1}V_{j}\).

(a) The map defined by

$$\Vert\mathbf{x}\Vert_{[d]}:=\Vert\mathbf{x}\otimes v_{d} \Vert ,\quad \text{\textit{where}}\ v_{d}\in V_{d},\ \Vert v_{d}\Vert_{d}=1, $$

does not depend on the choice of \(v_{d}\). Therefore, it defines a norm on \(\mathbf{V}_{[d]}\).

(b) The norm ∥⋅∥ is a reasonable cross norm on \(\mathbf{V}_{[d]}\otimes V_{d}\), i.e.,

$$ \Vert\mathbf{x}\otimes v_{d}\Vert=\Vert\mathbf{x}\Vert_{\lbrack d]} \Vert v_{d}\Vert_{d}\text{\quad \textit{and}\quad}\Vert\boldsymbol{ \varphi}_{[d]}\otimes\varphi_{d} \Vert^{\ast}=\Vert\boldsymbol{\varphi}_{[d]} \Vert_{[d]}^{\ast}\Vert\varphi_{d} \Vert_{d}^{\ast} $$ (3.13)

for \(\mathbf{x}\in\mathbf{V}_{[d]}\), \(v_{d}\in V_{d}\), \(\boldsymbol{\varphi }_{[d]}\in\mathbf{V}_{[d]}^{\ast}\), and \(\varphi_{d}\in V_{d}^{\ast}\).

(c) For \(\varphi_{d}\in V_{d}^{\ast}\) and \(\boldsymbol{\varphi}_{[d]}\in\mathbf{V}_{[d]}^{\ast}\), the following estimates hold:

$$ \bigl\Vert ( \mathbf{id}_{[d]}\otimes\varphi_{d} ) ( \mathbf{v})\bigr\Vert_{\lbrack d]}\leq\Vert\varphi_{d} \Vert_{d}^{\ast}\Vert\mathbf{v}\Vert\quad \text{\textit{and}}\quad \bigl\Vert(\mathit{id}_{d}\otimes\boldsymbol{\varphi}_{[d]}) (\mathbf{v})\bigr\Vert_{d}\leq\Vert\boldsymbol{\varphi}_{[d]} \Vert_{\lbrack d]}^{\ast}\Vert\mathbf{v}\Vert. $$ (3.14)

Proof

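(a) Let \(\varphi_{d}\in V_{d}^{\ast}\) be the functional with \(\Vert\varphi_{d}\Vert_{d}^{\ast}=1\) and \(\varphi_{d}(v_{d})=\Vert v_{d}\Vert_{d}\) (cf. (2.2)). Choose any \(w_{d}\in V_{d}\) with \(\Vert w_{d}\Vert_{d}=1\) and set \(A_{d}:=w_{d}\varphi_{d}\in\mathcal{L}(V_{d},V_{d})\), i.e., \(A_{d}v=\varphi_{d}(v)w_{d}\). From \(\Vert A_{d}\Vert_{V_{d}\leftarrow V_{d}}=\Vert\varphi_{d}\Vert_{d}^{\ast}\Vert w_{d}\Vert_{d}=1\), the uniform cross norm property (3.12) with \(A_{j}=\mathit{id}\) for 1≤j≤d−1 implies \(\Vert\mathbf{x}\otimes w_{d}\Vert=\Vert(\mathbf{id}_{[d]}\otimes A_{d})(\mathbf{x}\otimes v_{d})\Vert\leq\Vert\mathbf{x}\otimes v_{d}\Vert\). Interchanging the roles of \(w_{d}\) and \(v_{d}\), we obtain \(\Vert\mathbf{x}\otimes v_{d}\Vert=\Vert\mathbf{x}\otimes w_{d}\Vert\).

(b1) \(\Vert\mathbf{x}\otimes v_{d}\Vert=\Vert\mathbf{x}\Vert_{[d]}\Vert v_{d}\Vert_{d}\) in (3.13) follows from the definition of \(\Vert\cdot\Vert_{[d]}\).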

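(b2) For any elementary tensor \(\mathbf{v}=\mathbf{x}_{[d]}\otimes v_{d}\neq0\) we have \(\frac{| ( \boldsymbol{\varphi}_{[d]}\otimes\varphi_{d} ) (\mathbf{v})|}{\Vert\mathbf{v}\Vert}\leq\Vert\boldsymbol{\varphi}_{[d]}\Vert_{[d]}^{\ast}\Vert\varphi_{d}\Vert_{d}^{\ast}\). Taking the supremum over all \(\mathbf{v}=\mathbf{x}_{[d]}\otimes v_{d}\), we obtain

$$ \Vert\boldsymbol{\varphi}_{[d]}\otimes\varphi_{d}\Vert^{\ast}\geq\sup_{\mathbf{v}=\mathbf{x}_{[d]}\otimes v_{d}\neq0}\frac{\vert ( \boldsymbol{\varphi}_{[d]}\otimes\varphi_{d} ) (\mathbf{v})\vert}{\Vert\mathbf{v}\Vert}=\Vert\boldsymbol{\varphi}_{[d]}\Vert_{[d]}^{\ast}\Vert\varphi_{d}\Vert_{d}^{\ast}. $$

Define \(\mathbf{A}:=\bigotimes_{j=1}^{d}A_{j}\in\mathcal{L}(\mathbf{V},\mathbf{V})\) by \(A_{j}=\mathit{id}\) (1≤j≤d−1) and \(A_{d}=\hat{v}_{d}\varphi_{d}\) with \(0\neq\hat{v}_{d}\in V_{d}\). Then \(\mathbf{Av}\) is an elementary vector of the form \(\mathbf{x}_{[d]}\otimes\hat{v}_{d}\) and \(\Vert A_{d}\Vert_{V_{d}\leftarrow V_{d}}=\Vert\hat{v}_{d}\Vert_{d}\Vert\varphi_{d}\Vert_{d}^{\ast}\) holds. This fact and the cross norm property \(\Vert\mathbf{Av}\Vert\leq\Vert\hat{v}_{d}\Vert_{d}\Vert\varphi_{d}\Vert_{d}^{\ast}\Vert\mathbf{v}\Vert\) lead us to

$$ \Vert\boldsymbol{\varphi}_{[d]}\Vert_{[d]}^{\ast}\Vert\varphi_{d}\Vert_{d}^{\ast}\geq\frac{\vert ( \boldsymbol{\varphi}_{[d]}\otimes\varphi_{d} ) (\mathbf{Av})\vert}{\Vert\mathbf{Av}\Vert}\geq\frac{\vert ( \boldsymbol{\varphi}_{[d]}\otimes\varphi_{d} ) (\mathbf{Av})\vert}{\Vert\hat{v}_{d}\Vert_{d}\Vert\varphi_{d}\Vert_{d}^{\ast}\Vert\mathbf{v}\Vert}\quad\text{whenever }\mathbf{Av}\neq0. $$

Since \((\boldsymbol{\varphi}_{[d]}\otimes\varphi_{d})(\mathbf{Av})= (\boldsymbol{\varphi}_{[d]}\otimes ( \varphi_{d}A_{d} )) (\mathbf{v})=\varphi_{d}(\hat{v}_{d})\cdot(\boldsymbol{\varphi}_{[d]}\otimes\varphi_{d})(\mathbf{v})\), the estimate can be continued by

$$ \Vert\boldsymbol{\varphi}_{[d]}\Vert_{[d]}^{\ast}\Vert\varphi_{d}\Vert_{d}^{\ast}\geq\frac{\vert\varphi_{d}(\hat{v}_{d})\vert}{\Vert\hat{v}_{d}\Vert_{d}\Vert\varphi_{d}\Vert_{d}^{\ast}}\cdot\frac{\vert ( \boldsymbol{\varphi}_{[d]}\otimes\varphi_{d} ) (\mathbf{v})\vert}{\Vert\mathbf{v}\Vert}. $$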

As \(\sup_{\hat{v}_{d}\neq0}\frac{\vert \varphi_{d}(\hat{v}_{d})\vert }{\Vert\hat{v}_{d}\Vert_{d}}=\Vert\varphi_{d}\Vert_{d}^{\ast}\), it follows that \(\frac{| ( \boldsymbol{\varphi}_{[d]}\otimes\varphi_{d} ) (\mathbf{v})|}{\Vert\mathbf{v}\Vert}\leq\Vert\boldsymbol{\varphi }_{[d]}\Vert_{[d]}^{\ast}\Vert\varphi_{d}\Vert_{d}^{\ast}\) for all v∈V, so that \(\Vert\boldsymbol{\varphi}_{[d]}\otimes\varphi_{d}\Vert^{\ast}\leq\Vert\boldsymbol{\varphi}_{[d]}\Vert_{[ d]}^{\ast}\Vert\varphi_{d}\Vert_{d}^{\ast}\). Together with the opposite inequality from above, we have proved the second equation in (3.13).

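(c) Any \(\boldsymbol{\psi}_{[d]}\in\mathbf{V}_{[d]}^{\ast}\) satisfies \(\boldsymbol{\psi}_{[d]}\otimes\varphi_{d}=\boldsymbol{\psi}_{[d]} ( \mathbf{id}_{[d]}\otimes\varphi_{d} ) \). For \(\mathbf{v}_{[d]}:=(\mathbf{id}_{[d]}\otimes\varphi_{d})(\mathbf{v})\) there is a \(\boldsymbol{\psi}_{[d]}\in\mathbf{V}_{[d]}^{\ast}\) with \(\Vert\boldsymbol{\psi}_{[d]}\Vert_{[ d]}^{\ast}=1\) and \(\vert \boldsymbol{\psi}_{[d]} ( \mathbf{v}_{[d]} ) \vert =\Vert\mathbf{v}_{[d]}\Vert_{[ d]}\) (cf. (2.2)). Hence,

$$ \bigl\Vert ( \mathbf{id}_{[d]}\otimes\varphi_{d} ) (\mathbf{v})\bigr\Vert_{[d]}=\bigl\vert \boldsymbol{\psi}_{[d]}(\mathbf{v}_{[d]})\bigr\vert =\bigl\vert ( \boldsymbol{\psi}_{[d]}\otimes\varphi_{d} ) (\mathbf{v})\bigr\vert \leq\Vert\boldsymbol{\psi}_{[d]}\otimes\varphi_{d}\Vert^{\ast}\Vert\mathbf{v}\Vert=\Vert\boldsymbol{\psi}_{[d]}\Vert_{[d]}^{\ast}\Vert\varphi_{d}\Vert_{d}^{\ast}\Vert\mathbf{v}\Vert=\Vert\varphi_{d}\Vert_{d}^{\ast}\Vert\mathbf{v}\Vert $$

proves the first inequality in (3.14). The second one can be proved analogously. □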

The next result is proved, e.g., in DeVore–Lorentz [8, Chap. 9, §7] or Meise–Vogt [21, Proposition 10.6].

Lemma 3.18

Let Y⊂X be a subspace of a Banach space X with dim(Y)≤n. Then there exists a projection \(\varPhi\in\mathcal{L}(X,X)\) onto Y such that

$$ \Vert\varPhi\Vert_{X\leftarrow X}\leq\sqrt{n}. $$

The bound is sharp for general Banach spaces, but can be improved to \(\Vert\varPhi\Vert_{X\leftarrow X}\leq n^{\vert 1/2-1/p\vert }\) for \(X=L^{p}\).

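Before we state the next theorem we recall the following definition. In Sect. 1, we introduced the set \(\mathcal{R}_{r}\) for the tensor space \(\mathbf{V}\). Since \(\mathbf{V}\cong\mathbf{V}_{[d]}\otimes_{a}V_{d}\) we now introduce the notation

$$ \mathcal{R}_{r} ( \mathbf{V}_{[d]}\otimes_{a}V_{d} ) :=\Biggl\{ \sum_{i=1}^{r}\mathbf{x}_{i}\otimes v_{i}:\mathbf{x}_{i}\in\mathbf{V}_{[d]},\ v_{i}\in V_{d}\Biggr\} . $$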

Theorem 3.19

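Assume that \(\mathbf{V}_{\Vert \cdot \Vert }\) is a Banach tensor space with a uniform cross norm ∥⋅∥. If \(\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert }\setminus\mathbf{V}\) is the limit of a sequence \(\{\mathbf{v}_{n}\}_{n\in\mathbb{N}}\subset\mathbf{V}\), where \(\mathbf{v}_{n} \in\mathcal{R}_{r} ( \mathbf{V}_{[d]}\otimes_{a}V_{d} ) \) with r≤n, and a convergence rate given by

$$ \Vert\mathbf{v}_{n}-\mathbf{v}\Vert\leq o\bigl( n^{-3/2}\bigr) , $$

then \(\mathbf{v}\in\mathbf{U}_{\Vert \cdot \Vert }(\mathbf{v})\).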

Proof

Use the setting V≅V [d]⊗ a V d from Lemma 3.17. Since \(\mathbf{v}_{n} \in\mathcal{R}_{r} ( \mathbf{V}_{[d]}\otimes_{a}V_{d} ) \), with r≤n, each v n ∈V has a representation in \(\mathbf{U}_{[d]}^{\min}(\mathbf{v}_{n})\otimes U_{d}^{\min}(\mathbf{v}_{n})\) with \(r:=\dim \mathbf{U}_{[d]}^{\min}(\mathbf{v}_{n})=\dim U_{d}^{\min}(\mathbf{v}_{n})\leq n\). Renaming r as n, we obtain the representation \(\mathbf{v}_{n}=\sum_{i=1}^{n}\mathbf{v}_{[d]}^{(i)}\otimes v_{d}^{(i)}\). According to Corollary 2.15b, we can fix any basis \(\{v_{d}^{(i)}\}\) of \(U_{d}^{\min}(\mathbf{v}_{n})\) and recover \(\mathbf{v}_{n}= \sum_{i=1}^{n}(\mathbf{id}_{[d]}\otimes\psi_{d}^{(i)})(\mathbf{v}_{n})\otimes v_{d}^{(i)}\) from the dual functionals \(\{\psi_{d}^{(i)}\}\). We choose \(v_{d}^{(i)}\) and \(\psi_{d}^{(i)}\) according to Lemma 2.2 with \(\Vert v_{d}^{(i)}\Vert_{d}=\Vert\psi_{d}^{(i)}\Vert_{d}^{\ast}=1\). Define

The triangle inequality yields

Note that

where \(\dim\mathbf{U}_{[d],n}\leq n\).

On the other hand, according to Lemma 2.2, we can choose a basis \(\{\mathbf{v}_{[d]}^{(i)}\}_{i=1}^{n}\) of \(\mathbf{U}_{[d]}^{\min }(\mathbf{v}_{n})\) and its corresponding dual basis \(\{\boldsymbol{\chi}_{[d]}^{(i)}\}_{i=1}^{n}\). An analogous proof shows that

satisfies the properties

and \(\mathbf{u}_{n}^{\mathrm{II}}\in\mathbf{V}_{[d]}\otimes_{a}U_{d,n}\), where \(U_{d,n}:=\operatorname{span}\{ (\overline{\mathit{id}_{d}\otimes\boldsymbol{\chi}_{[d]}^{(i)}})(\mathbf{v}):1\leq i\leq n\} \) has \(\dim U_{d,n}\leq n\) and is a subspace of \(U_{d}^{\min}(\mathbf{v})\).

From Lemma 3.18 we choose the projection \(\varPhi_{d}\) onto the subspace \(U_{d,n}\) and define

The uniform cross norm property (3.12) with \(A_{j}=\mathit{id}\) (1≤j≤d−1) and \(A_{d}=\varPhi_{d}\) implies the estimate \(\Vert\mathbf{id}_{[d]}\otimes\varPhi_{d}\Vert_{\mathbf{V\leftarrow V}}=\Vert\varPhi_{d} \Vert_{V_{d}\leftarrow V_{d}}\leq\sqrt{n}\), where the latter bound is given by Lemma 3.18. Therefore,

Altogether, we get the estimate

The assumption \(\Vert\mathbf{v}-\mathbf{v}_{n}\Vert\leq o(n^{-3/2})\) implies \(\Vert\mathbf{u}_{n}-\mathbf{v}\Vert\rightarrow0\). □

3.4 Minimal Closed Subspaces in a Hilbert Tensor Space

Let \(\langle\cdot,\cdot\rangle_{j}\) be a scalar product defined on \(V_{j}\) (1≤j≤d), i.e., \(V_{j}\) is a pre-Hilbert space. Then \(\mathbf{V}={}_{a}\bigotimes_{j=1}^{d}V_{j}\) is again a pre-Hilbert space with a scalar product which is defined for elementary tensors \(\mathbf{v}=\bigotimes_{j=1}^{d}v^{(j)}\) and \(\mathbf{w}=\bigotimes_{j=1}^{d}w^{(j)}\) by

$$ \langle\mathbf{v},\mathbf{w}\rangle=\Biggl\langle \bigotimes_{j=1}^{d}v^{(j)},\bigotimes_{j=1}^{d}w^{(j)}\Biggr\rangle :=\prod_{j=1}^{d}\bigl\langle v^{(j)},w^{(j)}\bigr\rangle _{j}\quad\text{for all }v^{(j)},w^{(j)}\in V_{j}. $$ (3.17)

This bilinear form has a unique extension \(\langle\cdot,\cdot\rangle:\mathbf{V}\times\mathbf{V}\rightarrow\mathbb{R}\). One verifies that 〈⋅,⋅〉 is a scalar product, called the induced scalar product. Let \(\mathbf{V}\) be equipped with the norm ∥⋅∥ corresponding to the induced scalar product 〈⋅,⋅〉. As usual, the Hilbert tensor space \(\mathbf{V}_{\Vert \cdot \Vert }={}_{\Vert \cdot \Vert }\bigotimes_{j=1}^{d}V_{j} \) is the completion of \(\mathbf{V}\) with respect to ∥⋅∥. Since the norm ∥⋅∥ is derived via (3.17), it is easy to see that ∥⋅∥ is a reasonable and even uniform cross norm.

We recall that orthogonal projections \(P\in\mathcal{L}(V,V)\) (V Hilbert space) are self-adjoint projections. P is an orthogonal projection onto the closed subspace \(U:=\operatorname{range}(P)\subset V\), which leads to the direct sum \(V=U\oplus U^{\bot}\), where \(U^{\bot}=\operatorname{range}(\mathit{id}-P)\). Vice versa, each closed subspace U⊂V defines an orthogonal projection P with \(U=\operatorname{range}(P)\).

Lemma 3.20

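Let \(V_{j}\) be Hilbert spaces with subspaces \(U_{j}\subset V_{j}\) such that \(V_{j} = U_{j} \oplus U_{j}^{\bot}\). The norm ∥⋅∥ of the Hilbert tensor space \({}_{\Vert \cdot \Vert }\bigotimes_{j=1}^{d}V_{j}\) is defined via the scalar product (3.17). Then

$$ {}_{\Vert \cdot \Vert }\bigotimes_{j=1}^{d}U_{j}=\bigcap_{j=1}^{d}\bigl( U_{j}\otimes_{\Vert \cdot \Vert }\mathbf{V}_{[j]}\bigr) ,\quad\text{where }\mathbf{V}_{[j]}:={}_{\Vert \cdot \Vert }\bigotimes_{k\neq j}V_{k}. $$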

Proof

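We consider the case d=2 only (d≥3 can be obtained by induction). Then the assertion to be proved is

$$ U_{1}\otimes_{\Vert \cdot \Vert }U_{2}= ( U_{1}\otimes_{\Vert \cdot \Vert }V_{2} ) \cap ( V_{1}\otimes_{\Vert \cdot \Vert }U_{2} ) . $$

The analogous statement for the algebraic tensor spaces holds by Lemma 2.11. The general rule \(\overline{X\cap Y}\subset \overline{X}\cap\overline{Y}\) (\(\bar{\mathbf{\cdot}}\) is the closure with respect to ∥⋅∥) implies that

$$ U_{1}\otimes_{\Vert \cdot \Vert }U_{2}\subset ( U_{1}\otimes_{\Vert \cdot \Vert }V_{2} ) \cap ( V_{1}\otimes_{\Vert \cdot \Vert }U_{2} ) . $$

The lemma is proved, if the opposite inclusion holds:

$$ ( U_{1}\otimes_{\Vert \cdot \Vert }V_{2} ) \cap ( V_{1}\otimes_{\Vert \cdot \Vert }U_{2} ) \subset U_{1}\otimes_{\Vert \cdot \Vert }U_{2}. $$ (3.18)

Let \(\mathbf{v}\) belong to the intersection on the left-hand side. Since \(\mathbf{v}\in U_{1}\otimes_{\Vert \cdot \Vert }V_{2}\), there is, by definition, a sequence \(\mathbf{v}_{n}\in U_{1}\otimes_{a}V_{2}\) with \(\mathbf{v}_{n}\rightarrow\mathbf{v}\). Let \(P_{1}\) be the orthogonal projection onto \(U_{1}\). Then \((P_{1}\otimes\mathit{id}_{2})\mathbf{v}_{n}=\mathbf{v}_{n}\) proves \(\mathcal{P}_{1}\mathbf{v}=\mathbf{v}\) for the extension \(\mathcal{P}_{1}:=\overline{P_{1}\otimes \mathit{id}_{2}}\). Similarly, \(\mathcal{P}_{2}\mathbf{v}=\mathbf{v}\) follows from \(\mathbf{v}\in V_{1}\otimes_{\Vert \cdot \Vert }U_{2}\) with \(\mathcal{P}_{2}:=\overline{\mathit{id}_{1} \otimes P_{2}}\), where \(P_{2}\) is the orthogonal projection onto \(U_{2}\). Since \(P_{1}\otimes\mathit{id}_{2}\) and \(\mathit{id}_{1}\otimes P_{2}\) commute, the product \(P_{1}\otimes P_{2}\) is also an orthogonal projection. Its range is \(U_{1}\otimes_{a}U_{2}\), while \(U_{1}\otimes_{\Vert \cdot \Vert }U_{2}\) is the range of its extension \(\mathcal{P}:=\overline{P_{1}\otimes P_{2}}=\mathcal{P}_{1}\mathcal{P}_{2}=\mathcal{P}_{2}\mathcal{P}_{1}\). Hence, \(\mathcal{P}_{1}\mathbf{v}= \mathcal{P}_{2}\mathbf{v}=\mathbf{v}\) implies \(\mathcal{P}\mathbf{v}=\mathbf{v}\), i.e., \(\mathbf{v}\in U_{1}\otimes_{\Vert \cdot \Vert }U_{2}\). This ends the proof of (3.18). □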

Lemma 3.21

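Let \(V_{i}\) (i=1,2) be Hilbert spaces, and \(U_{1}\subset V_{1}\) a closed subspace. Then the direct sum \(V_{1}=U_{1}\oplus U_{1}^{\bot}\) implies

$$ V_{1}\otimes_{\Vert \cdot \Vert }V_{2}= ( U_{1}\otimes_{\Vert \cdot \Vert }V_{2} ) \oplus\bigl( U_{1}^{\bot}\otimes_{\Vert \cdot \Vert }V_{2}\bigr) . $$

Proof

Consider the ranges of \(\mathcal{P}_{1}=\overline{P_{1}\otimes \mathit{id}_{2}}\) and \(\mathit{id}-\mathcal{P}_{1}\), where \(P_{1}\) is the orthogonal projection onto \(U_{1}\). □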

Unlike Theorem 3.19 for the Banach tensor space setting, we need no assumption on the speed of the convergence \(\mathbf{v}_{n}\rightarrow\mathbf{v}\) to obtain the result \(\mathbf{v}\in\mathbf{U}_{\Vert \cdot \Vert }(\mathbf{v})\).

Theorem 3.22

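Assume that \(V_{j}\) are Hilbert spaces and that \(\mathbf{V}\) is equipped with the norm ∥⋅∥ corresponding to the induced scalar product. Then for all \(\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert }\) it follows that \(\mathbf{v}\in\mathbf{U}_{\Vert \cdot \Vert }(\mathbf{v})\).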

Proof

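(1) In order to simplify the notation, we set \(U_{j}:=\overline{U_{j}^{\min }(\mathbf{v})}^{\Vert \cdot \Vert _{j}}\) for 1≤j≤d. For all 1≤j≤d we may write \(\mathbf{V}_{\Vert \cdot \Vert }\) as \(V_{j}\otimes_{\Vert \cdot \Vert }\mathbf{V}_{[j]}\). If we succeed in proving \(\mathbf{v}\in U_{j}\otimes_{\Vert \cdot \Vert }\mathbf{V}_{[j]}\) for all 1≤j≤d, Lemma 3.20 implies \(\mathbf{v}\in{}_{\Vert \cdot \Vert }\bigotimes_{j=1}^{d}U_{j} =\mathbf{U}_{\Vert \cdot \Vert }(\mathbf{v})\).

(2) According to \(\mathbf{V}_{\Vert \cdot \Vert }= ( U_{j}\otimes_{\Vert \cdot \Vert }\mathbf{V}_{[j]} ) \oplus(U_{j}^{\bot}\otimes_{\Vert \cdot \Vert }\mathbf{V}_{[j]})\) from Lemma 3.21, we split \(\mathbf{v}\) into

$$ \mathbf{v}=\mathbf{v}_{\parallel}+\mathbf{v}_{\bot}\quad\text{with }\mathbf{v}_{\parallel}\in U_{j}\otimes_{\Vert \cdot \Vert }\mathbf{V}_{[j]}\text{ and }\mathbf{v}_{\bot}\in U_{j}^{\bot}\otimes_{\Vert \cdot \Vert }\mathbf{V}_{[j]}. $$

For an indirect proof we assume \(\mathbf{v}_{\bot}\neq0\). Then there are \(v_{j}\in U_{j}^{\bot}\) and \(\mathbf{v}_{[j]}\in\mathbf{V}_{[j]}\) with \(\langle v_{j}\otimes\mathbf{v}_{[j]},\mathbf{v}_{\bot}\rangle=\langle v_{j}\otimes\mathbf{v}_{[j]},\mathbf{v}\rangle\neq0\) (otherwise there are no algebraic tensors converging to \(\mathbf{v}_{\bot}\)). For \(\boldsymbol{\varphi}_{[j]}:= \langle \mathbf{v}_{[j]},\cdot \rangle_{[j]}\in\mathbf{V}_{[j]}^{\ast}\) one verifies

$$ \langle v_{j}\otimes\mathbf{v}_{[j]},\mathbf{v}\rangle=\bigl\langle v_{j},(\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}})(\mathbf{v})\bigr\rangle _{j}. $$

The definition of \(U_{j}^{\min}(\mathbf{v})\) yields \((\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}})(\mathbf{v})\in U_{j}\). Since \(v_{j}\in U_{j}^{\bot}\), we obtain the contradiction \(\langle v_{j}\otimes\mathbf{v}_{[j]},\mathbf{v}_{\bot}\rangle=\langle v_{j}\otimes\mathbf{v}_{[j]},\mathbf{v}\rangle=0\). Hence \(\mathbf{v}_{\bot}=0\) proves the statement \(\mathbf{v}\in U_{j}\otimes_{\Vert \cdot \Vert }\mathbf{V}_{[j]}\) needed in part (1). □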

So far, we have assumed that the norm ∥⋅∥ of the Hilbert space \(\mathbf{V}\) corresponds to the induced scalar product. In principle, we may also define another scalar product \(\langle\cdot,\cdot\rangle_{\mathbf{V}}\) on \(\mathbf{V}\) together with another norm \(\Vert \cdot \Vert _{\mathbf{V}}\). In this case, we have to assume that \(\Vert \cdot \Vert _{\mathbf{V}}\) is a uniform cross norm (at least, \(\Vert (\bigotimes_{j=1}^{d}A_{j})(\mathbf{v})\Vert \leq C(\prod_{j=1}^{d}\Vert A_{j}\Vert_{V_{j}\leftarrow V_{j}})\Vert\mathbf{v}\Vert\) must hold for some constant C). This ensures that the projections \(\mathcal{P}_{j}\) (as defined in the proof of Lemma 3.20) belong to \(\mathcal{L}(\mathbf{V},\mathbf{V})\). Furthermore, (3.6) holds. Scalar products like \(\langle v_{j}\otimes\mathbf{v}_{[j]},\mathbf{v}\rangle\) in the proof above are to be replaced with \((\varphi_{j}\otimes\boldsymbol{\varphi}_{[j]})(\mathbf{v})\), where, as usual, \(\varphi_{j}\in V_{j}^{\ast}\) is defined via \(\varphi_{j}(\cdot)=\langle v_{j},\cdot\rangle_{j}\). Then we can state again that \(\mathbf{v}\in\mathbf{U}_{\Vert \cdot \Vert }(\mathbf{v})\).

4 On the Best \(\mathcal{T}_{\mathbf{r}}\) Approximation in a Banach Tensor Space

4.1 Main Statement

Theorem 4.1

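Let \(\mathbf{V}_{\Vert \cdot \Vert }\) be a reflexive Banach tensor space with a norm satisfying (3.6). Then for each \(\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert }\) there exists \(\mathbf{w}\in\mathcal{T}_{\mathbf{r}}\) such that

$$ \Vert\mathbf{v}-\mathbf{w}\Vert=\min_{\mathbf{u}\in\mathcal{T}_{\mathbf{r}}}\Vert\mathbf{v}-\mathbf{u}\Vert. $$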

Proof

Combine Theorem 4.2 and Proposition 4.3 given below. □

A subset M⊂X is called weakly closed, if \(x_{n}\in M\) and \(x_{n}\rightharpoonup x\) imply x∈M. Note that ‘weakly closed’ is stronger than ‘closed’, i.e., M weakly closed ⇒ M closed.

Theorem 4.2

([4]) Let (X,∥⋅∥) be a reflexive Banach space with a weakly closed subset ∅≠M⊂X. Then the following minimisation problem has a solution: For any x∈X there exists v∈M with

$$ \Vert x-v\Vert=\min\{ \Vert x-w\Vert:w\in M\} . $$

Proposition 4.3

Let V ∥⋅∥ be a Banach tensor space with a norm satisfying (3.6). Then the set \(\mathcal{T}_{\mathbf{r}}\) is weakly closed.

Proof

Let \(\{\mathbf{v}_{n}\}\subset\mathcal{T}_{\mathbf{r}}\) be such that \(\mathbf{v}_{n}\rightharpoonup\mathbf{v}\). Then there are subspaces \(U_{j,n}\subset V_{j}\) such that \(\mathbf{v}_{n}\in{}_{a}\bigotimes_{j=1}^{d}U_{j,n}\) with \(\dim U_{j,n}=r_{j}\). Since \(U_{j}^{\min}(\mathbf{v}_{n})\subset U_{j,n}\), \(\dim U_{j}^{\min}(\mathbf{v}_{n})\leq r_{j}\) holds for all n∈ℕ. Consequently, by Theorem 3.15, \(\dim U_{j}^{\min}(\mathbf{v})\leq r_{j}\). Thus, \(U_{j}^{\min}(\mathbf{v})\) is finite dimensional. From Theorem 3.16 we conclude that \(\mathbf{v}\in{}_{a}\bigotimes_{j=1}^{d}U_{j}^{\min}(\mathbf{v}) \) and, thereby, \(\mathbf{v}\in\mathcal{T}_{\mathbf{r}}\). □

Corollary 4.4

A statement analogous to Theorem 4.1 also holds for the set \(\mathcal{H}_{\mathfrak{r}}\) appearing for the hierarchical format from [15] and the format from [24]. The proof uses the fact that \(\mathcal{H}_{\mathfrak{r}}\) is weakly closed.

Since the assumption of reflexivity excludes important spaces, we add some remarks on this subject. The existence of a minimiser or ‘nearest point’ w in a certain set A⊂X to some v∈X∖A is a well-studied subject. A set A is called ‘proximinal’ if, for every such v, \(\Vert v-w\Vert=\min_{u\in A}\Vert v-u\Vert\) has at least one solution w∈A. Without the assumption of reflexivity, there are statements ensuring under certain conditions that the set of points in X∖A possessing nearest points in A is dense (e.g., Edelstein [10]). A smaller class than the weakly closed subsets A are the closed and convex subsets. However, even for closed and convex subsets one cannot avoid reflexivity, in general, because of the following result (cf. [4, Proposition 4]). Note that the sets \(\mathcal{T}_{\mathbf{r}}\) and \(\mathcal{H}_{\mathfrak{r}}\) are not convex, but weakly closed, as we will show with the help of minimal subspaces.

Theorem 4.5

All closed and convex subsets are proximinal, if and only if the underlying Banach space is reflexive.

4.2 Generalisation to the Intersection of Finitely Many Banach Tensor Spaces

We recall that the assumption (3.6) implies \({}_{a}\bigotimes_{j=1}^{d}V_{j}^{\ast}\subset({}_{a} \bigotimes_{j=1}^{d}V_{j})^{\ast}\) (cf. Proposition 3.7b). For certain Banach tensor spaces this property does not hold. Therefore, we have to check whether some of the results given in the previous section can be extended to this case. Thus, in this section we introduce the intersection tensor spaces. We also study sequences of minimal subspaces in this framework in order to prove the existence of a best \(\mathcal{T}_{\mathbf{r}}\) approximation. To illustrate this situation we give the following example.

Recall that \(\Vert f\Vert _{C^{1}(I)}=\max_{x\in I} \{ \vert f(x)\vert ,\vert f^{\prime}(x)\vert \} \) is the norm of continuously differentiable functions in one variable \(x\in I\subset\mathbb{R}\). The name \(\Vert\cdot\Vert_{1,\mathrm{mix}}\) of the following norm is derived from the mixed derivative involved.

Example 4.6

Let \(I\) and \(J\) be compact intervals in \(\mathbb{R}\) and consider \(V=(C^{1}(I), \Vert \cdot \Vert _{C^{1}(I)})\) and \(W=(C^{1}(J),\Vert \cdot \Vert _{C^{1}(J)})\). For the tensor space \(V\otimes_{a}W\) we introduce the norm

It can be shown that \(\Vert\cdot\Vert_{1,\mathrm{mix}}\) is a reasonable cross norm. However, the standard norm of \(C^{1}(I\times J)\) given by

is not a reasonable cross norm.

We have seen that the space \(C^{1}(I\times J)\) is not the straightforward result of the tensor product \(C^{1}(I)\otimes C^{1}(J)\). The norm \(\Vert\cdot\Vert_{1,\mathrm{mix}}\) from (4.2) turns out to be a reasonable cross norm, but then the resulting space \(C_{\mathrm{mix}}^{1}(I\times J)\) is a smaller space than \(C^{1}(I\times J)\). Vice versa, the dual norm \(\Vert \cdot \Vert _{C^{1}(I\times J)}^{\ast}\) of \(C^{1}(I\times J)\) is not bounded for \(v^{\ast}\otimes w^{\ast}\in V^{\ast}\otimes W^{\ast}\). Therefore, \(\Vert\cdot\Vert_{C^{1}(I\times J)}\) is not a reasonable cross norm.
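The cross norm property in Example 4.6 can be checked by hand on an elementary tensor. The two display formulas of the example are not reproduced in this section, so the following sketch assumes the forms suggested by the names: \(\Vert\varphi\Vert_{1,\mathrm{mix}}\) is the maximum over \(I\times J\) of \(\vert\varphi\vert\), \(\vert\partial_{x}\varphi\vert\), \(\vert\partial_{y}\varphi\vert\), \(\vert\partial_{x}\partial_{y}\varphi\vert\), and \(\Vert\varphi\Vert_{C^{1}(I\times J)}\) is the same maximum without the mixed derivative. Under this assumption, for \(f\in C^{1}(I)\) and \(g\in C^{1}(J)\),

\[
\begin{aligned}
\Vert f\otimes g\Vert_{1,\mathrm{mix}}
&=\max\{\Vert f\Vert_{\infty}\Vert g\Vert_{\infty},\ \Vert f^{\prime}\Vert_{\infty}\Vert g\Vert_{\infty},\ \Vert f\Vert_{\infty}\Vert g^{\prime}\Vert_{\infty},\ \Vert f^{\prime}\Vert_{\infty}\Vert g^{\prime}\Vert_{\infty}\}\\
&=\max\{\Vert f\Vert_{\infty},\Vert f^{\prime}\Vert_{\infty}\}\cdot\max\{\Vert g\Vert_{\infty},\Vert g^{\prime}\Vert_{\infty}\}
=\Vert f\Vert_{C^{1}(I)}\,\Vert g\Vert_{C^{1}(J)},\\
\Vert f\otimes g\Vert_{C^{1}(I\times J)}
&=\max\{\Vert f\Vert_{\infty}\Vert g\Vert_{\infty},\ \Vert f^{\prime}\Vert_{\infty}\Vert g\Vert_{\infty},\ \Vert f\Vert_{\infty}\Vert g^{\prime}\Vert_{\infty}\}.
\end{aligned}
\]

As an illustrative choice (not taken from the text), let \(I=J=[0,\pi]\) and \(f(x)=g(x)=\sin(2x)\). Then \(\Vert f\Vert_{\infty}=1\) and \(\Vert f^{\prime}\Vert_{\infty}=2\), so that \(\Vert f\otimes g\Vert_{C^{1}(I\times J)}=2\) while \(\Vert f\Vert_{C^{1}(I)}\Vert g\Vert_{C^{1}(J)}=4\). The missing term \(\Vert f^{\prime}\Vert_{\infty}\Vert g^{\prime}\Vert_{\infty}\) is exactly the mixed derivative, which is why the cross norm identity holds for \(\Vert\cdot\Vert_{1,\mathrm{mix}}\) and fails for \(\Vert\cdot\Vert_{C^{1}(I\times J)}\) under the assumed definitions.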

The family of Sobolev spaces \(H^{m,p}(I_{j})\) for \(m=0,1,\ldots,N\) is an example of a scale of Banach spaces which we introduce below. From now on, we fix integers \(N_{j}\) and denote the \(j\)-th scale by

which means that \(V_{j}^{(n)}\) is a dense subspace of \((V_{j}^{(n-1)},\Vert \cdot \Vert _{j,n-1})\) for \(n=1,\ldots,N_{j}\). This fact implies that the corresponding norms satisfy

It is an easy exercise to see that all \(V_{j}^{(n)}\) (\(1\leq n\leq N_{j}\)) are dense in \((V_{j}^{(0)},\Vert \cdot \Vert _{j,0})\).
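The density claim can be sketched by induction on \(n\). The norm relation between consecutive levels is not reproduced in this section; the sketch assumes it has the form \(\Vert v\Vert_{j,n-1}\leq C\,\Vert v\Vert_{j,n}\) for \(v\in V_{j}^{(n)}\), so that by chaining there is a constant \(C_{n-1}\) (a label introduced here) with \(\Vert u\Vert_{j,0}\leq C_{n-1}\Vert u\Vert_{j,n-1}\) on \(V_{j}^{(n-1)}\). Suppose \(V_{j}^{(n-1)}\) is dense in \((V_{j}^{(0)},\Vert\cdot\Vert_{j,0})\), and let \(v\in V_{j}^{(0)}\) and \(\varepsilon>0\). Choose \(u\in V_{j}^{(n-1)}\) and then \(w\in V_{j}^{(n)}\) such that

\[
\Vert v-u\Vert_{j,0}\leq\frac{\varepsilon}{2},
\qquad
\Vert u-w\Vert_{j,n-1}\leq\frac{\varepsilon}{2C_{n-1}},
\qquad\text{hence}\qquad
\Vert v-w\Vert_{j,0}\leq\Vert v-u\Vert_{j,0}+C_{n-1}\Vert u-w\Vert_{j,n-1}\leq\varepsilon .
\]

The second choice is possible because \(V_{j}^{(n)}\) is dense in \((V_{j}^{(n-1)},\Vert\cdot\Vert_{j,n-1})\). The case \(n=1\) is the scale assumption itself.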

Definition 4.7

Under the given assumptions for (4.4) we say that a subset \(\mathcal{N}\subset\mathbb{N}_{0}^{d}\) is an admissible index set, if it satisfies

For each n in an admissible index set \(\mathcal{N}\), we define the tensor space

All spaces \(\mathbf{V}^{(\mathbf{n})}\) are subspaces of \(\mathbf{V}^{(\mathbf{0})}={}_{a}\bigotimes_{j=1}^{d}V_{j}\) (recall that \(V_{j}=V_{j}^{(0)}\)). Assume that the following conditions hold:

(a) For each admissible \(\mathbf{n}\in\mathbb{N}_{0}^{d}\), a norm \(\Vert\cdot\Vert_{\mathbf{n}}\) on \(\mathbf{V}^{(\mathbf{n})}\) exists satisfying \(\Vert\cdot\Vert_{\mathbf{n}}\leq\Vert\cdot\Vert_{\mathbf{m}}\) for \(\mathbf{n}\leq\mathbf{m}\in\mathcal{N}\), and

(b) the norm \(\Vert\cdot\Vert_{\mathbf{0}}\) on \(\mathbf{V}^{(\mathbf{0})}={}_{a}\bigotimes_{j=1}^{d}V_{j}\) satisfies (3.6).

Now, we introduce the Banach tensor space

Note that for each \(\mathbf{n}\in\mathcal{N}\), if \(\mathbf{n}\leq\mathbf{m}\in\mathcal{N}\), then \(\mathbf{V}_{\Vert \cdot \Vert }^{(\mathbf{m})}\subset\mathbf{V}_{\Vert \cdot \Vert }^{(\mathbf{n})}\). From Lemma 2.16 one derives the following result.

Lemma 4.8

Let \(\mathcal{N}\subset\mathbb{N}_{0}^{d}\) be an admissible index set. Then the following statements hold:

(a) \(\mathbf{V}^{(\mathbf{n})}=\bigcap_{j=1}^{d}\mathbf{V}^{(\mathbf{n}_{j})}\) for all \(\mathbf{n}\in\mathcal{N}\), where \(\mathbf{n}_{j}:=(\underset{j-1}{\underbrace{0,\ldots,0}},n_{j},\underset{d-j}{\underbrace{0,\ldots,0}})\in\mathbb{N}_{0}^{d}\), for \(1\leq j\leq d\).

(b) \(\bigcap_{\mathbf{n}\in\mathcal{N}}\mathbf{V}^{(\mathbf{n})}=\mathbf{V}^{(N_{1},\ldots,N_{d})}={}_{a}\bigotimes_{j=1}^{d}V_{j}^{(N_{j})}\).

Proof

From Lemma 2.16 we have

and statement (a) follows. Also, by Lemma 2.16,

and, by (4.4), statement (b) is proved. □

Let \(\mathcal{N}\subset\mathbb{N}_{0}^{d}\) be an admissible index set. From Lemma 4.8b it follows that the intersection of the set of tensor spaces \(\{\mathbf{V}^{(\mathbf{n})}:\mathbf{n}\in\mathcal{N}\}\) is the tensor space \(\mathbf{V}^{(N_{1},\ldots,N_{d})}\subset\mathbf{V}_{\Vert \cdot \Vert }^{(N_{1},\ldots,N_{d})}\). Observe that the index \((N_{1},\ldots,N_{d})\) does not necessarily belong to the index set \(\mathcal{N}\). Also, by Lemma 4.8a, we obtain the following minimal representation:

Next, we introduce the Banach space induced by intersection of the set of Banach tensor spaces \(\{\mathbf{V}_{\Vert \cdot \Vert }^{(\mathbf{n})}:\mathbf{n}\in\mathcal{N}\}\).

Definition 4.9

Let \(\mathcal{N}\subset\mathbb{N}_{0}^{d}\) be an admissible index set. The Banach space \(\mathbf{V}_{\Vert \cdot \Vert _{\mathcal{N}}}\) induced by the intersection of the set of Banach tensor spaces \(\{\mathbf{V}_{\Vert \cdot \Vert }^{(\mathbf{n})}:\mathbf{n}\in\mathcal{N}\}\) is defined by

or an equivalent one.
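The display (4.10) is not reproduced in this section. As a sketch only, a common way to realise an intersection space, assumed here and consistent with the later use of \(\Vert\cdot\Vert_{\mathbf{N}_{j}}\leq\Vert\cdot\Vert_{\mathcal{N}}\), is

\[
\mathbf{V}_{\Vert\cdot\Vert_{\mathcal{N}}}
=\bigcap_{\mathbf{n}\in\mathcal{N}}\mathbf{V}_{\Vert\cdot\Vert_{\mathbf{n}}}^{(\mathbf{n})},
\qquad
\Vert\mathbf{v}\Vert_{\mathcal{N}}=\max_{\mathbf{n}\in\mathcal{N}}\Vert\mathbf{v}\Vert_{\mathbf{n}} .
\]

If \(\mathcal{N}\) is finite, an example of an equivalent norm is the \(\ell^{p}\)-type combination, since for \(1\leq p<\infty\)

\[
\max_{\mathbf{n}\in\mathcal{N}}\Vert\mathbf{v}\Vert_{\mathbf{n}}
\leq\Big(\sum_{\mathbf{n}\in\mathcal{N}}\Vert\mathbf{v}\Vert_{\mathbf{n}}^{p}\Big)^{1/p}
\leq(\#\mathcal{N})^{1/p}\max_{\mathbf{n}\in\mathcal{N}}\Vert\mathbf{v}\Vert_{\mathbf{n}} .
\]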

Next, we consider elementary tensors from the tensor space \(\mathbf{V}^{(\mathbf{0})}\).

Proposition 4.10

Let \(\mathcal{N}\subset\mathbb{N}_{0}^{d}\) be an admissible index set. Then

holds. In particular, each \(\mathbf{v}\in\mathbf{V}^{(\mathbf{0})}\cap\mathbf{V}_{\Vert \cdot \Vert _{\mathcal{N}}}\) has a representation \(\mathbf{v}=\sum_{i=1}^{r}\bigotimes_{j=1}^{d}v_{j}^{(i)}\) with \(v_{j}^{(i)}\in V_{j}^{(N_{j})}\) and a minimal number r of terms.

Proof

By definition (4.10), \(\mathbf{V}^{(\mathbf{0})}\cap\mathbf{V}_{\Vert \cdot \Vert _{\mathcal{N}}}=\bigcap_{\mathbf{n}\in\mathcal{N}} ( \mathbf{V}^{(\mathbf{0})}\cap \mathbf{V}_{\Vert \cdot \Vert }^{(\mathbf{n})} ) \) holds. Since \(\mathbf{v}\in\mathbf{V}^{(\mathbf{0})}\cap\mathbf{V}_{\Vert \cdot \Vert }^{(\mathbf{n})}\) is an elementary tensor, it belongs to \(\mathbf{V}^{(\mathbf{0})}\cap\mathbf{V}^{(\mathbf{n})}=\mathbf{V}^{(\mathbf{n})}\). From Lemma 2.16 it follows that \(\mathbf{v}\in\bigcap_{\mathbf{n}\in\mathcal{N}}\mathbf{V}^{(\mathbf{n})}={}_{a}\bigotimes_{j=1}^{d} ( \bigcap_{\mathbf{n}\in\mathcal{N}}V_{j}^{(n_{j})} )\). By condition (4.6c), one of the \(n_{j}\) equals \(N_{j}\), which implies \(\mathbf{v}\in{}_{a}\bigotimes_{j=1}^{d} ( \bigcap_{\mathbf{n}\in\mathcal{N}}V_{j}^{(n_{j})} ) ={}_{a}\bigotimes_{j=1}^{d}V_{j}^{(N_{j})}\). □

Corollary 4.11

The set \(\mathbf{V}^{(N_{1},\ldots,N_{d})}\) is dense in \(\mathbf{V}_{\Vert \cdot \Vert _{\mathcal{N}}}\) with respect to the \(\Vert \cdot \Vert _{\mathcal{N}}\)-topology.

Proof

The inclusions \(\mathbf{V}^{(N_{1},\ldots,N_{d})}\subset\mathbf{V}^{(\mathbf{n})}\subset\mathbf{V}_{\Vert \cdot \Vert }^{(\mathbf{n})}\) are dense for all \(\mathbf{n}\in\mathcal{N}\) (cf. (4.4)). Definition (4.10) yields the assertion. □

Example 4.12

Fix N>0 and consider the Sobolev spaces \(V_{j}^{n}=H^{n,p}(I_{j})\) for 0≤n≤N and 1≤j≤d. The standard choice of \(\mathcal{N}\) is given by

In this situation we have \(\mathbf{V}^{\mathbf{n}}=H^{n_{1},p}(I_{1})\otimes_{a}\cdots\otimes_{a}H^{n_{d},p}(I_{d})\) for each \(\mathbf{n}\in\mathcal{N}\), and

The norm chosen in \(\mathbf{V}^{\mathbf{n}}\) is

while in \(\mathbf{V}_{\Vert \cdot \Vert _{\mathcal{N}}}\) we take

which is equivalent to the usual norm \(\Vert\cdot\Vert_{N,p}\). Then, by Corollary 4.11,

Observe that \({}_{\Vert \cdot \Vert _{N,p}}\bigotimes_{j=1}^{d}H^{N,p}(I_{j})\) is a Banach subspace of the Banach space \(H^{N,p}(\mathbf{I})\). Moreover, for each \(\mathbf{N}_{j}=(0,\ldots ,0,N,0,\ldots,0)\in\mathcal{N}\), we have

which is clearly a cross norm in

for 1≤j≤d. In particular for p=2 we obtain

and in this case the norm \(\Vert \cdot \Vert _{\mathbf{N}_{j}}\) in

is generated by the induced scalar product (3.17) for 1≤j≤d. Consequently, it is a reasonable cross norm.

Note that Proposition 4.10 states that all functions from the algebraic tensor space \({}_{a}\bigotimes_{j=1}^{d}C^{0}(I_{j})\cap C^{1}(\mathbf{I})\) are already in \(\mathbf{V}_{\mathrm{mix}}=C_{\mathrm{mix}}^{1}(\mathbf{I})\) (see Eq. (4.2)), which is a proper subspace of \(C^{1}(\mathbf{I})\).
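To make Example 4.12 concrete, consider the smallest nontrivial case \(d=2\), \(N=1\). The displays of the example are not reproduced in this section, so the following is a sketch under two assumptions: the standard index set is \(\mathcal{N}=\{\mathbf{n}\in\mathbb{N}_{0}^{d}:\vert\mathbf{n}\vert\leq N\}\), and \(\Vert\cdot\Vert_{\mathbf{n}}\) sums the \(L^{p}\) norms of all derivatives \(\partial^{\mathbf{k}}\) with \(\mathbf{k}\leq\mathbf{n}\). Then \(\mathcal{N}=\{(0,0),(1,0),(0,1)\}\) and

\[
\Vert v\Vert_{(0,0)}=\Vert v\Vert_{p},\qquad
\Vert v\Vert_{(1,0)}=\big(\Vert v\Vert_{p}^{p}+\Vert\partial_{1}v\Vert_{p}^{p}\big)^{1/p},\qquad
\Vert v\Vert_{(0,1)}=\big(\Vert v\Vert_{p}^{p}+\Vert\partial_{2}v\Vert_{p}^{p}\big)^{1/p}.
\]

With \(\Vert v\Vert_{1,p}=(\Vert v\Vert_{p}^{p}+\Vert\partial_{1}v\Vert_{p}^{p}+\Vert\partial_{2}v\Vert_{p}^{p})^{1/p}\), the equivalence with the usual Sobolev norm reads

\[
\max_{\mathbf{n}\in\mathcal{N}}\Vert v\Vert_{\mathbf{n}}
\leq\Vert v\Vert_{1,p}
\leq\big(\Vert v\Vert_{(1,0)}^{p}+\Vert v\Vert_{(0,1)}^{p}\big)^{1/p}
\leq 2^{1/p}\max_{\mathbf{n}\in\mathcal{N}}\Vert v\Vert_{\mathbf{n}} .
\]

Since \((1,1)\notin\mathcal{N}\), the mixed derivative \(\partial_{1}\partial_{2}v\) is not controlled by this intersection norm.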

Example 4.13

Fix N>0 and consider \(V_{j}^{n}=H^{n,p}(I_{j})\) for 0≤n≤N and 1≤j≤d. Now, we consider the set

In this situation

The norm in \(\mathbf{V}^{\mathbf{n}}\) is also given by (4.12). In particular, the norm

in \(\mathbf{V}^{(N,\ldots,N)}\) is a cross norm. Since in \(\mathbf{V}_{\Vert \cdot \Vert _{\mathcal{N}}}\) we take

which is equivalent to the \(\Vert\cdot\Vert_{\mathrm{mix}}\)-norm, by Corollary 4.11, we obtain

Thus,

In particular, for p=2, we have

Moreover, it is easy to see that the \(\Vert\cdot\Vert_{\mathrm{mix}}\)-norm is generated by the induced scalar product (3.17) of \(H^{N}(I_{j})\) for \(1\leq j\leq d\) and satisfies condition (3.6). This fact implies that Theorem 4.1 holds for the Hilbert tensor space \({}_{\Vert \cdot \Vert _{\mathrm{mix}}}\bigotimes_{j=1}^{d}H^{N}(I_{j})\).

Thus, a natural question arising in this example is whether Theorem 4.1 holds for the Hilbert tensor space \(H^{N}(\mathbf{I})\) characterised by (4.14).

From Proposition 4.10, there are different equivalent versions of how to define the minimal subspace \(U_{j}^{\min}(\mathbf{v})=\operatorname*{span}\{v_{j}^{(i)}:1\leq i\leq r\}\) for \(\mathbf{v}=\sum_{i=1}^{r}\bigotimes_{j=1}^{d}v_{j}^{(i)}\). Here, we can state the following.

Corollary 4.14

Let \(\mathcal{N}\subset\mathbb{N}_{0}^{d}\) be an admissible index set. For each \(\mathbf{v}\in\mathbf{V}^{(N_{1},\ldots,N_{d})}\),

holds for 1≤j≤d.

Corollary 4.14 cannot be extended as Corollary 3.9 was for the Banach space case. A simple counterexample is \(f\in C^{1}(I\times J)\) with \(f(x,y)=F(x+y)\) and \(F\notin C^{2}\). Choose \(\varphi\in C^{1}(J)^{\ast}\) as \(\varphi=\delta_{\eta}^{\prime }\). Then \(\varphi(f)(x)=-F^{\prime}(x+\eta)\in C^{0}(I)\), but \(\varphi(f)\) is not in \(C^{1}(I)\), in contrast to Corollary 4.14. While, in Corollary 4.14, we could take functionals from \(( {}_{a}\bigotimes_{k\neq j}V_{k}^{(n_{k})}) ^{\prime}\) for any \(\mathbf{n}\) bounded by \(n_{k}\leq N_{k}\), we now have to restrict the functionals to \(\mathbf{n}=\mathbf{0}\). Because of the notation \(V_{k}^{(0)}=V_{k}\), the definition coincides with the usual one:

where the completion is performed with respect to the norm \(\Vert\cdot\Vert_{j,0}\) of \(V_{j}^{(0)}\).
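The counterexample to an extension of Corollary 4.14 given above can be made concrete. The following choices are illustrative assumptions, not taken from the text: \(I=J=[-1,1]\), \(\eta=0\), and \(F(t)=t\vert t\vert\), which satisfies \(F^{\prime}(t)=2\vert t\vert\), so that \(F\in C^{1}\) but \(F\notin C^{2}\). With the convention \(\delta_{\eta}^{\prime}(g)=-g^{\prime}(\eta)\), the functional is bounded on \(C^{1}(J)\) because \(\vert\delta_{\eta}^{\prime}(g)\vert\leq\Vert g\Vert_{C^{1}(J)}\), and

\[
f(x,y)=(x+y)\,\vert x+y\vert,
\qquad
\varphi(f)(x)=-\partial_{y}f(x,y)\big\vert_{y=0}=-F^{\prime}(x)=-2\vert x\vert .
\]

The function \(x\mapsto-2\vert x\vert\) is continuous on \(I\) but not differentiable at \(x=0\), so \(\varphi(f)\in C^{0}(I)\setminus C^{1}(I)\) although \(f\in C^{1}(I\times J)\).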

In the following we show that the same results can be derived as in the standard case. Condition (3.6) used before must be adapted to the situation of the intersection space. Consider the tuples \(\mathbf{N}_{j}=(0,\ldots,0,N_{j},0,\ldots,0)\in\mathcal{N}\) from (4.6c) and the corresponding tensor space

endowed with the norm \(\Vert \cdot \Vert _{\mathbf{N}_{j}}\). From now on, we denote by \(\Vert \cdot \Vert _{\vee (\mathbf{N}_{j})}\) the injective norm defined from the Banach spaces \(V_{1},\ldots,V_{j-1},V_{j}^{(N_{j})},V_{j+1},\ldots,V_{d}\).

Theorem 4.15

Assume that \(\mathbf{V}_{\Vert \cdot \Vert _{\mathcal{N}}}\) is a reflexive Banach space induced by the intersection of the set of Banach tensor spaces \(\{\mathbf{V}_{\Vert \cdot \Vert _{\mathbf{n}}}^{(\mathbf{n})}:\mathbf{n}\in\mathcal{N}\}\) and

Then, for each \(\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert _{\mathcal{N}}}\), there exists \(\mathbf{w}\in\mathcal{T}_{\mathbf{r}}\) such that

Lemma 4.16

Assume that \(\mathbf{V}_{\Vert \cdot \Vert _{\mathcal{N}}}\) is a Banach space induced by the intersection of the set of Banach tensor spaces \(\{\mathbf{V}_{\Vert \cdot \Vert _{\mathbf{n}}}^{(\mathbf{n})}:\mathbf{n}\in\mathcal{N}\}\) satisfying assumption (4.17). Let \(\boldsymbol{\varphi}_{[j]}\in{}_{a}\bigotimes_{k\neq j}V_{k}^{\ast}\) and \(\mathbf{v}_{n},\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert _{\mathcal{N}}}\) with \(\mathbf{v}_{n}\rightharpoonup\mathbf{v}\). Then weak convergence \((\overline{\mathit{id}_{j}\otimes \boldsymbol{\varphi}_{[j]}})(\mathbf{v}_{n})\rightharpoonup(\overline {\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}})(\mathbf{v})\) holds in \(V_{j}^{(N_{j})}\).

Proof

Repeat the proof of Lemma 3.13 and note that \(\varphi_{j}\in(V_{j}^{(N_{j})})^{\ast}\) composed with an elementary tensor \(\boldsymbol{\varphi}_{[j]}=\bigotimes_{k\neq j}\varphi_{k}\) (\(\varphi_{k}\in V_{k}^{\ast}\)) yields \(\boldsymbol{\varphi}=\bigotimes_{k=1}^{d}\varphi_{k}\in{}_{a}\bigotimes_{k=1}^{d}(V_{k}^{(n_{k})})^{\ast}\) with \(n_{k}=0\) for \(k\neq j\) and \(n_{j}=N_{j}\). By (4.17) and Proposition 3.7b, \(\boldsymbol{\varphi}\) belongs to \(( \mathbf{V}^{(\mathbf{N}_{j})} )^{\ast}\). □

Corollary 4.17

Under the assumptions of Lemma 4.16, \(\overline {U_{j}^{\min}(\mathbf{v})}^{\Vert \cdot \Vert _{j,0}}\subset V_{j}^{(N_{j})}\) holds for all \(\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert _{\mathcal{N}}}\) and 1≤j≤d.

Proof

Let \(\mathbf{v}_{m}\in\mathbf{V}^{(N_{1},\ldots,N_{d})}\) be a sequence with \(\mathbf{v}_{m}\rightarrow\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert _{\mathcal{N}}}\). By definition (4.10) of the intersection norm, \(\Vert \mathbf{v}_{m}-\mathbf{v}\Vert _{\mathbf{N}_{j}}\rightarrow0\) holds for all \(j\). Then (3.7) shows that \(\Vert(\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}})(\mathbf{v}-\mathbf{v}_{m})\Vert_{j,N_{j}}\rightarrow0\). Since \((\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}})(\mathbf{v}_{m})\in V_{j}^{(N_{j})}\) by Proposition 4.10, the limit \((\overline{\mathit{id}_{j}\otimes\boldsymbol{\varphi}_{[j]}})(\mathbf{v})\) also belongs to \(V_{j}^{(N_{j})}\). □

Lemma 4.18

Assume that \(\mathbf{V}_{\Vert \cdot \Vert _{\mathcal{N}}}\) is a Banach space induced by the intersection of the set of Banach tensor spaces \(\{\mathbf{V}_{\Vert \cdot \Vert _{\mathbf{n}}}^{(\mathbf{n})}:\mathbf{n}\in\mathcal{N}\}\) satisfying assumption (4.17). For \(\mathbf{v}_{m}\in\mathbf{V}^{(N_{1},\ldots,N_{d})}\) assume \(\mathbf{v}_{m}\rightharpoonup\mathbf{v}\in\mathbf{V}_{\Vert \cdot \Vert _{\mathcal{N}}}\). Then

Proof

We can repeat the proof from Theorem 3.15. □

Finally, in a similar way as in Proposition 4.3, we can also obtain the following statement.

Proposition 4.19

Assume that \(\mathbf{V}_{\Vert \cdot \Vert _{\mathcal{N}}}\) is a Banach space induced by the intersection of the set of Banach tensor spaces \(\{\mathbf{V}_{\Vert \cdot \Vert }^{(\mathbf{n})}:\mathbf{n}\in\mathcal{N}\}\) satisfying the assumption (4.17). Then the set \(\mathcal{T}_{\mathbf{r}}\) is weakly closed.

Proof of Theorem 4.15

The proof is a consequence of Theorem 4.2 and Proposition 4.19. □
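Theorem 4.2 is not reproduced in this section. Assuming it is the standard statement that weakly closed subsets of a reflexive Banach space are proximinal, the argument behind the proof can be sketched as follows, with \(X=\mathbf{V}_{\Vert\cdot\Vert_{\mathcal{N}}}\) and \(A=\mathcal{T}_{\mathbf{r}}\). Let \(\mathbf{v}\in X\), set \(\delta=\inf_{\mathbf{u}\in A}\Vert\mathbf{v}-\mathbf{u}\Vert\) and choose \(\mathbf{u}_{n}\in A\) with \(\Vert\mathbf{v}-\mathbf{u}_{n}\Vert\rightarrow\delta\). Then

\[
\begin{aligned}
&\Vert\mathbf{u}_{n}\Vert\leq\Vert\mathbf{v}\Vert+\Vert\mathbf{v}-\mathbf{u}_{n}\Vert
&&\text{(the sequence is bounded)},\\
&\mathbf{u}_{n_{k}}\rightharpoonup\mathbf{w}\ \text{for some subsequence}
&&\text{(reflexivity of } X\text{)},\\
&\mathbf{w}\in A
&&\text{(} A \text{ is weakly closed, Proposition 4.19)},\\
&\Vert\mathbf{v}-\mathbf{w}\Vert\leq\liminf_{k\rightarrow\infty}\Vert\mathbf{v}-\mathbf{u}_{n_{k}}\Vert=\delta
&&\text{(weak lower semicontinuity of the norm)}.
\end{aligned}
\]

Since \(\mathbf{w}\in A\) also gives \(\Vert\mathbf{v}-\mathbf{w}\Vert\geq\delta\), the infimum is attained at \(\mathbf{w}\).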

Example 4.20