Abstract

Proportional apportionment is the problem of assigning seats to states (resp. parties) according to their relative share of the population (resp. votes), a field heavily influenced by the early work of Michel Balinski, not least his influential 1982 book with Peyton Young (Fair representation, 2nd edn. Brookings Institution Press, Washington, D.C., 2001). In this article, we consider the computational cost of divisor methods (also known as highest averages methods), the de-facto standard solution that is used in many countries. We show that a simple linear-time algorithm can exactly simulate all instances of the family of divisor methods of apportionment by reducing the problem to a single call to a selection algorithm. All previously published solutions were iterative methods that either offer no linear-time guarantee in the worst case or require a complex update step that suffers from numerical instability.

Similar content being viewed by others

1 Introduction

The mathematical problem of proportional apportionment arises whenever we have a finite supply of k indivisible, identical resources which are to be distributed across n parties proportionally to their publicly known and agreed-upon values \(v_1, \ldots , v_n\). The indivisibility constraint makes a perfectly proportional assignment impossible unless the quotas \(k\cdot v_i / V\) with \(V = v_1+\cdots +v_n\) happen to be all integral for \(i=1,\ldots ,n\); apportionment methods decide how to allocate resources in the general case.

Apportionment directly arises in politics in two forms:

-

In a proportional-representation electoral system seats in parliament are assigned to political parties according to their share of all votes. (The resources are seats, and the values are vote counts.)

-

In federal states the number of representatives from each component state often reflects the population of that state. (Resources are again seats, values are the numbers of residents.)

While not identical in their requirements—for example, any state will typically have at least one representative no matter how small it is—the same mathematical framework applies to both instances. Further applications are tables wherein rounded percentages should add up to 100%, the assignment of workers to jobs, or the allocation of service facilities to areas proportional to demand.

In order to use consistent language throughout this article, we will stick to the first metaphor. That is, we assign k seats to n parties proportionally to their respective votes \(v_i\); we call k the house size. In the case of electoral systems which exclude parties below a certain threshold of overall votes from seat allocation altogether, we assume they have already been removed from our list of n parties. An apportionment method maps vote counts \(\varvec{\textbf{v}} = (v_1,\ldots ,v_n)\) and house size k to a seat allocation \(\varvec{\textbf{s}} = (s_1,\ldots ,s_n)\) so that \(s_1 + \cdots + s_n = k\). We interpret \(\varvec{\textbf{s}}\) as party i getting \(s_i\) seats.

There are many conceivable such methods, but [3] show that the divisor methods (introduced below) are the only methods that guarantee pairwise vote monotonicity (population monotonicity in [3]), which requires that a party i cannot lose seats to a party j when i gains votes while j loses votes (and all other parties remain unchanged). For a comprehensive introduction into the topic with its historical, political, and mathematical dimensions, including desirable and undesirable properties of various apportionment methods and corresponding impossibility results, we refer the reader to the books of [3, 9].

1.1 Problem definition

Divisor methods (also known as highest-averages methods) are characterized by the used rounding rule \(\llbracket \cdot \rrbracket \); examples include rounding down, rounding up, or rounding to the nearest even integer (see also Table 1 and [9, p. 70]). Party i is then assigned \(\llbracket {v_i}/{D} \rrbracket \) seats, where D is a divisor chosen so that \(s_1+\cdots +s_n = k\); such a D is guaranteed to exist for any sensible rounding rule and obtained by solving the following optimization problem: \( \max D \text { s.t. } \sum _{i=1}^n \llbracket v_i/D \rrbracket \ge k \). We point out that without an algorithm to solve this problem, divisor methods of apportionment cannot feasibly be applied in practice.

While the concept of divisor methods can be used more generally, a typical assumption is that \(\lfloor x\rfloor \le \llbracket x \rrbracket \le \lceil x\rceil \), which implies that \(s_i\) is roughly proportional to \(v_i/V\). For this section, we also make this assumption; later, we slightly weaken it to nearly-arithmetic divisor sequences (Definition 1).

Different rounding rules yield in general different apportionment methods, and there is no per se best choice. For example, there are competing notions of fairness, each favoring a different divisor method [3, Sect. A.3]. A reasonable approach is therefore to run computer simulations of different methods and compare their outcomes empirically, for example w.r.t. the distribution of final average votes per seat \(v_i/s_i\). For this purpose, many apportionments may have to be computed, making efficient algorithms desirable. Apart from that, settling the computational complexity of this fundamental optimization problem is interesting in its own right.

1.2 Previous work

While methods for proportional apportionment have been studied for a long time, the question of algorithmic complexity has only more recently been considered. A direct iterative method (see Sect. 2) has complexity \(\varTheta (nk)\) when implemented naively, and \(\varTheta (k\log n)\) when using a priority queue. Note that typically \(k\gg n\), and indeed, the input consists of \(n+1\) numbers, so this running time can be exponential in the size of a binary encoding of the input.

A simple refinement, the jump-and-step algorithm described by [9] (see also Sect. 4.1), avoids any dependency on k. It is based on the iterative method, but jumps to within \(O (n)\) of the target value, so worst-case running times are \(O (n^2)\) with naive iteration and \(O (n\log n)\) using a priority queue. These bounds seem to be folklore; they are mentioned explicitly for example by [6, 14]. This running time is not optimal, but the algorithm is simple and performs provably well in certain average-case scenarios [9, Sect. 6.7].

Finally, [5] obtained an algorithm with the optimal \(O (n)\) complexity in the worst case. They reduce the problem of finding a divisor D to selecting the kth smallest element from a multiset formed by n arithmetic progressions, and design a somewhat involved algorithm to solve this special rank-selection problem in \(O (n)\) time. This settles the theoretical complexity of the problem since clearly \(\varOmega (n)\) time is necessary to read the input. However, apart from conceptual complexity, Cheng and Eppstein’s algorithm suffers from a numerical-instability issue that we uncovered when implementing their algorithm.

1.3 Contribution

Our main contribution is a much simpler algorithm than Cheng and Eppstein’s algorithm for divisor methods of apportionment. It directly constructs a multiset \(\hat{{\mathcal {A}}}\) of size \(O (n)\) and a rank \({\hat{k}}\) so that D is obtained as the \({\hat{k}}\)th smallest element in \(\hat{{\mathcal {A}}}\). An example execution of our algorithm is shown in Table 2 (p. 9). Apart from its improved conceptual simplicity and practical efficiency (see Sect. 4), this also circumvents any issues from imprecise arithmetic. Formally, our result is as follows.

Theorem 1

(Main result) Given any rounding rule \(\llbracket \cdot \rrbracket \) with \(\lfloor x\rfloor \le \llbracket x\rrbracket \le \lceil x\rceil \) for all \(x\in {\mathbb {R}}_{\ge 0}\), any vector of votes \(\varvec{\textbf{v}}\in {\mathbb {N}}^n\), and house size \(k\in {\mathbb {N}}\), our algorithm SandwichSelect computes a divisor D that yields seat allocations \(\varvec{\textbf{s}}=(s_1,\ldots ,s_n)\) respecting \(s_i\in \llbracket \frac{v_i}{D}\rrbracket \) and \(s_1+\cdots +s_n = k\) using running time in \(O (n)\). It can do so without explicitly computing \(\llbracket \cdot \rrbracket \).

Moreover, we report from an extensive running-time study of the above apportionment methods. We find that our new method is almost an order of magnitude (a factor 10) faster than Cheng and Eppstein’s algorithm while at the same time avoiding the super-linear complexity of the jump-and-step algorithm for large inputs. Implementations of all algorithms and sources for the experiments are available online [10].

Outline. Section 2 defines divisor methods formally. In Sect. 3, we describe the selection-based algorithms, including our new method. Section 4 describes results of our running-time study; Sect. 5 concludes the paper. For the reader’s convenience, we include an index of notation in “Appendix A”.

2 Preliminaries

Our exposition follows the notation of [5], but we also give the names as used by [9].

2.1 Divisor sequences

A divisor sequence is a nonnegative, strictly increasing and unbounded sequence of real numbers. Throughout the paper, we consider a fixed divisor sequence \(d = (d_j)_{j=0}^\infty \); for notational convenience, we set \(d_{-1} {:}{=}-\infty \). We require a monotonic continuation \(\delta \) of d on the reals which is easy to invert; formally, we assume a function \(\delta : {\mathbb {R}}_{\ge 0} \rightarrow {\mathbb {R}}_{\ge d_0}\) with

-

(D1)

\(\delta \) is continuous and strictly increasing,

-

(D2)

\(\delta ^{-1}(x)\) for \(x\ge d_0\) can be computed with a constant number of arithmetic operations, and

-

(D3)

\(\delta (j) = d_j\) (and thus \(\delta ^{-1}(d_j) = j\)) for all \(j \in {\mathbb {N}}_0\).

All the divisor sequences used in practice fulfill these requirements; cf. Table 1. For convenience, we continue \(\delta ^{-1}\) on the complete real line requiring

-

(D4)

\(\delta ^{-1}(x) \in [-1,0)\) for \(x < d_0\).

Lemma 1

Assuming (D1) to (D4), \(\delta ^{-1}(x)\) is continuous and strictly increasing on \({\mathbb {R}}_{\ge d_0}\). Furthermore, it is the inverse of \(j \mapsto d_j\) in the sense that

for all \(x \in {\mathbb {R}}\). \(\square \)

In particular, \(\lfloor \delta ^{-1}(x) \rfloor + 1 = \bigl |\{j\in {\mathbb {N}}_0 : d_j \le x\}\bigr |\) is the rank function for the set of all \(d_j\).

In the terminology of [9], d is a jumppoint sequence (of a rounding rule, see below), but with a shift of indices (we start with \(d_0\) instead of s(1)). A divisor sequence with \(j\le d_j\le j+1\) for all \(j\in {\mathbb {N}}_0\) is called a signpost sequence.Footnote 1

While divisor methods can be defined for any nonnegative, strictly increasing and unbounded sequence, we will focus our attention on those with the following property.

Definition 1

(nearly arithmetic) A divisor sequence \((d_{j})_{j\in {\mathbb {N}}_0}\) resp. its continuation \(\delta \) is called nearly arithmetic if there are constants \(\alpha > 0\), \({\underline{\beta }} \in [0, \alpha ]\), and \({\overline{\beta }} \ge 0\) so that

Table 1 lists divisor sequences for common apportionment methods; all are nearly arithmetic. Further, any signpost sequence is trivially nearly arithmetic, including power-mean signposts [9, Sect. 3.11–312] and geometric-mean signposts [6]. Nearly arithmetic sequences are also exactly the class of divisor sequences addressed by [5].

2.2 Ties, rounding rules, and seat Allocations

Since the actual seat allocation \(\varvec{\textbf{s}}\) is not uniquely determined in case of ties, it is convenient to have a set-valued rounding rule in addition to the rank function. The rounding rule \(\llbracket \cdot \rrbracket \) induced by divisor sequence d is defined by  , were

, were  if \(x\notin {\mathbb {Z}}\) and

if \(x\notin {\mathbb {Z}}\) and  for \(n\in {\mathbb {Z}}\). (The \(+1\) is due to the index shift in divisor sequences;

for \(n\in {\mathbb {Z}}\). (The \(+1\) is due to the index shift in divisor sequences;  is the natural extension of \(\lfloor \cdot \rfloor \) that returns both limits at jump discontinuities). Note that we have \(\lfloor x\rfloor \le \llbracket x \rrbracket \le \lceil x\rceil \) (for one of the possible values of \(\llbracket x \rrbracket \) in case of ties) if and only if the jumppoint sequence is a signpost sequence, making these particularly natural choices for rounding rules. The set of valid seat assignments for given votes and house size is then given by

is the natural extension of \(\lfloor \cdot \rfloor \) that returns both limits at jump discontinuities). Note that we have \(\lfloor x\rfloor \le \llbracket x \rrbracket \le \lceil x\rceil \) (for one of the possible values of \(\llbracket x \rrbracket \) in case of ties) if and only if the jumppoint sequence is a signpost sequence, making these particularly natural choices for rounding rules. The set of valid seat assignments for given votes and house size is then given by

2.3 Highest averages

Divisor methods can equivalently be defined by an iterative method [3, Prop. 3.3]: Starting with no allocated seats, \(s_i=0\) for \(i\in [n]\), we iteratively assign the next seat to a party with a currently “highest average”, i. e., maximal \(v_i/d_{s_i}\): a party with the most votes per seat. For technical reasons, it turns out to be much more convenient to work with reciprocal averages, i. e., assign the next seat to a party with minimal \(d_{s_i}/v_i\) (fewest current seats per vote). In case of ties, any choice leads to a valid seat allocation \(\varvec{\textbf{s}} \in {\mathcal {S}}(\varvec{\textbf{v}}, k)\).

This iterative method does not yield an efficient algorithm, but it gives rise to to a key structural observation: the minimal quotients \(d_j / v_i\) are weakly increasing over subsequent iterations (by monotonicity of d), and we obtain the final (largest) quotient directly as

using the final seat assignment \(\varvec{\textbf{s}}\). The iterative method yields the same seat assignments as Eq. (1) using \(D=1/a^*\) (cf. [9, 59f]); to get the full set of all feasible assignments \({\mathcal {S}}(\varvec{\textbf{v}}, k)\), one has to simulate all possibilities of breaking ties when selecting the next party to be awarded a seat.

3 Fast apportionment through selection

Worst-case optimal algorithms for divisor methods of apportionment exploit that the quotients \({d_j}/{v_i}\) in the iterative method change monotonically: The final multiplier \(a^*\) is the kth smallest of all possible quotients \({d_j}/{v_i}\), and can hence be found directly using a selection algorithm [5]. The challenge is to suitably restrict the candidate set from which to select.

We need some more notation. Given a (multi)set \(\mathcal {M}\) of (not necessarily distinct) numbers, we write \(\mathcal {M}_{(k)}\) for the kth order statistic of \(\mathcal {M}\), i. e., the kth smallest element (counting duplicates) in \(\mathcal {M}\). For example, if \(M = \{ 5,8,8,8,10,10 \}\), we have \(M_{(1)} = 5\), \(M_{(2)} = M_{(3)} = M_{(4)} = 8\), and \(M_{(5)} = M_{(6)} = 10\). For given votes \(\varvec{\textbf{v}} = (v_1,\ldots ,v_n) \in {\mathbb {Q}}_{>0}^n\), we define the sets

and their multiset union \( \mathcal {A} \mathrel {\;{{:}{=}}\;}\biguplus _{i=1}^n A_i . \) With that notation, we obtain that \(a^* = \mathcal {A}_{(k)}\). We further define the rank function \(r(x,\mathcal {A})\) as the number of elements in multiset \(\mathcal {A}\) that are no larger than x, that is

We write r(x) instead of \(r(x,\mathcal {A})\) when \(\mathcal {A}\) is clear from context. In light of the optimization formulation, \(\min a \text { s.t. } r(a,\mathcal {A}) \ge k\), we call a value a feasible if \(r(a,\mathcal {A}) \ge k\), otherwise it is infeasible. Feasible \(a > a^*\) are called suboptimal.

Note that \(\mathcal {A}\) is infinite, but \(\mathcal {A}_{(k)}\) always exists since the terms \(a_{i,j} = \nicefrac {d_j}{v_i}\) are strictly increasing in j for all \(i \in \{1,\ldots ,n\}\).

3.1 Cheng and Eppstein’s algorithm

Cheng and Eppstein [5] devise an iterative method that maintains an approximation \(\xi \) of \(a^*\). In each step, the method either (at least) halves the difference of \(r(\xi )\) to k or it (at least) halves the number of parties still under consideration. By ensuring that the initial distance of \(r(\xi )\) from k is \(O (n)\), their algorithm terminates after \(O (n)\) iterations. Each iteration selects the median of the set of \(a_{i,j}\) closest to \(\xi \) for all remaining parties i; using a linear-time selection algorithm, this yields overall \(O (n)\) time. More concretely, their algorithm, ChengEppsteinSelect, uses the following three steps.

-

(a)

Identify contributing sequences and compute an initial coarse solution \(\xi \), i. e., a value with rank \(r(\xi ) = k \pm O (n)\).

(The initial coarse solution is essentially our \({\overline{a}}\) as defined below.)

-

(b)

Compute a lower-rank coarse solution \(\xi '\) with rank \(r(\xi ') \in [k-n..k]\) starting with \(\xi \).

-

(c)

Compute \(a^*\) starting at \(\xi '\).

Each of the steps involves a variant of the iterative median-based algorithm sketched above.

Remark 1

(Precision issues) While implementing it for our running time study, we discovered the following shortcoming of ChengEppsteinSelect. After the median selection, one has to determine for how many parties i the closest \(a_{i,j}\) to \(\xi \) yields exactly the new upper bound u; all but one of these have to be excluded and their number must be known precisely (see the computation of m in Algorithm 2 of [5]). This comes down to testing whether \(d_j/v_i = d_{j'}/v_{i'}\) for various values of \(i,j,i',j'\); with a naive implementation based on floating-point arithmetic, this cannot be done reliably. The situation is aggravated by the fact that such an implementation can return incorrect results without any obvious signs of failure.

To circumvent this issue, one can either work with exact (rational) arithmetic, which slows down comparisons during median selection considerably, or keep a mapping from quotients \(a_{i,j}\) back to party i to check \(d_j/v_i = d_{j'}/v_{i'}\) by testing \(d_j v_{i'} = d_{j'} v_{i}\). The latter requires additional space and slows down swaps during median selection. We are not aware of a fully satisfactory solution to this issue.

3.2 Our algorithm

Our algorithm relies on explicitly constructing a small “slice” \(\mathcal {A}\cap [{\underline{a}}, {\overline{a}}]\) that contains \(a^*\); we can then directly apply a rank-selection algorithm on this slice. We delay any detailed justifications to Sect. 3.3 and first state our algorithm. An application of the algorithm to an exemplary input is given in Table 2.

Recall that we assume a fixed apportionment scheme with a nearly-arithmetic divisor sequence, i. e., \(\alpha j + {\underline{\beta }} \le d_j \le \alpha j+{\overline{\beta }}\) (Definition 1).

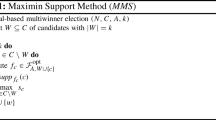

Algorithm 1

\(\textsc {SandwichSelect}_d(\varvec{\textbf{v}}, k)\) :

- Step 1:

-

Compute \( {\underline{a}}\, {:}{=}\, \max \bigl \{0, \alpha \frac{k}{V} - (\alpha -{\underline{\beta }}) \frac{n}{V} \bigr \}\) and \( {\overline{a}} \,{:}{=}\, \alpha \frac{k}{V}+{\overline{\beta }} \frac{n}{V}\).

- Step 2:

-

Initialize \(\hat{\mathcal {A}}\, {:}{=}\, \emptyset \) and \({\hat{k}}\, {:}{=}\,k\).

- Step 3:

-

For \(i = 1,\ldots ,n\) do:

- Step 3.1:

-

Compute \({\underline{j}}\, {:}{=}\, \max \bigl \{0, \bigl \lceil \delta ^{-1}( v_i\cdot {\underline{a}} ) \bigr \rceil \bigr \}\) and \({\overline{j}} \,{:}{=}\, \lfloor \delta ^{-1}(v_i \cdot {\overline{a}}) \rfloor \).

- Step 3.2:

-

For all \(j={\underline{j}},\ldots ,{\overline{j}}\), add \(d_j / v_i\) to \(\hat{\mathcal {A}}\).

- Step 3.3:

-

Update \({\hat{k}} \,{:}{=}\, {\hat{k}} - {\underline{j}}\).

- Step 4:

-

Select and return the \({\hat{k}}\)th smallest element of \(\hat{\mathcal {A}}\).

The intuition behind bounds \([{\underline{a}}, {\overline{a}}]\) on \(a^*\) is to investigate the rank of \(a^*\) in the multiset \(\mathcal {A}\) of all candidates. Since any number between one and n parties can tie for the last seat, all we can say a priori is that \( k \mathrel {\;\;{\le }\;\;}r(a^*) \mathrel {\;\;{\le }\;\;}k + n \). We thus make an ansatz with \(r({\overline{a}}) \ge k + n\) and \(r({\underline{a}}) < k\), and try solve for \({\overline{a}}\) and \({\underline{a}}\). While it seems not possible to do this exactly, we can obtain sufficiently tight bounds for nearly-arithmetic divisor sequences to guarantee \(|\hat{\mathcal {A}}| = O (n)\).

Remark 2

(Numerical stability) We note that all arithmetic computations in SandwichSelect can safely be implemented with imprecise floating-point arithmetic when rounding conservatively, i. e., rounding towards \(-\infty \) for \({\underline{a}}\) and \({\underline{j}}\), resp. towards \(+\infty \) for \({\overline{a}}\) and \({\overline{j}}\). Round-off errors may imply a minor slow-down (by making \(\hat{\mathcal {A}}\) slightly larger than necessary), but they do not affect correctness since we use the same value \({\underline{j}}\) for filling \(\hat{\mathcal {A}}\) and for adjusting \({\hat{k}}\).

Remark 3

(Avoid evaluation of \(\llbracket \cdot \rrbracket \)) Functions \(\delta ^{-1}\) resp. \(\llbracket \cdot \rrbracket \) might be expensive to evaluate in general. We can replace Step 3.1 by \( {\underline{j}} {:}{=}\max \{ 0,\lceil \nicefrac {(v_i {\underline{a}} - {\overline{\beta }})}{\alpha }\rceil \} \) and \( {\overline{j}} {:}{=}\lfloor \nicefrac {(v_i {\overline{a}} - {\underline{\beta }})}{\alpha }\rfloor \). This may make \(\hat{\mathcal {A}}\) slightly larger, but our upper bound from Lemma 4 on \(|\hat{\mathcal {A}}|\) still applies (cf. Eq. (8)). Thus SandwichSelect can run without ever evaluating a rank function or computing an inverse of the divisor sequence. Although this may not be a serious concern for the divisor sequences used in applications, it is unclear whether ChengEppsteinSelect can similarly avoid evaluating rank functions precisely.

Remark 4

(Relation to envy-free stick-division) SandwichSelect is based on a generalization of our solution for the envy-free stick-division problem [12], a task that arose as a subproblem in a cake-cutting protocol [13]. Given n sticks of lengths \(L_1,\ldots ,L_n\) and an integer k, the task is to find the longest length \(\ell \) so that we can cut k sticks of length exactly \(\ell \) from the given sticks (without gluing pieces together); this is essentially equivalent to apportionment with \(d_j = j+1\).

3.3 Proof of main result

Towards proving Theorem 1, we first establish a few intermediate results. We will indeed prove the slightly stronger statement that SandwichSelect correctly computes \(a^*\) using \(O (n)\) arithmetic operations for any nearly-arithmetic divisor sequence, not just signpost sequences. We point out that the running time of SandwichSelect is thus independent of k, even when k grows much faster than n. The proofs are elementary, but require care to correctly deal with ties and boundary cases, so we give detailed calculations.

We start by expressing the rank function r(x) in terms of \(\delta ^{-1}\).

Lemma 2

(Rank via continuation) The rank function \(r(x,\mathcal {A})\) satisfies

Proof

By Eq. (3) on p. 7, it suffices to show that

for each \(i \in \{1, \dots , n\}\). By Lemma 1, \(r(y,\{d_0,d_1,\ldots \}) = \lfloor \delta ^{-1}(y)\rfloor +1\) for all \(y\in {\mathbb {R}}\). Since \(x\le d_j/v_i\) if and only if \(y = x v_i \le d_j\), it follows that \(r(x,A_i) = r(x v_i,\{d_0,d_1,\ldots \}) = \lfloor \delta ^{-1}(v_i x) \rfloor + 1\). \(\square \)

Next, we show simple sufficient conditions for bounds \([{\underline{a}}, {\overline{a}}]\) to contain our target multiplier \(a^*\).

Lemma 3

(Valid slices) If \({\overline{a}}\) and \({\underline{a}}\) are chosen so that they fulfill

then \({\underline{a}} \le a^* \le {\overline{a}}\).

Proof

As a direct consequence of Lemma 2 together with the fundamental bounds \(y-1 < \lfloor y \rfloor \le y\) on floors, we find that

for any x. We now first show that any \(a<{\underline{a}}\) is infeasible. There are two cases: if there is a \(v_i\), such that \(v_i a \ge d_0\), we get by strict monotonicity of \(\delta ^{-1}\)

and a is infeasible. If otherwise \(v_i a < d_0\) for all i, a must clearly have rank \(r(a) = 0\) as it is smaller than any element \(a_{i,j}\in \mathcal {A}\).

In both cases we found that \(a<{\underline{a}}\) has rank \(r(a) < k\).

It remains to show that \(a^* \le {\overline{a}}\). By assumption we have

so \(|\mathcal {A} \cap (-\infty ,{\overline{a}}]| > k\). Hence \(a^* = \mathcal {A}_{(k)} \in \mathcal {A} \cap (-\infty ,{\overline{a}}]\) and the claim \(a^*\le {\overline{a}}\) follows. \(\square \)

The next lemma shows how to compute explicit bounds for \(a^*\) for nearly-arithmetic divisor sequences.

Lemma 4

(Sandwich bounds) Assume the continuation \(\delta \) of divisor sequence d fulfills

for all \(x \in {\mathbb {R}}_{\ge 0}\) with \(\alpha > 0\), \({\underline{\beta }} \in [0, \alpha ]\) and \({\overline{\beta }} \ge 0\). Then, the pair \(({\underline{a}}, {\overline{a}})\) defined by

fulfills the conditions of Lemma 3, that is \({\underline{a}} \le a^* \le {\overline{a}}\). Moreover,

Proof

We consider the linear divisor sequence continuations

for all \(j\in {\mathbb {R}}_{\ge 0}\) and start by noting that the inverses are

for \(x\ge {\underline{\delta }}(0) = {\underline{\beta }}\) and \(x\ge {\overline{\delta }}(0) = {\overline{\beta }}\), respectively. For smaller x, we are free to choose the value of the continuation from \([-1,0)\) (cf. (D4)); noting that \(\nicefrac {x}{\alpha } - \nicefrac {{\overline{\beta }}}{\alpha } < 0\) for \(x < {\overline{\beta }}\), a choice that will turn out convenient is

We state the following simple property for reference; it follows from \({\underline{\delta }}(j) \le \delta (j) \le {\overline{\delta }}(j)\) and the definition of the inverses (recall that \({\underline{\beta }} \le \alpha \)):

Equipped with these preliminaries, we compute

so \({\overline{a}}\) satisfies the condition of Lemma 3. Similarly, we find

that is \({\underline{a}}\) also fulfills the conditions of Lemma 3.

For the bound on the number of elements falling between \({\underline{a}}\) and \({\overline{a}}\), we compute

\(\square \)

We are now in the position to prove our main result.

Proof

(Theorem 1) We construct the multiset \(\hat{\mathcal {A}} \subseteq \mathcal {A}\) as the subsequent union of \(A_i \cap [{\underline{a}}, {\overline{a}}]\), that is

By Lemma 4, we know that \({\underline{a}} \le a^* \le {\overline{a}}\) for the bounds computed in Step 1, so we get in particular that \(a^* \in \hat{\mathcal {A}}\). It remains to show that we calculate \({\hat{k}}\) correctly. Clearly, we discard with \((a_{i,0}, \ldots , a_{i,{\underline{j}} - 1})\) exactly \(\underline{j}\) elements in Step 3.2, that is \(|A_i \cap (-\infty ,{\underline{a}})| = \underline{j}\). Therefore, we compute with

the correct rank of \(a^*\) in \(\hat{\mathcal {A}}\).

For the running time, we observe that the computations in Step 1 and Step 2 are easily done with \(O (n)\) primitive instructions. The loop in Step 3 and therewith Step 3.1 and Step 3.3 are executed n times. The overall number of set operations in Step 3.2 is \(|\hat{\mathcal {A}}| = O (n)\) (cf. Lemma 4). Finally, Step 4 runs in time \(O (|\hat{\mathcal {A}}|) \subseteq O (n)\) when using a (worst-case) linear-time rank selection algorithm (e. g., the median-of-medians algorithm [4]). \(\square \)

4 Comparison of algorithms

We now report from an extensive empirical comparison of all algorithms for divisor methods of apportionment that we found reported in the literature. A more complete discussion of our results is given in our technical report [11]; all source codes are available on GitHub [10]. For the reader’s convenience, we first briefly summarize the algorithms that have not yet been introduced in this article.

4.1 Iterative methods

A naive implementation of the iterative apportionment method (Sect. 2.3) takes time \(\varTheta (kn)\); using a priority queue, this can be sped up to \(O (k \log n)\).

Pukelsheim [9] notes that the above iterative method can be vastly improved in many instances by starting from a more intelligently chosen initial value for \(\varvec{\textbf{s}}\). His jump-and-step algorithm [9, Sect. 4.6] can be formulated using our notation as follows:

Algorithm 2

\(\textsc {JumpAndStep}_d(\varvec{\textbf{v}}, k)\) :

- Step 1:

-

Compute an estimate a for \(a^*\); the exact value depends on d:

- (a):

-

If d is a stationary signpost sequence, i. e., \(d_j = \alpha j+\beta \) and \(\nicefrac {\beta }{\alpha } \in [0,1]\), then set \(a {:}{=}\frac{\alpha }{V} \bigl (k + n \cdot (\nicefrac {\beta }{\alpha } - \nicefrac {1}{2}) \bigr )\).

- (b):

-

If d is a stationary jumppoint sequence, i. e.,

\(d_j = \alpha j+\beta \) but \(\nicefrac {\beta }{\alpha } \notin [0,1]\) then set \(a {:}{=}\frac{\alpha }{V} \bigl (k + n \cdot \lfloor \nicefrac {\beta }{\alpha } \rfloor \bigr )\).

- (c):

-

Otherwise set \(a{:}{=}\frac{\alpha k}{V}\).

- Step 2:

-

Initialize \(s_i = \lfloor \delta ^{-1}(v_i \cdot a) \rfloor + 1\) for \(i=1,\ldots ,n\).

- Step 3:

-

While \(\sum s_i \ne k\)

- Step 3.1:

-

If \(\sum s_i < k\) set \(I {:}{=}\arg \max _{i=1}^n v_i/d_{s_i}\) and \(s_I{:}{=}s_I+1\);

else set \(I {:}{=}\arg \min _{i=1}^n v_i/d_{s_i}\) and \(s_I{:}{=}s_I-1\).

The performance of JumpAndStep clearly depends on the initial distance from the house size, \(\varDelta _a {:}{=}\bigl (\sum _{i=1}^n \lfloor \delta ^{-1}(v_i \cdot a) \rfloor + 1\bigr ) - k\): the running time is in \(\varTheta (n + |\varDelta _a| \cdot \log n)\) when using priority queues for Step 3.1. With initial estimate \(a = k/V\), we have \(\varDelta _a \le n\) for any signpost sequence [6, Prop. 1], yielding an \(O (n \log n)\) method overall. We multiply by \(\alpha \) in the formula for a to account for nearly arithmetic sequences that are not signposts. Slight improvements to \(|\varDelta _a| \le n/2\) are possible for stationary signpost sequences, \(d_j = j + \beta \) with \(\beta \in [0,1]\), using \(a = (k+n(\beta -\frac{1}{2}))/V\) [9, Sect. 6.1]; this corresponds to the case (a) in Step 1. This bound for \(\varDelta _a\) is best possible for the worst case [9, Chap. 6]; it is therefore not possible to obtain a worst-case linear-time algorithm based on JumpAndStep.

4.2 Running time comparison

We have implemented all discussed algorithms in Java and conducted a running-time study to compare the practical efficiency of the methods. We use artificial instances; for a given number of parties n, house size k and divisor sequence, we draw multiple vote vectors \(\varvec{\textbf{v}}\) at random according to different distributions. We fix k to a multiple of n and consider arithmetic divisor sequences of the form \((\alpha j + \beta )_{j \in {\mathbb {N}}_0}\).

We focus on two scenarios here: one resembling current political applications and one exhibiting the worst-case behavior of JumpAndStep; see our technical report [11] for a more comprehensive evaluation. We note that in the context of democratic elections, the effort of tallying up the votes likely dwarfs the effort spent for apportioning afterwards; similarly for census data. However, the algorithmic tasks solved in this context are fundamental optimization problems interesting in their own right. We therefore do not want to limit ourselves to the characteristics of specific current applications.

The implementation of ChengEppsteinSelect posed the complication mentioned in Remark 1. To not unduly slow it down in our running time study, we adopted a fast ad-hoc solution of adding a small constant \(\epsilon \) before computing floors of floating-point numbers. We could manually determine a suitable \(\epsilon \) for our benchmark inputs, but we point out that this approach will in general lead to incorrect results (if vote counts are very close).

This figure shows average running times on a logarithmic scale for SandwichSelect

Figure 1 shows the results of two experiments with practical parameter choices. It is clear that JumpAndStep dominates the field, although SandwichSelect comes close. All other algorithms are substantially slower. As shown in our report [11], these observations are stable across many parameter choices. It is worth noting that for small instances, the priority-queue based implementations are slower than the sequential-search based implementations of the iterative method. This is likely due to the initialization overhead for the priority queue.

4.3 Super-linear worst case for JumpAndStep

While JumpAndStep outperforms SandwichSelect for parameters modeling realistic political scenarios—where its initial jump brings it close to the desired house size—in other configurations, it clearly exhibits superlinear behavior; Fig. 2 shows such a scenario. Although the sizes are beyond current political applications, for sufficiently large n this makes JumpAndStep slower than SandwichSelect.

The left plot shows normalized running times of SandwichSelect

Our report [11] further shows that SandwichSelect has much smaller variance in running times compared to JumpAndStep, both when varying the individual vote vectors and the used divisor sequences.

In summary, we see that, despite its \(\omega (n)\) worst case, JumpAndStep is very fast for many scenarios, and is the best choice for small inputs. SandwichSelect allows for a robust implementation and provides reliable performance across all tested scenarios, independent of divisor sequence and vote distributions; for large instances of the apportionment optimization problem, it is the fastest choice available.

5 Conclusion

We have shown that divisor methods of apportionment can be implemented by a simple and numerically stable algorithm, SandwichSelect, that achieves the optimal linear complexity even in the worst case; the same algorithm works for any rounding rule. The algorithm is simple to state and implement, but its efficiency derives from a close study of the structure of the problem. This concludes the quest for a robust and worst-case efficient implementation of divisor methods.

A closely related area where this quest has not conclusively been achieved is bi-proportional apportionment (double proportionality) [1, 2, 9]. We leave the question whether new insights from the one-dimensional version can be put to good use in the two-dimensional variant for future work.

Notes

Note that [9] additionally requires that signpost sequences do not touch both endpoints of the interval \([j,j+1]\) (“left-right disjunction”). Our conditions (D1) to (D4) already imply this property.

References

Balinski, M.L., Demange, G.: Algorithms for proportional matrices in reals and integers. Math. Program. 45(1–3), 193–210 (1989)

Balinski, M.L., Demange, G.: An axiomatic approach to proportionality between matrices. Math. Oper. Res. 14(4), 700–719 (1989)

Balinski, M.L., Young, H.P.: Fair Representation, 2nd edn. Brookings Institution Press, Washington, D.C. (2001)

Blum, M., Floyd, R.W., Pratt, V., Rivest, R.L., Tarjan, R.E.: Time bounds for selection. J. Comput. Syst. Sci. 7(4), 448–461 (1973)

Cheng, Z., Eppstein, D.: Linear-time algorithms for proportional apportionment. In: International Symposium on Algorithms and Computation (ISAAC) 2014. Springer, Berlin (2014)

Dorfleitner, G., Klein, T.: Rounding with multiplier methods: an efficient algorithm and applications in statistics. Stat. Pap. 40(2), 143–157 (1999)

Flajolet, P., Sedgewick, R.: Analytic Combinatorics. Cambridge University Press, Cambridge (2009)

Graham, R.L., Knuth, D.E., Patashnik, O.: Concrete Mathematics: A Foundation for Computer Science. Addison-Wesley, Boston (1994)

Pukelsheim, F.: Proportional Representation, 1st edn. Springer, Berlin (2014)

Reitzig, R., Wild, S.: Companion source code (2015). https://github.com/reitzig/2015_apportionment, revision db43ee7f05

Reitzig, R., Wild, S.: A practical and worst-case efficient algorithm for divisor methods of apportionment (2017). arXiv:1504.06475

Reitzig, R., Wild, S.: Building fences straight and high: an optimal algorithm for finding the maximum length you can cut \(k\) times from given sticks. Algorithmica 80(11), 3365–3396 (2018)

Segal-Halevi, E., Hassidim, A., Aumann, Y.: Waste makes haste: bounded time algorithms for envy-free cake cutting with free disposal. ACM Trans. Algorithms 13(1), 1–32 (2016)

Zachariasen, M.: Algorithmic aspects of divisor-based biproportional rounding. Technical Report 06/05, University of Copenhagen (2006)

Acknowledgements

We thank Chao Xu for pointing us towards the work by [5] and noting that the problem of envy-free stick-division [12] is related to proportional apportionment as discussed there. He also observed that our approach for cutting sticks—the core ideas of which turned out to carry over to this article—could be improved to run in linear time. Furthermore, we owe thanks to an anonymous reviewer whose constructive feedback sparked broad changes which have greatly improved the article over its previous incarnations.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The majority of this research was done while both authors were at University of Kaiserslautern.

Notation index

Notation index

In this section, we collect the notation used in this paper.

1.1 Generic mathematical notation

- \(\lfloor x\rfloor \), \(\lceil x\rceil \):

-

floor and ceiling functions, as used in [8].

- \(\llbracket \cdot \rrbracket \):

-

used rounding rule; see Sect. 2.2

:

:-

set-valued floor;

if \(x\notin {\mathbb {N}}\) and

if \(x\notin {\mathbb {N}}\) and  for \(n\in {\mathbb {N}}\).

for \(n\in {\mathbb {N}}\). - \(O (f(n))\), \(\varOmega \), \(\varTheta \), \(\sim \):

-

asymptotic notation as defined, e. g., in [7, Sect. A.2].

- \(M_{(k)}\):

-

The kth smallest element of (multi)set/vector M (assuming it exists); if the elements of M can be written in non-decreasing order, M is given by \(M_{(1)} \le M_{(2)} \le M_{(3)} \le \cdots \).

- \(\varvec{\textbf{x}} = (x_1,\ldots ,x_d)\):

-

to emphasize that \(\varvec{\textbf{x}}\) is a vector, it is written in bold

- \(\mathcal {M}\):

-

to emphasize that \(\mathcal {M}\) is a multiset, it is written in calligraphic type.

- \(\mathcal {M}_1 \uplus \mathcal {M}_2\):

-

multiset union; multiplicities add up.

1.2 Notation specific to the problem

- party, seat, vote (count), house size:

-

Parties are assigned seats (in parliament), so that the number of seats \(s_i\) that party i is assigned is (roughly) proportional to that party’s vote count \(v_i\) and the overall number of assigned seats equals the house size k.

- \(d = (d_j)_{j=0}^\infty \):

-

the divisor sequence used in the highest averages method; d must be a nonnegative, (strictly) increasing and unbounded sequence.

- \(\delta \), \(\delta ^{-1}\):

-

a continuation of \(j \mapsto d_j\) on the reals and its inverse.

- n:

-

number of parties in the input.

- \(\varvec{\textbf{v}}\), \(v_i\):

-

\(\varvec{\textbf{v}} = (v_1,\ldots ,v_n) \in {\mathbb {Q}}_{>0}^n\), vote counts of the parties in the input.

- V:

-

the sum \(v_1 + \cdots + v_n\) of all vote counts.

- k:

-

\(k \in {\mathbb {N}}\), the number of seats to be assigned; also called house size.

- \(\varvec{\textbf{s}}\), \(s_i\):

-

\(\varvec{\textbf{s}} = (s_1,\ldots ,s_n) \in {\mathbb {N}}_0\), the number of seats assigned to the respective parties; the result.

- \(a_{i,j}\):

-

\(a_{i,j} {:}{=}d_j / v_i\), the ratio used to define divisor methods; i is the party, j is the number of seats i has already been assigned.

- \(A_i\):

-

For party i, \(A_i {:}{=}\{ a_{i,0}, a_{i,1}, a_{i,2}, \ldots \}\) is the list of (reciprocals of) party i’s ratios

- a:

-

We use a as a free variable when an arbitrary \(a_{i,j}\) is meant.

- \({\mathcal {A}}\):

-

\({\mathcal {A}} {:}{=}A_1 \uplus \cdots \uplus A_n\) is the multiset of all averages.

- \(r(x, {\mathcal {A}})\):

-

the rank of x in \({\mathcal {A}}\), that is the number of elements in multiset \(\mathcal {A}\) that are no larger than x; r(x) for short if \(\mathcal {A}\) is clear from context.

- \(a^*\):

-

the ratio \(a^* = a_{i^*\!\!,j^*}\) selected for assigning the last, i. e., kth seat.

- \({\underline{a}}\), \({\overline{a}}\):

-

lower and upper bounds on candidates \({\underline{a}} \le a\le {\overline{a}}\) such that still \(a^* \in {\mathcal {A}} \cap [{\underline{a}}, {\overline{a}}]\).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Reitzig, R., Wild, S. A simple and fast linear-time algorithm for divisor methods of apportionment. Math. Program. 203, 187–205 (2024). https://doi.org/10.1007/s10107-023-01929-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-023-01929-5

Keywords

- Proportional apportionment

- Selection algorithms

- Divisor methods

- d’Hondt method

- Fair division

- Rounding percentages

:

: if

if  for

for