Abstract

Genetic association studies using case–control designs are often done to identify loci associated with disease susceptibility. These studies are often expensive to perform because of the large number of genetic markers involved. Several types of two-stage designs have been proposed and used to improve cost-effectiveness. We propose controlling the false discovery rate for multiple-testing correction in two-stage designs, using sample sizes and marker selection criteria in each stage that are optimized to minimize the cost of genotyping. The expected power and cost of two-stage designs were compared with those of one-stage designs, under the assumptions that the genetic markers are independent and the total sample size is fixed. The results showed that the proposed two-stage procedure usually reduced the cost of genotyping by 40–60%, with a power similar to that of the one-stage designs. In addition, the sample size and selection criteria, which are the optimized parameters, are defined as functions of a prior probability that a marker–disease association is true. The effects of mis-specification of this prior probability on efficiency were therefore also considered.

Introduction

Common diseases are considered to be caused by numerous genetic and environmental factors, and many studies have been done on the association between disease and genetic markers. Association studies are expected to be more powerful than linkage studies to detect alleles that confer modest risk to common diseases (Risch and Merikangas 1996), and common and modest-risk alleles are thought to be more important in public health (Carlson et al. 2004). Population-based association studies using case–control designs have become widespread in an attempt to identify genes related to common diseases. Previously, associations between disease and genetic factors targeted several markers within a few candidate genes. Recent advances in high-volume genotyping technology have made it possible to scan whole genomes using hundreds of thousands of single nucleotide polymorphisms (SNPs). Whereas these large-scale studies have many advantages to detect causal genes, the critical issues for efficiency remain, and such studies require stepwise screening processes to increase efficiency in both single and multiple studies (van den Oord and Sullivan 2003; Hirschhorn and Daly 2005; Thomas et al. 2005). In this study we focused on the second screening studies with several hundreds to thousands of sparse markers after the first screening of the whole genome or the selection of tag SNPs to eliminate redundancy in the information provided by the SNPs.

Generally, the disease-associated markers can be identified by hypothesis testing, and efficiency is assessed in terms of power (true positives), sample size, false positives, and cost. Because variants that contribute to common disease have modest effects, large sample sizes are needed. Additionally, significance criteria are stringent because of multiple-testing correction, which further increases the required sample size. Consequently, these studies are expensive (Botstein and Risch 2003).

In genetic epidemiology studies many multi-stage designs have been suggested and applied to reduce time and cost on average (Böddeker and Ziegler 2001). Most of these designs are two-stage. One of these approaches is to increase sample size in subsequent stages for more promising markers. This approach in two-stage design evaluates all markers from a subset of individuals in the first stage, while the most promising markers selected from the first stage are evaluated in the second stage, using additional individuals (Satagopan et al. 2002, 2004; Satagopan and Elston 2003). These studies show that use of the two-stage approach as a sampling strategy for marker selections is more cost-effective than the one-stage approach. However, in addition to the issue of cost, other problems arise from multiple testing, because a large number of markers is tested simultaneously. The commonly controlled family-wise error rate (FWER) is the probability of yielding one or more false positives when all hypotheses are null. The most familiar example of this is Bonferroni’s method. Though controlling FWER is a familiar method for multiple comparison problems, what happens in the situation when no marker is associated with disease? Often, studies aim to find a number of interesting features in numerous markers rather than to detect any one marker. The investigators would assume that more than one marker is associated with disease. In this case, FWER confers such strict criteria that many features may be missed. Furthermore, these criteria depend on the number of markers used in the study, making the interpretation of results difficult (Colhoun et al. 2003; Wacholder et al. 2004). Benjamini and Hochberg (1995) introduced a new multiple-testing error measure called the false discovery rate (FDR), which is the expected proportion of falsely rejected null hypotheses among all rejected hypotheses. 
Although a prior probability, which is the proportion of truly associated markers, must be set to use the FDR criteria, the outcome of the tests is easy to interpret. Another advantage is that the efficiency of the subsequent study can be evaluated from the FDR definition. In brief, it is possible to avoid the additional cost of following up markers that are not associated with the disease. In recent years the FDR has been thought to be more appropriate than the FWER for exploratory studies (Colhoun et al. 2003; Sabatti et al. 2003; Wacholder et al. 2004).

Benjamini and Yekutieli (2005) have reported that optimal multi-stage designs can be constructed with the goal of decreasing the total cost, while the study still controls the FDR at a nominal level. Also, Zehetmayer et al. (2005) have optimized the significance level at the first stage and the fraction of the total sample size used in the first stage by controlling the FDR and maximizing the expected power under fixed total costs; they showed that these two-stage designs have higher power than one-stage designs. Such results are valuable for conducting association studies. However, the sample sizes and effect sizes considered in those studies may not be very realistic for an association study.

In practice, the total number of subjects will often be limited. A cohort study in which biological samples have been collected at baseline is an example. In two-stage designs with a total sample size constraint, the cost of the study is affected by the sample sizes and selection criteria used at each stage. Thus, we propose two-stage designs for case–control association studies that control the FDR and optimize the sample sizes and marker selection criteria in each stage, and we compare their power and cost with those of a one-stage design, under the assumption that the markers are independent. With this method we can utilize investigators’ knowledge in the form of a prior probability (assumed to be determined subjectively by the investigators). Consequently, the design for each study allows the flexibility to choose the optimal sample size and selection criteria that fit the actual situation. At the same time, the subjective prior probability affects the efficiency of the study. Therefore, the robustness of the proposed procedure to mis-specification of the prior probability was evaluated.

Materials and methods

Consider evaluating m markers, using a case–control design with a fixed number of subjects (n) available for each group. It is assumed that the m markers are not in linkage disequilibrium (LD) with each other and can be considered independent. Suppose there are D markers associated with disease; it is assumed that these markers are in complete LD with D disease loci or that the markers themselves are the disease-susceptibility polymorphisms. Also, these D markers have the same effect size μ=μ d on disease, which is the target effect size that we want to detect. π 1 is a prior probability that denotes the proportion of truly associated markers among all markers; π 1=D/m. Any typical test statistic, X, for the association of a marker derived from n subjects per group will follow an asymptotic normal distribution N(nμ, nσ 2), in which nμ and nσ 2 are the mean and variance, respectively; μ is zero when there is no association between the disease and the marker. Without loss of generality, it can be assumed that σ 2=1 and that testing is one-sided. In a case–control design the allele (or genotype or haplotype) frequencies in cases and controls are compared at every marker locus. Generally, the odds ratio (OR) is used to measure the association between a disease and a marker. If we focus on the effect of certain genotype(s), the relationship between the OR (θ), the effect size (μ d), and the frequency of the genotype of interest in the controls (p 0) would be:
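The link between the OR and the effect size can be illustrated numerically. The sketch below is an assumption rather than the authors' stated formula: it takes the case frequency implied by the OR, p1 = θp0/(1 − p0 + θp0), and standardizes the difference in proportions by the square root of the summed binomial variances. The function names are illustrative. Under this assumed form, OR = 1.5 with p 0 = 0.3 gives an effect size that rounds to 0.14, in line with the example in the Results.

```python
from math import sqrt


def case_frequency(odds_ratio, p0):
    """Genotype frequency in cases implied by the OR and the control frequency p0."""
    return odds_ratio * p0 / (1.0 - p0 + odds_ratio * p0)


def effect_size(odds_ratio, p0):
    """Per-subject standardized effect size (ASSUMED form: difference in
    proportions divided by the root of the summed binomial variances)."""
    p1 = case_frequency(odds_ratio, p0)
    return (p1 - p0) / sqrt(p1 * (1.0 - p1) + p0 * (1.0 - p0))


mu_d = effect_size(1.5, 0.3)
print(round(mu_d, 2))
```

An OR of 1 gives p1 = p0 and therefore an effect size of zero, which matches the null case described above.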

One-stage procedure

In this situation m markers are evaluated using all 2n subjects, controlling the FDR at a nominal level. Let FDR 1 be the desired FDR level, α the significance level for each marker, and 1−β the power for effect size μ d. FDR 1 is defined as follows:

\[ \mathrm{FDR}_{1} = \frac{\pi_{0}\alpha}{\pi_{0}\alpha + \pi_{1}(1-\beta)} \tag{2} \]

in which π 0=1−π 1. Hence, α can be obtained, given n, μ d, π 1, and FDR 1. The false non-discovery rate (FNR), the expected proportion of falsely accepted null hypotheses among all accepted null hypotheses, can be regarded as another error measure in this study. FNR 1, which is the FNR in this design, is calculated as follows:

\[ \mathrm{FNR}_{1} = \frac{\pi_{1}\beta}{\pi_{0}(1-\alpha) + \pi_{1}\beta} \tag{3} \]

In addition, the cost of genotyping, T 1, can be written with the arbitrary constant k as:

\[ T_{1} = k \cdot 2n \cdot m \tag{4} \]
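As a rough illustration of how α follows from n, μ d, π 1, and FDR 1, the sketch below solves the one-stage FDR definition for α by bisection. It assumes the standardized one-sided test implied by X∼N(nμ, n), so that the power is Φ(√n μ d − Z 1−α); the function names and the bisection bounds are illustrative choices, not part of the original method description.

```python
from math import sqrt
from statistics import NormalDist

STD = NormalDist()  # standard normal distribution


def one_stage_power(alpha, n, mu_d):
    """Power of a one-sided test at level alpha with n subjects per group."""
    return STD.cdf(sqrt(n) * mu_d - STD.inv_cdf(1.0 - alpha))


def one_stage_fdr(alpha, n, mu_d, pi1):
    """FDR implied by testing every marker at level alpha."""
    false_pos = (1.0 - pi1) * alpha
    true_pos = pi1 * one_stage_power(alpha, n, mu_d)
    return false_pos / (false_pos + true_pos)


def one_stage_fnr(alpha, n, mu_d, pi1):
    """FNR: truly associated markers among all non-rejected markers."""
    missed = pi1 * (1.0 - one_stage_power(alpha, n, mu_d))
    return missed / ((1.0 - pi1) * (1.0 - alpha) + missed)


def solve_alpha(n, mu_d, pi1, fdr_target, iterations=100):
    """Bisection for the alpha attaining fdr_target.

    Assumes the FDR increases with alpha and that the target lies between
    the FDR at alpha = 1e-12 and the FDR at alpha = 0.5.
    """
    low, high = 1e-12, 0.5
    for _ in range(iterations):
        mid = 0.5 * (low + high)
        if one_stage_fdr(mid, n, mu_d, pi1) < fdr_target:
            low = mid
        else:
            high = mid
    return 0.5 * (low + high)


alpha = solve_alpha(n=1000, mu_d=0.14, pi1=0.01, fdr_target=0.05)
print(alpha, one_stage_power(alpha, 1000, 0.14), one_stage_fnr(alpha, 1000, 0.14, 0.01))
```

Because the FDR criterion involves only π 1 and the per-marker error rates, the number of markers m does not enter this calculation.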

Proposed two-stage procedure

In the first stage, all m markers are evaluated using n 1 subjects for each group, and m′ (<m) markers are selected by testing at a significance level of α 1. The proportion of selected markers is denoted by s′ (=m′/m). In the second stage n 2 subjects for each group are added, thereby giving a total of n (=n 1+n 2) subjects per group. The m′ markers are tested at a significance level of α 2 using all 2n subjects. The markers that are significant in the second stage are identified as disease-associated markers. In the proposed two-stage designs, there are three design parameters: (1) n 1, sample size for each group in the first stage; (2) α 1, significance level (i.e., the criterion for selecting markers) in the first stage; and (3) α 2, significance level in the second stage. These parameters (n 1, α 1, α 2) are chosen to control the FDR at a predetermined nominal level.

(X 1, X 2) denote the test statistics of a marker in the first and second stages, as X 1∼N(n 1μ, n 1), X 2∼N(nμ, n). Because cases and controls are unrelated individuals, the test statistics follow the Markov property, i.e., X 2−X 1 is independent of X 1. Therefore, the statistics pair (X 1, X 2) has bivariate normal distribution with a mean (n 1 μ, nμ) and covariance matrix Σ, in which \(\Sigma ={\left[ {\begin{array}{*{20}c} {{n_{1} }} & {{n_{1} }} \\ {{n_{1} }}& {n} \\ \end{array} } \right]}.\)

In the first stage, the FDR is given by the following:

\[ \mathrm{FDR}_{2}' = \frac{\pi_{0}\alpha_{1}}{\pi_{0}\alpha_{1} + \pi_{1}(1-\beta_{1})} \tag{5} \]

in which 1−β 1 denotes the first-stage power for the effect size μ d. The expected proportion of the significant markers in the first stage, E[s′], is given by the following:

\[ E[s'] = \pi_{0}\alpha_{1} + \pi_{1}(1-\beta_{1}) \tag{6} \]

Now consider the second stage. Let T i and Γ i denote the statistics and the rejection regions at the ith stage, respectively. The FDR for the two-stage approach is represented below:

\[ \mathrm{FDR} = \frac{\pi_{0}\Pr_{H=0}(T_{1}\in\Gamma_{1},\, T_{2}\in\Gamma_{2})}{\pi_{0}\Pr_{H=0}(T_{1}\in\Gamma_{1},\, T_{2}\in\Gamma_{2}) + \pi_{1}\Pr_{H=1}(T_{1}\in\Gamma_{1},\, T_{2}\in\Gamma_{2})} \tag{7} \]

in which PrH=0 and PrH=1 denote the probabilities under the null hypothesis and the alternative. Hence, this can be obtained from two probabilities: one is the overall α error, or the probability that a true null marker is selected in both the first and second stages, and the other is the overall power, or the probability that a truly associated marker is selected in both the first and second stages. In this case, P μ=μ denotes the probability that a marker with effect size μ is rejected in the first stage and also in the second stage, and the overall α error and the overall power for effect size μ d can be represented by P μ=0 and \(P_{{\mu = \mu_{{_{d}}}}},\) respectively. Note that this overall power, \(P_{{\mu=\mu_{d}}},\) can be regarded as the expected proportion of rejected markers among all truly associated markers, because it is assumed that truly associated markers have the same effect size, μ d. We therefore call \(P_{{\mu = \mu _{{_{d}}}}}\) the expected power. Let Φ (.) and ϕ (.) denote the cumulative distribution function and the probability density function of a standard normal distribution. Z a denotes the 100×ath percentile of a standard normal distribution. P μ=μ is given by the following:

\[ P_{\mu=\mu} = \int_{Z_{1-\alpha_{1}} - \sqrt{n_{1}}\,\mu}^{\infty} \left[ 1 - \Phi\!\left( \frac{\sqrt{n}\,Z_{1-\alpha_{2}} - \sqrt{n_{1}}\,z - n\mu}{\sqrt{n-n_{1}}} \right) \right] \phi(z)\,dz \tag{8} \]

The FDR after the second stage can be written, using P μ=0 and \(P_{{\mu = \mu _{{_{d}}}}}\), as follows:

\[ \mathrm{FDR}_{2} = \frac{\pi_{0}P_{\mu=0}}{\pi_{0}P_{\mu=0} + \pi_{1}P_{\mu=\mu_{d}}} \tag{9} \]

FDR 2 is the expected proportion of non-associated markers among the markers identified by this two-stage procedure. The FNR can also be written as follows:

\[ \mathrm{FNR}_{2} = \frac{\pi_{1}(1-P_{\mu=\mu_{d}})}{\pi_{0}(1-P_{\mu=0}) + \pi_{1}(1-P_{\mu=\mu_{d}})} \tag{10} \]

The expected cost of genotyping, T 2, is given by the following:

\[ T_{2} = k \cdot 2m\,(n_{1} + n_{2}E[s']) \tag{11} \]

The expected cost ratio relative to the one-stage design, F, is written as follows:

\[ F = \frac{T_{2}}{T_{1}} = \frac{n_{1} + n_{2}E[s']}{n} \tag{12} \]
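The quantities defined in this section can be evaluated numerically for a given design. The following sketch is a hedged illustration, not the authors' code: it assumes standardized one-sided rejection rules (X 1/√n 1 > Z 1−α1 and X 2/√n > Z 1−α2), uses the independence of X 2−X 1 and X 1 to write the joint rejection probability as a one-dimensional integral, and approximates that integral with Simpson's rule. Function names, the integration range, and the step count are illustrative choices. The example call uses the design values quoted in the Results for n=1,000 and π 1=0.01.

```python
from math import sqrt
from statistics import NormalDist

STD = NormalDist()  # standard normal distribution


def stage1_power(mu, n1, alpha1):
    """Probability that a marker with effect size mu passes the first stage."""
    return STD.cdf(sqrt(n1) * mu - STD.inv_cdf(1.0 - alpha1))


def joint_rejection(mu, n, n1, alpha1, alpha2, steps=2000):
    """Probability of rejection at both stages (requires 0 < n1 < n)."""
    n2 = n - n1
    c1 = STD.inv_cdf(1.0 - alpha1)
    c2 = STD.inv_cdf(1.0 - alpha2)
    lower = c1 - sqrt(n1) * mu        # stage-1 threshold for the centred statistic
    upper = max(lower, 0.0) + 10.0    # the density is negligible beyond this point

    def integrand(z):
        threshold = (sqrt(n) * c2 - sqrt(n1) * z - n * mu) / sqrt(n2)
        return STD.cdf(-threshold) * STD.pdf(z)  # cdf(-t) equals 1 - cdf(t)

    h = (upper - lower) / steps       # steps must be even for Simpson's rule
    total = integrand(lower) + integrand(upper)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * integrand(lower + i * h)
    return total * h / 3.0


def two_stage_summary(n, n1, alpha1, alpha2, mu_d, pi1):
    """Overall FDR, FNR, expected power and expected cost ratio of a design."""
    pi0 = 1.0 - pi1
    p_null = joint_rejection(0.0, n, n1, alpha1, alpha2)
    p_alt = joint_rejection(mu_d, n, n1, alpha1, alpha2)
    selected = pi0 * alpha1 + pi1 * stage1_power(mu_d, n1, alpha1)
    return {
        "fdr2": pi0 * p_null / (pi0 * p_null + pi1 * p_alt),
        "fnr2": pi1 * (1.0 - p_alt) / (pi0 * (1.0 - p_null) + pi1 * (1.0 - p_alt)),
        "power": p_alt,
        "cost_ratio": (n1 + (n - n1) * selected) / n,
    }


summary = two_stage_summary(n=1000, n1=421, alpha1=0.153, alpha2=0.00048,
                            mu_d=0.14, pi1=0.01)
print(summary)
```

The printed values can be compared with the expected power (approximately 86%) and expected cost ratio (51%) reported for this design in the Results.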

Estimating optimal parameters

The purpose of this study was to minimize the expected cost by optimizing the three unknown design parameters n 1, α 1, and α 2. Given n, π 1, μ d, and FDR 2, the optimal parameters are defined as those that satisfy three conditions: (1) the FDR is controlled at FDR 2; (2) the difference between the power of the two-stage design and the power of the one-stage design is ≤1%; and (3) the expected cost is the minimum among the parameters that satisfy the former two conditions.

Let the values of n, π 1, μ d, and FDR 2 be given. For a fixed value of n 1, α 1 and β 1 are calculated under a given first-stage FDR level, FDR 2′. Using these values, the expected cost ratio can be obtained from Eq. 12, and P μ=μ is expressed as a function of α 2 alone. The value of α 2 is then obtained from Eq. 9 for the predetermined FDR 2, which also gives the expected power, \(P_{{\mu = \mu _{{_{d} }}}}.\) Every point on a grid of values of n 1 and FDR 2′ is searched in this way. The design parameters (n 1, α 1, α 2), expected power, and expected cost are estimated at every grid point; then, the optimal parameters that satisfy the above conditions can be identified.
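The search described above can be sketched as a small grid search. This is a simplified illustration under stated assumptions, not the authors' implementation: the grid is placed directly on α 1 rather than on the first-stage FDR level (an equivalent parametrization for fixed n 1), α 2 is found by bisection assuming the overall FDR increases with α 2, and the integral is approximated by Simpson's rule. The grid values, function names, and tolerances are all illustrative.

```python
from math import sqrt
from statistics import NormalDist

STD = NormalDist()


def joint_rejection(mu, n, n1, alpha1, alpha2, steps=200):
    """Probability of rejection at both stages (Simpson's rule, 0 < n1 < n)."""
    c1, c2 = STD.inv_cdf(1.0 - alpha1), STD.inv_cdf(1.0 - alpha2)
    lower = c1 - sqrt(n1) * mu
    h = (max(lower, 0.0) + 8.0 - lower) / steps

    def f(z):
        t = (sqrt(n) * c2 - sqrt(n1) * z - n * mu) / sqrt(n - n1)
        return STD.cdf(-t) * STD.pdf(z)

    total = f(lower) + f(lower + steps * h)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * f(lower + i * h)
    return total * h / 3.0


def fdr(pi1, p_null, p_alt):
    """FDR from the per-marker false and true rejection probabilities."""
    return (1.0 - pi1) * p_null / ((1.0 - pi1) * p_null + pi1 * p_alt)


def bisect(func, target, low, high, iterations=40):
    """Solve func(x) = target, assuming func is increasing on [low, high]."""
    for _ in range(iterations):
        mid = 0.5 * (low + high)
        if func(mid) < target:
            low = mid
        else:
            high = mid
    return 0.5 * (low + high)


def one_stage_power(n, mu_d, pi1, q):
    """Power of the one-stage design that controls the FDR at q."""
    def power(a):
        return STD.cdf(sqrt(n) * mu_d - STD.inv_cdf(1.0 - a))
    alpha = bisect(lambda a: fdr(pi1, a, power(a)), q, 1e-12, 0.5)
    return power(alpha)


def optimise(n, mu_d, pi1, q, fractions, alpha1_grid, max_power_loss=0.01):
    """Cheapest grid point whose power is within max_power_loss of one-stage."""
    target_power = one_stage_power(n, mu_d, pi1, q) - max_power_loss
    best = None
    for frac in fractions:
        n1 = round(frac * n)
        for alpha1 in alpha1_grid:
            pass1 = STD.cdf(sqrt(n1) * mu_d - STD.inv_cdf(1.0 - alpha1))
            selected = (1.0 - pi1) * alpha1 + pi1 * pass1
            cost = (n1 + (n - n1) * selected) / n
            if best is not None and cost >= best["cost_ratio"]:
                continue  # cannot beat the current best; skip the costly solve
            alpha2 = bisect(
                lambda a2: fdr(pi1,
                               joint_rejection(0.0, n, n1, alpha1, a2),
                               joint_rejection(mu_d, n, n1, alpha1, a2)),
                q, 1e-9, 0.5)
            power = joint_rejection(mu_d, n, n1, alpha1, alpha2)
            if power >= target_power:
                best = {"n1": n1, "alpha1": alpha1, "alpha2": alpha2,
                        "power": power, "cost_ratio": cost}
    return best


best = optimise(1000, 0.14, 0.01, 0.05,
                fractions=[0.3, 0.4, 0.5, 0.6],
                alpha1_grid=[0.1, 0.15, 0.2, 0.3])
print(best)
```

The cost ratio does not depend on α 2, so it is computed first and used to skip grid points that cannot improve on the current best design. A finer grid would be needed to approach the optimal values reported in the tables.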

Evaluating the performance for mis-specification of a prior probability

To perform the study using optimal parameters, investigators have to determine a prior probability, π 1. Because the optimal parameters, expected power, and expected cost all depend on π 1, and the true value is unknown in practice, π 1 must be determined subjectively by investigators. Let π 1* denote the subjective prior probability and π 1 the true one. The FDR is controlled at the nominal level, with the expected power and expected cost achieved, only if π 1* equals π 1. Therefore, we evaluated the performance under mis-specification of the prior probability by estimating the FDR, the expected power, and the expected cost ratio. These estimates were obtained from Eqs. 8, 9, and 12, using the optimal parameters corresponding to π 1* and evaluating them at the true π 1.

Results

Optimal parameters

The optimal parameters were estimated for combinations of n=200, 500, and 1,000 for each group, and π 1= 0.001, 0.002, 0.005, 0.01, 0.02, 0.025, 0.05, and 0.1. In addition, the target effect sizes, μ d, were evaluated from 0.1 to 0.3 at intervals of 0.02. Table 1 shows the ORs that correspond to μ d(= 0.1–0.3) and p 0 (=0.1–0.4). The FDR levels are evaluated for FDR 2= 0.05 and FDR 2=0.1. The optimal parameters, expected power, expected cost ratio, and FNR 2 were calculated. Note that the proposed optimal parameters and these performances depend on π 1 rather than on the number of markers, because of the use of FDR criteria against the multiple-comparison problems.

Examples of the optimal parameters when n=1,000 are shown in Table 2. Suppose that the genotype frequency of interest in the controls is 0.3, the target OR is 1.5, and 1% of all markers used in the study are likely to be associated with the disease. This OR corresponds to μ d=0.14 (Table 1). According to Table 2, in the first stage each marker is tested at a significance level of 0.153 using 421 of the 1,000 subjects per group, and in the second stage the markers selected in the first stage are tested at a significance level of 0.00048, using all 1,000 subjects per group. Table 3 shows the expected proportion of selected markers at each stage, the power and the cost ratio obtained with the optimal parameters, and the power of the one-stage design. In the above situation the expected power was approximately 86%, and the expected cost was 51% of that of the one-stage design; the expected proportion of markers identified as disease-associated was 0.9% of all markers. In Table 3 the number of markers finally selected in the study increased with π 1. In contrast, the number of markers selected in the first stage did not have a monotonic relationship with π 1, because of the restriction on the expected cost. These results show that the proposed two-stage design has power similar to that of the one-stage design and that the expected costs are reduced by 40–60%.

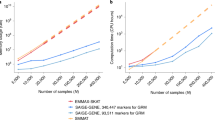

When n and FDR 2 are given, the values of the optimal parameters, expected power, and expected cost ratio vary with μ d and π 1. Figure 1 illustrates the relationships between a prior probability and the optimal parameters, expected power, expected cost ratio, and FNR 2. n 1/n was about 0.4 and α 1 ranged from 0.1 to 0.2 for any prior probability (Fig. 1a). These two parameters influence one another to minimize the expected cost. In contrast, because α 2 is determined by the predetermined FDR 2 and not by the expected cost, α 2 increased monotonically with the prior probability (Fig. 1b). In Fig. 1a the expected power naturally increased with the prior probability, and the expected cost ratio also increased monotonically with the prior probability but converged to approximately 0.55. This is because the expected cost ratio depends on n 1 and α 1 as well as on the prior probability. FNR 2 also increased with the prior probability (Fig. 1b), but even so, FNR 2 remained <5%, which is considered low enough to be acceptable. The tendencies in Fig. 1 were the same, regardless of the effect size and sample size that we set.

Fig. 1 Characteristics of the optimal two-stage design in which n=1,000, FDR 2=0.05, and μ d=0.14. a The relationships between a prior probability and significance level in the first stage (alpha 1), proportion of sample size in the first stage to all sample size (n 1/n), expected power (power) and expected cost ratio (cost). b The relationships between a prior probability and significance level in the second stage (alpha 2) and false non-discovery rate (FNR 2)

Mis-specification of a prior probability

As mentioned earlier, the results are assured only when the subjective prior probability, π 1*, equals the true prior probability, π 1. Therefore, we evaluated the performance under mis-specification of the prior probability. Figure 2 illustrates the results when the optimal parameters for π 1*=0.001, 0.005, 0.01, 0.05 and 0.1 were used and when π 1 is 0.01 (Fig. 2a) and 0.05 (Fig. 2b). These results are for n=1,000, μ d=0.14, and FDR 2=0.05. The vertical and horizontal reference lines in each figure indicate π 1*=π 1 and FDR=0.05, respectively. The optimal parameters for lower π 1* lead to loss of power, because the expected power depends only on the optimal parameters. Although the expected cost ratio and the FDR are related to both π 1 and π 1*, the expected cost depended largely on the optimal parameters for π 1*, regardless of π 1. The main difference between the two figures is the FDR, which was also affected by the degree of mis-specification. When π 1* did not exceed π 1, the FDR was always conservative, i.e., controlled at or below the nominal level. Conversely, the FDR was inflated when π 1* was overestimated and increased nearly linearly with π 1*.
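The direction and approximate size of this effect follow from the two-stage FDR definition alone. If a design attains the nominal FDR exactly when π 1=π 1*, the ratio of the overall α error to the overall power is fixed by the design, and the FDR under the true π 1 can be computed from that ratio. The sketch below works through this algebra; it assumes exact control at π 1* and the function name is illustrative.

```python
def realised_fdr(nominal_fdr, pi1_assumed, pi1_true):
    """FDR under the true prior for a design tuned to the assumed prior.

    Assumes the design attains nominal_fdr exactly when the prior equals
    pi1_assumed, which fixes the ratio of overall alpha error to overall power.
    """
    nominal_odds = nominal_fdr / (1.0 - nominal_fdr)
    # ratio of overall alpha error to overall power implied by the design
    error_ratio = nominal_odds * pi1_assumed / (1.0 - pi1_assumed)
    true_odds = error_ratio * (1.0 - pi1_true) / pi1_true
    return true_odds / (1.0 + true_odds)


for assumed in (0.005, 0.01, 0.02, 0.05):
    print(assumed, realised_fdr(0.05, assumed, pi1_true=0.01))
```

For small prior probabilities the realised FDR is roughly the nominal level multiplied by π 1*/π 1, which is consistent with the nearly linear relationship with π 1* and with the conservative behaviour when π 1* does not exceed π 1.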

Discussion

We proposed optimal sample sizes and criteria to select markers for each stage using the FDR in a two-stage design and then evaluated those performances. Differing from methods in which FWER has been controlled, the FDR approach has an advantage because it can reflect prior information. Therefore, the proposed method allowed us to optimize the designs by utilizing π 1, which is the proportion of truly associated markers to all markers used in the study. This flexible strategy allows each study to be done effectively.

The cost was defined as proportionate to the number of genotypings, that is, sample size × the number of markers. Regardless of choice of the design parameters, which are μ d and π 1, at least a 40% reduction in cost was demonstrated. These results show that costs, in both time and personnel associated with genotyping, can be reduced. In this study we assumed the total sample size was fixed. Future improvements in genotyping technology will reduce the cost of genotyping, and the substantial study costs will then depend on only the sample size. To reduce sample size, some investigators (Sobell et al. 1993; König et al. 2001, 2003) have proposed designs that are similar to group sequential methods with application to clinical trials. Although these procedures are considered to be complicated for practical applications, it would be a challenge to improve efficiency by considering the required sample size.

We examined the problem of multiple testing in association studies. Previously proposed two-stage designs, for example, that of Satagopan and Elston (2003), control the overall α error of the two-stage procedure. Also, Wen et al. (2006) showed the overall α error and FDR when certain fixed design parameters were used, in which only the second-stage significance level was corrected by Bonferroni’s method. Recently, controlling the FDR has been considered to be more relevant for eliminating false positives, especially in exploratory studies. Many measures, estimation methods, and properties have been examined (Benjamini and Hochberg 2000; Efron et al. 2001; Storey and Tibshirani 2001, 2003; Efron and Tibshirani 2002; Genovese and Wasserman 2002; Storey 2002, 2003; Tsai et al. 2003; Black 2004; Fernando et al. 2004; Pounds and Cheng 2004; Storey et al. 2004). In addition to the strategies that control either the FWER or the FDR, another type of two-stage strategy using both FWER and FDR criteria has been proposed. Rosenberg et al. (2005) consider association studies with several SNPs in a number of candidate genes, in which the association between each gene and disease is summarized by a single gene-wise P value that controls the FWER within each gene in the first stage. In the second stage, these gene-wise P values are adjusted to control the FDR to detect particular genes associated with the disease. Accordingly, the range of application of the FDR is expected to become broader.

To control the FDR in our proposed designs, however, π 1 must be decided before the study is performed. Because π 1 affects the performance of the design, it is important to examine its impact on efficiency. The use of an underestimated π 1*, while leading to loss of the expected power, ensured that the FDR was controlled at the nominal level. On the other hand, an overestimated π 1* led to an inflated FDR. However, the expected power increased with π 1*, and the FDR was kept at less than double the nominal level if π 1* ≤2π 1. This tendency would be acceptable for exploratory association studies using a number of markers, because the purpose of such studies is generally to select as many true positives as possible, accepting a few false positives. It will also be useful to examine the degree of the loss of power or the inflation of the FDR or cost over some range of prior probabilities (e.g., Fig. 2). In particular, when π 1 was expected to be very low, the FDR increased sharply with π 1*. For this reason, we consider the proposed procedure to be more appropriate for π 1 ≥0.01. Here, π 1 was determined subjectively by investigators; other proposed methods estimate π 1 from available data, and Hsueh et al. (2003) compared these latter methods. The optimal parameters and performance of the designs will be affected by the method used to estimate π 1. Therefore, an optimization that includes a stage for estimating π 1 might be an issue for the future.

The FNR, which is a measure of the truly associated markers left behind, should also be considered. In this study the FNR was always low because the prior probability was low. It would nevertheless be possible to define the error measure using the FNR together with the FDR and to optimize the design parameters with both controlled.

Finally, we assumed that the markers are not in LD with each other. This is considered reasonable for exploratory studies using spaced markers, in which it would be appropriate to use the FDR. With several recent advances, however, whole-genome association studies using a fixed array of high density SNPs are now feasible. Because there would be LD between these SNPs, the proposed procedure could not guarantee that the FDR would be controlled; in this case, making an informed SNP selection could be important for efficiency. Some work has been done on tag SNP selection, which could also improve efficiency by utilizing the information of LD (Zhang et al. 2002, 2004; Stram et al. 2003; Stram 2004; Thomas et al. 2004). Incorporating these approaches into the optimization of designs is one of the challenges of the future.

References

Benjamini Y, Hochberg Y (1995) Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc B 57:289–300

Benjamini Y, Hochberg Y (2000) On the adaptive control of the false discovery rate in multiple testing with independent statistics. J Educ Behav Stat 25:60–83

Benjamini Y, Yekutieli D (2005) Quantitative trait loci analysis using the false discovery rate. Genetics 171:783–790

Black MA (2004) A note on the adaptive control of false discovery rates. J R Stat Soc B 66:297–304

Böddeker IR, Ziegler A (2001) Sequential designs for genetic epidemiological linkage or association studies. A review of the literature. Biom J 43:501–525

Botstein D, Risch N (2003) Discovering genotypes underlying human phenotypes: past successes for Mendelian disease, future approaches for complex disease. Nat Genet 33:228–237

Carlson CS, Eberle MA, Kruglyak L, Nickerson DA (2004) Mapping complex disease loci in whole-genome association studies. Nature 429:446–452

Colhoun HM, McKeigue PM, Smith GD (2003) Problems of reporting genetic associations with complex outcomes. Lancet 361:865–872

Efron B, Tibshirani R (2002) Empirical Bayes methods and false discovery rates for microarrays. Genet Epidemiol 23:70–86

Efron B, Tibshirani R, Storey JD, Tusher V (2001) Empirical Bayes analysis of a microarray experiment. J Am Stat Assoc 96:1151–1160

Fernando RL, Nettleton D, Southey BR, Dekkers JCM, Rothschild MF, Soller M (2004) Controlling the proportion of false positives in multiple dependent tests. Genetics 166:611–619

Genovese C, Wasserman L (2002) Operating characteristics and extensions of the false discovery rate procedure. J R Stat Soc B 64:499–517

Hirschhorn JN, Daly MJ (2005) Genome-wide association studies for common diseases and complex traits. Nat Rev Genet 6:95–108

Hsueh HM, Chen JJ, Kodell RL (2003) Comparison of methods for estimating the number of true null hypotheses in multiplicity testing. J Biopharm Stat 13:675–689

König IR, Schäfer H, Müller H-H, Ziegler A (2001) Optimized group sequential study designs for tests of genetic linkage and association in complex diseases. Am J Hum Genet 69:590–600

König IR, Schäfer H, Ziegler A, Müller H-H (2003) Reducing sample sizes in genome scans: group sequential study designs with futility stops. Genet Epidemiol 25:339–349

van den Oord EJ, Sullivan PF (2003) False discoveries and models for gene discovery. Trends Genet 19:537–542

Pounds S, Cheng C (2004) Improving false discovery rate estimation. Bioinformatics 20:1737–1745

Risch N, Merikangas K (1996) The future of genetic studies of complex human diseases. Science 273:1516–1517

Rosenberg PS, Che A, Chen BE (2005) Multiple hypothesis testing strategies for genetic case–control association studies. Stat Med 25:3134–3149 [Epub ahead of print]

Sabatti C, Service S, Freimer N (2003) False discovery rate in linkage and association genome screens for complex disorders. Genetics 164:829–833

Satagopan JM, Elston RC (2003) Optimal two-stage genotyping in population-based association studies. Genet Epidemiol 25:149–156

Satagopan JM, Verbel DA, Venkatraman ES, Offit KE, Begg CB (2002) Two-stage designs for gene-disease association studies. Biometrics 58:163–170

Satagopan JM, Venkatraman ES, Begg CB (2004) Two-stage designs for gene-disease association studies with sample size constraints. Biometrics 60:589–597

Sobell JL, Heston LL, Sommer SS (1993) Novel association approach for determining the genetic predisposition to schizophrenia: case–control resource and testing of a candidate gene. Am J Med Genet 48:28–35

Storey JD (2002) A direct approach to false discovery rates. J R Stat Soc B 64:479–498

Storey JD (2003) The positive false discovery rate: a Bayesian interpretation and the q-value. Ann Stat 31:2013–2035

Storey JD, Tibshirani R (2001) Estimating false discovery rate under dependence, with applications to DNA microarrays. Technical report 2001–28, Department of Statistics, Stanford University, Stanford, CA, USA

Storey JD, Tibshirani R (2003) Statistical significance for genome-wide studies. Proc Natl Acad Sci U S A 100:9440–9445

Storey JD, Taylor JE, Siegmund D (2004) Strong control, conservative point estimation, and simultaneous conservative consistency of false discovery rates: a unified approach. J R Stat Soc B 66:187–205

Stram DO (2004) Tag SNP selection for association studies. Genet Epidemiol 27:365–374

Stram DO, Haiman CA, Hirschhorn JN, Altshuler D, Kolonel LN, Henderson BE, Pike MC (2003) Choosing haplotype-tagging SNPs based on unphased genotype data using a preliminary sample of unrelated subjects with an example from the multiethnic cohort study. Hum Hered 55:27–36

Thomas D, Xie R, Gebregziabher M (2004) Two-stage sampling designs for gene association studies. Genet Epidemiol 27:401–414

Thomas DC, Haile RW, Duggan D (2005) Recent developments in genomewide association scans: a workshop summary and review. Am J Hum Genet 77:337–345

Tsai CA, Hsueh HM, Chen JJ (2003) Estimation of false discovery rates in multiple testing: application to gene microarray data. Biometrics 59:1071–1081

Wacholder S, Chanock S, Garcia-Closas M, El Ghormli L, Rothman N (2004) Assessing the probability that a positive report is false: an approach for molecular epidemiology studies. J Natl Cancer Inst 96:434–442

Wen SH, Tzeng JY, Kao JT, Hsiao CK (2006) A two-stage design for multiple testing in large-scale association studies. J Hum Genet 51:523–532

Zehetmayer S, Bauer P, Posch M (2005) Two-stage designs for experiments with a large number of hypotheses. Bioinformatics 21:3771–3777

Zhang K, Deng M, Chen T, Waterman MS, Sun F (2002) A dynamic programming algorithm for haplotype block partitioning. Proc Natl Acad Sci U S A 99:7335–7339

Zhang K, Qin ZS, Liu JS, Chen T, Waterman MS, Sun F (2004) Haplotype block partitioning and tag SNP selection using genotype data and their applications to association studies. Genome Res 14:908–916

Acknowledgments

We thank Dr. Yutaka Matsuyama, Dr. Takuhiro Yamaguchi, and Ms. Yoichi Ito for their helpful discussions.

Additional information

Aya Kuchiba and Noriko Y. Tanaka have contributed equally to this study.

Cite this article

Kuchiba, A., Tanaka, N.Y. & Ohashi, Y. Optimum two-stage designs in case–control association studies using false discovery rate. J Hum Genet 51, 1046–1054 (2006). https://doi.org/10.1007/s10038-006-0057-6

DOI: https://doi.org/10.1007/s10038-006-0057-6
