Abstract

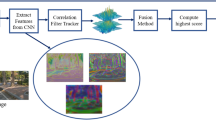

Choosing visual features is a critical step in object tracking. Most existing tracking approaches adopt handcrafted features, which depend heavily on prior knowledge and easily fail when the scene structure differs. In contrast, we learn informative and discriminative features directly from the image data of the tracking scene. Local receptive filters and weight sharing make the convolutional restricted Boltzmann machine (CRBM) well suited to natural images. The CRBM is used to model the distribution of image patches sampled from the first frame, which shares the same properties as the other frames. Each hidden variable, corresponding to one local filter, can be viewed as a feature detector. Local connections to the hidden variables and a max-pooling strategy make the extracted features invariant to shifts and distortions. A simple naive Bayes classifier is used to separate the object from the background in feature space. We demonstrate the effectiveness and robustness of our tracking method on several challenging video sequences. Experimental results show that features automatically learned by the CRBM are effective for object tracking.
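
The pipeline described above (filter responses, max-pooling, then a naive Bayes decision in feature space) can be sketched roughly as follows. This is only an illustration under stated assumptions, not the method as implemented in the article: the filters here are random rather than learned by CRBM training, the naive Bayes model is assumed to be Gaussian with equal class priors, and all names (`hidden_probs`, `max_pool`, `extract_features`, `GaussianNaiveBayes`) as well as the patch, filter, and pooling sizes are hypothetical.

```python
import numpy as np


def hidden_probs(patch, filters, biases):
    """P(h = 1 | v) per filter: sigmoid of a valid cross-correlation plus bias."""
    k = filters.shape[1]
    # All k x k windows of the patch, shape (H-k+1, W-k+1, k, k).
    windows = np.lib.stride_tricks.sliding_window_view(patch, (k, k))
    # Correlate every window with every filter -> (n_filters, H-k+1, W-k+1).
    acts = np.einsum("ijkl,fkl->fij", windows, filters) + biases[:, None, None]
    return 1.0 / (1.0 + np.exp(-acts))


def max_pool(maps, size):
    """Non-overlapping max-pooling over size x size blocks of each feature map."""
    f, h, w = maps.shape
    h, w = h - h % size, w - w % size  # drop any ragged border
    blocks = maps[:, :h, :w].reshape(f, h // size, size, w // size, size)
    return blocks.max(axis=(2, 4))


def extract_features(patch, filters, biases, pool=2):
    """Pooled hidden activations, flattened into one feature vector."""
    return max_pool(hidden_probs(patch, filters, biases), pool).ravel()


class GaussianNaiveBayes:
    """Two-class naive Bayes with independent Gaussian features (an assumption)."""

    def fit(self, pos, neg):
        # Row 0 holds background statistics, row 1 holds object statistics.
        self.mu = np.stack([neg.mean(axis=0), pos.mean(axis=0)])
        self.var = np.stack([neg.var(axis=0), pos.var(axis=0)]) + 1e-6
        return self

    def score(self, x):
        """log p(x | object) - log p(x | background), assuming equal priors."""
        ll = -0.5 * (np.log(2 * np.pi * self.var) + (x - self.mu) ** 2 / self.var)
        ll = ll.sum(axis=1)
        return ll[1] - ll[0]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    filters = rng.normal(size=(4, 3, 3))  # stand-in for learned CRBM filters
    biases = np.zeros(4)
    # Synthetic "object" (bright) and "background" (dark) patches for illustration.
    pos = np.array([extract_features(rng.random((8, 8)) + 1.0, filters, biases)
                    for _ in range(20)])
    neg = np.array([extract_features(rng.random((8, 8)) - 1.0, filters, biases)
                    for _ in range(20)])
    clf = GaussianNaiveBayes().fit(pos, neg)
    candidate = extract_features(rng.random((8, 8)) + 1.0, filters, biases)
    print("object-vs-background score:", clf.score(candidate))
```

In a tracker built along these lines, candidate patches around the previous object location would each be scored, and the patch with the highest score would be taken as the new object position. How candidates are sampled and how the classifier is updated over time are not specified in the abstract and are left out of the sketch.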

Acknowledgments

This work was partially supported by the Open Project Program of the State Key Laboratory of Mathematical Engineering and Advanced Computing Grant 2013A08.

Cite this article

Lei, J., Li, G., Tu, D. et al. Convolutional restricted Boltzmann machines learning for robust visual tracking. Neural Comput & Applic 25, 1383–1391 (2014). https://doi.org/10.1007/s00521-014-1625-x

DOI: https://doi.org/10.1007/s00521-014-1625-x