Abstract

In this paper, a graph convolution network prediction model based on the lioness optimization algorithm (LsOA-GCN) is proposed to predict the cumulative number of confirmed COVID-19 cases in 17 regions of Hubei Province from March 23 to March 29, 2020, according to the transmission characteristics of COVID-19. On the one hand, Spearman correlation analysis with delay days and LsOA are used to capture the dynamic changes of feature information to obtain the temporal features. On the other hand, the graph convolutional network is used to capture the topological structure of the city network, so as to obtain spatial information and finally realize the prediction task. Then, we evaluate this model through performance evaluation indicators and statistical test methods and compare the results of LsOA-GCN with 10 representative prediction methods in the current epidemic prediction study. The experimental results show that the LsOA-GCN prediction model is significantly better than other prediction methods in all indicators and can successfully capture spatio-temporal information from feature data, thereby achieving accurate prediction of epidemic trends in different regions of Hubei Province.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Corona Virus Disease 2019 (COVID-19), which emerged in late 2019 and broke out in early 2020, is one of the most severe public health emergencies since the founding of the People's Republic of China. As of 18:00 on November 30, 2021, China has reported a total of 127, 859 confirmed cases and a total of 5697 deaths. Currently, there are still 2913 confirmed cases; a total of 262, 505, 360 confirmed cases and a total of 5, 227, 122 deaths have been reported globally, and there are presently 20, 202, 074 confirmed cases. Figures 1 and 2 show the distribution of cumulative confirmed cases in China and globally, respectively.Footnote 1

Due to its long duration and wide spread, infectious diseases not only seriously threaten the life safety of people, but also affect people's mental health for a long time. Improper handling can easily cause social chaos. Especially in recent years, sudden epidemic infectious diseases have continued to appear in the world. For example, SARS in 2002 quickly spread to 32 countries and regions in a short time. As of August 16, 2003, the global death toll reached 919, with a case fatality rate of nearly 11%. In 2009, the H1N1 broke out in the United States on a large scale and spread to 214 countries and regions, causing nearly 200, 000 deaths. In addition, there are Ebola virus, HIV, dengue, and so on.

At present, the number of confirmed cases of COVID-19 is still increasing. How to monitor and predict sudden epidemics in a timely and effective manner, and eliminate the crisis in the bud, plays an essential role in the prevention and control of the epidemic. Given these reasons, domestic and foreign scholars have carried out a series of studies on the prediction of infectious diseases. To sum up, these methods can be divided into the following categories:

(1) Time series prediction model. The traditional time series prediction model is an effective tool for disease prediction, which mainly realizes the analysis of the spreading law and development trend of the epidemic by fitting epidemic data. Zhang et al. (2016) decomposed the monthly time series data of 11 class C notifiable diseases in China from 2009 to 2014 into a seasonal index representing the seasonal law of infectious diseases and a linear regression model describing the long-term trend of infectious diseases. And based on this, an autoregressive integrated moving average (ARIMA) model was established to predict the incidence of these infectious diseases. Wang et al. (2019) selected the monthly incidence data of brucellosis from 2004 to 2013 in Jinzhou city, Liaoning Province, China. They analyzed the epidemiological characteristics and prevalence situation by using the autoregressive integrated moving average (ARIMA) model and constructed a prediction model to predict the incidence of brucellosis from 2005 to 2014. Qi et al. (2020) collected the monthly incidence data of Hemorrhagic Fever with Renal Syndrome (HFRS) in Shandong Province from 2009 to 2018. And they used the seasonal autoregressive fractionally integrated moving average (SARFIMA) model to predict the incidence of HFRS. The model evaluation results showed that the SARFIMA model could better fit the dynamic changes of the monthly incidence of HFRS in Shandong Province, and the excellent degree of fitting and predictive ability. Singh et al. (2020) used an autoregressive integrated moving average (ARIMA) model with covariates to fit daily confirmed COVID-19 cases in Malaysia from 22 January to 31 March 2020. Then, they verified this prediction model with confirmed cases from 1 to 17 April 2020. Finally, the daily confirmed cases in Malaysia from 18 April to 1 May 2020 were predicted with satisfaction. Aslan et al. (2022) developed a SEIQR-type deterministic model, which mainly used a system of ordinary differential equations (ODEs) to analyze the dynamics of the epidemic in Hubei Province, and achieved accurate prediction of the local epidemic situation through social distancing rate and confirmed case rate.

(2) Infectious diseases model. In the field of epidemic transmission research, scholars have put forward many models of infectious diseases that can be used to predict the number of infected people, among which the representative ones are: SIR, SIRS, SEIR models, etc. The main idea of this type of model is to divide the population within the epidemic range of infectious diseases into susceptible, exposed, infectious, recovered, etc., and establish and solve differential equations based on the transformation relationship between different groups. Guo et al. (2016) analyzed the transmission data of the Ebola virus by introducing the Jacobian matrix to solve the differential equation of the SIR model and constructed a comprehensive compartment model to fit and predict the transmission trend of the Ebola epidemic. Kuperman et al. (2001) used the SIRS model to study the transmission rules of infectious diseases on the small world network and found that when network nodes change from a recovered state to a susceptible state, infectious diseases will exist in the network for a long time, but the infection rate is low. Ding et al. (2004) used the SIJR model to analyze SARS epidemic data in Hong Kong, Singapore, and Canada and obtained essential parameters such as the epidemic transmission rate, and then realized the prediction of SARS transmission by adjusting the parameters in this model. Merler et al. (2011) and Wu et al. (2020) both established the SEIR model and included “exposed” into the simulated population to simulate the development trend of H1N1 in Europe and the COVID-19 in major cities of China, respectively. In addition, there are still many scholars who extend the infectious disease model by adding or adjusting the internal structure (Wang et al. 2022a, b; Yi et al. 2021; Liao et al. 2020; Cao et al. 2020; Bi et al. 2021). Liu et al. (2020) proposed a susceptible-asymptomatic-infected-removed (SAIR) model based on the social network to describe the spread of COVID-19 and analyzed the real epidemic data in Wuhan and finally selected an appropriate method to control the spread of COVID-19. In the classical SIR model, the transmission speed is a constant. However, in reality, the transmission speed will change through various interventions such as personal protection, community lockdowns, and urban control. Therefore, Jia et al. (2020) added a transmission modifier to the SIR model, established a dynamic extended SIR model (eSIR) for infectious diseases, which can dynamically change the transmission speed and predict the epidemic trend of COVID-19 in Italy. In view of the fact that the SEIR model does not consider the influence caused by random factors such as population migration and flow between regions, Yang et al. (2020) introduced input/output flows on the basis of the SEIR model, making the modified SEIR (M-SEIR) model is more suitable for the actual population change when it is used to predict the urban epidemic. Wang et al. (2022a, b) introduced the complex network model and the intelligent agent on the basis of M-SEIR model, and proposed a city-level structured prediction and evaluation (CSPE) model for COVID-19 epidemic. The model has effectively deduced the epidemic situation in Hubei Province. For the COVID-19 pandemic, Manuel et al. (2020) also proposed a susceptible-exposed-symptomatic-infectious-asymptomatic infectious-recovered (SEIAR) model considering population and disease mortality based on the SEIR model, which aims to reduce the number of infectors by assessing the isolation situation.

(3) Machine learning prediction method. In recent years, many machine learning algorithms have also begun to be used for the prediction of infectious diseases. Wang et al. (2017) used the annual county-level human brucellosis (HB) cases and data of 37 environmental variables in Inner Mongolia, China, to build an artificial neural network (ANN) model, which was used to determine essential predictors of human brucellosis and predict the annual county-level cases of human brucellosis. Chen et al. (2018) introduced different predictors for different diseases, countries, and prediction windows, established different LASSO prediction models, and analyzed the importance of different predictors under various conditions by evaluating the prediction performance of these models. Research results showed that different predictors have different effects in different situations, and short-term forecasts usually perform better than long-term forecasts. Hasan (2020) proposed a hybrid model combining ensemble empirical mode decomposition (EEMD) and artificial neural network (ANN). This model first used EEMD to decompose the epidemic time series data and then sent these decomposed IMF components into the neural network model for training. Eventually, the trained neural network was used to predict the trend of COVID-19. Fang et al. (2020) established a random forest model based on meteorological factors to predict the incidence of infectious diarrhea in Jiangsu Province, China. The prediction model better fitted the dynamic characteristics of the disease epidemic. Gumaei et al. (2021) used gradient boosting regression (GBR) to build a training model to predict the total number of daily confirmed COVID-19 cases around the world. Xie et al. (2021) proposed a nonlinear time-varying transmission rate model based on support vector regression in order to overcome the shortcomings of a single model for insufficient extraction of effective information and low prediction accuracy, and applied it to long-term prediction of COVID-19 epidemic in Hubei Province. Smith and Alvarez (2021) took COVID-19 patients in Wuhan, Hubei, China, as the research object and applied a series of machine learning models to analyze the factors affecting the death of COVID-19 patients. They finally obtained the conclusion that the Shapley value is helpful to determine the death factors of COVID-19.

(4) Deep learning prediction method. In recent years, deep learning methods have performed well in fields such as data mining, analysis, and prediction. They have provided new technologies and techniques for studying epidemic transmission. Chae et al. (2018) used search query data, social media big data, and weather data to sort out the factors that affect the occurrence of infectious diseases to build OLS, ARIMA, DNN, and LSTM prediction models and compare their prediction performance. The study found that the prediction model using deep learning is more suitable for predicting the trend of infectious diseases. Wang et al. (2020) used the LSTM network and rolling update mechanism to continuously input new prediction results into the next iteration model for training, and finally made a long-term prediction of epidemic trends in Russia, Peru, and Iran. In this article, the authors also introduced the Diffusion Index (DI) to evaluate the effectiveness of preventive measures such as social isolation and lockdown. Hao et al. (2020) used Elman neural network, LSTM and SVM to predict the cumulative confirmed cases, deaths, and cured cases in Wuhan, Hubei Province, and used SVM with fuzzy granulation to predict the confirmed new cases, new deaths and new cured cases. Finally, they analyzed the applicability of different methods. Rauf et al. (2021) proposed to use long short-term memory (LSTM) network, Recurrent Neural Network (RNN), and Gate Recurrent Unit (GRU), while considering time variables and data nonlinearity, to evaluate each model feature to predict the number of confirmed COVID-19 cases in the Asia–Pacific region in the next 10 days. Chen et al. (2021) developed a data-driven workflow to extract, process, and develop deep learning methods, and built multivariate long and short-term memory (LSTM) models with different architectures to predict the COVID-19 epidemic, thereby supplementing existing modeling methods.

In addition, when predicting the incidence trend of specific infectious diseases, not all features should be included in the prediction model. Sometimes, you can get better prediction results by deleting some unimportant features. Meta-heuristic algorithm is a common feature selection method, which has been widely concerned by scholars (Jia et al. 2019; Turabieh et al. 2021; Samieiyan et al. 2022). Depending on the source of inspiration, meta-heuristic algorithms can be divided into the four categories: (1) biological evolutionary algorithms that simulate the process and mechanism of biological evolution in nature. Among these algorithms, the most widely used is genetic algorithm (GA)(Holland 1992) with the basic operations of selection, crossover and mutation; in addition, there are differential evolution (DE), evolutionary strategy (ES), etc.; (2) algorithms inspired by physical phenomena and laws in nature, such as multiverse optimizer (MVO) inspired by three concepts in cosmology: white hole, black hole and wormhole, simulating the transfer process of matter in the universe from white hole to black hole through the wormhole (Mirjalili et al. 2015); gravitational search algorithm (GSA) based on Newton's law of universal gravitation (Rashedi et al. 2009); lightning search algorithm (LSA) based on the lightning mechanism (Shareef et al. 2015), etc.; (3) algorithms that simulate various human behaviors in society, such as teaching–learning-based optimization (TLBO) that simulates the two stages of “teaching” and “learning” in the learning process to improve the ability of each individual(Rao et al. 2011); heap-based optimizer (HBO) that uses the heap data structure to map corporate rank hierarchy to simulate three human behaviors(Askari et al. 2020), etc.; (4) swarm intelligence algorithms that simulate the social behavior of animal groups in nature, such as particle swarm optimization (PSO) designed to simulate the predatory behavior of birds(Eberhart and Kennedy 1995); whale optimization algorithm (WOA) proposed by imitating the hunting behavior of humpback whales(Mirjalili and Lewis 2016); chimp optimization algorithm (ChOA) for modeling hunting behavior of chimps according to differences in intelligence and sexual motivation(Khishe and Mosavi 2020); In addition, there are recently proposed marine predators algorithm (MPA)(Faramarzi et al. 2020), k-means clustering optimizer (KO)(Minh et al. 2022), etc. The principles of these algorithms are different, and their abilities to solve different types of problems are also different. As the “no free lunch (NFL)” theorem states, no algorithm can solve all types of optimization problems with equal simplicity and efficiency (Wolpert and Macready 1997). Therefore, we think it is necessary to develop new optimization algorithms to better achieve the purpose of feature selection.

In conclusion, many scholars have made great efforts in infectious disease prediction including COVID-19 prediction, and the results have been fruitful. However, by analyzing the current situation of infectious disease prediction research, we found that the existing models still have the following problems:

-

(1) Some models are constructed based on the historical data of a single region, ignoring the spatial correlation of infectious disease transmission, which affects the improvement of the model prediction accuracy;

-

(2) Some prediction models only use directly related data, such as the number of confirmed cases and the number of isolated people, which makes the prediction results unable to timely reflect the influence of other factors (such as social environment and government response policies) in the process of infectious disease transmission, and finally makes the prediction accuracy difficult to be satisfied;

-

(3) Some models do not consider the time lag effect of eclipse period on transmission. Virus infection can have a certain eclipse period (the time from contact with the source of transmission to the onset of symptoms), and even asymptomatic patients can appear. Ignoring the time lag will also affect the prediction performance;

-

(4) Finally, when there are many feature data input into the model, there may be some irrelevant features. If these features are forcibly incorporated into the prediction model without selection, it will not only increase the computation, but also affect the prediction performance of the prediction model.

In order to solve the above problems, this paper proposed a graph convolution network prediction model based on the lioness optimization algorithm (LsOA-GCN) according to the transmission characteristics of the COVID-19. The main contributions are as follows:

-

(1) A novel population-based lioness optimization algorithm was proposed to filter the feature data with time delay (Baidu index), and based on this, a feature matrix for epidemic prediction was constructed;

-

(2) A COVID-19 prediction model, LsOA-GCN, which comprehensively uses the lioness optimization algorithm and graph convolutional network (GCN) (Kipf and Welling 2017), was proposed;

-

(3) Experiments were conducted on epidemic data from 17 regions in Hubei Province, China, and compared with 10 prediction methods, which verified the superiority of the LsOA-GCN prediction model.

The rest of this paper is composed as follows. The second part introduces the LSOA-GCN prediction model in detail, including problem definition, adjacency matrix construction, data source and model construction, etc. The third part compares and analyzes the performance of different prediction methods through model evaluation indicators and statistical test methods. The last part summarizes the proposed prediction model.

2 LsOA-GCN model

2.1 Problem definition

(1) City network graph:\(G\). In this paper, \(G = (V, E)\) is used to describe the topological structure of Hubei Province's city network. We regard each region in Hubei Province as a node in the network graph, where, \(V = \{ v_{1} , v_{2} , ..., v_{n} \}\) represents the set of city nodes in Hubei Province, \(n\) is the total number of nodes, and \(E\) represents the set of edges between cities.

(2) Adjacency matrix: \(A^{n \times n}\). The spatial information between cities is mapped into the city network graph \(G\), and the degree of correlation between city nodes is represented by edges. This paper intends to use the migration relationship graph to reflect the degree of mutual influence between various regions in Hubei Province, which is represented by \(G = (V, A)\), where \(A \in R^{n \times n}\) is the adjacency matrix.

(3) Feature matrix: \(X^{n \times t \times d}\) We use the Baidu Search Index value of keywords related to the epidemic as the attribute features of the nodes in the network, which was represented by \(X \in R^{n \times t \times d}\), where \(t\) is the number of days, and \(d\) is the dimension of the node attribute features. For example, \(X_{t} \in R^{n \times i}\) represents the Baidu Search Index value of \(i\) keywords in \(n\) regions on the \(t\)-th day.

Therefore, the epidemic prediction problem solved in this paper can be expressed as: under the premise of a given city network topology graph \(G\) and the feature matrix \(X\), we planned to predict the cumulative number of confirmed cases in each region of Hubei province in the future through graph convolution network. The formula is as follows:

where \(s\) is the length of the historical time series, \(T\) is the length of the future time series that needs to be predicted, \(Y_{t + 1}\) represents the cumulative number of confirmed cases in each region of Hubei Province on the (\(t\) + 1)-th day, and \(X_{t}\) represents the feature vector of each region on day \(t\).

2.2 Construction of adjacency matrix

Since the outbreak of the COVID-19 has the characteristic of “person-to-person transmission”, this paper assumes that the large-scale population movement during the epidemic will have a certain impact on the spread of the epidemic. And COVID-19 has a certain eclipse period. During the eclipse period, some patients have only mild respiratory symptoms, such as fever, fatigue, dry cough, etc., but they are also infectious, and there are even asymptomatic patients. Based on this, this paper collected the population migration index values of 17 regions in Hubei province from January 10, 2020 to March 15, 2020 (a total of 66 days) and performed a time-delayed Spearman correlation analysis with the cumulative confirmed cases (from January 24, 2020 to March 29, 2020) in each region to construct an adjacency matrix.

where the Spearman correlation coefficient \(\rho = \frac{{\mathop \sum \limits_{i, j} \left( {x_{i} - \overline{x}} \right)\left( {y_{ji} - \overline{y}} \right)}}{{\sqrt {\mathop \sum \limits_{i} \left( {x_{i} - \overline{x}} \right)^{2} \mathop \sum \limits_{i, j} \left( {y_{ji} - \overline{y}} \right)^{2} } }}\), \(x_{i}\) represents the cumulative number of confirmed cases in region \(i\), and \(y_{ji}\) represents the population migration index from region \(j\) to region \(i\).

2.3 Data source

The epidemic data in this paper come from the daily epidemic notification provided by the National Health Commission (http://www.nhc.gov.cn/xcs/yqtb/list_gzbd.shtml) and the COVID-19 epidemic situation in Hubei Province provided by the Hubei Health Committee (https://wjw.hubei.gov.cn/) to collect the cumulative number of confirmed COVID-19 cases in 17 regions of Hubei Province from January 24, 2020, to March 29, 2020.

The migration data used to construct the adjacency matrix in this paper come from Baidu Maps Huiyan (http://qianxi.baidu.com/) developed by Baidu, and we collected and processed the migration scale index of 17 regions in Hubei Province from January 10, 2020 to March 15, 2020. The keyword data used to construct the feature matrix is also derived from the Baidu Search Index (https://index.baidu.com/v2/index.html#/) provided by Baidu. Set the location parameter as “cities in Hubei Province”, the time parameter as “2020-01-10 ~ 2020-03-15”, and the source parameter as “PC+ Mobile”.

2.4 Model construction

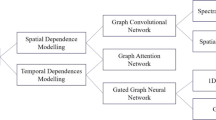

2.4.1 Overall overview

In this section, we give a comprehensive overview of how to use the LsOA-GCN model for epidemic prediction. The LsOA-GCN model is mainly composed of two parts. One is the construction of the feature matrix for capturing temporal information, the other is the graph convolution operation for capturing spatial information. First, the keyword data was sorted out through Spearman correlation analysis with a time lag, and the initial feature matrix was constructed to capture the time series information between the current number of confirmed cases and the Baidu Search Index at different periods in the past. Then LsOA was used to further filter the feature matrix to improve the accuracy of the model and avoid unnecessary feature interference. Finally, the feature matrix was used as input to obtain the spatial features of the epidemic-related data through the graph convolution network, to get the prediction results.

2.4.2 Capture temporal information

In an information society, with the continuous innovation and development of PC and mobile terminals, the Internet has become the primary and critical way for people to collect information from the outside world. Netizens can use various information search platforms to gather the information they want to know and obtain real-time data on the network. According to the 47th “Statistical Reports on Internet Development in China” released by the China Internet Network Information Center (CNNIC), as of December 2020, there have been 989 million Chinese netizens, an increase of 85.4 million compared with March 2020. The Internet has become an essential channel for the public to obtain information. Among the many search platforms, Baidu is the most popular information search platform, with nearly 90% of users in China, accounting for a significant market share. Among them, Baidu Index is a data sharing platform based on massive netizens' behavior data in Baidu. Through the Baidu Index, we can study keyword search trends, gain insights into the interests and needs of netizens, monitor public opinion trends, and locate audience features. In recent years, Baidu Search Index has been used to predict some epidemic diseases, such as dengue (Li et al. 2017), HIV/AIDS (Huang et al. 2020) and Brucellosis (Zhao et al. 2020a). Therefore, it is scientific and feasible to use Baidu Search Index as a feature to predict the COVID-19.

During the epidemic, there is a complicated time series correlation between the current number of confirmed cases and the Baidu Search Index at different periods in the past. Therefore, obtaining the temporal features is also a key issue in epidemic prediction. The first step to obtain time information is the selection of keywords. The selection methods include empirical word selection, technical word selection (Ginsberg et al. 2009), and range word selection (Alessa and Faezipour 2018). The keyword selection used to construct the feature matrix this time mainly adopted the range word selection method, supplemented by the empirical word selection method. Firstly, the selection range of keywords should be roughly determined according to the features of the epidemic, transmission routes, protective measures, etc. Then, relevant words can be recommended through the demand graph function in Baidu Index, while reducing the workload, as far as possible to ensure the completeness of the epidemic-related vocabulary. Based on these, we set up a keyword database from the four dimensions of prevention, symptoms, common words and other related aspects of COVID-19, and initially selected 40 specific keywords, namely “vaccine”, “hand washing”, “mask”, “disinfection”, “nucleic acid”, “flu”, “diarrhea”, “dry cough”, “pneumonia”, “cough”, “fever”, “dyspnea”, “high temperature”, “stuffy nose”, “asymptomatic infection”, “close contact”, “suspected case”, “aerosol transmission”, “infection”, “novel coronavirus”, “NCP”, “SARS-CoV-2”, “COVID-19”, “novel coronary pneumonia”, “novel coronavirus pneumonia”, “virus pneumonia”, “coronavirus”, “novel”, “epidemic”, “Center for Disease Control and Prevention”, “Red Cross Society”, “Spring Festival”, “Health Commission”, “SARS”, “Zhong Nanshan”, “children”, “medical”, “government”, “Wuhan” and “lockdown of the city”.

The specific construction steps of the feature matrix are as follows:

(1) Establish a keyword database from the 4 dimensions mentioned above, and initially screen out 40 keywords;

(2) Time-delayed Spearman correlation analysis is performed between Baidu Index (search index, information index) of 40 keywords and the cumulative number of COVID-19 cases;

(3) Further screen the keyword database and select keyword data with a correlation coefficient greater than 0.5;

(4) Collect keyword data according to the delay days corresponding to the maximum correlation coefficient of each keyword in the thesaurus, and construct a feature matrix based on this;

(5) When dealing with practical problems, not all features must be included in the prediction model, and sometimes deleting some unimportant features may improve the performance of the model. Feature selection is a common data dimensionality reduction method, which has been widely concerned by scholars. They regard feature selection as a 0–1 problem and usually choose the meta-heuristic algorithm to solve (Guo et al. 2022; Jia et al. 2022; Sha et al. 2020). Inspired by this, we used the swarm intelligence optimization algorithm to further filter the feature matrix. Its main purpose is to delete the feature data that is not related to the problem in the original feature matrix, reduce the feature dimension and reduce the running time. This point will be explained in detail in the next part.

2.4.3 Filter feature matrix

In recent years, various intelligent optimization algorithms have emerged one after another, such as the classic Particle Swarm Optimization (Eberhart and Kennedy 1995), Whale Optimization Algorithm (Mirjalili and Lewis 2016), and the newly developed Marine Predator Algorithm (Faramarzi et al. 2020), etc. However, when dealing with practical problems, these algorithms will converge prematurely and easily fall into the risk of local optimum, making it difficult to solve all practical application problems (Faramarzi et al. 2020).

Therefore, we proposed a population-based meta-heuristic optimization algorithm, Lioness Optimization Algorithm (LsOA), to further filter the feature matrix to avoid the interference of unnecessary features. The lion-inspired optimization algorithm appeared in 2012: The Lion's Algorithm (2012) (Rajakumar 2012), which mainly simulates the three behaviors of lion mating, territory takeover, and territory defense, to find optimal solution; The second is Lion pride optimizer (2022) (Wang et al. 2012), which is developed around the mating behavior of lions, and on this basis, three evolutionary strategies are proposed. Survival of the fittest is its main idea; The third chapter is the Lion Optimization Algorithm (2016) (Yazdani and Jolai 2016), which not only draws on the mating and competition behaviors of the lions, but also introduces hunting, migration, patrol and other mechanisms. It can be seen that although the evolution process of the above algorithms incorporate the natural behaviors of lions hunting and mating, they fail to consider some of the core optimization mechanisms in the lion hunting process, resulting in their shortcomings such as slow convergence speed and difficulty in finding global extreme value. Aiming at the shortcomings of the above algorithms, this paper proposed LsOA. Because the optimization process of the algorithm is closer to the hunting behavior of the lions, the lioness is the main performer of the lion hunting. Inspired by this, this algorithm mainly focuses on the hunting behavior of lionesses, and comprehensively considers the two mechanisms of team hunting and elite hunting. During the period, Levy flight and Brownian motion are also introduced. While retaining the strong global exploration ability of the original lion pride algorithms, the local mining ability of the algorithm is improved, which can solve various mathematical optimization problems more effectively, which is completely different from the previous algorithms.

In LsOA, the prey is the global optimal solution, and the current optimal solution is considered to be lioness A or the top lioness. The lions mentioned are all candidate solutions, and they are all lionesses by default. LsOA is mainly composed of the following six mechanisms.

(1)Track prey.

In the hunting process, the lions tend to spread out into a fan shape to surround the prey in the center, and the group of lions in the “center” of the fan shape will consciously adjust their positions according to the positions of other lions and prey. The mathematical model of this behavior is shown in Eqs. (3) and (4), where Eq. (3) defines the step length and direction of the current lion group to the target position, and Eq. (4) defines the position of the lion group after the update.

where \(t\) is the current iteration number, \(\vec{X}(t)\) is the position vector of the lioness, \(\vec{D}\) is the distance between the current lioness and the prey, represents the product, \(\overrightarrow {{{\text{Prey}}}}\) is the position vector of the prey, \(\vec{A}\) and \(\vec{C}\) are coefficient vectors, and their calculation formulas are as follows:

where \(\vec{a} = 2 - \frac{{2 \cdot {\text{Iter}}}}{{{\text{Max}}\_{\text{iter}}}}\)\(\in [0, 2]\), to simulate approaching the prey, as the iteration progresses, the value of \(\vec{a}\) decreases linearly from 2 to 0, and \(\vec{A} \in \left[ { - {{\vec{a}, \vec{a}}}} \right]\). If \(|\vec{A}| < 1\), it will attack the prey and focus on exploitation at this time; if \(|\vec{A}| > 1\), it will force to stay away from the prey and focus on exploration at this time. \(\vec{C} \in \left[ {0, 2} \right]\) is a random value, which represents the weight value of the influence of the position of the current optimal solution on the updated position. \( \vec{r}_{1}, \vec{r}_{2}\) are both random vectors between [0, 1].

(2) Capture prey.

When capturing prey, we believe that the location of the target prey should be near the “central circle” of the hunting team. And this center circle is composed of the four best candidate solutions in the population (lioness A, lioness B, lioness C, and lioness D) and the average of their four. The mathematical model is as follows:

where the calculation of \(A_{i}\), \(C_{i}\)(i=1, 2, 3, 4) are shown in Eq. (5) and Eq. (6), \(\vec{X}_{A} , \vec{X}_{B}, \vec{X}_{C}, \vec{X}_{D}\) are the positions of lioness A, lioness B, lioness C and lioness D, respectively, \(\vec{X}_{ave}\) is the average of \(\vec{X}_{a}, \vec{X}_{b}, \vec{X}_{c}, \vec{X}_{d}\), and \(\vec{X}\) is the position of the current prey.

(3) “Central Circle” and Elite Matrix.

-

“Central Circle”:

According to Eqs. (8) and (9), the positions of the lions at the center of the hunting team can be determined. It is updated based on the positions of the 4 lionesses closest to the prey in the current population, which are \( \vec{X}_{a} , \vec{X}_{b}, \vec{X}_{c}, \vec{X}_{d}\), respectively, and the last candidate is the average \(\vec{X}_{ave}\) of these four candidates. These five positions constitute the hunting team's “ Center circle”:

Figure 3 shows the construction process of the “central circle”. Since the position of the target prey in the search space is not known a priori, this article assumes that the position of the target prey may be located near any of these five positions, and the probability of the five candidates being selected is the same, which is 0.2.

-

Elite Matrix:

The elite matrix is designed based on the elite hunting mechanism. At this time, the population evolves into some agents of the elite, through which agents are used to continuously test the direction and position of the elite, and finally achieve the goal of capturing the prey.

In designing the elite hunting mechanism, this paper did not use a single lioness to carry out optimization iterations, but took the elite hunting mechanism as inspiration, combined with the swarm intelligence mechanism to design: that is, according to the results of the population iteration, the position of the elite is determined on the basis of the lioness of a specific size, and then the optimization iteration is carried out under the guidance of the group search mechanism.

Figure 4 shows the construction process of the elite matrix. In order to avoid the risk of falling into a local optimum caused by relying only on a single elite position, here we draw on the principle of triangular stability, and replace the common method for determining the position of the elite from the common last-round best to a joint decision by the top three agent positions (lioness A, lioness B, and lioness C) in the last-round fitness value. The calculation formula is as follows:

where \( \vec{X}_{A} , \vec{X}_{B}, \vec{X}_{C}\) are the position vectors of lioness A, lioness B and lioness C, respectively. After the position of the top lioness is determined, copy it \(n\) times to build the elite matrix:

where \({ }\overrightarrow {{X^{I} }}\) is the position vector of the top lioness in the lion group.

(4) The march strategy.

With the increasing number of optimization iterations, the risk of the lions falling into local optimization is increasing. In order to enable the lions to quickly explore a new area, this paper designed the march strategy. The implication is that when \(r2 \le M\), unifies all dimensions of the predator into a unique value (march). Otherwise, each dimension will randomly select a value in the \(E\_Team\) to be updated (random). The formulas are as follows:

where \(randi()\) is used to generate pseudo-random integers; size() is used to return the size of the vector. M indicates the degree of influence of the march strategy on the process. The prey here is a matrix with the same dimension as the elite matrix.

(5) Phase-focused mechanism.

In order to avoid premature convergence of LsOA and in reference to the behavioral process of lion hunting, the whole iteration process was divided into three stages in this paper, namely the early iteration, the middle iteration, and the late iteration (Faramarzi et al. 2020). And different search mechanisms were used in each stage.

① the early iteration.

While Iter < \(\frac{{1}}{{3}}\) Max_iter

where Iter is the current iteration number and Max_iter is the maximum iteration number. \(\overrightarrow {{{\text{RB}}}}\) is a vector of random numbers representing Brownian motion, the symbol \(\otimes\) means multiplication term by term. \(P\)= 0.5, \(R\) is a random vector in [0, 1].

② the middle iteration.

While \(\frac{{1}}{{3}}\) Max_iter < Iter < \(\frac{2}{3}\) Max_iter.

For prey:

where \(\overrightarrow {{{\text{RL}}}}\) is the random number vector representing Levy's flight and \(\overrightarrow {{{\text{RL}}}} \otimes \overrightarrow {{{\text{Prey}}}}_{i}\) simulates the behavior of prey. For lions, this study assumed:

where \({\text{CF}} = \left( {1 - \frac{{\text{ Iter }}}{{{\text{Max}}_{ - } {\text{Iter}}}}} \right)^{{\left( {2\frac{{\text{ Iter }}}{{{\text{Max}}_{ - } {\text{Iter}}}}} \right)}}, \) it is regarded as an adaptive parameter that controls the step length of the lion's movement. \(\overrightarrow {RB} \otimes \overrightarrow {{{\text{Elite}}_{ - } {\text{lioness}}}}_{i}\) simulates the behavior of a predator (lioness).

③ the late iteration.

While Iter > \(\frac{2}{{3}}\) Max_iter

where \(\overrightarrow {RL} \otimes \overrightarrow {{{\text{Elite}}_{ - } {\text{lioness}}}}_{i}\) simulates the behavior of a predator (lioness).

(6) The impact of FADs.

FADs (Filmalter et al. 2011) is called “fish aggregating devices”, which is a kind of raft-like structure, usually made of some waste products. This kind of device is easy to attract and gather a large number of fish, making it difficult for them to escape. There are similar facilities (regions) on the African savannah, such as wetlands or swamps. When lions hunt here, they often get caught in them and cannot get out.

Given this point, this paper introduced FADs into the algorithm. FADs is considered to be local optima, and the effect is that lions are trapped in these areas. The FADs effect is expressed mathematically as:

where \(FADs = 0.2\), which means the probability that FADs will affect the optimization process.\(r\) is a uniform random number in [0, 1]. \(r1\), \(r2\) represents the random index of the prey matrix. \(\vec{X}_{\max }\), \(\vec{X}_{\min }\) are the maximum and minimum values of each dimension, respectively. \(Ma\) is a binary vector containing only 0 and 1. First, generate a random vector in [0, 1], if it is less than 0.2, it will be regarded as 0; otherwise, it will be regarded as 1. The pseudo-code of LsOA is as follows:

Note: The value of each dimension is within the range of [0, 1]. If the value is greater than 0.5, it is regarded as 1; otherwise, it is regarded as 0. If the value in a certain dimension is 1, it means that the corresponding feature vector in this dimension is selected, otherwise, it will not be selected. The calculation formula of the fitness value is \(fitness = GCN\left( {G;X} \right)\), \(G\) is the city network graph, \(X\) is the feature matrix, and the output result is the value of Root Mean Squared Error (RMSE).

2.4.4 Capture spatial information

At the beginning of the outbreak, it was the Spring Festival travel season. Most people are taking chances, and their awareness of epidemic prevention is weak. The large-scale population movement has caused the epidemic to spread quickly across the country in a short time. Since the outbreak of the COVID-19 has the feature of “person-to-person transmission”, when forecasting the number of confirmed COVID-19 cases in a certain region, it is necessary to consider not only the number of confirmed cases in the region in the past period of time, but also the inflow of confirmed cases from the surrounding regions to the region. How to characterize the interrelationships between complex spaces has become another vital issue in epidemic prediction.

The conventional convolutional neural network (convolutional neural network, CNN) can get local spatial information, but it can only apply to Euclidean space, such as images, regular grids, and so on. The principle is to get the spatial relationship features of the image through the convolution kernel. The typical CNN two-dimensional convolution is shown in Fig. 5. However, the city network is not a two-dimensional grid, but in the form of a graph, which means that CNN cannot reflect the complex topology of the city network and cannot precisely obtain spatial information. In order to be able to extend convolution to topological graphs of non-European data structures, GCN is born. It can extract features from many irregular data structures in real life, typical of which is graph structure, or topological structure.

Give a set of graph data, which contains N nodes, each node has its own features, we used the nodes with D-dimensional features to construct a feature matrix \(X \in R^{N \times D}\), and then used the mutual relationship between the nodes to construct the adjacency matrix \(A \in R^{N \times N}\). Finally, input the feature matrix \(X\) and the adjacency matrix \(A\) into the network model. GCN is also a neural network layer, and its layer-to-layer propagation mode is as follows:

where \(X^{(l)}\) represents the feature matrix of the \(l\)-th layer, \(W^{(l)}\) represents the learnable parameters of the \(l\)-th layer. \(\tilde{A} = A + I_{N}\), \(A\) is the adjacency matrix, which is used to reflect the network topology in the graph data. \(I_{N}\) is the identity matrix, which is used to extract the features of its own node. \(\tilde{D} = \mathop \sum \limits_{{}} \tilde{A}_{ij}\) is the degree matrix, and the degree matrix is used to measure the importance of neighbor nodes. \(\sigma\) is a nonlinear activation function.

It can be seen from the above formula that the GCN model is equivalent to a feature extractor, which acts on the nodes of the graph, and gets together its first-order neighbors according to different weights, thereby capturing the spatial features between nodes. The specific operation is shown in Fig. 6. Suppose the orange node is the central node, and the blue nodes are the first-order neighbor node of the central node. According to the network topology, the central node aggregates the features of itself and its first-order neighbor nodes, and finally obtains spatial information from the feature data.

The LsOA-GCN model used in this paper constructed a GCN model by superimposing two convolutional layers. A two-layer GCN model can be expressed as:

where \(X^{\left( l \right)}\) represents the feature matrix of the \(l\)-th layer; \({\text{Relu()}}\) is the activation function; \(\hat{A} = \tilde{D}^{{ - \frac{1}{2}}} \tilde{A}\tilde{D}^{{ - \frac{1}{2}}}\), \(\tilde{A} = A + I_{N}\), \(A\) is the adjacency matrix, and \(\tilde{D} = \mathop \sum \limits_{{}} \tilde{A}_{ij}\) is the degree matrix; \(W^{\left( l \right)}\)\(W^{{\left( {l + 1} \right)}}\) represent the learnable parameters of layer \(l\) and layer \(l + 1\), respectively.

2.4.5 Loss function

The loss function is the most basic and critical element of the prediction model in the training process. Its purpose is to minimize the error between the actual value and the predicted value of cumulative confirmed cases. The loss function we defined in the LsOA-GCN model was the mean square loss function. The specific formula is as follows:

where \(Y_{t}\) and \(\hat{Y}_{t}\), respectively, represent the actual and predicted the cumulative number of confirmed cases on day \(t\).

2.4.6 LsOA-GCN model

In order to capture the temporal and spatial information of the feature data simultaneously, we used a graph convolutional network prediction model based on the lioness optimization algorithm (LsOA-GCN). The overall process of LsOA-GCN is shown in Fig. 7. The right part is the flow chart of this prediction model, and the left part is the feature screening process.

In short, the LSOA-GCN model can handle complex temporal dependence and spatial correlation. On the one hand, Spearman correlation analysis with delay days and LsOA are used to capture the dynamic changes of feature information to get the temporal features. On the other hand, we obtain the structure of the city network by graph convolutional network, to get spatial information and finally realize the prediction task.

3 Experiments

3.1 Data set

To verify the performance of the LsOA-GCN model, we predicted the number of confirmed cases of the epidemic for 66 days in 17 regions of Hubei Province. Due to proper epidemic control measures, the number of newly confirmed cases in Hubei Province was almost zero in the later period. Therefore, the prediction target of the confirmed epidemic data set in Hubei Province was set as the cumulative number of daily confirmed cases.

In the experiment, we selected 17 regions in Hubei Province as the primary research areas, namely Wuhan, Ezhou, Enshi, Huanggang, Huangshi, Jingmen, Jingzhou, Qianjiang, Shennongjia, Shiyan, Suizhou, Tianmen, Xiantao, Xianning, Xiangyang, Xiaogan and Yichang. The experimental data was mainly composed of three parts: The first was the migration data from January 10, 2020 to March 15, 2020. The 17*17 adjacency matrix was constructed mainly based on the correlation between the migration scale index and the cumulative number of confirmed cases; The second was the keyword data from January 10, 2020 to March 15, 2020 (the date range varies according to the number of delayed days), which was used to construct a 17*66*44 feature matrix; The third was the epidemic data from January 24, 2020, to March 29, 2020. The daily cumulative number of confirmed cases in each region of Hubei Province was used as the final target value to form a 17*66 target value matrix. During the period, all experimental data used were normalized, and according to the ratio of 9:1, the data set was divided into the training set and the test set. The length of the historical time series was set to 1, and finally the cumulative number of confirmed cases in the next day was predicted. The specific division is shown in Fig. 8.

3.2 Evaluation indicator

At present, there are many indicators used to evaluate regression prediction models (Zhao et al. 2020b). RMSE, mean absolute error (MAE), mean absolute percentage error (MAPE), accuracy (Acc), coefficient of determination (R2), and explained variance score (Var) were selected as evaluation indicators in this paper to evaluate the difference between the real value \(Y_{t}\) and the predicted value \(\hat{Y}_{t}\) of the cumulative number of confirmed cases. RMSE, MAE, and MAPE are mainly used to measure the difference between the predicted value and the real value. The smaller the three indicators are, the smaller the difference between the predicted value and the actual value is, and the better the performance of the prediction model is. Accuracy is mainly used to test the prediction accuracy of the model. R2 and Var are mainly used to measure the fitting degree between the predicted value and the actual value. The larger the three indicators are, the better the prediction effect is. The specific calculation formulas of these six indicators are as follows:

(1) RMSE:

(2) MAE:

(3) MAPE:

(4) Acc:

(5) R2:

(6) Var:

where \(T\) is the predicted days; \(\parallel \cdot \parallel_{F}\) is the Frobenius norm that refers to as F-norm, which is a matrix norm that can be used to compare the error between the real matrix and the estimated matrix; \(Var\{ \cdot \}\) is the variance calculation.

3.3 Correlation analysis between Baidu Index and the cumulative number of confirmed cases of COVID-19

Since Baidu Index includes search index and information index, 40 keywords constitute 80 feature vectors. The search index shows the degree of Internet users' attention to keyword search and the continuous changes. It is based on the data of Internet users' search volume on Baidu, with keywords as statistical objects, scientifically analyzes and calculates the weight of the search frequency of each keyword in Baidu. The information index shows the degree of attention and coverage of specific keywords and continuous changes in news information on the Internet. It is based on Baidu's intelligent distribution and recommended content data and is obtained by the weighted summation of the number of netizens' reading, commenting, forwarding, like, dislike and other behaviors. We first preprocessed the data, deleted the search index or information index of keywords with all zeros, and finally contained only 61 feature vectors.

Figure 9 shows the trend comparison between the five randomly selected feature vectors and the cumulative number of confirmed cases, from January 10, 2020, to March 15, 2020. The five feature vectors are coronavirus (S), dry cough (S), fever (S), diarrhea (X), and hand washing (S), where (S) represents the search index of the keyword and (X) describes the information index of the keyword. Since the ordinate used a logarithmic scale with a base of 10 and negative or zero values could not be drawn correctly on the logarithmic graph, keywords with the Baidu Index value of 0 would appear truncated and had no value in the graph.

As can be seen from Fig. 9, the change of Baidu Index values of individual keywords is earlier than the change of cumulative confirmed cases of COVID-19. This showed that, based on certain keywords, we could predict the epidemic trend in the future to a certain extent, and there was an apparent lag between the Baidu Index of keywords and the cumulative number of confirmed cases. Because of this, we assumed that the Baidu Index could be used as a predictive indicator in a period of time before the diagnosis, that is, the feature vector, and then the number of confirmed cases could be predicted.

Based on the above analysis, in this part, we mainly analyzed the correlation between the daily cumulative number of confirmed cases of the epidemic and the Baidu Index. Due to the eclipse period of COVID-19, there are even asymptomatic infections. And as the main feature vector of the prediction model, the Baidu Index needs to be at least a certain number of days in advance to have practical application value. Therefore, the Baidu Index values of keywords 0 to 14 days in advance (epidemic data was relatively lagging by 0 to 14 days) were successively used for correlation analysis with epidemic data.

Taking Wuhan as an example, we performed Spearman correlation analysis on the Baidu Search Index (search index, information index) of keywords with time delay (0-14d) and the cumulative number of confirmed cases in Wuhan. The results are shown in Table 1, and the correlation analysis results of other 16 regions are shown in Appendix Tables 13–28.

Table 1 shows the correlation coefficient value of each keyword with a delay of 0–14 days and the cumulative number of confirmed cases, where the correlation coefficient value corresponding to the optimal delay days has been shown in bold. For example, for novel coronary pneumonia (S), we found that the 14-day delay has the strongest explanatory power. For fever (S), the most explanatory delay time is 3 days. In this experiment, we believed that it is most appropriate to use the keyword data corresponding to the delay days with the strongest explanatory power, and used it for subsequent prediction tasks. In order to highlight the role of feature vectors and improve prediction performance, based on comprehensive consideration of 17 regions in Hubei Province, we only screened out the keyword data with the maximum correlation coefficient greater than 0.5, a total of 44, as shown in Table 2. Then, according to the delay days corresponding to the maximum correlation coefficient, the keyword data was moved, filled, and sorted out to obtain the final feature vectors.

After screening out 44 feature vectors, in order to further determine the importance of correlation analysis with delay days for epidemic prediction, we, respectively, predicted the sorted feature data and unsorted feature data on the GCN model, and the prediction results are shown in Tables 3 and 4, where Table 3is a comparison of the overall prediction results of Hubei Province, and Table 4 is a comparison of the prediction results of 17 regions in Hubei Province. It was worth noting that due to proper epidemic control measures, the number of newly confirmed cases in various regions of Hubei Province in the later period was almost zero, and the daily cumulative number of confirmed cases was almost unchanged. Therefore, according to the calculation formulas of evaluation indicators, when comparing the prediction results of various regions, we only used the four indicators of RMSE, MAE, MAPE, and Acc. It can be clearly seen from Tables 3 and 4 that the sorted feature data are better than the unsorted feature data in predicting effect, and it is better than the unsorted feature data in all indicators. This result proves that the use of correlation analysis with delay days to sort the feature data can improve the performance of the prediction model.

3.4 Performance analysis of lioness optimization algorithm on benchmark functions

After determining the optimal delay days for each feature, we then considered using lioness optimization algorithm to solve the feature selection problem, whose main task is to delete redundant and irrelevant features from the original feature data. In chapter 2.4.3, we introduced the lioness optimization algorithm. In this part, we mainly tested the performance of the proposed algorithm.

In this experiment, we tested the algorithm through 23 commonly used benchmark functions. The benchmark functions mainly include four categories: (1) unimodal functions (F1-F7), which have the unique optimal solution and are mainly used to test the exploitation performance of the algorithm. (2) Multimodal functions (F8-F13), with multiple local optimal solutions, are mainly used to test the exploration ability of the algorithm. (3) Multimodal functions with fixed dimension (F14-F23), which has low and fixed dimensions. The optimization algorithms involved in the comparison included classical particle swarm optimization (PSO), grey wolf optimizer(GWO) (Mirjalili et al. 2014), whale optimization algorithm(WOA), teaching–learning-based optimization(TLBO), and newly developed heap-based optimizer(HBO), marine predators algorithm(MPA) and K-means clustering algorithm(KO). The parameter settings of various algorithms and more details can be found in the corresponding references. To provide reliable comparison results, for all test functions, the algorithms independently ran 30 times in the same environment, and the number of function evaluations was 15, 000. Therefore, under the same other conditions, the differences between algorithms are caused by different performance. Table 5 shows the test results of different algorithms on common benchmark functions.

It can be seen from Table 5 that for unimodal functions (F1-F7), the average values obtained by LsOA on F5 and F6 were far superior to other algorithms. On F7, LsOA also ranked first with a slight advantage. On F1-F4, compared with KO, LsOA performed poorly, but it was also superior to other algorithms by absolute advantages, showing strong competitiveness. For multimodal functions (F8-F23), LsOA reached global optimization on F9, F11, and F15-F23. On F8, LsOA also had a weak advantage over other algorithms and achieved good results. For F10 and F14, LsOA tied for first with some algorithms and obtained the same results. On F12 and F13, LsOA showed an absolute advantage.

We comprehensively considered the test results of these benchmark functions. The last row in Table 5 is the final ranking result. The overall performance of LsOA on these 23 commonly used benchmark functions was excellent, with an average ranking of 1.17, ranking first in the comprehensive ranking, which was in turn superior to KO, MPA, TLBO, HBO, GWO, WOA, and PSO. The above experiments prove the superior performance of LsOA, which also shows that we can use this algorithm to screen the prediction features of COVID-19.

3.5 Setting training parameters and comparison methods

3.5.1 Training parameters

The parameters used in the GCN training model included the length of history\future time series, feature dimension, learning rate, training epoch, batch size, number of hidden layer nodes, optimizer, loss function, and so on. The processor of the experimental hardware environment was Intel (R) Pentium (R) CPU N3700 @ 1.60 GHz 1.60 GHz, a server with 4 GB of memory, and the software environment consisted of Python 3.6.12 and TensorFlow 1.14.0.

Among the training parameters, the number of hidden layer nodes is a very important parameter in the GCN model. Different numbers of hidden layer nodes will greatly affect the prediction accuracy. Too many nodes will cause over-fitting, and too few nodes will cause the model to be inaccurate. Therefore, in this part, we conducted a sensitivity analysis on the number of hidden layer nodes and conducted experiments with different numbers of hidden layer nodes, to analyze the performance of the model and finally determine the optimal number of hidden layer nodes by comparing the prediction results.

In this experiment, we determined the number of hidden layer nodes from {8, 16, 32, 64, 80, 100, 128, 144, 160}, conducted experiments on the premise that the number of training is 3000, and analyzed the change of prediction accuracy. Finally, the results of sensitivity analysis for different numbers of hidden layer nodes are shown in Fig. 10, where the abscissa represents the number of hidden layer nodes and the ordinate represents the corresponding value of each indicator.

Figure 10 shows the changes of various index values under different numbers of hidden layer nodes when the number of training times is 3000, where Fig. 10a and c, respectively, shows the changes of RMSE, MAE, and MAPE as the number of hidden layer nodes increases. It can be seen from these figures that when the number of hidden layer nodes is 16, the corresponding RMSE and MAE are the smallest, and MAPE is the smallest when the number of hidden layer nodes is 32, indicating that the difference between the predicted value and the actual value in the corresponding state is also the smallest. Figure 10b shows the changes of Accuracy and Var as the number of hidden layer nodes increases. It can be concluded from Fig. 10b that when the number of hidden layer nodes is 16, these three indicators all reach the maximum value, indicating that the model fitting effect is the best at this time. In summary, we could conclude that when the training times are set to 3000, the model has the best effect when the number of hidden layer nodes is set to 16.

We can also see from these figures that as the number of hidden layer nodes increases, the prediction accuracy first decreases, then increases, and then decreases. This is mainly because when the hidden layer node is larger than a certain level, the model complexity and calculation difficulty will greatly increase, thereby reducing the prediction accuracy. Therefore, according to the results of sensitivity analysis, we also set the number of hidden layer nodes as 16 in the subsequent experiment of LsOA-GCN. Finally, the parameters used for the LsOA-GCN training model we obtained are shown in Table 6.

3.5.2 Comparison methods

This part mainly compared several representative time series prediction methods in current epidemic prediction research, including multiple linear regression, support vector regression, backpropagation neural network, kernel ridge regression, Gaussian process regression, stochastic gradient descent regression, GAT, LSTM, and T-GCN models. The nine comparison methods are described as follows:

-

(1) Multiple Linear Regression (Yuchi et al. 2019). When performing regression analysis, we call regression with two or more independent variables under linear correlation conditions as multiple linear regression (MLR). This method uses the optimal combination of multiple independent variables to predict or estimate the dependent variable together. Because the relationship between multiple independent variables is integrated, it is usually a better method than linear regression, which is more effective and more realistic than using only one independent variable for prediction or estimation. In the comparison experiment, the parameters used in this method were all default values.

-

(2) Support Vector Regression (Brereton and Lloyd 2010). Support vector machine (SVM) is a class of generalized linear classifiers that perform binary classification of data in a supervised learning manner. Support vector regression (SVR) is an application model of support vector machines in regression problems. The support vector machine regression model has many variants based on different loss functions. The core idea is to find a separating hyperplane (hypersurface) that minimizes the expected risk. In the comparison experiment, the kernel type used in this method was 'poly', the penalty parameter C = 1.1, the kernel coefficient gamma of 'poly' was set as 'auto', the degree of the polynomial kernel function (‘poly’) was 3, epsilon = 0.1, the independent term in the kernel function coef0 = 1.0, and the rest of the parameters used default values.

-

(3) BP neural network (Wang et al. 2015). BP neural network (backpropagation neural network, BPNN) is a multilayer feedforward network trained according to error backpropagation. Its basic idea is the gradient descent method, which uses gradient search technology to minimize the mean square error between the real output value and the expected output value of the network. It is the most widely used neural network. The BPNN used in the comparison experiment was a three-layer network structure, and the node number of input layer, hidden layer, and output layer was 16, 32, and 1, respectively. The activation function was relu, loss was the mean square loss function, the optimizer used Adam algorithm, the training epoch was set to 3000, and the batch size was 7.

-

(4) Kernel Ridge Regression (Wu and Zhao 2020). The kernel ridge regression (KRR) algorithm introduces the kernel method based on the ridge regression algorithm. The independent variable space is mapped to the high-dimensional feature space through the kernel function, and then, the ridge regression method is used to analyze and process the data in the high-dimensional feature space. In other words, kernel ridge regression is a kind of regression algorithm, and its essence is to use ridge regression to make predictions. Ridge regression is linear regression, and it fits a straight line. When facing a nonlinear situation, you can map the independent variable space to the high-dimensional feature space by adding a kernel function, and then perform linear regression in the high-dimensional feature space. For the KRR model used in the comparison experiment, alpha = 1.0, the kernel used a linear kernel, the regularization strength coefficient was set to 0.9, and the rest of the parameters were default values.

-

(5) Gaussian Process Regression (Arthur et al. 2020). Gaussian process (GP) is a commonly used supervised learning method, which aims to solve regression problems and probabilistic classification problems. Gaussian process regression (GPR) implements the Gaussian process model in the case of regression. It is a nonparametric model that uses Gaussian process priors to perform regression analysis on data. In the comparison experiment, the kernel object used in this method was kernel = DotProduct() + WhiteKernel(), the generator used to initialize the center was random_state = 0, and the rest of the parameters adopted default values.

-

(6) Stochastic Gradient Descent Regression (Ighalo et al. 2020). Stochastic gradient descent (SGD) is a simple but highly efficient method, which is mainly used for discriminant learning of linear classifiers under convex loss functions, such as (linear) support vector machines and logistic regression. Stochastic gradient descent regression supports different loss functions and penalties to fit linear regression models. The SGD model used in the comparison experiment, the maximum number of iterations was set as 1000, the stopping criterion tol was set as ‘0.001’, and the rest of the parameters adopted default values.

-

(7) GAT (Velikovi et al. 2017). Graph attention network (GAT), which aggregates neighbor nodes through the attention mechanism and realizes the adaptive allocation of different neighbor weights. This is different from GCN. The weights of different neighbors in GCN are fixed, and they all come from the normalized Laplacian matrix. GAT greatly improves the expressive ability of the graph neural network model. The GAT model used in the comparison experiment was a double-headed three-layer network structure, the number of nodes in the three layers was 45, 16, and 1, respectively. The activation function was LeakyReLU, loss was the mean square loss function, the optimizer used the Adam algorithm, the default learning rate was 0.001, the training epoch was set to 3000, and the batch size was 7.

-

(8) LSTM (Hochreiter and Schmidhuber 1997). Long short-term memory (LSTM) is a time-cyclic neural network, which is specially designed to solve the long-term dependency problem of general recurrent neural network (RNN). The core concepts of LSTM lie in the cell state and the “gate” structure. The cell state is equivalent to the path of information transmission, so that information can be passed down in the serial connection, which can be regarded as the “memory” of the network. The addition and removal of information is achieved through the “door” structure, which learns which information should be saved or forgotten during the training process. The LSTM model used in the comparison experiment was a three-layer network structure. The number of nodes in the three layers was 45, 200, and 1, respectively. The optimizer used Adam algorithm, the initial learning rate was 0.005, the gradient threshold was set to 1, and after 125 cycles, the learning rate was reduced by multiplying by 0.1, the training epoch was 3000, and the batch size was 7.

-

(9) T-GCN (Zhao et al. 2020b). Temporal graph convolutional network (T-GCN) is proposed for traffic flow prediction and is mainly composed of two parts. One part is to use the graph convolutional network (GCN) to learn the connection relationship of roads in the traffic network graph, to model the spatial correlation of traffic temporal and spatial sequence data. The other part is to use gate recurrent unit (GRU) to obtain the time dependence of data. The T-GCN model used in the comparison experiment was composed of GCN and GRU. The optimizer used Adam algorithm, the initial learning rate was 0.001, the number of hidden layer nodes was 8, the activation function of the graph convolutional layer was sigmoid, the activation function of the GRU layer was tanh, the loss function was \(loss = ||Y_{t} - \hat{Y}_{t} || + \lambda L_{reg}\), the training epoch was 3000, and the batch size was 7.

3.6 Performance evaluation

In this section, in order to verify the effectiveness of the prediction model, we used the cumulative number of confirmed cases of COVID-19 from January 24, 2020, to March 29, 2020 (66 days in total), in 17 regions of Hubei Province and the Baidu Index of adjusted keywords as the data source, used the data in the data set before March 23, 2020, as the training set, and used the LsOA-GCN prediction model to predict the cumulative number of confirmed cases in the 7 days from March 23, 2020 to March 29, 2020. During training, the input data \(X_{i}\) is the keyword data on the \(i\)-th day, where \(X_{i} = \left[ {x^{1} , x^{2} , ..., x^{j} } \right]\), \(1 \le i \le 66\), \(1 \le j \le 44\), and the output value \(Y_{i + 1}\) is the cumulative number of confirmed cases on the (\(i + 1\))-th day. Finally, the prediction results were compared with the results of 10 representative prediction methods in current epidemic prediction research, including multiple linear regression, support vector regression, backpropagation neural network, kernel ridge regression, Gaussian process regression, stochastic gradient descent regression, GAT, LSTM, T-GCN and original GCN model. In order to visually test the prediction accuracy of each model, we compared the predicted value of each model with the actual value through evaluation indicators and obtained the RMSE, MAE, MAPE, Acc, R2, and Var of each model. The final results are shown in Tables 7 and 8, where Table 7 shows the comprehensive prediction results of Hubei Province and Table 8 shows the respective prediction results of 17 regions in Hubei Province.

It can be seen from the comprehensive prediction results in Table 7 that compared with the other 10 methods, the RMSE, MAE, and MAPE values of the cumulative number of confirmed cases predicted by the LsOA-GCN model are the smallest, which are 14.65572, 5.4005, and 0.0035, respectively. It shows that the cumulative number of confirmed cases predicted by this method is closer to the actual value, and the error is smaller. For the three types of indicators, Acc, R2, and Var, the values are all close to 1, indicating that the prediction accuracy of the prediction model is high and it shows an absolute advantage. From the regional prediction results in Table 8, we could also see that when predicting the cumulative number of confirmed cases in Xianning, LsOA-GCN ranked third with only a slight difference in the two indicators of MAE and MAPE. In addition, for the three types of indicators, RMSE, MAE and MAPE, LsOA-GCN has an absolute advantage over other forecasting methods. For Acc, in these 17 regions, the value of Acc is within the range of 0.9914 to 0.9990. The closer Acc is to 1, the stronger the fitting degree between the actual value and the predicted value is, the higher the prediction accuracy is, and the more accurate the prediction method is. In addition, we also found that the same prediction model was used for 17 regions in Hubei Province, but the prediction results would be different to some extent. For example, when using LsOA-GCN for prediction, the RMSE value for Wuhan is 50.6662, Ezhou is 1.4078, and Enshi is 0.2504, which shows that the same prediction method has different prediction effects for different regions.

The convergence curve of Fig. 11 also proves the influence of using LsOA for feature screening on the prediction results, where the ordinate is the value of RMSE, and the abscissa is the number of iterations. It can be seen from Fig. 11 that at the beginning of the iteration, the RMSE value drops sharply. As the iteration progresses, the RMSE value gradually decreases and finally stabilizes. The final feature matrix was a 17*66*3 matrix, and its keyword features were dyspnea (S), novel coronavirus pneumonia (S) and stuffy nose (X). Among them, the two symptoms of dyspnea (S) and stuffy nose (X) are not unique to COVID-19, but they are easy to recognize and attract attention. For the general population, this is an indicator that may be infected with COVID-19. In addition, novel coronavirus pneumonia (S) is a commonly used term in the epidemic, indicating that as the epidemic continues to spread, people have further information needs for the basic knowledge of COVID-19. Based on the above analysis, we could believe that these three keywords can reflect the prevalence of COVID-19 to a certain extent and have certain predictive value.

In general, for these six types of evaluation indicators, the LsOA-GCN prediction model has shown superior performance in Hubei Province or in the 17 regions of Hubei and is the best prediction method.

3.7 Statistical test

Before statistical analysis, Friedman was used to calculate the average ranking of various prediction methods on different indicators. The results are shown in Table 9. According to Friedman mean rank, LsOA-GCN performed best when considering 17 regions in Hubei Province, ranking first under the four indicators of RMSE, MAE, MAPE, and Acc. Among them, for RMSE, the average ranking of LsOA-GCN was 1.00, which was superior to GCN, LSTM, BPNN, SVR, GPR, MLR, KRR, SGD, T-GCN, GAT, respectively, by 182%, 324%, 329%, and 376%, 482%, 512%, 729%, 800%, 853%, 912%; for MAE, the average ranking of LsOA-GCN was 1.12, which was superior to GCN, LSTM, BPNN, SVR, GPR, MLR, KRR, SGD, T-GCN, GAT, respectively, by 121%, 284%, 295%, 363%, 405%, 426%, 637%, 721%, 753%, 800%; for MAPE, the average ranking of LsOA-GCN was 1.12, which was superior to GCN, LSTM, BPNN, SVR, GPR, MLR, KRR, SGD, T-GCN, GAT, respectively, by 121%, 284%, 289%, 363%, 426%, 426%, 632%, 716%, 753%, 795%; for Acc, the average ranking of LsOA-GCN was 11.00, which was superior to GCN, LSTM, BPNN, SVR, GPR, MLR, KRR, SGD, T-GCN, GAT, respectively, by 17%, 29%, 29%, 34%, 46%, 47%, 66%, 72%, 78%, 82%. These results once again verify the superior performance of the LsOA-GCN prediction method. The calculation method is as follows:

where \(rank_{i}\) represents the ranking of the prediction method \(i\), \(i\) is the prediction method used for comparison, and \(rank_{LsOA - GCN}\) is the ranking of LsOA-GCN.

When comparing methods, it is often necessary to perform statistical tests on experimental results. This part used some statistical test methods for comparing methods. First, a nonparametric Friedman test was used to determine whether there are significant differences in the performance of all methods under each indicator. In order to make a reliable comparison, we considered 11 methods and tested them on 4 different indicators for 17 regions, where the indicator used in the first group was RMSE, the second group was MAE, the third group was MAPE, and the fourth group was Acc. First, the Friedman test requires the average ranking to be calculated first. Then, the Friedman test should consider the critical value obtained at the significance level ( α = 0.05, 0.1) and compare the critical value with Friedman's statistical results to determine whether there is evidence that the null hypothesis is false. For the tests of these four indicators, we found that the results rejected the null hypothesis, which indicates that the performance of each group of methods has significant differences.

After the difference was determined, a “post hoc test” was needed to determine which methods have statistical differences in performance. For this purpose, we used the Bonferroni–Dunn test (Zar 2010). This test compared the proposed method, LsOA-GCN, with the other 10 methods; that is, the average ranking difference of each method was compared with the critical difference (CD). If the difference is greater than the critical difference, it means that the method with a good average ranking is statistically superior to the method with a bad average ranking; otherwise, there is no statistical difference between the two. The calculation formula of the critical difference is as follows:

where \(k\) is the number of methods used for comparison, \(N\) is the number of data sets, and the commonly used value of \({\text{q}}_{\alpha }\) can be obtained by looking up the table. See Appendix B for the table.

The results of the Bonferroni–Dunn test are shown in Fig. 12. In order to facilitate observation and comparison, here we only used three indicators: RMSE, MAE, and MAPE. And the average rankings of 11 methods on these 3 indicators are all reflected in the bar chart. The value corresponding to the horizontal line is equivalent to the threshold, that is, the ranking of the compared method plus the value of CD. This part defined two thresholds at the significance level of 0.05 and 0.1, respectively. Each group used a different color to identify (the first group was blue, the second group was red, the third group was green), and the threshold was also distinguished by different colors.

It is worth noting that the method used for comparison (LsOA-GCN) can outperform those whose average ranking is higher than the threshold line (i.e., the height of the bar exceeds its corresponding line). It can be seen from Fig. 12 that in these three groups, LsOA-GCN is better than all methods, with an average ranking of 1.00, 1.12, and 1.12, respectively. In Group 1, LsOA-GCN is significantly better than the other 7 methods except GCN, LSTM and BPNN at the significance level of 0.05 and 0.1, respectively. In Group 2 and Group 3, LsOA-GCN is also significantly better than these 7 methods of GAT, T-GCN, GPR, KRR, MLR, SGD, and SVR at these two significant levels.