Abstract

In this paper, we study the abundance of self-avoiding paths of a given length on a supercritical percolation cluster on \(\mathbb{Z }^d\). More precisely, we count \(Z_N\), the number of self-avoiding paths of length \(N\) on the infinite cluster starting from the origin (which we condition to be in the cluster). We are interested in estimating the upper growth rate of \(Z_N\), \(\limsup _{N\rightarrow \infty } Z_N^{1/N}\), which we call the connective constant of the dilute lattice. After proving that this connective constant is a.s. non-random, we focus on the two-dimensional case and show that for every percolation parameter \(p\in (1/2,1)\), almost surely, \(Z_N\) grows exponentially slower than its expected value. In other words, we prove that \(\limsup _{N\rightarrow \infty } (Z_N)^{1/N}{<}\lim _{N\rightarrow \infty } \mathbb{E }[Z_N]^{1/N}\), where the expectation is taken with respect to the percolation process. This result can be considered as a first mathematical attempt to understand the influence of disorder for self-avoiding walks on a (quenched) dilute lattice. Our method, which combines change of measure and coarse graining arguments, does not rely on the specifics of percolation on \(\mathbb{Z }^2\), so our result can be extended to a large family of two-dimensional models including general self-avoiding walks in a random environment.

1 Model and result

1.1 Introduction

We are interested in percolation on the \(\mathbb{Z }^d\) grid (\(d\ge 2\)) with its usual lattice structure. We delete each edge with probability \(1-p\) and investigate the connectivity properties of the resulting network (or rather of its unique infinite connected component when it exists). More precisely, we want to study the asymptotic growth of the number of self-avoiding paths of length \(N\) starting from a given (typical) point on the dilute lattice. A self-avoiding path is a lattice path that does not visit the same vertex twice.

Comparing the number of self-avoiding paths with its expected value gives some heuristic information concerning the influence of a quenched edge dilution on the trajectorial behavior of the self-avoiding walk.

1.2 The self-avoiding walk

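Let us first recall some facts about the self-avoiding walk on a regular lattice (we focus on \(\mathbb{Z }^d\) for the sake of simplicity). Set

\[\mathcal S _{N}:=\left\{ S=(S_n)_{0\le n\le N} \ : \ S_0=0,\ |S_{n+1}-S_n|=1 \text{ for all } n<N,\ S_i\ne S_j \text{ for all } i\ne j \right\}\]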

and \(s_N:=|\mathcal S _{N}|\). As \(s_N\) is a submultiplicative function, the limit

\[\mu _d:=\lim _{N\rightarrow \infty } s_N^{1/N}\]

exists.

The constant \(\mu _d\) is called the connective constant of the network. It is not expected to take any remarkable value as far as \(\mathbb{Z }^d\) is concerned (on the two dimensional honeycomb lattice on the contrary, it has been conjectured for a long time and has been recently proved that \(\mu =\sqrt{2+\sqrt{2}}\), see [11]).
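As an illustration of these definitions, the following minimal sketch enumerates self-avoiding paths on \(\mathbb{Z }^2\) by exhaustive depth-first search and prints \(s_N^{1/N}\) for small \(N\). The function name `count_saw` is ours and purely illustrative; brute-force enumeration is only feasible for small \(N\) and says nothing rigorous about the limit.

```python
from typing import Optional, Set, Tuple

Site = Tuple[int, int]
# The four nearest-neighbour steps on Z^2.
STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def count_saw(n: int, site: Site = (0, 0),
              visited: Optional[Set[Site]] = None) -> int:
    """Count self-avoiding paths of length n on Z^2 starting from `site`."""
    if visited is None:
        visited = {site}
    if n == 0:
        return 1
    total = 0
    for dx, dy in STEPS:
        nxt = (site[0] + dx, site[1] + dy)
        if nxt not in visited:
            # Extend the path by one step, recurse, then backtrack.
            visited.add(nxt)
            total += count_saw(n - 1, nxt, visited)
            visited.remove(nxt)
    return total


if __name__ == "__main__":
    for n in range(1, 9):
        s_n = count_saw(n)
        # s_n ** (1/n) is a crude finite-N proxy for the connective constant.
        print(n, s_n, round(s_n ** (1.0 / n), 4))
```

Submultiplicativity can be checked on these small values, for instance \(s_4\le s_2^2\).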

The self-avoiding walk of length \(N\) is a stochastic process whose law is given by the uniform probability measure on \(\mathcal S _{N}\). It has been introduced as a model for polymers by Flory [12]. Theoretical physicists have then been interested in describing typical behavior of the walk for large \(N\), to understand whether it differs from that of the simple random walk and why. Their answer to this question depends on the dimension:

(i) When \(d>4\), the self-avoiding constraint is a local one. Indeed, around a typical point of a simple random walk’s trajectory, the past and the future intersect finitely many times, at a finite distance. For this reason, the self-avoiding walk in dimension larger than 4 scales like Brownian Motion. The case \(d=4\) which corresponds to the critical dimension, should be similar but with logarithmic corrections.

(ii) When \(d<4\), the self-avoiding constraint acts also on a large scale and modifies the macroscopic structure of the walk. In particular, it forces the walk to go further: the end to end distance \(|S_N|\) is believed to scale like \(N^\nu ,\) where \(\nu =3/4\) for \(d=2\) and \(\nu \simeq 0.59\) for \(d=3\).

On the mathematical side, the picture is much less complete. Above the critical dimension, when \(d>4\), the use of the lace expansion by Brydges and Spencer [4] made it possible to make the physicists' predictions rigorous, but when \(d<4\), very few things are known rigorously (for a complete introduction to the subject and a list of conjectures see the first chapter of [27], or [29] for a more recent survey). Note that recently, Duminil-Copin and Hammond [10] proved that the self-avoiding walk is non-ballistic in every dimension.

1.3 Percolation on \(\mathbb{Z }^d\)

Let \(\omega \) be the edge dilution (or percolation) process defined on the set of the edges of \(\mathbb{Z }^d\) as follows:

- \((\omega (e))_{e\in E_d}\) is a field of IID \(\{0,1\}\) Bernoulli variables with law \(\mathbb{P }_p\) satisfying \(\mathbb{P }_p(\omega (e)=1)=p\).

- Every edge \(e\) such that \(\omega (e)=1\) is declared open or present whereas the others are deleted (or closed).

A set of edges is declared open if all the edges in it are open; a self-avoiding path \(S\) is declared open if all the edges in the path are open (we will use the informal notation \(e\in S\) to say that \(e=(S_n,S_{n+1})\) for some \(n\)).

The nature of the new lattice obtained after deleting edges depends crucially on the value of \(p\). There exists a constant \(p_c(d)\) called the percolation threshold such that the dilute lattice contains a unique infinite connected component if \(p\in (p_c,1]\) (in addition to countably many finite connected components), and none if \(p<p_c\). It is also known that \(p_c(2)=1/2\) (see e.g. [16] for a complete introduction to percolation).

1.4 The quenched connective constant for the percolation cluster

In what follows we consider exclusively the supercritical percolation regime where \(p>p_c\). We let \(\mathcal C \) denote the supercritical percolation cluster (the unique infinite connected component).

Set \(Z_N\) to be the number of open self-avoiding paths of length \(N\) starting from the origin:

\[Z_N:=\left| \left\{ S\in \mathcal S _{N} \ : \ S \text{ is open} \right\} \right| .\]

Similarly, one can define \(Z_N(x)\) by considering paths that start from \(x\) instead of paths starting from the origin. One has trivially

Our aim is to study the asymptotic behavior of \(Z_N(x)\) when \(x\) belongs to \(\mathcal C \). One can easily compute its expectation: for \(x\) in \(\mathbb{Z }^d\) we have

\[\mathbb{E }_p\left[ Z_N(x)\right] =p^N s_N,\]

and this gives an upper bound on the possible growth rate of \(Z_N(x)\) (we cannot compute the expectation conditioned on \(x\in \mathcal C \) exactly, but the reader can check that it has the same order of magnitude).
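To make the quantity \(Z_N\) concrete, here is a small sketch that counts open self-avoiding paths from the origin in one sample of bond percolation on \(\mathbb{Z }^2\). The names `EdgeField` and `count_open_saw` are hypothetical helpers introduced for illustration. Edge states are sampled lazily the first time an edge is queried, which is a simulation convenience (an assumption of this sketch) and does not change the law of the field.

```python
import random
from typing import Dict, FrozenSet, Optional, Set, Tuple

Site = Tuple[int, int]
STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))


class EdgeField:
    """IID Bernoulli(p) edge states on Z^2, sampled on first access."""

    def __init__(self, p: float, seed: int = 0) -> None:
        self.p = p
        self._rng = random.Random(seed)
        self._state: Dict[FrozenSet[Site], bool] = {}

    def is_open(self, a: Site, b: Site) -> bool:
        edge = frozenset((a, b))  # undirected edge {a, b}
        if edge not in self._state:
            self._state[edge] = self._rng.random() < self.p
        return self._state[edge]


def count_open_saw(omega: EdgeField, n: int, site: Site = (0, 0),
                   visited: Optional[Set[Site]] = None) -> int:
    """Count self-avoiding paths of length n from `site` using only open edges."""
    if visited is None:
        visited = {site}
    if n == 0:
        return 1
    total = 0
    for dx, dy in STEPS:
        nxt = (site[0] + dx, site[1] + dy)
        if nxt not in visited and omega.is_open(site, nxt):
            visited.add(nxt)
            total += count_open_saw(omega, n - 1, nxt, visited)
            visited.remove(nxt)
    return total


if __name__ == "__main__":
    omega = EdgeField(p=0.7, seed=1)
    for n in range(1, 9):
        print(n, count_open_saw(omega, n))
```

Averaging `count_open_saw` over many independent samples gives a Monte Carlo estimate that can be compared with \(p^N s_N\); a single sample may of course give \(Z_N=0\) when the origin is not in the infinite cluster.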

Now we define two versions of the connective constant \(\mu _d\) for the infinite percolation cluster \(\mathcal C \). We call

\[\lim _{N\rightarrow \infty } \left( \mathbb{E }_p\left[ Z_N\right] \right) ^{1/N}=p\,\mu _d\]

the annealed connective constant. To define the quenched one, we prove the following result, which is valid in any dimension.

Proposition 1.1

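For every \(x\in \mathcal C \), the upper limit

\[\mu _d(p):=\limsup _{N\rightarrow \infty } \left( Z_N(x)\right) ^{1/N}\]

does not depend on \(x\) and is non-random. We call it the quenched connective constant.

It satisfies the inequality

\[\mu _d(p)\;\leqslant \; p\,\mu _d(1). \tag{1.8}\]

Moreover, the ratio

\[\frac{\mu _d(p)}{p\,\mu _d(1)}\]

between the quenched and the annealed connective constants is a non-decreasing function of \(p\) on \((p_c,1]\).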

Remark 1.2

We believe that

exists, but the best we can do here is to state this as a conjecture.

We are interested in knowing whether or not the inequality (1.8) is sharp. The reason for this interest is that at a heuristic level, the ratio \(Z_N/\mathbb{E }[Z_N]\) conveys some information on the trajectorial behavior of the self-avoiding walk on the dilute lattice. The self-avoiding walk of length \(N\) on the dilute lattice is the stochastic process whose law is given by the uniform probability measure on the random set

Note that this definition makes sense for all \(N\) only if \(0\in \mathcal C \).

In analogy with what happens for directed polymers in a random environment, we believe that:

(i) If \(Z_N/\mathbb{E }[Z_N]\) is typically of order 1, then the self-avoiding walk on the dilute lattice has a similar behavior to the walk on the full lattice.

(ii) If \(Z_N/\mathbb{E }[Z_N]\) decays exponentially fast, then disorder changes the behavior of the trajectories. It induces localization of trajectories (they concentrate in the regions where \(\omega \) is more favorable), and possibly stretches them, making the end-to-end distance \(|S_N|\) larger.

Using some of the techniques that have been used for directed polymers could bring these statements onto more rigorous ground [see [8] for an analogy with case (i) and [5] for an analogy with the localization part of (ii)]. Of course, saying something rigorous about the end-to-end distance for the disordered model is quite hopeless as it is already a difficult open question for the homogeneous model.

Remark 1.3

As the ratio \(\mu _d(p)/p\mu _d(1)\) is non-decreasing, there exists a unique \(\bar{p}_c\in [p_c,1]\) such that \(\mu _d(p)<p\mu _d(1)\) for \(p\in (p_c,\bar{p}_c)\) and \(\mu _d(p)=p\mu _d(1)\) for \(p\in (\bar{p}_c,1]\) (one of these intervals possibly being empty).

If \(\bar{p}_c\in (p_c,1)\) then the function \(p\mapsto \mu _d(p)\) cannot be analytic around \(\bar{p}_c\) so that \(\bar{p}_c\) delimits a phase transition in the usual sense of the term, between what we call a localized or strong-disorder phase \((p_c,\bar{p}_c)\) and a weak-disorder phase \((\bar{p}_c,1]\). We have no evidence that \(p\mapsto \mu _d(p)\) is analytic or even continuous on \((p_c,\bar{p}_c)\).

1.5 Main result

The main result of this paper is that the quenched connective constant is strictly smaller than the annealed one for the model on \(\mathbb{Z }^2\), suggesting localization of the trajectories.

Theorem 1.4

For every \(p\in (p_c(2),1)\)

\[\mu _2(p)<p\,\mu _2(1), \tag{1.11}\]

meaning that \(Z_N/\mathbb{E }_p\left[ Z_N\right] \) tends to zero exponentially fast. Moreover, the function

\[p\mapsto \frac{\mu _2(p)}{p\,\mu _2(1)}\]

is strictly increasing on \((p_c(2),1]\).

Although the proof allows one to extract an explicit upper bound for \(\mu _2(p)-p\mu _2(1)\), which gets exponentially small when \(p\) approaches one, we believe it to be far from optimal when edge dilution is small. Indeed, if \(|S_N|\) scales like \(N^{\nu }\) with \(\nu <1\), then the argument of the proof in [23, §3] gives at a heuristic level that \(p\mu _2(1)-\mu _2(p)\) is at least of order \((1-p)^{\frac{1}{2(1-\nu )}}\) (which is to be compared with the bound in (3.27)).

1.6 Comparison with predictions in the physics literature

Although the physics literature concerning the self-avoiding walk on a dilute lattice is quite rich (for the first paper on the subject see [6]), it is difficult to extract a solid conjecture on the value of \(\mu _d(p)\) from the variety of contributions.

The first reason is that most of the studies focus on the trajectorial behavior, and only marginal attention is given to the partition function \(Z_N\) (in that respect, [1] is a noticeable exception with explicit focus on \(\mu _d(p)\)).

The second reason is that, although this is often not stated explicitly, most of the papers from the eighties seem to focus on the annealed model of the self-avoiding walk on a percolation cluster, which is mathematically trivial (see for instance the sequence of equations to compute the mean square of the end-to-end distance in [19]).

Because of this last remark, we do not feel that our result contradicts the many papers (see e.g. [19, 20, 22]) which predict that edge-dilution does not change the walk’s behavior. The few numerical studies available concerning the connective constant are not very informative either: both [7, Table 1] and [1, Fig. 3] give values for \(\mu _2(p)\) that violate the annealed bound \(\mu _d(p)\;\leqslant \; p \mu _d(1)\).

In [9], the authors clearly state that they study the quenched problem, and make a number of predictions partially based on a renormalization group study performed on hierarchical lattices:

- When \(d=2,3\), there is no weak disorder/strong disorder phase transition, and an arbitrary small dilution changes the properties of the self-avoiding walk.

- When \(d>4\), a small edge-dilution does not change the trajectorial property, and there is a phase transition from a weak disorder phase to a strong disorder phase when \(p\) varies.

Furthermore, they give an explicit formula linking the typical fluctuations of \(\log Z_N\) around its mean with the end-to-end exponent \(\nu \) in the strong disorder phase. This prediction agrees with the earlier one present in [6], where the Harris Criterion (from [18]) is used to decide on disorder relevance.

To our understanding, our result partially confirms the prediction of [9] in dimension 2. We would translate the higher dimension predictions in terms of the quenched connective constant as follows:

- For \(d=3\), \(\mu _d(p)<p\mu _d(1)\) for all \(p<1\).

- When \(d>4\), we have \(\bar{p}_c(d)\in (p_c(d),1)\) where \(\bar{p}_c(d)\) is defined in Remark 1.3.

This is quite similar to what happens for directed percolation (see [24]) where for \(d\;\leqslant \;2\) the number of open directed paths is much smaller than its expected value for every \(p\), while when \(d\;\geqslant \; 3\) a weak disorder phase exists.

In Sect. 3.2 we prove a result that supports this conjecture, namely that if the volume exponent in dimension 3 is smaller than 2/3, then \(Z_N\) is typically much smaller than its expectation (but not necessarily exponentially smaller).

Predicting anything about the critical dimension \(d=4\) is trickier, and all of this remains at a very speculative level. Also, the existence of a weak disorder phase is a much more challenging question than for directed percolation for which it can be proved with a two-line computation (by computing the second moment of \(Z_N\)).

Note that the relevant critical dimension for the problem we are interested in should be the one of the random walk and not the one of the percolation process: \(d=4\) for the self-avoiding walk, and \(d=2\) for the simple random walk in the oriented model.

Remark 1.5

After the first draft of this work appeared, we have been able to make a step further in confirming the physicists’ prediction by proving that in large enough dimensions \(\bar{p}_c(d)>p_c\), i.e. that the strong disorder phase exists (see [25]).

2 Existence of the quenched connective constant and monotonicity properties

2.1 Proof of Proposition 1.1

We prove in this section that \(\mu _d(p)\) is well-defined. Some intermediate lemmata are proved for general infinite connected graphs.

Lemma 2.1

For an infinite connected graph \(\mathcal C \) with bounded degree and \(x\) a vertex of \(\mathcal C \), we define

Then the quantity

is a constant function of \(x\).

Before starting the proof, let us mention that the formula (2.3) below, which is the main step of the proof, also appears in a paper of Hammersley [17, Eq. (13)], where it is proved using the same argument.

Proof

As \(\mathcal C \) is by definition connected, it is sufficient to show that \(\limsup _{N\rightarrow \infty } ( Z_N(x))^{1/N}\) takes the same value for every pair of neighbors. Let \(x\) and \(x'\) be connected by an edge of \(\mathcal C \). Let \(\bar{Z}_N(x)\) be the number of self-avoiding paths of length \(N\) starting from \(x\) and never visiting \(x'\). Let \(Y_N(x,x')\) be the number of self-avoiding paths of length \(N\) starting from \(x\) and ending at \(x'\). One has trivially (noting that \(Y_N(x,x')=Y_N(x',x)\))

The second inequality simply says that \(\bar{Z}_N(x)\) also counts the number of paths of length \(N+1\) starting from \(x'\) whose first step is \(x\).

Let \(k\) denote the time of the visit of \(S\) to \(x'\). Decomposing \(Z_N(x)\) according to the possible values of \(k\) one gets

which is enough to conclude that

and thus by symmetry, that the two are equal. \(\square \)

Remark 2.2

To our knowledge, Lemma 2.1 has not appeared before in the literature.

What remains to be done is to prove that when \(\mathcal C \) is a supercritical percolation cluster, \(\mu (\mathcal C )\) is a.s. non-random. A first step is to show that \(\mu (\mathcal C )\) is non-sensitive to individual edge addition (and thus to edge removal).

Lemma 2.3

Let \(\mathcal C \) be an infinite connected graph with bounded degree and \(x,x'\in \mathcal C \) that are not linked by an edge. Then define

(i) \(\mathcal C '\) to be the graph constructed from \(\mathcal C \) by adding a new vertex called \(y\) and an edge \((x,y)\) linking \(x\) to \(y\).

(ii) \(\mathcal C ''\) to be the graph with same set of vertices as \(\mathcal C \), and an added edge: \((x,x')\).

We have

Proof

We call \(Z'_N\) and \(Z''_N\) the number of self-avoiding paths of length \(N\) on \(\mathcal C '\) resp. \(\mathcal C ''\). Note that for \(N\ge 2\), we have \(Z_N(x)=Z'_N(x)\) and thus \(\mu (\mathcal C )= \mu (\mathcal C ')\).

Now consider the case of \(\mathcal C ''\). Decomposing over paths that use the edge \((x,x')\) and those that don’t, one gets

Taking the above inequality to the power \(\frac{1}{N}\) and passing to the \(\limsup \), one gets that

which ends the proof. \(\square \)

We are now ready to conclude the proof of Proposition 1.1. In what follows \(\mathcal C \) denotes again the infinite connected component of the percolation process. By uniqueness of the infinite cluster, modifying the environment on any finite set of edges only adds or deletes finitely many edges to \(\mathcal C \), so that the new cluster can be obtained from the old one by performing Operations (i) or (ii) of Lemma 2.3 or their converse a finite number of times. Hence by Lemma 2.3, \(\mu (\mathcal C (\omega ))\) is measurable with respect to the tail sigma-algebra of the field \((\omega _e)_{e\in E_d}\), which is known to be trivial. Hence it is non-random. \(\square \)

2.2 Proof of monotonicity of \((\mu _d(p)/p\mu _d(1))\)

To prove the monotonicity of the ratio between the quenched and annealed connective constants, we use a quite standard coupling argument. We couple the two measures \(\mathbb{P }_p\) and \(\mathbb{P }_{p'}\) for \(p_c<p<p'<1\) as follows: let \(E_d\) denote the set of edges of \(\mathbb{Z }^d\); we consider a field \((X(e))_{e\in E_d}\) of IID random variables (call \(\mathbb{E }\) the law of the field) that are uniformly distributed in \([0,1]\). Then one sets

With this construction, the infinite open clusters of \(\omega _p\) and \(\omega _{p'}\) satisfy \(\mathcal C _p\subset \mathcal C _{p'}\). Moreover, if one sets

then one has

The reader can then check that

Summing over \(S\in \mathcal S _{N}\) gives

Using the Borel–Cantelli Lemma, one gets that for all \(N\) large enough

which implies

\(\square \)
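The coupling used in this proof can be sketched in a few lines. The sketch assumes the usual threshold rule, namely that an edge is open in \(\omega _p\) when \(X(e)\le p\) (the displayed definition is not reproduced here, so this rule is an assumption), and the helper name `coupled_configs` is hypothetical. It only illustrates the monotonicity \(\omega _p\le \omega _{p'}\) edge by edge, not the rest of the argument.

```python
import random
from typing import Dict, Hashable, Iterable, Tuple


def coupled_configs(edges: Iterable[Hashable], p: float, p_prime: float,
                    seed: int = 0) -> Tuple[Dict[Hashable, int], Dict[Hashable, int]]:
    """Build omega_p and omega_{p'} from one shared field of uniform variables."""
    rng = random.Random(seed)
    # One uniform variable X(e) per edge, shared by both configurations.
    x = {e: rng.random() for e in edges}
    # Assumed rule: e is open at level q exactly when X(e) <= q.
    omega_p = {e: int(u <= p) for e, u in x.items()}
    omega_p_prime = {e: int(u <= p_prime) for e, u in x.items()}
    return omega_p, omega_p_prime


if __name__ == "__main__":
    # Horizontal and vertical edges of a 20 x 20 box of Z^2.
    box = [((i, j), (i + 1, j)) for i in range(20) for j in range(20)]
    box += [((i, j), (i, j + 1)) for i in range(20) for j in range(20)]
    low, high = coupled_configs(box, p=0.6, p_prime=0.8, seed=42)
    # Every edge open at level p is also open at level p'.
    print(all(low[e] <= high[e] for e in box))
```

Because both configurations are read off the same uniform field, any path that is open for \(\omega _p\) is automatically open for \(\omega _{p'}\).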

3 Proof of the main result: Theorem 1.4

In this section we focus on the proof of the non-equality between the quenched and annealed connective constants, or Eq. (1.11). For the proof of the strict monotonicity of \(\mu _d(p)/p\mu _d(1)\) we refer to Sect. 4.

3.1 About the proof

The main ingredients of the proof are fractional moment, coarse-graining and change of measure. This combination of ingredients has been used several times in the recent past in the study of disordered systems with the aim of comparing quenched and annealed behaviors. The method was first introduced in [13] for the study of disordered pinning on a hierarchical lattice. It was then improved in [28] (introduction of an efficient coarse-graining on a non-hierarchical setup) and in [14, 15] (improvement of the change of measure argument by introducing a multibody interaction). It has also been successfully adapted to a variety of models and we can cite a few contributions on the random walk pinning model [2, 3], directed polymers in a random environment [23], stretched polymers [31], random walk in a random environment [30] (for technical details [23, §4] is probably the most related to what we are doing here).

The major difference between all the models mentioned above and the one we are studying here is the amount of knowledge that one has on the annealed model. The annealed version of all of the models is either the directed simple random walk or a mildly modified version of it (e.g. in [30, 31]) and the proof uses the rather precise knowledge that one has about the simple random walk (e.g. the central limit theorem) to draw conclusions. In [14, 15, 23, 30, 31], in the (1 + 2)- or 3-dimensional case, the need for a more refined change of measure is due to the fact that we are at the critical dimension, where extra precision is needed.

On the contrary, here, even though we are not at the critical dimension (recall that we believe that the result also holds in dimension 3), similar refinements have to be used for a different reason. The problem is rendered more difficult by the fact that almost nothing has been rigorously proved for the planar self-avoiding walk in spite of numerous conjectures (e.g. we don’t have a good control on \(\mathbb{E }\left[ Z_N \right] \) beyond the exponential scale, and we almost have no rigorous knowledge about the trajectory properties). For this reason, we need a method that covers all of the worst-case scenarios. As a consequence we believe that the quantitative estimate that we derive from our method is almost irrelevant. The techniques we use rely neither on the peculiar features of percolation nor on the lattice and thus are quite easy to export to other 2-dimensional models (see Sects. 5 and 6).

The main novelties in the proof are:

- A new type of coarse-graining, that allows one to take into account the fact that contrary to the directed walk case, the walk can and will return to regions that it has already visited (in [30, 31] even if the walks are not directed the situation is different because they naturally stretch along one direction).

- A new type of change of measure (inspired by the one used in [23], but adapted to our new setup) and a new method to estimate the gain given by this change of measure.

The rest of the proof is organized as follows: In Sect. 3.2, in order to familiarize the reader with our change of measure technique, we prove a simple result (Proposition 3.1) which gives a relation between the volume exponent and the behavior of the partition function at small dilution. In Sect. 3.3, we explain what we mean by fractional moments, and introduce our coarse-grained decomposition. It associates a coarse-grained lattice animal with each trajectory. This reduces the proof of (1.11) to Proposition 3.2, which controls the contribution of each animal. In Sect. 3.4 we give the main idea of the proof of Proposition 3.2, which is proved in Sects. 3.5 and 3.6 for small and large values of \(m\) respectively, \(m\) being the size of the coarse-grained animal.

3.2 Change of measure without coarse graining: heuristics and conjecture support

In order to give the reader a clear view of the ideas hiding behind our proof strategy, we want to prove first a simpler result that partially confirms the prediction of [9]. Note also that it suggests that the use of the Harris Criterion in [6] is valid, as our result establishes a relation between the relevance of disorder and the positivity of the specific heat exponent \(2-d\nu _d\).

Set

where \(\Vert S_n\Vert \) is the \(l_{\infty }\)-norm of \(S_n\), and \(s_N(\alpha ):=|\mathcal S _{N}(\alpha )|\). We define the volume exponent

Proposition 3.1

Assume that the relation \(d\nu _d<2\) is satisfied. Then for all \(p<1\), in probability,

In particular when \(d=3\), if \(\nu _3<2/3\) then (3.3) holds for all \(p<1\).

Proof

Choose \(\alpha >\nu _d\) such that \(d\alpha <2\) and set

By the definition of \(\nu _d\) one has

and hence \(Z_N^{(2)}/\mathbb{E }[Z_N]\) tends to zero in probability.

To show that \(Z_N^{(1)}/\mathbb{E }[Z_N]\) also tends to zero in probability, we prove that

We introduce a new measure \(\widetilde{\mathbb{P }}_N\) on the environment which modifies the law of \(\omega \) inside the box \([-N^{\alpha },N^\alpha ]^d\). Under \(\widetilde{\mathbb{P }}_N\), the \(\omega (e)\) are still independent Bernoulli variables but they are not identically distributed, and the probability of being open is lower for edges in \([-N^{\alpha }, N^\alpha ]^d\), or more precisely

where \(e\in [-N^{\alpha },N^\alpha ]^d\) means that both ends of \(e\) are in \([-N^{\alpha },N^\alpha ]^d\), and

The Radon–Nikodym derivative of \(\widetilde{\mathbb{P }}_N\) with respect to \(\mathbb{P }\) is equal to

With this choice of \(p'\), the probability law \(\widetilde{\mathbb{P }}_N\) is not too different from \(\mathbb{P }\) (the total variation distance between the two is bounded away from one when \(N\) tends to infinity), but \(\widetilde{\mathbb{E }}_N\left[ Z_N^{(1)}\right] \) is much smaller than \(\mathbb{E }[Z_N^{(1)}]\), and this is what is crucial to make our proof work.

Using the Cauchy–Schwarz inequality

For a path in \(S\in \mathcal S _{N}(\alpha )\) we have \(\widetilde{\mathbb{P }}_N(S \text { is open})=(p')^N\), and hence

Moreover

The first inequality above uses second order Taylor expansions of \((1\pm x)^{-1}\) and thus is valid for fixed \(p\), when \(N\) is sufficiently large. The last inequality uses the fact that

Hence combining (3.8) with (3.9) and (3.10) we obtain

for some constant \(C(d,p)\). As \(1-(d\alpha )/2>0\) this shows that (3.5) holds and this concludes the proof. \(\square \)

A drawback of the result presented in this section is that it is not even known rigorously that \(\nu <1\) for \(d=2\) so even in this case it cannot apply. Also—and this is the most important point—it does not give the exponential decay of \(Z_N/ \mathbb{E }[Z_N]\), but only its convergence to zero in probability.

To prove the exponential decay of \(Z_N / \mathbb{E }[Z_N]\) we use the change of measure technique of the proof of the proposition above, but combine it with a coarse graining argument: we divide the lattice into big cells of width \(N_0\) and then apply a change of measure similar to the one in (3.7) to each cell. This technique will allow us to gain better information on the decay of \(Z_N / \mathbb{E }_p[Z_N]\). Changing the density of the open edges as in (3.7) is however not always sufficient, and we will have to use a more subtle change of measure that induces negative correlation between the edges in one cell (Sect. 3.6).

3.3 The fractional moment method and animal decomposition

Fractional moment is a technique extensively used by physicists that consists in estimating non-integer moments of a partition function in order to get information about it. From now on we omit implicit dependences on \(p\) in our notation when it does not affect understanding.

In our case, the fractional moment method consists in saying that to prove our result (1.11), it is sufficient to prove that there exists \(\theta \in (0,1)\) and \(b<1\) such that, for \(N\) large enough

Indeed by the Borel–Cantelli Lemma (combined with the Markov inequality), (3.12) implies that a.s. for sufficiently large \(N\)

so that passing to the \(\limsup \) one gets

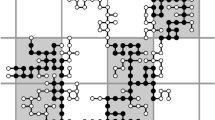

We consider the following coarse graining procedure which associates a lattice-animal on a rescaled lattice with each path. Set

where \(C_2\) is a constant (independent of \(p\)) whose value will be fixed at the end of the proof, and let us partition the set of edges \(E_2\) into squares of side length \(N_0\). More precisely, let \(r(e)\) denote the smaller end (for the lexicographical order on \(\mathbb{Z }^2\)) of an edge \(e\in E_2\), and for \(x\in \mathbb{Z }^2\) set

Now, one associates with each path \(S\) the set of squares \(I_x\) that it visits. Set

Note that \(A(S)\) is a connected subset of \(\mathbb{Z }^2\) that contains the origin (sometimes called a site-animal), and that

The upper bound comes from the fact that in \(N_0\) steps, one cannot visit more than 9 different \(I_x\)’s (the one from which one starts and its eight neighbors), whereas the lower bound simply uses the fact that there are only \(N_0^2\) sites to visit in each square. From now on, one drops the integer parts in the notation for simplicity. Let \(\mathfrak A _m\) be the set of connected subsets of \(\mathbb{Z }^2\) of size \(m\) containing the origin, and \(a_m:=|\mathfrak A _m|\). For each animal \(\mathcal A \) we set

Then one decomposes the partition function according to the contribution of each animal:

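We use the following trick: for any \(\theta \in (0,1)\) and any summable sequence of positive numbers \((a_n)_{n\in \mathbb{N }}\) one has

\[\left( \sum _{n\in \mathbb{N }} a_n\right) ^{\theta }\;\leqslant \;\sum _{n\in \mathbb{N }} a_n^{\theta }.\]

Thus applying this to (3.20) and averaging one gets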

There are at most exponentially many animals of size \(m\). Here, we use the crude estimate \(a_m{\;\leqslant \;} 49^m\) (see e.g. [16, (2.4) p. 81] for a proof: the definition of lattice animal given there differs, but the bound still applies). Hence

Thus, in order to prove (3.12) it is sufficient to prove that \(\mathbb{E }\left[ Z_N(\mathcal A )^{\theta }\right] {\;\leqslant \;} 100^{-m}(p^N s_N)^{\theta }\) for every \(m\) and \(\mathcal A \). This is the key part of the proof.
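The coarse-graining map from a path to its animal can be sketched as follows. Since the displayed definitions of \(I_x\) and \(A(S)\) are not reproduced here, the sketch assumes that \(I_x\) collects the edges \(e\) whose smaller end \(r(e)\) lies in the square \(N_0x+[0,N_0)^2\), and that \(A(S)\) is the set of \(x\) such that \(S\) uses at least one edge of \(I_x\). The names `block_of_edge`, `animal` and the parameter `n0` are illustrative.

```python
from typing import List, Set, Tuple

Site = Tuple[int, int]


def block_of_edge(a: Site, b: Site, n0: int) -> Site:
    """Index x of the block I_x containing the edge {a, b} (assumed convention)."""
    # Tuples compare lexicographically, so min() returns the smaller end r(e).
    r = min(a, b)
    # Floor division also handles negative coordinates correctly.
    return (r[0] // n0, r[1] // n0)


def animal(path: List[Site], n0: int) -> Set[Site]:
    """Set of block indices visited by the edges of a lattice path."""
    return {block_of_edge(path[i], path[i + 1], n0)
            for i in range(len(path) - 1)}


if __name__ == "__main__":
    # An L-shaped self-avoiding path of length 6 starting from the origin.
    path = [(0, 0), (1, 0), (2, 0), (3, 0), (3, 1), (3, 2), (3, 3)]
    print(sorted(animal(path, n0=2)))
```

Grouping the paths of \(\mathcal S _{N}\) by the value of `animal(path, n0)` mirrors the decomposition of \(Z_N\) into the contributions \(Z_N(\mathcal A )\).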

Proposition 3.2

For \(\theta =1/2\) and for every \(\mathcal A \in \mathfrak A _m\)

if the constant \(C_2\) is chosen large enough.

The above proposition combined with Eq. (3.23) implies that for \(\theta =1/2\)

which implies (3.12) for \(b=5^{-1/N^2_0}\) (5 is chosen instead of 4 to absorb the extra \(N\) factor appearing) and \(N\) large enough. Thus from (3.12), (3.13) and (3.14) we get

Hence there exists a constant \(c\) such that for all \(p\)

3.4 Change of measure strategies

Let us explain the strategy behind the proof of Proposition 3.2. It is based on a change of measure argument. The fundamental idea is that if \(\mathbb{E }[\sqrt{Z_N(\mathcal{A })}]\) is much smaller than \(\sqrt{\mathbb{E }[Z_N(\mathcal{A })]}\), it must be because there is a small set of \(\omega \) (of small \(\mathbb{P }\) probability) giving the main contribution to \(\mathbb{E }[Z_N(\mathcal{A })]\). We want to introduce a random variable \(f_{\mathcal{A }}(\omega )\) which takes a low value for these atypical environments.

Lemma 3.3

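For any \(\mathcal A \) and any positive random variable \(f_\mathcal{A }\) one has

\[\mathbb{E }\left[ Z_N(\mathcal A )^{1/2}\right] \;\leqslant \;\left( \mathbb{E }\left[ f_\mathcal{A }Z_N(\mathcal A )\right] \right) ^{1/2}\left( \mathbb{E }\left[ (f_\mathcal{A })^{-1}\right] \right) ^{1/2}.\]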

Apply the Cauchy–Schwarz inequality to the product \((Z_N(\mathcal A )f_\mathcal{A })^{1/2}\times f_\mathcal{A }^{-1/2}\). \(\square \)
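For the reader's convenience, the one-line computation behind this proof can be written out as follows (assuming \(f_\mathcal{A }>0\) almost surely, so that \(f_\mathcal{A }^{-1/2}\) is well defined):

\[
\mathbb{E }\left[ Z_N(\mathcal A )^{1/2}\right]
=\mathbb{E }\left[ \left( f_\mathcal{A }Z_N(\mathcal A )\right) ^{1/2} f_\mathcal{A }^{-1/2}\right]
\;\leqslant \;\left( \mathbb{E }\left[ f_\mathcal{A }Z_N(\mathcal A )\right] \right) ^{1/2}\left( \mathbb{E }\left[ f_\mathcal{A }^{-1}\right] \right) ^{1/2}.
\]

The first factor on the right is the expectation of \(Z_N(\mathcal A )\) under the tilted weight \(f_\mathcal{A }\) (the benefit), and the second is the cost of the change of measure.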

Note that if \(f_\mathcal{A }\) has finite expectation, then it can be thought of as a probability density after renormalization, so this operation can indeed be interpreted as a change of measure. The main problem then is to find an efficient change of measure for which the cost of the change \(\mathbb{E }\left[ (f_\mathcal{A })^{-1}\right] \) is much smaller than the benefit one gets on \(\mathbb{E }\left[ f_\mathcal{A }Z_N(\mathcal A )\right] \).

In order to get an exponential decay in \(m\) for \(\mathbb{E }\left[ Z_N(\mathcal A )^{1/2}\right] /\mathbb{E }\left[ Z_N(\mathcal A )\right] ^{1/2}\), the good choice is to take \(f_\mathcal{A }\) to be a product over all \(x\in \mathcal A \) of functions of the environment of each block \((\omega _e)_{e\in I_x}\).

One possibility is to diminish the intensity of open edges in \(\cup _{x\in \mathcal A } I_x\), like in Sect. 3.2, simply by choosing

for some \(\lambda <1\). This turns out to be a good choice when the animal \(\mathcal A \) considered is relatively small, but it does not give a good result when \(m=|\mathcal A |\) is of order \(N/N_0\), even after optimizing the value of \(\lambda \). A more efficient strategy in that case is to induce negative correlation between the \(\omega (e)\) that decays with the distance instead of reducing the intensity of edge opening.

This idea was first used in [14]. There and in all related works, the induced negative correlations were chosen to be proportional to the Green function of the underlying process (either a renewal process in [14] or a directed random walk in [23, 30, 31]). Here, the situation is a bit different as one does not have any information on the underlying process: therefore the choice of correlation (i.e. of the coefficients in the quadratic form \(Q\) in Eq. (3.40)) is done via an optimization procedure, so that it significantly lowers the probability that \(S\) is open for every possible path \(S\) (and not only the most probable ones).

We adopt the first strategy when \(m\!\!\;\leqslant \;\!\! N/[N_0(\log N_0)^{1/4}]\), and the second one when \(m> N/[N_0(\log N_0)^{1/4}]\).

3.5 Proof of Proposition 3.2 for small values of \(m\)

In this section we assume that

We choose to modify the environment in

by augmenting the intensity of the edge dilution. We choose the probability of an edge being open under the new measure to be equal to

where \(\lambda <1\) is chosen such that

As there are \(2N^2_0\) edges in each block \(I_x\), the density function corresponding to this change of measure is given by

Then, we can estimate the cost of the change of measure

where for the first inequality we use \(\log (1+x){\;\leqslant \;} x\) and the identity (3.31), and for the second one, the fact that \(\lambda \) is close to one (if \(C_2\) is chosen large enough).

The probability that a path of length \(N\) whose edges are all in \(I_\mathcal{A }\) is open under the modified measure is equal to \([\lambda p/(1-p(1-\lambda ))]^N\), so

Then we remark that \(|\mathcal S _{N}(\mathcal A )|\;\leqslant \;s_N\) and that

Hence, if \(C_2\) is large enough,

Combining this with (3.33) and Lemma 3.3, we get (3.24). \(\square \)
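The open-edge probability \(\lambda p/(1-p(1-\lambda ))\) used in the proof above is what one obtains by reweighting each edge by a factor \(\lambda \) when it is open and then renormalizing. A minimal Python check, assuming this form of the tilt (the function names and numerical values are illustrative only):

```python
def tilted_open_probability(p, lam):
    """Closed form: probability that an edge is open under the tilted measure."""
    return lam * p / (1.0 - p * (1.0 - lam))

def tilted_open_probability_direct(p, lam):
    """Same quantity from the definition: weight lam*p if open, (1-p) if closed."""
    open_weight = lam * p
    closed_weight = 1.0 - p
    return open_weight / (open_weight + closed_weight)

def path_open_probability(p, lam, n_steps):
    """Probability that a fixed path with n_steps edges is open
    under the tilted product measure."""
    return tilted_open_probability(p, lam) ** n_steps

p, lam, n_steps = 0.7, 0.9, 50
print(tilted_open_probability(p, lam), tilted_open_probability_direct(p, lam))
print(path_open_probability(p, lam, n_steps), p ** n_steps)
```

For \(\lambda <1\) the tilted probability is strictly smaller than \(p\), so the probability that a given path is open decays exponentially faster in \(N\) than under the original measure.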

Remark 3.4

Let us now comment on why we believe that (3.27) is suboptimal. The idea is that in fact, if the typical scaling of a self-avoiding path is \(N^{\nu }\), then (at a heuristic level) the typical number \(m\) of blocks visited is of order \(N/(N_0^{1/\nu })\), and the argument above works for a much lower value of \(N_0\) (of order \((1-p)^{\frac{2\nu }{2(1-\nu )}}\)), which would then give a much better upper bound for all \(\mu _2(p)\). Making this kind of argument rigorous would require very detailed knowledge of the behavior of the self-avoiding walk.

3.6 Proof of Proposition 3.2 for large values of \(m\)

Even when trying to optimize over the value of \(\lambda \) or when taking a much larger value for \(N_0\) the preceding method fails when the size of the animals is of order \(N/N_0\), and we have to apply a different method in this case. Throughout this section we will assume that

Our proof is still based on Lemma 3.3, but the construction of our \(f_\mathcal{A }\) is a bit more complicated in this case, and for notational convenience we do not normalize it: it is not a probability density.

First, given an animal \(\mathcal A \) of size \(m\), one can extract a set of vertices \(\bar{\mathcal{A }}\subset \mathcal A \) of size \(m/13\) such that the vertices of \(\bar{\mathcal{A }}\) have disjoint \(l_{\infty }\) neighborhoods, i.e. such that

where \(|x|_{\infty }=\max (|x_1|,|x_2|)\).
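Such a subset can be extracted greedily, in lexicographical order, as detailed in the next paragraph. The following Python sketch implements that procedure, assuming that "disjoint neighborhoods" means that any two selected vertices are at \(l_\infty \) distance at least 3; the function names and the rectangular test animal are hypothetical.

```python
def linf_distance(x, y):
    """l_infinity distance between two points of Z^2."""
    return max(abs(x[0] - y[0]), abs(x[1] - y[1]))

def extract_sparse_subset(animal):
    """Greedy extraction: repeatedly pick the smallest available vertex
    (lexicographical order) and make every vertex at l_infinity distance
    2 or less from it unavailable."""
    available = sorted(set(animal))  # tuples are sorted lexicographically
    chosen = []
    while available:
        v = available[0]
        chosen.append(v)
        available = [w for w in available if linf_distance(v, w) > 2]
    return chosen

# Example on a 6 x 5 rectangular animal (30 vertices).
animal = [(i, j) for i in range(6) for j in range(5)]
subset = extract_sparse_subset(animal)
print(len(animal), len(subset), subset)
```

Each pick removes at most 13 available vertices (the picked vertex and the 12 lexicographically larger vertices of the surrounding \(5\times 5\) box), which is why at least \(m/13\) vertices are selected.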

For instance, one can construct \(\bar{\mathcal{A }}\) by picking vertices in \(\mathcal A \) iteratively as follows: at each step we pick the smallest available vertex according to the lexicographical order in \(\mathbb{Z }^2\), and make all the vertices at a \(l_\infty \) distance 2 or less of this vertex unavailable for future picks. As at most 13 vertices are made unavailable at each step, we can keep this procedure going for \(m/13\) steps to get \(\bar{\mathcal{A }}\). For \(x\in \mathbb{Z }^2\) set

We define the distance \(d\) between edges to be the Euclidean distance between their midpoints. Given \(K\) a (large) constant, we define \(f_x\) to be a function of \(\omega \), that depends only on \(\omega |_{\bar{I}_x}\): first set \(Q_x\) to be the following random quadratic form

and then define

Finally, set

In order to use Lemma 3.3, one needs to bound \(\mathbb{E }\left[ (f_\mathcal{A })^{-1}\right] \) from above.

Lemma 3.5

If \(K\) is chosen sufficiently large, then for every \(\mathcal A \in \mathfrak A _m\), we have

Proof

The function \(f_\mathcal{A }(\omega )\) is a product of \(m/13\) IID random variables (\(f_x(\omega )\), \(x \in \bar{\mathcal{A }}\)) (by our choice of \(\bar{\mathcal{A }}\), the blocks \((\bar{I}_x)_{x\in \bar{\mathcal{A }}}\) are disjoint). Thus

It remains to prove that \(\mathbb{E }\left[ (f_{0})^{-1}\right] {\;\leqslant \;}2\), and for this purpose it is sufficient to estimate the variance of \(Q_0(\omega )\). First note that \(\mathbb{E }[Q_0(\omega )]=0\), and that only the diagonal terms of the double sum that appears when expanding \(Q^2_0(\omega )\) contribute to the second moment. Note also that the maximal distance between two edges in \(\bar{I}_0\) is less than \(5 N_0\), so

where \(C_1\) is a universal constant that is independent of \(p\) and \(N_0\). Thus, by the Chebyshev inequality, if \(K\) is large enough (independently of all parameters of the problem)

\(\square \)

It remains to estimate \(\mathbb{E }\left[ f_\mathcal{A }Z_N(\mathcal A )\right] \), which is the more delicate part. We do so by bounding uniformly the contribution of each path.

Lemma 3.6

For any \(S\in \mathcal S _{N}(\mathcal A )\), we have

Lemma 3.6, combined with the trivial bound \(|\mathcal S _{N}(\mathcal A )|\;\leqslant \;s_N\) gives

so that together with Lemmas 3.3 and 3.5 one obtains

which proves Proposition 3.2.

Proof of Lemma 3.6

Note that even after conditioning on \(S\) being open, \(f_\mathcal{A }(\omega )\) is still a product of independent variables (though the \(f_x(\omega )\) are not identically distributed any more), so that

Our idea is then to show that most of the terms in the product \(\mathbb{E }\left[ f_x(\omega )\ | \ S \text { is open}\right] \) are small. We do so by showing that conditioning on the event \(\{S \text { is open}\}\) makes the expectation of \(Q_x\) large whereas its variance remains relatively small. The problem is that both the expectation and the variance of \(Q_x(\omega )\) may grow when additional edges are conditioned on being open, and things become difficult to control when the number of edges that \(S\) visits in the block \(\bar{I}_x\) is much larger than \(N_0\). This is the reason why we restrict the use of this method to large values of \(m\): we show that \(\mathbb{E }\left[ f_x(\omega )\ | \ S \text { is open}\right] \) is small only for blocks for which the number of edges visited by \(S\) is not too large.

Set

where \(S\) is considered as a set of edges. As the total number of edges in \(S\) is \(N\!\!\;\leqslant \;\!\! m N_0 (\log N_0)^{1/4}\) and the \(\bar{I}_x\) are disjoint, we have

and hence \(| \bar{A}(S)|\ge m/30\).

Thus from (3.50), and using the fact that all the terms in the product are smaller than one, to prove (3.47) it is enough to prove that for each \(x\in \bar{A}(S)\) we have

Assume in the rest of the proof that \(x\in \bar{A}(S)\). The definition of \(f_x\) gives

and thus if \(K\) is chosen large enough, it is sufficient to prove that

To obtain such an estimate, it is sufficient to compute the two first moments of \(Q_x(\omega )\) under the conditioned measure. To keep the notation light, we write \(\mathbb{P }_S\) for \(\mathbb{P }\left[ \ \cdot \ | \ S \text { is open}\right] \).

To show that the first moment is large, one needs to extract a long path of adjacent edges. Set \(S^{(x)}\) to be a path of length \(N_0\) defined as follows:

- If \(x=0\), then \((S^{(0)}_n)_{n\in [0,N_0]}:=(S_n)_{n\in [0,N_0]}\).

- For all other values of \(x\), one sets \(\tau _x\) to be the first time that \(S\) hits \(I_x\) (note that \(\tau _x\ge N_0\)), and one defines \((S^{(x)}_n)_{n\in [0,N_0]}:=(S_{n+\tau _x-N_0})_{n\in [0,N_0]}\).

Note that \(S^{(x)}\) has all its edges in \(\bar{I}_x\).

Under the measure \( \mathbb{P }_S\), the \(\omega (e)\) are independent, equal to one if \(e\in S\), and distributed as Bernoulli variables of parameter \(p\) otherwise. Thus

Now, for every edge \(e\in S^{(x)}\), as the trajectory \(S^{(x)}\) cannot move faster than ballistically we have

Therefore

Let us now bound from above the variance \(\mathrm{Var}_{\mathbb{P }_S}\left( Q_{x}(\omega )\right) \). Most of the terms in the resulting sum appear in the non-conditioned case, so we have to check that the additional terms generated by the conditioning only give a small contribution. Indeed,

The first term in the r.h.s. is less than \(\mathrm{Var}_{\mathbb{P }}(Q_x)\) (it is the same sum as in Eq. (3.45), with some missing terms) and thus is bounded above by \(C_1\). Using the Cauchy–Schwarz inequality, we can bound the second term from above as follows:

Thus for \(x\in \bar{A} (S)\), (recalling (3.51))

Recall that \(N_0:= \exp (\frac{C_2}{(1-p)^2})\), and set

Then

Hence, by the Chebyshev inequality and (3.61),

which proves (3.55) if \(K\) is large enough, and ends the proof. \(\square \)

4 Starting from a supercritical percolation cluster: proof that \(p\mapsto \mu _2(p)/(p\mu _2(1))\) is strictly increasing

In the previous section, the proof does not make very much use of the fact that the lattice is \(\mathbb{Z }^2\), but only of the fact that it is two-dimensional. In order to prove that \(\mu _2(p)/(p\mu _2(1))\) is strictly increasing, what we have to do is replicate the same proof, but starting with a dilute lattice instead of \(\mathbb{Z }^2\). The reader can note that the proof would adapt to any connected sub-lattice of \(\mathbb{Z }^2\) or any “nice” two-dimensional lattice.

Consider \(p<p'\), both in the interval \((p_c,1)\). We couple two percolation environments \(\omega _p\) and \(\omega _{p'}\) as we did in Sect. 2.2. With this coupling, the percolation process with parameter \(p\) is obtained by performing percolation with parameter \(q:=p/p'\) on the non-connected lattice \( \mathcal G _{p'} \) whose vertices are the same as those of \(\mathbb{Z }^2\) but whose set of edges is

As in the \(p'=1\) case, it is sufficient to show that for all realizations of \(\omega _{p'}\)

Indeed, using the Borel–Cantelli Lemma, (4.1) implies that almost surely

and hence

For the rest of this section we use the structure of the proof of the case \(p'=1\) (the previous section) to prove (4.1). We use the notation \(Z^p_N\) for \(Z_N(\omega _p)\), and in the proof we have to replace \(\mathbb{Z }^2\) by \(\mathcal G _{p'}\), \(p\) by \(q\), \(\mathbb{E }\) by \(\mathbb{E }[ \cdot \ | \ \mathcal F _{p'}]\), \(s_N\) by \(Z^{p'}_N\) and keep in mind that “open” means “open for \(\omega _p\)”. We detail only the points where the modifications are not trivial.
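The coupling of \(\omega _p\) and \(\omega _{p'}\) used in this section can be illustrated as follows. This is a minimal Python sketch assuming the standard construction by IID uniform variables attached to the edges (the construction actually used is the one of Sect. 2.2); the edge labels, the seed and the function name are hypothetical.

```python
import random

def coupled_environments(edges, p, p_prime, seed=0):
    """Build omega_p and omega_{p'} (p <= p') from the same uniform variables,
    so that every edge open for omega_p is also open for omega_{p'}."""
    rng = random.Random(seed)
    uniforms = {e: rng.random() for e in edges}
    omega_p = {e: int(u < p) for e, u in uniforms.items()}
    omega_p_prime = {e: int(u < p_prime) for e, u in uniforms.items()}
    return omega_p, omega_p_prime

edges = list(range(20000))            # abstract edge labels
p, p_prime = 0.6, 0.8
omega_p, omega_p_prime = coupled_environments(edges, p, p_prime)

# Among the edges that are open for omega_{p'}, the fraction that is also
# open for omega_p should be close to q = p / p'.
open_prime = [e for e in edges if omega_p_prime[e]]
fraction = sum(omega_p[e] for e in open_prime) / len(open_prime)
print(fraction, p / p_prime)
```

Conditionally on \(\omega _{p'}\), the edges of \(\mathcal G _{p'}\) are kept independently with probability \(q=p/p'\), which is the percolation of parameter \(q\) on \(\mathcal G _{p'}\) described above.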

We set \(N_0:=\exp (C_2/(1-q)^2)\) and after performing our coarse graining we can reduce the proof of (4.1) to proving the inequality

We prove it using Lemma 3.3; we just need to specify our choice of \(f_\mathcal{A }\).

4.1 The case \(m{\;\leqslant \;} N/ (N_0(\log N_0)^{1/4})\)

Set

With this modification the cardinality \(| I_\mathcal{A }|\) is not always equal to \(2mN^2_0\); it depends on the realization of \(\omega _{p'}\). For this reason, for small animals, we use the following definition for \(f_\mathcal{A }\) (instead of (3.32))

As \(| I_\mathcal{A }|\;\leqslant \;2mN^2_0\), (3.33) remains valid with this change of definition. Equation (3.34) is replaced by

which allows us to conclude that

and to derive (4.4) from Lemma 3.3.

4.2 The case of \(m> N/ (N_0(\log N_0)^{1/4})\)

For large animals, we have to check that we can still control the variance and expectation of \(Q_x\) (defined with the modified version of \(\bar{I}_x\) and \(q\) instead of \(p\)) when we start with a dilute lattice. The fact that \(I_x\) contains fewer edges only makes the variance of \(Q_x\) smaller. Indeed the sums are made on a subset of the indices. Hence (3.45) and Lemma 3.5 are still valid (even if the \(f_x\) are independent but not identically distributed).

Lemma 3.6 is replaced by

Lemma 4.1

For every \(S\in \mathcal S _{N}(\mathcal A )\) which is open for \(\omega _{p'}\), we have

Equations (3.58) and (3.61) are still valid if \(\mathbb{P }_S\) is replaced by “\(\mathbb{P }[ \cdot | \mathcal F _{p'}]\) conditioned to \(S\) being open for \(\omega _p\)”: for (3.58) the estimate remains the same, and for (3.61) we just have a sum on a smaller family of edges. Hence the proof is exactly as for Lemma 3.6.

5 The self-avoiding walk in a random potential

Percolation is just one type of random potential in which one can make \(S\) evolve but there are many other possibilities. We introduce in this section a general self-avoiding walk in a random potential and show that at every temperature the partition function grows exponentially slower than its expectation. Let \(\omega (x)\) be a collection of IID random variables of zero mean and unit variance, indexed by sites of \(\mathbb{Z }^2\) (let \(\mathbb{P }\) denote their joint law) satisfying

for all \(\beta \in \mathbb{R }\).

The self-avoiding walk in a random potential is the stochastic process whose law is given by the probability measure on \(\mathcal S _{N}\) for which each path \(S\) has a probability proportional to

Physically, \((-\omega )\) corresponds to an energy attached to each site, and \(\beta \) is the inverse temperature. We are interested in the growth rate of \(Z_N\) the partition function of this model defined by

Theorem 5.1

For any \(\beta \ne 0\), we have

As in the percolation case, we remark that it is sufficient to show that

decays exponentially fast in \(N\). We prove (5.4) with the same line of proof as Theorem 1.4. We do not reproduce the parts of the proof that are identical to the percolation case. Without loss of generality we assume \(\beta >0\) in the proof. The first thing we will do is to restrict ourselves to the case of small \(\beta \). We do so by using the FKG inequality, similarly to what is done in [8]. Afterwards we use the animal decomposition, and bound the contribution of each animal by using change of measure.

5.1 Restriction to small \(\beta \)

We show in this section that when \(N\) is fixed the quantity (5.4) is a non-increasing function of \(\beta \). For this, we follow [8, Lemma 3.3 (b)], and show that the derivative in \(\beta \) is non-positive:

For a fixed \(S\), the measure \(\mathbb{P }_S\) defined by

is a product probability measure, and hence satisfies the FKG inequality [26, p. 78]. As \(\left( Z_N(\beta ,\omega ) e^{-N\lambda (\beta )}\right) ^{-1/2}\) and \(\left[ \left( \sum _{n=0}^N\omega (S_n)\right) -N\lambda '(\beta )\right] \) are respectively decreasing and increasing functions of \(\omega \), one has

where the last equality is due to the fact that \(\mathbb{E }_S[\omega (x)]=\lambda '(\beta )\mathbf{1}_{x\in S}\). In what follows we will always consider that \(\beta {\;\leqslant \;}\beta _0\) is small enough (how small will depend on the law of \(\omega \)).
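The FKG (Harris) inequality used here says that, under a product measure, a decreasing function and an increasing function of the environment are negatively correlated. The following Python sketch verifies this by exhaustive enumeration on a toy example with four independent Bernoulli variables; the two test functions and the parameters are arbitrary illustrative choices, not the functions appearing in the proof.

```python
from itertools import product

def product_measure_expectation(func, probs):
    """Exact expectation of func(omega) when omega[i] ~ Bernoulli(probs[i])
    independently."""
    total = 0.0
    for omega in product((0, 1), repeat=len(probs)):
        weight = 1.0
        for w, q in zip(omega, probs):
            weight *= q if w else 1.0 - q
        total += weight * func(omega)
    return total

def covariance(f, g, probs):
    """Covariance of f and g under the product measure."""
    e_fg = product_measure_expectation(lambda w: f(w) * g(w), probs)
    e_f = product_measure_expectation(f, probs)
    e_g = product_measure_expectation(g, probs)
    return e_fg - e_f * e_g

# A decreasing and an increasing function of omega (coordinate-wise order).
decreasing = lambda w: (1.0 + w[0] + 2.0 * w[1] + w[2] * w[3]) ** -0.5
increasing = lambda w: w[0] + w[1] + w[2] + w[3] - 1.5

probs = [0.3, 0.5, 0.7, 0.9]
print(covariance(decreasing, increasing, probs))  # non-positive by FKG
```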

5.2 Coarse graining

Fix \(N_0:=\exp (C_2\beta ^{-4})\). Similarly to what is done in Sect. 3.3, we can reduce (5.4) to the proof of a statement analogous to Proposition 3.2 controlling the contribution of each animal. We want to prove that for every \(\mathcal A \in \mathfrak A _m\), we have

where

We will prove it using Lemma 3.3 and appropriate functions \(f_{\mathcal{A }}\), separating the cases \(m{\;\leqslant \;}N/[N_0(\log N_0)^{1/4}]\) and \(m> N/[N_0(\log N_0)^{1/4}]\).

5.3 The case \(m{\;\leqslant \;}N/[N_0(\log N_0)^{1/4}]\)

In the first case we set

where the definitions of \(I_\mathcal{A }\) and \(I_x\) have been adapted so that they are sets of points rather than sets of edges (\(I_x:=\mathbb{Z }^2\cap [0,N_0)^2\) contains \(N^2_0\) points), and \(\delta =\delta _{N_0}:=(1/N_0)\). This corresponds to an exponential tilt of the \(\omega \) inside \(I_\mathcal{A }\).

Then, provided that \(N_0\) is large enough (hence for \(\beta \) small enough) one has

where we used that \(\lambda (\delta )\sim _{0} \delta ^2/2\) from the assumption on the variance of \(\omega \).
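The asymptotics \(\lambda (\delta )\sim \delta ^2/2\) can be checked on a concrete example. The Python sketch below assumes that \(\lambda (\beta )\) denotes the log-moment generating function \(\log \mathbb{E }[e^{\beta \omega }]\), and takes for \(\omega \) a symmetric \(\pm 1\) variable (zero mean, unit variance), for which \(\lambda (\beta )=\log \cosh \beta \); this choice of law is purely illustrative.

```python
from math import cosh, log

def log_mgf_rademacher(beta):
    """lambda(beta) = log E[exp(beta * omega)] for omega = +1 or -1
    with probability 1/2 each."""
    return log(cosh(beta))

def ratio_to_quadratic(delta):
    """Ratio lambda(delta) / (delta^2 / 2), which tends to 1 as delta -> 0."""
    return log_mgf_rademacher(delta) / (delta * delta / 2.0)

for delta in (0.5, 0.1, 0.01):
    print(delta, ratio_to_quadratic(delta))
```

With \(\delta =1/N_0\), the quantity \(N_0^2\,[\lambda (\delta )+\lambda (-\delta )]\) is therefore close to 1 in this example when \(N_0\) is large.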

On the other hand,

where the last inequality uses our assumption \(m{\;\leqslant \;}N/[N_0(\log N_0)^{1/4}]\) and the facts \([ \lambda (\beta )-\lambda (\beta -\delta )+\lambda (-\delta )]>0\), \(|\mathcal S _{N}(\mathcal A )|{\;\leqslant \;}s_N\). Moreover, by the mean value theorem (used twice), there exist \(\delta _1\in [0,\delta ]\) and \(\beta _1\in [-\delta _1,\beta -\delta _1]\) such that

As \(\lambda ''(s)\) tends to one at zero, \( \lambda ''(\beta _1)\ge 1/2\) if \(\beta \) is small enough; hence

and one can conclude that (5.8) holds by combining (5.10), (5.11), (5.13) and Lemma 3.3.

5.4 Case \(m> N/[N_0(\log N_0)^{1/4}]\)

Let us now move to the case of large \(m\). We introduce a quadratic form

where \(d\) denotes the Euclidean distance and \(f_x\) and \(f_\mathcal{A }\) are defined as in (3.41) and (3.42). Then there is no problem in proving the equivalent of Lemma 3.5, just by controlling the variance of \(Q_x\) as follows

Instead of Lemma 3.6, we must then prove that for any \(S\) in \(\mathcal S _{N}(\mathcal A )\) and \(x\in \bar{\mathcal{A }}\) such that \(|S\cap \bar{I}_x|\;\leqslant \;30 N_0(\log N_0)^{1/4}\) (recalling (5.6)) we have

which is proved by controlling the mean and variance of \(Q_x(\omega )\) under \(\mathbb{P }_S\). The computations are almost the same as for the proof of Lemma 3.6. One notices that

In what follows, we consider \(\beta \) small enough so that

(recall that \(\lambda ''(0)=1\) from the unit variance assumption).

One obtains in the same fashion as (3.58) that

Now, we have to control the variance under \(\mathbb{P }_S\).

The two terms on the r.h.s. correspond to the two cases where the covariance \(\mathrm{Cov}_{\mathbb{P }_S}(\omega _{z_1}\omega _{z_2}, \omega _{z_3}\omega _{z_4})\) is non-zero: either \(z_1=z_3\), \(z_2=z_4\) (or \(z_1=z_4\), \(z_2=z_3\)) which is counted in the first term, or \(z_1=z_3\) and \(z_2\ne z_4\) but \(z_2\) and \(z_4\) belong to \(S\) (and three other cases obtained by permutation of the indices). In each case we have bounded the covariance from above by neglecting to subtract the product of expectations \(\mathbb{E }_S[\omega _{z_1}\omega _{z_2}]\mathbb{E }_S[\omega _{z_3}\omega _{z_4}]\), which is always positive.

Note that, with our assumptions, in the sum above we have \(\mathbb{E }_{\mathbb{P }_S}[\omega ^2_{z}\omega ^2_{z'}]{\;\leqslant \;}4\) and \(\mathbb{E }_S[\omega ^2_z]{\;\leqslant \;}2\). Hence, the first term is smaller than

For the second term, as in (3.60) one gets

so that the second term is smaller than \(32 C_1\beta ^2 (|S\cap \bar{I}_x|/N_0)^2\).

Hence, when \(|S\cap \bar{I}_x|\;\leqslant \;30 N_0 (\log N_0)^{1/4}\) we have

Equations (5.18) and (5.22) allow us to prove (5.16), just as (3.58) and (3.61) are used to prove Lemma 3.6.

6 Some other models to which the proof can adapt

Note that our proof, though technical, did not use many specifics of the model. The key point that makes the proof work is that we can find \(Q_x\) for which the variance is bounded but whose expectation under \(\mathbb{E }_S\) diverges with \(N_0\). This is where the crucial fact that the lattice is 2-dimensional is used. For this reason, our result extends readily to any kind of two-dimensional lattice (e.g. the triangular lattice, the honeycomb lattice, lattices with spread-out connections). Moreover, the proof would also work with only minor modification for a large variety of 2D models, an example of which was given in the last section. Without trying to describe a meta-model that would include all of these models, we give here two further examples that could be of interest.
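To illustrate the role of dimension two, the Python sketch below sums \(1/|z|^2\) over the non-zero lattice points of a square box of \(\mathbb{Z }^2\). Under the assumption (made here only for illustration, the precise coefficients being those fixed in Eq. (3.40)) that the coefficients of the quadratic form decay like the inverse of the distance \(d\), sums of this type govern its variance; in dimension two they grow only logarithmically with the size of the box. The function name and box sizes are hypothetical.

```python
from math import log, pi

def inverse_square_sum(half_side):
    """Sum of 1/|z|^2 over z in Z^2 with 0 < |z|_infinity <= half_side."""
    total = 0.0
    for x in range(-half_side, half_side + 1):
        for y in range(-half_side, half_side + 1):
            if (x, y) != (0, 0):
                total += 1.0 / (x * x + y * y)
    return total

# The sum grows like 2*pi*log(half_side) plus a constant.
for half_side in (10, 100):
    print(half_side, inverse_square_sum(half_side), 2 * pi * log(half_side))
```

The logarithmic growth explains why a normalization involving only a power of \(\log N_0\) can keep the variance bounded, while the contribution of pairs of edges along a single path of length \(N_0\) still diverges with \(N_0\).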

6.1 Site percolation

We consider the equivalent of the model studied in the core of this paper, but with the disorder \((\omega (x))_{x\in \mathbb{Z }^2}\) lying on the sites of \(\mathbb{Z }^2\) rather than on the edges. One says that a self-avoiding path is open if all the sites visited by the path are open. One can readily check that one can prove the existence of the quenched connective constant (with \(\limsup \)) and the fact that it differs from the annealed one for every \(p\) using exactly the same arguments. One can furthermore adapt Sect. 4 to show that the ratio of the two connective constants is an increasing function of \(p\).
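For small \(N\), the number of open self-avoiding paths in the site model can be computed by direct enumeration. The following Python sketch does so by depth-first search; the lazily sampled environment, the seed, and the convention of forcing the origin to be open (a simplification of the conditioning on the origin belonging to the infinite cluster) are assumptions made for illustration.

```python
import random

STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))

def count_open_saws(n_steps, is_open, start=(0, 0)):
    """Count self-avoiding paths with n_steps steps starting from `start`
    all of whose visited sites are open."""
    if not is_open(start):
        return 0
    visited = {start}

    def extend(site, remaining):
        if remaining == 0:
            return 1
        total = 0
        for dx, dy in STEPS:
            nxt = (site[0] + dx, site[1] + dy)
            if nxt not in visited and is_open(nxt):
                visited.add(nxt)
                total += extend(nxt, remaining - 1)
                visited.remove(nxt)
        return total

    return extend(start, n_steps)

def site_percolation(p, seed=0):
    """IID site environment sampled lazily: each site is open with
    probability p, except the origin which is forced to be open."""
    rng = random.Random(seed)
    cache = {(0, 0): True}

    def is_open(site):
        if site not in cache:
            cache[site] = rng.random() < p
        return cache[site]

    return is_open

full_lattice = lambda site: True
print([count_open_saws(n, full_lattice) for n in range(1, 6)])
print([count_open_saws(n, site_percolation(0.8)) for n in range(1, 6)])
```

On the full lattice this recovers the classical counts \(s_N\) of self-avoiding paths on \(\mathbb{Z }^2\); on a dilute lattice the count can only be smaller.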

6.2 Lattice trees/lattice animals on a dilute network

A lattice tree of size \(N\) on \(\mathbb{Z }^2\) is a finite connected subgraph of \(\mathbb{Z }^2\) with \(N\) vertices and no cycles. Let \(\mathcal T _N\) denote the set of (unlabeled) lattice trees of size \(N\) containing the origin in \(\mathbb{Z }^2\) and \(t_N=|\mathcal T _N|\). It is known (see e.g. [21], where lattice trees are studied as a model for branching polymers) that there exists a constant \(\lambda \) such that

The method presented in Sect. 3 also allows us to give an upper bound on the number of lattice trees present on an infinite percolation cluster. We say that a lattice tree is open if all of its edges are open. Given a realization \((\omega (e))_{e\in E_2}\) of the edge dilution process, one defines

letting \(Z_N(x)\) be the analogous sum for lattice trees containing \(x\). It can be shown, as we have done for self-avoiding paths, that the upper-growth rate

is constant in \(x\) and non-random on the infinite percolation cluster. Using exactly the same proof as in Sect. 3, one can further prove

Theorem 6.1

For any \(p\in (1/2,1)\), \(\mathbb{P }_p\)-a.s., for every point \(x\in C\), we have

Proof

The only point that needs to be explained in the adaptation of the proof is how one chooses the \(S^{(x)}\) appearing in (3.56). For \(x=0\), we choose arbitrarily a path of length \(N_0\) moving away from the root (there has to be at least one if \(N\ge N_0^2\)). For the other values of \(x\), we take \(S_0^{(x)}\) to be a point of the tree that lies in \(I_x\), and \(S^{(x)}\) to be the first \(N_0\) steps of the path from this point towards the root, i.e. the origin. \(\square \)

A similar result could be stated for lattice animals on the supercritical percolation cluster. One could also consider trees or lattice animals in a random potential that is not percolation, and prove a result similar to the one of Sect. 5.

References

1. Barat, K., Karmakar, S.N., Chakrabarti, B.K.: Self-avoiding walk, connectivity constant and theta point on percolating lattices. J. Phys. A Math. Gen. 24, 851–860 (1991)

2. Berger, Q., Toninelli, F.: On the critical point of the random walk pinning model in dimension \(d=3\). Electr. J. Probab. 15, 654–683 (2010)

3. Birkner, M., Sun, R.: Disorder relevance for the random walk pinning model in dimension 3. Ann. Inst. H. Poincaré Probab. Stat. 47, 259–293 (2011)

4. Brydges, D., Spencer, T.: Self-avoiding walk in 5 or more dimensions. Commun. Math. Phys. 97, 125–148 (1985)

5. Carmona, P., Hu, Y.: On the partition function of a directed polymer in a random Gaussian environment. Probab. Theory Relat. Fields 124, 431–457 (2002)

6. Chakrabarti, B.K., Kertész, J.: The statistics of self-avoiding walks on a disordered lattice. Z. Phys. B Cond. Mat. 44, 221–223 (1981)

7. Chakrabarti, B.K., Roy, A.K.: The statistics of self-avoiding walks on random lattices. Z. Phys. B Cond. Mat. 55, 131–136 (1984)

8. Comets, F., Yoshida, N.: Directed polymers in random environment are diffusive at weak disorder. Ann. Probab. 34, 1746–1770 (2006)

9. Le Doussal, P., Machta, J.: Self-avoiding walks in quenched random environments. J. Stat. Phys. 64, 541–578 (1991)

10. Duminil-Copin, H., Hammond, A.: Self-avoiding walk is sub-ballistic. Commun. Math. Phys. (to appear)

11. Duminil-Copin, H., Smirnov, S.: The connective constant for the honeycomb lattice equals \(\sqrt{2+\sqrt{2}}\). Ann. Math. 175, 1653–1665 (2012)

12. Flory, P.J.: The configuration of a real polymer chain. J. Chem. Phys. 17, 303–310 (1949)

13. Giacomin, G., Lacoin, H., Toninelli, F.L.: Hierarchical pinning models, quadratic maps and quenched disorder. Probab. Theory Relat. Fields 147, 185–216 (2010)

14. Giacomin, G., Lacoin, H., Toninelli, F.L.: Marginal relevance of disorder for pinning models. Commun. Pure Appl. Math. 63, 233–265 (2010)

15. Giacomin, G., Lacoin, H., Toninelli, F.L.: Disorder relevance at marginality and critical point shift. Ann. Inst. H. Poincaré Probab. Stat. 47, 148–175 (2011)

16. Grimmett, G.: Percolation, Grundlehren der Mathematischen Wissenschaften, vol. 321, 2nd edn. Springer, Berlin (1999)

17. Hammersley, J.M.: Percolation processes II. The connective constant. Proc. Camb. Phil. Soc. 53, 642–645 (1957)

18. Harris, A.B.: Effect of random defects on the critical behaviour of Ising models. J. Phys. C 7, 1671–1692 (1974)

19. Harris, A.B.: Self-avoiding walks on random lattices. Z. Phys. B Cond. Mat. 49, 347–349 (1983)

20. Harris, A.B., Meir, Y.: Self-avoiding walks on diluted networks. Phys. Rev. Lett. 63, 2819–2822 (1989)

21. Klein, D.J.: Rigorous results for branched polymers with excluded volume. J. Chem. Phys. 75, 5186–5189 (1981)

22. Kremer, K.: Self-avoiding-walks on diluted lattices, a Monte-Carlo analysis. Z. Phys. B Cond. Mat. 45, 149–152 (1981)

23. Lacoin, H.: New bounds for the free energy of directed polymers in dimension 1+1 and 1+2. Commun. Math. Phys. 294, 471–503 (2010)

24. Lacoin, H.: Existence of an intermediate phase for oriented percolation. Electr. J. Probab. 18(41), 1–17 (2012)

25. Lacoin, H.: Existence of a non-averaging regime for the self-avoiding walk on a high-dimensional infinite percolation cluster. Preprint, arXiv:1212.4641

26. Liggett, T.: Interacting particle systems, Grundlehren der Mathematischen Wissenschaften, vol. 276. Springer, Berlin (1985)

27. Madras, N., Slade, G.: The self-avoiding walk. Birkhäuser, Boston (1993)

28. Toninelli, F.L.: Coarse graining, fractional moments and the critical slope of random copolymers. Electr. J. Probab. 14, 531–547 (2009)

29. Slade, G.: The self-avoiding walk: a brief survey. In: Blath, J., Imkeller, P., Roelly, S. (eds.) Surveys in Stochastic Processes, pp. 181–199. European Mathematical Society, Zürich (2011)

30. Yilmaz, A., Zeitouni, O.: Differing averaged and quenched large deviations for random walks in random environments in dimensions two and three. Commun. Math. Phys. 300, 243–271 (2010)

31. Zygouras, N.: Strong disorder in semi-directed random polymers. Ann. Inst. H. Poincaré Probab. Stat. 49, 753–780 (2013)

Acknowledgments

The author is grateful to an anonymous referee for his careful examination of the paper, and numerous suggestions to improve its quality. He would also like to thank Ben Smith for his help on the text. This work was initiated during the author's stay at the Instituto de Matemática Pura e Aplicada; he acknowledges the kind hospitality and the support of CNPq.

Lacoin, H. Non-coincidence of quenched and annealed connective constants on the supercritical planar percolation cluster. Probab. Theory Relat. Fields 159, 777–808 (2014). https://doi.org/10.1007/s00440-013-0520-1

DOI: https://doi.org/10.1007/s00440-013-0520-1