Abstract

Models of associative memory with discrete state synapses learn new memories by forgetting old ones. In the simplest models, memories are forgotten exponentially quickly. Sparse population coding ameliorates this problem, as do complex models of synaptic plasticity that posit internal synaptic states, giving rise to synaptic metaplasticity. We examine memory lifetimes in both simple and complex models of synaptic plasticity with sparse coding. We consider our own integrative, filter-based model of synaptic plasticity, and examine the cascade and serial synapse models for comparison. We explore memory lifetimes at both the single-neuron and the population level, allowing for spontaneous activity. Memory lifetimes are defined using either a signal-to-noise ratio (SNR) approach or a first passage time (FPT) method, although we use the latter only for simple models at the single-neuron level. All studied models exhibit a decrease in the optimal single-neuron SNR memory lifetime, optimised with respect to sparseness, as the probability of synaptic updates decreases or, equivalently, as synaptic complexity increases. This holds regardless of spontaneous activity levels. In contrast, at the population level, even a low but nonzero level of spontaneous activity is critical in facilitating an increase in optimal SNR memory lifetimes with increasing synaptic complexity, but only in filter and serial models. However, SNR memory lifetimes are valid only in an asymptotic regime in which a mean field approximation is valid. By considering FPT memory lifetimes, we find that this asymptotic regime is not satisfied for very sparse coding, violating the conditions for the optimisation of single-perceptron SNR memory lifetimes with respect to sparseness. Similar violations are also expected for complex models of synaptic plasticity.

Similar content being viewed by others

1 Introduction

One line of experimental evidence suggests that synapses may occupy only a very limited number of discrete states of synaptic strength (Petersen et al. 1998; Montgomery and Madison 2002, 2004; O’Connor et al. 2005a, b; Bartol et al. 2015), or may change their strengths via discrete, jump-like processes (Yasuda et al. 2003; Bagal et al. 2005; Sobczyk and Svoboda 2007). Discrete state synapses overcome the catastrophic forgetting of the Hopfield model (Hopfield 1982) in associative memory tasks, turning memory systems into so-called palimpsests, which learn new memories by forgetting old ones (Nadal et al. 1986; Parisi 1986). Unfortunately, memory lifetimes in the simplest such models are rather limited, growing only logarithmically with the number of synapses (Tsodyks 1990; Amit and Fusi 1994; see also Leibold and Kempter 2006; Barrett and van Rossum 2008; Huang and Amit 2010). Memory lifetimes may be extended by considering either sparse coding at the population level (Tsodyks and Feigel’man 1988) or complex models of synaptic plasticity in which synapses can express metaplasticity (changes in internal states) without necessarily expressing plasticity (changes in strength) (Fusi et al. 2005; Leibold and Kempter 2008; Elliott and Lagogiannis 2012; Lahiri and Ganguli 2013). Two previous studies have examined complex models of synaptic plasticity operating in concert with sparse coding (Leibold and Kempter 2008; Rubin and Fusi 2007). For a discussion of the possible roles of the persistence and transience of memories and the synaptic mechanisms underlying synaptic stability, see, for example, Richards and Frankland (2017), and Rao-Ruiz et al. (2021).

We have proposed integrate-and-express models of synaptic plasticity in which synapses act as low-pass filters in order to control fluctuations in developmental patterns of synaptic connectivity (Elliott 2008; Elliott and Lagogiannis 2009). We have also applied these complex models of synaptic plasticity to memory formation, retention and longevity with discrete synapses (Elliott and Lagogiannis 2012), finding that they outperform cascade models (Fusi et al. 2005) in most biologically relevant regions of parameter space (Elliott 2016b). In this paper, we consider the role of sparse coding in the memory dynamics of a filter-based model. For comparison, we also consider the cascade model (Fusi et al. 2005), the serial synapse model (Leibold and Kempter 2008; Rubin and Fusi 2007) and a model of simple synapses (Tsodyks 1990) using our protocols.

Our paper is organised as follows. In Sect. 2, we present our general approach by describing the two memory storage protocols that we study, considering two different definitions of memory lifetimes, and obtaining general, model-independent results. Then, in Sect. 3, we consider both simple and complex models of synaptic plasticity, obtaining the analytical results required to study memory lifetimes in detail. We compare and contrast results for memory lifetimes in simple and complex models in Sect. 4. Finally, in Sect. 5, we briefly discuss our results.

2 General approach and formulation

We provide a convenient list of the most commonly used mathematical symbols and their meanings, excluding those that appear in the appendices, in Table 1.

2.1 Memories and memory lifetimes

We consider a population of P neurons forming a memory system, perhaps performing association or auto-association tasks. Let each neuron receive N synaptic connections from N other neurons that are randomly selected from the entire population. Fully recurrent connectivity would imply that \(N = P - 1\) (excluding self-connections) but in general \(N \ll P\). Other than the requirement that \(N < P\), N may be regarded as mathematically independent of P. Memories are stored sequentially, one after the other, by this memory system. We take them to be stored at times \(t \ge 0\) s governed by a Poisson process of rate r Hz. This continuous time approach is more realistic than a discrete time approach in which memories are stored at uniformly spaced time steps. Due to ongoing synaptic plasticity driven by the storage of later memories, the synaptic patterns that embody earlier memories may be degraded, so that the fidelity of recall of earlier memories may fall over time, ultimately falling to an equilibrium or background level of complete amnesia. It is typical in these scenarios to track the fidelity of recall of the first memory as subsequent memories are stored. This first memory is taken to be stored at time \(t=0\) s on the background equilibrium probability distribution of synaptic strengths.

In previous work, we have focused on a single neuron, or perceptron, in such a system and have examined its recall of stored memories. Here, in a sparse population coding context, we must consider the collective dynamics of the entire population of neurons, but these collective dynamics are nevertheless driven by synaptic processes occurring at the level of single perceptrons in the system. Considering, then, a single perceptron in this population, let its N synapses have strengths \(S_i(t) \in \{-1, +1\}\), \(i=1, \ldots , N\), at time \(t \ge 0\) s. These two strength states should be thought of as low and high rather than inhibitory and excitatory. As memories are presented to the system for storage, the perceptron is exposed to synaptic inputs characterised by the N-dimensional vectors \(\underline{\xi }^\alpha \), \(\alpha = 0, 1, 2, \ldots \), where \(\alpha \) indexes the memories. The component \(\xi _i^\alpha \) represents the input through synapse i during the presentation of memory \(\alpha \), and for simplicity we assume that these components are independent between synapses and across memories.

In response to each of these memory vectors, the perceptron must generate the correct activation or output. With inputs \(x_i\) through its N synapses, the perceptron’s activation is defined as usual by

The perceptron’s output is some possibly nonlinear function of its activation, where this output can correspond to spontaneous activity under conditions of no (or spontaneous) input. We track the fidelity of recall of the first memory \(\underline{\xi }^0\) by examining the perceptron’s activation upon re-presentation (but not re-storage) of this memory at later times \(t > 0\) s. We refer to \(h(t) \equiv h_{\underline{\xi }^0}(t)\) as the tracked memory signal or just the memory signal. The dynamics of h(t) will determine the lifetime of memory \(\underline{\xi }^0\), at least as far as this single perceptron’s capacity to generate the correct output upon re-presentation of \(\underline{\xi }^0\) is concerned. Of course, we are not interested in the lifetime of any particular tracked memory \(\underline{\xi }^0\) stored on any particular pattern of synaptic connectivity and subject to any particular sequence of subsequent, non-tracked memories \(\underline{\xi }^\alpha \), \(\alpha > 0\), stored at any particular set of Poisson-distributed times \(0< t_1< t_2< t_3 < \cdots \). Rather, we are interested only in the lifetime of a typical tracked memory subject to a typical sequence of later memories. Thus, we consider only the statistical properties of h(t) when suitably averaged over all memories.

Memory lifetimes may be defined in a variety of ways using these statistical properties. The simplest definition is to consider the mean and variance of h(t)

define the signal-to-noise ratio (SNR) as:

and then define the memory lifetime as that value of t, call it \(\tau _{\mathrm {snr}}\), that is the (largest) finite, non-negative solution of the equation \(\mathrm {SNR}(\tau _{\mathrm {snr}}) = 1\) when this solution exists; otherwise, we set \(\tau _{\mathrm {snr}} = 0\) s (Tsodyks 1990). This is the last time at which \(\mu (t)\) is distinguishable from its equilibrium value \(\mu (\infty )\) at the level of one standard deviation. Although an “ideal observer” approach to defining memory lifetimes has also been considered (Fusi et al. 2005; Lahiri and Ganguli 2013), it is essentially equivalent to the SNR approach (Elliott 2016b).

The activation h(t) provides a direct read-out of the perceptron’s response to the re-presentation of \(\underline{\xi }^0\) at later times, and would correspond to a neuron’s membrane potential in a more realistic, integrate-and-fire model. By focusing on this read-out of the perceptron’s state, we are naturally led to consider the first passage time (FPT) for the perceptron’s activation to fall below firing threshold, and thus to consider the mean first passage time (MFPT) for this process, which is the mean taken over all tracked and non-tracked memories (Elliott 2014). We may then define an alternative memory lifetime, call it \(\tau _{\mathrm {mfpt}}\), as this MFPT for the perceptron’s activation in response to re-presentation of a typical tracked memory to fall below firing threshold. We have extensively discussed and contrasted the SNR and FPT approaches to defining memory lifetimes elsewhere (Elliott 2014, 2016a, 2017a). In essence, SNR memory lifetimes are only valid in asymptotic, typically large N regimes, while FPT memory lifetimes are valid in all regimes. SNR lifetimes must therefore be interpreted with caution.

To compute FPT lifetimes, we require \(\mathsf {Prob}[ h_{\alpha +1} | h_\alpha ]\), the transition probability describing the probability that the perceptron’s activation (in response to re-presentation of the tracked memory) is \(h_{\alpha +1}\) immediately after the storage of average non-tracked memory \(\underline{\xi }^{\alpha +1}\), given that its activation is \(h_\alpha \) immediately before the memory’s storage. This transition probability is most easily computed in simple models of synaptic plasticity, for which it is independent of the memory storage step (Elliott 2014, 2017a, 2019). This independence arises because simple synapses with only two strength states are “stateless” (Elliott 2020), having no internal states and not enough strength states to carry information between consecutive memory storage steps. In this case, all the probabilities \(\mathsf {Prob}[ h_{\alpha +1} | h_\alpha ]\) over all the possible, discrete values of \(h_\alpha \) and \(h_{\alpha +1}\) define the elements of a transition matrix in the perceptron’s activation between memory storage steps that is independent of the non-tracked memory storage step \(\alpha + 1\) (\(\alpha \ge 0\)). We can then drop the index \(\alpha \) and consider general elements \(\mathsf {Prob}[ \, h \, | \, h' \, ]\) between any two possible values of the perceptron activation, h and \(h'\). We will therefore examine FPT lifetimes only for simple synapses, and SNR memory lifetimes for both simple and complex synapses, but with the understanding that SNR results must be interpreted cautiously. With the transition probabilities \(\mathsf {Prob}[ \, h \, | \, h' \, ]\) being independent of the memory storage step, and with the storage of the definite tracked memory \(\underline{\xi }^0\) inducing the definite activation \(h_0\) immediately after its storage, the FPT lifetime of the memory \(\underline{\xi }^0\) is the solution of the equation

where transitions to activations below the firing threshold \(\vartheta \) are disallowed (Elliott 2014; see van Kampen 1992, for a general discussion). Equation (4) generalises in the obvious way to an integral equation when h can be regarded as a continuous rather than discrete variable. Solving Eq. (4) for \(\tau _{\mathrm {mfpt}}(h_0)\) for all values of \(h_0 > \vartheta \) entails solving a linear system involving the perceptron activation transition matrix. We therefore refer to Eq. (4) for simplicity but somewhat inaccurately as a matrix equation, to distinguish it from its integral equation equivalent in the continuum limit. This matrix or integral equation (MIE) approach to FPTs is exact. We may also consider an approximation involving the Fokker–Planck equation (FPE) approach by computing the jump moments induced by \(\mathsf {Prob}[ \, h \, | \, h' \, ]\). Because memories are stored as a Poisson process, the jump moments are simply

where the expectation values are calculated with respect to \(\mathsf {Prob}[ \, h \, | \, h' \, ]\). Then, standard methods (Elliott 2014; see van Kampen 1992, in general) give the MFPT as the solution of the equation:

subject to the boundary condition \(r \tau _{\mathrm {mfpt}}(\vartheta ) = 0\). Equations similar to Eqs. (4) and (6) give the higher-order FPT moments (Elliott 2019). Given \(\tau _{\mathrm {mfpt}}( h_0 )\), we obtain \(\tau _{\mathrm {mfpt}}\) by averaging over the distribution of \(h_0\) (for values of \(h_0 > \vartheta \)), corresponding to averaging over the tracked memory \(\underline{\xi }^0\), i.e. \(\tau _{\mathrm {mfpt}} = \langle \tau _{\mathrm {mfpt}}( h_0 ) \rangle _{h_0 > \vartheta }\).

2.2 Hebb protocol

We adopt and adapt the memory storage protocol employed by Leibold and Kempter (2008). Their memory system performs an association task. Within the population of P neurons, a sub-population of “cue” neurons is required to activate a sub-population of “target” neurons. Synapses from cue to target neurons experience potentiating induction signals during memory storage, while those from target to cue experience depressing induction signals; all other synapses do not experience plasticity induction signals. Although Leibold and Kempter (2008) do allow for the possibility of some overlap between cue and target sub-populations, this will not be relevant here. The storage of different memories involves different cue and target sub-populations, so that the entire population of P neurons will be involved in storing many memories over time. If cue and target sub-populations are of equal size, as we assume, then potentiation and depression processes are equally balanced on average. This assumption stands in lieu of realistic neuron models, in which we expect (Elliott 2016a) synaptic plasticity to be dynamically regulated to move to stable dynamical fixed points in which such balancing is achieved automatically (Bienenstock et al. 1982; Burkitt et al. 2004; Appleby and Elliott 2006).

While Leibold and Kempter (2008) consider activities \(\xi _i^\alpha \in \{ 0, 1 \}\), corresponding to inactive (\(\xi _i^\alpha = 0\)) and active (\(\xi _i^\alpha = 1\)) input neurons, we will consider the more general case of \(\xi _i^\alpha \in \{ \zeta , 1 \}\), with \(0 \le \zeta < 1\), where \(\xi _i^\alpha = \zeta \) represents a spontaneous, non-evoked, or background level of activity for an input neuron that is in neither cue nor target sub-populations, while \(\xi _i^\alpha = 1\) represents evoked activity from a cue or target input. We often refer below to active and inactive inputs or neurons, with the understanding that we mean evoked activity and spontaneous activity, respectively. Because synaptic plasticity occurs only between cue and target neurons, synapses between a pre- or postsynaptic neuron that is only spontaneously active do not undergo synaptic plasticity. This accords with our expectations from known physiology: protocols for long-term potentiation (LTP; Bliss and Lømo 1973) and long-term depression (LTD; Lynch et al. 1977) require sustained bouts of evoked electrical activity rather than just spontaneous levels of activity. On a broadly BCM view of synaptic plasticity (Bienenstock et al. 1982), we would expect two thresholds for synaptic plasticity: as activity levels ramp up from spontaneous to weak to strong tetanisation, plasticity switches from none to LTD to LTP. Since synaptic plasticity can only occur between pairs of active, synaptically coupled neurons in this scenario, we refer to it as the Hebb protocol: Hebbian synaptic plasticity is typically understood to mean activity-dependent, bidirectional synaptic plasticity between active pre- and postsynaptic neurons. Although spontaneous activity has by assumption no impact on synaptic plasticity here, it nevertheless has a direct impact on h(t).

For a particular perceptron, let the probability that it is active during the storage of any particular memory be g. Since the perceptron could be part of either cue or target sub-populations, the probability that it is either cue or target during the storage of a memory is just \({\textstyle \frac{1}{2}}g\). The probability that any one of its synaptic inputs is active during memory storage is also just g. However, for the purposes of clarity it is convenient to distinguish between these two probabilities, so we denote the probability that an input is active as f (\(\equiv g\)). In this way, the appearance of a factor of g indicates a global, postsynaptic factor due to the perceptron, or postsynaptic cell, being in the cue or target population, while a factor of f indicates a local, presynaptic factor due to an input being in the cue or target population. The probability g, or f, controls the sparseness of the memory representation in this memory system. Considering just a single perceptron, if it is neither cue nor target, then none of its synapses can experience plasticity induction signals. If it is a cue, then only those inputs that correspond to target cells (if any) experience plasticity induction signals, and specifically depressing signals. If it is a target, then similarly only cue inputs experience induction signals, and so only potentiating signals. Without loss of generality, we may therefore just assume that during memory storage, an active perceptron’s active inputs are either all cue or all target neurons. This simplifying assumption effectively doubles on average the rate of plasticity induction signals experienced by synapses compared to the scenario in which the perceptron’s active inputs could represent a combination of cue and target neurons. We could therefore just scale f accordingly.

We summarise the Hebb protocol in Fig. 1, which schematically illustrates a sample of the population of pairs of pre- and postsynaptic neurons, showing all possible combinations of presynaptic activities and postsynaptic roles with their respective probabilities, together with the direction of synaptic plasticity induced by them.

Schematic illustration of the Hebb protocol for memory storage. Six pairs of synaptically coupled neurons are shown. Each cell body is represented by a triangle, with the value (\(\zeta \) or 1) inside the triangle indicating the neuron’s activity during memory storage. A neuron’s axon is denoted by a directed line, while two of its dendrites are denoted by the dashed lines. Synaptic coupling is indicated by a small black blob where an axon terminates on a dendrite, with the symbol to the right of the blob indicating the direction of induced synaptic plasticity during memory storage (“\(\uparrow \)” indicates potentiation, “\(\downarrow \)” depression, and “\(\times \)” no change). The labels “C”, “N” or “T” attached to a postsynaptic cell body indicate that the neuron is a cue cell, neither a cue cell nor a target cell, or a target cell, respectively, in the population. Probabilities of presynaptic activity (f or \(1-f\)) are indicated, as are the joint probabilities of postsynaptic activity and specific role (\(\frac{1}{2}g\) or \(1-g\)). The fact that an active presynaptic neuron synapsing on a cue or target cell always experiences the induction of depression or potentiation, respectively, reflects the simplifying assumption discussed in the main text

To assess memory lifetimes under this protocol, we may track the ability of the cue sub-population to successfully evoke activity in the target sub-population. Considering a single perceptron in the target sub-population, we may obtain general expressions for \(\mu (t)\) and \(\sigma (t)^2\) in Eq. (2), where these expressions are independent of any particular model of synaptic plasticity. Because \(h(t) = \frac{1}{N} \sum _{i=1}^N \xi _i^0 S_i(t)\) and similarly \(h(t)^2 = \frac{1}{N^2} \sum _{i,j=1}^N \xi _i^0 \xi _j^0 S_i(t) S_j(t)\), their expectation values lead to

where we could pick any synapse i in Eq. (7a) and any distinct pair of synapses i and j in Eq. (7b) but we restrict without loss of generality to \(i=1\) and \(j=2\). In these equations, we condition on whether a synapse has experienced a potentiating induction signal (“\(+\)”) with probability f or not (“\(\times \)”) with probability \(1-f\), during the storage of \(\underline{\xi }^0\). For the models of synaptic plasticity that we consider below, the (marginal) equilibrium probability distribution of any single synapse’s strength is uniform, or \(\mathsf {Prob}[ S_i (\infty ) = \pm 1 ] = {\textstyle \frac{1}{2}}\), so that if a synapse does not experience a plasticity induction signal during the storage of \(\underline{\xi }^0\), then \(\mathsf {E}\left[ S_i(t) \, | \, {\times } \, \right] \equiv 0\) at \(t=0\) s and this remains true for all times \(t \ge 0\) s when potentiation and depression processes are treated symmetrically, as indicated in Eq. (7a). However, for the pairwise correlations in Eq. (7b) that condition on one or both synapses not having experienced an induction signal, the expectation values do not vanish under the Hebb protocol. This is because of the higher-order equilibrium correlational structure induced by the fact that it is impossible for some of the synapses of an active neuron to experience potentiating induction signals while others experience depressing induction signals during the storage of the same memory under the Hebb protocol.

We may obtain general expressions for the expectation values in Eq. (7) by writing down the transition processes that govern changes in a single synapse’s strength or simultaneous changes in a pair of synapses’ strengths. Let each synapse have s possible internal states for each of its two possible strengths \(\pm 1\), so that the possible state of a synapse is described by a 2s-dimensional vector, with the internal states for strength \(-1\) (respectively, \(+1\)) corresponding to the first (respectively, last) s components. Given the stochastic nature of the plasticity induction signals, this vector defines a joint probability distribution for a synapse’s combined strength and internal state. Let the transition matrix \(\mathbb {M}_+\) implement the definite change in a synapse’s state in response to a potentiating induction signal, and \(\mathbb {M}_-\) that for a depressing induction signal. We then determine the transition matrix governing the change in a single synapse’s state in response to the storage of a typical non-tracked memory by conditioning on all possible combinations of presynaptic activity and postsynaptic role. Defining \(\mathbb {K}_\pm = (1 - f) \mathbb {I} + f \mathbb {M}_\pm \), where \(\mathbb {I}\) is the identity matrix, this transition matrix is

or just \(\mathbb {T}_1 = (1 - g) \, \mathbb {I} + g \, \mathbb {K}\), where \(\mathbb {K} = (1 - f) \mathbb {I} + f \mathbb {M}\) with \(\mathbb {M} = {\textstyle \frac{1}{2}}( \mathbb {M}_+ + \mathbb {M}_- )\). The three terms in Eq. (8a) arise from conditioning on the three possible perceptron roles in memory storage (determined by the global factor g), while the two terms in each of \(\mathbb {K}_\pm \) arise from conditioning on the two possible levels of presynaptic activity (determined by the local factor f). Similarly, the transition operator that governs simultaneous changes in pairs of synapses’ states during typical non-tracked memory storage is

with the generalisation to \(\mathbb {T}_n\) for any number of synapses n being clear. The (marginal) equilibrium probability distribution of a single synapse’s state, denoted by \(\underline{A}_1\), is the (normalised) eigenvector of \(\mathbb {T}_1\) with unit eigenvalue, which is also just the unit eigenvector of \(\mathbb {M}\). That for any pair of synapses, \(\underline{A}_2\), corresponds to the unit eigenvector of \(\mathbb {T}_2\). However, because \(\mathbb {T}_2 \ne (1-g) \mathbb {I} \otimes \mathbb {I} + g \, \mathbb {K} \otimes \mathbb {K}\), then \(\underline{A}_2 \ne \underline{A}_1 \otimes \underline{A}_1\). Rather, \(\underline{A}_2\) must be explicitly computed as the unit eigenstate of \({\textstyle \frac{1}{2}}( \mathbb {K}_+ \otimes \mathbb {K}_+ + \mathbb {K}_- \otimes \mathbb {K}_- )\). It is this failure of factorisation that induces the non-trivial pairwise correlational structure in the equilibrium state.

Using \(\mathbb {T}_1\) and \(\mathbb {T}_2\), we may write down the conditional expectation values in Eq. (7). We define the vector \(\underline{\Omega }^{\mathrm {T}} = \left( {-} \underline{1}^\mathrm {T} \, | \, {+} \underline{1}^{\mathrm {T}} \right) \), where \(\mathrm {T}\) denotes the transpose and the s-dimensional vector \(\underline{1}\) is a vector all of whose components are unity. This vector weights synaptic states according to their two possible strengths. Then,

and

and for the other two pairwise expectation values in Eq. (7b), we replace \(\mathbb {M}_+ \otimes \mathbb {M}_+\) in Eq. (9b) by \(\mathbb {M}_+ \otimes \mathbb {I}\) for \({+} {\times }\) and \(\mathbb {I} \otimes \mathbb {I}\) for \({\times } {\times }\). Since \(\mathbb {T}_1 - \mathbb {I} = f g \left( \mathbb {M} - \mathbb {I} \right) \), we have \(\mu (t) = f \, \underline{\Omega }^{\mathrm {T}} e^{\left( \mathbb {M} - \mathbb {I} \right) f g r t} \, \mathbb {M}_+ \underline{A}_1\), so that sparse coding just introduces a multiplicative factor of f and scales the rate r by the product fg in \(\mu (t)\). In the equilibrium limit, by definition \(\exp [(\mathbb {T}_n - \mathbb {I} \otimes \cdots \otimes \mathbb {I})rt] \, \underline{v} \rightarrow \underline{A}_n\) for any state \(\underline{v}\) corresponding to a probability distribution, as \(t \rightarrow \infty \). Hence, \(\mu (\infty ) = f \, \underline{\Omega }^{\mathrm {T}} \underline{A}_1 \equiv 0\), which always follows when potentiation and depression processes are treated symmetrically. For the equilibrium variance, we obtain

The second, covariance term does not in general vanish because of the equilibrium synaptic pairwise correlations.

These general results allow us to obtain SNR lifetimes when the matrices \(\mathbb {M}_\pm \) are specified for any particular model of synaptic plasticity. We defer the derivation of the transition matrix elements \(\mathsf {Prob}[ \, h \, | \, h' \, ]\) that are required for FPT lifetimes until we explicitly discuss simple models of synaptic plasticity in Sect. 3.1.

2.3 Hopfield protocol

Although the Hebb protocol is intuitive as a means of exploring memory lifetimes in an associative memory system, its non-trivial equilibrium distribution of synaptic states is awkward. To avoid this awkwardness, we may consider an alternative protocol that is nevertheless equivalent to the Hebb protocol in the limit of small fgN. We first define the protocol and then demonstrate the equivalence.

During memory storage, instead of defining cue and target sub-populations, we now specify the entire activity pattern, representing a memory, across the whole population of neurons. We allow these activities to take values from the set \(\{ -1, -\zeta , +\zeta , +1 \}\) with probabilities \(\{ {\textstyle \frac{1}{2}}f, {\textstyle \frac{1}{2}}(1-f), {\textstyle \frac{1}{2}}(1-f), {\textstyle \frac{1}{2}}f \}\). Here, the values \(\pm 1\) represent evoked activity (the neuron is involved in memory storage), with \(+1\) (respectively, \(-1\)) representing a strongly (respectively, weakly) tetanising stimulus in the usual LTP (respectively, LTD) sense. In contrast, the values \(\pm \zeta \) represent spontaneous activity (the neuron is not involved in memory storage). For a single perceptron, this amounts to specifying memory vectors \(\underline{\xi }^\alpha \) with components \(\xi _i^\alpha \) taking one of these four values, and also specifying the perceptron’s required output in response to an input vector, where this output is drawn from the same set with the same probabilities, but with f replaced by g as usual. We can track the perceptron’s activation when its required output is either \(+1\) or \(-1\), but by symmetry its activation would differ only by a sign between these two cases, so for concreteness we just take the required output to be \(+1\) during the storage of \(\underline{\xi }^0\). As with the Hebb protocol, a synapse does not experience a plasticity induction signal if its presynaptic input or the postsynaptic perceptron itself is only spontaneously active. However, if both input and perceptron are active, then the synapse experiences a plasticity induction signal, either potentiating if both activities are the same or depressing if different. This is just the standard Hopfield rule (Hopfield 1982), so we refer to this protocol as the Hopfield protocol: we obtain a pattern of synaptic plasticity induction signals in response to evoked activity that is identical to the standard Hopfield rule, but supplemented by the presence of spontaneous activity that does not induce synaptic plasticity.

Figure 2 summarises the Hopfield protocol, showing all allowed combinations of pre- and postsynaptic activities during memory storage, together with their associated probabilities and induced plasticity induction signals. Depressing and potentiating induction signals both occur with the same overall probability \({\textstyle \frac{1}{2}}f g\) as in the Hebb protocol.

Computing \(\mu (t)\) and \(\sigma (t)^2\) for the Hopfield protocol and using the various symmetries \(\mathsf {E}\left[ S_1(t) \, | \, + \, \right] = - \mathsf {E}\left[ S_1(t) \, | \, - \, \right] \), \(\mathsf {E}\left[ S_1(t) S_2(t) \, | \, {+} {+} \, \right] = \mathsf {E}\left[ S_1(t) S_2(t) \, | \, {-} {-} \, \right] \), etc., we obtain

These are structurally identical to the expressions in Eq. (7) for the Hebb protocol, except that the linear terms in \(\zeta \) are absent because of cancellation. Had we instead used a single level \(\zeta \) of spontaneous activity rather than the two levels \(\pm \zeta \), we would have obtained identical linear terms, too. Writing down the transition operators \(\mathbb {T}_1\) and \(\mathbb {T}_2\) in the Hopfield protocol, we obtain

with immediate generalisation to \(\mathbb {T}_n\). The (marginal) equilibrium distribution of a pair of synapses’ states is therefore determined by the unit eigenstate of \(\mathbb {K} \otimes \mathbb {K}\) and thus of \(\mathbb {M} \otimes \mathbb {M}\), and so is just \(\underline{A}_2 = \underline{A}_1 \otimes \underline{A}_1\); again, generalisation to \(\underline{A}_n\) is immediate. The result is that all conditional expectation values involving at least one synapse that does not experience a plasticity induction event during the storage of \(\underline{\xi }^0\) vanish, when potentiation and depression processes are treated symmetrically. So, whether we use four-level or three-level activities in the Hopfield protocol, the \(\zeta \)-dependent contributions to the covariance term in Eq. (11b) drop out, as indicated, so that the variance is affected only by the \(\zeta ^2\) term in the first term on the right-hand side (RHS) of Eq. (11b). Moreover, the covariance term vanishes entirely in the large t, equilibrium limit, since \(\mathsf {E}\left[ S_1(t) S_2(t) \, | \, {+} {+} \, \right] \rightarrow \big ( \underline{\Omega }^{\mathrm {T}} \underline{A}_1 \big )^2 \equiv 0\), so that \(\sigma (\infty )^2 = [ f + (1-f) \zeta ^2 ] / N\).

The equivalence of the Hebb and Hopfield protocols in the limit of small fgN is now clear. The corresponding transition matrices \(\mathbb {T}_1\) are in any case identical for both protocols, and hence so are the means. For \(\mathbb {T}_2\), in both protocols we have that

and for general \(\mathbb {T}_N\) the \(\mathscr {O}(f g)\) term on the RHS contains N terms, each of which contains \(N-1\) factors of \(\mathbb {I}\) and just one factor of \(\mathbb {M} - \mathbb {I}\). This structure reflects the fact that in the limit of small fgN, at most one of the perceptron’s synapses experiences a plasticity induction signal, regardless of the protocol. The corresponding unit eigenstate of \(\mathbb {T}_N\) in this limit is just \(\underline{A}_1 \otimes \cdots \otimes \underline{A}_1\), regardless of the protocol. Therefore, in the small fgN limit, the equilibrium distribution of synaptic states in the Hebb protocol reduces to that in the Hopfield protocol, and all statistical properties of h(t) must therefore also reduce in the same way. The Hopfield protocol therefore offers a way of extrapolating the small fgN behaviour of the Hebb protocol to larger f without the awkwardness of the Hebb protocol’s equilibrium structure in this regime. Furthermore, the simpler form of the results in the Hopfield protocol allow us to use it to extract the scaling properties of memory lifetimes as a function of small f (or g) in both protocols.

For the non-sparse-coding case of \(f=1\), spontaneous activity does not contribute to the Hopfield protocol’s dynamics, and we recover precisely the Hopfield model with discrete-state synapses. For \(f<1\), we expand the possible activities of neurons to allow for spontaneously active neurons that are not involved in memory storage. Thus, although the Hopfield protocol provides a convenient tool for examining the small fgN limit of the Hebb protocol, we also regard the Hopfield protocol as a fully fledged protocol in its own right, because it constitutes a very natural way of examining sparse coding with a Hopfield plasticity rule.

2.4 Population memory lifetimes

So far we have focused on the memory dynamics of a single perceptron. We now consider the memory dynamics of the entire population of P neurons. We do this only for the Hopfield protocol for simplicity. The tracked memory will evoke activity in a sub-population of on average gP neurons. In an experimental protocol, during the storage of the tracked memory we can at least in principle explicitly identify all those neurons that are active, and then subsequently track all their activities during later re-presentations of the tracked memory. Because of synaptic coupling between these tracked neurons and the other on average \((1-g)P\) neurons, spontaneous activity in the other neurons will affect and potentially degrade the activation of the tracked neurons upon re-presentation of the tracked memory, affecting the tracked neurons’ ability to read out the tracked memory. But, as we are only concerned with the tracked neurons’ read-out of the tracked memory, we do not need to explicitly track the activities of all these other neurons: their activities do not directly form part of the memory signal from the tracked neurons.

Schematic illustration of the Hopfield protocol for memory storage. The format of this figure is essentially identical to that for the Hebb protocol in Fig. 1, except that labels indicating postsynaptic roles are not required. To avoid duplication, spontaneously active neurons are shown with both possible spontaneous activity levels, \(\pm \zeta \); the corresponding probability is for each of these levels rather than for both

In the Hopfield protocol, a tracked neuron will by definition have an output of \(+1\) or \(-1\) during memory storage. For a single perceptron, we focused on an output of \(+1\) without loss of generality. A perceptron with an initial output of \(-1\) will have identical dynamics to one with an initial output of \(+1\), except that the activation will be reversed in sign. Therefore, we can just define the memory signal for any active perceptron to be \(\pm h(t)\), depending on this sign. Denoting the moment generating function (MGF) of \(+h(t)\) for a tracked neuron with an initial output of \(+1\) by \(\mathscr {M}(z;t)\), the MGF for \(-h(t)\) for a tracked neuron with an initial output of \(-1\) will also be just \(\mathscr {M}(z;t)\). All tracked neurons therefore have the same MGF for their memory signals.

Suppose that \(P_{\mathrm {eff}}\) neurons form the sub-population that stores the tracked memory, where \(P_{\mathrm {eff}}\) is binomially distributed with parameter P and probability g. Although these neurons’ activations will not in general evolve independently, as an extremely coarse approximation we assume that their activations do evolve independently during subsequent memory storage (cf. Rubin and Fusi 2007). Population memory lifetimes obtained from this simplifying assumption will therefore only be theoretical, and perhaps very loose, upper bounds on exact memory lifetimes. With this simplification, the MGF for the memory signal from the tracked sub-population is then \(\left[ \mathscr {M}(z;t) \right] ^{P_{\mathrm {eff}}}\), by independence. Averaging over \(P_{\mathrm {eff}}\), the MGF of the population memory signal is then just \(\left[ (1-g) + g \mathscr {M}(z;t) \right] ^P\). The mean, \(\mu _p(t)\), and variance, \(\sigma _p(t)^2\), of this population signal follow directly. Ignoring covariance terms (or considering the limit \(t \rightarrow \infty \) for the variance), we have \(\mu _p(t) = g P \mu (t)\) and \(\sigma _p(t)^2 \approx g P \sigma (t)^2\), where \(\mu (t)\) and \(\sigma (t)^2\) are the single-perceptron mean and variance above.Footnote 1 Hence, the population SNR, \(\mathrm {SNR}_p(t) = \left[ \mu _p(t) - \mu _p(\infty ) \right] / \sigma _p(t)\), is just scaled by the factor \(\sqrt{g P}\) relative to the single-perceptron SNR, so

The population SNR memory lifetime, which we denote by \(\tau _{\mathrm {pop}}\), is then the solution of \(\mathrm {SNR}_p(\tau _{\mathrm {pop}}) = 1\). With \(\sigma (t)^2 \approx [f + (1-f) \zeta ^2] / N\) in the Hopfield protocol, \(\mathrm {SNR}_p(t)\) depends on \(\mathscr {N} = N P\), the total number of synapses in the memory system, but it also contains the additional factor of \(\sqrt{g}\) compared to \(\mathrm {SNR}(t)\), which modifies scaling behaviour compared to single-perceptron results.

3 Models of synaptic plasticity

3.1 Simple synapses: the stochastic updater

The simplest model of synaptic plasticity to consider is one in which synapses lack any internal states so that \(s=1\), and given a plasticity induction signal, they change strength (if possible) with some fixed probability p (Tsodyks 1990). Because a synapse just changes its strength stochastically in this model, we have called such a synapse a “stochastic updater” (SU; Elliott and Lagogiannis 2012). The underlying strength transition matrices are then

and so

where we define \(\psi = f p\) for convenience.

The equilibrium distribution of a single synapse’s strength in both protocols is just the normalised unit eigenvector of \(\mathbb {K}\), or \(\underline{A}_1 = \frac{1}{2}\left( 1, 1 \right) ^{\mathrm {T}}\). For the Hopfield protocol, any pair of synapses’ strengths has the equilibrium distribution \(\underline{A}_2 = \underline{A}_1 \otimes \underline{A}_1\). For the Hebb protocol, we require the unit eigenstate of \({\textstyle \frac{1}{2}}( \mathbb {K}_+ \otimes \mathbb {K}_+ + \mathbb {K}_- \otimes \mathbb {K}_- )\), which gives

where \(\kappa _2 = \psi / (2 - \psi )\). The quantity \(\kappa _2\) determines the pairwise correlations present in this state, since \(\big ( \underline{\Omega }^{\mathrm {T}} \otimes \underline{\Omega }^{\mathrm {T}} \big ) \underline{A}_2 \equiv \kappa _2\). For \(f \rightarrow 0\), \(\kappa _2 \rightarrow 0\) and \(\underline{A}_2 \rightarrow \underline{A}_1 \otimes \underline{A}_1\). With these equilibrium distributions, we may explicitly compute \(\mu (t)\) and \(\sigma (t)^2\) in both protocols using Eqs. (7) and (11). For the common mean, we obtain

and for the two variances, we need the various correlation functions in Eqs. (7b) and (11b). For the Hebb protocol, these are

and for the Hopfield protocol, we just set \(\kappa _2 = 0\) in these equations. These results allow us to determine SNR lifetimes for simple, SU synapses. Approximating the Hopfield variance by its asymptotic form \(\sigma (\infty )^2\), the single-perceptron SNR memory lifetime in the Hopfield protocol for a stochastic updater is then

and for the population SNR lifetime \(\tau _{\mathrm {pop}}\), we replace \(\sigma _N(\infty )\) by \(\sigma _{\mathscr {N}}(\infty ) / \sqrt{g}\), where the subscript in \(\sigma _X(\infty )^2 = [f + (1-f) \zeta ^2]/X\) indicates either N or \(\mathscr {N} = N P\).

To determine FPT lifetimes, we require \(\mathsf {Prob}[ \, h \, | \, h' \, ]\) for the MIE approach, or the induced jump moments \(A(h')\) and \(B(h')\) for the FPE method. We relegate the derivation of \(\mathsf {Prob}[ \, h \, | \, h' \, ]\) to Appendix A, where we also indicate our numerical methods for obtaining FPTs. From Appendix A, we obtain the jump moments in Eq. (5) for the FPE approach to FPTs. For both protocols we get the same first jump moment

and for the second jump moment, we get

for the Hebb and Hopfield protocols, respectively. We have explicitly indicated the dependence of \(B(h')\) on \(N_{\mathrm {eff}}\), where \(N_{\mathrm {eff}}\) is the number of a perceptron’s synapses that are active during the storage of \(\underline{\xi }^0\). We write \(B(h' \, | \, N_{\mathrm {eff}}) = \psi g B_0(N_{\mathrm {eff}}) + \psi ^2 g \, (h')^2\), where we separate out the quadratic dependence on \(h'\) and it is convenient to remove an overall factor of \(\psi g\) from the definition of \(B_0(N_{\mathrm {eff}})\). Dropping the quadratic term from \(B(h' \, | \, N_{\mathrm {eff}})\) is equivalent to considering dynamics based on the Ornstein–Uhlenbeck process (Uhlenbeck and Ornstein 1930), which we have found to be a very good approximation (Elliott 2014, 2017a, 2019), so we work with just the constant term.

For the MIE approach to FPTs, a technical difficulty as discussed in Appendix A requires us to restrict to the specific case of \(\zeta = 0\) only. We use numerical methods to obtain FPT lifetimes from the MIE approach, but for small fN, the dynamics are dominated by \(N_{\mathrm {eff}}= 1\). For \(N_{\mathrm {eff}}= 1\) and \(\vartheta = 0\), Eq. (4) is trivial because the only contribution to the sum involves no transition, occurring with probability \(1 - {\textstyle \frac{1}{2}}\psi g\) regardless of the protocol. Writing \(\sigma _{\mathrm {fpt}}^2\) as the variance in the FPT, we obtain

at leading order, for small f (\(= g\)) in both protocols. We see that \(\tau _{\mathrm {mfpt}}\) scales as 1/f in this regime, but that \(\sigma _{\mathrm {fpt}}\) scales as \(1/f^{3/2}\). Although \(\sigma _{\mathrm {fpt}}\) swamps \(\tau _{\mathrm {mfpt}}\) for small f, \(\tau _{\mathrm {mfpt}}\) is nevertheless robustly positive. We may use our earlier results to obtain the corresponding forms for the FPE approach to FPT lifetimes for small f (see Eqs. (3.29) and (3.30) in Elliott 2017a). We obtain

In contrast to Eq. (23), now \(\tau _{\mathrm {mfpt}}\) scales as \(1/f^2\) and not 1/f, and \(\sigma _{\mathrm {fpt}}\) scales as \(1/f^2\) and not \(1/f^{3/2}\). Moreover, in the FPE approach, the FPT moments have lost their overall scaling with N. Although the forms in Eq. (24) are obtained using mean field approximations that are expected to be invalid when fN is small, in fact we obtain the same scaling behaviour when the expectation values are obtained by averaging properly over \(h_0\) and \(N_{\mathrm {eff}}\). Our simulation results, discussed in Sect. 4, agree with the behaviour in Eq. (23). Therefore, the failure of the FPE approach for small fN in Eq. (24) is due to the approximations intrinsic to the FPE approach itself. These include the diffusion and especially the continuum limit. For small fN, the system is nowhere near the continuum limit, so the scaling behaviour must be incorrect there.

3.2 Complex synapses

We now turn to models of complex synapses that have internal states, so that \(s > 1\). In such models, synapses can undergo metaplastic changes in their internal states without expressing changes in synaptic strength. We will only consider SNR lifetimes in relation to complex synapses. We have studied FPT lifetimes for filter-based synaptic plasticity for both bistate (Elliott 2017b) and multistate (Elliott 2020) synapses in a non-sparse coding context, but we have yet to consider other models of complex synapses. We therefore restrict to SNR lifetimes, but with the caveat that they are valid only in an asymptotic regime.

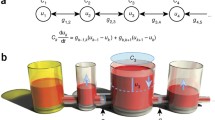

We have discussed filter-based models of synaptic plasticity at length elsewhere (Elliott 2008; Elliott and Lagogiannis 2009, 2012; Elliott 2016b), so we only briefly summarise them here. Synapses are proposed to implement a form of low-pass filtering by integrating plasticity induction signals in an internal filter state. Synapses then filter out high-frequency noise in their induction signals and pass only low-frequency trends, rendering them less susceptible to changes in strength due to fluctuations in their inputs. Potentiating (respectively, depressing) induction signals increment (respectively, decrement) the filter state, with synaptic plasticity being expressed (if possible) only when the filter reaches an upper (respectively, lower) threshold. For symmetric potentiation and depression processes, we may take these thresholds to be \(\pm \Theta \). The filter can occupy the \(2 \Theta -1\) states \(-(\Theta -1), \ldots , +(\Theta -1)\), with the thresholds \(\pm \Theta \) not being occupiable states. Several variant filter models are distinguishable by their different dynamics upon reaching threshold (Elliott 2016b), but we consider only the simplest of them here. In the simplest model, the filter always resets to the zero filter state upon reaching threshold, regardless of its strength state and regardless of the type of plasticity induction signal. This filter generalises to any multistate synapse. If the synapse is saturated at its upper (respectively, lower) strength state and reaches its upper (respectively, lower) filter threshold upon receipt of a potentiating (respectively, depressing) induction signal, the filter resets to zero despite the fact that it cannot increment (respectively, decrement) its strength. The transitions for this filter for the case of \(\Theta = 3\) are illustrated in Fig. 3A. Although for clarity we have shown all permitted transitions between all filter and strength states, we stress that each synapse possesses only a single synaptic filter: the filter is not duplicated for each strength state. Transitions in filter state occur independently of strength state. Nevertheless, to describe transitions in the joint strength and filter state, we require \(2(2 \Theta -1) \times 2(2 \Theta -1)\) matrices, so \(s = 2 \Theta - 1\), although the number of required physical states for filter-based synapses is just \(2 \Theta - 1\) for the filter states themselves, and an additional, binary-valued variable for the bistate strength, so a total of \(2 \Theta \) states.

Strength and internal state transitions for various models of complex synapses. Coloured circles indicate synaptic states, with red (respectively, blue) circles corresponding to strength \(S=-1\) (respectively, \(S=+1\)), and the labelled numbers inside the circles identifying the particular internal states (indexed by I for filter states and i for serial and cascade states). Different internal states of the same strength state are organised in the same vertical column, while different strength states correspond to different columns. Solid (respectively, dashed) lines between states show transitions caused by potentiating (respectively, depressing) induction signals, with arrows indicating the direction of the transition. Loops to and from the same state indicate no transition. Three different models are shown, as labelled, corresponding to a \(\Theta = 3\) filter model (A), and \(s = 5\) serial (B) and cascade (C) synapse models. For the filter and serial synapse models, given the presence of an induction signal of the correct type, the transition probabilities are unity. For the cascade model, the transition probabilities are as discussed in the main text

We state without derivation the result for \(\mu (t)\) in this filter model:

where \(\lfloor \cdot \rfloor \) denotes the floor function. This expression is obtained from Eq. (4.24) in Elliott (2016b) just by multiplying by f and inserting a factor of fg into the exponents. This result is required for obtaining SNR lifetimes. The pairwise correlation functions required for \(\sigma (t)^2\) are computed via numerical matrix methods using the matrices \(\mathbb {M}_\pm \) for the filter model (given in Elliott (2016b) or implied by the transitions in Fig. 3A), and we also obtain the Hebb equilibrium distribution \(\underline{A}_2\) by numerical methods.

To estimate SNR lifetimes in the filter model for the Hopfield protocol, we consider the slowest decaying mode in the first and second terms of Eq. (25). For non-sparse coding, it is usually enough to consider just the slowest mode in the first term, but with sparseness, both terms must be considered for a better approximation. For \(\Theta \) large enough, we then have

Approximating the Hopfield variance by its asymptotic form \(\sigma (\infty )^2\), the single-perceptron SNR memory lifetime for the filter model is then

where in deriving this expression, we have regarded the second term as a correction to the first term, with the first term arising purely from the first term in Eq. (26). To obtain the population SNR memory lifetime \(\tau ^{\mathrm {(fil)}}_{\mathrm {pop}}\), we again just replace \(\sigma _N(\infty )\) by \(\sigma _{\mathscr {N}}(\infty )/\sqrt{g}\) in Eq. (27).

We also consider the serial synapse model (Leibold and Kempter 2008; Rubin and Fusi 2007). In this model, a synapse performs a symmetric, unbiased, one-step random walk on a set of 2s states between reflecting boundaries. The first (respectively, second) group of s states are identified as corresponding to strength \(-1\) (respectively, \(+1\)). For each strength state, there are thus s metastates. If a synapse has strength \(-1\) (respectively, \(+1\)) and experiences a sequence of depressing (respectively, potentiating) induction signals, then it is pushed into progressively higher metastates. However, the synapse can only change strength when in the lowest, \(i=1\) metastate. The transitions are illustrated in Fig. 3b. The transition matrices \(\mathbb {M}_\pm \) are just

where \(\mathrm {diag}_u\) and \(\mathrm {diag}_l\) denote the upper and lower diagonals, respectively. The eigen-decomposition of \(\mathbb {M} = \frac{1}{2}\left( \mathbb {M}_+ {+} \mathbb {M}_- \right) \) is standard (cf. Elliott 2016a, for the eigen-decomposition of the similar matrix \(\mathbb {C}\) there), so we can directly evaluate \(\mu (t) = f \, \underline{\Omega }^{\mathrm {T}} e^{\left( \mathbb {M} - \mathbb {I} \right) f g r t} \, \mathbb {M}_+ \underline{A}_1\), where \(\underline{A}_1^{\mathrm {T}} = \left( \, \underline{1}^{\mathrm {T}} \; | \; \underline{1}^{\mathrm {T}} \right) /(2s)\). We obtain

For the Hebb protocol, we again use numerical matrix methods to obtain \(\underline{A}_2\). To estimate SNR lifetimes for the Hopfield protocol, it is sufficient to consider just the slowest decaying term in Eq. (29), giving

for s large enough, and hence

as the required approximation, with \(\tau ^{\mathrm {ser}}_{\mathrm {pop}}\) obtained in the usual way.

In the cascade model of synaptic plasticity (Fusi et al. 2005), there are also 2s metalevels, s for each bistate strength state, but unlike the serial synapse model, a potentiating (respectively, depressing) induction signal for a synapse with strength \(-1\) (respectively, \(+1\)) in metastate i can with probability \(2^{1-i}\) (or \(2^{2-i}\) for \(i = s\)) cause the synapse to change strength and return to metastate \(i=1\). The same probabilities govern transitions to higher metastates. The transitions are illustrated in Fig. 3C. The cascade model essentially constitutes a tower of stochastic updaters that progressively render the synapse less labile. We have extensively analysed the cascade model elsewhere (Elliott and Lagogiannis 2012) and compared its memory performance to filter-based synapses, which outperform the cascade model in almost all biologically relevant regions of parameter space (Elliott 2016b). It is possible to obtain analytical results for the Laplace transform of the mean dynamics in the cascade model (Elliott and Lagogiannis 2012), but here we use numerical matrix methods. Rubin and Fusi (2007) give a formula for the SNR based on finding a fit to numerical results. The implied formula for the mean is

Taking the asymptotic variance \(\sigma (\infty )^2\) in the Hopfield protocol, we can then use the expression \(\mu _{\mathrm {cas}}(t) / \sigma _N(\infty )\) for the SNR. This still cannot be solved analytically for the SNR lifetime \(\tau ^{\mathrm {cas}}_{\mathrm {snr}}\) (or the population form \(\tau ^{\mathrm {cas}}_{\mathrm {pop}}\)), but we can use it to obtain numerical solutions that can be compared to results obtained from exact matrix methods.

A serial or cascade synapse possesses 2s states, with each set of s metalevels duplicated for each strength. Metalevel i for strength \(-1\) cannot be identified with metalevel i for strength \(+1\) because the transitions induced by plasticity induction signals are in opposite directions. This is in contrast to the filter model, in which the filter transitions are independent of the strength state. Serial and cascade synapses therefore possess fully 2s physical states characterising the state of a synapse, while a filter synapse possesses \(2 \Theta \) physical states and not \(2 (2 \Theta - 1)\) states. Hence, we may directly compare the performance of a filter synapse with threshold \(\Theta \) to a serial or cascade synapse with a total of 2s metastates, or s metastates per strength state.

4 Results

We now turn to a discussion of our results, comparing and contrasting the various models of synaptic plasticity considered above, for the Hebb and Hopfield protocols. For simplicity we consider simulation results only for SU synapses, to confirm and validate our analytical results. Simulations are run according to protocols discussed extensively elsewhere (see, for example, Elliott and Lagogiannis 2012; Elliott 2014), but modified to allow for sparse coding. We first consider single-perceptron memory lifetimes and then population memory lifetimes.

4.1 Single-perceptron memory lifetimes

In Fig. 4, we show results for memory lifetimes for SU synapses with no spontaneous activity, \(\zeta = 0\), comparing the Hopfield and Hebb protocols. We consider both FPT and SNR lifetimes, and for FPT lifetimes, we show results for both the FPE and MIE approaches. Simulation results are also shown, although only for \(f \ge 10^{-3}\): for smaller values it becomes increasingly difficult to obtain enough statistics for decent averaging due to the longer simulation run times. We select an update probability of \(p=1/10\), which is our standard choice of p in earlier work (see, for example, Elliott 2014). From Eq. (24), \(r \tau _{\mathrm {mfpt}}\) and \(r \sigma _{\mathrm {fpt}}\) are expected to scale as \(1/f^2\) for small f for the FPE approach, so we remove this scaling by multiplying by \(f^2\), which in this figure affords greater clarity and resolution.

Convergence of Hebb and Hopfield protocol results for stochastic updater synapses in the limit of sparse coding. Scaled single-perceptron memory lifetimes are shown as a function of sparseness, f. Results in red (respectively, blue) correspond to the Hopfield (respectively, Hebb) protocol. Shaded regions indicate \(f^2 r ( \tau _{\mathrm {mfpt}} \pm \sigma _{\mathrm {fpt}} )\) (with the central solid line showing \(f^2 r \tau _{\mathrm {mfpt}}\)) computed using the FPE approach to FPTs, so that we show the (scaled) MFPT \(\tau _{\mathrm {mfpt}}\) surrounded by the one standard deviation region around it, governed by \(\sigma _{\mathrm {fpt}}\). Short-dashed lines show \(f^2 r \tau _{\mathrm {mfpt}}\) obtained using the exact, MIE approach to FPTs. Circular data points correspond to results from simulation, for \(f \ge 10^{-3}\). Long-dashed lines show results for \(f^2 r \tau _{\mathrm {snr}}\); \(r \tau _{\mathrm {snr}} = 0\) for the Hebb protocol over the whole range of f in panel A. The value of N is indicated in each panel. In all panels, \(p = 1/10\), \(\zeta = 0\) and \(\vartheta = 0\)

Above we showed that the Hopfield and Hebb protocols must coincide for \(f \lessapprox 1/\sqrt{N}\). For the various choices of N used in Fig. 4, we see this convergence of both protocols’ results, becoming indistinguishable for f below \(1/\sqrt{N}\), for all forms of memory lifetime. Focusing first on \(r \tau _{\mathrm {mfpt}}\) from the FPE approach, for smaller N we clearly see that \(f^2 r \tau _{\mathrm {mfpt}}\) asymptotes to a common, N-independent constant as f becomes small; we would see the same behaviour for larger N too, but would need to take smaller values of f than those used in this figure. We also see that \(f^2 r \tau _{\mathrm {mfpt}}\) from the MIE approach tracks that from the FPE approach quite closely, and indeed for intermediate values of f and smaller choices of N, it plateaus, so that \(r \tau _{\mathrm {mfpt}}\) scales as \(1/f^2\) in this regime. However, for \(N=10^3\) and \(f \lessapprox 10^{-3}\), we clearly see the MIE \(f^2 r \tau _{\mathrm {mfpt}}\) turn downwards and approach zero as f decreases. This behaviour is consistent with the derived form of the exact scaling behaviour in Eq. (23), in which \(r \tau _{\mathrm {mfpt}} \propto 1/f\) for small f. We also just see this change for \(N=10^4\) for f close to \(10^{-4}\), but for larger N we would need to take f smaller to see the 1/f scaling of the exact form of \(r \tau _{\mathrm {mfpt}}\). Our simulation results agree with the results from the MIE approach, validating both. Although we do not take f small enough to see the switch to 1/f scaling for \(N=10^3\) in Fig. 4A, we nevertheless do clearly see the start of the down-turn at \(f=10^{-3}\).

For \(f > 1/\sqrt{N}\) in Fig. 4, we see very significant differences between the Hebb and Hopfield protocols. While for the Hopfield protocol \(f^2 r \tau _{\mathrm {mfpt}}\) grows like \(\log _e N\) for fN large enough, this is not the case for the Hebb protocol. For f in the region of unity, \(f^2 r \tau _{\mathrm {mfpt}}\) is roughly speaking independent of N. This means that the dynamics are dominated by the correlations between pairs of synapses’ strength in the Hebb protocol. For \(f=1\), we obtain \(r \tau _{\mathrm {mfpt}} \approx 5.34\) and 5.35 for \(N=10^3\) and \(N=10^6\), respectively, from the FPE approach. (The corresponding values from the MIE approach are 6.64 and 6.79, respectively.) In the regime of f not too far from unity, memory lifetimes in the Hebb protocol are therefore significantly reduced by the synaptic correlations induced by this protocol, where the influence of these correlations cannot be removed by increasing N.

We see that \(r \tau _{\mathrm {mfpt}}\) is robustly positive in Fig. 4 for all choices of N over the whole range of displayed f, and it remains so for small f because of the discussed scaling behaviour. However, looking at the one standard deviation region around \(r \tau _{\mathrm {mfpt}}\), it is clear that in some regimes for f, there can be high variability in FPT memory lifetimes. For the Hopfield protocol, this regime of high variability occurs for small f (where what is “small” f depends on N), while in the Hebb protocol, there is an additional regime for f close to unity. High variability does not mean that memories cannot be stored: \(r \tau _{\mathrm {mfpt}}\) is always robustly positive. Rather, high variability simply means that some memories are stored strongly while others are stored weakly or not at all.

Turning to a consideration of \(r \tau _{\mathrm {snr}}\), we see from Fig. 4 that \(r \tau _{\mathrm {snr}}\) exists (i.e. \(r \tau _{\mathrm {snr}} > 0\)) in precisely those regions of low variability in FPT lifetimes. Indeed, the results for \(r \tau _{\mathrm {snr}}\) track quite closely those for \(r (\tau _{\mathrm {mfpt}} - \sigma _{\mathrm {fpt}})\) over some range of f, and deviate from it elsewhere. We have shown in a non-sparse coding context that FPT and SNR lifetimes for simple synapses essentially coincide (up to additive constants) in the regime in which the distribution of \(h_0\) is tightly concentrated around its supra-threshold mean (Elliott 2017a). For the specific case of \(\vartheta = 0\), as here, we showed that if we can write the initial variance \(\sigma (0)^2\) in the form \(\sigma (0)^2 \approx B_0(N)/2\), then the parameter \(\mu ' \equiv \mu (0) \sqrt{2/B_0(N)}\) must be large enough, which means \(\mu ' \gtrapprox 2\) (Elliott 2017a). We then have that \(\mu ' \approx \mu (0) / \sigma (0) \gtrapprox 2\), which is just a condition on the initial SNR.Footnote 2 Using the pre-averaged form \(\langle B_0(N_{\mathrm {eff}}) \rangle _{N_{\mathrm {eff}}}\) (see Appendix A), this condition reduces to \(4/(p^2 N) \lessapprox f\) in the Hopfield protocol for \(\zeta = 0\). For the Hebb protocol, the limit of large N with p not too close to unity additionally satisfies the requirement on \(\sigma (0)^2\), giving the upper bound \(f \lessapprox p / 2\) for \(\zeta = 0\). In the Hebb protocol, we therefore have the interval \(4/(p^2 N) \lessapprox f \lessapprox p / 2\) for equivalence of SNR and FPT memory lifetimes, for \(\zeta = 0\). (We must have \(N \gtrapprox 8/p^3\) for this interval to exist.) With \(p = 1/10\) in Fig. 4, these conditions are \(400/N \lessapprox f\) and \(400/N \lessapprox f \lessapprox 0.05\) for the Hopfield and Hebb protocols, respectively. For \(400/N \lessapprox f\) in both protocols (except for the Hebb protocol for \(N=10^3\), where the bounding range of f is invalid), we do indeed see that the FPE results for \(f^2 r \tau _{\mathrm {mfpt}}\) and those for \(f^2 r \tau _{\mathrm {snr}}\) run essentially parallel to each other, but that for \(f < 400/N\), \(f^2 r \tau _{\mathrm {snr}}\) peels away from \(f^2 r \tau _{\mathrm {mfpt}}\). The same is true for the Hebb protocol for \(N > 10^3\): as f increases above 0.05, \(f^2 r \tau _{\mathrm {snr}}\) also peels away from \(f^2 r \tau _{\mathrm {mfpt}}\). Thus, these two estimates for the two protocols appear to capture well the region of f for which \(r \tau _{\mathrm {snr}}\) is a reliable indicator of memory longevity. SNR lifetimes are therefore acceptable surrogates for FPT lifetimes when the latter are subject to low variability, but outside these regions SNR lifetimes fail to capture the possibility of memory storage, albeit with high variability. Importantly, the requirement that \(f \gtrapprox 4/(p^2 N)\) in both protocols means that the SNR approach cannot be extended to very small or just small f, because such values violate the asymptotic regime. Essentially, then, the SNR approach cannot probe the very sparse coding regime in either protocol.

For the Hopfield protocol, Eq. (20) is just

With \(\zeta = 0\), we require \(f>1/(p^2 N)\) for \(r \tau _{\mathrm {snr}} > 0\), and we see precisely these threshold values for the different choices of N in Fig. 4. Alternatively, we require \(N>1/(fp^2)\) for memories to be stored according to the SNR criterion. However, these conditions do not carry over to FPT memory lifetimes: we need neither a minimum N nor a minimum f for \(r \tau _{\mathrm {mfpt}} > 0\), because it is always positive. This failure of SNR conditions to carry over to the FPT case also applies to any optimality conditions derived from \(r \tau _{\mathrm {snr}}\). From Eq. (33) with \(\zeta = 0\), we may find that value of f, \(f^{\mathrm {opt}}\), that maximises \(r \tau _{\mathrm {snr}}\), giving rise to \(r \tau _{\mathrm {snr}}^{\mathrm {opt}}\), with the result that \(f^{\mathrm {opt}} = \sqrt{e}/(p^2 N)\). The same value essentially applies to the Hebb protocol, albeit with complicated corrections. However, for the validity of the SNR results, both protocols require \(f \gtrapprox 4/(p^2 N)\). If the SNR optimality condition is valid, then it must satisfy \(f^{\mathrm {opt}} = \sqrt{e}/(p^2 N) \gtrapprox 4/(p^2 N)\), or \(\sqrt{e} \gtrapprox 4\). This is clearly false, and hence the SNR optimality condition for f is spurious, because at \(f = f^{\mathrm {opt}}\), the asymptotic validity condition is violated. In fact, we may essentially take f as small as we like and \(r \tau _{\mathrm {mfpt}}\) will continue to grow, albeit with increasing variability in the FPT lifetimes. Thus, although we will shortly consider optimality conditions for SNR memory lifetimes with complex synapses, these conditions must be viewed with extreme caution.

Figure 4 considers only the case of exactly zero spontaneous activity, \(\zeta = 0\). In Fig. 5, we examine the impact of spontaneous activity on SU memory lifetimes. We show only the case of \(N = 10^5\) to avoid unnecessary clutter, but the results are qualitatively similar for other choices of N. In the Hopfield protocol, \(\zeta \) appears only through a quadratic term in \(B(h')\) or \(\sigma (t)^2\), while in the Hebb protocol, \(\zeta \) also appears through a linear term. This difference makes the Hebb protocol much more sensitive to spontaneous activity than the Hopfield protocol, and we see this explicitly in Fig. 5. In the Hopfield protocol, the asymptotic variance takes the form \(\sigma (\infty )^2 = [ f + (1-f) \zeta ^2 ]/N\), so \(\zeta \) exerts a significant influence on memory lifetimes only for \(f \lessapprox \zeta ^2\). We therefore only start to see a divergence of memory lifetimes from those for \(\zeta = 0\) at around \(f \approx \zeta ^2\), and this is confirmed in the figure. However, as f is taken small, the dependence of \(r \tau _{\mathrm {mfpt}}\) (from the FPE) on \(\zeta \) is lost (just as its dependence on N is lost), so that for very small f, \(\zeta \) does not affect (FPE) FPT lifetimes, neither their means nor their variances. This is because for small f, the scaling results in Eq. (24) depend only on the A and not the B jump moment, so they depend only on drift and not diffusion. However, \(\zeta \) appears only through the diffusion term. In contrast to the Hopfield protocol, even a choice of \(\zeta = 0.01\) induces a large reduction in memory lifetimes in the Hebb protocol, at least away from the small f regime. For small f, the Hebb and Hopfield protocols coincide, so we observe the same loss of dependence on \(\zeta \) in (FPE) FPT lifetimes in the Hebb protocol. However, away from the small f regime, the linear term in \(\zeta \) in B or \(\sigma (t)^2\) significantly impacts memory lifetimes.

Impact of spontaneous activity on stochastic updater single-perceptron memory lifetimes. Results are shown for \(f^2 r \tau _{\mathrm {mfpt}}\) (from the FPE approach) and \(f^2 r \tau _{\mathrm {snr}}\) for both the Hopfield and Hebb protocols, as indicated in the different panels. Different line styles correspond to different levels of spontaneous activity, \(\zeta \), as indicated in the common legend in panel D. Some lines style are absent in panel D because there is no corresponding \(r \tau _{\mathrm {snr}} > 0\). In all panels we take \(N = 10^5\), with \(p = 1/10\) and \(\vartheta = 0\) in all cases

Examining Eq. (33) for the Hopfield protocol, for \(\zeta = 0\), we have just \(f^1\) in the logarithm, while for \(\zeta = 1\), we have \(f^2\). Roughly speaking, for intermediate values of \(\zeta \), the effective power of f switches rapidly from one to two in the vicinity of \(f = \zeta ^2\). This switching can be seen clearly in Fig. 5, where as f decreases, \(f^2 r \tau _{\mathrm {snr}}\) (and also \(f^2 r \tau _{\mathrm {mfpt}}\)) tracks closely the form for \(\zeta = 0\), until it rapidly peels away, following a different power. Although it is still clearly the case that optimality conditions obtained from \(r \tau _{\mathrm {snr}}\) are invalid, it is nevertheless worth examining \(f^{\mathrm {opt}}\). For \(\zeta = 0\), we again obtain \(f^{\mathrm {opt}} = \sqrt{e}/(p^2 N)\), but for \(\zeta = 1\), we instead obtain \(f^{\mathrm {opt}} = \sqrt{e}/(p^2 N)^{1/2}\), so that the N-dependence changes. The corresponding optimal lifetimes are \(r \tau _{\mathrm {snr}}^{\mathrm {opt}} = p^3 N^2 / (4 e)\) for \(\zeta = 0\) and \(r \tau _{\mathrm {snr}}^{\mathrm {opt}} = p N / (2 e)\) for \(\zeta = 1\). Of course, we see explicitly in Fig. 5 that these SNR-derived optimal values of f and thus maximum possible SNR lifetimes are invalid, but SNR lifetimes do at least indicate when FPT lifetimes are subject to lower variability and when they are subject to higher variability.

Considering \(\zeta = 1\) is of course biologically meaningless, as then there is no distinction between spontaneous and evoked electrical activity levels. However, taking either \(\zeta = 0\) or \(\zeta = 1\) allows explicit optimality results to be obtained for these two cases, while such results are not available for intermediate values of \(\zeta \). As just indicated, empirically we observe a very rapid switching in dynamics in the vicinity of \(\zeta = \sqrt{f}\), with the explicit results for \(\zeta =0\) and \(\zeta =1\) therefore indicating the general behaviour prior to and after, respectively, this switching. When we give results for \(\zeta = 1\), we therefore do so with this understanding: that the limit is biologically meaningless, but that it nevertheless indicates the general behaviour for \(\zeta \) in excess of around \(\sqrt{f}\).

We now turn to complex models of synaptic plasticity, considering only SNR lifetimes. In Figs. 6 and 7, we plot SNR lifetimes against sparseness, f, for the three complex models discussed above, for both zero and nonzero spontaneous firing rates, and for the Hopfield (Fig. 6) and Hebb (Fig. 7) protocols. All results are obtained by numerical matrix methods to solve the SNR equation \(\mu (\tau _{\mathrm {snr}}) = \sigma (\tau _{\mathrm {snr}})\), where the standard deviation \(\sigma (t)\) is computed fully rather than via just its asymptotic form \(\sigma (\infty )\).

Spontaneous activity reduces single-perceptron memory lifetimes and limits sparseness in complex synapse models: Hopfield protocol. Single-perceptron SNR memory lifetimes are shown for different complex models of synaptic plasticity under the Hopfield protocol, as a function of sparseness, f. Each panel shows results for the indicated model and choice of spontaneous activity, either \(\zeta = 0\) or \(\zeta = 0.1\). Results are shown for \(\Theta \) or s ranging from 2 to 12 in increments of 2, with the particular choice identified by the line colour described by the common legend in panel B. In all cases, \(N = 10^5\)

Spontaneous activity reduces single-perceptron memory lifetimes and limits sparseness in complex synapse models: Hebb protocol. The format of this figure is identical to Fig. 6, except that it shows results for the Hebb protocol, and in the right-hand panels we use a smaller value \(\zeta = 0.01\). Some lines of specific colour are absent in some graphs because there is no corresponding \(r \tau _{\mathrm {snr}} > 0\). In all cases, \(N = 10^5\)

For the Hopfield protocol in Fig. 6 we see in all cases and for all choices of parameters an onset of SNR lifetimes for a minimum, threshold value of f, the rapid attainment of a peak or optimal value of \(r \tau _{\mathrm {snr}}\), followed by a steady fall in lifetimes as f increases further. For all complex models, this onset of SNR lifetimes occurs for increasingly large values of f as \(\Theta \) or s increases. At least for the parameter ranges in this figure, in the filter and serial models, for a given choice of f, increasing \(\Theta \) or s increases \(r \tau _{\mathrm {snr}}\), although as the number of internal states continues to increase, ultimately \(r \tau _{\mathrm {snr}}\) will start to fall. In the case of the cascade model, however, the dependence of \(r \tau _{\mathrm {snr}}\) on s for fixed f is not as simple as for the other complex models. We note that for all models in this figure, the optimal values of \(r \tau _{\mathrm {snr}}\) decrease for increasing \(\Theta \) or s, at least for \(\zeta = 0\). However, when we increase the spontaneous activity to \(\zeta = 0.1\), the optimal values lose most of their dependence on \(\Theta \) or s in the filter and serial models, although not in the cascade model. This loss of dependence on \(\Theta \) or s is strongly N- and \(\zeta \)-dependent. For \(N=10^3\), we must take \(\zeta \) close to unity before this loss of dependence is noticeable, while for \(N=10^6\), even \(\zeta =10^{-3/2} \approx 0.0316\) is sufficient.

For the Hebb protocol, in Fig. 7, for smaller f we obtain essentially the same results as for the Hopfield protocol because these two protocols must coincide for \(f \lessapprox 1/\sqrt{N}\), regardless of the model of synaptic plasticity. However, for larger f, the synaptic correlation terms induced by the Hebb protocol again significantly impact SNR memory lifetimes, with the impact being greater for larger \(\Theta \) or s in the filter and serial models. Thus, as with SU synapses under the Hebb protocol, SNR lifetimes exist only in some interval of f (below \(f=1\)), with this interval shrinking and disappearing as the number of internal states increases (or as p decreases for SU synapses). These dynamics dramatically limit the number of internal states that give rise to positive SNR lifetimes. Nevertheless, as \(\Theta \) or s increases, \(r \tau _{\mathrm {snr}}\) in general increases, at least until the permissible range of f becomes very small and then disappears entirely. For the cascade model, however, the upper limit on f is roughly speaking independent of s, but we also see that in general, as s increases, \(r \tau _{\mathrm {snr}}\) decreases for fixed f. This relative insensitivity of the upper limit of the permissible range of f to s in the cascade model occurs because the cascade model has different metastates with different update probabilities, with some synapses residing in the lower metastates and so having larger update probabilities than those residing in higher metastates.

In the presence of spontaneous activity, we see a dramatic change in the memory lifetimes. Indeed, such is the sensitivity of the Hebb protocol to \(\zeta \), especially for complex synapse models, that in contrast to Fig. 6 for the Hopfield protocol, for which we took \(\zeta = 0.1\), in Fig. 7 we take \(\zeta = 0.01\). Even with just 1% spontaneous activity, the filter and serial models’ number of internal states becomes severely restricted, in terms of giving rise to positive SNR lifetimes. The cascade model under the Hebb protocol is not quite so sensitive, again because of its different metastates, but a 10% level of spontaneous activity would still dramatically restrict the permissible ranges of f and s, compared to the Hopfield protocol.

We quantify these observations by explicitly considering the optimal choices of the parameters f and either \(\Theta \) or s, so \(f^{\mathrm {opt}}\) and either \(\Theta ^{\mathrm {opt}}\) or \(s^{\mathrm {opt}}\), that maximise \(r \tau _{\mathrm {snr}}\), giving rise to \(r \tau _{\mathrm {snr}}^{\mathrm {opt}}\). In Figs. 8 and 9, we plot \(f^{\mathrm {opt}}\) and \(r \tau _{\mathrm {snr}}^{\mathrm {opt}}\) against \(\Theta \) or s, for different levels of spontaneous activity, \(\zeta \), for the particular choice of \(N = 10^5\). Results are obtained both by numerical matrix methods and by using the approximations for \(\mu (t)\) and \(r \tau _{\mathrm {snr}}\) given in Sect. 3.2. For the latter, we maximise \(r \tau _{\mathrm {snr}}\) as a function of f for fixed \(\Theta \) or s.

Optimal sparseness in complex synapse models for single perceptrons in the Hopfield protocol. The left-hand panels (A, C, E) show the optimal single-perceptron memory lifetimes \(r \tau _{\mathrm {snr}}^{\mathrm {opt}}\) obtained at the corresponding optimal levels of sparseness \(f^{\mathrm {opt}}\) shown in the right-hand panels (B, D, F), for the indicated complex models. Lines show numerical matrix results while the corresponding data points show approximate analytical results obtained as discussed in the main text. Results are shown for different values of \(\zeta \), with identifying line styles corresponding to those in Fig. 5. We have set \(N = 10^5\) in all panels

Optimal sparseness in complex synapse models for single perceptrons in the Hebb protocol. The format of this figure is essentially identical to Fig. 8, except that it shows results for the Hebb protocol. Approximate analytical results are not available for the Hebb protocol and so are not present. The termination of a line at a threshold value of \(\Theta \) or s indicates that above that value, no choice of f generates \(r \tau _{\mathrm {snr}} > 0\). We have set \(N = 10^5\) in all panels