Abstract

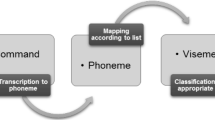

Appearance-based visual speech recognition using only video signals is presented. The proposed technique is based on the use of directional motion history images (DMHIs), which is an extension of the popular optical-flow method for object tracking. Zernike moments of each DMHI are computed in order to perform the classification. The technique incorporates automatic temporal segmentation of isolated utterances. The segmentation of isolated utterance is achieved using pair-wise pixel comparison. Support vector machine is used for classification and the results are based on leave-one-out paradigm. Experimental results show that the proposed technique achieves better performance in visemes recognition than others reported in literature. The benefit of this proposed visual speech recognition method is that it is suitable for real-time applications due to quick motion tracking system and the fast classification method employed. It has applications in command and control using lip movement to text conversion and can be used in noisy environment and also for assisting speech impaired persons.

Similar content being viewed by others

Notes

The experimental procedure was approved by the Human Experiments Ethics Committee of RMIT University (ASEHAPP 12-10).

References

Xiaodong, C., Alwan, A.: Noise robust speech recognition using feature compensation based on polynomial regression of utterance SNR. IEEE Trans. Speech Audio Process. 13(6), 1161–1172 (2005)

Haitian, X., Zheng-Hua, T., Dalsgaard, P., Lindberg, B.: Robust speech recognition by nonlocal means denoising processing. IEEE Signal Process. Lett. 15, 701–704 (2008)

Zheng-Hua, T., Lindberg, B.: Low-complexity variable frame rate analysis for speech recognition and voice activity detection. IEEE J. Sel. Top. Signal Process. 4(5), 798–807 (2010)

Petajan, E.: Automatic lipreading to enhance speech recognition. In: IEEE Global Telecommunications Conference, Atlanta, GA, USA, pp. 265–272. IEEE Computer Society Press, Los Alamitos (1984)

Arjunan, S.P., Kumar, D.K., Yau, W.C., Weghorn, H.: Unspoken vowel recognition using facial electromyogram. In: Engineering in Medicine and Biology Society. EMBS’06. 28th Annual International Conference of the IEEE, Aug. 30 2006–Sept. 3 2006, pp. 2191–2194 (2006)

Schultz, T., Wand, M.: Modeling coarticulation in EMG-based continuous speech recognition. Speech Commun. 52(4), 341–353 (2010). doi:10.1016/j.specom.2009.12.002

Medizinelektronik, C.: (2008). http://www.articulograph.de/

Soquet, A., Saerens, M., Lecuit, V.: Complementary cues for speech recognition, pp. 1645–1648 (1999)

Potamianos, G., Neti, C., Gravier, G., Garg, A., Senior, A.W.: Recent advances in the automatic recognition of audiovisual speech. Proc. IEEE 91(9), 1306–1326 (2003)

Yau, W.C., Kumar, D.K., Arjunan, S.P.: Visual speech recognition using dynamic features and support vector machines. Int. J. Image Graph. 8(3), 419–437 (2008)

Xiang, T., Gong, S.: Beyond tracking: modelling activity and understanding behaviour. Int. J. Comput. Vis. 67(1), 21–51 (2006)

Meng, H., Pears, N., Bailey, C.: Motion information combination for fast human action recognition. In: Proc. Computer Vision Theory and Applications, Spain, pp. 21–28 (2007)

Ma, J., Cole, R., Pellom, B., Ward, W., Wise, B.: Accurate automatic visible speech synthesis of arbitrary 3D models based on concatenation of diviseme motion capture data. Comput. Animat. Virtual Worlds 15(5), 485–500 (2004)

Govokhina, O., Bailly, G., Breton, G.: Learning Optimal Audiovisual Phasing for a HMM-based Control Model for Facial Animation (2007)

Musti, U., Toutios, A., Ouni, S., Colotte, V., Wrobel-Dautcourt, B., Berger, M.O.: HMM-based automatic visual speech segmentation using facial data. In: Proc. of the Interspeech 2010, pp. 1401–1404 (2010)

Koprinska, I., Carrato, S.: Temporal video segmentation: a survey. Signal Process. Image Commun. 16(5), 477–500 (2001)

Da Silveira, L.G., Facon, J., Borges, D.L.: Visual speech recognition: a solution from feature extraction to words classification. In: XVI Brazilian Symposium on Computer Graphics and Image Processing SIBGRAPI 2003, pp. 399–405 (2003)

Luettin, J., Thacker, N.A., Beet, S.W.: Active shape models for visual speech feature extraction. NATO ASI Ser. Comput. Syst. Sci. 150, 383–390 (1996)

Otani, K., Hasegawa, T.: The image input microphone—a new nonacoustic speech communication system by media conversion from oral motion images to speech. IEEE J. Sel. Areas Commun. 13(1), 42–48 (1995)

Hueber, T., Chollet, G., Denby, B., Stone, M., Zouari, L.: Ouisper: corpus based synthesis driven by articulatory data. In: 16th International Congress of Phonetic Sciences, pp. 2193–2196 (2007)

Yuhas, B.P., Goldstein, M.H. Jr., Sejnowski, T.J.: Integration of acoustic and visual speech signals using neural networks. IEEE Commun. Mag. 27(11), 65–71 (1989)

Bregler, C., Konig, Y.: Eigenlips for robust speech recognition. In: IEEE International Conference on Acoustics, Speech, and Signal Processing, ICASSP-94, 19–22 Apr 1994, pp. 669–672 (1994)

Silsbee, P.L., Bovik, A.C.: Computer lipreading for improved accuracy in automatic speech recognition. IEEE Trans. Speech Audio Process. 4(5), 337–351 (1996)

Potamianos, G., Luettin, J., Neti, C.: Hierarchical discriminant features for audio-visual LVCSR. In: Proceedings of IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP’01), vol. 161, pp. 165–168 (2001)

Zhao, G., Barnard, M., Pietikainen, M.: Lipreading with local spatiotemporal descriptors. IEEE Trans. Multimed. 11(7), 1254–1265 (2009)

Yuille, A.L., Hallinan, P.W., Cohen, D.S.: Feature extraction from faces using deformable templates. Int. J. Comput. Vis. 8(2), 99–111 (1992)

Kass, M., Witkin, A., Terzopoulos, D.: Snakes: active contour models. Int. J. Comput. Vis. 1(4), 321–331 (1988)

Papandreou, G., Katsamanis, A., Pitsikalis, V., Maragos, P.: Adaptive multimodal fusion by uncertainty compensation with application to audiovisual speech recognition. IEEE Trans. Audio Speech Lang. Process. 17(3), 423–435 (2009)

Matthews, I., Cootes, T., Bangham, J., Cox, S., Harvey, R.: Extraction of visual features for lipreading. IEEE Trans. Pattern Anal. Mach. Intell. 24(2), 198–213 (2002)

Goldschen, A.J., Garcia, O.N., Petajan, E.: Continuous optical automatic speech recognition by lipreading. In: Conference Record of the Twenty-Eighth Asilomar Conference on Signals, Systems and Computers, 31 Oct–2 Nov, pp. 572–577 (1994)

Mase, K., Pentland, A.: Automatic lipreading by optical-flow analysis. Syst. Comput. Jpn. 22(6), 67–76 (1991)

Iwano, K., Yoshinaga, T., Tamura, S., Furui, S.: Audio-visual speech recognition using lip information extracted from side-face images. EURASIP J. Audio Speech Music Process. 2007(1), 4 (2007)

Rajavel, R., Sathidevi, P.S.: A novel algorithm for acoustic and visual classifiers decision fusion in audio-visual speech recognition system. Signal Process. 4(1), 23–37 (2010)

Venkatesh Babu, R., Ramakrishnan, K.: Recognition of human actions using motion history information extracted from the compressed video. Image Vis. Comput. 22(8), 597–607 (2004)

Weinland, D., Ronfard, R., Boyer, E.: Free viewpoint action recognition using motion history volumes. Comput. Vis. Image Underst. 104(2–3), 249–257 (2006)

Valstar, M., Patras, I., Pantic, M.: Facial action unit recognition using temporal templates. In: 13th IEEE International Workshop on Robot and Human Interactive Communication, ROMAN, 20–22 Sept 2004, pp. 253–258 (2004)

Ahad, M.: Analysis of motion self-occlusion problem due to motion overwriting for human activity recognition. J. Multimed. 5(1), 36–46 (2010)

Young, S., Evermann, G., Kershaw, D., Moore, G., Odell, J., Ollason, D., Valtchev, V., Woodland, P.: The HTK Book, vol. 2. Entropic Cambridge Research Laboratory, Cambridge (1997)

Su, Q., Silsbee, P.L.: Robust audiovisual integration using semicontinuous hidden Markov models. In: Proc. International Conference on Spoken Language Processing, Philadelphia, PA, pp. 42–45 (2002)

Krone, G., Talk, B., Wichert, A., Palm, G.: Neural architectures for sensor fusion in speech recognition. In: Proc. European Tutorial Workshop on Audio-Visual Speech Processing, Rhodes, Greece, pp. 57–60 (1997)

Yau, W., Kumar, D., Arjunan, S.: Voiceless speech recognition using dynamic visual speech features. In: Proc. of HCSNet Workshop on the Use of Vision in HCI, Canberra, Australia, pp. 93–101. Australian Computer Society, Inc., Canberra (2006)

Duchnowski, P., Meier, U., Waibel, A.: See me, hear me: integrating automatic speech recognition and lip-reading. In: Proceedings of the International Conference on Spoken Language and Processing, Yokohama, Japan, pp. 547–550. Citeseer, Princeton (1994)

Heckmann, M., Berthommier, F., Kroschel, K.: A hybrid ANN/HMM audio-visual speech recognition system. In: International Conference on Auditory-Visual Speech Processing, Aalborg, Denmark, pp. 189–194. Citeseer, Princeton (2001)

Yau, W., Kant Kumar, D., Chinnadurai, T.: Lip-reading technique using spatio-temporal templates and support vector machines. In: Progress in Pattern Recognition, Image Analysis and Applications, pp. 610–617 (2008)

Ganapathiraju, A., Hamaker, J., Picone, J.: Hybrid SVM/HMM architectures for speech recognition. In: International Conference on Spoken Language Processing, pp. 504–507. Citeseer, Princeton (2000)

Gordan, M., Kotropoulos, C., Pitas, I.: A support vector machine-based dynamic network for visual speech recognition applications. EURASIP J. Appl. Signal Process. 2002(1), 1248–1259 (2002)

Sun, D., Roth, S., Lewis, J., Black, M.: Learning optical flow. In: Forsyth, D., Torr, P., Zisserman, A. (eds.) Computer Vision—ECCV 2008. Lecture Notes in Computer Science, vol. 5304, pp. 83–97. Springer, Berlin (2008)

Otsu, N.: A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 9(1), 62–66 (1979)

Khotanzad, A., Hong, Y.H.: Invariant image recognition by Zernike moments. IEEE Trans. Pattern Anal. Mach. Intell. 12(5), 489–497 (1990)

Teh, C.H., Chin, R.T.: On image analysis by the methods of moments. IEEE Trans. Pattern Anal. Mach. Intell. 10(4), 496–513 (1988)

Chang, C.C., Lin, C.J.: LIBSVM: a Library for Support Vector Machines (2001)

Yau, W.C.: Video Analysis of Mouth Movement Using Motion Templates for Computer-Based Lip-Reading. RMIT University, Melbourne (2008)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Shaikh, A.A., Kumar, D.K. & Gubbi, J. Automatic visual speech segmentation and recognition using directional motion history images and Zernike moments. Vis Comput 29, 969–982 (2013). https://doi.org/10.1007/s00371-012-0751-7

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00371-012-0751-7