Abstract

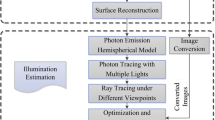

Illumination consistency is important for photorealistic rendering in mixed reality. However, the illumination conditions of natural environments are usually difficult to acquire. In this paper, we propose a novel method for estimating the lighting conditions of a static outdoor scene without knowing its geometry, material, or texture. Our method separates the shading effects due to sunlight from those due to skylight by learning from a set of sample images captured with the same sun position. A fixed illumination map of the scene is then derived for each of the two sources, reflecting the scene geometry, surface material properties, and shadowing effects. These two maps, one for sunlight and one for skylight, are referred to as the basis images of the scene for the specified sun position. We show that the illumination of the same scene under different weather conditions can be approximated as a linear combination of the two basis images. We further extend this model to estimate the lighting conditions of scene images captured under deviated sun positions, enabling virtual objects to be seamlessly integrated into images of the scene at any time. Our approach can be applied to online video processing and handles both cloudy and sunny conditions. Experimental results verify the effectiveness of our approach.
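The linear-combination model can be illustrated with a minimal sketch. It assumes the two basis images are already available as flat lists of pixel intensities and fits the two weights by ordinary least squares. The function name `estimate_light_coefficients`, the variable names, and the synthetic pixel values are all hypothetical; the abstract does not specify the fitting procedure actually used in the paper, so this should not be read as the authors' algorithm.

```python
def estimate_light_coefficients(image, sun_basis, sky_basis):
    """Fit image ~ k_sun * sun_basis + k_sky * sky_basis by least squares.

    All arguments are flat sequences of pixel intensities of equal length.
    This is an illustrative assumption, not the procedure from the paper.
    """
    if not (len(image) == len(sun_basis) == len(sky_basis)):
        raise ValueError("all images must have the same number of pixels")

    # Entries of the 2x2 normal equations (A^T A) k = A^T y,
    # where the columns of A are the two basis images.
    ss = sum(s * s for s in sun_basis)
    kk = sum(k * k for k in sky_basis)
    sk = sum(s * k for s, k in zip(sun_basis, sky_basis))
    sy = sum(s * y for s, y in zip(sun_basis, image))
    ky = sum(k * y for k, y in zip(sky_basis, image))

    det = ss * kk - sk * sk
    if abs(det) < 1e-12:
        raise ValueError("basis images are (nearly) proportional")

    # Closed-form solution of the 2x2 system (Cramer's rule).
    k_sun = (sy * kk - ky * sk) / det
    k_sky = (ky * ss - sy * sk) / det
    return k_sun, k_sky


# Synthetic example: pixels with zero sunlight response stand in for shadow.
sun_basis = [0.9, 0.0, 0.6, 0.0, 0.3, 0.8]
sky_basis = [0.2, 0.3, 0.25, 0.1, 0.3, 0.2]
observed = [0.7 * s + 0.4 * k for s, k in zip(sun_basis, sky_basis)]

k_sun, k_sky = estimate_light_coefficients(observed, sun_basis, sky_basis)
print(k_sun, k_sky)
```

In this sketch a small `k_sun` relative to `k_sky` would correspond to an overcast frame, and a large one to direct sun. A real pipeline would also need to handle noise, saturation, and radiometric calibration, none of which is modelled here.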

Cite this article

Liu, Y., Qin, X., Xu, S. et al. Light source estimation of outdoor scenes for mixed reality. Vis Comput 25, 637–646 (2009). https://doi.org/10.1007/s00371-009-0342-4

DOI: https://doi.org/10.1007/s00371-009-0342-4