Abstract

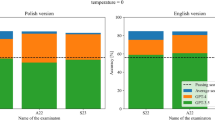

We compared different LLMs, notably chatGPT, GPT4, and Google Bard and we tested whether their performance differs in subspeciality domains, in executing examinations from four different courses of the European Society of Neuroradiology (ESNR) notably anatomy/embryology, neuro-oncology, head and neck and pediatrics. Written exams of ESNR were used as input data, related to anatomy/embryology (30 questions), neuro-oncology (50 questions), head and neck (50 questions), and pediatrics (50 questions). All exams together, and each exam separately were introduced to the three LLMs: chatGPT 3.5, GPT4, and Google Bard. Statistical analyses included a group-wise Friedman test followed by a pair-wise Wilcoxon test with multiple comparison corrections. Overall, there was a significant difference between the 3 LLMs (p < 0.0001), with GPT4 having the highest accuracy (70%), followed by chatGPT 3.5 (54%) and Google Bard (36%). The pair-wise comparison showed significant differences between chatGPT vs GPT 4 (p < 0.0001), chatGPT vs Bard (p < 0. 0023), and GPT4 vs Bard (p < 0.0001). Analyses per subspecialty showed the highest difference between the best LLM (GPT4, 70%) versus the worst LLM (Google Bard, 24%) in the head and neck exam, while the difference was least pronounced in neuro-oncology (GPT4, 62% vs Google Bard, 48%). We observed significant differences in the performance of the three different LLMs in the running of official exams organized by ESNR. Overall GPT 4 performed best, and Google Bard performed worst. This difference varied depending on subspeciality and was most pronounced in head and neck subspeciality.

Similar content being viewed by others

References

Haver HL, Ambinder EB, Bahl M, Oluyemi ET, Jeudy J, Yi PH (2023) Appropriateness of breast cancer prevention and screening recommendations provided by ChatGPT. Radiology 307(4):e230424. https://doi.org/10.1148/radiol.230424

Shen Y, Heacock L, Elias J et al (2023) ChatGPT and other large language models are double-edged swords. Radiology 307(2):e230163. https://doi.org/10.1148/radiol.230163

Alkaissi H, McFarlane SI (2023) Artificial hallucinations in ChatGPT: implications in scientific writing. Cureus. Published online February 2023. https://doi.org/10.7759/cureus.35179

Ismail A, Ghorashi NS, Javan R (2023) New horizons: the potential role of OpenAI’s ChatGPT in clinical radiology. J Am Coll Radiol 20(7):696–698. https://doi.org/10.1016/j.jacr.2023.02.025

Kitamura FC (2023) ChatGPT is shaping the future of medical writing but still requires human judgment. Radiology 307(2):e230171. https://doi.org/10.1148/radiol.230171

Kung TH, Cheatham M, Medenilla A et al (2023) Performance of ChatGPT on USMLE: potential for AI-assisted medical education using large language models. PLOS Digit Health 2(2):e0000198. https://doi.org/10.1371/journal.pdig.0000198

Bhayana R, Krishna S, Bleakney RR (2023) Performance of ChatGPT on a radiology board-style examination: insights into current strengths and limitations. Radiology 307(5):e230582. https://doi.org/10.1148/radiol.230582

Ueda D, Mitsuyama Y, Takita H et al (2023) ChatGPT’s diagnostic performance from patient history and imaging findings on the diagnosis please quizzes. Radiology 308(1). https://doi.org/10.1148/radiol.231040

Biswas S (2023) ChatGPT and the future of medical writing. Radiology 307(2):e223312. https://doi.org/10.1148/radiol.223312

Stokel-Walker C (2023) ChatGPT listed as author on research papers: many scientists disapprove. Nature 613(7945):620–621. https://doi.org/10.1038/d41586-023-00107-z

Lourenco AP, Slanetz PJ, Baird GL (2023) Rise of ChatGPT: it may be time to reassess how we teach and test radiology residents. Radiology 307(5):e231053. https://doi.org/10.1148/radiol.231053

Blüthgen C (2023) Does GPT4 dream of counting electric nodules? Eur Radiol. Published online April 2023. https://doi.org/10.1007/s00330-023-09671-4

OpenAI. Better language models and their implications. Httpsopenaicomblogbetter- Lang-Models. https://openai.com/blog/better-language-models/

Patil NS, Huang RS, Van Der Pol CB, Larocque N (2023) Comparative performance of ChatGPT and Bard in a text-based radiology knowledge assessment. Can Assoc Radiol J. Published online August 14, 2023:08465371231193716. https://doi.org/10.1177/08465371231193716

OpenAI (2023) GPT-4 technical report. Published online March 27, 2023. http://arxiv.org/abs/2303.08774. Accessed 23 Aug 2023

Health TLD (2023) ChatGPT: friend or foe? Lancet Digit Health 5(3):e102. https://doi.org/10.1016/S2589-7500(23)00023-7

Ayers JW, Poliak A, Dredze M et al (2023) Comparing physician and artificial intelligence chatbot responses to patient questions posted to a public social media forum. JAMA Intern Med 183(6):589. https://doi.org/10.1001/jamainternmed.2023.1838

Doo FX, Cook TS, Siegel EL et al (2023) Exploring the clinical translation of generative models like ChatGPT: promise and pitfalls in radiology, from patients to population health. J Am Coll Radiol. Published online July 2023:S1546144023005161. https://doi.org/10.1016/j.jacr.2023.07.007

Acknowledgements

We would like to thank Sara Fullone of European Society of Neuroradiology Central Office for the support provided.

Funding

No funding.

Author information

Authors and Affiliations

Contributions

Gennaro D’Anna: Concept, analysis, writing.

Sofie Van Cauter: Writing, Revision.

Majda Thurnher: data, revision.

Johan Van Goethem: revision.

Sven Haller: concept, analysis, writing, revision.

Corresponding author

Ethics declarations

Conflicts of interest/Competing interests

- G. D’Anna: Head of ESNR Social Media Committee; The Neuroradiology Journal Associate Editor.

- S. Van Cauter: nothing to disclose.

- M Thurnher: Chair of EBNR Management Board.

- J. Van Goethem: Neuroradiology Editor in Chief.

- S. Haller: Imaging SAG EPAD; Consultant Spineart; Consultant WYSS; Consultant HCK; Radiology deputy editor; Neuroradiology section editor advanced imaging.

Ethics approval

Not applicable.

Informed consent

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

D’Anna, G., Van Cauter, S., Thurnher, M. et al. Can large language models pass official high-grade exams of the European Society of Neuroradiology courses? A direct comparison between OpenAI chatGPT 3.5, OpenAI GPT4 and Google Bard. Neuroradiology (2024). https://doi.org/10.1007/s00234-024-03371-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00234-024-03371-6