Abstract

Peripersonal space (PPS) arises from multisensory interaction between different sensory modalities and can be modified by experience. In this article, we investigated whether auditory training performed within the peripersonal space can modify the PPS around the head in sighted participants. The auditory training was based on echolocation. We measured participants’ reaction times (RTs) to a tactile stimulation on the neck while task-irrelevant looming auditory stimuli were presented. Sounds more strongly affect tactile processing when they are located within a limited distance from the body. We measured this spatially dependent audio-tactile interaction as a proxy of PPS representation before and after an echolocation training. We found a significant speeding of tactile RTs after echolocation, specifically when sounds were around the location where the echolocation task was performed. This effect could not be attributed to task repetition or to a shift of spatial attention, as no changes in PPS were found in two control groups of participants, who performed the PPS task after either a break or a temporal auditory task (with stimuli located at the same position as in the echolocation task). These findings show that echolocation affects multisensory processing within the PPS representation, likely to better represent the space where external stimuli have to be localized.


Introduction

Space is a construction of our brain and mind. Several lines of evidence show that the brain continuously generates multiple neural representations of coexisting spaces, depending on incoming sensory inputs, action/intention, and reference frames (McNaughton and Nadel 1990; Holmes and Spence 2004; Pasqualotto et al. 2013). One spatial representation that is currently attracting renewed interest is the peripersonal space (PPS), i.e., the space immediately surrounding the body (Rizzolatti et al. 1997; Ladavas and Serino 2008; Cléry et al. 2015; Dijkerman and Farnè 2015; Serino 2016). Studies show that PPS is represented through the integration of somatosensory stimuli from the body with visual (Làdavas et al. 1998; Macaluso and Maravita 2010) or auditory stimuli (Occelli et al. 2011) from the environment, when the latter are presented within a limited distance from the body. This integration defines the extent of the PPS (Bassolino et al. 2015; De Vignemont and Iannetti 2015). Interestingly, PPS representation is directly linked to the motor system, as stimuli presented within the PPS prime defensive (Graziano and Cooke 2006) or approaching (Rizzolatti et al. 1997) body actions (Cardinali et al. 2009; Serino et al. 2009; Makin et al. 2009; Avenanti et al. 2012).

An important property of PPS representation is that it is dynamically modified by experience, for instance by short-term (Farnè and Làdavas 2000; Holmes and Spence 2004; Holmes et al. 2004; Canzoneri et al. 2013b) and long-term (Serino et al. 2007) tool use, social interaction (Heed et al. 2010; Ferri et al. 2013; Teneggi et al. 2013; Pellencin et al. 2018) and potential movements (Brozzoli et al. 2010; Noel et al. 2015).

In this study, we investigated whether a novel form of exploring and interacting with the environment through sounds (echolocation) shapes PPS representation. Echolocation is based on the ability to measure the time delay between a sound and the echoes reflected by the environment. Using self-generated sounds, expert echolocators are able to navigate and to detect objects in the environment (Supa et al. 1944; Kolarik et al. 2014). Echolocation can therefore be conceived as a form of tool use able to modify the PPS: it can be used to “reach” sectors of space that are otherwise out of reach without visual information, by interpreting the echoes produced when sounds reflect off objects. Recent studies have demonstrated that sighted people, too, can perform simple echolocation tasks after training, such as size discrimination (Teng 2011; Thaler et al. 2014), detection (Schenkman and Nilsson 2010; Thaler and Castillo-Serrano 2016) or obstacle avoidance (Kolarik et al. 2016; Tonelli et al. 2018). In a recent study (Tonelli et al. 2016), we trained sighted people in a depth echolocation task. After a few hours of training, participants were able to estimate object depth with good accuracy and precision.
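To make the timing concrete, the short Python sketch below converts the distance of a reflecting object into the round-trip delay of its echo, and back. It assumes a speed of sound of 343 m/s (dry air at about 20 °C), a value not given in the text; the function names are illustrative. At 34 cm, the target distance used in the detection task, the echo arrives roughly 2 ms after the click.

```python
# Round-trip echo delay for a reflecting object at a given distance.
# Assumption: speed of sound of about 343 m/s (dry air, roughly 20 degrees C).
SPEED_OF_SOUND_M_PER_S = 343.0


def echo_delay_ms(distance_m: float, c: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Time from click emission to echo arrival, in milliseconds."""
    # The click travels to the object and back, hence the factor of 2.
    return 2.0 * distance_m / c * 1000.0


def distance_from_delay_m(delay_ms: float, c: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Inverse mapping: object distance implied by a measured echo delay."""
    return c * (delay_ms / 1000.0) / 2.0


for d in (0.34, 1.19):
    print(f"object at {d:.2f} m -> echo after {echo_delay_ms(d):.2f} ms")
```

Under the same assumption, an object at 1.19 m (the farthest loudspeaker position in the PPS set-up) would return its echo after about 6.9 ms.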

In the present study, we trained healthy participants in echolocation and then tested whether such training can modify PPS. To this aim, we evaluated the PPS around the head before and after an echolocation detection task, in which participants had to detect the presence of an object inside the peripersonal space using self-generated mouth clicks. To quantify the PPS, we adopted a behavioral measure extensively used in previous studies to assess PPS in humans (Canzoneri et al. 2012, 2013a; Teneggi et al. 2013; Finisguerra et al. 2015; Noel et al. 2015) and to investigate the effects of different experimental manipulations and training on PPS representation (Canzoneri et al. 2013a, b; Bassolino et al. 2015; Maister et al. 2015; Salomon et al. 2017). In this task, participants had to respond as fast as possible to a tactile stimulus applied to their body while task-irrelevant sounds, giving the impression of a looming source, were presented. Previous results showed that sounds speeded up the detection of tactile stimuli specifically when presented within a certain distance from the participants (and not farther away). This distance can be taken as a proxy of the extent of the participant’s PPS (Serino et al. 2015a, b). In addition to the experimental group, the same PPS task was administered to two control groups, in order to link any change in PPS representation directly to echolocation and to exclude general effects of task repetition or of increased attention to auditory stimuli at a given spatial location. Thus, one control group performed the PPS task before and after a perceptual training involving mainly temporal components (auditory time bisection), and the other group simply took a break between the two sessions of the PPS task.

Methods

Subjects

A total of 44 healthy sighted individuals were recruited to participate in this study (24 females; mean age 25.93 years, SD = 4.43). Participants were assigned to three groups (see below). Sixteen participants were allocated to the echolocation group (ECHO; 2 participants were excluded from the analysis because they were unable to complete the training), 14 to the temporal discrimination training group (TIME), and 14 to the group that did not perform any task between the two PPS assessments (REST). All participants reported normal touch and hearing and gave written informed consent before starting the test. The study was approved by the ethics committee of the local health service (Comitato etico, ASL 3, Genova) and conducted in line with the Declaration of Helsinki.

Tasks and stimuli

Peripersonal space task

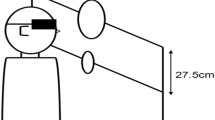

The task was to respond as quickly as possible to a vibro-tactile stimulation on the neck while ignoring sounds moving towards the participant. For the acoustic stimuli, we used a custom-made device comprising an array of seven serially connected loudspeakers placed on a table to the left of the participant (Fig. 1a). The first loudspeaker was located 17 cm from the head (at the same elevation) and the last at 119 cm; adjacent loudspeakers were 17 cm apart. The sound (white noise) was delivered through these seven spatial sources, so that we could precisely trigger the tactile stimulation when the sound was at the level of one of the loudspeakers (Finisguerra et al. 2015). The sound travelled 102 cm in 3 s (i.e., at a speed of 34 cm/s). We sampled seven positions (17, 34, 51, 68, 85, 102, and 119 cm). For the tactile stimuli, we used a custom-made vibro-tactile device consisting of a vibration motor with a surface area of 18 mm², placed on the left side of the participant’s neck. Tactile stimulation lasted 20 ms. Sound and tactile stimuli were controlled by custom code running in Matlab.
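Because the sound moved at constant speed, each sampled position maps onto a fixed delay between sound onset and touch. The sketch below computes these delays assuming, as the set-up description implies, that the sound starts at the 119 cm loudspeaker and moves at 34 cm/s; the constant and function names are illustrative.

```python
# Delay from sound onset at which the looming sound reaches each loudspeaker.
# Assumptions: onset at the farthest loudspeaker (119 cm) and constant speed.
START_CM = 119.0
SPEED_CM_PER_S = 34.0  # 102 cm covered in 3 s
POSITIONS_CM = [119, 102, 85, 68, 51, 34, 17]


def tactile_delay_ms(position_cm: float) -> float:
    """Time after sound onset at which the sound is at position_cm."""
    return (START_CM - position_cm) / SPEED_CM_PER_S * 1000.0


for p in POSITIONS_CM:
    print(f"{p:>3} cm -> touch {tactile_delay_ms(p):.0f} ms after sound onset")
```

Successive positions are therefore 500 ms apart, from 0 ms at 119 cm to 3000 ms at 17 cm.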

Fig. 1 Experimental set-ups. a The set-up for the PPS task. Seven speakers generated sound sources at different distances from the body. The first sound source was placed 17 cm from the left side of the participant’s head. The sound moved across the speakers, approaching the participant’s head (grey arrow). The vibro-tactile device was placed on the left side of the neck. The tactile stimulus was delivered when the sound was at one of the seven depicted distances (17, 34, 51, 68, 85, 102, 119 cm). b The set-up for the echolocation detection task. A bar was located 34 cm in front of the participant. The black and red arrows represent, respectively, the path of the self-generated click and of the echo reflected by the bar. c The set-up for the temporal bisection task

The PPS task consisted of three types of trials, randomized within the experimental block (Canzoneri et al. 2012; Serino et al. 2015b). The critical trials were the audio-tactile trials, which made up approximately 60% of the total. In these trials, participants heard a sound and, at a given moment, received a vibro-tactile stimulation, to which they had to respond by saying “TAH” as quickly as possible, ignoring the auditory stimulus (Canzoneri et al. 2012). To record the time of the vocal response, we ran several pilot tests in which we estimated the average noise level of the experimental room (mean 52.5 dB, range 50–53 dB), and we set a threshold (53 dB) that detected 95% of participants’ responses. During the experiment, RTs were then computed by subtracting the time of the tactile stimulation from the time of the vocal trigger (i.e., the moment the vocal response crossed the threshold). On each trial, the tactile stimulus was delivered at one of seven temporal delays, corresponding to progressively shorter distances between the sound and the body at the moment of touch (i.e., the tactile stimulation occurred at a specific point in time, when the sound was at a particular location in space). Approximately 20% of the trials were unimodal tactile trials, in which the target vibro-tactile stimulus was delivered without concurrent auditory stimulation, either 500 ms before sound onset or 500 ms after sound offset. These delays correspond to the time the sound took to travel 17 cm, the distance between adjacent loudspeakers (17 cm at 34 cm/s = 500 ms). Finally, approximately 20% of the trials were catch trials, in which only auditory stimuli were presented and participants were instructed not to respond.
These trials were included to avoid automatic responses, to ensure that participants were attending to the task, and to minimize the expectancy effect intrinsic to the task (i.e., participants respond faster as the trial unfolds because their expectancy of receiving the tactile target increases) (Kandula et al. 2017). Participants’ vocal responses were recorded with a microphone. Each participant performed a total of 140 trials: 28 unimodal tactile trials, 28 catch trials, and 12 trials for each of the seven audio-tactile distances (84 trials).
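The voice-key logic described above can be sketched as follows. This is an illustrative sketch, not the authors' Matlab code: it assumes that the microphone signal has already been converted into a level envelope in dB (one value per sample), which the text does not describe, and the function name is hypothetical. The RT is the time of the first threshold crossing after the touch minus the time of the touch.

```python
import math
from typing import Optional, Sequence

THRESHOLD_DB = 53.0  # threshold reported in the text


def voice_key_rt_ms(levels_db: Sequence[float], sample_rate_hz: float,
                    tactile_onset_ms: float,
                    threshold_db: float = THRESHOLD_DB) -> Optional[float]:
    """Vocal RT in ms, or None if the threshold is never crossed.

    levels_db is an assumed dB level envelope of the microphone signal,
    with time 0 at the start of the recording.
    """
    dt_ms = 1000.0 / sample_rate_hz
    # Only look at samples at or after the tactile stimulus.
    first = max(0, math.ceil(tactile_onset_ms / dt_ms))
    for i in range(first, len(levels_db)):
        if levels_db[i] > threshold_db:
            # RT = time of the vocal trigger minus time of the tactile stimulus.
            return i * dt_ms - tactile_onset_ms
    return None  # no vocal response detected


# Hypothetical 1 kHz envelope: room noise at 52 dB, "TAH" starting at 1400 ms.
levels = [52.0] * 2000
levels[1400:1600] = [60.0] * 200
print(voice_key_rt_ms(levels, 1000.0, tactile_onset_ms=1000.0))  # 400.0
```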

The inter-trial interval was not fixed; each trial was started by the experimenter.
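The trial composition of one PPS session can be reproduced with the sketch below, which builds and shuffles the 140 trials (7 distances × 12 audio-tactile trials, 28 unimodal tactile trials, 28 catch trials). The even split of unimodal trials between the pre-onset and post-offset timings is an assumption, as are the labels and function name.

```python
import random
from collections import Counter

POSITIONS_CM = [17, 34, 51, 68, 85, 102, 119]


def build_pps_session(seed=None):
    """Return a shuffled list of (trial_type, detail) tuples for one session."""
    rng = random.Random(seed)
    trials = []
    for pos in POSITIONS_CM:
        trials += [("audio-tactile", pos)] * 12  # touch when the sound is at pos
    # Assumption: unimodal tactile trials split evenly between the two timings.
    trials += [("tactile-only", "500 ms before sound onset")] * 14
    trials += [("tactile-only", "500 ms after sound offset")] * 14
    trials += [("catch", None)] * 28  # sound only, no response expected
    rng.shuffle(trials)
    return trials


session = build_pps_session(seed=1)
counts = Counter(kind for kind, _ in session)
print(len(session), dict(counts))
```

These counts give 84/140 = 60% audio-tactile trials and 20% each of unimodal tactile and catch trials, matching the proportions stated for the task.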

Echolocation detection task

The task consisted of detecting an external object placed about 34 cm from the mouth via the echoes produced by self-emitted mouth clicks. A rectangular bar made of polymethyl methacrylate (40 × 30 cm) served as the target stimulus for the echolocation training. A microphone boom stand held the bar with its longer side vertical (Fig. 1b), in front of the participant at head level. Participants performed a total of 40 trials. The first 10 trials (practice block) served as practice; on the remaining 30 trials (training block), the percentage of correct answers was calculated (for more details, see below). The bar was present on 50% of the trials, and participants had 20 s to give their response. To prevent participants from receiving acoustic or floor-vibration cues from the movement of the target, they wore a pair of Philips SHL3000PP headphones playing mixed music, and their chair was placed on a 4.5-cm-high stack of rigid foam mats.
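A possible way to generate and score the detection trials is sketched below. The text states only that the bar was present on 50% of the trials; balancing presence within each block (5 of 10 practice trials, 15 of 30 training trials) is an assumption, and the function names are illustrative.

```python
import random


def make_echo_blocks(n_practice=10, n_training=30, seed=None):
    """Bar-present (True) / bar-absent (False) sequences for the two blocks."""
    rng = random.Random(seed)
    blocks = []
    for n in (n_practice, n_training):
        block = [True] * (n // 2) + [False] * (n - n // 2)  # half present
        rng.shuffle(block)
        blocks.append(block)
    return blocks[0], blocks[1]


def percent_correct(bar_present, responses):
    """Percentage of trials on which the yes/no response matched the truth."""
    hits = sum(truth == resp for truth, resp in zip(bar_present, responses))
    return 100.0 * hits / len(bar_present)


practice, training = make_echo_blocks(seed=0)
print(len(practice), sum(practice), len(training), sum(training))  # 10 5 30 15
```

With half of the trials containing the bar, guessing yields 50% correct, which is the chance level used later to evaluate performance.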

Auditory time bisection task

On each trial, participants heard three consecutive sounds and had to judge which interval was shorter: the one between the first and second sounds or the one between the second and third sounds. The stimuli were 500 Hz tones, each lasting 75 ms. Sounds always came from the loudspeaker located 34 cm from the head, i.e., at the same distance at which the object in the echolocation task was placed. The experimenter recorded the participant’s response on each trial. The interval duration was determined by QUEST (Watson and Pelli 1983), an adaptive algorithm that estimates the best stimulus value to present on the next trial given the participant’s responses so far. To ensure that a wide range of durations was sampled, the estimate was jittered by a random amount drawn from a Gaussian distribution, with durations covering a range between 0 and 900 ms. The training included 80 trials. The inter-trial interval was not fixed, and each trial was started by the experimenter.
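The adaptive logic can be illustrated with a simplified, grid-based Bayesian tracker in the spirit of QUEST. This is not the Watson and Pelli (1983) algorithm itself: the logistic psychometric function, its slope, the uniform prior, the use of the posterior mean, and the jitter SD are all assumptions, and `x_ms` stands for whichever interval duration was adapted. A simulated observer is included to show how the estimate converges.

```python
import math
import random

GRID_MS = [float(m) for m in range(0, 901)]  # candidate threshold values
SLOPE_MS = 60.0       # assumed spread of the logistic psychometric function
JITTER_SD_MS = 100.0  # assumed SD of the Gaussian jitter


def p_yes(x_ms, mu_ms, slope=SLOPE_MS):
    """Assumed probability of a 'yes' response to duration x given threshold mu."""
    return 1.0 / (1.0 + math.exp(-(x_ms - mu_ms) / slope))


class BayesTracker:
    """Grid posterior over the threshold, updated after every trial."""

    def __init__(self):
        n = len(GRID_MS)
        self.posterior = [1.0 / n] * n  # uniform prior (QUEST usually uses a Gaussian)

    def update(self, x_ms, said_yes):
        # Bayes' rule: multiply by the likelihood of the observed response.
        post = [p * (p_yes(x_ms, mu) if said_yes else 1.0 - p_yes(x_ms, mu))
                for p, mu in zip(self.posterior, GRID_MS)]
        total = sum(post)
        self.posterior = [p / total for p in post]

    def estimate(self):
        """Posterior mean of the threshold, in ms."""
        return sum(mu * p for mu, p in zip(GRID_MS, self.posterior))

    def next_stimulus(self, rng):
        # Jitter the current estimate so that a wide range of durations is sampled.
        x = rng.gauss(self.estimate(), JITTER_SD_MS)
        return min(max(x, 0.0), 900.0)  # keep within 0-900 ms


def simulate_session(true_threshold_ms, n_trials=80, seed=0):
    """Run the tracker against a simulated observer; return the final estimate."""
    rng = random.Random(seed)
    tracker = BayesTracker()
    for _ in range(n_trials):
        x = tracker.next_stimulus(rng)
        said_yes = rng.random() < p_yes(x, true_threshold_ms)
        tracker.update(x, said_yes)
    return tracker.estimate()


print(round(simulate_session(500.0)))
```

Placing the next trial at the jittered posterior mean trades some efficiency for broader sampling of durations, which is the stated purpose of the jitter.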

Distance perception task

Each trial was identical to the bimodal trials of the PPS task, but here participants were asked to verbally indicate the perceived position of the sound in space at the moment they felt the vibro-tactile stimulation, on a scale from 1 (very close to the head) to 100 (very far from the head). A total of 49 trials were performed, 7 for each position. The purpose of this task was to verify that participants perceived the sound source at different locations according to its distance. All participants performed it.

Procedure

All participants started the experiment blindfolded. First, we evaluated the PPS of all participants (Fig. 1a) to assess the location of their PPS boundary before any training. Participants were then divided into three groups: ECHO, TIME, and REST. We first collected the data for the ECHO group. We decided to exclude all participants whose performance in the training block of the echolocation task was worse than in the practice block, based on the percentage of correct responses; that is, a participant was excluded if the percentage of correct responses in the practice block was higher than that in the training block. We then recruited the other 28 participants for the TIME and REST groups, who were pseudo-randomly assigned to each group.

The first group, the ECHO group (N = 14; 7 females), performed an echolocation detection task after the PPS evaluation (see above for the task description). They were asked to sit in a different location from where the PPS task had been performed, so that the loudspeakers did not interfere with the echolocation task. During the change of location, participants were allowed to remove the blindfold, but they did not see the object used for the task because it was hidden from view behind a cloth. Participants had no prior knowledge of the echolocation technique; therefore, before the beginning of the task, they received instructions on how to produce echolocation signals with their mouth, after which they were blindfolded again. The echolocation sound was produced naturally, without any external device. While the experimenter moved the target, participants wore headphones playing mixed music. Once the experimenter had placed the target, the participant received a pat on the shoulder as a signal to remove the headphones and start the trial.

Participants had to judge whether or not the bar was in front of them by producing mouth clicks and listening to their echoes (Fig. 1b). All participants performed 40 trials divided into 2 blocks. During the practice block, participants received feedback on their responses.

The second group, the TIME group (N = 14; 7 females), performed an auditory time bisection task as training. To keep the procedure the same as for the ECHO group, participants in the TIME group were allowed to remove the blindfold for a few minutes, but put it on again before beginning the time bisection task.

The third group, the REST group (N = 14; 8 females), simply took a 30-min break. They were allowed to remove the blindfold for just a couple of minutes and kept it on for the rest of the time.

All participants then performed the PPS task a second time, to measure changes in their PPS representation, and removed the blindfold at the end of the task.

Moreover, all participants performed a distance perception task to confirm that they could discriminate the different sound locations according to the seven actual sound source positions (Canzoneri et al. 2012; Finisguerra et al. 2015).

Results

First, we checked for possible outliers in the RTs. RTs more than 2 standard deviations above or below the mean were considered outliers. On average, 2.34% (± 0.49) of trials in the PRE condition and 2.84% (± 0.41) in the POST condition were excluded.
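The outlier criterion can be written as a short function. The text does not state whether the mean and SD were computed per participant, session, or condition; the sketch below simply applies the criterion to whatever set of RTs it is given, and the function name is illustrative.

```python
from statistics import mean, stdev


def split_outliers(rts, n_sd=2.0):
    """Split RTs into (kept, excluded) using a mean +/- n_sd * SD criterion."""
    m, s = mean(rts), stdev(rts)  # sample SD; needs at least two RTs
    lo, hi = m - n_sd * s, m + n_sd * s
    kept = [rt for rt in rts if lo <= rt <= hi]
    excluded = [rt for rt in rts if not lo <= rt <= hi]
    return kept, excluded


# Hypothetical data: nineteen 400 ms responses and one 1000 ms response.
kept, excluded = split_outliers([400.0] * 19 + [1000.0])
print(len(kept), excluded)  # 19 [1000.0]
print(f"{100 * len(excluded) / (len(kept) + len(excluded)):.1f}% excluded")  # 5.0% excluded
```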

To rule out the possibility that any effect was due to expectancy, we corrected the bimodal RTs using the unimodal tactile RTs. First, we calculated the average of the unimodal tactile RTs collected before sound onset in each experimental session (PRE and POST training); second, we selected the faster of these two averages; third, we subtracted this fastest unimodal average from the average raw bimodal RTs of both experimental sessions. This correction was applied to each participant’s data in each session.
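The three correction steps translate directly into code. In this sketch (names and data layout are illustrative assumptions), bimodal RTs are grouped by sound distance, and only unimodal RTs collected before sound onset enter the baseline; negative corrected values mean responses faster than touch alone.

```python
from statistics import mean


def correct_bimodal(bimodal_pre, bimodal_post, unimodal_pre, unimodal_post):
    """Expectancy correction of bimodal RTs for one participant.

    bimodal_*: dict mapping sound distance (cm) -> list of bimodal RTs (ms)
    unimodal_*: unimodal tactile RTs (ms) collected before sound onset
    """
    # Steps 1-2: mean unimodal RT per session, then keep the faster of the two.
    baseline = min(mean(unimodal_pre), mean(unimodal_post))
    # Step 3: subtract that single baseline from the mean bimodal RTs of both sessions.
    pre = {d: mean(rts) - baseline for d, rts in bimodal_pre.items()}
    post = {d: mean(rts) - baseline for d, rts in bimodal_post.items()}
    return pre, post


# Hypothetical RTs for a single distance.
pre, post = correct_bimodal({17: [450.0, 470.0]}, {17: [430.0, 450.0]},
                            [500.0, 520.0], [480.0, 500.0])
print(pre, post)  # {17: -30.0} {17: -50.0}
```

Because one baseline is shared by both sessions, any PRE/POST difference in the corrected values reflects a change in the bimodal RTs themselves.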

To test whether bimodal RTs were facilitated, we compared the corrected RTs at each position in both sessions for each group against zero (the facilitation threshold) using t tests with a Bonferroni correction for multiple comparisons. For the ECHO group, the significant positions before the training (PRE) were 17 (t14 = − 2.1, p = 0.05), 51 (t14 = − 3.34, p < 0.05) and 68 (t14 = − 2.39, p < 0.05); after the training (POST), the significant positions were 17 (t14 = − 7.27, p < 0.01), 51 (t14 = − 7.9, p < 0.01), 68 (t14 = − 6.57, p < 0.01), 85 (t14 = − 6.05, p < 0.01) and 102 (t14 = − 6.39, p < 0.01). For the REST group, the significant positions both before and after the break were 17 (PRE, t14 = − 2.59, p < 0.05; POST, t14 = − 2.47, p < 0.05) and 51 (PRE, t14 = − 2.35, p < 0.05; POST, t14 = − 2.94, p < 0.05). For the TIME group, the significant positions before the training (PRE) were 17 (t14 = − 4.97, p < 0.01), 51 (t14 = − 2.67, p < 0.05) and 68 (t14 = − 2.21, p < 0.01), but only the first two after the training (POST; 17, t14 = − 3.05, p < 0.05; 51, t14 = − 2.66, p < 0.05). These results show that, in all groups, RTs differed between positions near to and far from the body.
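The facilitation test can be sketched with the Python standard library alone. The sketch computes a one-sample t statistic against zero for each distance and a two-sided p-value from the classical closed-form series for the Student t distribution with integer degrees of freedom, then applies a Bonferroni correction. Taking the seven distances as the family of comparisons is an assumption, since the text does not specify it; in practice a library routine such as `scipy.stats.ttest_1samp` would normally be used instead.

```python
import math
from statistics import mean, stdev


def t_two_sided_p(t, df):
    """Two-sided p-value P(|T| >= |t|) for Student's t with integer df >= 1."""
    theta = math.atan(abs(t) / math.sqrt(df))
    sin_t, cos_t = math.sin(theta), math.cos(theta)
    c2 = cos_t ** 2
    term = series = 1.0
    if df % 2 == 0:
        # A = sin(theta) * (1 + (1/2)c^2 + (1*3)/(2*4)c^4 + ...), up to c^(df-2)
        for k in range(1, df // 2):
            term *= (2 * k - 1) / (2 * k) * c2
            series += term
        a = sin_t * series
    elif df == 1:
        a = 2.0 * theta / math.pi
    else:
        # A = (2/pi)(theta + sin*cos*(1 + (2/3)c^2 + (2*4)/(3*5)c^4 + ...)), up to c^(df-3)
        for k in range(1, (df - 1) // 2):
            term *= (2 * k) / (2 * k + 1) * c2
            series += term
        a = 2.0 / math.pi * (theta + sin_t * cos_t * series)
    return max(0.0, 1.0 - a)  # A is P(|T| < |t|)


def facilitation_tests(corrected_by_distance, alpha=0.05):
    """One-sample t test of corrected RTs against 0 at each distance, Bonferroni-adjusted."""
    m = len(corrected_by_distance)  # assumed family size: one test per distance
    results = {}
    for dist, values in corrected_by_distance.items():
        n = len(values)
        t = mean(values) / (stdev(values) / math.sqrt(n))
        p_adj = min(1.0, m * t_two_sided_p(t, n - 1))
        results[dist] = (t, n - 1, p_adj, p_adj < alpha)
    return results


# Hypothetical corrected RTs (ms) from five participants at two distances.
res = facilitation_tests({17: [-30.0, -25.0, -35.0, -28.0, -32.0],
                          119: [-5.0, 5.0, -3.0, 3.0, 0.0]})
for dist, (t, df, p_adj, sig) in res.items():
    print(f"{dist} cm: t({df}) = {t:.2f}, Bonferroni p = {p_adj:.4f}, significant: {sig}")
```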

We ran a three-way mixed ANOVA with the between-subjects factor Group (ECHO vs REST vs TIME) and two within-subjects factors, Sound distance (17, 34, 51, 68, 85, 102 and 119 cm) and Session (PRE, POST). There was a significant main effect of Session (F1,39 = 3.38, p = 0.05, η² = 0.01) and of Distance (F6,234 = 32.22, p = 3.06 × 10⁻²⁸, η² = 0.12). Among the interactions, only Group × Session was significant (F2,39 = 5.1, p = 0.01, η² = 0.04).

We then performed three separate ANOVAs on the baseline-corrected RTs, one per group (Fig. 2), with the within-subjects factors Sound distance (17, 34, 51, 68, 85, 102 and 119 cm) and Session (PRE, POST). As expected, the main effect of distance was significant for all groups (ECHO, F6,78 = 11.63, p = 2.87 × 10⁻⁹, η² = 0.18; TIME, F6,78 = 13.35, p = 2.37 × 10⁻¹⁰, η² = 0.12; REST, F6,78 = 8.62, p = 3.35 × 10⁻⁷, η² = 0.07). By contrast, the main effect of Session was significant only for the ECHO group (F1,13 = 25.83, p < 0.01, η² = 0.19). Likewise, the two-way Sound distance × Session interaction was significant for the ECHO group (F6,78 = 2.28, p = 0.04, η² = 0.02) but not for the other two groups (TIME, F6,78 = 0.06, p = 0.8, η² = 0.0009; REST, F6,84 = 0.0001, p = 0.913, η² < 0.004).

Fig. 2 Averaged bimodal RTs (normalized to the unimodal RTs) for each group as a function of the seven distances sampled during the PPS task. Data for the ECHO group before (in blue) and after (in red) the echolocation training. Data for the TIME group before (in green) and after (in orange) the time bisection task. Data for the REST group before (in magenta) and after (in cyan) the 15-min break. *Significant difference with p < 0.05. ***Significant difference with p < 0.001. The error bars represent the standard error

Post hoc tests (with a Bonferroni correction for multiple comparisons) on the ECHO group (Fig. 2a) revealed a significant reduction of corrected RTs from the PRE to the POST session for sound sources at 17 (t14 = − 5.56, p < 0.001), 34 (t14 = − 6.85, p < 0.001), 51 (t14 = − 7.46, p < 0.001) and 85 (t14 = − 3.48, p < 0.05). To check that the effect was not due to a difference in the unimodal RTs (Fig. 3) used to correct the bimodal RTs, we ran a two-way ANOVA on the unimodal RTs, with the within-subjects factor Session (PRE, POST) and the between-subjects factor Group (ECHO, REST and TIME). Results showed no significant effect of either main factor (Session, F1,39 = 2.9, p = 0.1; Group, F2,39 = 2.08, p = 0.14), nor of the interaction (F2,39 = 1.45, p = 0.24). The same analysis was run using raw RTs; for more information, see the supplementary materials.

Finally, to control for possible differences between groups before the training, we conducted a two-way ANOVA on the bimodal RTs obtained in the first PPS session, with the factors Group (ECHO, REST and TIME) and Sound distance. The main effect of distance was significant (F6,234 = 12.39, p = 4.12 × 10⁻¹², η² = 0.1), whereas there was no main effect of Group (F2,39 = 0.03, p = 0.9) and no Sound distance × Group interaction (F12,234 = 1.19, p = 0.3).

Regarding performance in the secondary tasks, we checked whether the percentage of correct responses in the echolocation detection task was above chance level (i.e., 50%; bar plot in Fig. 4, left) and computed the average accuracy and precision in the time bisection task (psychometric function in Fig. 4, right). The percentage of correct responses in the echolocation task was 60.71%, significantly above chance level (t test, t14 = 3.86, p < 0.01). In the time bisection task, for the second interval to be judged longer than the first, it had to last, on average, 497.57 ms, with an SD of 116.25 ms (the maximum interval duration was 900 ms).

Fig. 4 Left: average percentage of correct responses in the echolocation detection task; each empty symbol represents a single participant. Right: average precision and accuracy obtained in the time bisection task; the scatter plots represent the values for each participant. The error bars represent the standard error

Finally, we used the distance perception task to check that participants perceived the different sounds as coming from separate locations. A repeated-measures ANOVA on participants’ responses, with Distance as a within-subject factor, revealed a main effect of Distance (F6,234 = 305.61, p = 1.14 × 10⁻¹¹²), indicating that, as expected, participants perceived sounds as progressively closer to their body as the sounds approached. These data confirm that participants perceived the sound source at different locations according to its distance (Fig. 5).

Fig. 5 Estimated sound distance on a scale from 1 to 100 as a function of the sampled position in space for the seven-speaker set-up. Participants estimated the distance of sounds originating 119 cm in front (positive x value) and terminating at 17 cm at head level. The y-axis represents the average perceived position of the sound on a scale from 1 to 100. The dashed line shows the equality line. The error bars represent the standard error

Discussion

In the present study, we showed that echolocation training with stimuli in near space affected multisensory interaction within the PPS. The training consisted of detecting an external object presented about 34 cm from the body via the echoes produced by echolocation signals (mouth clicks). Compared with the RTs before the training, RTs to tactile stimuli coupled with looming sounds were faster around the area where the echolocation training was conducted.

This effect is unlikely to depend on learning of the task used to evaluate the PPS through task repetition. Indeed, participants in the REST group, who were tested twice with the same interval as the ECHO group, did not show any change in their RTs between the first and second PPS assessments.

Another possible explanation is that the change in multisensory interaction within the PPS in the ECHO group was due to a generally enhanced attention towards auditory stimuli in near space. However, a shift of attention cannot, per se, explain the results of the ECHO group. Indeed, participants in the TIME group, who were engaged in a demanding task on auditory stimuli occurring at exactly the same location as the echolocation training, did not show any specific change in multisensory interaction after the training.

The TIME and ECHO groups differed in the nature of the task performed in near space. The time bisection task required participants to analyze temporal intervals between sounds, whereas the echolocation task focused on acquiring spatial features from auditory cues. It could be argued that the time bisection task may also involve a spatial component, in line with the view that temporal intervals are mentally represented according to spatial representations (Bonato et al. 2012). However, there are two fundamental differences between the mental processes involved in the TIME and ECHO tasks. First, the time–space overlap in mental representation acts on the lateral dimension of space (Vallesi et al. 2008), whereas the echolocation task, and the related changes in PPS representation, concern depth. Second, the temporal training involved a purely perceptual task on stimuli presented at a given position in space, whereas the echolocation task also involved a sensory-motor component: participants performed an action (i.e., emitting a sound with their mouth) and processed the sensory consequences of that action (i.e., the echo produced) in space. We suggest that this sensory-motor aspect of the task is critical in determining its effect on multisensory processing. The sensory-motor spatial task involved in echolocation training resembles tool use: acting with a tool allows people to extend their action possibilities in order to obtain sensory information from far space. Previous studies showed that PPS can be modified by tool-use training (Maravita and Iriki 2004; Holmes et al. 2007; Canzoneri et al. 2013b; Martel et al. 2016). Conversely, shifting spatial attention towards far space to point at far objects (Canzoneri et al. 2013b) or passively holding a tool (Farnè and Làdavas 2000) is not sufficient to extend PPS. More recently, Serino et al. (2015a) proposed a neural network model that explains plasticity in PPS representation induced by tool use via multisensory congruency. They showed that temporal congruency between a stimulus on the body and auditory feedback from far space drives the extension of multisensory integration towards the location of the sensory feedback. A similar mechanism may explain the effect of echolocation training: by producing clicks with their mouth, participants performed a movement that generated temporally coherent feedback between the tactile stimulation (at the mouth) and the echo from a more distant location. Our results suggest that repeating such an activity for a given amount of time induces a specific effect on PPS processing for the space where the training is performed.

In the present protocol, the object in the echolocation training was placed at a fixed position near the participant. The present findings show that the training did not actually extend participants’ PPS; rather, it increased multisensory interaction around the location of the object (with a possible weaker effect at the origin of the echoes generated by the room walls). This increase in multisensory processing in near space might appear to differ from previous reports of the classic PPS extension induced by tool use, since the present results do not show any PPS extension. However, previous tool-use studies (Farne et al. 2005; Farnè et al. 2005, 2007) also showed that the change in PPS processing is specific to the location where the tool is functionally used; it can therefore occur at closer distances from the body, at the level of the functional part of the tool (Ursino et al. 2007; Magosso et al. 2010; Gallivan et al. 2013).

Echolocation is mainly used by blind people to locate objects in space and to navigate through the environment while avoiding obstacles. Blind people achieve a similar function with the white cane. Interestingly, Serino et al. (2007) showed that a short training with a white cane is sufficient to temporarily modify PPS representation in sighted participants, whereas long-term blind cane users show a PPS representation that is extended toward the tip of the cane, as if the cane constituted the new boundary of their PPS (Witt et al. 2005). Such remapping of PPS representation seems to have an adaptive value, allowing a potentially harmful object to be located before it collides with the body (Rossetti et al. 2015). Unlike the white cane, which physically reaches into far space, echolocation allows a blind person to detect objects by interpreting the echoes produced by sound reflections. We therefore propose that echolocation is a way to compensate for the lack of information about the space between the body and an external object in the absence of visual cues. Increasing multisensory processing for that portion of space might be a key mechanism to achieve this function. Further investigations are needed to test whether echolocation directed at far space can, like tool use, produce an extension of PPS.

Another point to highlight relates to the design of the PPS task. Participants might react to the tactile stimulation without using spatial cues: their response could be based on the time delay between the beginning of the sound and the tactile stimulation, or on the duration of the sound. The effect of echolocation training might therefore be specific not to space but to time.

The question of the temporal or spatial nature of the PPS task has been addressed by several experimental studies. Canzoneri et al. (2012) compared RTs to tactile stimuli paired with looming and receding sounds, given at the same delays but associated with complementary, specular distances. The authors reported that the speeding of tactile RTs depended on the distance of the sound and not on the temporal delay between the beginning of the sound and the tactile stimulation, although the effect was stronger for looming sounds, as expected from the response properties of PPS neurons (Graziano and Cooke 2006). Similar findings were reported by Serino et al. (2015b) (see also Salomon et al. (2017) for a visual version of the task with subthreshold stimuli). More recently, Finisguerra et al. (2015) showed a space-dependent and delay-independent increase in motor evoked potentials induced by TMS over the primary motor cortex for both looming and receding sounds. Moreover, Noel et al. (2018) found a spatially dependent facilitation of RTs as a function of the velocity of the looming stimuli, i.e., faster sounds are associated with an extended PPS boundary. These results are in line with neurophysiological data in monkeys showing that the receptive fields of PPS neurons are more extended when probed with faster visual stimuli than with slower ones (Fogassi et al. 1996).

Based on this evidence, we conclude that the speeding of RTs is driven by the position of the sounds in space, even though an expectancy effect linked to the temporal structure of the task does exist (Kandula et al. 2017; Pellencin et al. 2018). This expectancy effect is significantly weaker than the effect of spatial position and has repeatedly been dissociated from it.

Moreover, if performance in the PPS task were based on time delay, why was an effect found only in the ECHO group and not in the TIME group, whose training specifically involved time intervals? This brings us back to the explanation given above. It should be noted that echolocation does not rely on spatial information alone: it uses the delay between the emitted sound and the returning echoes to infer spatial information. Thus, both the PPS task and echolocation have a temporal and a spatial component, but the main component remains spatial.
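
The coupling between time and space in echolocation can be made explicit with the round-trip relation d = c·Δt/2, where Δt is the delay between the emitted sound and the onset of its echo and c is the speed of sound. The sketch below is an idealized physical illustration only, assuming a single reflecting object, sound travelling in air at about 343 m/s (roughly 20 °C), and no processing latency; the function names are hypothetical, and the code is not a model of how echolocators actually extract distance from echoes.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: approximate speed of sound in air at about 20 °C


def echo_delay_s(distance_m: float, c: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Round-trip delay between emitting a sound and hearing its echo from an object."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    # The sound travels to the object and back, covering the distance twice.
    return 2.0 * distance_m / c


def distance_from_delay_m(delay_s: float, c: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Invert the round trip: recover object distance from the echo delay."""
    if delay_s < 0:
        raise ValueError("delay must be non-negative")
    return c * delay_s / 2.0


for d in (0.5, 1.0, 2.0):
    t_ms = echo_delay_s(d) * 1000.0
    print(f"object at {d:.1f} m -> echo after {t_ms:.2f} ms")
```

For an object 0.5 m away, the echo returns after roughly 2.9 ms, so distances within reach map onto delays of only a few milliseconds.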

To conclude, in this work we have shown, for the first time to the best of our knowledge, that the representation of the PPS around the head can be modified by echolocation. This effect cannot be explained by task repetition or by attention focused on a specific spatial location. It likely depends on the plastic properties of the PPS system, which adapts as a function of the congruency between a body action and the sensory feedback from a given position in space.

References

Avenanti A, Annella L, Serino A (2012) Suppression of premotor cortex disrupts motor coding of peripersonal space. Neuroimage 63:281–288

Bassolino M, Finisguerra A, Canzoneri E et al (2015) Dissociating effect of upper limb non-use and overuse on space and body representations. Neuropsychologia 70:385–392

Bonato M, Zorzi M, Umiltà C (2012) When time is space: evidence for a mental time line. Neurosci Biobehav Rev 36:2257–2273. https://doi.org/10.1016/j.neubiorev.2012.08.007

Brozzoli C, Cardinali L, Pavani F, Farnè A (2010) Action-specific remapping of peripersonal space. Neuropsychologia 48:796–802. https://doi.org/10.1016/j.neuropsychologia.2009.10.009

Canzoneri E, Magosso E, Serino A (2012) Dynamic sounds capture the boundaries of peripersonal space representation in humans. PLoS One 7:3–10. https://doi.org/10.1371/journal.pone.0044306

Canzoneri E, Marzolla M, Amoresano A et al (2013a) Amputation and prosthesis implantation shape body and peripersonal space representations. Sci Rep. https://doi.org/10.1038/srep02844

Canzoneri E, Ubaldi S, Rastelli V et al (2013b) Tool-use reshapes the boundaries of body and peripersonal space representations. Exp Brain Res 228:25–42. https://doi.org/10.1007/s00221-013-3532-2

Cardinali L, Brozzoli C, Farnè A (2009) Peripersonal space and body schema: two labels for the same concept? Brain Topogr 21(3–4):252–260

Cléry J, Guipponi O, Wardak C, Ben Hamed S (2015) Neuronal bases of peripersonal and extrapersonal spaces, their plasticity and their dynamics: knowns and unknowns. Neuropsychologia 70:313–326. https://doi.org/10.1016/j.neuropsychologia.2014.10.022

De Vignemont F, Iannetti GD (2015) How many peripersonal spaces? Neuropsychologia 70:327–334

Dijkerman HC, Farnè A (2015) Sensorimotor and social aspects of peripersonal space. Neuropsychologia 70:309–312

Farnè A, Bonifazi S, Làdavas E (2005) The role played by tool-use and tool-length on the plastic elongation of peri-hand space: a single case study. Cogn Neuropsychol. https://doi.org/10.1080/02643290442000112

Farnè A, Làdavas E (2000) Dynamic size-change of hand peripersonal space following tool use. Neuroreport 11:1645–1649

Farnè A, Iriki A, Làdavas E (2005) Shaping multisensory action-space with tools: evidence from patients with cross-modal extinction. Neuropsychologia 43(2):238–248

Farnè A, Serino A, Làdavas E (2007) Dynamic size-change of peri-hand space following tool-use: determinants and spatial characteristics revealed through cross-modal extinction. Cortex. https://doi.org/10.1016/S0010-9452(08)70468-4

Ferri F, Ardizzi M, Ambrosecchia M, Gallese V (2013) Closing the gap between the inside and the outside: interoceptive sensitivity and social distances. PLoS One 8(10):e75758

Finisguerra A, Canzoneri E, Serino A et al (2015) Moving sounds within the peripersonal space modulate the motor system. Neuropsychologia 70:421–428. https://doi.org/10.1016/j.neuropsychologia.2014.09.043

Fogassi L, Gallese V, Fadiga L et al (1996) Coding of peripersonal space in inferior premotor cortex (area F4). J Neurophysiol 76:141–157

Gallivan JP, Mclean A, Valyear KF, Culham JC (2013) Decoding the neural mechanisms of human tool use. Elife. https://doi.org/10.7554/eLife.00425.001

Graziano MSA, Cooke DF (2006) Parieto-frontal interactions, personal space, and defensive behavior. Neuropsychologia 44:2621–2635. https://doi.org/10.1016/j.neuropsychologia.2005.09.009

Heed T, Habets B, Sebanz N, Knoblich G (2010) Others’ actions reduce crossmodal integration in peripersonal space. Curr Biol. https://doi.org/10.1016/j.cub.2010.05.068

Holmes NP, Spence C (2004) The body schema and multisensory representation(s) of peripersonal space. Cogn Process 5:94–105. https://doi.org/10.1007/s10339-004-0013-3

Holmes NP, Calvert G, Spence C (2004) Extending or projecting peripersonal space with tools? Multisensory interactions highlight only the distal and proximal ends of tools. Neurosci Lett 372:62–67. https://doi.org/10.1016/j.neulet.2004.09.024

Holmes NP, Sanabria D, Calvert GA, Spence C (2007) Tool-use: capturing multisensory spatial attention or extending multisensory peripersonal space? Cortex. https://doi.org/10.1016/S0010-9452(08)70471-4

Kandula M, Van der Stoep N, Hofman D, Dijkerman HC (2017) On the contribution of overt tactile expectations to visuo-tactile interactions within the peripersonal space. Exp Brain Res 235(8):2511–2522

Kolarik AJ, Cirstea S, Pardhan S, Moore BCJ (2014) A summary of research investigating echolocation abilities of blind and sighted humans. Hear Res 310:60–68. https://doi.org/10.1016/j.heares.2014.01.010

Kolarik AJ, Scarfe AC, Moore BCJ, Pardhan S (2016) An assessment of auditory-guided locomotion in an obstacle circumvention task. Exp Brain Res. https://doi.org/10.1007/s00221-016-4567-y

Làdavas E, Serino A (2008) Action-dependent plasticity in peripersonal space representations. Cogn Neuropsychol 25:1099–1113

Làdavas E, di Pellegrino G, Farnè A, Zeloni G (1998) Neuropsychological evidence of an integrated visuotactile representation of peripersonal space in humans. J Cogn Neurosci 10:581–589. https://doi.org/10.1162/089892998562988

Macaluso E, Maravita A (2010) The representation of space near the body through touch and vision. Neuropsychologia 48:782–795

Magosso E, Ursino M, di Pellegrino G et al (2010) Neural bases of peri-hand space plasticity through tool-use: insights from a combined computational-experimental approach. Neuropsychologia 48:812–830. https://doi.org/10.1016/j.neuropsychologia.2009.09.037

Maister L, Cardini F, Zamariola G et al (2015) Your place or mine: shared sensory experiences elicit a remapping of peripersonal space. Neuropsychologia. https://doi.org/10.1016/j.neuropsychologia.2014.10.027

Makin TR, Holmes NP, Brozzoli C et al (2009) Coding of visual space during motor preparation: approaching objects rapidly modulate corticospinal excitability in hand-centered coordinates. J Neurosci 29:11841–11851

Maravita A, Iriki A (2004) Tools for the body (schema). Trends Cogn Sci 8:79–86. https://doi.org/10.1016/j.tics.2003.12.008

Martel M, Cardinali L, Roy AC, Farnè A (2016) Tool-use: an open window into body representation and its plasticity. Cogn Neuropsychol 33:82–101. https://doi.org/10.1080/02643294.2016.1167678

McNaughton BL, Nadel L (1990) Hebb-Marr networks and the neurobiological representation of space. In: Neuroscience and connectionist theory. Chapter 1, Erlbaum, Hillsdale, NJ, pp 1–63

Noel J-P, Grivaz P, Marmaroli P et al (2015) Full body action remapping of peripersonal space: the case of walking. Neuropsychologia 70:375–384. https://doi.org/10.1016/j.neuropsychologia.2014.08.030

Noel J-P, Blanke O, Magosso E, Serino A (2018) Neural adaptation accounts for the dynamic resizing of peripersonal space: evidence from a psychophysical-computational approach. J Neurophysiol 119:2307–2333

Occelli V, Spence C, Zampini M (2011) Audiotactile interactions in front and rear space. Neurosci Biobehav Rev 35:589–598

Pasqualotto A, Spiller MJ, Jansari AS, Proulx MJ (2013) Visual experience facilitates allocentric spatial representation. Behav Brain Res 236:175–179. https://doi.org/10.1016/j.bbr.2012.08.042

Pellencin E, Paladino MP, Herbelin B, Serino A (2018) Social perception of others shapes one’s own multisensory peripersonal space. Cortex 104:163–179

Rizzolatti G, Fadiga L, Fogassi L, Gallese V (1997) The space around us. Science 277:190–191. https://doi.org/10.1126/science.277.5323.190

Rossetti A, Romano D, Bolognini N, Maravita A (2015) Dynamic expansion of alert responses to incoming painful stimuli following tool use. Neuropsychologia 70:486–494

Salomon R, Noel J-P, Łukowska M et al (2017) Unconscious integration of multisensory bodily inputs in the peripersonal space shapes bodily self-consciousness. Cognition 166:174–183

Schenkman BN, Nilsson ME (2010) Human echolocation: blind and sighted persons’ ability to detect sounds recorded in the presence of a reflecting object. Perception 39:483–501. https://doi.org/10.1068/p6473

Serino A (2016) Variability in multisensory responses predicts the self-space. Trends Cogn Sci 20:169–170

Serino A, Bassolino M, Farnè A, Làdavas E (2007) Extended multisensory space in blind cane users. Psychol Sci 18:642–648. https://doi.org/10.1111/j.1467-9280.2007.01952.x

Serino A, Annella L, Avenanti A (2009) Motor properties of peripersonal space in humans. PLoS One 4(8):e6582

Serino A, Canzoneri E, Marzolla M et al (2015a) Extending peripersonal space representation without tool-use: evidence from a combined behavioral-computational approach. Front Behav Neurosci 9:4. https://doi.org/10.3389/fnbeh.2015.00004

Serino A, Noel J-P, Galli G et al (2015b) Body part-centered and full body-centered peripersonal space representations. Sci Rep 5:18603. https://doi.org/10.1038/srep18603

Supa M, Cotzin M, Dallenbach KM (1944) “Facial Vision”: the perception of obstacles by the blind. Am J Psychol 57:133. https://doi.org/10.2307/1416946

Teneggi C, Canzoneri E, Di Pellegrino G, Serino A (2013) Social modulation of peripersonal space boundaries. Curr Biol 23:406–411. https://doi.org/10.1016/j.cub.2013.01.043

Teng S (2011) The acuity of echolocation: spatial resolution in the sighted compared to expert performance. J Vis Impair Blind 105:20–32

Thaler L, Castillo-Serrano J (2016) People’s ability to detect objects using click-based echolocation: a direct comparison between mouth-clicks and clicks made by a loudspeaker. PLoS One 11:e0154868. https://doi.org/10.1371/journal.pone.0154868

Thaler L, Wilson RC, Gee BK (2014) Correlation between vividness of visual imagery and echolocation ability in sighted, echo-naïve people. Exp Brain Res 232:1915–1925. https://doi.org/10.1007/s00221-014-3883-3

Tonelli A, Brayda L, Gori M (2016) Depth echolocation learnt by novice sighted people. PLoS One 11:e0156654. https://doi.org/10.1371/journal.pone.0156654

Tonelli A, Campus C, Brayda L (2018) How body motion influences echolocation while walking. Sci Rep 8:15704. https://doi.org/10.1038/s41598-018-34074-7

Ursino M, Zavaglia M, Magosso E et al (2007) A neural network model of multisensory representation of peripersonal space: effect of tool use. In: 2007 29th annual international conference of the IEEE engineering in medicine and biology society. IEEE, pp 2735–2739

Vallesi A, Binns MA, Shallice T (2008) An effect of spatial-temporal association of response codes: understanding the cognitive representations of time. Cognition. https://doi.org/10.1016/j.cognition.2007.10.011

Watson AB, Pelli DG (1983) QUEST: a Bayesian adaptive psychometric method. Percept Psychophys 33:113–120

Witt JK, Proffitt DR, Epstein W (2005) Tool use affects perceived distance, but only when you intend to use it. J Exp Psychol Hum Percept Perform 31:880–888

Author information

Contributions

AT and MG conceived and designed the project. CC and AT performed experiments. CC developed stimuli presentation devices. AT and CC analyzed data. AT, CC, AS, and MG wrote and edited the manuscript. All authors gave final approval for publication.

Rights and permissions

OpenAccess This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Tonelli, A., Campus, C., Serino, A. et al. Enhanced audio-tactile multisensory interaction in a peripersonal task after echolocation. Exp Brain Res 237, 855–864 (2019). https://doi.org/10.1007/s00221-019-05469-3

DOI: https://doi.org/10.1007/s00221-019-05469-3