Abstract

Within manufacturing there is a growing need for autonomous Tool Condition Monitoring (TCM) systems with the ability to predict tool wear and failure. This need is greater when using specialised tools such as Diamond-Coated Burrs (DCBs), in which the random nature of the tool and inconsistent manufacturing methods create large variance in tool life. This unpredictability leads to a significant fraction of a DCB tool’s life being underutilised due to premature replacement. Acoustic Emission (AE) in conjunction with Machine Learning (ML) models presents a possible on-machine monitoring technique that could be used to predict DCB wear. Four wear life tests were conducted with a \(\varnothing \)1.3 mm #1000 DCB until failure, in which AE was continuously acquired during grinding passes, followed by surface measurements of the DCB. Three ML model architectures were trained on AE features to predict DCB mean radius, an indicator of overall tool wear. All architectures showed the potential to learn from the dataset, with Long Short-Term Memory (LSTM) models performing best, achieving a prediction error of MSE = 0.559 \(\mu \)m\(^{2}\) after optimisation. Additionally, links between AE kurtosis and the tool’s run-out/form error were identified during an initial review of the data, showing potential for future work to focus on grinding effectiveness as well as overall wear. This paper has shown that AE contains sufficient information to enable on-machine monitoring of DCBs during the grinding process. ML models have been shown to be sufficiently precise in predicting overall DCB wear and have the potential to interpret grinding condition.


1 Introduction

Real-time Tool Condition Monitoring (TCM) is becoming more desirable within the manufacturing industry to combat the costs associated with tool wear and failure. Tool wear impacts not only the machined surface quality and machining precision but also process efficiency, with 20% of machine tool downtime resulting from tool failure [1]. This problem is exacerbated when using specialised tools commonly found within the grinding sector. Diamond-Coated Burrs (DCBs) are used extensively in the manufacture of high-hardness ceramic or glass components with complex forms that prevent the use of traditional grinding wheels. Similarly to traditional machine tooling, DCBs come in a variety of sizes, profiles, grits and construction types. Unlike traditional tooling, however, DCBs show large variation in tool life between tools of the same specification. Because DCBs are small-diameter mono-layer tools, their grinding behaviour changes throughout each tool’s life, and they cannot be dressed to achieve low run-out [2, 3]. Additionally, the electroplating fabrication method causes wheel parameters such as grain density, spacing and protrusion height to follow a normal distribution, in which the standard deviation increases with decreasing tool diameter [4]. These factors and this inherent variation make predictive monitoring of a DCB’s Remaining Usable Life (RUL) difficult without offline measurement. As such, typically only 50-80% of the tool life is utilised [5]. DCBs are categorised by their manufacturing method: electroplated or sintered. Electroplated DCBs are mono-layer tools, in which a single layer of diamond grains is plated to the tool’s core with a nickel bonding material. Fig. 1 shows the diamond grains (black) protruding from the nickel bond material (grey). Because sintered DCBs are more costly above the micro scale, electroplated tools are more widely used.
Utilised as "mill-grinding" tools, DCBs share characteristics with both grinding wheels and end mills. Recent work has shown that DCB tools wear via mechanisms similar to those found in grinding wheels, mainly grain fracture and pullout [6, 7]. Research on monitoring these tools, however, is very limited. Huang et al. [8] is the only study to explore this area, investigating the impact of differing DCB wear states on acquired Acoustic Emission (AE) features. The amplitudes of both the AE time domain signal and specific frequency ranges were shown to be affected by tool wear state, suggesting that these could form the basis of a monitoring system for DCBs. However, with limited tool wear measurements from a single wear test, that work does not show how the tool’s evolution affects AE, nor whether these features are common to multiple DCBs across different tests.

1.1 Background

1.1.1 Grinding wear

Grinding applications have long been a key area of study for TCM systems due to their complex and random nature compared with conventional machining processes. Grinding tool wear results from the cumulative effect of many individual interactions between abrasive grains and the workpiece material [9]. Three main mechanisms dominate the wear process of grinding tools: attritious wear, micro/macro grain fracture and bond fracture [10, 11]. Attritious wear involves the flattening/dulling of abrasive grains when grinding forces are too small to cause fracture. Grain micro-fracture is the favourable wear mechanism, enabling the tool to stay sharp with a slow but consistent wear rate. Grain macro-fracture instead breaks the grain into larger fragments, indicating that complete removal of the grain is imminent, and rapidly accelerates tool wear. Bond wear occurs mainly through removed material debris and can lead to grain pullout [11, 12].

Overall, tool wear results from a combination of all three wear mechanisms, making the grinding tool’s end of life hard to identify. Excessive grinding forces and out-of-tolerance workpieces both suggest that a grinding wheel has reached the end of its life [13]. However, without a skilled operator’s involvement or direct measurement, it is difficult to ascertain a tool’s wear state. As a result, a grinding tool’s RUL is normally quantified by volumetric loss, radial wear or time spent grinding [9, 14]. These three metrics are limited because they are not informed by the current tool’s wear state, potentially leading to large under- or over-predictions of a tool’s RUL.

1.1.2 Sensing methods: acoustic emission

Current tool wear measurement techniques are classified by their sensing methods. Direct methods use optical or physical means to measure tool wear, whereas indirect methods infer wear from a variety of sensors such as AE, vibration, cutting force, spindle power and contact resistance [15]. Whilst indirect methods are typically less precise, they are favoured over direct methods within the manufacturing industry for two main reasons: the difficulty of optical measurement within a machine environment, and the time and cost of measurement [16].

Of the indirect monitoring methods, AE has become one of the most promising and widely used for grinding process monitoring [17]. The AE technique exploits the phenomenon whereby, when a material undergoes a permanent change due to damage, energy is spontaneously released in the form of elastic waves. These high-frequency elastic waves are monitored and recorded to form the basis of AE monitoring. Unlike most other acquisition methods, AE can be generated by very small interactions between the tool and workpiece. Lee [18] identified changes between brittle and ductile regimes in an atomic force microscope-based nanomachining operation with traditional AE analysis techniques, using both AE time and frequency domain features. Pandiyan and Tjahjowidodo [19] identified changing material removal mechanisms (rubbing, ploughing and cutting) of a single aluminium oxide abrasive grain from the Short Time Fourier Transform (STFT) of acquired continuous AE. AE’s high signal-to-noise ratio and sensitivity at the micro scale, both of which stem from its inherently high frequency content (10 kHz to 2.5 MHz), make it desirable compared with other sensing methods, which are typically limited at extremely small depths of cut [20,21,22]. The higher frequency range of AE prevents machine noise from dominating the acquired signals, isolating microscale machining mechanisms within noisy machining environments [23, 24]. AE sensor choice, coupling and location all affect the acquired signals. High-frequency signals such as AE attenuate with distance and across surface boundaries; consequently, AE sensors operate best when mounted as close to the source as possible. Within machine tools, AE sensors are typically bonded directly to the workpiece [25, 26], the work-holding device [17, 27] or the spindle head [28, 29]. It is also possible to acquire AE using coolant as a transmission pathway [30, 31].

AE’s ability to detect microscale deformation mechanisms within noisy machining environments makes it well suited to monitoring machining processes at the precision level, which has led many researchers to use AE for grinding applications. Yesilyurt et al. [17] monitored plunge grinding with AE and were able to identify healthy and burn-damaged conditions using time-frequency processing methods. The sensitivity of AE also allowed changes in operating conditions (depth of cut and infeed rate) to be identified: when cutting conditions were changed, the shape of the frequency spectrum remained consistent but the power at certain peaks was altered. Wan et al. [26] identified highly correlated frequency bands between 0 and 300 kHz in AE signals collected during the grinding of alumina ceramics. Signal features were then extracted, and a random forest optimisation algorithm enabled the prediction of grinding wheel wear states, with a classification accuracy of 90.6% when using features from within these frequency bands. Bi et al. [27] employed linear discriminant analysis (LDA), in which samples of AE from tools of varying wear levels were projected into a low-dimensional feature space. LDA separated the states of wear and, with the addition of sub-states, allowed real-time prediction of wear state based solely on samples from the current grinding wheel.

1.1.3 Monitoring and prediction approaches

To predict traditional grinding wheel wear, a range of methods have been developed, consisting of physics-based models and data-driven/statistical models [32]. Physics-based models require in-depth knowledge of the system to generate models based on the fundamental failure mechanisms. Accurate analytical models are rare due to the complexity and incomplete understanding of the wear process, and as such are typically limited in scope and application. Data-driven models require significant data for training but little expertise about the process [33]. Due to the random nature of grinding processes, statistical or data-driven models are more commonly employed and more successful, as they can easily be updated in real time when the process inherently changes [32, 34]. Additionally, as the volume of data collected to monitor machine tools grows, advances in Machine Learning (ML) and deep learning methods that can exploit this information are highly desirable [35]. ML approaches stand out within the data-driven category, comprising many methods and architectures, all of which allow a model to be trained on raw input data or extracted features. A range of ML architectures have been employed and validated within TCM for a variety of problems. The most popular category is Artificial Neural Networks (ANNs), mainly Multi-Layer Perceptrons (MLPs), Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs). MLP neural networks are a standard yet effective architecture for a range of applications, capable of handling large datasets and multiple inputs. An MLP is a feed-forward neural network comprising at least three fully-connected ("dense") layers: an input layer, one or more hidden layers and an output layer. Abu-Mahfouz [36] demonstrated the capability of an MLP architecture to classify the type and state of wear of twist drills based on vibration signals.
A combination of time and frequency domain features was extracted and used as inputs, leading to a classification rate of 80% for drill wear type. A detection model for grinding wheel burn from AE was developed by Wang et al. [37]. Two groups of AE features, autoregressive features and averaged statistical properties, were selected as inputs to MLP models, from which regions of burn could easily be identified, even when trained on a small dataset. Moia et al. [38] used three AE time domain statistics, including \(AE_{rms}\), to classify grinding wheel condition. AE was acquired during the dressing process, leading to a mean classification error of \(<0.3\)%. However, this application was limited to the binary classification states of dressed or undressed. A different approach was taken by Nakai et al. [39], in which MLP regression models were optimised for radial grinding wheel wear, allowing a continuous range of predictions. The study also compared the effect of differing sensor inputs and features, with the three best-performing models all using only AE-based features. Prediction percentage errors of < 4.8% across three depths of cut were achieved, but because of the required dataset size, training was conducted offline.

CNNs are a widespread and advanced deep learning method, having been successful in computer vision, image recognition and classification tasks. CNNs require two-dimensional input data, which typically takes the form of images. Through three main layer types (convolutional, pooling and fully-connected), CNN models are able to classify 2D data based on similar patterns or features [35, 40]. Gouarir et al. [41] used an encoded representation of each force component as inputs to a single CNN. Force data was taken from a milling operation using a 6 mm ball nose end mill over 315 cutting passes. The CNN was trained to classify the data into three states (rapid initial wear, uniform wear and failure wear), achieving an overall accuracy of 90% without any feature extraction or selection. However, due to the shorter duration of the initial and failure wear states, misclassification rates were higher for data in these categories. Bi et al. [25] used a CNN model for grinding wheel classification based on raw AE waveform inputs. The selected model contained two convolutional layers, a max-pooling layer and two additional fully-connected layers before the output layer, leading to a prediction accuracy of \(>90\%\). This result is of greater interest because the authors used 19 prediction labels, representing 19 sequential wear states, instead of three states. Additionally, Bi et al. [25] visualised the outputs of the two convolutional layers, which indicated that the layers focused on differing frequency ranges of AE (150-200 kHz and 0-50 kHz respectively), showing the capability of CNNs to extract differing AE components from the raw time-domain signal. Recently, CNN models have allowed the direct use of time-frequency domain representations, such as the STFT and various wavelet transform methods, potentially providing a more information-dense input [42,43,44,45].

A limitation of both MLP and CNN models is that they do not account for the temporal nature of certain input data types. RNNs were developed to fill this gap, giving models access to the history of previous inputs. This memory allows RNNs to learn representations across sequenced or time-series data [46]. Long Short-Term Memory (LSTM) models have prevailed as the most useful and applicable RNN, allowing both long-term and short-term dependencies to be captured [47]. LSTMs have been applied to a range of problems, including speech recognition [48], genome modelling [49] and natural language processing [50]. Zhao et al. [32] compared multiple ML architectures against both basic and deep LSTM models for regression of the flank wear of a ball nose end mill. The dataset comprised force and vibration signals collected during milling as inputs, with flank wear, measured offline after every cut, as the desired output. Without feature selection, deep LSTM models (comprising 21-21-28 LSTM cells) performed well on both metrics, with a root mean squared error of 13.73 and a mean absolute error of 10.73 across three datasets. When compared with the entire measured dataset, the trained model was able to predict and follow the trend of wear across the whole cycle. Guo et al. [51] applied a similar experimental procedure to grinding wheel wear. Using a combination of data from force, vibration and acoustic emission sensors, an LSTM model achieved a root mean squared error of 0.240 and an \(\text {R}^{\text {2}}\) score of 0.994. These prediction scores are a large improvement on those of Zhao et al. [32], but the approach requires the selection and processing of input features prior to training.

Based on the presented literature, this is the first paper to apply ML to TCM of this type of tool supported by direct wear measurements. A series of four tool life tests were conducted, allowing the collection of AE and wear measurements throughout a DCB’s life. During all grinding passes, an AE sensor mounted to the workpiece was used as an indirect sensing method and acquired AE throughout, with DCB circumferential surface profiles measured immediately after each pass. A Renishaw NC4 system was used to measure surface profiles within the machine tool, negating the need to remove the tool from the spindle for direct measurements, which is key to maintaining consistent levels of run-out throughout the test. Existing techniques were used to train regression models (MLPs, MLPs with sliding windows and LSTMs) to predict the expected tool wear from AE features. The performance of each model architecture was evaluated and compared using repeated Cross-Validation (CV) techniques. The overarching aim was to validate existing ML techniques for an AE-based TCM system applied to DCB monitoring. Overall, a means of effectively monitoring and predicting DCB tool wear during grinding was demonstrated, with the aim of improving the efficiency and quality of the DCB grinding process whilst reducing costs.

2 Methods

This section presents the methodology used to acquire the relevant AE and tool wear data, as well as the techniques used to develop and evaluate the predictive TCM models.

2.1 Experimental procedure

To gain sufficient data for the supervised learning process, four tool life tests were conducted. Every test started with a new DCB and concluded when total tool failure was detected; in between, the tool repeated the same grinding operation and underwent periodic surface measurements. A Genentech \(\varnothing \)1.3 mm #1000 DCB tool was used in each test, grinding silicon carbide workpieces. To capture a comprehensive dataset, each tool was run until failure, ensuring that data was acquired during the tool’s final wear stage [10, 52]. Tool failure was determined by a reduction in tool length \(\ge \) 0.5 mm between consecutive grinding passes, measured by a Renishaw NC4+ blue optical tool setter [53]; such a reduction indicates complete failure of the tool.
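The failure criterion can be expressed as a simple check over the sequence of tool-length readings. The sketch below assumes the lengths (in mm) reported by the tool setter after each pass are available as a list; the function name `detect_failure_pass` and the example readings are hypothetical.

```python
from typing import Optional, Sequence

FAILURE_DROP_MM = 0.5  # length reduction between consecutive passes treated as failure


def detect_failure_pass(lengths_mm: Sequence[float],
                        threshold_mm: float = FAILURE_DROP_MM) -> Optional[int]:
    """Return the index of the first pass whose tool length dropped by at least
    `threshold_mm` relative to the previous pass, or None if no failure occurred."""
    for i in range(1, len(lengths_mm)):
        if lengths_mm[i - 1] - lengths_mm[i] >= threshold_mm:
            return i
    return None


# Hypothetical tool-length readings (mm): gradual wear, then a sudden 0.62 mm drop.
lengths = [30.000, 29.998, 29.997, 29.995, 29.375]
print(detect_failure_pass(lengths))  # 4
```

Comparing consecutive passes, rather than the current length against the initial length, separates the sudden loss that marks failure from the slow cumulative shortening of normal wear.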

Throughout all four tests, the grinding parameters and tool specifications were kept constant, as detailed in Table 1. As a result, variations in the total number of cuts completed in each test were the effect of differing initial tool conditions or varying wear rates during the test.

To wear the DCB, a side-milling grinding operation was selected, as it allowed easier observation of the workpiece surface post-grinding and is a good representation of a common mill-grinding operation used with DCBs [54,55,56]. Figure 2 shows the side-milling operation with labelled dimensions from Table 1. A cut comprised a single grinding pass across the 20 mm width of the workpiece, L. Grinding was conducted in a Jingdiao VT600 A12S machining centre, operating with a water-based coolant to prevent thermal degradation of the diamond tools.

After every grinding pass, the tool’s surface was measured by the Renishaw NC4+. Circumferential surface profiles were measured without removing or changing the tool, eliminating changes in run-out between the spindle and tool collet. Each circumferential measurement was taken halfway up the axial depth of cut, \(a_p\) (2.5 mm from the end of the tool). This measurement location is recommended by Renishaw as standard operating procedure, minimising the chance of missing the region of interest due to thermal drift or misalignment.

To record the AE, a MISTRAS Wideband Differential (WD) sensor [57] was bonded with silicone sealant to the workpiece’s top surface. Figure 3 shows the position of the AE sensor in relation to the workpiece and grinding face. The mounting position was chosen to minimise signal attenuation without compromising ease of attachment. WD sensors have high sensitivity across a wide bandwidth (100-900 kHz) with minimal drop-off outside this range, which is beneficial when the frequency content of the signal is unknown. During each grinding pass, AE was sampled continuously at 2 MHz by a National Instruments PXI oscilloscope, having been conditioned by a MISTRAS 2/4/6 pre-amplifier prior to acquisition. The pre-amplifier filtered the AE through a 0-1200 kHz bandpass filter and amplified it with a gain of 20 dB. To ensure the AE system was set up correctly and the mounting position was adequate, a Hsu-Nielsen pencil lead break test was conducted before every test, both next to the sensor and at the cutting face [58]. Figure 4 shows the overall layout of equipment within the machine tool.
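As a quick arithmetic check of these acquisition settings, assuming the 20 dB figure refers to voltage gain (the usual convention for AE pre-amplifiers), the gain corresponds to a factor of 10 in amplitude, and the 2 MHz sampling rate places the Nyquist frequency at 1 MHz, above the upper end of the sensor’s 100-900 kHz band:

```latex
G_V = 10^{20/20} = 10, \qquad
f_{\mathrm{Nyquist}} = \frac{f_s}{2} = \frac{2\ \mathrm{MHz}}{2} = 1\ \mathrm{MHz} > 900\ \mathrm{kHz}
```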

2.2 Approach for TCM regression model

Wear tests following the experimental procedure in Section 2.1 record continuous AE during each grinding pass, followed by a measurement of the tool’s wear state, enabling a predictive regression model to be produced with these two measurements as the input and output, respectively. Mean radius was chosen as the regression target from the tool measurements because it is the best indicator of RUL, following the trend of a typical wear cycle, as detailed in Section 3.1. This choice is relevant because volumetric loss, which is closely tied to the reduction in tool radius, is a common RUL metric for grinding wheels within manufacturing [9, 14]. The aim of the developed models was to predict overall DCB wear, represented by the mean tool radius after a given pass, using AE inputs alone.
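One way to reduce a circumferential profile to the regression target is sketched below. It assumes the profile is available as radial readings (µm) at equally spaced angles; taking the peak-to-valley spread as a run-out/form-error proxy is a simplification for illustration, and the function name and profile values are hypothetical.

```python
import numpy as np


def profile_summary(radii_um) -> dict:
    """Summarise one circumferential profile of radial readings (µm).

    mean_radius: average radius around the circumference (the regression target).
    peak_to_valley: max - min radius, a simple proxy for run-out/form error.
    """
    radii_um = np.asarray(radii_um, dtype=float)
    return {
        "mean_radius": float(radii_um.mean()),
        "peak_to_valley": float(radii_um.max() - radii_um.min()),
    }


# Hypothetical profile: nominal 650 µm radius with 3 µm once-per-revolution run-out.
angles = np.linspace(0.0, 2.0 * np.pi, 360, endpoint=False)
profile = 650.0 + 1.5 * np.sin(angles)
print(profile_summary(profile))
```

Averaging over the full circumference makes the mean radius insensitive to run-out, which is why it tracks overall wear while the spread captures form error separately.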

2.2.1 Pre-processing

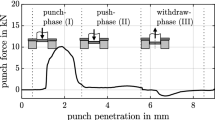

Before the models were trained, the data was first pre-processed, split and normalised. It is common for AE data to be represented as simplified extracted features, acting as a basic method of dimensionality reduction [59]. With careful selection, such extracted features have been shown to contain sufficient information to discriminate between different damage modes and sources [60, 61]. From each AE signal, four time domain and three frequency domain features were extracted. Table 2 shows the extracted time domain features. The partial power in three different 1 kHz frequency bins (10, 35 and 134 kHz) was extracted from the power spectral density (PSD) of each signal. These frequency bins were chosen based on their Pearson correlation coefficient with the target feature. The Fast Fourier Transform (FFT) was used to transform the time domain AE signals into the frequency domain, using a Hanning window of 1000 samples, and the PSD was calculated as the squared modulus of the FFT [62,63,64]. Each feature was calculated only during the period of grinding, shown by the trigger points in Fig. 5.
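The feature extraction can be sketched in NumPy as follows. Table 2 is not reproduced here, so the four time-domain features (RMS, peak, skewness and kurtosis) are assumptions chosen for illustration. The PSD is estimated by averaging the squared FFT modulus of non-overlapping 1000-sample Hann-windowed segments, and each partial power is read from the PSD bin nearest the target frequency; at 2 MHz a 1000-sample window gives 2 kHz bin spacing, so this nearest-bin lookup approximates the 1 kHz bins. The Pearson-based ranking mirrors the bin-selection step. All function names are hypothetical.

```python
import numpy as np

FS = 2_000_000                            # sampling rate (Hz)
NPERSEG = 1000                            # Hann window length (samples)
TARGET_FREQS = (10_000, 35_000, 134_000)  # frequency bins of interest (Hz)


def time_features(x):
    """Illustrative time-domain features (assumed; Table 2 is not reproduced)."""
    x = np.asarray(x, dtype=float)
    centred = x - x.mean()
    std = centred.std()
    return {
        "rms": float(np.sqrt(np.mean(x ** 2))),
        "peak": float(np.max(np.abs(x))),
        "skewness": float(np.mean(centred ** 3) / std ** 3),
        "kurtosis": float(np.mean(centred ** 4) / std ** 4),
    }


def psd(x, fs=FS, nperseg=NPERSEG):
    """One-sided PSD: squared FFT modulus averaged over Hann-windowed segments."""
    x = np.asarray(x, dtype=float)
    window = np.hanning(nperseg)
    n_seg = len(x) // nperseg
    segments = x[: n_seg * nperseg].reshape(n_seg, nperseg) * window
    power = np.mean(np.abs(np.fft.rfft(segments, axis=1)) ** 2, axis=0)
    power /= fs * np.sum(window ** 2)  # scale to power per Hz
    power[1:-1] *= 2                   # fold in negative frequencies (nperseg is even)
    return np.fft.rfftfreq(nperseg, d=1.0 / fs), power


def band_features(x, targets=TARGET_FREQS):
    """Partial power at the PSD bin nearest each target frequency."""
    freqs, power = psd(x)
    return {f"p_{f // 1000}kHz": float(power[np.argmin(np.abs(freqs - f))])
            for f in targets}


def rank_bins_by_pearson(power_matrix, target):
    """Order PSD bins (columns) by |Pearson r| with the target, strongest first.

    power_matrix has one row per grinding pass and one column per frequency bin.
    """
    p = power_matrix - power_matrix.mean(axis=0)
    y = target - target.mean()
    denom = np.sqrt((p ** 2).sum(axis=0) * (y ** 2).sum())
    r = np.divide((p * y[:, None]).sum(axis=0), denom,
                  out=np.zeros(p.shape[1]), where=denom > 0)
    return np.argsort(-np.abs(r))


# Synthetic check: a 10 kHz tone with light noise, 0.1 s at 2 MHz.
rng = np.random.default_rng(0)
t = np.arange(200_000) / FS
signal = np.sin(2 * np.pi * 10_000 * t) + 0.01 * rng.standard_normal(t.size)
features = {**time_features(signal), **band_features(signal)}
```

Restricting `signal` to the samples between the grinding trigger points before calling these functions would correspond to calculating features only during the period of grinding.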

To obtain an unbiased evaluation of each ML model, the dataset was then randomly split into training and validation sets, with 20% of the data reserved solely for validation scoring. The data was then normalised to the range 0-1 using Eq. 1, a common technique when features are of vastly different scales. Importantly, the validation data was scaled using the minimum and maximum values from the training set, avoiding contamination between the two datasets.
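A minimal NumPy sketch of the 80/20 split and the min-max scaling follows, assuming Eq. 1 takes the usual form \(x' = (x - x_{\min})/(x_{\max} - x_{\min})\) with the minimum and maximum computed per feature on the training set only. Function names and the random data are hypothetical.

```python
import numpy as np


def train_val_split(X, y, val_fraction=0.2, seed=0):
    """Randomly hold out `val_fraction` of the samples for validation scoring."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    n_val = int(round(val_fraction * len(X)))
    val, train = idx[:n_val], idx[n_val:]
    return X[train], X[val], y[train], y[val]


def fit_minmax(X_train):
    """Per-feature min and max, taken from the training set only."""
    return X_train.min(axis=0), X_train.max(axis=0)


def apply_minmax(X, x_min, x_max):
    """Min-max scaling; validation data may fall slightly outside [0, 1]."""
    span = np.where(x_max > x_min, x_max - x_min, 1.0)  # guard against constant features
    return (X - x_min) / span


# Hypothetical dataset: 100 passes x 7 AE features on very different scales.
rng = np.random.default_rng(1)
X = rng.random((100, 7)) * np.array([1e-3, 1.0, 10.0, 1e2, 1e-6, 1e-5, 1e-4])
y = rng.random(100)
X_tr, X_val, y_tr, y_val = train_val_split(X, y)
x_min, x_max = fit_minmax(X_tr)
X_tr_s = apply_minmax(X_tr, x_min, x_max)
X_val_s = apply_minmax(X_val, x_min, x_max)
```

Fitting the scaler before splitting would let the validation extremes influence the training inputs, which is the contamination the text warns against.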

2.2.2 Model architecture

With the collected dataset, the frequent wear measurements enabled the problem to be framed as supervised regression rather than the classification techniques more commonly used for TCM. Three different ANN architectures were selected, optimised and evaluated: MLP, MLP with a sliding time window (\(\text {MLP\_WIN}\)) and LSTM.

MLP models are a simple and well-researched form of ANN, and as such are an ideal baseline for comparison with other architectures or novel processing techniques. MLPs are feed-forward networks comprising at least three layers of perceptrons/neurons: the input layer, the hidden layer/s and the output layer. Figure 6 shows a diagrammatic representation of the MLP architecture, displaying each layer and the neuron connections. Each neuron in layer k is connected to every neuron in the previous layer, \(k-1\), forming a fully-connected or "dense" layer, as seen in Fig. 6. Each input to every neuron is separately weighted, before the sum is added to a bias term and passed through an activation function, \(\phi \). The activation function introduces non-linearity into the model, a vital component of MLPs’ success. The output of a fully-connected layer is computed as shown in Eq. 2:
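In the standard form, assumed here to match the symbols defined in the following paragraph and writing \(\varvec{Y}\) for the layer output, the computation is:

```latex
\varvec{Y} = \phi\left(\varvec{X}\varvec{W} + \varvec{b}\right) \tag{2}
```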

Here, \(\varvec{X}\) represents the matrix of inputs to the layer, \(\varvec{W}\) the matrix of weights and \(\varvec{b}\) the bias vector [65]. The choice of activation function, \(\phi \), has a large impact on the hidden layers’ capability to learn representations and on the type of predictions the output layer can produce. There is a wide range of common functions, most notably the logistic sigmoid, the hyperbolic tangent and the Rectified Linear Unit (ReLU) [66]. By optimising \(\varvec{W}\) and \(\varvec{b}\) with a gradient descent algorithm to minimise the loss function, sufficiently complex MLP models can approximate almost any continuous function [67]. In this work, a vector of the seven selected AE features collected during each grinding pass is used as the input to the MLP to predict the subsequent DCB mean radius.
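To make the roles of \(\varvec{W}\), \(\varvec{b}\), \(\phi\) and gradient descent concrete, the following is a minimal NumPy sketch of a one-hidden-layer MLP regressor trained on the MSE loss with full-batch gradient descent. The layer width, learning rate, epoch count, initialisation and synthetic data are illustrative assumptions, not the tuned values from Table 3, and a deep-learning framework would normally be used instead.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def train_mlp(X, y, hidden=16, lr=0.02, epochs=2000, seed=0):
    """Fit y ~ relu(X W1 + b1) W2 + b2 by full-batch gradient descent on the MSE loss."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W1 = rng.normal(0.0, np.sqrt(2.0 / d), (d, hidden))  # He-style initialisation
    b1 = np.zeros(hidden)
    W2 = np.zeros((hidden, 1))  # output layer starts at zero; random W1 breaks symmetry
    b2 = np.zeros(1)
    y = y.reshape(-1, 1)
    losses = []
    for _ in range(epochs):
        # Forward pass: dense hidden layer (phi = ReLU), then a linear output layer.
        z1 = X @ W1 + b1
        h = relu(z1)
        err = h @ W2 + b2 - y
        losses.append(float(np.mean(err ** 2)))
        # Backward pass: gradients of the MSE loss for each weight matrix and bias.
        g_out = 2.0 * err / n
        g_hidden = (g_out @ W2.T) * (z1 > 0)
        grads = (X.T @ g_hidden, g_hidden.sum(axis=0), h.T @ g_out, g_out.sum(axis=0))
        # Gradient-descent update, applied in place to each parameter array.
        for param, grad in zip((W1, b1, W2, b2), grads):
            param -= lr * grad
    return (W1, b1, W2, b2), losses


def predict(params, X):
    W1, b1, W2, b2 = params
    return (relu(X @ W1 + b1) @ W2 + b2).ravel()


# Synthetic stand-in: 7 normalised AE features mapped to a scaled "mean radius".
rng = np.random.default_rng(0)
X = rng.random((200, 7))
y = np.sin(3 * X[:, 0]) + 0.5 * X[:, 1] ** 2
params, losses = train_mlp(X, y)
```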

MLP models contain no feedback connections, in which the outputs of layers or neurons are fed back into themselves. RNNs use such feedback connections, sharing parameters across the model, to work with sequential data. This small inclusion of cyclic connections allows RNNs to map the whole history of previous inputs to each output [68]. Currently, the most effective and widely used architecture for working with sequenced data is the LSTM. Developed by Hochreiter and Schmidhuber [46] to remedy the vanishing gradient problem [69], LSTM models are able to generalise across large numbers of time steps, allowing them to represent both short- and long-term dependencies, a significant improvement on classic RNNs.

Data input to LSTM models is formatted as a 3D matrix of shape (batch size, time steps, input features), where batch size is the number of sequences input from the dataset, time steps is the length of each input feature sequence, and input features is the number of data features used for model training. Of these three parameters, time steps is the most important when optimising LSTM models, as it affects the amount of history the LSTM has to generalise from [70]. Increasing the length of each individual sequence available to the LSTM allows it to generalise longer-term dependencies, at the cost of increasing the number of internal iterations of each LSTM cell [71, 72].
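The sketch below shows one way to build this 3D input from per-pass features. It assumes each wear test yields one 7-element feature vector per pass and that each sequence is labelled with the mean radius measured after its final pass; the function name and this labelling choice are assumptions for illustration.

```python
import numpy as np


def make_sequences(features, targets, time_steps):
    """Turn per-pass features (n_passes, n_features) into overlapping sequences.

    Sequence i holds passes i .. i+time_steps-1 and is labelled with the target
    measured after its last pass. Returns X of shape
    (n_sequences, time_steps, n_features) and y of shape (n_sequences,).
    """
    n_passes = features.shape[0]
    n_seq = n_passes - time_steps + 1
    if n_seq < 1:
        raise ValueError("time_steps exceeds the number of passes")
    X = np.stack([features[i:i + time_steps] for i in range(n_seq)])
    y = targets[time_steps - 1:]
    return X, y


# Hypothetical wear test: 50 passes, 7 AE features per pass.
feats = np.arange(50 * 7, dtype=float).reshape(50, 7)
radius = np.linspace(650.0, 640.0, 50)
X, y = make_sequences(feats, radius, time_steps=5)
print(X.shape, y.shape)  # (46, 5, 7) (46,)
```

Windowing each wear test separately, as here, keeps any sequence from spanning two different tools; a larger `time_steps` gives each sample more history but yields fewer sequences per test.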

An LSTM cell comprises two memory states, a short-term hidden state, \(\varvec{h}_t\), and a long-term cell state, \(\varvec{c}_t\); three sigmoid decision gates (forget, input and output gates); and an additional single-layer ANN. An LSTM can contain multiple layers of many cells, in which every cell recursively loops over each input sequence. Figure 7 shows the internal architecture of an LSTM cell and the recursive process of training.
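A minimal NumPy sketch of one cell looping over a single input sequence follows, implementing the forget, input and output gates and the cell-state and hidden-state updates described in this section. It uses the common formulation in which every gate acts on the concatenation of \(\varvec{h}_{t-1}\) and \(\varvec{x}_t\); the class name, random initialisation and sizes are assumptions, and training (backpropagation through time) is omitted.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class LSTMCell:
    """Minimal LSTM cell (forward pass only) with randomly initialised weights."""

    def __init__(self, n_inputs: int, n_hidden: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        shape = (n_hidden + n_inputs, n_hidden)
        scale = 1.0 / np.sqrt(n_hidden + n_inputs)
        # One weight matrix and bias for each gate and for the tanh layer,
        # all acting on the concatenation [h_{t-1}, x_t].
        self.W = {k: rng.normal(0.0, scale, shape) for k in ("f", "i", "o", "c")}
        self.b = {k: np.zeros(n_hidden) for k in ("f", "i", "o", "c")}
        self.n_hidden = n_hidden

    def step(self, x_t, h_prev, c_prev):
        z = np.concatenate([h_prev, x_t])
        f_t = sigmoid(z @ self.W["f"] + self.b["f"])      # forget gate
        i_t = sigmoid(z @ self.W["i"] + self.b["i"])      # input gate
        o_t = sigmoid(z @ self.W["o"] + self.b["o"])      # output gate
        c_tilde = np.tanh(z @ self.W["c"] + self.b["c"])  # temporary cell state
        c_t = f_t * c_prev + i_t * c_tilde                # updated long-term memory
        h_t = o_t * np.tanh(c_t)                          # short-term memory / output
        return h_t, c_t

    def run(self, sequence):
        """Loop the cell over a (time_steps, n_inputs) sequence; return the final h_t."""
        h = np.zeros(self.n_hidden)
        c = np.zeros(self.n_hidden)
        for x_t in sequence:
            h, c = self.step(x_t, h, c)
        return h


cell = LSTMCell(n_inputs=7, n_hidden=4)
sequence = np.random.default_rng(1).random((5, 7))  # 5 time steps x 7 AE features
h_final = cell.run(sequence)
```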

The three decision gates decide what information within the sequence should be stored, discarded or used. All three gates are single fully-connected layers with a sigmoid activation function, \(\sigma \). Based on the previous time step’s hidden state, \(\varvec{h}_{t-1}\), and the current input vector, \(\varvec{x}_t\), each gate outputs a vector with values between 0 and 1, indicating the irrelevance (0) or relevance (1) of the corresponding values. These gate vectors are then used to scale their respective sequences, resulting in either the removal or persistence of values within the sequence. The forget gate vector, \(\varvec{f}_t\), input gate vector, \(\varvec{i}_t\), and output gate vector, \(\varvec{o}_t\), are calculated with the following equations:
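In the standard LSTM formulation, assumed here and written in the same row-vector convention as Eq. 2, each gate applies its own weights and bias (\(\varvec{W}_f, \varvec{b}_f\), \(\varvec{W}_i, \varvec{b}_i\) and \(\varvec{W}_o, \varvec{b}_o\), illustrative notation) to the concatenation \([\varvec{h}_{t-1}, \varvec{x}_t]\):

```latex
\begin{align}
\varvec{f}_t &= \sigma\left([\varvec{h}_{t-1}, \varvec{x}_t]\,\varvec{W}_f + \varvec{b}_f\right) \tag{3}\\
\varvec{i}_t &= \sigma\left([\varvec{h}_{t-1}, \varvec{x}_t]\,\varvec{W}_i + \varvec{b}_i\right) \tag{4}\\
\varvec{o}_t &= \sigma\left([\varvec{h}_{t-1}, \varvec{x}_t]\,\varvec{W}_o + \varvec{b}_o\right) \tag{5}
\end{align}
```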

Following the recursive flow of sequences within the LSTM cell, shown in Fig. 7, at each time step, t, the previous time step’s cell state, \(\varvec{c}_{t-1}\), is first scaled by the forget gate vector, \(\varvec{f}_t\). This step controls the long-term memory up to the previous time step, allowing the meaning and importance of the long-term history to be modified before additional information is added.

Next, \(\varvec{i}_t\) is used to evaluate which values are going to be stored in the cell state. The additional single-layer ANN, using the hyperbolic tangent function, tanh, analyses both \(\varvec{x}_t\) and \(\varvec{h}_{t-1}\) and outputs the current time step’s temporary cell state, \(\varvec{\tilde{c}}_t\). \(\varvec{\tilde{c}}_t\) is then scaled by \(\varvec{i}_t\) and added to the forget-gate-scaled \(\varvec{c}_{t-1}\) to create the current updated cell state, \(\varvec{c}_t\).
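Writing \([\varvec{h}_{t-1}, \varvec{x}_t]\) for the concatenation of the previous hidden state and current input, taking \(\varvec{W}_c\) and \(\varvec{b}_c\) as the (assumed) weights and bias of the additional tanh layer, and using \(\odot\) for element-wise multiplication, these two operations are commonly written as:

```latex
\begin{align}
\varvec{\tilde{c}}_t &= \tanh\left([\varvec{h}_{t-1}, \varvec{x}_t]\,\varvec{W}_c + \varvec{b}_c\right) \tag{6}\\
\varvec{c}_t &= \varvec{f}_t \odot \varvec{c}_{t-1} + \varvec{i}_t \odot \varvec{\tilde{c}}_t \tag{7}
\end{align}
```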

These two operations are key, as they control the addition of information to the long-term memory of the LSTM cell through both the input gate filtering and the temporary cell state computation.

Finally, \(\varvec{o}_t\) is used to determine which parts of \(\varvec{c}_t\) should be filtered out to create this time step’s main output, \(\varvec{y}_t\), and short-term memory, \(\varvec{h}_t\). Prior to filtering with \(\varvec{o}_t\), \(\varvec{c}_t\) is passed through an additional tanh function to scale the data between -1 and 1.
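With \(\odot\) denoting element-wise multiplication, this final step is commonly written as:

```latex
\varvec{y}_t = \varvec{h}_t = \varvec{o}_t \odot \tanh\left(\varvec{c}_t\right) \tag{8}
```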

Both MLP and LSTM models have distinct benefits and drawbacks. MLP models are highly capable and, due to their relative simplicity, quick to train. However, MLPs lack the ability of LSTM models to generalise across previous inputs, limiting their capability when working with sequenced data. With the aim of combining the benefits of each architecture, the capability of an \(\text {MLP\_WIN}\) [73, 74] was also investigated. By applying a sliding time window to the input data, the same level of history present in each LSTM input can be provided to an MLP model. In contrast to the 3D input data of LSTM models, the \(\text {MLP\_WIN}\) input matrix is reshaped to a 2D shape of (batch size, time steps \(\times \) input features) [75]. The flattened dataset is then used as the input to an MLP model, which, due to the increased amount of available information, typically requires a more complex or deeper architecture.
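In NumPy the flattening is a single reshape. The sketch below assumes a batch already windowed into the 3D LSTM layout; the array sizes are hypothetical.

```python
import numpy as np

# Hypothetical batch of LSTM-style sequences: (batch size, time steps, input features).
batch_size, time_steps, n_features = 46, 5, 7
X_3d = np.random.default_rng(0).random((batch_size, time_steps, n_features))

# MLP_WIN input: each window flattened to one row of time_steps * n_features values,
# ordered pass by pass (all features of the oldest pass first).
X_2d = X_3d.reshape(batch_size, time_steps * n_features)

print(X_2d.shape)  # (46, 35)
```

Because every row now carries 35 inputs instead of 7, the MLP’s first dense layer grows accordingly, which is one reason the windowed model tends to need a larger architecture.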

2.2.3 Optimisation and evaluation

Each model’s hyper-parameters were optimised using the grid search methodology with repeated k-fold CV as the evaluation technique [76, 77]. The hyper-parameter values searched over are specified in Table 3. The performance of each model iteration was evaluated with three regression metrics: mean squared error (MSE), mean absolute error (MAE) and the coefficient of determination (R\(^2\)), from which the best parameters and model architecture can be chosen based on prediction error and repeatability.
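The three metrics can be computed together with a few lines of NumPy, using their standard definitions; the function name and example values below are hypothetical.

```python
import numpy as np


def regression_metrics(y_true, y_pred) -> dict:
    """MAE, MSE and R^2 using their standard definitions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    residuals = y_true - y_pred
    mae = np.mean(np.abs(residuals))
    mse = np.mean(residuals ** 2)
    ss_res = np.sum(residuals ** 2)                  # unexplained variation
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)   # total variation about the mean
    r2 = 1.0 - ss_res / ss_tot
    return {"MAE": float(mae), "MSE": float(mse), "R2": float(r2)}


# Hypothetical mean-radius values (µm) and model predictions.
y_true = [650.0, 648.0, 646.0, 644.0]
y_pred = [649.0, 648.0, 647.0, 644.0]
print(regression_metrics(y_true, y_pred))
```

Here two small errors of 1 µm give MAE and MSE of 0.5, while R² relates the squared error to the spread of the observed radii.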

MAE gives the average absolute difference between the actual and predicted values, and acts as a good representation of a model’s general prediction capability. Equation 9 is used to calculate MAE:

\[ \mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|Y_i-\hat{Y_i}\right| \qquad (9) \]

where \(Y_i\) is the observed value, \(\hat{Y_i}\) is the predicted value and \(n\) is the number of observations. However, MAE is not effective at identifying large errors or outliers, especially when \(n\) is large, because each error contributes only linearly to the average. By squaring each error, MSE heavily penalises large prediction errors and is therefore an effective loss function for regression models [78]. Equation 10 is used to calculate MSE:

\[ \mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(Y_i-\hat{Y_i}\right)^2 \qquad (10) \]

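The R\(^2\) score represents the proportion of the variance in the observed values that is explained by the model; when computed on data not used for training, it therefore indicates how well the model is likely to predict unseen samples. Equation 11 is used to calculate R\(^2\):

\[ R^2 = 1-\frac{\sum_{i=1}^{n}\left(Y_i-\hat{Y_i}\right)^2}{\sum_{i=1}^{n}\left(Y_i-\bar{Y}\right)^2} \qquad (11) \]

where \(\bar{Y}\) is the mean of the observed values.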
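Equations 9 to 11 translate directly into code. The dependency-free Python sketch below implements them and uses two hypothetical prediction sets with the same MAE to show why MSE is better at exposing a few large errors. In practice, library implementations such as scikit-learn's `mean_absolute_error`, `mean_squared_error` and `r2_score` compute the same quantities.

```python
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Equation 9: mean absolute error."""
    return sum(abs(y - p) for y, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Equation 10: mean squared error (squaring weights large errors heavily)."""
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Equation 11: 1 - residual sum of squares / total sum of squares."""
    y_bar = sum(y_true) / len(y_true)
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))
    ss_tot = sum((y - y_bar) ** 2 for y in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical values: both prediction sets have a total absolute error of 4.
y_true = [1.0, 2.0, 3.0, 4.0]
even_errors = [2.0, 3.0, 4.0, 5.0]   # every prediction off by 1
one_outlier = [1.0, 2.0, 3.0, 8.0]   # a single prediction off by 4

print(mae(y_true, even_errors), mae(y_true, one_outlier))  # 1.0 1.0
print(mse(y_true, even_errors), mse(y_true, one_outlier))  # 1.0 4.0
```

The identical MAE but fourfold MSE for the second set mirrors the behaviour later seen in the \(\text {MLP\_WIN}\) scores, where a few large errors inflate MSE.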

Figure 8 shows the optimisation process, in which the training data is used to determine the optimal hyper-parameters during the CV process. Once determined, the best parameters are used to build a new model, which is trained on all of the training data. Final scoring of the model is then conducted on the validation data, and the CV process is repeated with the training data.

Repeated k-fold CV was used wherever CV is mentioned above. K-fold CV involves randomly splitting the data into \(k\) folds, training a model on \((k-1)\) of the folds and using the remaining fold to score it. The process is then repeated, rotating which fold is used as the evaluation data, until every fold has been used once for evaluation. Repeated k-fold CV repeats this whole process \(n\) times, reshuffling the data so that different folds are created in each repetition. The process results in a distribution of scores, whose mean and standard deviation are commonly used as indicators of the model’s overall performance.
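The splitting scheme described above can be sketched in Python as follows. This is an illustrative implementation assuming shuffled, near-equal folds and a fixed random seed; scikit-learn's `RepeatedKFold` (with `n_splits` and `n_repeats`) provides an equivalent, well-tested splitter. The `mean_baseline_cv` scorer is a hypothetical stand-in for training an ANN, used only to show how the distribution of fold scores is summarised by its mean and standard deviation.

```python
import random
from statistics import mean, stdev
from typing import Iterator, List, Sequence, Tuple


def repeated_kfold(n_samples: int, k: int = 10, n_repeats: int = 10,
                   seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_idx, test_idx) for k folds per repeat, reshuffling every repeat."""
    rng = random.Random(seed)
    indices = list(range(n_samples))
    for _ in range(n_repeats):
        rng.shuffle(indices)                         # new random folds each repetition
        folds = [indices[i::k] for i in range(k)]    # k near-equal, disjoint folds
        for i, test_idx in enumerate(folds):
            train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
            yield train_idx, test_idx


def mean_baseline_cv(y: Sequence[float], k: int = 10, n_repeats: int = 10,
                     seed: int = 0) -> Tuple[float, float]:
    """Score a hypothetical 'predict the training mean' model; return (mean, std) of fold MSEs."""
    scores = []
    for train_idx, test_idx in repeated_kfold(len(y), k, n_repeats, seed):
        y_hat = mean(y[j] for j in train_idx)
        scores.append(mean((y[j] - y_hat) ** 2 for j in test_idx))
    return mean(scores), stdev(scores)


splits = list(repeated_kfold(n_samples=50))
print(len(splits))  # 100 train/test splits from 10 repeats x 10 folds
```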

3 Results

Four wear tests were conducted, each resulting in a different number of grinding passes and a different final DCB wear state, as detailed in Table 4. Prior to training the prediction models, the AE and DCB wear measurements were explored with the aim of identifying insights into the wear process or correlations between the two data sources.

3.1 Tool wear

When aligned, the NC4 surface measurements show the degradation of the DCB throughout each wear test. Figure 9 shows the DCB circumferential surface measurements acquired with the NC4 system throughout test 4, from which the degradation of the DCB’s surface during that test can be observed. In Fig. 9, a "crater" can be seen developing as grinding progresses, eventually encompassing half of the DCB’s surface. Surface craters formed in all of the tests conducted, and three of these craters encompassed more than 40% of the tool’s circumference. Large-scale diamond grain pull-out could trigger the rapid development of craters, resulting in the large step heights present on the tool surface. The wear rate is expected to accelerate within the transition regions between the crater troughs and the nominal tool surface, as these areas are more prone to macro-fracture. The final state of each test’s DCB can be seen in Fig. 10. Each tool failed in small localised bands in which the inner core was exposed during grinding.

Figure 11 shows the DCB measurements extracted from the surface profiles. The mean and peak radius of the DCB represent the tool’s RUL, whilst the form error and run-out of the DCB describe the geometric error of the measured surface. The mean and peak radius plots both follow the traditional three-phase wear cycle of machining tools: rapid initial wear-in, followed by a phase of slow, steady wear, and ending with a rapid failure phase that culminates in total tool failure [52, 79]. Compared with the peak radius, the mean radius in Fig. 11 shows a smoother trend whilst still allowing the wear phases to be clearly distinguished, validating the choice of DCB mean radius as the regression target. The formation of surface craters can also be identified in Fig. 11 from the sudden rise in DCB form error seen in all tests. Fig. 11 also shows the large variation in initial tool state and overall tool life between DCBs. The initial run-out varied significantly, with a range of 13 \(\mu \)m between the tests conducted, and although the nominal radius of each tool was 0.65 mm, none of the tools was worn down to that radius before cut 100. During the four tests, it was also observed that the final wear of each tool did not correspond to the total volume of workpiece material removed, as seen in Table 4.
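For readers reproducing the extraction step, the Python sketch below derives four such measures from a circumferential radius profile. It is a hedged illustration only: it assumes the profile is sampled at equally spaced angles, and adopts a common roundness-style decomposition in which run-out is taken as twice the eccentricity given by the first circumferential harmonic, and form error as the peak-to-valley residual after the mean radius and that harmonic are removed. The definitions used for Fig. 11 may differ, and the example profile is hypothetical.

```python
import math
from typing import Dict, Sequence


def profile_metrics(radii: Sequence[float]) -> Dict[str, float]:
    """Wear/geometry measures from radii sampled at equally spaced angles (assumed)."""
    n = len(radii)
    angles = [2.0 * math.pi * j / n for j in range(n)]
    mean_r = sum(radii) / n
    # First-harmonic (once-per-revolution) components, i.e. the tool's eccentricity.
    a1 = 2.0 / n * sum(r * math.cos(t) for r, t in zip(radii, angles))
    b1 = 2.0 / n * sum(r * math.sin(t) for r, t in zip(radii, angles))
    # Whatever remains after removing the mean circle and eccentricity is form deviation.
    residual = [r - mean_r - a1 * math.cos(t) - b1 * math.sin(t)
                for r, t in zip(radii, angles)]
    return {
        "mean_radius": mean_r,
        "peak_radius": max(radii),
        "run_out": 2.0 * math.hypot(a1, b1),
        "form_error": max(residual) - min(residual),
    }


# Hypothetical profile (um): a 650 um radius tool mounted 5 um off-centre, no form error.
profile = [650.0 + 5.0 * math.cos(2.0 * math.pi * j / 360) for j in range(360)]
metrics = profile_metrics(profile)  # mean ~650, peak ~655, run-out ~10, form error ~0
```

Under these assumptions a localised crater barely moves the mean radius but raises the form error sharply, consistent with the sudden form-error rises used above to identify crater formation.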

3.2 Acoustic emission

Analysis of the AE signals was carried out in both the time and frequency domains.

3.2.1 Time domain analysis

Of the extracted time-domain features, the AE root-mean-square (\(AE_{rms}\)) and kurtosis (\(AE_\kappa \)) of the continuous AE signals were of most interest, as both showed potential to be used as DCB condition indicators.

The kurtosis of each AE signal appeared to be intrinsically linked to the DCB form error and run-out. The kurtosis of a signal describes the tailedness of its amplitude distribution; for a continuous AE signal, it rises as a larger proportion of the signal is recorded at or near zero voltage, i.e. as the signal becomes more intermittent. As grinding is assumed to be an uninterrupted process, continuous AE should be produced, implying that an increase in the recorded \(AE_\kappa \) is due to interrupted surface contact between the tool and workpiece. High levels of DCB run-out or form error can lead to interrupted grinding due to loss of contact; however, tool wear could also cause interrupted grinding. Therefore, \(AE_\kappa \) should be seen as an indicator of a DCB’s overall condition, reflecting the combined effect of the tool’s run-out, form error and wear state, rather than as a wear indicator alone. Figure 12 compares \(AE_\kappa \) with the DCB form error and run-out, showing that a change in run-out or form error is often accompanied by a change in \(AE_\kappa \). \(AE_\kappa \) therefore appears to be a good indicator of crater formation or major wear: Fig. 12 shows a change in the \(AE_\kappa \) trend at each crater formation point, identified by the inflection point of the form error plot.

The \(AE_{rms}\) values during the wear tests are shown in Fig. 13. \(AE_{rms}\) also appears to be an indicator of overall tool condition, correlating well with the measured run-out and form error, but unlike \(AE_\kappa \) it indicates the energy present within the AE signal. High levels of run-out or form error will not only create interrupted grinding but will also cause larger bursts of AE to be generated, observed as increased levels of \(AE_{rms}\). This is seen in tests 1 and 2, during which consistently high run-out and form error led to consistently higher \(AE_{rms}\). As with \(AE_\kappa \), rapid changes in form error and run-out can be identified through changes in \(AE_{rms}\): for example, the sharp increases in DCB form error and run-out between cuts 100-150 in test 1 and cuts 75-100 in test 4 of Fig. 11 correspond to similar increases in \(AE_{rms}\) over these regions in Fig. 13.
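The time-domain reasoning for \(AE_\kappa \) and \(AE_{rms}\) can be checked numerically. The Python sketch below uses a hypothetical synthetic signal (sine bursts standing in for AE, zeroed while the tool is assumed to have lost contact), not measured data. The kurtosis convention (non-excess, \(m_4/m_2^2\)) and all function names are assumptions for illustration.

```python
import math
from typing import List, Sequence


def ae_rms(signal: Sequence[float]) -> float:
    """Root-mean-square value: tracks the energy in the signal."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def ae_kurtosis(signal: Sequence[float]) -> float:
    """Non-excess kurtosis m4 / m2**2 about the mean (3.0 for a Gaussian)."""
    n = len(signal)
    mu = sum(signal) / n
    m2 = sum((x - mu) ** 2 for x in signal) / n
    m4 = sum((x - mu) ** 4 for x in signal) / n
    return m4 / m2 ** 2


def synthetic_ae(contact_fraction: float, amplitude: float = 1.0,
                 n_periods: int = 40, samples_per_period: int = 20) -> List[float]:
    """Hypothetical stand-in for AE: sine bursts while in contact, zeros otherwise."""
    in_contact = round(n_periods * contact_fraction)
    return [
        amplitude * math.sin(2.0 * math.pi * s / samples_per_period) if p < in_contact else 0.0
        for p in range(n_periods)
        for s in range(samples_per_period)
    ]


continuous = synthetic_ae(contact_fraction=1.0)
interrupted = synthetic_ae(contact_fraction=0.5)
louder = synthetic_ae(contact_fraction=0.5, amplitude=2.0)
for name, sig in (("continuous", continuous), ("interrupted", interrupted), ("louder", louder)):
    print(f"{name}: kurtosis={ae_kurtosis(sig):.2f}, rms={ae_rms(sig):.3f}")
# continuous: kurtosis=1.50, rms=0.707
# interrupted: kurtosis=3.00, rms=0.500
# louder: kurtosis=3.00, rms=1.000
```

In this toy case, halving the contact time doubles the kurtosis, whereas doubling the burst amplitude leaves the kurtosis unchanged but doubles the RMS. This mirrors the distinction between \(AE_\kappa \), which responds to intermittency, and \(AE_{rms}\), which tracks signal energy.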

3.2.2 Frequency domain analysis

Figure 14 shows the FFT of a single measured AE signal from each wear test, and Fig. 15 shows the spectrogram throughout each test’s duration. All four FFTs show a similar trend, differing in amplitude at certain frequencies. Importantly, peaks and troughs occur at consistent frequencies across the four tests, for example at 100, 240, 291 and 520 kHz, implying that a similar process generated the AE in each test. The observed decrease in amplitude with increasing frequency is expected, owing to the higher attenuation rate of high-frequency signals over the distance between source and sensor. As seen in Fig. 15, the amplitude at a given frequency is not constant during the wear test, suggesting that the frequency content of the AE contains relevant information about the tool’s changing wear level throughout the test. Compared with the investigated AE time-domain features, the AE frequency-domain features showed a much higher level of correlation with the DCB wear metrics. In particular, the 35 kHz frequency band, from the PSD in Fig. 15, had a Pearson correlation coefficient of 0.77 with DCB mean radius. The reduction in radius of each tool during steady-state wear could be observed from the selected frequency-domain partial powers. However, when the tool underwent unpredictable or large changes, such as within the initial and rapid failure wear stages, the frequency features did not correlate with any wear metric.

This lack of responsiveness to rapid changes would be a major limitation of a solely frequency-based TCM system. Combining the AE time-domain features, which appear to indicate the changing run-out and form error of each DCB, with the frequency partial powers in a single TCM system was intended to enable accurate wear predictions across the whole tool wear cycle.
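The partial-power and correlation analysis of the frequency domain can be sketched as follows. The sketch assumes that a band's partial power is the fraction of total spectral power falling within that band, computed here from a one-sided periodogram via a direct DFT so that it needs no dependencies; a real pipeline would use an FFT such as `numpy.fft.rfft`, or a Welch PSD estimate. The sampling rate, test tone and band limits are hypothetical and far below the AE frequencies discussed above.

```python
import cmath
import math
from typing import List, Sequence, Tuple


def power_spectrum(signal: Sequence[float], fs: float) -> Tuple[List[float], List[float]]:
    """One-sided periodogram from a direct O(N^2) DFT; returns (freqs, power)."""
    n = len(signal)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        X_k = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        freqs.append(k * fs / n)
        power.append(abs(X_k) ** 2 / n)
    return freqs, power


def partial_power(signal: Sequence[float], fs: float, f_lo: float, f_hi: float) -> float:
    """Fraction of total spectral power in [f_lo, f_hi) (assumed definition)."""
    freqs, power = power_spectrum(signal, fs)
    band = sum(p for f, p in zip(freqs, power) if f_lo <= f < f_hi)
    return band / sum(power)


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


fs = 1000.0  # hypothetical sampling rate (Hz), kept small so the direct DFT is quick
tone = [math.sin(2.0 * math.pi * 100.0 * t / fs) for t in range(200)]
band_share = partial_power(tone, fs, 90.0, 110.0)  # power concentrated near 100 Hz
```

Applied per grinding pass, one partial-power value per recorded signal could then be correlated with the measured mean radius, e.g. `pearson(band_powers, mean_radii)`, where both lists are hypothetical per-pass series.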

3.3 ML models

Three predictive ANN architectures were trained and optimised based on the models and processes detailed in Section 2.2. Repeated k-fold CV was used with 10 repeats, each consisting of 10 splits, as recommended by current literature [76, 77], so that comparisons of hyper-parameters were based on the scores of 100 trained models for each combination. The hyper-parameters that yielded the highest-scoring and most consistent predictions for each model are detailed in Table 5. Consistency of scores during CV was prioritised over small average performance gains, as repeatability is a key factor in large-scale manufacturing. Interestingly, the \(\text {MLP\_WIN}\) performed worse with increasing sequence length, unlike the LSTM, which improved significantly with small increases in sequence length. This could signify that the \(\text {MLP\_WIN}\) is unsuitable for time-series inputs, or that increases in sequence length require significant increases in model depth or complexity. A significant improvement to the LSTM models came from adding a final dense (fully connected) layer after the LSTM layers. Figure 16 displays a sample loss plot for each optimised model architecture, showing that all models converged before 3000 epochs.
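The paper does not give an explicit rule for trading mean score against consistency, so the following Python sketch shows one hypothetical way to encode the preference for consistency over small average gains: among the hyper-parameter sets whose mean CV MSE lies within a relative tolerance of the best, pick the one with the smallest CV standard deviation. The 5% tolerance and the example scores are assumptions, not values from Table 5.

```python
from typing import Dict, List, Tuple

# (hyper-parameters, mean CV MSE, standard deviation of CV MSE)
Result = Tuple[Dict[str, float], float, float]


def select_consistent(results: List[Result], rel_tolerance: float = 0.05) -> Result:
    """Prefer the lowest CV spread among candidates close to the best mean MSE."""
    best_mean = min(mean_mse for _, mean_mse, _ in results)
    shortlist = [r for r in results if r[1] <= best_mean * (1.0 + rel_tolerance)]
    # Smallest standard deviation wins; mean MSE breaks ties.
    return min(shortlist, key=lambda r: (r[2], r[1]))


# Hypothetical CV summaries for three candidate configurations.
candidates: List[Result] = [
    ({"time_steps": 1}, 0.50, 0.20),  # best mean, but erratic across folds
    ({"time_steps": 3}, 0.52, 0.05),  # 4% worse on average, far more consistent
    ({"time_steps": 5}, 0.70, 0.01),  # very consistent, but clearly less accurate
]
chosen = select_consistent(candidates)
print(chosen[0])  # {'time_steps': 3}
```

Setting the tolerance to zero recovers a pure best-mean selection, while a very large tolerance ignores accuracy altogether; the value chosen encodes how much average error is acceptable in exchange for repeatability.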

Table 6 shows the scores of all three optimised models when evaluated against the training data during CV and against the corresponding validation data. The prediction scores show that all three model architectures are capable of learning and predicting DCB wear solely from AE features. From the scores in Table 6, the \(\text {MLP\_WIN}\) model clearly produces the worst prediction accuracy of the three models, scoring worst in five of the six metrics. The model performs well on average, as indicated by its reasonable MAE score, but occasionally produces a small number of large errors that inflate its MSE score. Figure 17 shows each model’s predictions on the validation dataset compared with the true measured values, and clearly shows these anomalous predictions from the \(\text {MLP\_WIN}\) model. The LSTM and MLP models predict to a similar level of accuracy on the training sets, but the LSTM architecture achieves a higher level of precision on the validation set. This difference is likely the result of the LSTM’s superior generalisation ability: a model that generalises its training input data effectively will score well on both previously seen and unseen input data. The recurrent nature and cell memory of LSTM models allow them to excel at generalising time-sequence data [80], which is reflected in the LSTM architecture achieving the best score in every validation metric. Similar LSTM prediction accuracies have been reported in other TCM applications, with R\(^2\) scores of 0.994-0.999 achieved by similar LSTM architectures in high-speed turning [81], milling of carbon fibre reinforced plastic [82] and surface grinding [51]. Nevertheless, all three models generalised sufficiently to achieve similar error levels on the training and validation data.
A well-trained ANN must not only generalise the input data and reach a high prediction accuracy, but also produce these scores repeatably when the training data is modified slightly. The stability of a model is therefore crucial. Repeated k-fold CV also allowed each model’s stability to be evaluated, as the distribution of training scores throughout the CV process was monitored for each iteration. This provides an additional metric for each model, included in Table 6: the standard deviation of each training metric during CV. The LSTM architecture showed the lowest standard deviation across all three training metrics, with the MLP architecture showing a similar level of stability. A limitation of the LSTM model is its significantly slower training, up to 12 times slower per epoch, which, depending on the industrial application, could be a major disadvantage for an on-line system. A drawback of both the MLP and \(\text {MLP\_WIN}\) architectures, which is not obvious from their scores in Table 6 alone, is their poorer predictions outside the steady-state wear phase. This is likely a compounded effect of the limited number of samples within the rapid failure phase of wear and these models’ poorer generalisation over the whole dataset. The LSTM models did not show this behaviour, instead predicting with a similar level of error throughout the whole range of inputs. Nakai et al. [39] demonstrated an improved level of prediction capability with MLP models using AE features when investigating traditional surface grinding operations: MAE values of \(<0.25\) \(\mu \)m \(\pm 0.650\) were achieved across three levels of depth of cut by an MLP model with three hidden layers, suggesting that MLP models remain a highly effective architecture. Figure 18 compares the prediction ability of each model when predicting in sequence.
It clearly shows the improved prediction capability of the LSTM models for this application, whose predictions show little noise relative to the actual measured values, in contrast to those of the other architectures.

4 Conclusion

This paper presents a viable process for the indirect monitoring and prediction of electroplated DCB tool wear using AE as a sensing technique. Through four wear tests, both AE and tool surface measurements were obtained in order to train a regression ANN capable of predicting the measured DCB mean radius from seven extracted AE features. Three ML model architectures were trained, optimised and compared, with LSTM models performing best, achieving an MSE prediction error of \(<0.6\) µm².

The experimental procedure enabled the collection of AE alongside tool surface measurements during a grinding-based tool wear test. Four wear tests were conducted with constant grinding parameters, in which a \(\varnothing \)1.3 mm #1000 DCB was worn by grinding a SiC workpiece. In situ tool wear measurements with a Renishaw NC4+ blue showed that the DCB tools follow a traditional three-phase wear cycle, but also revealed large variation in run-out and form error, both initially and as the tests progressed.

Following processing of the AE signals, links were observed between the AE features \(AE_\kappa \) and \(AE_{rms}\) and the DCB surface measurements of run-out and form error, suggesting that both AE features are indicators of overall DCB condition rather than absolute tool wear. Frequency domain analysis showed varying levels of amplitude throughout the tests. The FFTs from each test showed similar trends, with local maxima occurring within the same frequency bands, implying a comparable AE generation mechanism in each test. Seven AE features were extracted from each recorded signal, forming the input for ANN training.

Three different ML architectures were chosen for comparison: MLP, \(\text {MLP\_WIN}\) and LSTM. Each regression model was trained to predict the measured DCB mean radius, a representation of the tool’s RUL, and each model’s hyper-parameters were optimised using grid search with repeated k-fold CV for evaluation. Final evaluation results showed that LSTM models produced the least variation during CV on the training dataset and scored best in all metrics on unseen validation data, with the optimised LSTM model achieving a prediction MSE of 0.559 µm². Whilst outscoring the other models, the LSTM models took roughly 10 times longer to train per epoch. Generalisation was also achieved by both the MLP and \(\text {MLP\_WIN}\) models, but owing to their limitations when working with time-series data, both achieved lower prediction scores. With further tests and a larger training dataset, both models could see significant improvements in scoring metrics.

Future extensions of this work could include the study of DCB wear under a variety of grinding parameters, tool specifications and workpiece materials, to ensure that the processes and techniques are consistent across a broad range of scenarios. Additionally, the models could be expanded to predict tool run-out and form error, producing a well-rounded monitoring technique encompassing both wear and tool condition. Further input features could be extracted from the AE with more robust and automatic feature extraction techniques, such as those commonly found in image classification problems.

References

Kurada S, Bradley C (1997) A review of machine vision sensors for tool condition monitoring. Comput Indust 34(1):55–72. https://doi.org/10.1016/S0166-3615(96)00075-9

Ohnishi O, Suzuki H, Uhlmann E, Schröer N, Sammler C, Spur G, Weismiller M (2015) Chapter 4 - Grinding. In: Marinescu ID, Doi TK, Uhlmann E (eds.) Handbook of ceramics grinding and polishing. William Andrew Publishing, Boston, pp. 133–233. https://doi.org/10.1016/B978-1-4557-7858-4.00004-2

Rowe WB (2014) 3 - Grinding Wheel Developments. In: Rowe WB (ed.) Principles of modern grinding technology (Second Edition). William Andrew Publishing, Oxford, pp. 35–62. https://doi.org/10.1016/B978-0-323-24271-4.00003-8

Wang C, Gong Y, Cheng J, Wen X, Zhou Y (2016) Fabrication and evaluation of micromill-grinding tools by electroplating CBN. Int J Adv Manufac Technol 87(9):3513–3526. https://doi.org/10.1007/s00170-016-8730-1

Li Y, Liu C, Hua J, Gao J, Maropoulos P (2019) A novel method for accurately monitoring and predicting tool wear under varying cutting conditions based on meta-learning. CIRP Annals 68(1):487–490. https://doi.org/10.1016/j.cirp.2019.03.010

Ben-Hanan U, Judes H, Regev M (2008) Comparative study of three different types of dental diamond burs. Tribol - Mater Surf Interfaces 2(2):77–83. https://doi.org/10.1179/175158308X373054

Regev M, Judes H, Ben-Hanan U (2010) Wear mechanisms of diamond coated dental burs. Tribol - Mater Surf Interfaces 4(1):38–42. https://doi.org/10.1179/175158310X12626998129798

Huang W, Li Y, Wu X, Shen J (2023) The wear detection of mill-grinding tool based on acoustic emission sensor. Int J Adv Manufac Technol 124(11):4121–4130. https://doi.org/10.1007/s00170-022-09058-7

Fathima K, Senthil Kumar A, Rahman M, Lim HS (2003) A study on wear mechanism and wear reduction strategies in grinding wheels used for ELID grinding. Wear 254(12):1247–1255. https://doi.org/10.1016/S0043-1648(03)00078-4

Badger JA (2012) Microfracturing ceramic abrasive in grinding. In: MSEC2012, ASME 2012 International Manufacturing Science and Engineering Conference, pp. 1115–1123. https://doi.org/10.1115/MSEC2012-7324

Azarhoushang B, Daneshi A (2022) 13 - Mechanisms of tool wear. In: Azarhoushang B, Marinescu ID, Brian Rowe W, Dimitrov B, Ohmori H (eds.) Tribology and fundamentals of abrasive machining processes (Third Edition). William Andrew Publishing, pp. 539–554. https://doi.org/10.1016/B978-0-12-823777-9.00020-3

Rowe WB (2014) 5 - Wheel contact and wear effects. In: Rowe WB (ed.) Principles of modern grinding technology (Second Edition). William Andrew Publishing, Oxford, pp. 83–99. https://doi.org/10.1016/B978-0-323-24271-4.00005-1

Hassui A, Diniz AE, Oliveira JFG, Felipe J, Gomes JJF (1998) Experimental evaluation on grinding wheel wear through vibration and acoustic emission. Wear 217(1):7–14. https://doi.org/10.1016/S0043-1648(98)00166-5

Shi Z, Malkin S (2005) Wear of electroplated CBN grinding wheels. J Manufac Sci Eng 128(1):110–118. https://doi.org/10.1115/1.2122987

Dimla DE (2000) Sensor signals for tool-wear monitoring in metal cutting operations—a review of methods. Int J Mach Tools Manufac 40(8):1073–1098. https://doi.org/10.1016/S0890-6955(99)00122-4

Li Z, Liu R, Wu D (2019) Data-driven smart manufacturing: tool wear monitoring with audio signals and machine learning. J Manufac Process 48:66–76. https://doi.org/10.1016/j.jmapro.2019.10.020

Yesilyurt I, Dalkiran A, Yesil O, Mustak O (2022) Scalogram-based instantaneous features of acoustic emission in grinding burn detection. J Dyn Monit Diagn 1(1):19–28. https://doi.org/10.37965/jdmd.2021.49

Lee SH (2012) Analysis of ductile mode and brittle transition of AFM nanomachining of silicon. Int J Mach Tools Manufac 61:71–79. https://doi.org/10.1016/j.ijmachtools.2012.05.011

Pandiyan V, Tjahjowidodo T (2019) Use of acoustic emissions to detect change in contact mechanisms caused by tool wear in abrasive belt grinding process. Wear 436–437:203047. https://doi.org/10.1016/j.wear.2019.203047

Ferrando Chacón JL, Fernández de Barrena T, García A, Sáez de Buruaga M, Badiola X, Vicente J (2021) A novel machine learning-based methodology for tool wear prediction using acoustic emission signals. Sensors 21(17):5984. https://doi.org/10.3390/s21175984

Shah M, Vakharia V, Chaudhari R, Vora J, Pimenov DY, Giasin K (2022) Tool wear prediction in face milling of stainless steel using singular generative adversarial network and LSTM deep learning models. Int J Adv Manufac Technol 121(1):723–736. https://doi.org/10.1007/s00170-022-09356-0

Haber RE, Jiménez JE, Peres CR, Alique JR (2004) An investigation of tool-wear monitoring in a high-speed machining process. Sensors and Actuators A: Physical 116(3):539–545. https://doi.org/10.1016/j.sna.2004.05.017

Dornfeld DA, Lee Y, Chang A (2003) Monitoring of ultraprecision machining processes. Int J Adv Manufac Technol 21(8):571–578

Han X, Wu T (2013) Analysis of acoustic emission in precision and high-efficiency grinding technology. Int J Adv Manufac Technol 67(9):1997–2006. https://doi.org/10.1007/s00170-012-4626-x

Bi G, Liu S, Su S, Wang Z (2021) Diamond grinding wheel condition monitoring based on acoustic emission signals. Sensors 21(4):1054. https://doi.org/10.3390/s21041054

Wan L, Zhang X, Zhou Q, Wen D, Ran X (2023) Acoustic emission identification of wheel wear states in engineering ceramic grinding based on parameter-adaptive VMD. Ceram Int 49(9, Part A):13618–13630. https://doi.org/10.1016/j.ceramint.2022.12.238

Bi G, Zheng S, Zhou L (2021) Online monitoring of diamond grinding wheel wear based on linear discriminant analysis. Int J Adv Manufac Technol 115(7):2111–2124. https://doi.org/10.1007/s00170-021-07190-4

Wan B-S, Lu M-C, Chiou S-J (2022) Analysis of spindle AE signals and development of AE-based tool wear monitoring system in micro-milling. J Manufac Mater Process 6(2):42. https://doi.org/10.3390/jmmp6020042

Murakami H, Katsuki A, Sajima T, Uchiyama K, Houda K, Sugihara Y (2021) Spindle with built-in acoustic emission sensor to realize contact detection. Precis Eng 70:26–33. https://doi.org/10.1016/j.precisioneng.2021.01.017

Rowe WB, Chen X, Allanson DR (1997) The coolant coupling method applied to touch dressing in high frequency internal grinding. In: Kochhar AK, Atkinson J, Barrow G, Burdekin M, Hannam RG, Hinduja S, Brunn P, Li L (eds.) Proceedings of the Thirty-Second International Matador Conference. Macmillan Education UK, London, pp. 337–340. https://doi.org/10.1007/978-1-349-14620-8_53

Inasaki I (1998) Application of acoustic emission sensor for monitoring machining processes. Ultrasonics 36(1):273–281. https://doi.org/10.1016/S0041-624X(97)00052-8

Zhao R, Wang J, Yan R, Mao K (2016) Machine health monitoring with LSTM networks. In: 2016 10th International Conference on Sensing Technology (ICST), pp. 1–6. https://doi.org/10.1109/ICSensT.2016.7796266

De Barrena TF, Ferrando JL, García A, Badiola X, de Buruaga MS, Vicente J (2023) Tool remaining useful life prediction using bidirectional recurrent neural networks (BRNN). Int J Adv Manufac Technol 125(9):4027–4045. https://doi.org/10.1007/s00170-023-10811-9

An Q, Tao Z, Xu X, El Mansori M, Chen M (2020) A data-driven model for milling tool remaining useful life prediction with convolutional and stacked LSTM network. Meas 154:107461. https://doi.org/10.1016/j.measurement.2019.107461

Serin G, Sener B, Ozbayoglu AM, Unver HO (2020) Review of tool condition monitoring in machining and opportunities for deep learning. Int J Adv Manufac Technol 109(3):953–974. https://doi.org/10.1007/s00170-020-05449-w

Abu-Mahfouz I (2003) Drilling wear detection and classification using vibration signals and artificial neural network. Int J Mach Tools Manufac 43(7):707–720. https://doi.org/10.1016/S0890-6955(03)00023-3

Wang Z, Willett P, DeAguiar PR, Webster J (2001) Neural network detection of grinding burn from acoustic emission. Int J Mach Tools Manufac 41(2):283–309. https://doi.org/10.1016/S0890-6955(00)00057-2

Moia DFG, Thomazella IH, Aguiar PR, Bianchi EC, Martins CHR, Marchi M (2015) Tool condition monitoring of aluminum oxide grinding wheel in dressing operation using acoustic emission and neural networks. J Brazilian Soc Mech Sci Eng 37(2):627–640. https://doi.org/10.1007/s40430-014-0191-6

Nakai ME, Aguiar PR, Guillardi H, Bianchi EC, Spatti DH, D’Addona DM (2015) Evaluation of neural models applied to the estimation of tool wear in the grinding of advanced ceramics. Exp Syst Appl 42(20):7026–7035. https://doi.org/10.1016/j.eswa.2015.05.008

Gu J, Wang Z, Kuen J, Ma L, Shahroudy A, Shuai B, Liu T, Wang X, Wang G, Cai J, Chen T (2018) Recent advances in convolutional neural networks. Patt Recognit 77:354–377. https://doi.org/10.1016/j.patcog.2017.10.013

Gouarir A, Martínez-Arellano G, Terrazas G, Benardos P, Ratchev S (2018) In-process tool wear prediction system based on machine learning techniques and force analysis. Procedia CIRP 77:501–504. https://doi.org/10.1016/j.procir.2018.08.253

Zhang Y, Qi X, Wang T, He Y (2023) Tool wear condition monitoring method based on deep learning with force signals. Sensors 23(10):4595. https://doi.org/10.3390/s23104595

Li Z, Liu X, Incecik A, Gupta MK, Królczyk GM, Gardoni P (2022) A novel ensemble deep learning model for cutting tool wear monitoring using audio sensors. J Manufac Process 79:233–249. https://doi.org/10.1016/j.jmapro.2022.04.066

Duan J, Duan J, Zhou H, Zhan X, Li T, Shi T (2021) Multi-frequency-band deep CNN model for tool wear prediction. Meas Sci Technol 32(6):065009. https://doi.org/10.1088/1361-6501/abb7a0

Cao X, Chen B, Yao B, Zhuang S (2019) An intelligent milling tool wear monitoring methodology based on convolutional neural network with derived wavelet frames coefficient. Appl Sci 9(18):3912. https://doi.org/10.3390/app9183912

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780. https://doi.org/10.1162/neco.1997.9.8.1735

Yu Y, Si X, Hu C, Zhang J (2019) A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput 31(7):1235–1270. https://doi.org/10.1162/neco_a_01199

Graves A, Jaitly N, Mohamed A-r (2013) Hybrid speech recognition with deep bidirectional LSTM. In: 2013 IEEE Workshop on Automatic Speech Recognition and Understanding, pp. 273–278. https://doi.org/10.1109/ASRU.2013.6707742

Tavakoli N (2019) Modeling genome data using bidirectional LSTM. In: 2019 IEEE 43rd Annual Computer Software and Applications Conference (COMPSAC), vol. 2, pp. 183–188. https://doi.org/10.1109/COMPSAC.2019.10204

Chen Q, Zhu X, Ling Z, Wei S, Jiang H, Inkpen D (2016) Enhanced LSTM for natural language inference. arXiv:1609.06038v3, https://doi.org/10.18653/v1/P17-1152

Guo W, Li B, Zhou Q (2019) An intelligent monitoring system of grinding wheel wear based on two-stage feature selection and long short-term memory network. Proc Inst Mech Eng Part B: J Eng Manufac 233(13):2436–2446. https://doi.org/10.1177/0954405419840556

Marinescu ID, Hitchiner M, Uhlmann E, Rowe WB, Inasaki I (2007) Handbook of machining with grinding wheels. Manufacturing Engineering And Materials Processing. Taylor & Francis Group

Renishaw plc: Renishaw: NC4. http://www.renishaw.com/en/high-accuracy-laser-tool-setting-systems--6099

Gong H, Fang FZ, Hu XT (2010) Kinematic view of tool life in rotary ultrasonic side milling of hard and brittle materials. Int J Mach Tools Manufac 50(3):303–307. https://doi.org/10.1016/j.ijmachtools.2009.12.006

Tian Y, Yang L (2022) Multi-dimension tool wear state assessment criterion on the spiral edge of the milling cutter. Int J Adv Manufac Technol 119(11):8243–8256. https://doi.org/10.1007/s00170-021-08539-5

Buj-Corral I, Vivancos-Calvet J, González-Rojas H (2013) Roughness variation caused by grinding errors of cutting edges in side milling. Mach Sci Technol 17(4):575–592. https://doi.org/10.1080/10910344.2013.837350

Group M (2022) WD - 100-900 kHz wideband differential AE sensor. https://www.physicalacoustics.com/by-product/sensors/WD-100-900-kHz-Wideband-Differential-AE-Sensor

ASTM (2017) Standard guide for mounting piezoelectric acoustic emission sensors. ASTM Int’l

Muir C, Almansour A, Sevener K, Smith C, Presby M, Kiser J, Pollock T, Daly S (2021) Damage mechanism identification in composites via machine learning and acoustic emission. npj Comput Mater 7:95. https://doi.org/10.1038/s41524-021-00565-x

de Groot PJ, Wijnen PAM, Janssen RBF (1995) Real-time frequency determination of acoustic emission for different fracture mechanisms in carbon/epoxy composites. Compos Sci Technol 55(4):405–412. https://doi.org/10.1016/0266-3538(95)00121-2

Morscher GN (1999) Modal acoustic emission of damage accumulation in a woven SiC/SiC composite. Compos Sci Technol 59(5):687–697. https://doi.org/10.1016/S0266-3538(98)00121-3

Cooley JW, Lewis PAW, Welch PD (1969) The fast Fourier transform and its applications. IEEE Trans Educ 12(1):27–34. https://doi.org/10.1109/TE.1969.4320436

Duspara M, Sabo K, Stoić A (2014) Acoustic emission as tool wear monitoring. Tehnički vjesnik 21(5):1097–1101

Pandiyan V, Drissi-Daoudi R, Shevchik S, Masinelli G, Logé R, Wasmer K (2020) Analysis of time, frequency and time-frequency domain features from acoustic emissions during laser powder-bed fusion process. Procedia CIRP 94:392–397. https://doi.org/10.1016/j.procir.2020.09.152

Géron A (2022) Hands-on machine learning with Scikit-Learn, Keras, and TensorFlow. O’Reilly Media, Inc.

Ding B, Qian H, Zhou J (2018) Activation functions and their characteristics in deep neural networks. In: 2018 Chinese Control And Decision Conference (CCDC), pp. 1836–1841. https://doi.org/10.1109/CCDC.2018.8407425

Maiorov V, Pinkus A (1999) Lower bounds for approximation by MLP neural networks. Neurocomput 25(1):81–91. https://doi.org/10.1016/S0925-2312(98)00111-8

Graves A (2012) Supervised sequence labelling with recurrent neural networks. Stud Comput Intell, vol. 385. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-24797-2

Hochreiter S (1998) The vanishing gradient problem during learning recurrent neural nets and problem solutions. Int J Uncertain Fuzziness Knowl-Based Syst 06(02):107–116. https://doi.org/10.1142/S0218488598000094

Sundermeyer M, Schlüter R, Ney H (2012) LSTM neural networks for language modeling. In: Thirteenth Annual Conference of the International Speech Communication Association

Boulmaiz T, Guermoui M, Boutaghane H (2020) Impact of training data size on the LSTM performances for rainfall-runoff modeling. Model Earth Syst Environ 6(4):2153–2164. https://doi.org/10.1007/s40808-020-00830-w

Bao X, Xu Y, Kamavuako EN (2022) The effect of signal duration on the classification of heart sounds: a deep learning approach. Sensors 22(6):2261. https://doi.org/10.3390/s22062261

Graves A, Schmidhuber J (2005) Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Netw 18(5):602–610. https://doi.org/10.1016/j.neunet.2005.06.042

Vafaeipour M, Rahbari O, Rosen MA, Fazelpour F, Ansarirad P (2014) Application of sliding window technique for prediction of wind velocity time series. Int J Energy Environ Eng 5(2):105. https://doi.org/10.1007/s40095-014-0105-5

Izzeldin H, Asirvadam VS, Saad N (2011) Online sliding-window based for training MLP networks using advanced conjugate gradient. In: 2011 IEEE 7th International Colloquium on Signal Processing and Its Applications, pp. 112–116. https://doi.org/10.1109/CSPA.2011.5759854

Bouckaert RR (2003) Choosing between two learning algorithms based on calibrated tests. In: Proceedings of the Twentieth International Conference on International Conference on Machine Learning. ICML’03, AAAI Press, Washington, DC, USA, pp. 51–58

Witten IH, Frank E, Hall MA (2011) Chapter 5 - Credibility: evaluating what’s been learned. In: Witten IH, Frank E, Hall MA (eds.) Data Mining: Practical Machine Learning Tools and Techniques (Third Edition). The Morgan Kaufmann Series in Data Management Systems. Morgan Kaufmann, Boston, pp. 147–187. https://doi.org/10.1016/B978-0-12-374856-0.00005-5

Wang Q, Ma Y, Zhao K, Tian Y (2022) A comprehensive survey of loss functions in machine learning. Annals Data Sci 9(2):187–212. https://doi.org/10.1007/s40745-020-00253-5

Rowe WB (2014) 17 - Mechanics of abrasion and wear. In: Rowe WB (ed.) Principles of modern grinding technology (Second Edition). William Andrew Publishing, Oxford, pp. 349–379. https://doi.org/10.1016/B978-0-323-24271-4.00017-8

Xu H, Zhang C, Hong GS, Zhou J, Hong J, Woon KS (2018) Gated recurrent units based neural network for tool condition monitoring. In: 2018 International Joint Conference on Neural Networks (IJCNN), pp. 1–7. https://doi.org/10.1109/IJCNN.2018.8489354

Ma K, Wang G, Yang K, Hu M, Li J (2022) Tool wear monitoring for cavity milling based on vibration singularity analysis and stacked LSTM. Int J Adv Manuf Technol 120(5):4023–4039. https://doi.org/10.1007/s00170-022-08861-6

Li B, Lu Z, Jin X, Zhao L (2023) Tool wear prediction in milling CFRP with different fiber orientations based on multi-channel 1DCNN-LSTM. J Intell Manuf. https://doi.org/10.1007/s10845-023-02164-7

Funding

This work was funded by an EPSRC ICASE award in partnership with Renishaw plc.

Author information

Contributions

All authors contributed to the study conception and design. Experimental setup, data collection and analysis were performed by Thomas Jessel. The first draft of the manuscript was written by Thomas Jessel, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jessel, T., Byrne, C., Eaton, M. et al. Tool condition monitoring of diamond-coated burrs with acoustic emission utilising machine learning methods. Int J Adv Manuf Technol 130, 1107–1124 (2024). https://doi.org/10.1007/s00170-023-12700-7

DOI: https://doi.org/10.1007/s00170-023-12700-7