Abstract

Quantitative relationships between molecular structures and bioactivities of a set of CCR5 inhibitor derivatives were discovered. We have demonstrated the detailed application of two efficient nonlinear methods, general regression and radial basis function neural networks, for evaluation of quantitative structure–activity relationships of the studied compounds. Components produced by principal component analysis were used as input of the developed nonlinear models. Comparison between predictability of PC-GRNNs and PC-RBFNNs indicated that later method has higher ability to predict the activity of the studied molecules. In order to design novel derivatives of inhibitors with high activity and low side effects, and because experimental and calculated activities of molecules employed in the model development step, shown a good correlation, developed PC-RBFNNs QSAR model was used to calculate inhibitory activities of some suggested compounds.

Similar content being viewed by others

Introduction

Acquired immunodeficiency syndrome (AIDS) is a fatal disease for which no complete and successful chemotherapy has been suggested so far. Human immunodeficiency virus subtype 1 (HIV-1), a retrovirus of the lentivirus family, has been found to be common in causing this disorder. HIV-1 generates a progressive immunosuppression disorder by destruction of CD4+T lymphocytes, helper cells, which attack directly against infections, and results in opportunistic infections and death (Campiani et al., 2002). To decrease HIV-1 replication, existing medications use a combination of protease and reverse transcriptase inhibitors (Pomerantz, 1999). While suppression of viral replication through therapy with protease and reverse transcriptase inhibitors delays emergence of AIDS, the virus is not eliminated and the immune system finally succumbs to infection (Chun and Fauci, 1999; Finzi et al., 1999; Furtado et al., 1999).

In 1996, it was proved that infection of macrophages, monocytes, and T cells by HIV-1 is mediated by interaction with, as well as the cell surface molecule CD4, the β-chemokine receptor CCR5 belonging to the G-protein coupled receptors family (Dragic et al., 1996).

This finding opened a severe research attempts resulted in development of CCR5 antagonists as potential anti-HIV beneficial compounds.

In addition to its function in the development of HIV infection, many studies show different roles for CCR5 and its ligands in disorders, such as rheumatoid arthritis (Pipitone and Pitzalis, 2000), multiple sclerosis (Sellebjerg et al., 2000), transplant rejection (Fischereder et al., 2001), and inflammatory bowel syndrome (Andres et al., 2000). These studies propose that CCR5 receptor modulators would have potential benefits in a wide variety of disorders.

In medical sciences field, many researchers are continuously searching for new drug-like compounds having high potency to treat AIDS. Using computer-aided drug-design methods, such as quantitative structure–activity relationships (QSARs), development of such ligands may be properly performed and accelerated. Drug discovery process is often faced with situations that a ligand should be discovered for a target protein for which no experimentally verified structure is yet available. The most well-known examples are possibly the GPCRs, which play a key role in many physiological and pathophysiological procedures. Today, 50% of all newly launched drugs are targeted against GPCRs (Klabunde and Hessler, 2002). Hence, designing an approach to suggest new agonists and/or antagonists of GPCRs has a high degree of importance. Due to the lack of knowledge about the 3D structure of CCR5 and the way its antagonists interact with the binding site, one of the best ways to recognize the initial lead compounds is ligand-based method. Pharmacophore identification, structure–activity relationships (SARs), and QSARs are some examples of ligand-based methods.

Over one hundred years after Fischer’s proposal of the lock-and-key analogy (Fischer, 1894) and about half century after the reports of Hansch, Fujita, Free, and Wilson (Free and Wilson, 1964; Hansch and Fujita 1964) QSARs have established as an extensively used approach, significantly contributing to the drug-design procedure. QSAR studies provide medicinal chemists valuable information that is useful for drug design and prediction of drug activity (Hansch et al., 1996, 2001; Schmidli, 1997). They are frequently applied to develop a correlation between various physicochemical properties of potential drug candidates including quantum, geometrical, topological, and their binding affinity towards a common biological target.

Several data mining methodologies have been developed to evaluate quantitative relationships between structure and activity. These relationships might express a linear regression model or may provide to the researcher nonlinear regression ones. Multiple linear regression (MLR), partial least squares (PLS), and principal component regression (PCR) are most common linear regression methods (Arkan et al., 2010; Saghaie et al., 1111; Saghaie et al., 2010; Shahlaei et al., 2010b). Artificial neural networks (ANNs) and support vector machine (SVM) are examples of nonlinear regression approaches to build up mathematical relationships between selected descriptors and activity of the compounds (Arkan et al., 2010; Saghaie et al., 2010; Shahlaei et al., 2010a, b).

Nonlinear regression methods are often employed to model the SARs because of the complexity of such relationships in many bioactive molecules. The development of these methods also opened up the field to the simultaneous analysis of a wider diversity of structures with potentially varying modes of action and noncongeneric compounds (Pompe et al., 1997).

Artificial systems emulate function of the brain, where a very high number of information-processing neurons are interconnected. These systems are known for their capability to model a broad set of functions, including linear and nonlinear, without knowing the analytic forms in advance. The mathematical flexibility of ANNs praises them as an efficient method for pattern recognition and regression and constructing predictive models. A particular benefit of ANNs is their intrinsic ability to show nonlinear relationships between the dependent and independent variables without using an explicit mathematical equation(s).

Although there are a number of different neural networks models, the most frequently used type of neural network in QSAR is the feed-forward back-propagation network.

This algorithm has some disadvantages such as being caught in local minima during learning phase, very poor convergence rate, time-consuming procedures, and difficulty in explicit optimum network configuration (Walczak and Massart, 2000).

The radial basis function neural networks (RBFNNs) let us construct regression model between independent and dependent variables using a fast linear approach. RBFNNs have advantages of short training time and reaching to the optimal unique solution by attaining the global minimum of error surface during training of network. The topology and parameters of developed RBFNNs are straightforward to optimize (Specht, 1991; Tetteh et al., 1998).

The generalized regression neural network (GRNN) is a special kind of normalized radial basis function networks, where the sigmoid activation functions often used in neural networks are replaced by radial basis functions (Chtioui et al., 1999).

Compared with the back-propagation neural networks, the architecture of the GRNNs, namely the numbers of layers and units, is defined by the numbers of objects and predictor in the training dataset. In this algorithm, the only one training parameter to be optimized is the width (spread) of radial basis functions. Due to the straightforwardness of the network structure and its implementation, it has been extensively used in many fields (Celikoglu, 2006; Klocker et al., 2002; Mosier and Jurs, 2002; Niwa, 2003).

Thanks to the modern softwares and hardwares, numerous descriptors (variables in QSAR) can be easily obtained in a short time. To extract the most relevant and significant information from such raw data, using of multivariate regression methods and also data compression and/or variable selection is necessary. Multivariate regression methods are used to develop a quantitative relation, i.e., a model, between the calculated descriptors, stored in a data matrix, X, and the activities of compounds, stored in a data matrix, called Y. One of the most common problems in multivariate regression methods is multicollinearity between variables. Multicollinearity occurs when ratio of descriptors to considered compounds is large. This situation makes the developed model unstable. So reduction of dimensionality of original descriptors is necessary. One of the most common ways to reduce the number of variables without missing useful information is principal component analysis (PCA).

PCA is an approach suitable for overcoming the instability in developed model related to multicollinear descriptors. PCA is used to compress a pool of descriptors into principal components (PCs) as new variables. PCA approach assumes that despite the numerous number of descriptors the QSAR model is governed by a comparatively small number of latent variables namely PCs.

In the present study two different algorithms of ANNs are exploited to correlate the anti-HIV activity of 48 drug-like molecules with the PCs extracted from calculated structural descriptors. These algorithms are (i) RBFNNs and (ii) GRNNs.

We are going to use the obtained information from QSAR to design novel drug-like compounds in this study. Here, key goal was to model potentially active anti-HIV molecules by using the two nonlinear models namely PC-RBFNNs and PC-GRNNs.

Methods

Descriptor generation and assigning calibration and test sets

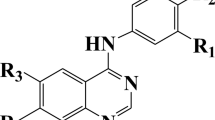

The in vitro biological activity data used in this study were CCR5 inhibitory (in terms of log IC50), of a set of 48 compounds selected from literature (Dorn et al., 2001; Finke et al., 2001). General chemical structures and the structural details of these compounds as well as biological activity data are given in Table 1.

In order to calculate the theoretical molecular descriptors, molecular structures were built by Hyperchem version 7.0 and were optimized using the AM1 algorithm. A gradient cutoff of 0.01 was used for all geometry optimizations. The resulted geometries were transferred into Dragon program (developed by Milano Chemometrics and QSAR Group) (Todeschini et al., 2002). It was used for calculation of a large number of descriptors including 16 different groups. In the computation of descriptors with dragon, descriptors with constant value for all compounds were eliminated. The name and number of calculated descriptors are reported in the Table 2. In addition to these groups of descriptor, a number of quantum-electronic descriptors, such as frontier orbital energies (HOMO and LUMO), most positive charge, lowest negative charge and indices of electronegativity, electrophilicity, hardness, and softness were calculated according to the method proposed by Thanikaivelan et al. (2000). In addition, some different descriptors, such as Log P, surface area, and polarizability were calculated by Hyperchem for each molecule.

In order to test the final model performances, about 20% of the molecules (10 out of 48) were selected as external test set molecules (Table 1). The best situation of this stage of model building is dividing dataset to guarantee that both training and test sets individually cover the total space occupied by original data set. Then ideal splitting of data set as each of objects in test set is close to at least one of the objects in the training set. Various methods were used for splitting the original data set to the training and test sets. According to Tropsha the best models would be built when Kennard and Stone algorithm was used (Tropsha et al., 2003). So this algorithm was applied in this study (Kennard and Stone, 1969). This method has some advantages: the training set molecules map the measured region of the input variable space completely with respect to the induced metric. The other advantage is that the test molecules all fall inside the measured region.

All calculations were performed in the MATLAB (version 7.1, MathWorks, Inc.) environment.

Model development

PCA was used to compress a pool of descriptors into PCs as new variables. In the PCA, the first step is data preprocessing on the calculated descriptors by mean centering and autoscaling. Suppose X i,j be the column mean-centered and autoscaled matrix of descriptors for i samples and j descriptors, and y i,1 the vector of the activities (pIC50). After generation of PCs using matrix X i,j , a new matrix, containing scores of PCs, is created. Then, we use these scores as new variables for regression. Scores as new variables possess two interesting properties:

-

(i)

These new variables are sorted as the information content (variance) that they explain decreases from the first PC to the last one. As a result, the last PCs can be deleted, because they do not have useful information.

-

(ii)

PCs are orthogonal, resulting to solving correlation problem that exist in the pool of descriptors.

As a matter of fact, PCR is MLR using the scores matrix as new variables for model building.

The using of calculated PCs as input variables for GRNNs is referred as PC-GRNNs. For selecting optimum number of PCs to use as input of model, root mean square error of cross validation (RMSECV) was used. The main advantage of GRNNs is that it does not require iterative learning (Esbensen et al., 1994). It is an interesting property that makes the method very attractive for model building and hence GRNNs is much faster than the well-known back-propagation neural network (Specht, 1991).

A GRNN consists of four neuron layers: input, pattern (radial basis), summation, and output layers that are shown Fig. 1 schematically. Input layer receives the input X block and distributes it to the neurons in the next layer for processing that is radial basis layer or pattern layer. Thus, the number of neurons in the first layer is equal to the number of columns, n, in the input X block. Each neuron in the pattern layer will then generate an intermediate output by using of Gaussian radial basis function. The duty of neurons in the subsequent summation layer is performing summation of outputs of pattern layer. This layer consists of two types of neurons, numerator and denominator neurons. Number of numerator neurons is equal to number of elements of output y vector. Duty of these types of neurons is computation of weighted sum of the outputs from the previous layer. Another neuron in this layer, the denominator neuron, has a different operation. Duty of this single neuron is simple summation of outputs of pervious layer. The neurons in the output layer will then carry out divisions of the sums computed by neurons in the summation layer.

GRNN is able to approximate any relationship between X block and its y vector. The operation of approximation of y vector by network was performed during training of network. After figuring out the relationship between input and output of networks, this relationship was used for computation of the output of networks. In the GRNNs model, approximation of an output vector with respect to an X block can be regarded as finding the expected value of y conditional upon the X block.

The predicted value of the y vector \( \hat{y}(x) \) is the most probable value, E [y/x], which is determined by following equation.

where y is the output value estimated by GRNNs, X is the input vector for the estimation of y, and f (X, y) is the joint probability density function of X and y that can be calculated by Parzen’s nonparametric estimator. Substituting Parzen’s nonparametric estimator for joint probability density function and calculating the integrations leads to the following equation of GRNNs:

where σ is spread of network (smoothing parameter) and \( D_{i}^{2} \) = (x i − x)T − (x i − x). Training the GRNNs involves finding the optimal values for the σ parameters in above equation.

In another ANNs model building, The combination of PCs of X matrix as input of radial basis function neural networks is referred as PC-RBFNNs.

In PC-RBFNNs same with PC-GRNNs, the original data matrix is reduced to an orthogonal PC and their scores are used as inputs for RBFNNs. In PC-RBFNNs model, the use of scores instead of original descriptors reduces input nodes, so training time of the network is shortened. Also, noisy information and random error in the original data will be excluded. So using PCs generates a more accurate RBFNNs model. Here number of independent variables is very large, so data reduction is very necessary.

The main advantage of radial basis function neural networks is that it does not require iterative learning. It is an interesting property that makes the method very attractive for model building and hence RBFNNs is very faster than the well-known back-propagation neural network. The complete explanation behind the theory of radial basis function neural networks is adequately described elsewhere (Shi et al., 2006; Yao et al., 2004). Here only a brief description of this type of neural network is presented. RBFNNs include three layers: input layer, hidden layer, and output layer as presented in Fig. 2 schematically. Each neuron in each layer is fully connected to the next layer but there is not any connection between neuron in a given layer. No processing occurs on the input information in the input layer and the duty of this layer is only distribution of input to the hidden layer. In the hidden layer of RBFNNs there are a number of radial basis function units (n h ) and bias (b k ). Each hidden layer unit represents a single radial basis function, with associated center position and width. In the hidden layer each neuron applies a radial basis function as nonlinear transfer function to operate on the input information coming from the previous layer. The most often used RBF is Gaussian function that is characterized by a center (c j ) and width (r j ). By measuring the Euclidean distance between input vector (x) and the radial basis function center (c j ) the RBF function performs the nonlinear transformation using following equation in the hidden layer:

where h j is the notation for the output of the jth RBF unit. For the jth RBF, c j and r j are the center and width, respectively. The operation of the output layer is linear, which is given in:

where y k is the kth output unit for the input vector x, w kj is the weight connection between the kth output unit and the jth hidden layer unit, and b k is the bias.

In order to optimize RBFNNs, centers, number of hidden layer units, width, and weights should be selected. Random subset selection, K-means clustering, orthogonal least-squares learning algorithm, and RBF-PLS are various ways for choosing the centers. The same widths of the radial basis function networks for all the units or different widths for each unit could be selected for optimizing RBFNNs.

In this article, Gaussian functions with a constant width, which was the same for all units were selected. Using training set molecules the centers were optimized by forward subset selection routine. After the selection of optimum values of centers and width of radial basis functions the connection weight between hidden layer and output layer was adjusted using a least-squares solution.

The overall performance of RBFNNs is evaluated in terms of root mean square error cross validation (RMSECV) according to the following equation:

where y k is the experimental value of biological activity, \( \hat{y}_{k} \) is the output predicted activity of network calculated by cross validation. n s is the number of compounds in the analyzed set.

Model validation, predictability, and robustness of model

To demonstrate that the resulted models have good predictability and reliability, some different methods of evaluation of model performance have been used. Here, R 2, which presents the explained variance for a given set, was used to determine the goodness of model’s fit performance. In addition, the prediction performance of the generated models must be estimated in order to build a successful QSAR model. In this study, we evaluated the prediction performance of developed models using the root mean square error (RMSE).

Cross validation is a technique used to explore the reliability of statistical models. Root mean square error cross validation (RMSECV) as a standard index to measure the accuracy of a modeling method which is based on the cross validation technique and \( R_{\text{LOO}}^{2} \) as another criterion of predictability of developed models were applied.

According to Tropsha High \( R_{\text{LOO}}^{2} \) does not routinely mean a high predictability of the developed model (Tropsha et al., 2003). Thus, the high value of \( R_{\text{LOO}}^{2} \) is the necessary but not the sufficient condition for the developed model to have a high predictability. In addition to a high \( R_{\text{LOO}}^{2} \), a reliable model should also be characterized by a high R 2 between the calculated and experimental values of compounds from a test set (Afantitis et al., 2006).

Also, some criteria by Tropsha were suggested, if these criteria were satisfied then it can be said that the model is predictive (Tropsha et al., 2003). These criteria include:

R 2 is the correlation coefficient of regression between the predicted and observed activities of compounds in training and test set. \( R_{0}^{2} \) is the correlation coefficients for regressions between predicted versus observed activities through the origin, \( R_{0}^{'2} \) is the correlation coefficients for regressions between observed versus predicted activities through the origin, and the slope of the regression lines through the origin are assigned by k and k′, respectively. Details of definitions of parameters, such as \( R_{0}^{2} \), \( R_{0}^{'2} \), k, and k′ are presented obviously in literature and are not written again here for shortness (Tropsha et al., 2003).

Also, in addition, according to Roy and Roy (2008) the difference between values of \( R_{0}^{2} \) and \( R_{0}^{'2} \) must be studied and given importance. They suggested following modified R 2 form

If \( R_{m}^{2} \) value for given model is >0.5, indicates good external predictability of the developed model.

The actual predictability of each model developed on the training set is confirmed on an external test set (Roy and Roy, 2008) and is calculated from: \( R_{p}^{2} = 1 - {\text{PRESS}}/{\text{SD}} \); where PRESS is the sum of squared differences between the measured activity and the predicted value for each compound in the test set and SD is the sum of squared deviations between the measured activity for each molecule in the test set and the mean measured value of the training set.

Developed models are also tested for reliability and robustness by Y-randomization testing: new models are recalculated for randomly reordered response. We provided evidence that the proposed models are well founded, and not just the result of chance correlation, by obtaining new models on randomized response with significantly lower R 2 than the original models. If the results show high R 2, it implies that an acceptable QSAR model cannot be obtained.

Results and discussion

At the first, a lot of descriptors (columns of X block) were calculated for each molecule using optimized structures of molecules.

Logarithms of the inverse of biological activity (Log 1/IC50) data of 48 molecules were used to get the relationship with independent variables.

Before the model development, and due to the quality of data, a pretreatment of the original data was necessary. Thus, autoscaling and deletion of columns with zero variance were performed.

After deleting zero variance columns of X block, PCs analysis was performed on the pool of descriptors. PCA is a valuable multivariate statistical approach in which new orthogonal variables called PCs are derived as linear combinations of the original variables. These new generated variables are sorted on the basis of information content (i.e., explained variance of the original dataset). Priority of PCs demonstrates their higher quota in the explained variance, so most of the information is retained at the first few PCs. A main characteristic in PCA is that the generated PCs are uncorrelated. PCs can be used to obtain scores which present most of the original variations in the original data set in a smaller number of dimensions.

Among the generated PCs 16 eigenvalue ranked PCs (eigenvalues > 1) were selected for next model building (Table 3). These PCs can explain more than 86.11% of the variances in the original descriptors data matrix. Therefore, we restricted the next model building to these 16 PCs.

To determine degree of homogeneities in the original data set and recognize potential outliers and clusters in the studied molecules, PCA was performed within the calculated descriptors space for all the molecules. Using three more significant PCs (eigenvalues > 1), which explains 53.63% of the variation in the data (30.25, 15.52, and 7.86%, respectively), distribution of molecules over the three first PCs is shown in Fig. 3. As can be seen, none of the molecules are outlier or no cluster is observed.

After evaluating outliers and dividing the molecules into two parts, calibration and validation sets on the basis of Kennard and Stones algorithm, model building using calibration set was performed. PCR as linear model show poor results. Hence nonlinear models were applied as regression methods that are PC-RBFNNs and PC-GRNNs. Developed models were used to predict the activity of molecules in test set to evaluate performance of the developed models.

PC-RBFNNs

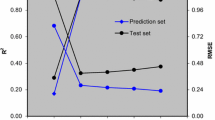

As it was discussed above, radial basis neural network was chosen for constructing nonlinear model. To avoid the problem of collinearity, calculated PCs of original descriptors were used. One of the most important factors determining quality of generated model is number of PCs selected for model building. If the selected number of PC is lower than optimum number, the derived model is called underfitted model and may not calculate true activity of molecules. On the other hand, if too many PCs are used the network is overfitted. Thus for initial training of network, we chose ten hidden nodes and the spread equal to 1.0 and these values were used for finding optimum number of PC-RBFNNs components. This optimization was performed by jointly analyzing RMSEC and RMSECV. As it is shown in Fig. 4a, two PCs were selected as optimum number of PCs. The performance of PC-RBFNNs model is significantly influenced by parameters of networks namely the number of radial basis functions n h and spread of networks.

With two PCs, a response surface methodology was used to optimize n h and spread of networks. As is shown in Fig. 5 a contour surface plot of RMSECV as a function of n h and spread was plotted. n h was changed from 1 to 50 and spread from 0.1 to 3 in increments of 0.1. These ranges were selected according to the previous studies. The results show that a PC-RBFNNs with 13 nodes in hidden layer and spread of 2.3 resulted in the optimum network performance.

\( R_{\text{CV}}^{2} \), the ‘leave-one-out’ (LOO) cross-validated coefficient, is a practical and reliable method for testing the predictive performance and stability of a regression model. LOO approach involves developing a number of models with one molecule deleted at a time. After developing each model, the deleted data are predicted and the differences between the experimental and predicted activity values are calculated. \( R_{\text{CV}}^{2} \) values are then calculated according to the following formula:

where y i is the actual experimental activity, \( \bar{y}_{i} \) the average actual experimental activity and \( \hat{y}_{i} \) is the predicted activity of compound i computed by the new regression equation obtained each time after leaving out one datum point (No. i).

The developed model was trained using the data of training set and it was evaluated by test compounds. For a QSAR model, internal validation, although essential and obligatory, does not adequately assure the predictability of a model. In fact, we are strongly persuaded from pervious experience that models with high apparent predictability, emphasized only by internal validation approaches, can be unpredictive when confirmed on new compounds not applied in developing the model. Thus, for a stronger assessment of model applicability for prediction on new compounds, external validation of the generated models should always be carried out.

In the present study, the generated models were validated externally by the additional test set.

The predicted values of inhibitory activity of the studied compounds resulted from the optimized PC-RBFNNs procedures are reported in Table 4, in association with relative error of prediction (REP). The plots of predicted activity versus experimental activity and the residuals (predicted activity–experimental activity) versus experimental activity value, obtained by the PC-RBFNNs modeling, and the random distribution of residuals about zero mean are shown in Figs. 6a and 7a, respectively.

Residuals both for training and test sets are distributed normally around zero (the mean value), therefore the nonlinear correlation between activity and selected PCs is reliable. The plot of calculated versus experimental activity tells the same theme, adding the information that visually the calculated values appear to capture the experimental values very well.

The statistical parameters and figures of merit as well as Tropsha and Roy parameters for determining the predictability of the developed model are presented for the best-fitted model in Table 5. As presented in Table 5 the model gave an RMSE of 0.180 for the training set and 0.216 for the test set, and the corresponding correlation coefficient R 2 of 0.901 and 0.916, respectively. Furthermore, on the basis of criteria recommended by Tropsha and also \( R_{m}^{2} \) by Roy, the obtained model is predictive.

The PC-RBFNNs was further validated by applying the Y-randomization test. In particular, 10,000 random shuffles of the Y-vector gave low R 2 values. This shows that the developed PC-RBFNN model was not obtained by chance.

PC-GRNNs

In another model, a GRNN was employed. Same with PC-RBFNNs, input of networks is PCs. Leave-one-out cross validation procedure was used to choose the optimum number of PCs for model formation. The number of PCs that produced the least RMSECV was selected as optimum value. A plot of RMSECV and RMSET for PC-GRNNs model as a function of the number of factors is shown in Fig. 4b. Based on this figure, four PCs was selected as the optimum number. GRNN was trained to obtain the relationship between the PCs and activity of molecules. As explained above, for the GRNNs, there is only one parameter: ‘‘spread’’, which is the width that must be optimized. RMSECV of the training set was applied to choose the optimized value of spread. Figure 8 is the plot of RMSECV versus spread and the minimum value was chosen as the optimal value of spread, which was 1.5.

After determining the optimum value of spread, the network was trained using training data and model was evaluated by prediction of molecules in the test set. The predicted activities by using this developed model are listed in Table 4 and are plotted in Fig. 6b. Same as PC-RBFNNs, the calculated values are in good agreement with the experimental values. Also for investigating the existence of any systematic error in the developed PC-GRNNs model, the residual of calculated activity was plotted versus experimental activity and is shown in the Fig. 7b. As can be seen, propagation of residuals on both sides of zero shows that there is not any systematic error in the developed model. The figures of merit and criteria for determining predictability of model for the GRNNs model are reported in Table 5. In this table R 2 that is a criterion of goodness of fit was obtained for two sets and the high value of this parameter indicates a good fit between input of network and predicted activities values of compounds.

As a result, with respect to the developed GRNNs model, it was found that correctly opted and trained neural network could practically represent dependence of the activity of CCR5 inhibitors on the extracted PCs from calculated descriptors of molecules.

To evaluate the PC-GRNNs, a leave one out cross validation technique, similar to that used for the PC-RBFNNs model, was performed. The results are summarized in Table 5.

Inspection of the results reveals the stability of the generated model. With the purpose of exhibiting that PC-GRNNs does not consequence from happenstance, an extensively used method to determine the model robustness is the so-called Y-randomization. It consists of shuffling the experimental activity in such a way that activities do not correspond to the respective molecules. After analyzing 1,000 cases of Y-randomization, R 2 values obtained using this procedure were very small when compared to the one found considering the true calibration (R 2 = 0.88). In this way, the robustness of the developed PC-GRNNs model could be evaluated, indicating that the regression was not a sequence of chance correlation and therefore results in a true SAR.

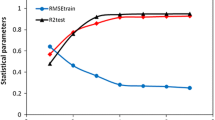

As it can be seen in Table 5, the statistical parameters of the results obtained from two studies for the same set of compounds. The RMS errors of the PC-RBFNNs model for the training, the test, and in the cross validation procedure were lower than that of the model proposed in the PC-GRNNs method. The correlation coefficient (R 2) given by the PC-RBFNNs model was higher than that of the models in the PC-GRNNs method. From the Table 5, it can be seen that the PC-RBFNNs model gives the highest R 2 and low error values, so this model gives the most satisfactory results, compared with the results obtained from the PC-GRNNs method.

Suggestions of new CCR5 inhibitors

As a final point, one could dispute that how researchers can interpret the developed models using PCs or how developed models can be applied to propose novel compounds with improved activity. Said another way, what does the developed models mean to medicinal chemists? As discussed above, the calculated PCs do not mean physicochemically, but they may be employed for building statistical models which help the medicinal chemist limit the number of compounds to be synthesized. For instance, medicinal chemist can propose a training set comprised of molecules which have the characters of two or more chemical classes with the smallest amount of similarity. Then he/she can use the developed models to predict the activity of his/her proposed molecules. This practice may lead to the introduction of biologically active molecules.

In order to investigate the electronic requirements of active CCR5 antagonist compounds, the molecular structures of all the studied derivatives were built with Hyperchem. Gas-phase full geometry optimization for the studied drug-like molecules was performed. The structures were optimized with ab initio method at the hybrid functional B3LYP (Becke’s three-parameter (Becke, 1993)) and the large-size basis set 6-311G**. Full optimization of all bond lengths and angles was performed. Because the calculated values of the electronic descriptors of the drug-like compounds will be influenced by the geometry used, in the current investigation we try to employ the most established conformations of the studied molecules. To avoid the caught in the local minima of geometry optimization process, procedure was run many times with different starting points for each compound, and in each molecule conformation with the lowest energy was selected to use in next steps of evaluation. The overlaid 3D structures of some of the studied molecules (from the benzyl subunit) are shown in Fig. 9. As it is seen the orientation of the sulphonyl subunit of the molecules remains quite unchanged when the structural pattern of the –R group or the number of chlorine atom changes in the phenyl ring of the benzyl subunit. To include the effects of the electronic descriptors of the molecules on their CCR5 inhibitory activity, some quantum chemical parameters such as local charges, most positive charge, most negative charge, dipole moments and HOMO and LUMO energies were calculated and applied in the calculation of PCs. Also for evaluating SAR of compounds and using of extracted information to in silico suggestion of new CCR5 antagonists, general structure of studied compound and highlighted subunits was shown in Fig. 10. It is clear from Table 4 that the compounds 48, 7, 3, and 4 have highest bioactivities. With considering these compounds, it is clear that in all of them R group in sulphonyl subunit is lipophile, such as phenyl (in the compound 48) and cyclohexyl (in the compound 7) and also all of these compounds have at least one withdrawing electron atom on the phenyl ring in benzyl subunit. Also in all of them except to 48, it is clear the beneficial binding affinities of the sulfoxide moiety in pyperidine subunit, which it confirms a hydrogen bond acceptor interaction or a simple polar effect.

In order to design novel derivatives with high inhibition effect of CCR5, and because experimental and computed activities of compounds used in the model development step, shown a good correlation, developed PC-RBFNNs QSAR model was used to calculate inhibitory activities of suggested compounds. Structures of novel antagonists of CCR5 may then be suggested and bioactivities of them could evaluate by using the generated model. Based on the following strategy novel compounds were suggested. Compounds owning the general structure of investigated compounds in addition of the various substituents may produce the novel compounds. Structures of these novel ligands also were generated and then PCs of them were generated. Hence, using calculated PCs and the developed PC-RBFNs model, bioactivities of proposed ligands are calculated.

The general structures of eight suggested compounds and details and also their calculated activities are reported in Table 6. The suggested compounds are combination of the most potent compounds of Table 1 especially compounds 48, 7, 3, and 4. The relative high predicted activities of suggested compounds confirm further study, such as synthesis should be performed on such chemical structures.

Conclusion

The aim of the present study was to rationalize bioactivities of some practically studied CCR5 antagonist compounds through the use of developed QSAR models, in order to finally aid design and suggestion of novel CCR5 antagonists and evaluation of bioactivity of these new compounds computationally.

Two nonlinear methods, the radial basis function neural networks and GRNNs were employed to obtain predictive QSAR models for CCR5 inhibitory activity of a set of 48 compounds using different calculated descriptors. In both methods, a comparison was made between obtained results for the same set of compounds. The RMSE of the PC-RBFNNs model for the training and the test sets, and in the cross validation procedure were lower than RMSE of the model developed by the PC-GRNNs method. The correlation coefficient produced by the PC-RBFNNs model was higher than that in the PC-GRNNs method. In order to design novel derivatives with high inhibition effect of CCR5, and because experimental and computed activities of molecules used in the model development step, shown a good correlation, developed QSAR model by PC-RBFNNs was employed to calculate inhibitory activities of some suggested compounds.

References

Afantitis A, Melagraki G, Sarimveis H, Koutentis PA, Markopoulos J, Igglessi-Markopoulou O (2006) A novel QSAR model for predicting induction of apoptosis by 4-aryl-4H-chromenes. Bioorg Med Chem 14:6686–6694

Andres PG, Beck PL, Mizoguchi E, Mizoguchi A, Bhan AK, Dawson T, Kuziel WA, Maeda N, MacDermott RP, Podolsky DK, Reinecker HC (2000) Mice with a selective deletion of the CC chemokine receptors 5 or 2 are protected from dextran sodium sulfate-mediated colitis: Lack of CC chemokine receptor 5 expression results in a NK11+ lymphocyte-associated Th2-type immune response in the intestine. J Immunol 164:6303–6312

Arkan E, Shahlaei M, Pourhossein A, Fakhri K, Fassihi A (2010) Validated QSAR analysis of some diaryl substituted pyrazoles as CCR2 inhibitors. Eur J Med Chem 45:3394–3406

Becke A (1993) Density-functional thermochemistry. III. The role of exact exchange. J Chem Phys 98:5648–5652

Campiani G, Ramunno A, Maga G, Nacci V, Fattorusso C, Catalanotti B, Morelli E, Novellino E (2002) Non-nucleoside HIV-1 reverse transcriptase (RT) inhibitors: past present and future perspectives. Curr Pharm Des 8:615–657

Celikoglu HB (2006) Application of radial basis function and generalized regression neural networks in non-linear utility function specification for travel mode choice modelling. Math Comput Modell 44:640–658

Chtioui Y, Panigrahi S, Francl L (1999) A generalized regression neural network and its application for leaf wetness prediction to forecast plant disease. Chemometr Intell Lab 48:47–58

Chun T, Fauci A (1999) Latent reservoirs of HIV: obstacles to the eradication of virus. Proc Natl Acad Sci USA 96:10958–10961

Dorn CP, Finke PE, Oates B, Budhu RJ, Mills SG, MacCoss M, Malkowitz L, Springer MS, Daugherty BL, Gould SL, DeMartino JA, Siciliano SJ, Carella A, Carver G, Holmes K, Danzeisen R, Hazuda D, Kessler J, Lineberger J, Miller M, Schleif WA, Emini EA (2001) Antagonists of the human CCR5 receptor as anti-HIV-1 agents. Part 1: discovery and initial structure-activity relationships for 1-amino-2-phenyl-4-(piperidin-1-yl)butanes. Bioorg Med Chem Lett 11:259–264

Dragic T, Litwin V, Allaway GP, Martin SR, Huang Y, Nagashima KA, Cayanan C, Maddon PJ, Koup RA, Moore JP, Paxton WA (1996) HIV-1 entry into CD4+ cells is mediated by the chemokine receptor CC-CKR-5. Nature 381:667–673

Esbensen K, Schönkopf S, Midtgaard T (1994) Multivariate analysis in practice. CAMO AS, Trondheim, Norway

Finke PE, Meurer LC, Oates B, Shah SK, Loebach JL, Mills SG, MacCoss M, Castonguay L, Malkowitz L, Springer MS, Gould SL, DeMartino JA (2001) Antagonists of the human CCR5 receptor as anti-HIV-1 agents. Part 3: a proposed pharmacophore model for 1-[N-(methyl)-N-(phenylsulfonyl)amino]-2-(phenyl)-4-[4-(substituted)piperidin-1-yl]butanes. Bioorg Med Chem Lett 11:2469–2473

Finzi D, Blankson J, Siliciano JD, Margolick JB, Chadwick K, Pierson T, Smith K, Lisziewicz J, Lori F, Flexner C, Quinn TC, Chaisson RE, Rosenberg E, Walker B, Gange S, Gallant J, Siliciano RF (1999) Latent infection of CD4+ T cells provides a mechanism for lifelong persistence of HIV-1 even in patients on effective combination therapy. Nat Med 5:512–517

Fischer E (1894) Einfluss der Configuration auf die Wirkung der Enzyme Berichte der deutschen chemischen. Gesellschaft 27:2985–2993

Fischereder M, Luckow B, Hocher B, Wthrich RP, Rothenpieler U, Schneeberger H, Panzer U, Stahl RAK, Hauser IA, Budde K, Neumayer HH, Kr¤mer BK, Land W, Schlndorff D (2001) CC chemokine receptor 5 and renal-transplant survival. Lancet 357:1758–1761

Free SM Jr, Wilson J (1964) A mathematical contribution to structure-activity. Stud J Med Chem 7:395–399

Furtado MR, Callaway DS, Phair JP, Kunstman KJ, Stanton JL, Macken CA, Perelson AS, Wolinsky SM (1999) Persistence of HIV-1 transcription in peripheral-blood mononuclear cells in patients receiving potent antiretroviral therapy. New Engl J Med 340:1614–1622

Hansch C, Fujita T (1964) Erratum: ρ-σ-π analysis. A method for the correlation of biological activity and chemical structure. J Am Chem Soc 86:5710 (J Am Chem Soc 86:1625)

Hansch C, Hoekman D, Gao H (1996) Comparative QSAR: toward a deeper understanding of chemicobiological interactions. Chem Rev 96:1045–1076

Hansch C, Kurup A, Garg R, Gao H (2001) Chem-bioinformatics and QSAR: a review of QSAR lacking positive hydrophobic terms. Chem Rev 101:619–672

Kennard R, Stone L (1969) Computer aided design of experiments. Technometrics 11:137–148

Klabunde T, Hessler G (2002) Drug design strategies for targeting G-protein-coupled receptors. ChemBioChem 3:928–944

Klocker J, Wailzer B, Buchbauer G, Wolschann P (2002) Bayesian neural networks for aroma classification. J Chem Inf Comput Sci 42:1443–1449

Mosier P, Jurs P (2002) QSAR/QSPR studies using probabilistic neural networks and generalized regression neural networks. J Chem Inf Comput Sci 42:1460–1470

Niwa T (2003) Using general regression and probabilistic neural networks to predict human intestinal absorption with topological descriptors derived from two-dimensional chemical structures. J Chem Inf Comput Sci 43:113–119

Pipitone N, Pitzalis C (2000) The role of chemokines in inflammation and rheumatoid arthritis. Curr Opin Anti-inflamm Immunomodul Invest Drugs 2:9–15

Pomerantz R (1999) Primary HIV-1 resistance: a new phase in the epidemic? JAMA 282:1177–1179

Pompe M, Razinger M, Novic M, Veber M (1997) Modelling of gas chromatographic retention indices using counterpropagation neural networks. Anal Chim Acta 348:215–221

Roy P, Roy PK (2008) On some aspects of variable selection for partial least squares regression models. QSAR Comb Sci 27:302–313

Saghaie L, Shahlaei M, Fassihi A, Madadkar-Sobhani A, Gholivand M, Pourhossein A (1111) QSAR analysis for some diaryl-substituted pyrazoles as CCR2 inhibitors by GA-stepwise MLR. Chem Biol Drug Des 77:75–85

Saghaie L, Shahlaei M, Madadkar-Sobhani A, Fassihi A (2010) Application of partial least squares and radial basis function neural networks in multivariate imaging analysis-quantitative structure activity relationship: study of cyclin dependent kinase 4 inhibitors. J Mol Graph Model 29:518–528

Schmidli H (1997) Multivariate prediction for QSAR. Chemometr Intell Lab 37:125–134

Sellebjerg F, Madsen H, Jensen C, Jensen J, Garred P (2000) CCR5 delta32 matrix metalloproteinase-9 and disease activity in multiple. J Neuroimmunol 102:98–106

Shahlaei M, Fassihi A, Saghaie L (2010a) Application of PC-ANN and PC-LS-SVM in QSAR of CCR1 antagonist compounds: a comparative study. Eur J Med Chem 45:1572–1582

Shahlaei M, Sabet R, Ziari M, Moeinifard B, Fassihi A, Karbakhsh R (2010b) QSAR study of anthranilic acid sulfonamides as inhibitors of methionine aminopeptidase-2 using LS-SVM and GRNN based on principal components. Eur J Med Chem 45:4499–4508

Shi J, Luan F, Zhang H, Liu M, Guo Q, Hu Z, Fan B (2006) QSPR study of fluorescence wavelengths (λex/λem) based on the heuristic method and radial basis function neural networks. QSAR Comb Sci 25:147–155

Specht D (1991) A general regression neural network. IEEE Trans Neural Netw 2:568–576

Tetteh J, Howells S, Metcalfe E, Suzuki T (1998) Optimisation of radial basis function neural networks using biharmonic spline interpolation. Chemometr Intell Lab 41:17–29

Thanikaivelan P, Subramanian V, Raghava Rao J, Unni Nair B (2000) Application of quantum chemical descriptor in quantitative structure activity and structure property relationship. Chem Phys Lett 323:59–70

Todeschini R, Consonni V, Mauri A, Pavan M (2002) DRAGON software version 2.1. Milano, Italy. http://disat.unimib.it/chm/Dragon.html

Tropsha A, Gramatica P, Gombar V (2003) The importance of being earnest: validation is the absolute essential for successful application and interpretation of QSPR models. QSAR Comb Sci 22:69–77

Walczak B, Massart DL (2000) Local modelling with radial basis function networks. Chemometr Intell Lab 50:179–198

Yao X, Panaye A, Doucet J, Zhang R, Chen H, Liu M, Hu Z, Fan B (2004) Comparative study of QSAR/QSPR correlations using support vector machines radial basis function neural networks and multiple linear regression. J Chem Inf Comput Sci 44:1257–1266

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Shahlaei, M., Madadkar-Sobhani, A., Fassihi, A. et al. QSAR study of some CCR5 antagonists as anti-HIV agents using radial basis function neural network and general regression neural network on the basis of principal components. Med Chem Res 21, 3246–3262 (2012). https://doi.org/10.1007/s00044-011-9863-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00044-011-9863-2