Abstract

The classical Kuramoto model consists of finitely many pairwisely coupled oscillators on the circle. In many applications a simple pairwise coupling is not sufficient to describe real-world phenomena as higher-order (or group) interactions take place. Hence, we replace the classical coupling law with a very general coupling function involving higher-order terms. Furthermore, we allow for multiple populations of oscillators interacting with each other through a very general law. In our analysis, we focus on the characteristic system and the mean-field limit of this generalized class of Kuramoto models. While there are several works studying particular aspects of our program, we propose a general framework to work with all three aspects (higher-order, multi-population, and mean-field) simultaneously. In this article, we investigate dynamical properties within the framework of the characteristic system. We identify invariant subspaces of synchrony patterns and study their stability. It turns out that the so called all-synchronized state, which is one special synchrony pattern, is never asymptotically stable. However, under some conditions and with a suitable definition of stability, the all-synchronized state can be proven to be at least locally stable. In summary, our work provides a rigorous mathematical framework upon which the further study of multi-population higher-order coupled particle systems can be based.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Interacting oscillatory processes are abundant in science and technology, whether it is pacemaker cells in the heart [48], neural networks in the brain [52], the synchronization of chemical oscillators [28], Josephson junctions [54], synchronous flashing fireflies [16], stock prices in financial markets [19], and even a group of people causing a bridge to swing by marching over it in step [46]. From a mathematical perspective, many such systems can be described as networks of coupled phase oscillators: While each node in the network is a simple oscillatory process whose state is given by a single phase-like variable on the circle, the network dynamics—the collective dynamics of all nodes—can be quite intricate. Probably the most prominent example of collective network dynamics is synchrony when all nodes evolve in unison [45]. Synchrony can come in many forms, whether oscillators synchronize in phase or in frequency. Synchrony may be global across the network or may be localized in part of the network to give rise to synchrony patterns. Synchrony in its many forms often is also relevant for the function of a network dynamical system: In neural networks, synchrony is linked to cognitive function as well as disease [50].

A classical question in network dynamical systems is how the network structure and functional interactions shape the collective network dynamics. The Kuramoto model has been instrumental in understanding synchronization of coupled oscillators [1, 33, 44].

For a network of N Kuramoto oscillators, each oscillator is described by a phase \(\theta _k\in {\mathbb {S}}= {\mathbb {R}}/2\pi {\mathbb {Z}}\), \(k\in \left\{ 1,\dotsc ,N\right\} \), that evolves according to

where \(K>0\) is the coupling strength and \(\omega _k\in {\mathbb {R}}\) is the intrinsic frequency of oscillator k. First, consider Kuramoto’s classical problem of the onset of synchrony if the intrinsic frequencies are drawn from a probability distribution with a unimodal density \(\upsilon (\omega )\). Uncoupled oscillators (\(K=0\)) behave incoherently but at a critical coupling strength \(K_c\) they start to become partially synchronized. To analyze this as a bifurcation problem, Mirollo and Strogatz [47] considered the mean-field limit of infinitely many oscillators, \(N\rightarrow \infty \). For (1.1) the state of the oscillators in the mean-field limit is given by a density \(\rho (t,\omega , \theta )\) that describes the probability of an oscillator with intrinsic frequency \(\omega \) to be at phase \(\theta \) at time t. It evolves according to the continuity equation

The approach to tackle Kuramoto’s problem, is to understand the bifurcations of the splay state \(\rho (t,\omega ,\theta ) = 1/(2\pi )=:\mathrm {D}\), a density that is invariant under the evolution [44, 47]. Second, consider the classical problem in network dynamics of understanding when full synchrony, where all nodes take the same state—in the context of phase oscillator networks this corresponds to \(\mathrm {S}=\left\{ \theta _1=\cdots =\theta _N\right\} \)—is stable, see for example [2, 21, 41].

However, note that the partial differential equation (PDE) as given in (1.2) is not immediately appropriate to describe full synchrony \(\mathrm {S}\). On the one hand, for non-atomic distributions of the intrinsic frequencies, the synchronized state is not invariant. It is only invariant under the additional assumption that the intrinsic frequencies of the oscillators are identical. That is why previous analyses of full synchrony are usually limited to networks of identical oscillators [8, 17]. On the other hand, the synchronized state cannot be represented by a density. Therefore, we would need to consider weak measure-valued solutions of (1.2), which is already an involved construction. Yet, the first-order PDE (1.2) is connected via the method of characteristics to a system of characteristic equations. The characteristic equations turn out to be more convenient to study the stability of synchronized states and thus form the foundation for our analysis: It yields a framework that allows for the simultaneous analysis of the splay state and full synchrony by taking the evolution of general measures on the circle into account.

In addition, many oscillatory real-world network dynamical systems are more complex and cannot be captured using the Kuramoto model. First, one needs to consider interaction functions that contain more than a single harmonic [18], for example if the interactions are state-dependent [4]. Considering a general coupling function \(g:{\mathbb {S}}\rightarrow {\mathbb {R}}\) breaks the degeneracy and chaotic dynamics are possible even for a small number of oscillators [15]. Second, many networks that arise in real-world systems are not homogeneous or all-to-all coupled but have some form of modularity or community structure [23, 40]: There are different communities or populations that are characterized by the property that the coupling within a population is different from the coupling between populations. Even if the populations are identical, multi-population oscillator networks give rise to a variety of synchrony patterns; see [11] for a recent review. Third, classical network dynamical systems, such as the Kuramoto model (1.1), assume that interactions take place in terms of pairs: The influence of two nodes on a third is simply the sum of two individual contributions. However, nonpairwise higher-order interactions arise naturally when considering phase reductions (cf. [5, 35]) and recent work has highlighted the dynamical importance of higher-order interactions [7, 12]. For example, Skardal and Arenas [43] considered a system with higher-order interactions that induced nonstandard synchronization transitions [31]. In their system for identical oscillators, the phase oscillator dynamics is given by

with nonadditive interactions that involve triplets and quadruplets of oscillators. We emphasize that in each of these three examples, generalized interactions lead to new dynamics compared to sinusoidal Kuramoto interactions, even in the case of identical oscillators. Further examples of new dynamics are possible if these features are combined, e.g., modular networks of phase oscillator networks with higher-order interactions allow for heteroclinic structures between different synchrony patterns [9, 10, 13]. Specifically, the system considered in [10] consists of three populations of phase oscillators that are coupled via higher-order interactions. In this system the synchronization of the oscillators in one population can cause oscillators in another population to desynchronize. That in turn causes the third population to start synchronizing. This causal chain continues and finally results in a heteroclinic cycle in which populations sequentially synchronize and desynchronize.

Attracting heteroclinic structures between synchrony patterns exist in networks of up to nine phase oscillators [10], but numerical simulations indicate that such structures also exist in larger (finite) networks. However, it is unclear whether such cycles can also exist in the mean-field limit.

In this paper, we develop a general framework to describe the mean-field dynamics of multi-population phase oscillator networks with generalized interactions as a set of characteristic equations for the evolution of measures. This framework is sufficiently general to provide a unified description for the examples mentioned above with general interactions, including nonsinusoidal and higher-order interactions. Our main results concern stability of synchrony patterns in this framework. Our results contribute to the understanding of coupled oscillator networks in several ways. First, we generalize the results of [34] to transport equations involving finitely many oscillator populations with higher-order interactions. Second, our stability analysis complements and extends the work in [17] and [20] by considering a general setting with multiple oscillator populations and general coupling with nonsinusoidal pairwise and nonpairwise higher-order interactions. In our work the stability of synchrony patterns is studied with a coupling function including higher-harmonics. Third, our explicit stability results on a measure-valued evolution shows in this setting one cannot expect asymptotic stability of full phase synchrony but just a weaker form of stability. Finally, our results provide a first step towards understanding global dynamical phenomena in the general mean-field limit: Our stability results outline necessary conditions to prove that the heteroclinic structures for small networks exist in the mean-field limit of large networks.

This work is organized as follows. In the remainder of this section we fix some notation that will be used throughout and introduce a general system of equations that describe the network dynamics. In Sect. 2 we introduce the system of characteristic equations state theorems about existence and uniqueness of the equations in the space of probability measures and give examples for their applications. We further clarify the relation between the system of characteristic equations and the Vlasov–Fokker–Planck PDE. The main results regarding synchronization are given in Sect. 3. In particular in Sect. 3.1.1 we explain why one can not expect to have asymptotic stability under generic perturbations in the mean-field limit. Sections 3.1.2 and 3.1.3 give our main results about (asymptotic) stability. Finally, in Sect. 4 we give some concluding remarks and an outlook on future research.

2 Notation

We first fix some notation that will be used throughout this paper. Let \({\mathcal {P}}(X)\) denote the set of all Borel probability measures on the set X. If \({\mathbb {S}}={\mathbb {R}}/2\pi {\mathbb {Z}}\) is the unit circle then \({\mathcal {P}}({\mathbb {S}})\) represents the set of all Borel probability measures on the circle. The symbol \({\mathcal {P}}_\mathrm {ac}({\mathbb {S}})\) is used to denote the set of absolutely continuous probability measures on the unit circle, i.e., those which have a density. Whenever we write \(\alpha _1-\alpha _2\) for two points \(\alpha _1, \alpha _2\in {\mathbb {S}}\) on the circle, we refer to the value of \(\alpha _1-\alpha _2 \in [0,2\pi )\). Further, let us define the open arc on the circle as

Assuming \(\alpha _1\) and \(\alpha _2\) are represented by values \(\alpha _1, \alpha _2\in (-\pi , \pi ]\) we use the notation

To compare two measures \(\mu , \nu \in {\mathcal {P}}({\mathbb {S}})\), we use the Wasserstein-1 distance [51], which is also referred to as the bounded-Lipschitz distance

where \(M_1\gamma \) and \(M_2\gamma \) are the marginals of \(\gamma \), i.e., the push-forward measures under the map \((\alpha , \beta )\mapsto \alpha \) and \((\alpha , \beta )\mapsto \beta \) and

Further, for \(n\in {\mathbb {N}}_{\ge 0}\) we write \([n] := \{1, \dotsc , n\}\) and for \(R\in {\mathbb {N}}_{\ge 0}\) we define the multi-index \(s = (s_1,\dots ,s_R)\in [M]^R\). Then, given \(\mu = (\mu _1,\dots ,\mu _M)\in {\mathcal {P}}({\mathbb {S}})^M\), we define the measure

and write \(\left|s \right| = R\), \({\bar{s}} = \max _{j\in [M]} \left|\{i : s_i = j\} \right|\).

3 Solution theory

In this section we first introduce the system of characteristic equations. Our equations provide a very general variant of this principle allowing for multi-population higher-order coupled systems. Yet, the formulation also naturally reduces to classical cases. Next, we make connections to existing theory [39] about well-posedness of special cases of our system, for which these results have already been established. Since the proof in [39], that is based on a contraction mapping principle argument in suitable space, can mostly be adopted to the multi-population model with higher-order interactions we only state the main definitions and theorems here. However, when generalizing the proof from [39] to multi-populations, an additional technical Lemma is needed that estimates the Wasserstein-1 distances of multi-dimensional product measures in terms of its constituents, see Lemma A.5. For completeness, the full analysis, together with remarks where it differs from the existing analysis, can be found in Appendix A.1. Our analysis also relates to recent results on the mean-field limit on networks with general pairwise interactions [26]; indeed in this special case of pairwise interactions, one can show that the multi-population systems considered here correspond to the dynamics in [26] on invariant subsets forced by symmetry [14].

3.1 The system of characteristic equations

In this paper, we consider the dynamics of \(M\in {\mathbb {N}}_{\ge 1}\) coupled phase oscillator populations with identical intrinsic frequencies within each population. We introduce a general set of equations that describes the network evolution, where the state of population \(\sigma \in [M]\) is given by a probability measure \(\mu _\sigma \).

The network interactions are determined by a multi-index \(s^\sigma \in [M]^{R_\sigma }\) for each population together with Lipschitz continuous coupling functions \(G_\sigma :{\mathbb {S}}^{\left|s^\sigma \right|}\times {\mathbb {S}}\rightarrow {\mathbb {R}}\). The entries of the multi-index \(s^\sigma \) specify the indices of the populations which influence population \(\sigma \) and the coupling functions quantify the coupling type and strength. Specifically, these coupling functions are supposed to be L-Lipschitz when \({\mathbb {S}}^{\left|s^\sigma \right|}\times {\mathbb {S}}\) is considered with the metric \(d(\alpha ,\beta ) = \sum _{i=1}^{\left|s^\sigma \right|+1} |\alpha _i-\beta _i |_{\mathbb {S}}\).

Before we state the general characteristic equations, we illustrate the notation on two examples.

Example 2.1

(Skardal–Arenas) Let us now consider a system which still consists of only one population but involves higher-order interactions. Specifically, we reconsider system (1.3):

Here, N denotes the amount of discrete oscillators, \(k\in [N]\) and \(K_1,K_2,K_3\in {\mathbb {R}}\). In the mean-field limit one assumes that the limiting distribution of the oscillators \(\{\theta _k\}_{k\in [N]}\) is represented by a probability measure \(\mu = \mu _1\in {\mathcal {P}}({\mathbb {S}})\). To find an equivalent of the right-hand side of (2.1) we first consider the triple sum in (2.1). Its summand is influenced by three oscillators, \(\theta _j,~\theta _l\) and \(\theta _m\) if the position \(\theta _k\) is fixed. In the mean-field limit, this fixed position is denoted by \(\phi \in {\mathbb {S}}\) that can be an arbitrary position on the circle and is not constrained to positions \(\{\theta _k\}_{k\in [N]}\). Consequently, these sums should be replaced by integrals in the mean-field limit, giving us

Now we introduce the multi-index \(s = s^1 = (1,1,1)\), that encodes the information that there are three integrals in (2.2) over the first population (hence the entry 1). Note that there is only one population in this example. Using this notation, (2.2) can be written as

To take care of the parts of (2.1) that involve only single and double sums, we include sums of the form \(\frac{1}{N} \sum _{m=1}^N\) and \(\frac{1}{N} \sum _{l=1}^N\) to these parts such that they are also represented by triple sums. For these parts, the summand of the these triple sum then does not depend on all indexing variables j, l, m. This has the advantage that the equivalent of the right-hand side of (2.1) can then be written as

where the coupling function \(G := G_1:{\mathbb {S}}^3\times {\mathbb {S}}\rightarrow {\mathbb {R}}\) is given by

Example 2.2

(Heteroclinic Structures) We consider the network of \(M=3\) populations from the introduction in which the heteroclinic connections between synchronized and desynchronized states have been observed [10]. Here, finite phase oscillator populations are coupled by higher-order interactions. In particular, a slightly adopted version of the main equations from [10] is given by

where the index \(\sigma \pm 1\) for the population has to be understood modulo M if \(\sigma \pm 1\not \in \{1,2,3\}\). Furthermore, \(\phi _{\sigma ,k}\) refers to the phase of the kth oscillator in population \(\sigma \) for \(k = 1,\dots ,N\), \(h_2:{\mathbb {S}}\rightarrow {\mathbb {R}}\) is a Lipschitz-continuous intra-population coupling function and

with a Lipschitz-continuous inter-population coupling function \(h_4:{\mathbb {S}}\rightarrow {\mathbb {R}}\).

As in the last example, we seek for a representation of the right-hand side of (2.4) in the mean-field limit. Unlike in the last example, however, there are now multiple populations, which is why we need a multi-index \(s^\sigma \) for each population \(\sigma = 1,2,3\). The entries of \(s^\sigma \) then give the indices of populations which influence population \(\sigma \). In other words, if there is an entry \({\hat{\sigma }}\) in \(s^\sigma \) it means that an integral over the measure representing population \({\hat{\sigma }}\) appears in the mean-field equivalent of (2.4). Specifically, we set \(s^1 = (3,3,2,2,1), s^2 = (1,1,3,3,2), s^3=(2,2,1,1,3)\) and choose coupling functions that are defined by \(G_1(\alpha ,\phi ) = G_2(\alpha ,\phi ) = G_3(\alpha ,\phi ) := G(\alpha ,\phi )\) with

The mean-field equivalent of the right-hand side of (2.4) can then be written as

Having introduced the notation, we now formulate the general system of characteristic equations.

The characteristic equations describe the evolution of measures. If \(\mu ^\mathrm {in} = (\mu ^\mathrm {in}_1,\dots ,\mu ^\mathrm {in}_M)\in {\mathcal {P}}({\mathbb {S}})^M\) denotes the initial state of the network, \(\#\) denotes the push-forward operator and \(\mu = (\mu _1,\dots ,\mu _M)\), then the evolution of \(\mu (t) = (\mu _1(t), \dots ,\mu _M(t))\) is determined by the characteristic equations

for \(\sigma \in [M]\), \(\xi ^\mathrm {in}_\sigma \in {\mathbb {S}}\), and the evolution operator

where \(\omega _\sigma \in {\mathbb {R}}\) is the instantaneous frequency of all oscillators in population \(\sigma \). The quantity \(\Phi _\sigma (t,\xi ^\mathrm {in}_\sigma , \mu ^\mathrm {in})\) can be regarded as the position of a weightless test particle at time t that initially started at \(\xi ^\mathrm {in}_\sigma \). Note that \(\alpha \) in (2.7) is vector valued, whereas \(\phi \) is not. In particular, \(\alpha \in {\mathbb {S}}^{\left|s^\sigma \right|}\) and \(\phi \in {\mathbb {S}}\). We remark that the general idea of using a mean-field formulation involving probability measures is quite classical [25].

Definition 2.3

Let \(\mu ^\mathrm {in} = (\mu ^\mathrm {in}_1,\dots ,\mu ^\mathrm {in}_M)\in {\mathcal {P}}({\mathbb {S}})^M\). If functions \(t\mapsto \Phi _\sigma (t,\xi ^\mathrm {in}_\sigma , \mu ^\mathrm {in})\) solve the ordinary differential Eq. (2.6a) together with (2.6b), (2.6c) and (2.7), they are referred to as the mean-field characteristic flow. In this case, \(\mu \in C_{{\mathcal {P}}({\mathbb {S}})}^M\), given by (2.6b), is a solution of the system (2.6)–(2.7).

Remark 2.4

For a given mean-field characteristic flow \(\Phi _\sigma \), the solution \(\mu \) of the characteristic system is uniquely given by (2.6b). Conversely, for a given solution \(\mu \in C_{{\mathcal {P}}({\mathbb {S}})}^M\), the mean-field characteristic flow \(\Phi _\sigma \) is unique, as one can see by integrating (2.6a) and (2.6c).

3.2 Existence and uniqueness for the characteristic system

We start with existence and uniqueness building upon ideas by Neunzert [39] developed in the context of more classical single-population kinetic models, which lead to similar mean-field limits in comparison to our system (2.6)–(2.7). First, we have to define a suitable space for solutions.

Definition 2.5

Let \(T>0\). A function \(\mu :[0,T]\rightarrow {\mathcal {P}}({\mathbb {S}})\) is weakly continuous if for all \(f\in C({\mathbb {S}})\) the map

is continuous. Let \(C_{{\mathcal {P}}({\mathbb {S}})}\) be the set of all weakly continuous functions \(\mu :[0,T] \rightarrow {\mathcal {P}}({\mathbb {S}})\).

For a given \(\mu \in C_{{\mathcal {P}}({\mathbb {S}})}^M\), let \(T_{t,s}^\sigma [\mu ]\) denote the flow induced by the velocity field \(({\mathcal {K}}_\sigma \mu (t))(\phi )\), i.e.,

For given \(\mu ^\mathrm {in}\in {\mathcal {P}}({\mathbb {S}})^M\) we now consider the mapping

It can be shown that A as defined in (2.9) is a self mapping when choosing an appropriate metric, see Lemma A.9. The following theorem then follows from the contraction mapping principle:

Theorem 2.6

(cf. [39], Theorem 2) For given \(\mu ^\mathrm {in} = (\mu _1^\mathrm {in},\dots ,\mu _M^\mathrm {in})\in {\mathcal {P}}({\mathbb {S}})^M\) there exists a unique solution \(\mu \in C_{{\mathcal {P}}({\mathbb {S}})}^M\) for the system (2.6)–(2.7).

Remark 2.7

As \(T>0\) is arbitrary, we do not explicitly include T in the notation \(C_{{\mathcal {P}}({\mathbb {S}})}\) for functions mapping from [0, T] to \({\mathcal {P}}({\mathbb {S}})\). Since the following existence and uniqueness result as well as results regarding continuous dependence on initial conditions are valid for all \(T>0\), they can be extended to hold on the half-open interval \([0,\infty )\).

Example 2.8

(Discrete initial measures) As a special case of the characteristic system (2.6)–(2.7), one is often interested in choosing \(\mu ^\text {in}\) as a discrete measure. In particular, initial measures of the form

describe the discrete states of N oscillators in each of the M populations. It can easily be seen, that if functions \(\phi _{\sigma ,k}:{\mathbb {R}}_{\ge 0} \rightarrow {\mathbb {S}}\) solve the finite-dimensional generalized Kuramoto system

with initial condition \(\phi _{\sigma ,k}(0) = \phi ^\mathrm {in}_{\sigma ,k}\), then the measures

solve the characteristic system (2.6)–(2.7).

3.3 Vlasov–Fokker–Planck mean-field equation

Consider the special case that the initial measures \(\mu ^\text {in}_1,\dots ,\mu ^\text {in}_M \in {\mathcal {P}}({\mathbb {S}})\) all have a density, that we denote by \(\rho _\sigma ^\text {in}(\phi )\). It can be shown that the measures \(\mu _\sigma (t)\) for \(\sigma \in [M]\) also have densities \(\rho _\sigma (t,\phi )\) for times \(t\ge 0\) and they solve the Vlasov–Fokker–Planck Mean-Field Equation

with

and \(\sigma \in [M]\). Here, \(\mu _{\rho (t)}\in {\mathcal {P}}({\mathbb {S}})^M\) is the collection of measures whose densities are given by \((\rho _\sigma (t,\cdot ))_{\sigma \in [M]}\). The initial conditions

are given such that

Definition 2.9

(Strong Solution) A family of densities \(\rho _\sigma \in C^1([0,T]\times {\mathbb {S}})\) is a strong solution if it satisfies (2.13) and (2.14).

By this definition non-differentiable densities cannot be strong solutions. To make them admissible anyway, we weaken the definition by multiplying (2.13) by a test function \(w_\sigma \in C^1([0,T] \times {\mathbb {S}})\) that has compact support in \([0,T)\times {\mathbb {S}}\) and perform partial integration using the boundary condition (2.14). By doing so we arrive at the following definition of a weak solution:

Definition 2.10

(Weak Solution) A collection of measurable functions \((\rho _\sigma )_{\sigma \in [M]}\) with \(\rho _\sigma :[0,T]\times {\mathbb {S}}\rightarrow {\mathbb {R}}\) is a weak solution of the initial value problem (2.13)–(2.14) if the following two conditions are fulfilled:

-

For every \(f\in C({\mathbb {S}})\) and for all \(\sigma \in [M]\), the maps \(t\mapsto \int _{\mathbb {S}}f(\alpha )\rho _\sigma (t, \alpha ) \mathrm {d}\alpha \) are continuous.

-

For all \(\sigma \in [M]\) and \(w_\sigma \in C^1([0,T] \times {\mathbb {S}})\) with compact support in \([0,T)\times {\mathbb {S}}\), the following identity holds

$$\begin{aligned}&\int _0^T\int _{\mathbb {S}}\rho _\sigma (t,\phi )\left( \frac{\partial }{\partial t} w_\sigma (t,\phi ) + V_\sigma [\rho ](t,\phi ) \frac{\partial }{\partial \phi }w_\sigma (t,\phi )\right) \mathrm {d}\phi \mathrm {d}t\\&\quad +\int _{\mathbb {S}}\rho ^\mathrm {in}_\sigma (\phi )w_\sigma (0,\phi )\ \mathrm {d}\phi = 0. \end{aligned}$$

However, not all measures need to have a density. To make all measures admissible, we weaken the definition once again.

Definition 2.11

(Weak Measure-Valued Solution) A family of measures \((\mu _\sigma )_{\sigma \in [M]}\in C_{{\mathcal {P}}({\mathbb {S}})}^M\) is a weak measure valued solution of the initial value problem (2.13)–(2.14) if for all \(\sigma \in [M]\) and \(w_\sigma \in C^1([0,T] \times {\mathbb {S}})\) with compact support in \([0,T)\times {\mathbb {S}}\), the following identity holds:

Finally, weak measure valued solutions of the initial value problem (2.13)–(2.14) is the most general definition of solutions. In fact, it can be shown that this definition is equivalent with the definition of solutions to the system of characteristic Eqs. (2.6) and (2.7). A family of measures \((\mu _\sigma )_{\sigma \in [M]}\in C_{{\mathcal {P}}({\mathbb {S}})}^M\) is a weak measure valued solution of the initial value problem (2.13)–(2.14) if and only if it is a solution to the system of characteristic Eqs. (2.6) and (2.7). This can be shown by following the calculations in [25, Theorem 2.3.6 and Theorem 3.3.4] and [39].

Even though these two formulations of solutions are equivalent, we work with the system of characteristic equations, since this is more convenient to study the stability of solutions in Sect. 3.

4 Synchrony and synchrony patterns and their stability

The emergence of synchrony is an essential collective phenomenon of coupled oscillator networks. Recall that for a single population of identical phase oscillators, the splay state \(\mathrm {D}\) describes the phase configurations where the phases are equidistributed on the circle and \(\mathrm {S}\) denotes full (phase) synchrony where the phase of all oscillators take the same value. Networks that consist of multiple populations of phase oscillators, can give rise to synchrony patterns, where individual populations are either phase synchronized or in splay state. In this section we analyze the stability properties of such synchrony patterns in the mean-field system (2.6), (2.7). We find that patterns that contain fully synchronized populations cannot be asymptotically stable for the characteristic mean field equations, even relative to phase shift invariance.

Definition 3.1

We write \(\mathrm {D}= \left\{ \frac{1}{2\pi }\lambda _{\mathbb {S}}\right\} \), where \(\lambda _{\mathbb {S}}\) is the Hausdorff measure on \({\mathbb {S}}\), and \(\mathrm {S}= \left\{ \, \delta _\xi \,\left| \;\xi \in {\mathbb {S}}\right. \right\} \). Population \(\sigma \in [M]\) is in splay phase \(\mathrm {D}\) if \(\mu _\sigma \in \mathrm {D}\) and phase synchronized if \(\mu _\sigma \in \mathrm {S}\).

We adopt the notation in [10] and write \(\mathrm {D}\) if a population is in splay configuration and \(\mathrm {S}\) if it is phase synchronized. That is, we write

to indicate that population \(\sigma \) is fully phase synchronized or in splay phase. We extend the notation to intersections of the sets (3.1). Consequently, \(\mathrm {S}\cdots \mathrm {S}\) (M times) is the set of cluster states where all populations are fully phase synchronized and \(\mathrm {D}\cdots \mathrm {D}\) the set where all populations are in splay phase. While it is easy to see that the synchrony pattern \(\mathrm {S}\cdots \mathrm {S}\) is invariant under the dynamics of (2.6), (2.7), this is in general not true for \(\mathrm {D}\cdots \mathrm {D}\). For this synchrony pattern to be invariant, we additionally assume that each population is only influenced by phase differences of oscillators from the same population. To make this precise, we assume the multi-indices \(s^\sigma \) and the coupling functions \(G_\sigma \) to be of a special form. Firstly, the length of the multi-index \(s^\sigma \) is supposed to be an odd number, i.e. \(\left|s^\sigma \right| = 2L_\sigma +1\) for some \(L_\sigma \in {\mathbb {N}}_{\ge 0}\). Secondly, each second entry equals the previous entry, i.e. \(s^\sigma _{2j} = s^\sigma _{2j-1}\) for all \(j\in [L_\sigma ]\) and thirdly, we assume the last entry to be given by \(\sigma \) itself, i.e. \(s^\sigma _{2L_\sigma +1} = \sigma \). Summarizing these conditions we can write the multi-indices \(s^\sigma \) as

for \(r^\sigma _j:=s^\sigma _{2j}\). These coefficients can be summarized into new multi-indices \(r^\sigma = (r^\sigma _1,\dots , r^\sigma _{L^\sigma })\) with \(\left|r^\sigma \right| = L_\sigma \). Further, the coupling functions \(G_\sigma :{\mathbb {S}}^{\left|s^\sigma \right|}\times {\mathbb {S}}\rightarrow {\mathbb {R}}\) are supposed to be of the form

for functions \(g_\sigma :{\mathbb {S}}^{L_\sigma }\times {\mathbb {S}}\rightarrow {\mathbb {R}}\). These conditions can be summarized by requiring the velocity field \({\mathcal {K}}_\sigma \mu \) to be given by

With this coupling, one population can only influence another population through its phase differences, which can lead to additional symmetries [14].

In the remainder of this paper we study the system (2.6) with (3.4). Our examples can be recast in the notation above:

Example 3.2

(Skardal–Arenas) Let us reconsider Example 2.1. While the multiindex \(s^1=(1,1,1)\) can be cast into the form (3.2), the coupling function (2.3) is not of the form (3.3), since it does not only depend on \(\alpha _1-\alpha _2\) and \(\alpha _3-\phi \). This is only true when \(K_2=0\). In this case the new multi-index is given by \(r^1 = (1)\) and the coupling function \(g:=g_1\) is given by

Example 3.3

(Heteroclinic Structures) Now, we reconsider Example 2.2. Note that all of the multi-indices are of the special form (3.2). Furthermore, the coupling functions are only dependent on \(\alpha _1-\alpha _2, \alpha _3-\alpha _4\) and \(\alpha _5-\phi \). Thus, with \(r^1 = (3,1), r^2 = (1,3), r^3 = (2,1)\) this system can also be put into the form (3.4). The coupling functions are given by \(g_1(\alpha ,\phi ) = g_2(\alpha ,\phi ) = g_3(\alpha ,\phi ) := g(\alpha ,\phi )\), with

Proposition 3.4

(Reducibility to lower dimensions) If we fix m populations, each to be in splay or in synchronized state, the other \(M-m\) populations behave accordingly to (2.6), (3.4) with \(M-m\) instead of M and different coupling functions.

Since this Proposition can be proven by straightforward insertion of the synchronized or splay state in (3.4); for completeness the full proof of this proposition can be found in the Appendix A.2.

Now, we can deduce that synchrony patterns that consist of synchronized and splay states are dynamically invariant:

Proposition 3.5

Subsets of the form (3.1) are invariant under the flow of (2.6), (3.4).

The full proof can be found in Appendix A.2, but we include a short sketch here: For the synchronized state this is clear, since it is represented by a Dirac measure and push-forwards of those, as considered in (2.6), are still Dirac measures. If a population is in splay state, the evolution operator \(({\mathcal {K}}_\sigma \mu (t))(\phi )\) is independent of \(\phi \), as one can see by conducting a phase shift. As \(\mathrm {D}\) is invariant under rotations the splay state is dynamically invariant, too.

Remark 3.6

Because intersections of invariant sets are again invariant, any combination of \(\mathrm {S},\mathrm {D}\) and \((\mu _\sigma )_{\sigma \in [M]}\) is also invariant.

4.1 Stability of phase synchronized states

What is the (asymptotic) stability of phase synchrony? For networks for finitely many oscillators, (linear) stability is determined by the derivative of the coupling function at zero [3]. For mean-field limits, previous work has predominantly considered networks with sinusoidal coupling. Carillo et al. [17] considered the stability of the synchronized state with respect to the Wasserstein-1 distance: For a single population with coupling function \(g(\phi ) = \sin (\phi )\), the authors proved that whenever the initial measure is contained in one half of the circle \({\mathbb {S}}\) and \(K>0\), the measure valued solution converges to a synchronized state in the Wasserstein-1 metric as \(t\rightarrow \infty \) [17, Theorem 4.1]. However, requiring the initial measure to be contained in one half of the unit circle does not capture all generic perturbations of the synchronized state with respect to this metric. Moreover, this result depends on the specific coupling function \(\sin \). Benedetto et al. [8] derived similar result for a continuity equation that describes the evolution of identical phase oscillators for \(g(\phi ) = \sin (\phi )\): Whenever a initial density is not stationary, it converges weakly to a measure of the form \(\delta = c_1\delta _\theta + c_2\delta _{\theta +\pi }\), where \(\theta \in {\mathbb {S}}, c_1,c_2\ge 0\) and \(c_1+c_2=1\) [8, Theorem 3.1]. Moreover, the measure converges to full synchrony if the initial measure is non-atomic. While in this case weak convergence of measures to a synchronized state is equivalent to convergence of the measure to a synchronized state with respect to the Wasserstein-1 metric, the result depends on the simple sinusoidal coupling function. Note that the special case of sinusoidal coupling possesses additional structure such that the theory developed by Watanabe and Strogatz [53] can be applied to understand the dynamics in the global phase space; higher-harmonics and generic nonpairwise interactions break this structure.

We here elucidate the stability of phase synchrony (and, by extension synchrony patterns, where some populations are fixed in synchronized or splay state) for general coupling functions with nontrivial higher-harmonics and nonpairwise interactions. Moreover, our main Theorems 3.16 and 3.22 yield statements about stability with respect to generic perturbations of phase synchrony with respect to the Wasserstein-1 metric.

Phase configurations in which populations is synchronized are dynamically invariant. Note that phase-synchronized are sets rather than points. More specifically, for a single population the synchronized state is the set

Similarly, if there are multiple populations, the all-synchronized state is

Thus, we have to consider the stability of these sets.

Notation 3.7

For an ease of notation, we will write

-

\(W_1(\mathrm {S}, \mu ) = \inf _{\delta \in \mathrm {S}} W_1(\delta , \mu )\), for \(\mu \in {\mathcal {P}}({\mathbb {S}})\),

-

\(\mu (t)\rightarrow \mathrm {S}\) as \(t\rightarrow \infty \) if \(\lim _{t\rightarrow \infty } W_1(\mathrm {S},\mu (t))=0\), for \(\mu \in C_{{\mathcal {P}}({\mathbb {S}})} \),

-

\({\mathbb {B}}(\mathrm {S}, \epsilon ) = \bigcup _{\delta \in \mathrm {S}} {\mathbb {B}}(\delta , \epsilon )\),

where \({\mathbb {B}}(\delta , \epsilon )\) denotes the ball centered at a measure \(\delta \) of radius \(\epsilon \) in the Wasserstein-1 metric.

The following definition is the natural variant of (Lyapunov) stability in our setting:

Definition 3.8

The set \(\mathrm {S}^M\) is stable if for all \(\sigma \in [M]\) and all neighborhoods \(U_\sigma \subset {\mathcal {P}}({\mathbb {S}})\) of \(\mathrm {S}\) there exist neighborhoods \(V_\sigma \) of \(\mathrm {S}\) such that for any \(\mu ^\mathrm {in} = (\mu _1^\mathrm {in},\dots ,\mu _M^\mathrm {in})\in V_1\times \dots \times V_M\), the solution \(\mu (t)\) of (2.6), (3.4) satisfies \(\mu (t)\in U_1\times \dots \times U_M\) for all \(t\ge 0\).

Of course, one often does not only want to show stability of solutions staying near an invariant set but also that the solutions tend towards the invariant set:

Definition 3.9

The set \(\mathrm {S}^M\) is asymptotically stable if it is stable and, additionally, there exists a neighborhood \(V = V_1\times \dots \times V_M\subset {\mathcal {P}}({\mathbb {S}})^M\) such that for all \(\mu ^\mathrm {in}\in V\) the solution of (2.6), (3.4) satisfies \(\mu _\sigma (t) \rightarrow \mathrm {S}\) as \(t\rightarrow \infty \) for all \(\sigma \in [M]\).

Remark 3.10

The two Definitions 3.8 and 3.9 are formulated in terms of the topology created by the distance \(\max _{\sigma \in [M]} W_1(\mathrm {S}, \mu _\sigma )\). However, instead of taking the maximum, one can also sum over \(W_1(\mathrm {S}, \mu _\sigma )\), to get a definition that resembles the metric used to prove existence and uniqueness of (2.6) more closely. However, as these topologies are equivalent, we use Definitions 3.8–3.9 in the topology generated by \(\max _{\sigma \in [M]} W_1(\mathrm {S}, \mu _\sigma )\), as this setting seems easier to work with.

Remark 3.11

The two Definitions 3.8 and 3.9 also make sense when considered with the more general coupling (2.7). However, we only work with them in the context of the velocity fields (3.4).

Since it turns out that results about stability and asymptotic stability of the all-synchronized state are dependent on the derivative of \(g_\sigma (\alpha ,\gamma )\) with respect to \(\gamma \), evaluated at \((\alpha ,\gamma )=(0,0)\), we introduce the following abbreviations:

Let us now assume that \(a_\sigma >0 \text { for all }\sigma \in [M]\) and

for all \(\sigma \in [M]\) and all \(\alpha \in (-\eta ,\eta )^{\left|r^\sigma \right|}, \phi \in (-\eta ,\eta )\). We will later impose conditions on \(\kappa >0\) and then choose \(\eta >0\) accordingly to (3.5). First, however, we illustrate that one cannot generically expect asymptotic stability.

4.1.1 No generic asymptotic stability

This section illustrates that the all-synchronized state cannot be asymptotically stable under a generic condition, which is in this case \(a_\sigma \ne 0\) for at least one \(\sigma \in [M]\). Assume that we do not have generic asymptotic stability for \(M=1\). Generalizing this to M populations can then be easily done by applying the results for one population to an invariant subset of the form \(\mathrm {S}\cdots \mathrm {S}\mu _\sigma \mathrm {S}\cdots \mathrm {S}\), with \(\sigma \in [M]\) chosen such that \(a_\sigma \ne 0\). No asymptotic stability in this subset with one free population then yields no asymptotic stability in the whole system with M free populations.

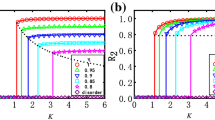

Thus, we only consider the case \(M=1\), so we assume \(a_1\ne 0\). The idea is to construct invariant cluster states that are arbitrarily close to the synchronized state with respect to the Wasserstein-1 metric. When the coupling function is given by a pure sine, such cluster states have their total mass split up onto two points at the opposite side of the circle, such that the phase difference between these two points is \(\pi \). Since \(\sin (\pi )=0\) their invariance is confirmed. Consequently, in this case, the existence of invariant clusters is clear. We constructively show that invariant cluster states that get arbitrarily close to the synchronized state also exist for general coupling functions. Along this family of cluster states, no asymptotic convergence can take place. To accomplish this construction, consider a perturbation of the synchronized state of the form

for \(\phi _1^\mathrm {in},\phi _2^\mathrm {in},\in {\mathbb {S}}\) and \(n\in {\mathbb {N}}_{\ge 1}\). Now, \(\mu (t):=\mu _1(t)\) obeys the system of characteristic Eqs. (2.6) and (3.4) with \(M=1\). As the push-forward of \(\mu ^\mathrm {in}\), specifically the convex combination of two Dirac distributions, is again a convex combination of two Diracs, the solution \(\mu (t)\) is given by \(\mu (t) = \left( 1-\frac{1}{n}\right) \delta _{\phi _1(t)} + \frac{1}{n}\delta _{\phi _2(t)}\) for \(\phi _1(t) = \Phi (t,\phi _1^\mathrm {in},\mu ^\mathrm {in})\) and \(\phi _2(t) = \Phi (t,\phi _2^\mathrm {in},\mu ^\mathrm {in})\). Using the notation \(\chi = \left|r^1 \right|\), their difference \(\Psi (t):=\phi _2(t)-\phi _1(t)\) satisfies the differential equation

Obviously, \(\mu ^\mathrm {in}\in \mathrm {S}\) if and only if \(\phi _1^\mathrm {in} = \phi _2^\mathrm {in}\) and further, \(\mu (t)\rightarrow \mathrm {S}\) if and only if \(|\Psi |_{\mathbb {S}}\rightarrow 0\). However, as we have assumed \(a_1\ne 0\), for each large enough n, there exist \(\Psi ^0_n\) with \(|\Psi ^0_n |_{\mathbb {S}}>0\) such that \(f_n(\Psi ^0_n) = 0\). Consequently, if \(\Psi (0)=\Psi ^0_n\), \(\Psi (t)=\Psi ^0_n\) for all \(t\ge 0\).

Now suppose for contradiction that \(\mathrm {S}\) was asymptotically stable. Then, according to Definition 3.9, there must exist a neighborhood V of \(\mathrm {S}\) such that for all \(\mu ^\mathrm {in}\in V\), the solution of (2.6), (3.4) satisfies \(\mu (t)\rightarrow \mathrm {S}\) as \(t\rightarrow \infty \). Then, there also has to exist \(\epsilon _V>0\) with \({\mathbb {B}}(\mathrm {S},\epsilon _V)\subset V\). On the one hand, choosing n such that \(\frac{\pi }{n}<\epsilon _V\) and the initial measure according to (3.6) with \(\phi _2^\mathrm {in}-\phi _1^\mathrm {in} = \Psi _n^0\) yields

so \(\mu ^\mathrm {in}\in V\). On the other hand \(\phi _2(t)-\phi _1(t) = \Psi _n^0\) for all \(t\ge 0\) and thus \(\mu (t)\) does not converge to \(\mathrm {S}\), which contradicts asymptotic stability.

We illustrate the fact of no generic asymptotic stability at an example:

Example 3.12

In the easiest case, there is only one population \(M=1\), no higher-order interactions, i.e. \(r^1 = ()\), and the coupling function is given by \(g_1(\gamma ) = \sin (\gamma )\). Then, the velocity field (3.4) is given by

In this case, the function \(f_n(\Psi )\) is just given by \(f_n(\Psi ) = \sin (\Psi )\), independent of n. Therefore, \(\Psi (t)\) satisfies \({\dot{\Psi }}(t) = -\sin (\Psi (t))\). Apart from the trivial equilibrium at \(\Psi =0\), there is another equilibrium at \(\Psi ^0_n = \pi \). Consequently, cluster states of the form \((1-\frac{1}{n})\delta _{\phi _1(t)} + \frac{1}{n} \delta _{\phi _2(t)}\) remain invariant over time if \(\phi _2^\mathrm {in}-\phi _1^\mathrm {in} = \pi \). Since this analysis applies for any n, there is a family of invariant cluster states, that converges to the synchronized state if \(n\rightarrow \infty \). On the one hand, asymptotic stability of the synchronized state means that every initial configuration in a small neighborhood of the synchronized state converges to it, see Definition 3.9. On the other hand, in this case, every small neighborhood around the synchronized state contains other invariant states. Therefore, when taking these invariant states as initial configurations, one can see that the synchronized state cannot be asymptotically stable.

4.1.2 Stability

Next, we are going to show that large classes of generic systems do admit at least stability of the synchronized state for large parameter regions. We want to remark that results from this section rely on the general notation defined in Sect. 1 and on the dynamics specific notation introduced at the beginning of Sect. 3.

Lemma 3.13

Let \(\xi _1,\xi _2\in {\mathbb {S}}\). For any \(\sigma \in [M], \mu ^\mathrm {in}\in {\mathcal {P}}({\mathbb {S}})^M\) and \(\phi _1(t) := \Phi _\sigma (t,\xi _1,\mu ^\mathrm {in})\) and \(\phi _2(t):=\Phi _\sigma (t,\xi _2,\mu ^\mathrm {in})\), the mass of \(\mu _\sigma (t)\) on the arc \((\phi _1(t), \phi _2(t))\), as defined in (1.4), i.e.,

remains constant over time.

Proof

By continuity of \(\Phi _\sigma (t,\xi ,\mu ^\mathrm {in})\) with respect to t and \(\xi \), \(\Phi ^{-1}_\sigma (t,(\phi _1(t), \phi _2(t)),\mu ^\mathrm {in}) = (\phi _1(0),\phi _2(0)) = (\xi _1,\xi _2)\), where the inverse is taken only with respect to the \(\xi \) variable. By (2.6b),

for all \(t\ge 0\). Therefore, the integral on the left-hand side in indeed independent of t. \(\square \)

Lemma 3.14

For all \(\sigma \in [M]\) and any two particles \(\phi _1^\sigma (t) := \Phi _\sigma (t,\xi _1^\sigma ,\mu ^\mathrm {in})\) and \(\phi _2^\sigma (t):=\Phi _\sigma (t,\xi _2^\sigma ,\mu ^\mathrm {in})\), we define the phase difference \(\Psi _\sigma (t) := \phi _2^\sigma (t)-\phi _1^\sigma (t)\in [0,2\pi )\). Let \(m^\mathrm {inside}_\sigma \) denote the \(\mu _\sigma \)-mass inside the interval \((\phi _1^\sigma (t),\phi _2^\sigma (t))\), which is by Lemma 3.13 independent of t. Then, there exists a constant \(C>0\) such that whenever \(0<\Psi _\sigma (t)<\eta \) for all \(\sigma \in [M]\), they satisfy

Proof

Let us consider a decomposition of the probability measures \(\mu _\sigma (t)\) into two measures \(\mu _\sigma ^\text {inside}(t)\) and \(\mu _\sigma ^\text {outside}(t)\) with

and \({\text {supp}}(\mu _\sigma ^\text {inside}(t))\subset ((\phi _1^\sigma (t), \phi _2^\sigma (t))\), \({\text {supp}}(\mu _\sigma ^\text {outside}(t))\subset {\mathbb {S}}{\setminus }((\phi _1^\sigma (t), \phi _2^\sigma (t))\). Then, a calculation shows

Here, the equality \((*)\) was achieved by decomposing each measure \(\mu _i\) into its components according to (3.7) and rearranging terms such that every integrand with an integral running over at least one measure of the type \(\mu ^\text {outside}\) is contained in the part “integrals over \(\mu ^\text {outside}\)”. We can easily estimate these integrals from above by first combining the integrals into a single one running over \(\mu _\sigma ^\text {outside}\) (with the integrand still consisting of integrals) and then taking the supremum norm C of the integrand. As the total mass of \(\mu _\sigma (t)\) equals 1 and the \(\mu _\sigma \)-mass inside the interval \((\phi _1^\sigma (t),\phi _2^\sigma (t))\) is \(m^\mathrm {inside}_\sigma \), the mass outside this interval evaluates to \(1-m^\mathrm {inside}_\sigma \). Consequently, the terms summarized in “integrals over \(\mu ^\text {outside}\)” can be bounded from above by \(C(1-m^\mathrm {inside}_\sigma )\). The inequality \((**)\) is based on linear approximation and the fact that \(\Psi _\sigma (t)<\eta \).

\(\square \)

Lemma 3.15

Let \(\mu \in {\mathcal {P}}({\mathbb {S}})\) be a probability measure on the circle, \(\xi _1,\xi _2\in {\mathbb {S}}\) and

Then, \(W_1(\mathrm {S}, \mu ) < (\xi _2-\xi _1)m^\mathrm {inside} + \pi (1-m^\mathrm {inside})\).

Proof

Using (1.5a), we calculate

\(\square \)

We can not put the previous lemmas together and formulate our main theorem:

Theorem 3.16

If the coupling functions \(g_\sigma \) are continuously differentiable, i.e., \(g_\sigma \in C^1({\mathbb {S}}^{\left|r^\sigma \right|}\times {\mathbb {S}})\), and they satisfy \(a_\sigma >0\) for all \(\sigma \in [M]\) then, the set of all-synchronized states \(\mathrm {S}^M\) is stable.

Proof

To verify Definition 3.8, let \(U_1,\dots ,U_M\) be neighborhoods of \(\mathrm {S}\) and choose \(\epsilon _U\) such that \({\mathbb {B}}(\mathrm {S},\epsilon _U)\subset U_\sigma \) for all \(\sigma \in [M]\). Further, let \(\eta >0\) be such that (3.5) is fulfilled with \(\kappa = \min _{\sigma \in [M]} a_\sigma /2=:a_0/2\). Now, first choose \(\zeta >0\) with \(\zeta < \min (\frac{\eta }{2},\frac{\epsilon _U}{4})\) and then \(\epsilon _V>0\) so small, that both

with C coming from Lemma 3.14, and \(\epsilon _V < \frac{\epsilon _U\zeta }{2\pi }\). To satisfy Definition 3.8 we can then take \(V_\sigma = {\mathbb {B}}(\mathrm {S},\epsilon _V)\) for all \(\sigma \in [M]\).

To see that indeed \(\mu _\sigma (t)\in {\mathbb {B}}(\mathrm {S},\epsilon _U)\subset U_\sigma \) for all \(t\ge 0\) provided that \(\mu ^\mathrm {in}_\sigma \in V_\sigma \), we take \(\mu ^\mathrm {in}_\sigma \in V_\sigma \) and \(\xi _1,\dots ,\xi _M\in {\mathbb {S}}\) with \(W_1(\delta _{\xi _\sigma }, \mu ^\mathrm {in}_\sigma )<\epsilon _V\). The representation of the Wasserstein-1 distance (1.5a) yields

To see this, note that

Dividing by \(\zeta \) yields (3.9). As a result,

If we now trace the 2M particles defined by \(\phi _1^\sigma (t) := \Phi _\sigma (t,\xi _\sigma -\zeta , \mu ^\mathrm {in})\) and \(\phi _2^\sigma (t):=\Phi _\sigma (t,\xi _\sigma +\zeta ,\mu ^\mathrm {in})\), Lemma 3.13 yields

and

for all \(t\ge 0\). Next, we apply Lemma 3.14 to obtain that the phase differences \(\Psi _\sigma (t) := \phi _2^\sigma (t)-\phi _1^\sigma (t)\) satisfy

which stays valid if \(\Psi _\sigma (t)<\eta \) for all \(\sigma \in [M]\). First note, that the choice of \(\epsilon _V\) such that (3.8) holds true, yields that for \(\Psi _\sigma (t) = 2\zeta \), the right-hand side of (3.11) is negative:

Therefore, for \(\Psi _\sigma (t) = 2\zeta \), the derivative satisfies \({\dot{\Psi }}_\sigma (t)<0\) if all other components satisfy \(\Psi _i(t)<\eta \) for all \(i\ne \sigma \). To be precise, the region

is invariant under the flow of (3.10). To see that, first note that \(\Psi _\sigma (0) = 2\zeta \) for all \(\sigma = 1,\dots ,M\), so \({\mathcal {R}}\) is effectively a hyper cube \({\mathcal {R}} = [0,2\zeta ]^M\). For given \(\sigma \), the component \(\Psi _\sigma (t)\) can not leave the hyper cube through 0, because that would mean that the two particles \(\phi _1^\sigma (t)\) and \(\phi _2^\sigma (t)\) collide. A trajectory \((\Psi _1(t), \dots , \Psi _M(t))\) also can not leave \({\mathcal {R}}\) by one component exceeding the value \(2\zeta \). Suppose, for a contradiction that there is a time \(t^\star >0\) such that \(\Psi _\sigma (t^\star ) = 2\zeta \) for one \(\sigma \in [M]\) and let \(t^\star \) be the first time that happens. Then, however, all components still satisfy \(\Psi _\sigma (t^\star ) \le 2\zeta < \eta \) and thus (3.11) is valid. By the calculation (3.12) \({\dot{\Psi }}_\sigma (t^\star )<0\), so \({\mathcal {R}}\) is indeed invariant.

Consequently, for all \(\sigma = 1,\dots ,M\) and all \(t\ge 0\), we have \(\Psi _\sigma (t)< 2\zeta \) and thus, by Lemma 3.15,

So indeed \(\mu _\sigma (t)\in {\mathbb {B}}(\mathrm {S},\epsilon _U)\subset U_\sigma \) for all \(t\ge 0\). This verifies Definition 3.8 and therefore concludes the proof. \(\square \)

4.1.3 Almost asymptotic stability

One might now hope that although we do not have asymptotic stability, we can expect asymptotic stability of large classes of initial conditions as the family of steady states constructed above is a rather small part of phase space.

Before stating theorems regarding asymptotic stability, we need to introduce the concept of phase differences, as this concept becomes important in the subsequent proofs. Similarly to the original system (2.6), the system of phase differences describes the temporal evolution of oscillators, which can be grouped into populations, on the circle. Unlike the original system (2.6), in the system of phase differences, the position of the oscillators is not given in absolute coordinates but instead with respect to reference oscillators. The system of phase differences is given by

with

Recasting the system in terms of phase differences, reduces the continous symmetry operation that shifts all phases (in a single population) by a constant amount; cf. [3] for finite-dimensional systems and [14] for mean-field limits. The following example makes this reduction to phase differences explicit for a system consisting of finitely many discrete oscillators.

Example 3.17

The finite-dimensional Kuramoto model for N identical oscillators is given by the system of ordinary differential equations

To obtain the system of phase differences (with respect to the first oscillator), we define \(\psi _k:=\phi _k-\phi _1\). These new variables then fulfill the system of phase differences

The system (3.13), (3.14) generalizes this concept to the mean-field limit of multiple population coupled via higher-order interactions.

Notation 3.18

Let \(\zeta \in {\mathbb {S}}\). When using the notation \(m_\zeta \), we refer to the function \(m_\zeta :{\mathbb {S}}\rightarrow {\mathbb {S}}\) with \(m_\zeta (\phi ) = \phi -\zeta \).

Lemma 3.19

Let \(\zeta _1,\dots ,\zeta _M\in {\mathbb {S}}, \mu ^\mathrm {in}\in {\mathcal {P}}({\mathbb {S}})^M\), suppose that \(\mu (t)\) solves the system (2.6), (3.4) and let \(\Phi _\sigma (t,\xi ^\mathrm {in}_\sigma ,\mu ^\mathrm {in})\) be its mean-field characteristic flow. Now define

and

for \(\sigma \in [M]\). Then, \(\nu (t)\) and \(\Psi _\sigma (t,\xi ^\mathrm {in}_\sigma ,\nu ^\mathrm {in})\) solve the system (3.13), (3.14).

The proof of this Lemma can be found in the Appendix A.3. The Lemma basically generalizes Example 3.17 to the infinite-dimensional system. Then, \(\Phi _\sigma (t,\zeta _\sigma ,\mu ^\mathrm {in})\) takes the role of \(\phi _1\) in Example 3.17. It can be seen as the position of reference oscillators we have talked about at the beginning of this section.

Remark 3.20

Lemma 3.19 is especially useful because the only operation used to create the measures \(\nu _\sigma (t)\) from the measures \(\mu _\sigma (t)\) is a rotation by \(\Phi _\sigma (t,\zeta _\sigma ,\mu ^\mathrm {in})\) around the circle. Therefore, \(W_1(\mathrm {S},\nu _\sigma (t)) = W_1(\mathrm {S},\mu _\sigma (t))\) and \(\mu _\sigma (t)\rightarrow \mathrm {S}\) if and only if \(\nu _\sigma (t)\rightarrow \mathrm {S}\).

Lemma 3.21

If the coupling functions \(g_\sigma (\alpha ,\gamma )\) are continuously differentiable and the derivative \(g_\sigma ^{(0,1)}\) is Lipschitz continuous with constant \(L_1\) then, the coupling operator \({\mathcal {F}}_\sigma \) satisfies

Proof

Follows from (3.14) and Lemma A.5. \(\square \)

Theorem 3.22

Suppose that \(a_\sigma >0\) for all \(\sigma \in [M]\) and let the coupling functions \(g_\sigma \) be chosen such that \(g^{(0,1)}_\sigma \) are Lipschitz continuous with constant \(L_1\). Further, assume that each of the functions

has exactly two zeros around the circle, the trivial one at 0 and another one at \(\psi ^0_\sigma \in {\mathbb {S}}{\setminus }\{0\}\). Moreover, suppose \(b_\sigma := {\hat{g}}'(\psi ^0_\sigma ) \ne 0\) for all \(\sigma \in [M]\). Then, initial configurations in the space of densities \(\mu ^\mathrm {in}\in {\mathcal {P}}_\mathrm {ac}({\mathbb {S}})^M\), which are close enough to the all-synchronized state, converge to the all-synchronized state as \(t\rightarrow \infty \).

Remark 3.23

Note that the assumption on absolute continuity of the measures eliminates the counterexamples from Sect. 3.1.1 as only perturbations into measures with densities are allowed. This clearly shows, why studying the mean-field Vlasov–Fokker–Planck equation for densities is often easier in comparison to our goal of deriving directly the maximum information from the characteristic system.

Proof of Theorem 3.22

As the assumptions of this theorem include the assumptions of Theorem 3.16, we can choose \(\epsilon _V>0\) such that for any \(\mu ^\mathrm {in}\in {\mathbb {B}}(\mathrm {S},\epsilon _V)^M\), \(\mu (t)\in {\mathbb {B}}(\mathrm {S},\epsilon _U)^M\) for all \(t\ge 0\). To prove asymptotic stability in the space of absolutely continuous measures, let \(\mu ^\mathrm {in}\in {\mathbb {B}}(\mathrm {S},\epsilon _V)^M\cap {\mathcal {P}}_\mathrm {ac}({\mathbb {S}})^M\), with \(\epsilon _V\) specified later, and proceed analogously to the Proof of Theorem 3.16 until we get to the point when \(\phi _2^\sigma (t)-\phi _1^\sigma (t) \le 2\zeta \) for all \(\sigma \in [M]\) and \(t\ge 0\). Next, we use Lemma 3.19 in order to switch to the system of phase differences with \(\zeta _\sigma = \phi _1^{\sigma ,\mathrm {in}} = \phi ^\sigma _1(0)\). The reference oscillators in this system of phase differences are consequently given by \(\phi ^\sigma _1(t)\). Since

for all \(t\ge 0\),

Therefore, a computation similar to the one in the Proof of Theorem 3.16 shows

so the solution \(\nu (t)\) always stays close to the all-synchronized state located at the origin. In the system of phase differences, individual particles then follow the flow

However, by (3.16) and Lemma 3.21, (3.17) can be rewritten in the form

for a small perturbation \(p_\sigma \in C^1({\mathbb {S}}\times {\mathbb {R}})\). Next, we fix \(\epsilon _U\) and with that also \(\epsilon _V\) such that for all \(\nu _1,\dots ,\nu _M\in {\mathbb {B}}(\delta _0, \epsilon _U)\), \(\left\Vert p_\sigma (\cdot ,t) \right\Vert _{C^1}\) is small enough such that the flow induced by the dynamical system (3.17) is equivalent to the one induced by \({\dot{\Psi }}_\sigma = ({\mathcal {F}}_\sigma \delta _0^M)(\Psi _\sigma )\) for all \(\sigma \in [M]\). So we consider (3.18) as a perturbed one-dimensional autonomous ODE. The existence of such an \(\epsilon _U\) is guaranteed by Lemma 3.21. Specifically, this lemma states that

uniformly in t. A particular choice of \(\epsilon _U\) can be constructed as follows: As \({\hat{g}}_\sigma '(0) = a_\sigma >0\) and there are only two roots with non-vanishing derivative on the circle, \(b_\sigma <0\). Further, let us write \(\eta ^\sigma _1,\eta ^\sigma _2>0\) for radii of intervals such that \(\inf _{\alpha \in (-\eta _1^\sigma ,\eta _1^\sigma )} {\hat{g}}_\sigma '(\alpha ) > \frac{a_\sigma }{2}\) and \(\sup _{\alpha \in (\psi ^0_\sigma -\eta _2^\sigma , \phi ^0_\sigma +\eta _2^\sigma )} {\hat{g}}_\sigma '(\alpha ) < \frac{b_\sigma }{2}\). Now, \(\epsilon _U\) can be chosen such that for all \(f_\sigma \in C^1({\mathbb {S}})\) with \(\left\Vert f_\sigma \right\Vert _{C^1}< \epsilon _f^\sigma \) the following criteria are satisfied:

-

(C1)

\(\max _{\alpha \in {\mathbb {S}}{\setminus } [(-\eta _1^\sigma ,\eta _1^\sigma )\cup (-\psi ^0_\sigma -\eta _2^\sigma , -\psi ^0_\sigma +\eta _2^\sigma )]} |f_\sigma (\alpha )|\) \(\qquad < \frac{1}{2} \min _{\alpha \in {\mathbb {S}}{\setminus } [(-\eta _1^\sigma ,\eta _1^\sigma )\cup (-\psi ^0_\sigma -\eta _2^\sigma , -\psi ^0_\sigma +\eta _2^\sigma )]} |{\mathcal {F}}_\sigma \delta _0^M(\alpha )|\),

-

(C2)

\(\max _{\alpha \in (-\eta _1^\sigma , \eta _1^\sigma )} |f_\sigma '(\alpha )|\) \(\qquad < \frac{1}{2}a_\sigma \) and \(\max _{\alpha \in (-\psi ^0_\sigma -\eta _2^\sigma , -\psi ^0_\sigma +\eta _2^\sigma )} |f_\sigma '(\alpha )| < \frac{-b_\sigma }{2}\).

While (C1) ensures that \({\mathcal {F}}_\sigma \nu (t)\) has no zeros away from the roots \(-\psi _0^\sigma \) and 0 for all \(t\ge 0\), (C2) guarantees that \({\mathcal {F}}_\sigma \nu (t)\) is strictly monotonic in the two neighborhoods around the roots. This monotonicity also causes the existence of at most one zero of \({\mathcal {F}}_\sigma \nu (t)\) near the two roots. Even though the two zeros of \({\mathcal {F}}_\sigma \nu (t)\) may be varying over time, (C2) ensures that the flow of (3.17) is still exponentially contracting in \((-\eta _1^\sigma ,\eta _1^\sigma )\) and exponentially expanding in \((-\psi ^0_\sigma -\eta _2^\sigma , -\psi ^0_\sigma +\eta _2^\sigma )\). Thus, at least one of two distinct test particles starting in \((-\psi ^0_\sigma -\eta _2^\sigma , -\psi ^0_\sigma +\eta _2^\sigma )\) leaves this region and eventually ends up in \((-\eta _1^\sigma ,\eta _1^\sigma )\). Since \(\Psi _\sigma (t,0,\nu ^\mathrm {in}) = 0\) for all \(t\ge 0\) and the contracting property of \(\Psi _\sigma (t,0,\nu ^\mathrm {in})\) around 0, the particle even converges to the origin at 0. So there exists only one trajectory, which starts at an arbitrary point \(\Psi ^\mathrm {in}_\sigma \in {\mathbb {S}}\), that does not converge to 0. By assumption, \(\nu _\sigma ^\mathrm {in}(\{\Psi ^\mathrm {in}_\sigma \}) = 0\) and hence all the mass concentrates around 0. Therefore, \(W_1(\delta _0,\nu _\sigma (t))\rightarrow 0\) as \(t\rightarrow \infty \). Because this holds true for all \(\sigma \in [M]\), the all-synchronized state is asymptotically stable for absolutely continuous perturbations. \(\square \)

Remark 3.24

(Comparing finite-dimensional stability with infinite- dimensional stability) To get a system of discrete oscillators from the general system (2.6), (3.4) one starts with a discrete initial measure (2.10) and considers the evolution of it under the system (2.6), (3.4). Then, there is a finite-dimensional ODE system that describes the evolution of each discrete oscillator in each population. This system resembles the system (2.11) but has the additional assumption (3.2), (3.3). By simple linearization, the all-synchronized state \(\mathrm {S}^M\) in this finite-dimensional system can be shown to be stable if \(a_\sigma >0\) for all \(\sigma \in [M]\). While this is the same assumption of Theorem 3.16, the result is stronger. In particular, under this assumption, \(\mathrm {S}^M\) is not only stable but also asymptotically stable in the finite-dimensional system and there is no extra assumption about additional zeros of coupling functions required as in Theorem 3.22. They key reason for this missing assumption is that in the finite-dimensional system, that tracks the positions of discrete oscillators, we only consider perturbations of \(\mathrm {S}^M\) such that the system remains finite dimensional. For all perturbations of these kind, the support of the perturbed discrete measure stays close to the support of an all-synchronized state. In particular, for small perturbations, only the local behavior of the coupling function around 0 influences the dynamics. To be precise, only the values of \(g_\sigma (\alpha ,\phi )\) with \(\alpha \in (-\epsilon ,\epsilon )^{\left|r^\sigma \right|}, \phi \in (-\epsilon ,\epsilon )\) for small \(\epsilon >0\) determine the evolution of small perturbations. On the other hand, the support of a perturbed measure when considering generic perturbations, is not related in any way to the support of the original unperturbed measure. Therefore, even slight generic perturbations of \(\mathrm {S}^M\) can be influenced by values of \(g_\sigma (\alpha ,\phi )\) for all \(\alpha \in {\mathbb {S}}^{\left|r^\sigma \right|}, \phi \in {\mathbb {S}}\). This is why we need an extra assumption about zeros of \({\hat{g}}_\sigma \) in Theorem 3.22.

Example 3.25

In the easiest case, there is just one population, i.e., \(M=1\), and no higher-order coupling. Then, the velocity field along which the first population gets transported is given by

Theorem 3.16 yields the stability of the synchronized state in this system if \(f\in C^1({\mathbb {S}})\) and \(f'(0)>0\). Further, by Theorem 3.22, the synchronized state is asymptotically stable in the space of densities if furthermore the function \(\phi \mapsto f(\psi )-f(0)\) has only one root with non-vanishing derivative around the circle except 0 and \(f'\) is Lipschitz continuous.

Remark 3.26

Theorems 3.16 and 3.22 per se only apply to perturbations in all populations. However, if we exemplarily want to analyze the stability of \(\mathrm {D}\mathrm {S}\mathrm {S}\mathrm {D}\) in a network of \(M=4\) populations, Proposition 3.4 allows us to reduce the system of four populations to equations describing only the evolution of population \(\#2\) and \(\#3\) while we keep population \(\#1\) and \(\#4\) fixed in splay state. Applying Theorems 3.16 and 3.22 consequently yields criteria for the (asymptotic) stability of \(\mathrm {D}\mathrm {S}\mathrm {S}\mathrm {D}\) with respect to perturbations in the second and third population.

Example 3.27

(Heteroclinic Structures) Consider Example 3.3 again. Having put the system into the form (3.4) allows us to apply results from Sect. 3. For example Theorem 3.16 yields the stability of the all-synchronized state if the function

satisfies \(f'(0)>0\). Let us now try to investigate the stability of \(\mathrm {S}\mathrm {D}\mathrm {D}\) with respect to perturbations in the first population. Unfortunately, we cannot apply Theorem 3.16 immediately but we have to do some preparatory steps first. So we first choose \(\mu _2^\mathrm {in}(A) = \mu _3^\mathrm {in}(A) = \frac{1}{2\pi }\lambda _{\mathbb {S}}(A)\). Then, Proposition 3.5 causes the second and third population to stay in splay state for all \(t\ge 0\). The velocity field according to which \(\mu _1(t)\) is transported is given by

Therefore, the dynamics in \(\mu _1\mathrm {D}\mathrm {D}\) can be described by a single coupling function

Only now, we can apply Theorem 3.16 to see that \(\mathrm {S}\mathrm {D}\mathrm {D}\) is stable with respect to perturbations in the first population if the function \({\hat{g}}\) satisfies \({\hat{g}}'(0)>0\).

4.2 Linear stability of splay phase configurations

We now consider the stability of splay phase configurations. Our analysis builds upon ideas for traditional Kuramoto-type models. As a warm-up recall how the stability conditions are derived in a one population model with pairwise coupling, whose evolution operator (3.4) is given by

A well-known way [44, 47] for analyzing stability of the splay state in this system is to look at the mean-field (or continuity) equation

which describes the evolution of the density \(\rho (t,\phi )\). Next, insert the ansatz \(\rho (t,\phi ) = \frac{1}{2\pi } + \epsilon \eta (t,\phi )\) into the continuity equation and collects terms of order \(\epsilon \). Assuming Fourier representations

where c.c. denotes the complex conjugate of the previous term, one can derive differential equations for the evolution of the coefficients \(c_k(t)\):

Fortunately, these equations are uncoupled and linear stability of the splay state can thus simply be infered if \({\text {Im}}(a_k)>0\) for all \(k\ge 1\). In other words, when writing the coupling function \(g(\gamma )\) as linear combinations of \(\sin (\gamma )\), \(\cos (\gamma )\) and trigonometric monomes of higher order, the prefactors of \(\sin (\gamma ), \sin (2\gamma ),\dots \) have to be negative. A similar analysis yields the linear instability of the splay state if \({\text {Im}}(a_k) <0 \) for at least one k.

Now, we extend these methods to the general case of multi-population systems (2.6), (3.4) in which higher-order interactions are present.

and the densities \(\rho _\sigma (t,\phi )\) solve the continuity Eq. (2.13), that we have introduced in Sect. 2.3. Here, \(\rho ^{(r^\sigma )}\) is the shorthand notation for

As in the simple one-population case, we consider a small perturbation around the all-splay state, i.e.,

with Fourier decompositions

Further, the coupling functions \(g_\sigma :{\mathbb {S}}^{\left|r^\sigma \right|}\times {\mathbb {S}}\rightarrow {\mathbb {R}}\) are supposed to be given in terms of its Fourier expansion as well:

The requirement \(a^\sigma _{{\mathbf {0}}, 0}=0\) is not a limitation as possible non-zero values of \(a^\sigma _{{\mathbf {0}}, 0}=0\) can be absorbed into \(\omega _\sigma \).

Given these representations, we formally insert (3.19) into the continuity Eq. (2.13), then collect terms of order \({\mathcal {O}} (\epsilon )\) and finally use the Fourier representations (3.20), (3.21) to obtain

Thus, after having taken the derivative and having collected \(e^{ik\phi }\)-terms, it is easy to see that \(c_k^\sigma (t)\) obeys the differential equation

Therefore, small perturbations of population \(\sigma \) in direction of \(e^{ik\phi } + c.c.\) with \(k\ge 1\) decay on a linear level if \({\text {Re}}(-({\bar{a}}_{{\mathbf {0}},k}^\sigma + \omega _\sigma )ik) = -k{\text {Im}}(a_{{\mathbf {0}}, k}^\sigma ) < 0\). Similarly, they grow if \(-k{\text {Im}}(a_{{\mathbf {0}},k}^\sigma ) > 0\). However, it is important to note that the Eq. (3.22) are only based on a formal derivation. Assuming nonetheless that our formal calculations can be made rigorous in this way, we can summarize our results by claiming linear stability of the all-splay state if \({\text {Im}}(a_{{\mathbf {0}},k}^\sigma ) > 0\) for all \(\sigma \in [M], k=1,2,\dots \) and linear instability if \({\text {Im}}(a_{{\mathbf {0}},k}^\sigma ) < 0\) for one \(\sigma \in [M]\) and one \(k=1,2,\dots \).

Example 3.28

(Heteroclinic Structures) We continue to consider Example 3.27. In order to investigate the linear stability of \(\mathrm {D}\mathrm {D}\mathrm {D}\) we need to assume Fourier expansions

By inserting them into the representation of the coupling function g, we see that

for Fourier coefficients \(a_{l,k}\) that satisfy \(a_{{\mathbf {0}}, k} = \xi _k - K^-\zeta _{0,k} + K^+\zeta _{0,k}\). By the results obtained in this Section, the all-splay state is linearly stable if

for all \(k = 1,2,\dots \) and linearly unstable if one of these coefficients is less than 0.

These results give necessary conditions for the emergence of heteroclinic cycles involving the invariant sets \(\mathrm {S}\mathrm {D}\mathrm {D}, \mathrm {S}\mathrm {S}\mathrm {D}, \dotsc \) to exist not only in networks of finitely many oscillators but also in the mean-field limit of these systems. Note that a similar analysis is possible for the mean-field limit of networks that consist of \(M=4\) coupled oscillator populations that support heteroclinic networks with multiple cycles in [13].

Remark 3.29

There are several challenges when trying to obtain rigorous (linear) stability results. First, we have to rigorously linearize by constructing a suitable function space in which the operator F defined by

is Fréchet differentiable. Then, we have to check that the formal calculation above holds within this function space, and that we have described the spectrum completely. Finally, one has to invoke a suitable result that linear stability entails local nonlinear stability. Carrying out this full stability analysis program is beyond the scope of the current work.

5 Discussion and outlook

In this paper, we have developed a new general framework for the evolution of coupled phase oscillator populations with higher-order coupling. First, we adopted the solution theory from [39] to our general framework. We clarified existence and uniqueness of weakly continuous solutions to the characteristic system and justified the mean-field limit. In the main part of this article, we studied dynamical properties of the characteristic system. Next, we proved the stability of the all-synchronized state under certain conditions on the coupling functions. Importantly, our results do not require the coupling function to be sinusoidal as assumed in previous works. We also developed linear stability analysis for the splay state via the mean-field limit equation. Finally, we provided examples that illustrate how this result can be applied to determine stability of synchrony patterns, in which some populations are in splay state and the remaining ones are synchronized.

Although we have provided the general mathematical foundations for studying large-scale multi-population oscillator networks with higher-order coupling, there are still many open questions for future work. Here, we considered the case of populations with identical oscillators. This means that in the mean-field limit there are atomic measures that are invariant under the flow. There are two ways to break this degeneracy. First, one can assume that the intrinsic frequencies of the oscillators follow a distribution with a density as in the classical Kuramoto model; cf. [11]. Second, adding noise to the evolution leads to a diffusive terms in the Fokker–Planck equation [49]. In either case, the synchronized phase configuration is not invariant anymore and deforms to a near-synchronous stationary solution. Insights into how the stability properties derived here change through these perturbations would be desirable. Unlike the synchronized state, the splay state would stay invariant for non-identical oscillators but investigating its linear stability would be more complicated due to the frequency dependence. One expects bifurcation structures to be affected generically by higher-order coupling, e.g., being able to change super- to sub-critical transitions [31, 43].

If the network interactions contain just a single harmonic, the Watanabe–Strogatz reduction applies. Its application in the mean-field limit has so far been heuristic and one typically assumes the existence of densities [42] and it would be interesting to understand Watanabe–Strogatz theory for the characteristic Eq. (2.6) that describes the evolution of general measures. Together with nonidentical frequencies within a populations, this would be the first step towards a rigorous description of Ott–Antonsen theory in a measure theoretic sense; see also [22].

While we discussed network dynamical systems with higher-order interactions, we did not assign an explicit algebraic structure to the dynamical equations. Recently, dynamical systems on higher-order networks—whether hypergraphs or simplicial complexes—have attracted attention. While the assignment of higher-order network structure may not be unique, this perspective has its advantages: It naturally leads to limiting systems involving hypergraph variants of graphons [32, 36, 37], or more generally hypergraph variants of graphops [6, 24, 30]. In this context, one could also aim to link the stability analysis via the Vlasov–Fokker–Planck equation for hypergraphs better with direct methods on the level of the finite-dimensional ODEs for hypergraphs such as master stability functions [7, 38].

References

Acebrón, J., Bonilla, L., Vicente, C.P., Ritort, F., Spigler, R.: The Kuramoto model: A simple paradigm for synchronization phenomena. Rev. Mod. Phys. 77(1), 137–185 (2005)

Arenas, A., Díaz-Guilera, A., Kurths, J., Moreno, Y., Zhou, C.: Synchronization in complex networks. Phys. Rep. 469(3), 93–153 (2008)

Ashwin, P., Swift, J.W.: The dynamics of n weakly coupled identical oscillators. J. Nonlinear Sci. 2(1), 69–108 (1992)

Ashwin, P., Bick, C., Poignard, C.: State-dependent effective interactions in oscillator networks through coupling functions with dead zones. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 377(2160), 20190042 (2019)

Ashwin, P., Coombes, S., Nicks, R.: Mathematical Frameworks for Oscillatory Network Dynamics in Neuroscience. J. Math. Neurosci. 6(1), 1–92 (2016)

Backhausz, Á., Szegedy, B.: Action convergence of operators and graphs. Can. J. Math. 74(1), 1–50 (2022)

Battiston, F., Cencetti, G., Iacopini, I., Latora, V., Lucas, M., Patania, A., Young, J.-G., Petri, G.: Networks beyond pairwise interactions: structure and dynamics. Phys. Rep. 874, 1–92 (2020)

Benedetto, D., Caglioti, E., Montemagno, U.: On the complete phase synchronization for the kuramoto model in the mean-field limit. Commun. Math. Sci. 13(7), 1775–1786 (2015)

Bick, C.: Heteroclinic switching between chimeras. Phys. Rev. E 97(5), 050201(R) (2018)

Bick, C.: Heteroclinic Dynamics of Localized Frequency Synchrony: Heteroclinic Cycles for Small Populations. J. Nonlinear Sci. 29(6), 2547–2570 (2019)

Bick, C., Goodfellow, M., Laing, C.R., Martens, E.A.: Understanding the dynamics of biological and neural oscillator networks through exact mean-field reductions: a review. J. Math. Neurosci. 10(1), 9 (2020)

Bick, C., Gross, E., Harrington, H., Schaub, M.T.: What are higher-order networks? arXiv:2104.11329 (2021)

Bick, C., Lohse, A.: Heteroclinic Dynamics of Localized Frequency Synchrony: Stability of Heteroclinic Cycles and Networks. J. Nonlinear Sci. 29(6), 2571–2600 (2019)

Bick, C., Sclosa, D.: Mean-field limits of phase oscillator networks and their symmetries. arXiv:2110.13686 (oct 2021)

Bick, C., Timme, M., Paulikat, D., Rathlev, D., Ashwin, P.: Chaos in symmetric phase oscillator networks. Phys. Rev. Lett. 107(24), 1–4 (2011)

Buck, J., Buck, E.: Mechanism of rhythmic synchronous flashing of fireflies. Science 159(3821), 1319–1327 (1968)

Carrillo, J.A., Choi, Y.P., Ha, S.Y., Kang, M.-J., Kim, Y.: Contractivity of Transport Distances for the Kinetic Kuramoto Equation. J. Stat. Phys. 156(2), 395–415 (2014)

Daido, H.: Generic scaling at the onset of macroscopic mutual entrainment in limit-cycle oscillators with uniform all-to-all coupling. Phys. Rev. Lett. 73(5), 760–763 (1994)

Dal’Maso Peron, T.K., Rodrigues, F.A.: Collective behavior in financial markets. EPL 96(4), 48004 (2011)

Dietert, H., Fernandez, B.: The mathematics of asymptotic stability in the Kuramoto model. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences 474(2220), 20180467 (2018)

Dong, J.G., Xue, X.: Synchronization analysis of Kuramoto oscillators. Commun. Math. Sci. 11(2), 465–480 (2013)

Engelbrecht, J.R., Mirollo, R.: Is the Ott-Antonsen manifold attracting? Phys. Rev. Res. 2(2), 023057 (2020)

Girvan, M., Newman, M.E.J.: Community structure in social and biological networks. Proc. Natl. Acad. Sci. 99(12), 7821–7826 (2002)

Gkogkas, M.A., Kuehn, C.: Graphop mean-field limits for Kuramoto-type models. arXiv:2007.02868, 1–26 (2020)

Golse, F.: Mean Field Kinetic Equations. Course of Polytechnique (2013)

Kaliuzhnyi-Verbovetskyi, D., Medvedev, G.S.: The Mean Field Equation for the Kuramoto Model on Graph Sequences with Non-Lipschitz Limit. SIAM J. Math. Anal. 50(3), 2441–2465 (2018)

Kellerer, H.G.: Markov-Komposition und eine Anwendung auf Martingale. Math. Ann. 198(3), 99–122 (1972)

Kiss, I.Z., Zhai, Y., Hudson, J.L.: Emerging coherence in a population of chemical oscillators. Science 296(5573), 1676–1678 (2002)