Abstract

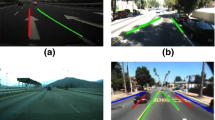

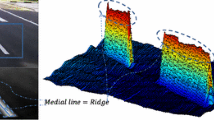

With the goal of developing an accurate and fast lane tracking system for driver assistance, this paper proposes a vision-based fusion technique for lane tracking and forward vehicle detection that handles challenging conditions common in real driving environments: lane occlusion by a forward vehicle, lane changes, varying illumination, road traffic signs, and pitch motion. First, our algorithm uses random sample consensus (RANSAC) and Kalman filtering to calculate the lane equation from the lane candidates found by template matching. The combination of simple template matching with RANSAC and Kalman filtering makes calculating the lane equation as a hyperbola pair fast and robust against varying illumination and discontinuities in the lane. Second, our algorithm uses a state transfer technique to maintain lane tracking continuously during lane changes. This reduces the computational time needed to handle a lane change because lane detection, which takes much more time than lane tracking, is not necessary. Third, false lane candidates caused by occlusion from forward vehicles are eliminated using the accurate forward vehicle regions provided by our improved forward vehicle detector. Fourth, our method achieves robustness against road traffic signs and pitch motion by using an adaptive region of interest and a constraint on the position of the vanishing point. Our algorithm was tested on image sequences from real driving situations and demonstrated its robustness.
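The RANSAC and Kalman filtering step can be illustrated with a minimal sketch. The abstract gives no equations, so everything concrete here is an assumption made for illustration: one lane boundary is modelled as u = k/(v - h) + b(v - h) + c, with h a known horizon row, the inlier tolerance and noise variances are arbitrary, and the names `hyperbola_u`, `ransac_lane`, and `ScalarKalman` are hypothetical. It is not the authors' implementation.

```python
import random


def hyperbola_u(params, v, h):
    """Column u of the lane boundary at image row v (requires v > h)."""
    k, b, c = params
    t = v - h
    return k / t + b * t + c


def _det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(rows, rhs):
    """Solve a 3x3 linear system by Cramer's rule; None if near-singular."""
    d = _det3(rows)
    if abs(d) < 1e-9:
        return None
    solution = []
    for j in range(3):
        m = [row[:] for row in rows]
        for i in range(3):
            m[i][j] = rhs[i]  # replace column j with the right-hand side
        solution.append(_det3(m) / d)
    return tuple(solution)


def ransac_lane(points, h, iters=200, tol=2.0, seed=0):
    """Fit (k, b, c) to (u, v) candidates; return the model with most inliers."""
    rng = random.Random(seed)
    best, best_support = None, -1
    for _ in range(iters):
        sample = rng.sample(points, 3)
        # The model is linear in (k, b, c), so three points determine it.
        rows = [[1.0 / (v - h), v - h, 1.0] for _, v in sample]
        params = solve3(rows, [u for u, _ in sample])
        if params is None:
            continue  # degenerate sample, e.g. two points on the same row
        support = sum(1 for u, v in points
                      if abs(hyperbola_u(params, v, h) - u) < tol)
        if support > best_support:
            best, best_support = params, support
    return best, best_support


class ScalarKalman:
    """One-dimensional Kalman filter with a constant-state model."""

    def __init__(self, x0, p0=1.0, q=1e-3, r=1.0):
        self.x, self.p, self.q, self.r = x0, p0, q, r

    def update(self, z):
        self.p += self.q                   # predict: state unchanged, variance grows
        gain = self.p / (self.p + self.r)  # Kalman gain
        self.x += gain * (z - self.x)      # correct with measurement z
        self.p *= 1.0 - gain
        return self.x


# Synthetic demo: 31 points on a known curve plus 8 off-curve false candidates.
H = 100.0
TRUE = (500.0, 0.3, 320.0)
on_curve = [(hyperbola_u(TRUE, float(v), H), float(v)) for v in range(150, 460, 10)]
offsets = [60, -45, 80, -70, 55, -90, 65, -50]
false_hits = [(hyperbola_u(TRUE, 155.0 + 30 * i, H) + off, 155.0 + 30 * i)
              for i, off in enumerate(offsets)]
fit, support = ransac_lane(on_curve + false_hits, H)

# Per-frame use: one filter per parameter, fed each new RANSAC estimate.
filters = [ScalarKalman(p) for p in fit]
smoothed = tuple(f.update(z) for f, z in zip(filters, fit))
```

In this sketch RANSAC rejects the false candidates because they never agree on a common curve, and the per-parameter filters damp frame-to-frame jitter in the fitted coefficients. A real tracker would more likely use a joint state vector with a motion model instead of independent scalar filters.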

Cite this article

Choi, H.C., Park, J.M., Choi, W.S. et al. Vision-based fusion of robust lane tracking and forward vehicle detection in a real driving environment. Int. J. Automot. Technol. 13, 653–669 (2012). https://doi.org/10.1007/s12239-012-0064-x

DOI: https://doi.org/10.1007/s12239-012-0064-x