Abstract

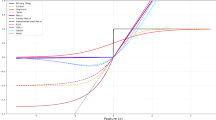

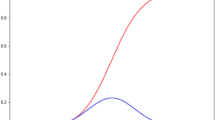

Users increasingly expect accurate and relevant results from a system in as little time as possible, so a deep neural network built to deliver those results must be both robust and efficient. This manuscript presents original research in which two activation functions, sigmoid and ReLU, are critically analyzed to compare the performance of models built on each. Three Python libraries, Keras, NumPy, and Scikit-learn, are used to load and preprocess the data. The widely used MNIST dataset serves as the benchmark. The loaded dataset is divided into two parts, one for training and one for testing, and training is run for a total of ten iterations. The model is a multiclass network with ten output classes, so softmax activation, which is well suited to multiclass classification, is used in the output layer.
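For readers who want the three activations named in the abstract in concrete form, the following is a minimal sketch in plain Python. It is not the chapter's implementation, which relies on Keras. The function names and the ten example logits are assumptions made for illustration only.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: maps any real input into the open interval (0, 1)."""
    # Branch on the sign so math.exp never receives a large positive argument.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def relu(x: float) -> float:
    """Rectified linear unit: passes positive inputs through, zeroes the rest."""
    return max(0.0, x)


def softmax(logits: list[float]) -> list[float]:
    """Turn a list of raw scores into probabilities that sum to 1."""
    # Subtracting the maximum keeps the exponentials numerically stable.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for the ten output classes (digits 0 to 9).
logits = [0.5, -1.2, 3.0, 0.0, 1.1, -0.3, 2.2, -2.0, 0.7, 0.1]
probs = softmax(logits)

# The predicted class is the index with the highest probability.
predicted_class = max(range(len(probs)), key=probs.__getitem__)
```

In a network like the one described, sigmoid or ReLU would be applied element-wise in the hidden layers, while softmax would be applied once to the ten output scores so that they can be read as class probabilities.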

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Waoo, A.A., Soni, B.K. (2021). Performance Analysis of Sigmoid and Relu Activation Functions in Deep Neural Network. In: Sheth, A., Sinhal, A., Shrivastava, A., Pandey, A.K. (eds) Intelligent Systems. Algorithms for Intelligent Systems. Springer, Singapore. https://doi.org/10.1007/978-981-16-2248-9_5

DOI: https://doi.org/10.1007/978-981-16-2248-9_5

Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-2247-2

Online ISBN: 978-981-16-2248-9

eBook Packages: Intelligent Technologies and Robotics (R0)