Abstract

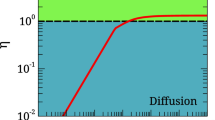

We study properties of multi-layered, interconnected networks from an ensemble perspective, i.e. we analyze ensembles of multi-layer networks that share similar aggregate characteristics. Using a diffusive process that evolves on a multi-layer network, we analyze how the speed of diffusion depends on the aggregate characteristics of both intra- and inter-layer connectivity. Through a block-matrix model representing the distinct layers, we construct transition matrices of random walkers on multi-layer networks and estimate expected properties of multi-layer networks using a mean-field approach. In addition, we quantify and explore conditions on the link topology that allow one to estimate the ensemble average by considering only aggregate statistics of the layers. Our approach can be used when only partial information is available, as is usually the case for real-world multi-layer complex systems.


Notes

1. Note that even though the intra-layer networks are initially empty, a number of inter-layer links provide connectivity across the layers, similar to a bipartite network.

References

Parshani, R., Buldyrev, S.V., Havlin, S.: Critical effect of dependency groups on the function of networks. Proc. Natl. Acad. Sci. 108, 1007–1010 (2011)

Gao, J., Buldyrev, S.V., Stanley, H.E., Havlin, S.: Networks formed from interdependent networks. Nat. Phys. 8, 40–48 (2012)

Gómez, S., Díaz-Guilera, A., Gómez-Gardeñes, J., Pérez-Vicente, C.J., Moreno, Y., Arenas, A.: Diffusion dynamics on multiplex networks. Phys. Rev. Lett. 110, 028701 (2013)

Garas, A.: Reaction-diffusion processes on interconnected scale-free networks (2014). arXiv:1407.6621

Boccaletti, S., Bianconi, G., Criado, R., del Genio, C.I., Gómez-Gardeñes, J., Romance, M., Sendiña-Nadal, I., Wang, Z., Zanin, M.: The structure and dynamics of multilayer networks. Phys. Rep. 544, 1–122 (2014)

Blanchard, P., Volchenkov, D.: Random Walks and Diffusions on Graphs and Databases. Springer, Berlin/Heidelberg (2011). ISBN:978-3-642-19592-1

Rosenthal, J.S.: Convergence rates for Markov chains. SIAM Rev. 37, 387–405 (1995)

Chung, F.: Laplacians and the Cheeger inequality for directed graphs. Ann. Comb. 9, 1–19 (2005)

Lovász, L.: Random walks on graphs: a survey. Comb., Paul Erdős is Eighty 2, 1–46 (1993)

De Domenico, M., Solé-Ribalta, A., Gómez, S., Arenas, A.: Navigability of interconnected networks under random failures. Proc. Natl. Acad. Sci. 111, 8351–8356 (2014)

Solé-Ribalta, A., De Domenico, M., Kouvaris, N.E., Díaz-Guilera, A., Gómez, S., Arenas, A.: Spectral properties of the Laplacian of multiplex networks. Phys. Rev. E 88, 032807 (2013)

Kivelä, M., Arenas, A., Barthelemy, M., Gleeson, J.P., Moreno, Y., Porter, M.A.: Multilayer networks. J. Complex Netw. 2, 203–271 (2014)

De Domenico, M., Solé-Ribalta, A., Cozzo, E., Kivelä, M., Moreno, Y., Porter, M.A., Gómez, S., Arenas, A.: Mathematical formulation of multilayer networks. Phys. Rev. X 3, 041022 (2013)

Fiedler, M.: A property of eigenvectors of nonnegative symmetric matrices and its application to graph theory. Czechoslov. Math. J. 25(4), 619–633 (1975)

Gfeller, D., De Los Rios, P.: Spectral coarse graining of complex networks. Phys. Rev. Lett. 99, 038701 (2007)

Martín-Hernández, J., Wang, H., Van Mieghem, P., D’Agostino, G.: Algebraic connectivity of interdependent networks. Phys. A: Stat. Mech. Appl. 404, 92–105 (2014)

Grabow, C., Grosskinsky, S., Timme, M.: Small-world network spectra in mean-field theory. Phys. Rev. Lett. 108, 218701 (2012)

Radicchi, F., Arenas, A.: Abrupt transition in the structural formation of interconnected networks. Nat. Phys. 9, 717–720 (2013)

Fiedler, M.: Algebraic connectivity of graphs. Czechoslov. Math. J. 23, 298–305 (1973)

Acknowledgements

N.W., A.G. and F.S. acknowledge support from the EU-FET project MULTIPLEX 317532.


Appendix

Note: Unless stated otherwise, vectors are row vectors, and vectors multiply matrices from the left.

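We assume a multi-layer network \(G\) consisting of \(L\) layers \(G_{1},\ldots,G_{L}\) and \(n\) nodes. A single layer \(G_s\) contains \(n_s\) nodes, and therefore \(\sum_{s=1}^{L}n_{s} = n\). For a multi-layer network \(G\) we define the supra-transition matrix, which can be represented in block structure according to the layers:

\[ \mathbf{T} = \begin{pmatrix} \mathbf{T}_{11} & \cdots & \mathbf{T}_{1L}\\ \vdots & \ddots & \vdots\\ \mathbf{T}_{L1} & \cdots & \mathbf{T}_{LL} \end{pmatrix}. \]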

Each \(\mathbf{T}_{st}\) contains all the transition probabilities from nodes in \(G_s\) to nodes in \(G_t\). Assuming Eq. (3.8), it follows that \(\mathbf{T}_{st} =\alpha _{st}\mathbf{R}_{st}\), where \(\mathbf{R}_{st}\) is a row stochastic matrix. This means that all \(\mathbf{T}_{st}\) are scaled transition matrices. The factor \(\alpha_{st}\) represents the weighted fraction of all links starting in \(G_s\) that end in \(G_t\).

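In this respect we define the aggregated transition matrix \(\mathfrak{T}\) of dimension \(L \times L\),

\[ \mathfrak{T} = \begin{pmatrix} \alpha_{11} & \cdots & \alpha_{1L}\\ \vdots & \ddots & \vdots\\ \alpha_{L1} & \cdots & \alpha_{LL} \end{pmatrix}. \]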

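Each vector \(v\) of dimension \(n\) can be split according to the layer separation given by \(G\),

\[ v = \left(v^{(1)},\ldots,v^{(L)}\right). \]

Each component \(v^{(k)}\) has exactly dimension \(n_k\). We define the layer-aggregated vector \(\mathfrak{v} = (\mathfrak{v}_{1},\ldots,\mathfrak{v}_{L})\) of dimension \(L\) as follows:

\[ \mathfrak{v}_k = \sum_{i=1}^{n_k}\left[v^{(k)}\right]_i, \qquad k = 1,\ldots,L. \]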

We use the bracket notation \([v]_i\) to represent the \(i\)-th entry of the vector \(v\). Analogously, by \([v\mathbf{M}]_i\) we mean the \(i\)-th entry \(w_i\) of the product of \(v\) with a matrix \(\mathbf{M}\), i.e. \(w = v\mathbf{M}\). Further, by \(|v|\) we denote the sum of the entries of \(v\), \(|v| = \sum_{i}v_{i} = \sum_{i}[v]_{i}\).
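As an illustration of these definitions, the following minimal NumPy sketch assembles a supra-transition matrix from blocks \(\alpha_{st}\mathbf{R}_{st}\) and computes layer-aggregated vectors. The blocks \(\mathbf{R}_{st}\) are random, and the helper names (`random_row_stochastic`, `supra_transition`, `layer_aggregate`) as well as all numerical values are hypothetical, chosen only for illustration. The last lines check the identity that drives the proof of Theorem 1: summing \(v\mathbf{T}\) layer by layer gives the same result as multiplying \(\mathfrak{v}\) with \(\mathfrak{T}\).

```python
import numpy as np


def random_row_stochastic(rows, cols, rng):
    """Random matrix with strictly positive entries whose rows each sum to one."""
    M = rng.random((rows, cols)) + 0.1
    return M / M.sum(axis=1, keepdims=True)


def supra_transition(alpha, sizes, rng):
    """Assemble T block by block as T_st = alpha[s, t] * R_st with random R_st."""
    L = len(sizes)
    return np.block([[alpha[s, t] * random_row_stochastic(sizes[s], sizes[t], rng)
                      for t in range(L)] for s in range(L)])


def layer_aggregate(v, sizes):
    """Layer-aggregated vector: entry k is |v^(k)|, the sum of v over layer G_k."""
    bounds = np.cumsum([0] + list(sizes))
    return np.array([v[bounds[k]:bounds[k + 1]].sum() for k in range(len(sizes))])


rng = np.random.default_rng(0)
sizes = [3, 4]                              # hypothetical n_1, n_2
alpha = np.array([[0.8, 0.2], [0.3, 0.7]])  # hypothetical aggregated matrix; rows sum to one
T = supra_transition(alpha, sizes, rng)

# Aggregating v T layer by layer equals multiplying the aggregated vector with alpha.
v = rng.random(sum(sizes))
print(layer_aggregate(v @ T, sizes))
print(layer_aggregate(v, sizes) @ alpha)
```

Because the rows of `alpha` sum to one and every \(\mathbf{R}_{st}\) is row stochastic, the assembled \(\mathbf{T}\) is itself row stochastic.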

Theorem 1

For a multi-layer network \(G\) consisting of \(L\) layers, we assume the supra-transition matrix \(\mathbf{T}\) to consist of block matrices \(\mathbf{T}_{st}\) such that for all \(s,t \in \{1,\ldots,L\}\), \(\mathbf{T}_{st} =\alpha _{st}\mathbf{R}_{st}\), where \(\alpha _{st} \in \mathbb{Q}\) and \(\mathbf{R}_{st}\) is a row stochastic transition matrix. The multi-layer aggregation is defined by \(\mathfrak{T} =\{\alpha _{st}\}_{st}\). If an eigenvalue \(\lambda\) of the matrix \(\mathbf{T}\) corresponds to a (left) eigenvector \(v\) whose layer aggregation \(\mathfrak{v}\) satisfies \(\mathfrak{v}\neq 0\), then \(\lambda\) is also an eigenvalue of \(\mathfrak{T}\).

Proof

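Assume \(v\) is a left eigenvector of \(\mathbf{T}\) corresponding to the eigenvalue \(\lambda\), so that \(v\mathbf{T} =\lambda v\). Let \(v^{(k)}\) be the \(k\)-th part of \(v\), which corresponds to the layer \(G_k\). We can write the matrix multiplication in block structure:

\[ v\mathbf{T} = \left(v^{(1)},\ldots,v^{(L)}\right)\begin{pmatrix} \mathbf{T}_{11} & \cdots & \mathbf{T}_{1L}\\ \vdots & \ddots & \vdots\\ \mathbf{T}_{L1} & \cdots & \mathbf{T}_{LL} \end{pmatrix} = \left(\sum_{l}v^{(l)}\mathbf{T}_{l1},\ldots,\sum_{l}v^{(l)}\mathbf{T}_{lL}\right). \]

Each \(v^{(k)}\) is a row vector whose length equals the number of nodes \(n_k\) in \(G_k\). The product \(\sum_{l}v^{(l)}\mathbf{T}_{lk}\) is also a row vector of length \(n_k\). According to the eigenvalue equation, for all \(k \in \{1,\ldots,L\}\) it holds that

\[ \lambda v^{(k)} = \sum_{l=1}^{L}v^{(l)}\mathbf{T}_{lk}. \]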

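Now let us denote the sum of the vector entries of \(v^{(k)}\) as

\[ \mathfrak{v}_k = \left|v^{(k)}\right| = \sum_{i}\left[v^{(k)}\right]_i. \]

Further, we define the layer-aggregated vector consisting of these sums by \(\mathfrak{v} = \left(\mathfrak{v}_{1},\ldots,\mathfrak{v}_{L}\right)\). Note that for a row stochastic matrix \(\mathbf{M}\) and any vector \(v\) it holds that \(\sum_{j}[v]_{j} =\sum_{j}[v\mathbf{M}]_{j}\), because every row of \(\mathbf{M}\) sums to one. Since \(|\cdot|\) is linear and \(\mathbf{T}_{lk} = \alpha_{lk}\mathbf{R}_{lk}\), for the components after multiplication with \(\mathbf{T}\) we can deduce

\[ \left|\sum_{l}v^{(l)}\mathbf{T}_{lk}\right| = \sum_{l}\alpha_{lk}\left|v^{(l)}\mathbf{R}_{lk}\right| = \sum_{l}\alpha_{lk}\left|v^{(l)}\right| = \sum_{l}\alpha_{lk}\mathfrak{v}_l. \]

If we multiply \(\mathfrak{v}\) with \(\mathfrak{T}\) and look at a single entry of \(\mathfrak{v}\mathfrak{T}\), we get

\[ \left[\mathfrak{v}\mathfrak{T}\right]_k = \sum_{l}\mathfrak{v}_l\alpha_{lk}. \]

Hence it holds that

\[ \left[\mathfrak{v}\mathfrak{T}\right]_k = \left|\sum_{l}v^{(l)}\mathbf{T}_{lk}\right|, \]

and therefore, by the eigenvalue equation,

\[ \left[\mathfrak{v}\mathfrak{T}\right]_k = \left|\lambda v^{(k)}\right| = \lambda\,\mathfrak{v}_k. \]

Finally, since this holds for every \(k \in \{1,\ldots,L\}\), we have that

\[ \mathfrak{v}\mathfrak{T} = \lambda\mathfrak{v}. \]

Therefore, \(\lambda\) is also an eigenvalue of \(\mathfrak{T}\), with eigenvector \(\mathfrak{v}\) as defined above. It is important to note that this only holds if \(\mathfrak{v}\neq 0\).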
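Theorem 1 can be checked numerically on a small random example. The sketch below (NumPy; the layer sizes, the matrix \(\mathfrak{T}\) and the random blocks \(\mathbf{R}_{st}\) are hypothetical choices) obtains the left eigenvectors of \(\mathbf{T}\) as right eigenvectors of \(\mathbf{T}^\top\), aggregates each one over the layers and, whenever \(\mathfrak{v}\neq 0\) up to a numerical tolerance, asserts that \(\lambda\) is an eigenvalue of \(\mathfrak{T}\).

```python
import numpy as np

rng = np.random.default_rng(1)
sizes = [3, 4, 2]                      # hypothetical layer sizes n_1, n_2, n_3
L, n = len(sizes), sum(sizes)
bounds = np.cumsum([0] + sizes)

# Hypothetical aggregated matrix {alpha_st}; rows sum to one.
alpha = rng.random((L, L)) + 0.1
alpha /= alpha.sum(axis=1, keepdims=True)


def row_stochastic(r, c):
    """Random positive r x c matrix with unit row sums (plays the role of R_st)."""
    M = rng.random((r, c)) + 0.1
    return M / M.sum(axis=1, keepdims=True)


T = np.block([[alpha[s, t] * row_stochastic(sizes[s], sizes[t]) for t in range(L)]
              for s in range(L)])

# Left eigenvectors of T are right eigenvectors of T transposed (columns of V).
eigvals, V = np.linalg.eig(T.T)
agg_eigs = np.linalg.eigvals(alpha)

hits = []
for lam, v in zip(eigvals, V.T):
    frak_v = np.array([v[bounds[k]:bounds[k + 1]].sum() for k in range(L)])
    if np.linalg.norm(frak_v) > 1e-8:  # layer aggregation is nonzero
        # Theorem 1: lam must then also be an eigenvalue of the aggregated matrix.
        assert np.min(np.abs(agg_eigs - lam)) < 1e-8
        hits.append(lam)

print(len(hits), "of", n, "eigenvalues of T have a nonzero layer aggregation")
```

For generic random blocks, as here, one expects exactly \(L\) eigenvectors with a nonzero aggregation; the remaining ones aggregate to zero up to round-off.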

The argument used in the proof of Theorem 1 applies to several eigenvalues of \(\mathbf{T}\), but to at most \(L\) distinct ones, since \(\mathfrak{T}\) is an \(L \times L\) matrix. Next we give a proposition for the reverse direction of Theorem 1.

Proposition 1

Let \(G\) be a multi-layer network that consists of \(L\) layers and fulfills all of the conditions of Theorem 1. Let \(\mathfrak{T} =\{\alpha _{st}\}_{st}\) be the multi-layer aggregation of \(\mathbf{T}\). If \(\lambda\) is an eigenvalue of \(\mathfrak{T}\), then \(\lambda\) is also an eigenvalue of \(\mathbf{T}\).

Proof

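Assume that \(\lambda\) is an eigenvalue of \(\mathfrak{T}\). Every square matrix has both a left and a right eigenvector corresponding to the same eigenvalue \(\lambda\). Let \(\mathfrak{w}\) be a right eigenvector, i.e. a column vector. Hence \(\mathfrak{T}\mathfrak{w} =\lambda \mathfrak{w}\), and for every \(s \in \{1,\ldots,L\}\)

\[ \sum_{t=1}^{L}\alpha_{st}\mathfrak{w}_t = \lambda\mathfrak{w}_s. \]

Now we construct a column vector \(w\) of dimension \(n\) such that for every layer component \(w^{(k)}\) it holds that

\[ w^{(k)} = \mathfrak{w}_k\mathbf{1}_{n_k}, \]

where \(\mathbf{1}_{n_k}\) denotes the all-ones column vector of length \(n_k\); i.e. every entry of \(w^{(k)}\) equals \(\mathfrak{w}_k\).

Next we perform a right multiplication of \(w\) with \(\mathbf{T}\). For the component corresponding to layer \(G_s\),

\[ \left(\mathbf{T}w\right)^{(s)} = \sum_{l=1}^{L}\mathbf{T}_{sl}w^{(l)} = \sum_{l=1}^{L}\alpha_{sl}\mathbf{R}_{sl}w^{(l)}. \]

Since all \(\mathbf{R}_{sl}\) are row stochastic matrices and \(w^{(l)}\) contains the value \(\mathfrak{w}_l\) in each entry, we get \(\mathbf{R}_{sl}w^{(l)} = \mathfrak{w}_l\mathbf{1}_{n_s}\). It follows that

\[ \left(\mathbf{T}w\right)^{(s)} = \left(\sum_{l=1}^{L}\alpha_{sl}\mathfrak{w}_l\right)\mathbf{1}_{n_s} = \lambda\mathfrak{w}_s\mathbf{1}_{n_s} = \lambda w^{(s)}. \]

Hence \(\mathbf{T}w = \lambda w\) with \(w \neq 0\) (because \(\mathfrak{w} \neq 0\)), and therefore \(\lambda\) is also an eigenvalue of \(\mathbf{T}\).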
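The construction in the proof of Proposition 1 can be sketched in NumPy as follows, again with hypothetical layer sizes and random blocks. The indicator matrix `P` is our own helper, not notation from the chapter: it maps \(\mathfrak{w}\) to the layer-constant vector \(w\), and the check \(\mathbf{T}w = \lambda w\) is carried out for every right eigenpair of \(\mathfrak{T}\).

```python
import numpy as np

rng = np.random.default_rng(2)
sizes = [2, 3, 4]  # hypothetical layer sizes
L = len(sizes)

alpha = rng.random((L, L)) + 0.1
alpha /= alpha.sum(axis=1, keepdims=True)  # hypothetical aggregated matrix, rows sum to one


def row_stochastic(r, c):
    """Random positive r x c matrix with unit row sums (plays the role of R_st)."""
    M = rng.random((r, c)) + 0.1
    return M / M.sum(axis=1, keepdims=True)


T = np.block([[alpha[s, t] * row_stochastic(sizes[s], sizes[t]) for t in range(L)]
              for s in range(L)])

# P[i, k] = 1 if node i belongs to layer G_k, so w = P @ frak_w copies
# frak_w_k into every entry of the layer component w^(k).
P = np.zeros((sum(sizes), L))
start = 0
for k, n_k in enumerate(sizes):
    P[start:start + n_k, k] = 1.0
    start += n_k

lams, W = np.linalg.eig(alpha)  # right eigenpairs of the aggregated matrix
for lam, frak_w in zip(lams, W.T):
    w = P @ frak_w                          # lifted column vector of dimension n
    assert np.allclose(T @ w, lam * w)      # lam is an eigenvalue of T

# The same argument as a matrix identity: T P = P alpha.
print(np.allclose(T @ P, P @ alpha))
```

The final line expresses the proof as the identity \(\mathbf{T}P = P\mathfrak{T}\), which holds whenever every block has the form \(\alpha_{st}\mathbf{R}_{st}\) with row stochastic \(\mathbf{R}_{st}\).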

In the case of a diffusion process we are especially interested in the second-largest eigenvalue of \(\mathbf{T}\), denoted by \(\lambda_{2}(\mathbf{T})\), which is related to the algebraic connectivity of the network. In this respect the following corollary is useful:

Corollary 1

Let \(G\) be a multi-layer network consisting of \(L\) layers that fulfills all of the conditions of Theorem 1. Further assume that the layers of \(G\) coincide with a spectral partitioning, i.e. the partitioning given by the eigenvector corresponding to \(\lambda_{2}(\mathbf{T})\). Then \(\lambda_{2}(\mathbf{T}) =\lambda_{2}(\mathfrak{T})\).

Proof

In general, all eigenvectors of a transition matrix, except the eigenvector corresponding to the largest eigenvalue (which equals one), sum up to zero. Consequently, these eigenvectors have both positive and negative entries, which allows for a spectral partitioning. In particular, the eigenvector \(v_2\) corresponding to the second-largest eigenvalue \(\lambda_{2}(\mathbf{T})\) can be used to partition the network. This eigenvector is related to the Fiedler vector, which is also used for spectral bisection [19]. Therefore, if the layer partition of \(G\) coincides with this spectral partitioning, each component of the layer-aggregated vector \(\mathfrak{v}_2\) of \(v_2\) is a sum of entries of equal sign, which ensures \(\mathfrak{v}_{2}\neq 0\). Considering this and Proposition 1, the corollary follows directly from Theorem 1.
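A small two-layer example in which the assumptions of Corollary 1 can be verified directly: each layer is a complete graph \(K_m\), every node links to all nodes of the other layer, and a walker stays in its layer with probability \(a\). These choices (`m`, `a`, complete-graph layers) are hypothetical and made so that the sign pattern of the eigenvector belonging to \(\lambda_2(\mathbf{T})\) can be compared with the layer partition. Because this particular \(\mathbf{T}\) is symmetric, `numpy.linalg.eigh` can be used, and left and right eigenvectors coincide.

```python
import numpy as np

m, a = 5, 0.9  # hypothetical layer size and intra-layer fraction alpha_11 = alpha_22
J, I = np.ones((m, m)), np.eye(m)
R_intra = (J - I) / (m - 1)  # random walk on a complete graph K_m (no self-loops)
R_inter = J / m              # every node links to all nodes of the other layer

alpha = np.array([[a, 1 - a], [1 - a, a]])  # aggregated matrix
T = np.block([[a * R_intra, (1 - a) * R_inter],
              [(1 - a) * R_inter, a * R_intra]])

# T is symmetric here, so eigh applies and left/right eigenvectors coincide.
vals, vecs = np.linalg.eigh(T)   # eigenvalues in ascending order
lam2_T = vals[-2]
v2 = vecs[:, -2]                 # eigenvector belonging to lambda_2(T)
lam2_agg = np.sort(np.linalg.eigvals(alpha).real)[-2]

# Does the sign pattern of v2 reproduce the layer partition?
same_partition = (np.all(np.sign(v2[:m]) == np.sign(v2[0]))
                  and np.all(np.sign(v2[m:]) == -np.sign(v2[0])))
print(same_partition, lam2_T, lam2_agg)
```

For this symmetric two-layer choice, \(\mathfrak{T}\) has the eigenvalues \(1\) and \(2a-1\), so the corollary predicts \(\lambda_2(\mathbf{T}) = 2a-1\). When the spectral partition does not coincide with the layers, Corollary 1 makes no such prediction.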

Given Eq. (3.8), and provided the off-diagonal blocks satisfy the condition of Proposition 2, we can fully describe the spectrum of \(\mathbf{T}\) based on the intra-layer transition blocks \(\mathbf{T}_i\) for \(i \in \{1,\ldots,L\}\) and the spectrum of \(\mathfrak{T}\). By a matrix \(\mathbf{M}\) with uniform columns we mean that each column of \(\mathbf{M}\) contains the same value in each entry; this value can differ between columns.

Proposition 2

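Let \(\mathbf{T}\) be the supra-transition matrix of a multi-layer network \(G\) that consists of \(L\) layers and satisfies Eq. (3.8). If all off-diagonal block matrices \(\mathbf{T}_{st}\) of \(\mathbf{T}\), for \(s,t \in \{1,\ldots,L\}\) and \(s \neq t\), have uniform columns, then the spectrum of \(\mathbf{T}\) (counted with multiplicity) can be decomposed as

\[ Spec(\mathbf{T}) = \{1,\lambda_2,\ldots,\lambda_L\} \cup \bigcup_{s=1}^{L}\left(Spec(\mathbf{T}_s)\setminus\{\lambda_1(\mathbf{T}_s)\}\right), \]

where \(\mathbf{T}_s\) are the block matrices of \(\mathbf{T}\) corresponding to the single layers \(G_s\) and \(\lambda_1(\mathbf{T}_s)\) is the largest eigenvalue of \(\mathbf{T}_s\). The eigenvalues \(\lambda_{2},\ldots,\lambda_{L}\) are attributed to the interconnectivity of the layers.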

Proof

To prove this statement we only have to show that all eigenvalues (except the largest one) of \(\mathbf{T}_s\) for \(s \in \{1,\ldots,L\}\) are also eigenvalues of \(\mathbf{T}\). Assume that \(\lambda\) is any such eigenvalue corresponding to the eigenvector \(u\) of some block matrix \(\mathbf{T}_r\), i.e. \(\lambda u = u\mathbf{T}_{r}\). We define a row vector \(v\) that is zero everywhere except in the component corresponding to \(\mathbf{T}_r\), i.e. \(v = (\mathbf{0},\ldots,\mathbf{0},u,\mathbf{0},\ldots,\mathbf{0})\). Now we investigate what happens if we multiply this vector with the transition matrix \(\mathbf{T}\).

Let us look at the effect of the matrix multiplication on an arbitrary component \(v^{(k)}\) with \(k \neq r\), and recall that \(v^{(k)}\) is equal to the zero vector \(\mathbf{0}\) for \(k \neq r\):

\[ \left(v\mathbf{T}\right)^{(k)} = \sum_{l}v^{(l)}\mathbf{T}_{lk} = u\mathbf{T}_{rk}. \]

Note that all eigenvectors \(u\) of a (scaled) transition matrix that do not belong to its largest eigenvalue sum up to zero, i.e. \(|u| = 0\). Since \(\mathbf{T}_{rk}\) has uniform columns, each entry of \(u\mathbf{T}_{rk}\) is a multiple of \(|u|\), namely \([u\mathbf{T}_{rk}]_j = |u|\,[\mathbf{T}_{rk}]_{1j}\); therefore \(u\mathbf{T}_{rk} = \mathbf{0}\). In the case \(k = r\) it holds that \(v^{(k)} = u\), and since \(\mathbf{T}_{rr} = \mathbf{T}_r\) we get

\[ \left(v\mathbf{T}\right)^{(r)} = u\mathbf{T}_{r} = \lambda u = \lambda v^{(r)}. \]

Hence \(v\mathbf{T} =\lambda v\), which means that \(\lambda\) is also an eigenvalue of \(\mathbf{T}\). In this way we obtain \(n - L\) eigenvalues of \(\mathbf{T}\) in addition to the largest eigenvalue, which equals one. The remaining eigenvalues, denoted by \(\lambda_{2},\ldots,\lambda_{L}\), are not attributed to any block matrix of \(\mathbf{T}\); therefore they are considered to be the interconnectivity eigenvalues.
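The decomposition of Proposition 2 can be illustrated numerically. In the hypothetical example below, the diagonal blocks \(\alpha_{ss}\mathbf{R}_{ss}\) are random, while every off-diagonal block is built as \(\alpha_{st}\mathbf{1}q^\top\) for a random probability vector \(q\), which is one simple way to obtain uniform columns. The sketch checks that every non-leading eigenvalue of each diagonal block reappears in \(Spec(\mathbf{T})\) and counts the eigenvalues.

```python
import numpy as np

rng = np.random.default_rng(3)
sizes = [3, 4, 3]  # hypothetical layer sizes
L = len(sizes)

alpha = rng.random((L, L)) + 0.1
alpha /= alpha.sum(axis=1, keepdims=True)  # rows of the aggregated matrix sum to one


def row_stochastic(r, c):
    """Random positive r x c matrix with unit row sums."""
    M = rng.random((r, c)) + 0.1
    return M / M.sum(axis=1, keepdims=True)


def uniform_columns(r, c):
    """Row-stochastic r x c matrix whose columns are constant: outer(1, q), sum(q) = 1."""
    q = rng.random(c) + 0.1
    return np.outer(np.ones(r), q / q.sum())


blocks = [[alpha[s, t] * (row_stochastic(sizes[s], sizes[t]) if s == t
                          else uniform_columns(sizes[s], sizes[t]))
           for t in range(L)] for s in range(L)]
T = np.block(blocks)
spec_T = np.linalg.eigvals(T)


def in_spec(lam, spec, tol=1e-8):
    return np.min(np.abs(spec - lam)) < tol


# Non-leading eigenvalues of every diagonal block T_s reappear in Spec(T).
layer_eigs = []
for s in range(L):
    eigs = np.linalg.eigvals(blocks[s][s])
    leading = np.argmax(eigs.real)  # lambda_1(T_s) = alpha_ss for a positive block
    layer_eigs.extend(np.delete(eigs, leading))
assert all(in_spec(lam, spec_T) for lam in layer_eigs)

print(len(layer_eigs), "layer eigenvalues +", L, "remaining =", len(spec_T))
```

The counts match \(n = (n-L) + L\): \(n-L\) eigenvalues are inherited from the layers, and \(L\) remain, namely \(1,\lambda_2,\ldots,\lambda_L\).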

Corollary 2

Let \(G\) be a multi-layer network consisting of \(L\) layers that satisfies Eq. (3.8) and the conditions of Proposition 2. Then the aggregated matrix \(\mathfrak{T} =\{\alpha _{st}\}_{st}\) has spectrum

\[ Spec(\mathfrak{T}) = \{1,\lambda_2,\ldots,\lambda_L\}, \]

and it holds that \(\lambda_{2},\ldots,\lambda_{L} \in Spec(\mathbf{T})\).

Proof

Note that every eigenvalue \(\lambda\) of some block matrix \(\mathbf{T}_r\) other than its largest eigenvalue \(\lambda_1(\mathbf{T}_r)\), with \(\lambda u = u\mathbf{T}_{r}\), is by Proposition 2 also an eigenvalue of \(\mathbf{T}\). Furthermore, \(\lambda\) is attributed to the eigenvector \(v = (\mathbf{0},\ldots,\mathbf{0},u,\mathbf{0},\ldots,\mathbf{0})\) of \(\mathbf{T}\). However, \(|v| = 0\), and in fact its layer aggregation satisfies \(\mathfrak{v} = 0\), since \(u\) is an eigenvector of a transition matrix that does not belong to the largest eigenvalue and therefore sums up to zero. Hence, by Theorem 1, all eigenvalues fulfilling this condition are not eigenvalues of \(\mathfrak{T}\). Since \(\mathfrak{T}\) has \(L\) eigenvalues, which by Proposition 1 are also eigenvalues of \(\mathbf{T}\), the remaining eigenvalues \(\lambda_{2},\ldots,\lambda_{L}\) also have to be eigenvalues of \(\mathfrak{T}\).
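For two layers, Corollary 2 yields a closed form: since the rows of \(\mathfrak{T}\) sum to one, \(\mathfrak{T} = \begin{pmatrix}\alpha_{11} & 1-\alpha_{11}\\ 1-\alpha_{22} & \alpha_{22}\end{pmatrix}\) has the eigenvalues \(1\) and \(\alpha_{11}+\alpha_{22}-1\). The hypothetical NumPy sketch below builds such a \(\mathbf{T}\) with uniform-column off-diagonal blocks, removes the non-leading eigenvalues of \(\mathbf{T}_1\) and \(\mathbf{T}_2\) from \(Spec(\mathbf{T})\) one at a time (nearest match), and compares what remains with \(Spec(\mathfrak{T})\). The sizes, the values of \(\alpha_{11}, \alpha_{22}\) and the random blocks are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(4)
n1, n2 = 4, 3        # hypothetical layer sizes
a11, a22 = 0.7, 0.6  # hypothetical intra-layer fractions alpha_11, alpha_22
alpha = np.array([[a11, 1 - a11], [1 - a22, a22]])


def row_stochastic(r, c):
    M = rng.random((r, c)) + 0.1
    return M / M.sum(axis=1, keepdims=True)


def uniform_columns(r, c):
    q = rng.random(c) + 0.1
    return np.outer(np.ones(r), q / q.sum())


T1, T2 = a11 * row_stochastic(n1, n1), a22 * row_stochastic(n2, n2)
T = np.block([[T1, (1 - a11) * uniform_columns(n1, n2)],
              [(1 - a22) * uniform_columns(n2, n1), T2]])

remaining = list(np.linalg.eigvals(T))
for block in (T1, T2):
    eigs = np.linalg.eigvals(block)
    for lam in np.delete(eigs, np.argmax(eigs.real)):  # drop lambda_1(T_s)
        remaining.pop(int(np.argmin(np.abs(np.array(remaining) - lam))))

# What is left should be Spec(alpha) = {1, a11 + a22 - 1}.
print(np.sort(np.real(remaining)), np.sort(np.linalg.eigvals(alpha)))
```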

In the following we provide a useful proposition for the eigenvalues arising from the inter-layer links in the case of two layers. By the function \(T(\cdot)\) applied to a matrix \(\mathbf{M}\) we denote the row normalization \(T(\mathbf{M})\) of \(\mathbf{M}\).

Proposition 3

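Let \(G\) be a multi-layer network that satisfies Eq. (3.8) and consists of two networks \(G_1\) and \(G_2\) in separate layers. Assume that the supra-transition matrix \(\mathbf{T}\) has the form

\[ \mathbf{T} = \begin{pmatrix} \mathbf{T}_1 & (1-\beta)\mathbf{T}^{I}_{12}\\ (1-\beta)\mathbf{T}^{I}_{21} & \mathbf{T}_2 \end{pmatrix}, \qquad \mathbf{T}^{I} = \begin{pmatrix} \mathbf{0} & \mathbf{T}^{I}_{12}\\ \mathbf{T}^{I}_{21} & \mathbf{0} \end{pmatrix}, \]

where \(\mathbf{T}^{I}\) is the transition matrix of the network \(G\) restricted to the inter-layer links and \(\beta \in \mathbb{Q}\) is a constant. Furthermore, assume that \(\mathbf{T}_1\) and \(\mathbf{T}_2\) have uniform columns.

Then for \(\lambda \in Spec(\mathbf{T}^{I})\) with \(\lambda \neq 1,-1\) it holds that \((1-\beta)\lambda \in Spec(\mathbf{T})\).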

Proof

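If \(v\) is an eigenvector to the eigenvalue \(\lambda \neq 1,-1\) of \(\mathbf{T}^{I}\), it holds that \(v\mathbf{T}^{I} =\lambda v\). Hence,

\[ \lambda v^{(1)} = v^{(2)}\mathbf{T}^{I}_{21}, \qquad \lambda v^{(2)} = v^{(1)}\mathbf{T}^{I}_{12}. \]

Because \(\lambda v^{(2)} = v^{(1)}\mathbf{T}_{12}^{I}\), we get \(\lambda^{2}v^{(1)} = v^{(1)}\mathbf{T}_{12}^{I}\mathbf{T}_{21}^{I}\). Therefore, \(v^{(1)}\) is also an eigenvector of the transition matrix \(\mathbf{T}_{12}^{I}\mathbf{T}_{21}^{I}\) to the eigenvalue \(\lambda^{2}\). Since \(\lambda \neq 1,-1\), we have \(\lambda^{2} \neq 1\), which implies that \(v^{(1)}\) does not correspond to the largest eigenvalue, and therefore its entries sum up to zero. The same holds for \(v^{(2)}\) and the matrix \(\mathbf{T}_{21}^{I}\mathbf{T}_{12}^{I}\). For the multiplication of \(v\) with \(\mathbf{T}\) we deduce that

\[ v\mathbf{T} = \left(v^{(1)}\mathbf{T}_1 + (1-\beta)v^{(2)}\mathbf{T}^{I}_{21},\; (1-\beta)v^{(1)}\mathbf{T}^{I}_{12} + v^{(2)}\mathbf{T}_2\right) = \left(v^{(1)}\mathbf{T}_1 + (1-\beta)\lambda v^{(1)},\; (1-\beta)\lambda v^{(2)} + v^{(2)}\mathbf{T}_2\right). \]

Since \(\mathbf{T}_1\) and \(\mathbf{T}_2\) have uniform columns and \(|v^{(1)}| = |v^{(2)}| = 0\), we get \(v^{(1)}\mathbf{T}_{1} = \mathbf{0}\) and \(v^{(2)}\mathbf{T}_{2} = \mathbf{0}\). Therefore \(v\mathbf{T} = (1-\beta)\lambda v\) and \((1-\beta)\lambda \in Spec(\mathbf{T})\).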
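Proposition 3 can likewise be checked on a random example. In the sketch below (NumPy; `beta`, the layer sizes and all blocks are hypothetical), \(\mathbf{T}^I\) is a random bipartite transition matrix, the diagonal blocks \(\mathbf{T}_1, \mathbf{T}_2\) are built with uniform columns and row sums \(\beta\) so that \(\mathbf{T}\) stays row stochastic, and the off-diagonal blocks are \((1-\beta)\mathbf{T}^I_{12}\) and \((1-\beta)\mathbf{T}^I_{21}\), as in the proof of Proposition 3. Equal layer sizes are an arbitrary choice that keeps \(\mathbf{T}^I\) free of zero eigenvalues.

```python
import numpy as np

rng = np.random.default_rng(5)
n1 = n2 = 3  # hypothetical equal layer sizes
beta = 0.4   # hypothetical constant beta


def row_stochastic(r, c):
    M = rng.random((r, c)) + 0.1
    return M / M.sum(axis=1, keepdims=True)


def uniform_columns(n):
    """Row-stochastic n x n matrix with constant columns."""
    q = rng.random(n) + 0.1
    return np.outer(np.ones(n), q / q.sum())


# T^I: random walk restricted to the inter-layer links (bipartite block form).
TI12, TI21 = row_stochastic(n1, n2), row_stochastic(n2, n1)
TI = np.block([[np.zeros((n1, n1)), TI12], [TI21, np.zeros((n2, n2))]])

# Diagonal blocks T_1, T_2 with uniform columns and row sums beta.
T = np.block([[beta * uniform_columns(n1), (1 - beta) * TI12],
              [(1 - beta) * TI21, beta * uniform_columns(n2)]])

spec_T = np.linalg.eigvals(T)
checked = 0
for lam in np.linalg.eigvals(TI):
    if min(abs(lam - 1), abs(lam + 1)) > 1e-8:
        # Proposition 3: (1 - beta) * lam is an eigenvalue of T.
        assert np.min(np.abs(spec_T - (1 - beta) * lam)) < 1e-8
        checked += 1

print(checked, "eigenvalues of T^I checked")
```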

Proposition 3 can be extended to more than two layers; however, the proof is more involved and is omitted here.


Copyright information

© 2016 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Wider, N., Garas, A., Scholtes, I., Schweitzer, F. (2016). An Ensemble Perspective on Multi-layer Networks. In: Garas, A. (eds) Interconnected Networks. Understanding Complex Systems. Springer, Cham. https://doi.org/10.1007/978-3-319-23947-7_3


DOI: https://doi.org/10.1007/978-3-319-23947-7_3


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-23945-3

Online ISBN: 978-3-319-23947-7

eBook Packages: Physics and Astronomy (R0)