Abstract

Effective exploration techniques during mechanized tunneling are of high importance in order to prevent severe surface settlements as well as damage to the tunnel boring machine, which in turn would lead to additional costs and a standstill in the construction process. A seismic methodology called full waveform inversion can bring a considerable improvement in precision compared to state-of-the-art seismic methods. Another method of exploration during mechanized tunneling is to continuously monitor subsurface behavior and then use these data to identify disturbances through pattern recognition and machine learning techniques. Various probabilistic methods for conducting system identification and proposing an appropriate monitoring plan are developed in this regard. Furthermore, ground conditions can be determined by studying boring machine data collected during the excavation. Data obtained actively and passively during the operation of a shield-driven machine were used to estimate soil parameters. The monitoring campaign can be extended to include above-ground structural surveillance as well as terrestrial and satellite data to track displacements of existing infrastructure caused by tunneling. The available radar data for the Wehrhahn-line project are displayed and were utilized to precisely monitor the anticipated uplift caused by injections and any subsequent settlements of ground and buildings.

2.1 Introduction

In mechanized tunneling, which is a highly automated process, a tunnel boring machine (TBM) sequentially excavates the ground and installs the tunnel lining. The high level of automation restricts dynamic adaptation of the construction process and makes it vulnerable to unforeseen events. Sudden geological changes may endanger the process of tunnel construction by causing unexpected surface settlements, damage to the TBM or wear of the cutting tools. Since there is only limited access to the front shield of the TBM during construction, its maintenance is cumbersome. Furthermore, damage to the TBM leads to construction delays, which may cause running costs (e.g. personnel costs) to accumulate. Early identification of geological changes makes it possible to initiate countermeasures by, for instance, adapting the excavation velocity, changing the cutting tools or adapting the face pressure. During the planning as well as the construction phase, exploratory drilling is typically performed to characterize properties of the ground. However, such drilling is not sufficient for estimating the subsoil properties along the whole tunnel track since it is locally limited.

Approaches that are suitable for exploring the space directly in front of the tunnel face are provided by exploration seismics. However, state-of-the-art methods only process the onsets of directly reflected waves and therefore neglect information of later arriving waves. In order to improve today’s exploration techniques, the application of a methodology called full waveform inversion (FWI) is proposed. Here, the whole information of the seismic records is taken into account. Different FWI approaches are proposed, investigated and validated with synthetic data and experimental laboratory data in Sect. 2.2.

Another possibility for exploration during mechanized tunneling is to analyze the hydro-mechanical data gathered during the excavation process. Measuring the changes of surface settlement or pore water pressure at various locations around the tunnel line is common practice, and by analyzing these data the geotechnical pattern of the subsoil can be recognized. Therefore, a pattern recognition approach based on supervised machine learning is proposed and investigated in Sect. 2.3. To evaluate the surrounding geomaterial based on the measurements, one should carefully consider the associated aleatory and epistemic uncertainties. Uncertainties in geomaterials are mainly due to their geologic origin, which implies their heterogeneity as well as their multiphase nature at different aggregation states. Furthermore, the boundary conditions are frequently complicated as a result of multi-phase interactions in projects such as tunneling, which makes the conventional approach of predicting soil parameters from preliminary laboratory studies on a limited number of soil samples less trustworthy. The impact of uncertainties on tunneling performance may be precisely assessed using the model adaptation approach, which is primarily concerned with the use of previously acquired datasets in the subsequent prediction and TBM steering process. In this regard, various uncertainty quantification methods such as sensitivity analysis, random fields and back analysis were employed.

Afterwards, a scenario-based pattern recognition framework is used to explore the geological formation ahead of the TBM based on the subsoil response to the excavation process. It takes into account several frequent geometrical variations of geological scenarios in tunnel construction, such as a layer change, interlayers, or a large rock boulder. In this context, machine learning algorithms are used to identify critical changes in geological conditions, such as incoming new soil layers or large rock boulders.

Besides the data obtained by sensors and gauges in the subsoil, TBMs constantly monitor several variables during excavation. Therefore, one may determine ground conditions by analyzing such machine data. Among all the monitored data, those describing the movement of the cutting wheel and the shield in relation to subsoil conditions are particularly informative for a shield-driven TBM. Parameters such as the thrust force on the cutting wheel, the torque on the cutting wheel and the advance speed are referred to as excavation-specific data components, and they may be used as indicators for the ground's resistance to excavation (ground conditions) and the condition of the cutting wheel (wheel design, tool wear, and clogging), respectively. The relation between different parameters on various projects and their effectiveness are studied in Sect. 2.5.

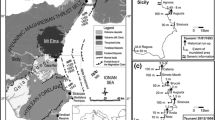

A comprehensive monitoring campaign, which is required to prevent any unexpected or serious damage to above-ground structures, may include the use of available radar data and terrestrial technologies for above-ground observations in addition to subterranean monitoring. Terrestrial settlement monitoring includes measurements of the interplay of all (settlement) data prior to, during, and after tunnel construction. The data delivered by these technologies may be detailed to the millimeter level for monitoring the settlement or tilting of buildings in the area affected by the tunnel excavation. With radar data, on-site installations, for example inside buildings, are no longer necessary. The technology captures complex raw data signals (amplitude and phase information) from radar waves (electromagnetic waves) reflected at the Earth's surface. Besides the actual settlement monitoring, these data can be used for visualization. Visualized in space and time, critical conditions such as torsional deformations when underpassing structures in parallel can be recognized more easily. Details about the above-ground monitoring methods and data are presented in Sect. 2.6. The section after that offers a case study of monitoring displacements of existing infrastructure caused by the excavation of the Wehrhahn railway tunnel in Düsseldorf using a series of 16 TerraSAR-X images.

2.2 Seismic Reconnaissance

Triggered by man-made explosions or natural earthquakes, energy is induced into the ground and travels through it in the form of seismic body waves. These waves have different propagation modes, e.g. compressional waves that vibrate in the propagation direction and shear waves that vibrate perpendicular to the propagation direction. The propagation velocity of the different waves depends on the elastic properties of the ground. These are, in the context of seismological applications, usually described in terms of the compressional wave velocity \(v_{\text{p}}\) (or P-wave velocity) and the slower shear wave velocity \(v_{\text{s}}\) (or S-wave velocity),

\[v_{\text{p}}=\sqrt{\frac{E(1-\nu)}{\rho(1+\nu)(1-2\nu)}},\qquad v_{\text{s}}=\sqrt{\frac{E}{2\rho(1+\nu)}},\tag{2.1}\]

rather than in terms of Young’s modulus \(E\) and Poisson’s ratio \(\nu\). The density of the ground is denoted by \(\rho\). Waves that encounter a stress-free surface, e.g. the Earth’s surface, are partly reflected. Another portion of these waves induces surface waves with very high amplitudes that propagate along the surface with a velocity slightly lower than the shear wave velocity. At geological interfaces, reflections, refractions and conversions of the seismic waves occur, whereby the amount of reflected, refracted and converted energy as well as the refraction angle depend on the material contrast.

The physical behavior of the ground can be described by the elastic wave equation

\[\rho(\mathbf{x})\,\ddot{\mathbf{u}}(\mathbf{x},t)-\nabla\cdot\bigl(\mathsf{C}(\mathbf{x}):\nabla\mathbf{u}(\mathbf{x},t)\bigr)=\mathbf{f}(\mathbf{x},t),\tag{2.2}\]

where \(\mathsf{C}(\mathbf{x})\) is the fourth-order material stiffness tensor which contains the elastic properties of the ground at the spatial position \(\mathbf{x}\). The external force vector at the time \(t\) is denoted by \(\mathbf{f}(\mathbf{x},t)\), whereas the displacement vector is denoted by \(\mathbf{u}(\mathbf{x},t)\) and its second derivative with respect to time by \(\ddot{\mathbf{u}}(\mathbf{x},t)\).

For exploration in tunneling, a non-destructive artificial signal is induced into the ground. A wave propagation scenario within a two-dimensional tunnel environment with a disturbance in front of the tunnel is illustrated by Fig. 2.1 for two different points in time.

A horizontal single-force source (triangle) at the tunnel face emits a Ricker wavelet with a peak frequency of 500 Hz. The vibration direction and amplitude of the waves are indicated by the red arrows. A rectangular shaped disturbance (indicated by its borders) with lower P- and S-wave velocities is located in front of the tunnel. The reflection of the first P-wave at the Earth’s surface can be observed in the left picture. The right picture illustrates the induced surface waves at the Earth’s surface as well as the interaction of the waves with the disturbance

The emitted seismic waves interact with anomalies and arrive at the geophones, which record the signal. The gained seismic records contain information about the geological conditions of the ground. Seismic exploration approaches utilize this information for the purpose of inferring the structure of the ground.
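The source signal mentioned above can be sketched in a few lines. The snippet below is a minimal illustration, assuming the common unit-amplitude definition of the Ricker wavelet; the function name `ricker`, the time delay and the sampling interval are hypothetical choices and are not taken from the simulations described here.

```python
import math


def ricker(t, peak_frequency, delay=0.0):
    """Ricker wavelet with unit amplitude at t == delay (common definition)."""
    arg = (math.pi * peak_frequency * (t - delay)) ** 2
    return (1.0 - 2.0 * arg) * math.exp(-arg)


f_peak = 500.0          # peak frequency in Hz, as in the example above
delay = 1.5 / f_peak    # hypothetical delay so the wavelet starts near zero
dt = 1.0e-5             # hypothetical sampling interval in s
samples = [ricker(i * dt, f_peak, delay) for i in range(600)]
```

The wavelet reaches its maximum at `t == delay` and crosses zero at a distance of `1 / (sqrt(2) * pi * f_peak)` on either side of that maximum.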

2.2.1 State-of-the-art Methods for Seismic Exploration in Mechanized Tunneling

State-of-the-art methods for seismic reconnaissance during mechanized tunneling are based on tomography methods. Certain parts of the seismic waveforms are evaluated, where most commonly reflected P-waves are extracted and processed for the purpose of estimating anomaly locations and properties. Two exemplary systems which are used in practice are Sonic Softground Probing (SSP) [49] and Tunnel Seismic Prediction (TSP) [92]. SSP is used for reconnaissance in soft rock. Sources and receivers are placed at the cutting wheel of the TBM; if reflected waves arrive at the receivers, they are extracted from the waveform and evaluated with migration techniques. The range of SSP is specified as about 40 m [101]. In TSP, sources and receivers are placed in boreholes along the tunnel wall. Here too, reflected waves are analyzed and evaluated using various geotechnical approaches. TSP’s range is given as about 150 to 200 m; however, the error margin increases rapidly with increasing distance to the TBM shield [20]. A methodology which is generally able to provide a detailed image of the subsoil is full waveform inversion (FWI). The method can be expected to outperform the state-of-the-art systems in terms of range, error margin and level of detail since it evaluates the full measured signal rather than only parts of the acquired waveforms.

2.2.2 Full Waveform Inversion

One of the first FWI approaches is introduced by Tarantola [110, 111] for acoustic as well as elastic media. For FWI, a numerical model is set up which is able to approximate forward wave propagation described by the elastic wave equation (Eq. 2.2). The ground properties (e.g. expressed by the P-wave velocity \(v_{\text{p}}\), the S-wave velocity \(v_{\text{s}}\) and the density \(\rho\)) are discretized for the numerical application and the corresponding parameters which specify the ground model can be stored in a model \(\mathbf{m}\).

The goal of FWI is to minimize the difference between the synthetic seismic records and the seismic records from field observations by iteratively adapting the ground properties \(\mathbf{m}\) of the numerical model. The ground model which produces the lowest difference of the seismic data is assumed to be a good representation of the real ground. Since different ground formations are able to produce similar seismic records, FWI has to deal with the ambiguity of the inverse problem. For that purpose, all prior information (e.g. from exploration drilling, other exploration approaches or former construction projects) can be used to define an initial model which is close to the real subsurface in order to steer the inversion toward a physically meaningful ground model. Alternatively, prior knowledge may be used to implement a parametrization of the ground model directly. Different objective functions can be used to quantify the difference of the seismic records; depending on the FWI application, they have different advantages and disadvantages [13]. The least squares norm is commonly used as misfit function,

\[\chi(\mathbf{m})=\frac{1}{2}\sum_{s=1}^{N_{\text{s}}}\sum_{r=1}^{N_{\text{r}}}\int_{T}\bigl(u_{r}^{s}(t;\mathbf{m})-\bar{u}_{r}^{s}(t)\bigr)^{2}\,\mathrm{d}t.\tag{2.3}\]

The considered time interval is denoted by \(T\), the approximated displacements of the current ground model \(\mathbf{m}\) by \(u_{r}^{s}(t;\mathbf{m})\) and the displacements from the field observations by \(\bar{u}_{r}^{s}(t)\). The squared difference is summed over the number of all receivers \(N_{\text{r}}\) and the number of all sequentially used sources \(N_{\text{s}}\).
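To make the summation over sources, receivers and time concrete, the following sketch evaluates a least-squares misfit for sampled records. It assumes the conventional factor 1/2 and a simple rectangle rule for the time integral; the function name and the nested-list layout `records[s][r][i]` are hypothetical and chosen only for illustration.

```python
def least_squares_misfit(synthetic, observed, dt):
    """Least-squares misfit for records indexed as records[source][receiver][sample].

    Assumptions: factor 1/2 in front of the sum and rectangle-rule time integration.
    """
    total = 0.0
    for syn_source, obs_source in zip(synthetic, observed):
        for syn_trace, obs_trace in zip(syn_source, obs_source):
            # squared residual of one trace, integrated over time
            total += sum((a - b) ** 2 for a, b in zip(syn_trace, obs_trace)) * dt
    return 0.5 * total


# One source, one receiver, three time samples
synthetic = [[[1.0, 2.0, 3.0]]]
observed = [[[1.0, 1.0, 1.0]]]
chi = least_squares_misfit(synthetic, observed, dt=0.1)
```

A misfit of zero is obtained only if every synthetic trace matches its observed counterpart sample by sample.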

In the last decades, many different approaches of FWI have been developed, e.g. adjoint time and frequency domain approaches, statistical FWI approaches or machine learning approaches. There exist many different successful applications of FWI, even on the continental scale (e.g. [128]). However, FWI has not been applied yet for reconnaissance in mechanized tunneling projects.

A high number of forward simulations is needed for FWI due to its iterative procedure. Therefore, a real-time application with three-dimensional tunnel models is not feasible with today’s computational resources. Since the efficiency of computational techniques and resources has increased enormously in the last decades, it can be expected that an application of FWI will become practicable in the future. Therefore, an early investigation of the potential of FWI for reconnaissance in mechanized tunneling is necessary. Due to the variety of FWI approaches, an investigation of their characteristics for different ground conditions and scenarios is advisable.

In Sect. 2.2.3, two Bayesian FWI approaches for application in mechanized tunneling are presented. The application of adjoint inversion approaches is examined for a time domain approach in Sect. 2.2.4 and for a frequency domain approach in Sect. 2.2.6, where synthetic reference data are used for inversion. However, a validation of the proposed inversion schemes with measured data is also crucial. Since seismic measurements during today’s tunneling projects are not performed for FWI but for other exploration approaches, and since rich field data suitable for FWI are difficult to obtain, a small-scale experiment is constructed in order to acquire seismic data with which some of the proposed inversion approaches are validated (Sect. 2.2.7). The validation with these experimental laboratory data is valuable since severe measurement and subsequent modeling errors are included in the inversion. Furthermore, small heterogeneities inside the specimen may be expected to have a large scattering effect on the small scale, but a vanishing effect in later tunneling scenarios, which makes the inversion even more challenging on the small scale in that respect. Therefore, the validation of the methods with the acquired experimental data is considered reliable.

2.2.3 Bayesian Full Waveform Inversion

Instead of allowing the material properties to vary all over the model domain as in the adjoint methods presented later, one can aim to reduce the dimensionality of the inverse problem. Dimensionality reduction can be achieved either by implementing a generally suitable but simplified parametrization of the subsoil model or by a direct parametrization (e.g. of a boulder or a layer change) which is based on prior knowledge. The search for a set of parameters with a misfit value close to the global minimum can then be performed with Bayesian inference. In this approach, a prior guess with initial uncertainties is specified. Parameter configurations are sampled, and the prior guess and its uncertainties are updated repeatedly. The output is a statistical description of the model parameters, revealing the sampled parameter configuration which most likely represents the true subsurface. Furthermore, uncertainty quantification can be performed in order to find out at which positions anomalies could potentially be missed. If the parametrization can approximately describe the real subsurface, the proposed Bayesian FWI approaches can deliver precise results, as will be shown later in this chapter.

Two methods are proposed, where both approaches include the unscented Kalman filter (UKF) that is based on Bayesian inference. The first method is called unscented hybrid simulated annealing (UHSA) [80] and the second method is called the UKF-controlled parametric level-set method (UKF-PaLS) [79]. In this section, the working principles of UHSA and UKF-PaLS are briefly explained in words and UKF-PaLS is validated with synthetic data. For a complete description of the two methods, readers are referred to [114].

2.2.3.1 Unscented Hybrid Simulated Annealing (UHSA)

UHSA is a global optimization algorithm which combines the UKF [39] with simulated annealing (SA) [47]. The method is based on implementing prior knowledge in the form of a user-defined parametrization of the disturbance. Commonly, a coarse representation of the subsoil is already available after planning of the tunneling track, e.g. from exploratory drillings, prior seismic surveys, geological maps, or other exploration techniques. Then, for instance, if prior knowledge exists that there is a cuboid boulder somewhere in the model domain, one could define the parametrization with its center location coordinates, three edge lengths and the material properties. With respect to the parametrization, UHSA conducts a global search in order to investigate the whole parameter space. In the course of this, SA acts as the global search algorithm, proposing certain parameter configurations and UKF acts as the local search algorithm, performing a local minimization for some of those parameter configurations, where parameter configurations with comparatively small misfit functionals are more frequently chosen as a starting point for local minimization. Due to the strong dimensionality reduction, results of UHSA can be close to exact if the chosen parametrization of the disturbance is sufficient; even when experimental scenarios are considered [115, 116] or when the parametrization is not fully correct [88]. UHSA results will be demonstrated on experimental examples directly in Sect. 2.2.7.1 and Sect. 2.2.7.2.
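The cuboid boulder parametrization mentioned above can be written down as a small data structure. This is only a sketch: the class name, field names, units and the inclusion of the density as a third material property are assumptions made for illustration, and the numbers are invented.

```python
from dataclasses import dataclass, astuple


@dataclass
class CuboidBoulder:
    """Hypothetical parametrization of a cuboid disturbance (illustration only)."""
    cx: float   # center coordinates in m
    cy: float
    cz: float
    lx: float   # edge lengths in m
    ly: float
    lz: float
    vp: float   # P-wave velocity in m/s
    vs: float   # S-wave velocity in m/s
    rho: float  # density in kg/m^3

    def to_vector(self):
        """Flatten all parameters into the vector an optimizer would search over."""
        return list(astuple(self))

    def contains(self, x, y, z):
        """True if the point lies inside the axis-aligned cuboid."""
        return (abs(x - self.cx) <= self.lx / 2
                and abs(y - self.cy) <= self.ly / 2
                and abs(z - self.cz) <= self.lz / 2)


boulder = CuboidBoulder(20.0, 0.0, -15.0, 4.0, 3.0, 2.0, 4000.0, 2300.0, 2600.0)
```

With this layout the inverse problem has nine unknowns, independent of how finely the forward model is meshed, which is the dimensionality reduction the text refers to.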

2.2.3.2 UKF-Controlled Parametric Level-Set Method (UKF-PaLS)

The previously described method can only be applied if prior knowledge is available, which is different for UKF-PaLS. In this method, the parametric level-set method (PaLS) enables a parametrization of the disturbance domain. Radial basis functions, also called bumps, are used to define the geometry of irregularly shaped objects. Prior to inversion, the centers of the bumps are defined by the user. Placing the bumps, a region of investigation as well as a resolution is defined, where at this stage, also prior knowledge can be included. Each radial basis function is controlled by two parameters, where one parameter basically defines the radial size of the bump, and the other parameter distorts the shapes of the bumps. The UKF seeks to find the optimal configuration of level-set parameters and material properties. In order to facilitate the finding of a parameter configuration corresponding to a misfit functional which is close to the global optimum, a multi-scale approach is implemented (see e.g. [26]). This approach includes a low-pass filter with a stepwise increasing cutoff frequency into the inversion. In early iterations, the simulation and measurement data is filtered with a low cutoff frequency in order to resolve larger structures, while in later iterations, higher cutoff frequencies are used in order to resolve more details.
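The idea of describing an irregular shape by a sum of bumps can be illustrated as follows. The sketch assumes a common parametric level-set formulation in which each bump has a weight and a dilation factor and the disturbance is the region where the level-set function is positive; the Gaussian bump, the threshold and all names are assumptions, and how these two parameters map onto the size and distortion roles described above is not taken from the chapter.

```python
import math


def level_set(x, y, centers, weights, dilations, threshold=0.5):
    """Sum of Gaussian bumps minus a threshold (assumed PaLS-style form)."""
    phi = -threshold
    for (cx, cy), alpha, beta in zip(centers, weights, dilations):
        r = beta * math.hypot(x - cx, y - cy)  # scaled distance to the bump center
        phi += alpha * math.exp(-r * r)
    return phi


def in_disturbance(x, y, centers, weights, dilations):
    """A point belongs to the disturbance where the level-set function is positive."""
    return level_set(x, y, centers, weights, dilations) > 0.0


# Two user-defined bump centers; only the first bump is strong enough to be visible
centers = [(0.0, 0.0), (10.0, 0.0)]
weights = [1.0, 0.2]
dilations = [1.0, 1.0]
```

During inversion only the weights, the dilation factors and the material properties would change, while the bump centers stay fixed as chosen by the user.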

For UKF-PaLS, a 2D synthetic inversion scenario is presented. For more 2D as well as 3D examples, readers are referred to [114]. The computational model used for generating the synthetic measurements is shown in Fig. 2.2, left. Simulations are conducted with the spectral-element code specfem2D [118]. Two rectangular disturbance domain objects are located close to each other with higher wave velocities than the wave velocities of the background domain, where the material is considered elastic with no attenuation for both sets of material properties. The density is considered constant all over the model domain. The top surface and all tunnel walls obtain free boundary conditions, while the other model boundaries obtain absorbing boundary conditions. The initial UKF model is shown in Fig. 2.2, right. 28 bumps are aligned on a regular grid, so that \(2\times 28=56\) level-set parameters and two material properties are to be determined during inversion. The initial level-set parameters are chosen so that small areas of the disturbance material are visible. In order to perform uncertainty quantification, the double standard deviation of the initial covariance matrix of the model parameters is computed and illustrated by the medium dark gray tone (see marking). Note that for the initial model, this measure does not allow for any physical interpretation.

The inversion method is tested with two different source-receiver configurations, which are visualized together with the inversion results in Fig. 2.3 by the red (sources) and green (receivers) dots. Both configurations include 2 sources placed at the tunnel front and 7 receivers placed inside the tunnel at the front and walls. One configuration additionally includes 9 receivers located at the Earth’s surface (compare Fig. 2.3, left). With both source-receiver configurations, a precise estimation of the reference model is achieved, where both objects are clearly resolved without melting together. All front and rear boundaries seen from the tunnel are well reconstructed. Due to the lack of receivers at the Earth’s surface, top and bottom borders are slightly less precisely determined for the example shown in Fig. 2.3, right. However, differences remain small. The high precision emphasizes the gain of the dimensionality reduction since especially the resolving of rear boundaries based only on reflected waves is a difficult FWI challenge. The uncertainties which are again visualized by the medium dark gray areas show where objects are most probably missed by the inversion, where the greatest uncertainties lie in the ‘‘shadow’’ of the reconstructed objects.

2.2.4 Adjoint Time Domain Full Waveform Inversion

This section gives an overview of the implementation of the adjoint time domain inversion method into the nodal discontinuous Galerkin solver nexd [54]. The solution of the forward problem is introduced briefly, the adjoint time domain inversion method is introduced and examples for a successful inversion in 2D and 3D are given.

2.2.4.1 Forward Problem

The solution of the forward problem is an essential part of the inversion process as it has to be performed multiple times during each iteration step. In this approach the discontinuous Galerkin (DG) solver nexd developed by [53] is used to perform these calculations. nexd is based on a velocity-stress formulation of the wave equation derived by [42] and [43]. The computational model of the target region is divided into triangular or tetrahedral elements and the solution vector containing stress and particle velocity components is expanded into appropriate basis functions defined on the elements. By multiplying with a set of test functions defined on the elements and integrating over each element, the elastic wave equation is replaced by a large coupled system of ordinary differential equations. Interaction and coupling between elements is realized by numerical fluxes. Depending on the choice of basis functions, DG methods are either of modal or nodal type. Modal approaches use sets of orthogonal basis functions whereas nodal ones take multidimensional Lagrange interpolating polynomials anchored to carefully selected nodal points [31]. In the latter case, the expansion coefficients are identical to the values of the velocity-stress vector at the nodal points. As nexd is based on the nodal version of the DG method, a quick overview of the semi-discrete scheme is given: Let \(l_{i}(\mathbf{x})\) denote the Lagrange polynomials attached to the nodes \(\mathbf{x}_{i}\) [31]. Moreover, let \(\mathbf{q}(\mathbf{x}_{j},t)\) denote the solution vector in element \(k\), representing the values of the stress and particle velocity components at node \(\mathbf{x}_{j}\). Then, using the Einstein summation convention, the system of ordinary differential equations for the expansion coefficients can be written as

where \(\mathbf{A}\), \(\mathbf{B}\) and \(\mathbf{C}\) are matrices containing the material properties of each element, \(\mathbf{M}^{k}\) is the mass matrix, \(\mathbf{S}^{k}_{x}\), \(\mathbf{S}^{k}_{y}\) and \(\mathbf{S}^{k}_{z}\) are the stiffness matrices, \(\mathbf{r}_{\text{n}}(\mathbf{x},t)\) are normal Riemann fluxes and \(\mathbf{s}(\mathbf{x}_{j},t)\) is the source term. The number of interpolation points per element is denoted by \(N_{\text{p}}\) and \(\partial D^{k}\) denotes a line element of an element edge. Equation 2.4 is a system of coupled ordinary differential equations, as the flux terms depend on the expansion coefficients of adjacent elements. A more comprehensive derivation of Eq. 2.4 is given in [53, 54].

2.2.4.2 Adjoint Inversion

The primary ingredient for the inversion process is the measured data, since this data contains the information on the model that is to be calculated during the inversion process. This data is either generated synthetically using a forward solver, e.g. specfem or nexd, or data from seismic stations deployed in the field is used. The goal of the inversion process is to recover the model \(\mathbf{\bar{m}}\), that best explains the observed data.

The search for the best model \(\mathbf{\bar{m}}\) starts with a model \(\mathbf{m}_{0}\) for which synthetic waveforms \(\mathbf{u}_{r}^{s}(t,\mathbf{m}_{0})\) are calculated by a forward simulation. These synthetic waveforms are compared to the observed ones, \(\mathbf{\bar{u}}\), and the misfit between the observed and synthetic seismograms is calculated according to Eq. 2.3. The model \(\mathbf{m}_{0}\) is adjusted iteratively into models \(\mathbf{m}_{j}\) with waveforms \(\mathbf{u}_{r}^{s}(t,\mathbf{m}_{j})\) at iteration \(j\), where \(\mathbf{m}_{j}\) should approach \(\mathbf{\bar{m}}\) with increasing \(j\) in the sense of minimizing the misfit between synthetic and observed waveforms as defined by [119].

To minimize the misfit \(\chi\) an iterative approach is used which exploits information about the misfit gradient [118]. The latter is obtained by taking the Fréchet derivative of Eq. (2.3) with respect to \(\mathbf{m}\),
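Assuming the least-squares misfit carries the conventional factor 1/2 (an assumption, since other normalizations are possible), the chain rule gives this derivative in the form

\[\frac{\partial\chi}{\partial\mathbf{m}}=\sum_{s=1}^{N_{\text{s}}}\sum_{r=1}^{N_{\text{r}}}\int_{T}\bigl(u_{r}^{s}(t;\mathbf{m})-\bar{u}_{r}^{s}(t)\bigr)\,\frac{\partial u_{r}^{s}(t;\mathbf{m})}{\partial\mathbf{m}}\,\mathrm{d}t,\]

so that each waveform residual is weighted by the sensitivity of the corresponding synthetic record to the model.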

Calculating the Fréchet derivatives of the wavefield with respect to \(\mathbf{m}\), \(\partial\mathbf{u}/\partial\mathbf{m}\), for all variations of \(\mathbf{m}\) would require large amounts of storage. Instead, [26] shows that the misfit gradient can be more efficiently calculated with the help of an adjoint wave field \(\mathbf{u}^{\dagger}(\mathbf{m},\mathbf{x}_{i},T-t)\), which in turn can be obtained as the time reversed result of a forward simulation with so-called adjoint sources situated at the receiver positions and defined by

Thus, the adjoint sources are the time reversed residuals between observed and predicted waveforms injected at the receiver positions and propagated into the medium.
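Written out for a single source \(s\), and assuming point receivers at positions \(\mathbf{x}_{r}\), a common form of such an adjoint source is sketched below. The symbol \(\mathbf{f}^{\dagger}\) and the use of the Dirac delta \(\delta\) are notational assumptions introduced here for illustration.

\[\mathbf{f}^{\dagger}(\mathbf{x},t)=\sum_{r=1}^{N_{\text{r}}}\bigl[\mathbf{u}_{r}^{s}(T-t;\mathbf{m})-\bar{\mathbf{u}}_{r}^{s}(T-t)\bigr]\,\delta(\mathbf{x}-\mathbf{x}_{r})\]

One adjoint simulation per source therefore suffices, regardless of the number of model parameters.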

For a perturbation of density and Lamé parameters in the subsurface, \(\Delta\mathbf{m}=(\Delta\rho,\Delta\lambda,\Delta\mu)\), the change of misfit is given by

where the misfit kernels are introduced according to [56] as

Here, \(\boldsymbol{\epsilon}\) denotes the strain tensor and \(\mathop{\mathrm{Tr}}(\boldsymbol{\epsilon})\) its trace. The colon stands for the Frobenius inner product. The kernels are defined with a negative sign, as the misfit is to be minimized and therefore the negative misfit gradient is used. The strain tensor \(\boldsymbol{\epsilon}\) is calculated from the stress components used as variables in the simulation by inverting the isotropic stress-strain relation.

Corresponding misfit kernels for the density, \(\rho\), P-wave velocity, \(v_{\text{p}}\), and S-wave velocity, \(v_{\text{s}}\) are given by

So far, material properties and misfit kernels were defined as continuous functions of position in the subsurface. In order to make the inverse problem finite-dimensional, a discretization of these functions is required. The discretization by elements introduced in context with the forward simulation is used and the model \(\mathbf{m}\) is now considered as a vector containing all values of the material properties in the elements. This implies constant values of the material parameters within the elements and it is possible to integrate the kernels over the elements. Hence, pre-integrated element-specific misfit kernels \(\bar{K}_{\rho}^{k}\) are defined by Lamert [55] (explicitly given here for density)

where \(D_{k}\) denotes element \(k\), \(V_{k}\) its volume and \(\rho_{k}\) the value of density within this element.

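As mentioned before, in the nodal discontinuous Galerkin (NDG) method field variables at position \(\mathbf{x}\) are expanded into Lagrange polynomials anchored at the nodal points. Hence, \(\mathbf{u}^{\dagger}\) and \(\mathbf{u}\) are expressed in the form

\[\mathbf{u}(\mathbf{x},t)=\sum_{m=1}^{N_{\text{p}}}\mathbf{u}(\mathbf{x}_{m},t)\,l_{m}(\mathbf{x}),\qquad\mathbf{u}^{\dagger}(\mathbf{x},t)=\sum_{n=1}^{N_{\text{p}}}\mathbf{u}^{\dagger}(\mathbf{x}_{n},t)\,l_{n}(\mathbf{x}),\tag{2.11}\]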

with the Lagrange polynomials \(l_{m}(\mathbf{x})\) and \(N_{\text{p}}\) the number of nodal points. Inserting Eq. 2.11 into Eq. 2.10 and changing the order of volume and time integral results in

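The volume integral on the right hand side is identical to the mass matrix used and computed in the NDG method,

\[M^{k}_{mn}=\int_{D_{k}}l_{m}(\mathbf{x})\,l_{n}(\mathbf{x})\,\mathrm{d}^{3}\mathbf{x}.\tag{2.13}\]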

Since the elements from the NDG method are used as discretization for the inversion, the mass matrix is already known and the pre-integrated kernels (Eq. 2.12) become

The element kernels \(\bar{K}_{\lambda}\) and \(\bar{K}_{\mu}\) are calculated in a similar way,

For \(\bar{K}_{\mu}^{k}\) the sum over \(n\) is applied to all components of \(\boldsymbol{\epsilon}\) first and the inner product is to be calculated before applying the sum over \(m\).

With this method, it is possible to calculate the misfit gradient without the use of an additional mesh. The values of \(\mathbf{u}^{\dagger}\) and \(\mathbf{u}\) at the nodal points \(\mathbf{x}\) are directly given by the NDG method. Moreover, since the element size is typically about half a wave length due to computational stability, the resolution potential of FWI is conserved.

2.2.5 Inversion Procedure

Based on the gradients of the misfit function, the L-BFGS (Limited-memory Broyden-Fletcher-Goldfarb-Shanno) [81] method is applied to find a local minimum. The L-BFGS method uses models and misfit gradients of former inversion steps to approximate the Hessian \(\mathbf{H}_{j}\) of inversion step \(j\), thus boosting the convergence of the search for a minimum to superlinear behavior. As proposed by [81], the approximation of the Hessian starts by defining \(\mathbf{H}^{0}_{j}=\gamma_{j}I\) where \(I\) is the identity matrix and \(\gamma_{j}\) is a scalar which can be calculated from the model values and the misfit gradient of the latest inversion step. Afterwards, gradients and models from \(L\) former inversion steps are used to iteratively approximate \(\mathbf{H}_{j}\) based on \(\mathbf{H}^{0}_{j}\).
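A common way to apply such an approximation without storing a matrix is the two-loop recursion. The sketch below is a generic textbook variant, not the implementation used for the results in this chapter. It assumes that the matrix acts as an approximate inverse Hessian applied to the gradient, and that \(\gamma_{j}\) is the usual ratio built from the latest model and gradient differences. The quadratic test function, the exact step length and all variable names are hypothetical.

```python
import numpy as np

def lbfgs_direction(grad, s_list, y_list):
    """Apply the L-BFGS approximation to `grad` with the two-loop recursion.

    s_list[i]: model difference, y_list[i]: gradient difference of a former
    inversion step (oldest first).
    """
    q = grad.copy()
    rhos = [1.0 / np.dot(y, s) for s, y in zip(s_list, y_list)]
    alphas = []
    # First loop: newest pair to oldest pair.
    for s, y, rho in zip(reversed(s_list), reversed(y_list), reversed(rhos)):
        a = rho * np.dot(s, q)
        alphas.append(a)
        q -= a * y
    # Initial approximation H0 = gamma * I (gamma = 1 without history).
    gamma = 1.0
    if s_list:
        gamma = np.dot(s_list[-1], y_list[-1]) / np.dot(y_list[-1], y_list[-1])
    r = gamma * q
    # Second loop: oldest pair to newest pair.
    for (s, y, rho), a in zip(zip(s_list, y_list, rhos), reversed(alphas)):
        beta = rho * np.dot(y, r)
        r += (a - beta) * s
    return r

# Hypothetical quadratic misfit 0.5 * m^T A m - b^T m with gradient A m - b.
A = np.diag([1.0, 4.0, 9.0, 16.0])
b = np.ones(4)
m = np.zeros(4)
g = A @ m - b
s_list, y_list = [], []
memory = 5  # number L of former steps that are kept

for _ in range(20):
    if np.linalg.norm(g) < 1e-10:
        break
    d = lbfgs_direction(g, s_list, y_list)
    alpha = np.dot(g, d) / np.dot(d, A @ d)  # exact step length for a quadratic
    m_new = m - alpha * d
    g_new = A @ m_new - b
    s_list.append(m_new - m)
    y_list.append(g_new - g)
    s_list, y_list = s_list[-memory:], y_list[-memory:]
    m, g = m_new, g_new

print(m)
```

Only vectors of former steps are stored, so the memory demand grows linearly with the number of model parameters.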

The elastic properties of all elements contained in model \(\mathbf{m}_{j}\) are updated in direction of the corrected gradient multiplied by a step length factor \(\alpha_{j}\),

where \(\mathbf{c}_{j}\) is a vector composed of all the element-specific misfit kernels calculated in inversion step \(j\). If \(\mathbf{H}^{0}_{j}\) is chosen diagonal, \(\mathbf{H}_{j}\) is diagonal as well and no matrix multiplication is needed to calculate \(\mathbf{H}_{j}\). Thus, the multiplication of \(\mathbf{H}_{j}\) and \(\mathbf{c}_{j}\) reduces also to a simple multiplication for each entry of \(\mathbf{c}_{j}\) instead of a matrix-vector multiplication ensuring fast computation of the model correction.

To determine \(\alpha_{j}\), two trial step lengths \(\alpha_{j,1}\) and \(\alpha_{j,2}\) are chosen, a forward simulation is performed for each of them, and the resulting waveforms are used to calculate the corresponding misfits. A second order polynomial, \(\chi(\alpha_{j})\), is fitted to the misfit of the current model and the misfits of the two trial simulations. If it exists, the minimum of the polynomial is taken as the optimal step length for the next iteration. In case the optimal step length is beyond the range of the trial step lengths, the maximum step length, \(\alpha_{j,2}\), is doubled. If no minimum is found, the intermediate step length \(\alpha_{j,1}\) is halved and the old value of \(\alpha_{j,1}\) is used as \(\alpha_{j,2}\). In both cases, a further forward simulation is needed for the new step length. This procedure is repeated until a minimum \(\alpha_{j,0}<\alpha_{\text{min}}\leq\alpha_{j,2}\) is found or until \(\alpha_{j,1}\) or \(\alpha_{j,2}\) reaches a minimum or a maximum value, respectively.
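The step length search can be sketched as follows. This is a minimal illustration under stated assumptions: the callable `misfit` stands for one forward simulation plus misfit evaluation, the bounds `lower_bound` and `upper_bound` and the limit `max_trials` are hypothetical, and a parabola whose minimum lies at a non-positive step length is treated like the case without a minimum.

```python
def parabola_minimum(a1, a2, chi0, chi1, chi2):
    """Minimum of the parabola through (0, chi0), (a1, chi1), (a2, chi2).

    Returns None if the fitted parabola has no minimum.
    """
    # chi(a) = chi0 + b * a + c * a**2
    c = ((chi2 - chi0) / a2 - (chi1 - chi0) / a1) / (a2 - a1)
    b = (chi1 - chi0) / a1 - c * a1
    if c <= 0.0:
        return None
    return -b / (2.0 * c)

def find_step_length(misfit, a1, a2, lower_bound=1e-6, upper_bound=1e3,
                     max_trials=20):
    """Search a step length by repeated parabolic fits.

    Every call of `misfit` corresponds to one forward simulation.
    """
    chi0, chi1, chi2 = misfit(0.0), misfit(a1), misfit(a2)
    for _ in range(max_trials):
        a_opt = parabola_minimum(a1, a2, chi0, chi1, chi2)
        if a_opt is not None and 0.0 < a_opt <= a2:
            return a_opt
        if a_opt is not None and a_opt > a2:
            # Minimum beyond the trial range: double the maximum step length.
            a2 *= 2.0
            if a2 > upper_bound:
                return upper_bound
            chi2 = misfit(a2)
        else:
            # No usable minimum: halve a1, the old a1 becomes the new a2.
            a2, chi2 = a1, chi1
            a1 *= 0.5
            if a1 < lower_bound:
                return lower_bound
            chi1 = misfit(a1)
    return a1

# Hypothetical misfit along the search direction with its minimum at 3.0.
step = find_step_length(lambda a: (a - 3.0) ** 2 + 1.0, a1=0.5, a2=1.0)
print(step)
```

In this example the maximum trial step length is doubled twice before the minimum falls inside the trial range, which costs two additional forward simulations.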

The procedure described above requires, for each seismic source, one forward simulation to obtain the wave field for the current model, one forward simulation for the adjoint wave field and two forward simulations for the test step lengths. Due to an appropriate choice of \(\gamma_{j}\) the test step lengths are not changed often. On average, 4-5 forward simulations per inversion step and per source are needed. Additional receivers do not increase the number of forward runs since the adjoint sources at the receiver positions can be fired at the same time. The presented method is most effective for a low number of sources and a high number of receivers within the observed region.

To ensure a stable and smooth convergence towards the minimum of the misfit function, a hierarchical multiscale approach is used. As suggested by several authors [12, 121, 73] the inversion starts with waveforms of low frequency content and higher frequencies are admitted gradually. In this way, large scale structures evolve at the beginning of the inversion which are gradually refined later as higher frequencies are added. Frequency content is controlled by low-pass filtering the observed waveforms and the source time function for the forward simulations in an identical way. This concept avoids the well-known cycle skipping problem where the phase difference between measured data and synthetic waveforms approaches one dominating signal period. Using low frequency content leads to a smoother misfit function making it more likely for the FWI procedure to converge to the global minimum. Adding higher frequencies gradually introduces additional detail in the model and ensures the convergence to at least a local minimum close to the global one. Without this approach, the inversion tends to converge into a shallow local minimum far away from the global one.
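The identical treatment of observed waveforms and source time function can be illustrated with a simple zero-phase filter. The sketch below assumes an FFT-based low-pass with a cosine roll-off. The sampling interval, the Ricker wavelet parameters, the delayed copy used as "observed" trace and the list of cutoff frequencies are hypothetical values chosen for illustration.

```python
import numpy as np

def ricker(t, f0, t0):
    """Ricker wavelet with central frequency f0 and time delay t0."""
    arg = (np.pi * f0 * (t - t0)) ** 2
    return (1.0 - 2.0 * arg) * np.exp(-arg)

def lowpass(trace, dt, f_cut, rolloff=0.2):
    """Zero-phase low-pass with a cosine taper from f_cut to (1 + rolloff) * f_cut."""
    spec = np.fft.rfft(trace)
    freqs = np.fft.rfftfreq(len(trace), dt)
    f_stop = (1.0 + rolloff) * f_cut
    taper = np.ones_like(freqs)
    band = (freqs > f_cut) & (freqs < f_stop)
    taper[band] = 0.5 * (1.0 + np.cos(np.pi * (freqs[band] - f_cut) / (f_stop - f_cut)))
    taper[freqs >= f_stop] = 0.0
    return np.fft.irfft(spec * taper, n=len(trace))

dt = 1.0e-4                       # sampling interval in s (hypothetical)
t = np.arange(1000) * dt
source = ricker(t, f0=125.0, t0=0.02)
observed = np.roll(source, 150)   # stand-in for a recorded trace

# Multiscale stages: the same filter is applied to data and source wavelet.
for f_cut in (50.0, 80.0, 125.0):
    observed_f = lowpass(observed, dt, f_cut)
    source_f = lowpass(source, dt, f_cut)
    print(f_cut, np.max(np.abs(observed_f)), np.max(np.abs(source_f)))
```

Filtering both signals in the same way ensures that synthetic and observed waveforms contain the same frequency band at every stage.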

2.2.5.1 FWI in Two Dimensions

A common scenario during tunnel construction is the appearance of fractures in the rock mass in front of the drilling machine. In case of large scale fractures, the location of these fractures is often roughly known from geological surveys. Seismic waves interact with fractures and are partially reflected and partially transmitted at the fracture similar to a material contrast. Nevertheless, the dependency of transmission coefficient, reflection coefficient, and phase shift on the inclination angle differs strongly compared to a material contrast [86]. In this example we first generate synthetic waveforms for a fracture placed ahead of a tunnel interface and investigate the potential of locating the fracture using the inversion process. The synthetic waveforms are calculated using the 2D solver of nexd. The fracture is not directly modeled but described by an implementation of Schoenberg's linear slip concept [104] by Möller & Friederich [72]. In this implementation, the response of the fracture is described by a characteristic frequency, \(\nu\), that is calculated according to

where \(Z_{i}\) are the impedances of the surrounding media and \(\eta\) is the specific compliance, or the inverse of the specific stiffness, of the fracture [72]. According to [86] the reflection coefficient of a fracture is calculated by

where \(\omega\) is the angular frequency of the incident wave, \(\rho\) the density of the surrounding medium, \(v_{j}\) is the P- or S-wave velocity and \(\kappa=\frac{1}{\eta}\) is the specific stiffness of the fracture.
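The relation between specific stiffness and reflection coefficient can be checked with the values used in this example (frequency 500 Hz, density 2200 kg/\(\text{m}^{3}\), P-wave velocity 3000 m/s, target \(R_{\text{p}}=0.2\)). The sketch assumes the normal-incidence magnitude of the linear-slip reflection coefficient for a fracture embedded in a homogeneous medium, \(|R|=1/\sqrt{1+(2\kappa/(\omega\rho v))^{2}}\). This closed form is an assumption for illustration and is not copied from [86].

```python
import math

def reflection_magnitude(kappa, freq, rho, v):
    """|R| of a linear-slip fracture at normal incidence (assumed form)."""
    omega = 2.0 * math.pi * freq
    return 1.0 / math.sqrt(1.0 + (2.0 * kappa / (omega * rho * v)) ** 2)

def stiffness_for_reflection(r_abs, freq, rho, v):
    """Specific stiffness kappa that yields the reflection magnitude r_abs."""
    omega = 2.0 * math.pi * freq
    return 0.5 * omega * rho * v * math.sqrt(1.0 / r_abs ** 2 - 1.0)

# Values of the example: 500 Hz, 2200 kg/m^3, v_p = 3000 m/s, R_p = 0.2.
kappa_p = stiffness_for_reflection(0.2, 500.0, 2200.0, 3000.0)
print(f"kappa_p = {kappa_p / 1e9:.2f} GPa/m")
print(f"|R_p|   = {reflection_magnitude(kappa_p, 500.0, 2200.0, 3000.0):.3f}")
```

Under this assumption the computed P-wave stiffness is about 50.79 GPa/m. The reflection magnitude depends on frequency, so a fracture matches a material contrast only at the chosen frequency.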

In this example, the reflective parameters of the fracture were chosen to emulate a material contrast, where the medium in front of the fracture (closer to the source) has a P-wave velocity of 3000 m/s and an S-wave velocity of 1600 m/s and the medium behind the fracture has a P-wave velocity of 2000 m/s and an S-wave velocity of 1000 m/s. The density is identical in both media at 2200 kg/\(\text{m}^{3}\). An inversion for this fault structure is discussed in detail by A. Lamert in [55] and [57] and will be referenced here for comparison.

Using the above parameters, reflection coefficients \(R_{\text{p}}=0.2\) and \(R_{\text{s}}\approx 0.231\) are obtained for P- and S-waves, respectively. Using these reflection coefficients and a frequency of 500 Hz, the specific stiffnesses for P- and S-waves are calculated as \(\kappa_{\text{p}}\approx\) 50.79 GPa/m and \(\kappa_{\text{s}}\approx\) 23.31 GPa/m, leading to characteristic frequencies of the fracture

Figure 2.4 shows a comparison of the synthetic seismograms for the homogeneous background model without any reflectors and the seismograms calculated for two cases in which different reflectors were added. The first case shows the seismogram for a forward simulation where a fracture with the above parameters is added to the model (Fracture). The second case is a seismogram recorded at the same station in case of a fault (Fault). The seismograms are not identical due to the inherent difference of the reflectors used in the respective simulations. However, just by analyzing these seismograms, no assumption can be made whether the reflector is a fracture or a fault.

Displacement seismogram of the horizontal component recorded at a surface receiver at the center of the simulation domain for the homogeneous model, the model incorporating a fault, and the model incorporating a fracture. The zoom-in panel highlights waves reflected by the fault or the fracture, respectively. The amount and amplitude of reflected waves is comparable for both cases but the seismograms are not equal due to the different phase shifts introduced by the different reflectors. ([55])

Top: Homogeneous velocity model with fracture characterized by a red line (top row), inversion result after 276 iterations (center row), and inversion result for a fault with comparable reflection behavior like the fracture for comparison (bottom row). Bottom: Evolution of the misfit normalized to the misfit value of the starting model with full frequency content. Jumps correspond to an increase of the frequency content. ([55])

Figure 2.5 shows the model for the generation of the synthetic data and the results of the inversion for the fracture and for the fault. The fracture is best recovered in the P-wave velocity model as a thin low velocity zone almost at the exact position of the fracture in the initial mesh. It should be noted that a homogeneous velocity model with a fracture only defined at the element edges is used to produce the synthetic waveforms but during the inversion it is not possible to invert for the fracture itself. Instead, the velocity values of the starting model are changed to represent the data and, thus, will produce an inhomogeneous velocity model without a fracture. The second observation from these results is that even though their parametrization is identical, the results from the inversion differ quite substantially.

From this data alone, no estimation of the true thickness of the fracture can be made, as the resolution of the fracture's thickness highly depends on the frequency of the source wavelet. Nevertheless, using these results, an operator will be able to determine if the reflector is a fracture or a fault and the location of either.

2.2.5.2 FWI in Three Dimensions

This example was originally published as part of the PhD thesis by A. Lamert [55].

To test the capabilities of the inversion procedure on three dimensional data, a synthetic test model is created with 100 m length in \(x\) direction and 60 m length in both \(y\) and \(z\) direction. A cylindrical tunnel with a diameter of 8 m and 21 m overburden extends 25 m into the medium parallel to the \(x\)-axis. The homogeneous background model has elastic properties of \(v_{\text{p}}=3000\) m/s, \(v_{\text{s}}=1700\) m/s, and \(\rho=2000\,\text{kg/m}^{3}\) (see top row of Fig. 2.6). A spherical cave with a radius of 1.5 m is located 2 m above the tunnel line and 20 m in front of the tunnel as first inhomogeneity. The cave is modeled as a void with a free surface. A fault is located 46.5 m ahead of the tunnel where the seismic velocities change to \(v_{\text{p}}\) = 2000 m/s and \(v_{\text{s}}\) = 1100 m/s. The fault is rotated through 24.8° about the \(y\)-axis and afterwards through 20.5° about the \(z\)-axis. A seismic source is located centrally at the tunnel face pointing in the direction of \((1,0,1)\) to illuminate the ground with both P- and S-waves. Receivers are placed at the free surface, the tunnel walls and the tunnel face to record reflected waves as well as possible.

The starting model is a homogeneous model without the cave or the fault but with the tunnel. As elastic parameters, the bed rock properties around the tunnel are used. The mesh of this model consists of 65,747 elements and the simulation needs to be run for 8500 time steps (0.135 s simulation time), ensuring the observation of all reflected waves. With this, the simulation time for a single forward simulation is increased to almost 30 minutes compared to a couple of minutes for the 2D examples. However, the chosen size of the elements only allows the use of a Ricker wavelet with a central frequency of 125 Hz, leading to a spatial resolution 4 times lower than in the 2D examples. Increasing the frequency would lead to excessive computational times since the computational time grows with the fourth power of the frequency.
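The consequence of the fourth-power scaling can be made concrete with a short calculation. The sketch below takes the roughly 30 minutes per forward simulation at 125 Hz from the text as the base value and assumes that the scaling law holds exactly. The function name and the selected frequencies are illustrative.

```python
def scaled_runtime(base_minutes, base_freq, new_freq, exponent=4):
    """Runtime estimate assuming cost proportional to frequency**exponent."""
    return base_minutes * (new_freq / base_freq) ** exponent

for freq in (125.0, 250.0, 500.0):
    minutes = scaled_runtime(30.0, 125.0, freq)
    print(f"{freq:5.0f} Hz: {minutes / 60.0:7.1f} h per forward simulation")
```

Doubling the central frequency raises the estimate from 0.5 h to 8 h per forward simulation, and a factor of four in frequency leads to 128 h.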

Real model (top) and inversion result after 200 iterations from three different perspectives. All panels show the positions of the two obstacles: a fault illustrated as plane and a cavern illustrated as sphere. Velocity values close to the homogeneous background model are made transparent to show clouds of inhomogeneities. Receivers are represented by grey dots, the source at the tunnel face is shown in red. ([55])

The inversion starts with a cutoff frequency of 50 Hz, increasing stepwise to the maximum frequency of the Ricker wavelet during the inversion. Figure 2.6 shows the final inversion result after 200 iterations from different perspectives. The color scale is chosen such that a small band close to velocity values of the homogeneous starting model is completely transparent. From both edges of the transparent velocity band, the transparency decreases to the maximum and minimum values. Thus, clouds of higher and lower wave velocities compared to the bed rock become visible. The true positions of the fault and the cave are also depicted in the inversion result by a plane and a sphere, respectively.

Compared to the 2D fault example presented in [55], many more small scale inhomogeneities are created but the fault is still clearly visible. The strongest signal of the fault is created on the left hand side of the tunnel axis for the P-wave velocity model (around the coordinate (0 m, 20 m, 0 m)) since waves from this area are directly reflected back to the receivers. The strongest signal for the S-wave velocity model appears at the top (around the coordinate (35 m, 0 m, 25 m)). With the velocity values increasing in front of the fault and decreasing behind it, a change at the fault from higher to lower wave velocities is obvious. However, determining the unknown velocity value behind the fault is only possible with high uncertainties since the reconstructed values vary strongly in the region where the fault is located. Additionally, the same effect as in the 2D example appears related to the distance between tunnel and fault. Due to the increase of the wave velocity in front of the tunnel, the fault plane is shifted to slightly higher distances from the tunnel. The lower part of the fault appears curved. This is probably caused by the fact that waves from here are mainly reflected to the receivers within the tunnel and only a small portion is recorded by receivers at the surface. Thus, considering only the receivers within the tunnel, a small aperture results. By using higher frequencies within the source signal, it can be expected that most of the small inhomogeneities will disappear and a clear picture of the fault is reconstructed similar to the 2D example. However, the provided computer would need several months for such a calculation.

The size of the cavern is chosen to be slightly larger than the shortest wavelength and is comparable to the size of the mesh elements, as necessary for a stable simulation of the highest frequencies. Considering the inversion result for the P-wave velocity, the cavern appears as a small cloud with decreased wave velocity values not significantly smaller compared to the inhomogeneities around it. Within the S-wave velocity model, a small cloud with clearly decreased velocity values can be seen, however, with almost double the radius of the real cave. The velocity values do not decrease to very small values and thus do not give a clear indication of the presence of a cavern in that area. Using a source signal containing higher frequencies would be necessary to obtain a clear picture with more reliable velocity values. However, the example clearly shows the potential of the FWI procedure to reconstruct large and small scale objects also in three dimensions. The problems of the computational effort can be expected to get less relevant in the future with increasing computer power.

2.2.6 Adjoint Frequency Domain Full Waveform Inversion

The wave modeling and the application of FWI is more illustrative in the time domain because an accurate selection of the considered period of time and an easy identification of emerging amplitudes in the seismic records is feasible. However, the non-linearity of the inversion is increased by this abundance of information. A separate analysis of single frequency groups by a frequency domain approach allows a mitigation of the non-linearity [1] by an intuitive application of a multi-scale approach where the used frequencies are gradually increased during the inversion scheme.

Frequency domain approaches of FWI have already been studied for other applications like the inversion of seismic records of a cross-hole experiment [85] or the inversion of tomographic seismic data of a physical scale model [84].

The complex-valued frequency domain elastic wave equation

is obtained by a Fourier transformation of the time domain’s counterpart in Eq. 2.2. The complex-valued wave field \(\mathbf{u}(\mathbf{x},\omega)\) can be solved for an angular frequency \(\omega\) and the complex-valued excitation force \(\mathbf{f}(\mathbf{x},\omega)\).

An application of numerical schemes to solve Eq. 2.21 leads to a linear system of equations. Therefore, the initial factorization of the system matrix enables the computation of additional wave fields of the current ground model and the same angular frequency for other source excitations by a comparatively low increase of the computational effort. This makes the frequency domain approach favorable in terms of computational efficiency for applications with a high number of sources.
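The benefit of one factorization for several sources can be illustrated with a strongly simplified model problem. The sketch below is not the elastic finite element scheme of this section: it assumes a one-dimensional scalar Helmholtz equation discretized by finite differences, a small imaginary part of the angular frequency as damping, and hypothetical grid and source positions. `numpy.linalg.solve` factorizes the system matrix once and solves for all right-hand sides passed as columns.

```python
import numpy as np

n, h = 200, 0.5                  # number of grid points, spacing in m (hypothetical)
c = 3000.0                       # wave velocity in m/s
omega = 2.0 * np.pi * 500.0 * (1.0 + 0.02j)  # imaginary part acts as damping

# System matrix of -u'' - (omega / c)^2 u = f with zero boundary values.
k2 = (omega / c) ** 2
A = (np.diag(np.full(n, 2.0 / h**2 - k2))
     + np.diag(np.full(n - 1, -1.0 / h**2), 1)
     + np.diag(np.full(n - 1, -1.0 / h**2), -1))

# One right-hand side column per source position.
source_nodes = [20, 100, 180]
F = np.zeros((n, len(source_nodes)), dtype=complex)
for col, node in enumerate(source_nodes):
    F[node, col] = 1.0

# A single call: one factorization, one substitution per source.
U = np.linalg.solve(A, F)
print(U.shape)
```

For large models, a sparse direct solver that stores the factors would typically be used instead, so that adjoint sources can be solved later with the same factorization.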

In time domain modeling, an increase of the discretization level of the ground model for modeling higher frequencies brings a need for a decrease of the time increment to ensure numerical stability. This dilemma is avoided by frequency domain modeling and therefore, the choice of the applied numerical scheme is not limited by this problem. Nevertheless, finite element approaches are favorable because the Neumann boundary conditions at the free surfaces, namely the Earth’s surface and the tunnel walls, which act as reflecting surfaces, are fulfilled implicitly.

A major drawback of the frequency domain modeling is that the artificial borders of the considered computational domain have to be treated very carefully. If no high attenuation effects occur within the subsurface domain, the reflections from the artificial borders contaminate the whole wave field. In time domain modeling only the later points in time are affected by these reflections which can be simply neglected by a reduction of the considered time interval.

An efficient way to suppress these erroneous reflections is an application of small absorbing boundary layers at the artificial borders of the computational domain. The so-called perfectly matched layer method enables a smooth absorption of escaping waves by a coordinate stretching into the imaginary plane [9]. For shallow subsurface models like in the considered tunnel models, an application of convolutional perfectly matched layers is recommended [25].

The frequency domain Green’s functions \(\mathbf{g}(\mathbf{x},\omega)\), which are the system response to an impulse excitation, are illustrated in a shallow two-dimensional tunnel environment for excitation by a horizontal single force which is located at the front tunnel face in Fig. 2.7 for a frequency of 500 Hz. An absorption of the elastic waves within the perfectly matched layers (dashed regions) is observed. Even though a homogeneous ground model was used, it is not recognizable by the wave field whether there is a disturbance in front of the tunnel face or not.

Real part of the Green’s functions \(\operatorname{Re}(\mathbf{g})\) in \(x\)- (left) and \(y\)-direction (right) in a two-dimensional tunnel environment for a frequency of 500 Hz. Free surfaces are located at the Earth’s surface and around the tunnel. The origins of the perfectly matched layers are indicated by the dashed lines. The illustrated ground model has a homogeneous P-wave velocity \(v_{\text{p}}=3800\) m/s, S-wave velocity \(v_{\text{s}}=2200\) m/s and density \(\rho=2400\) kg/m\({}^{3}\). The applied source is a horizontal single force

Since the wave field is only solved for a limited number of currently investigated frequencies of the \(i^{\text{th}}\) frequency group the proposed misfit function of Eq. 2.3 is adapted to

The number of frequencies within the current frequency group is denoted by \(F_{i}\) whereas \((\cdot)^{\ast}\) is the complex conjugate. The complex displacements of the reference seismic records \(\bar{u}_{r}^{s}(\omega_{f})\) are calculated by a discrete Fourier transformation. A preservation of low frequency features is guaranteed by combining the increasing frequencies with low frequencies from former iteration steps.
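A possible evaluation of such a frequency-group misfit is sketched below. Since the misfit equation is not reproduced here, the factor one half, the absence of weights and the sign convention of the discrete Fourier transformation are assumptions. The array layout (frequency, source, receiver) and all names are hypothetical.

```python
import numpy as np

def spectrum_at(trace, dt, freqs):
    """Discrete Fourier transformation of a time trace at selected frequencies."""
    t = np.arange(len(trace)) * dt
    return np.array([np.sum(trace * np.exp(-2j * np.pi * f * t)) * dt
                     for f in freqs])

def frequency_misfit(u_syn, u_ref):
    """Half the sum of (u - u_ref) * conj(u - u_ref) over all entries."""
    residual = u_syn - u_ref
    return 0.5 * np.real(np.sum(residual * np.conj(residual)))

# Reference record: a cosine of 100 Hz sampled over exactly ten periods.
dt = 1.0e-4
t = np.arange(1000) * dt
record = np.cos(2.0 * np.pi * 100.0 * t)
u_ref_100 = spectrum_at(record, dt, [100.0])[0]
print(u_ref_100)

# Misfit for a group of two frequencies, one source and three receivers.
u_ref = np.zeros((2, 1, 3), dtype=complex)
u_syn = (1.0 + 1.0j) * np.ones((2, 1, 3))
print(frequency_misfit(u_syn, u_ref))
```

The misfit is real-valued although the displacements are complex, because each residual is multiplied by its complex conjugate.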

The discrete adjoint gradient of the misfit function is calculated by differentiating the misfit in Eq. 2.22 with respect to the discretized material properties (e.g. discretized within single elements or at every node). For computing the gradient, the product rule has to be applied and the derivative of the displacement with respect to the discretized material properties is evaluated by using the corresponding derivative of the numerical representation of Eq. 2.21. The resulting system of equations can be simplified by calculating the adjoint wave field, using the negative derivative of the misfit function with respect to the displacements at the receiver stations as adjoint source [26]. The computation of the adjoint wave field in the frequency domain requires only little additional computational effort since the already factorized system matrix can be reused.

For minimizing Eq. 2.22 by changing the material properties of the current ground model, different techniques such as the steepest descent method, the conjugate gradient method or the L-BFGS method [81] can be applied to find a suitable search direction. The step length can be evaluated by heuristic schemes or by e.g. calculating the minimum of a quadratic approximation of the misfit function with three points [107]. Singularities occur for the wave field at the sources as well as for the adjoint wave field at the receivers. Therefore, the gradient has disproportionately high changes at these positions [83], which makes an exploration of the ground in front of the tunnel more difficult. By a preconditioning of the gradient, e.g. setting the gradient equal to zero around the sources and receivers, the negative impact of this effect on the inversion scheme might be reduced.
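The simplest form of such a preconditioning is a mask that sets the gradient to zero within a given distance of every source and receiver. The sketch below assumes a nodal discretization of the gradient in two dimensions. The radius, the coordinates and the function name are hypothetical.

```python
import numpy as np

def precondition_gradient(gradient, node_xy, points_xy, radius):
    """Set the gradient to zero within `radius` of any source or receiver."""
    node_xy = np.asarray(node_xy, dtype=float)
    points_xy = np.asarray(points_xy, dtype=float)
    # Distance of every node to every source/receiver: (n_nodes, n_points).
    dist = np.linalg.norm(node_xy[:, None, :] - points_xy[None, :, :], axis=2)
    keep = dist.min(axis=1) > radius
    return np.where(keep, gradient, 0.0)

nodes = [(0.0, 0.0), (1.0, 0.0), (5.0, 0.0)]   # nodal coordinates in m
stations = [(0.0, 0.0)]                        # source and receiver positions
gradient = np.array([1.0, 2.0, 3.0])
masked = precondition_gradient(gradient, nodes, stations, radius=2.0)
print(masked)
```

A smooth taper instead of a hard cutoff would be an alternative design, at the cost of one more parameter to choose.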

A separation of the source function into a spatially dependent impulse part \(\boldsymbol{\delta}(\mathbf{x}-\mathbf{s})\), which is equal to zero everywhere except at the considered source position \(\mathbf{s}\), and a frequency dependent part \(h(\omega)\) is straightforward in the frequency domain,

By neglecting the frequency dependent part \(h(\omega)\) for the forward wave modeling, the Green’s functions \(\mathbf{g}(\mathbf{x},\omega)\) are calculated. The displacement at the receiver station can be calculated from the Green’s functions by a multiplication with the frequency dependent source function, which corresponds to a convolution in the time domain,

Under the assumption that the transfer function between the ground and a receiver \(r\) is approximately equal for all receivers \(N_{\text{r}}\), a simultaneous approximation of the source signatures during every iteration of the inversion is possible. By setting the derivative of Eq. 2.22 with respect to the source function \(h^{s}(\omega)\) equal to zero to satisfy the necessary condition for a minimum [84], the source function is approximated by

The calculated source function depends on the current ground model \(\mathbf{m}\). Therefore, the source function is approximated for every iteration of a frequency group. Since calculating the displacements with Eq. 2.24 is only a linear operation, the already calculated Green’s functions can be reused and no additional forward simulations are performed.
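The source approximation can be sketched as a linear least-squares problem per frequency. The sketch assumes that the misfit is the plain sum of squared residuals between \(g_{r}h\) and the reference displacements over all receivers, in which case the minimizer is the ratio of \(\sum_{r}g_{r}^{\ast}\bar{u}_{r}\) and \(\sum_{r}g_{r}^{\ast}g_{r}\). The random Green's functions and the source values in the example are hypothetical test data.

```python
import numpy as np

def estimate_source(g, u_ref):
    """Least-squares source function per frequency.

    g, u_ref: complex arrays of shape (n_frequencies, n_receivers) holding the
    Green's functions of the current model and the reference displacements.
    """
    numerator = np.sum(np.conj(g) * u_ref, axis=1)
    denominator = np.sum(np.abs(g) ** 2, axis=1)
    return numerator / denominator

rng = np.random.default_rng(0)
g = rng.normal(size=(3, 8)) + 1j * rng.normal(size=(3, 8))
h_true = np.array([1.0 + 0.5j, -2.0j, 0.3])
u_ref = g * h_true[:, None]          # records generated with the true source

h_est = estimate_source(g, u_ref)
print(h_est)
```

In this test the Green's functions belong to the true model, so the source function is recovered exactly. During an inversion the current ground model differs from the true one and the estimate is only approximate.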

If the assumptions made are valid, the frequency domain approach enables an inversion scheme in which no information of the source signature is needed in advance. This might be a great advantage since a prior estimation of the source function is cumbersome.

Results of a full waveform inversion with a frequency domain model for a two-dimensional tunnel environment with a different number and position of sources (triangles) and receivers (squares). The P-wave velocity distribution is on the left side and the S-wave velocity distribution is illustrated on the right side. In the first row the wave velocity distribution of the reference model is illustrated. The shapes of the three disturbances are indicated within the inversion results

In Fig. 2.8, the inversion results for a two-dimensional tunnel environment are illustrated, where different numbers and locations of sources and receivers are used. The resulting P-wave velocity \(v_{\text{p}}\) is shown on the left side and the S-wave velocity \(v_{\text{s}}\) on the right side. The ambient P-wave velocity is \(v_{\text{p}}=3800\) m/s and the ambient S-wave velocity is \(v_{\text{s}}=2200\) m/s, whereas the density is \(\rho=2400\) kg\(/\)m\({}^{3}\) and is assumed to be constant. The reference ground model, which is illustrated on top of Fig. 2.8, contains three disturbances in front of the tunnel track with different shapes and elastic properties. Free surfaces are applied at the Earth’s surface and at the tunnel, whereas the absorbing boundary layers are illustrated by the dashed regions.

For the computation of the wave fields, a finite element approach is used, where hierarchical higher-order shape functions [108] are employed for efficiently increasing the accuracy of the numerical scheme for higher frequencies (which lead to shorter wavelengths) by just adding additional shape functions without changing the initial mesh. For the illustrated application, the ground properties are discretized in the nodes. The used synthetic reference displacements are calculated with the same approach in advance to the several frequency groups. As reference source functions \(\bar{h}^{s}\) Ricker wavelets with a peak frequency of 500 Hz, without a time delay and a scaling factor of \(10^{6}\) are used. A simultaneous approximation of the source function by Eq. 2.25 is applied for all results in Fig. 2.8. For the first eight frequency groups only one frequency is employed. The other thirty frequency groups contain a combination of an increased frequency and a lower frequency. The inversion is performed by using 12 iterations for each frequency group. For all four examples, the displacements in both spatial directions are measured at the indicated receivers.
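The structure of the frequency groups and the resulting number of iterations can be written down compactly. In the sketch below, only the counts (eight single-frequency groups, thirty combined groups, 12 iterations per group) are taken from the text. The frequency values and the rule for selecting the lower frequency of a combined group are hypothetical.

```python
def build_frequency_groups(freqs, n_single, low_offset):
    """Single-frequency groups first, then pairs of a lower and a higher frequency."""
    groups = []
    for i, f in enumerate(freqs):
        if i < n_single:
            groups.append((f,))
        else:
            # Hypothetical pairing rule: reuse the frequency `low_offset` groups back.
            groups.append((freqs[i - low_offset], f))
    return groups

freqs = [25.0 * (i + 1) for i in range(38)]   # hypothetical frequencies in Hz
groups = build_frequency_groups(freqs, n_single=8, low_offset=8)
total_iterations = 12 * len(groups)
print(len(groups), total_iterations)
```

With 38 frequency groups and 12 iterations each, the inversion comprises 456 iterations in total.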

The used source and receiver configuration of the first example (second row of Fig. 2.8) is likely to be used for today’s exploration approaches in mechanized tunneling. Only one source is positioned in the middle of the tunnel face and the receivers are located at the tunnel face as well as at the tunnel walls. The resulting wave velocities allow only a prediction of the position of the first disturbance but neither its shape nor its actual elastic properties are quantifiable accurately. Furthermore, spurious fluctuations of the wave velocities occur which make an unambiguous prediction difficult. For the second example (third row of Fig. 2.8), a source is added at the Earth’s surface 55 m in front of the tunnel. Therefore, not only reflected waves are analyzed but also directly refracted waves of the second source are captured. The positions of all three disturbances are hinted at by the resulting wave velocities, especially by the S-wave velocity. However, only the shapes and properties of the first two disturbances can be predicted by the S-wave velocity. For the third example (fourth row of Fig. 2.8), only nine evenly distributed receivers are added at the Earth’s surface in comparison to the first example. The resulting wave velocities enable a good prediction of the position and the properties of all three disturbances whereas only the shapes of the first two obstacles are predictable in an accurate way. A rapid increase followed by a sudden decrease of the wave velocities occurs at the borders of the third disturbance, which produces a comparable reflection behavior since the gradient of the ground properties is nearly the same as the gradient of the reference ground model. This ambiguity of the seismic records has to be taken into account for evaluating the results of the full waveform inversion. The fourth example (fifth row of Fig. 2.8) combines the additional source of the second example as well as the additional receivers of the third example. An accurate prediction of the position, shape and properties of the disturbances is enabled on the basis of the inversion results.

It is observed that adding sources and receivers at the Earth’s surface improves the prediction of geological changes in front of the tunnel face. The application of sources and receivers at the Earth’s surface may not be possible in urban areas due to restrictions on the access to the surface. However, as illustrated by the first example, a prediction of the first reflection feature in front of the tunnel is already possible by only using sources and receivers at the tunnel.

As already announced, a simultaneous approximation of the source signature was used. A comparison of the real part of the reference and the approximated source signature of the first example is illustrated in Fig. 2.9. Only the reference and the approximated value of the highest frequency of the frequency groups is displayed.

The change of the real part of reference \(\bar{h}^{s}\) and approximated source signature \(h^{s}\) for the highest frequency of a frequency group \(\omega_{f,\text{max}}\) of the first full waveform inversion example (second row of Fig. 2.8) during the several iterations is displayed

A sufficient approximation of the source function is obtained which enables a full waveform inversion scheme without prior knowledge of the source signature.

Since the curvature of the tunnel as well as other effects are not considered by a two-dimensional model, more realistic examples have to be investigated by using three-dimensional tunnel models. Therefore, the proposed inversion scheme is tested on a shallow tunnel domain where the reference ground model includes a spherical disturbance in front of the tunnel track. The ambient wave velocities are \(v_{\text{p}}\) = 4000 m/s and \(v_{\text{s}}\) = 2400 m/s, whereas the properties of the sphere are \(v_{\text{p},\text{sphere}}\) = 2800 m/s and \(v_{\text{s},\text{sphere}}\) = 1700 m/s. The density is assumed to be constant with \(\rho\) = 2500 kg/m\({}^{3}\). The tunnel has a diameter of 8 m and its center is located 18 m below the Earth’s surface. The sphere has a radius of 8 m and its center is on the level of the tunnel’s center with a distance of 25 m to the front tunnel face. The FWI is started again with a homogeneous ground model.

Since the computational demand of the inversion scheme is still very high for three-dimensional problems, only 16 frequency groups are used, where the first nine groups contain only single frequencies and the other seven groups combine two frequencies within a group. The frequencies are only increased to \(f\) = 381.97 Hz because the wave field becomes more complex for higher frequencies and therefore a finer discretization would be needed. For the used disturbance size, however, this frequency range is sufficient.

The full waveform inversion is performed for two different configurations of sources and receivers. The first configuration only employs a source in orthogonal direction at the center of the tunnel face as well as only receivers at the tunnel face and walls. The second configuration uses additional sources and receivers at the Earth’s surface. The receivers record the displacements in all three spatial directions. All sources use a Ricker wavelet with a peak frequency of 500 Hz, without a time delay and a scaling factor of \(10^{6}\). The resulting wave velocity distributions are illustrated in Fig. 2.10, where the source and receiver positions as well as the position of the disturbance are indicated, too.

P-wave velocity \(v_{\text{p}}\) (left) and S-wave velocity \(v_{\text{s}}\) distribution (right) of the full waveform inversion results of a three-dimensional shallow tunnel domain for two different configurations of sources (red spheres) and receivers (blue spheres). The position of the spherical disturbance of the reference model is indicated by grid lines

For the first source-receiver configuration, the S-wave velocity field allows a prediction of the front face of the sphere, whereas the P-wave velocity field only provides spurious fluctuations directly in front of the area where the gradient is preconditioned (around the sources and receivers). Nevertheless, these results suggest that a disturbance with decreased wave velocities might be located in front of the tunnel. The second source-receiver configuration leads to improved inversion results. The S-wave velocity distribution gives an accurate representation of the position, shape and S-wave velocity of the sphere, whereas the P-wave velocity field only gives a blurred image of the sphere, although its position and the trend of the P-wave velocity are identifiable. Additional frequency groups containing higher frequencies would improve the representation of the sphere in the reconstructed P-wave velocity field and would thus allow a more precise prediction of the disturbance.

The three-dimensional results demonstrate that the two-dimensional results give good insight into the influence of the different source-receiver configurations, and that seismic reconnaissance via full waveform inversion with an adjoint frequency domain approach provides promising results for synthetic seismic records.

2.2.7 Validation with Small-Scale Laser Experiment

In order to validate the FWI methods with real data, a small-scale laser experiment is set up with which ultrasonic measurements can be acquired. The validation with ultrasonic data brings a significant gain compared to the validation with synthetically generated data since noise as well as measurement errors are naturally included. Furthermore, modeling errors occur when setting up the forward model. All of these error types also occur in field scenarios, but not in synthetic tests, and therefore the robustness of the FWI methods against these error types can be tested with the laboratory data. Rich field data are difficult to obtain, while in the small-scale experiment a broad variety of scenarios can be tested in order to prepare the methods for a later in-situ application. A picture of the experiment with labeled components is provided in Fig. 2.11, while a sketch of the measurement chain of the experiment is illustrated in Fig. 2.12.

The source signal is specified on the computer and forwarded to a function generator and then to a power amplifier. At the ultrasonic transducer, the electrical signal is converted into a mechanical waveform. The transducer applies a force to the specimen and thus initiates a seismic wave which propagates through the specimen. A laser interferometer enables contactless recording of the seismic data, where a positioning system allows measurements at various points along predefined lines or areas. The recorded signal is forwarded to the computer for data acquisition. In order to minimize vibrational influences from the environment, the setup is placed on an optical table.

Intensive preliminary investigations are conducted with the aim of maximizing the repeatability of the measurements as well as their signal-to-noise ratios [114]. Firstly, it is found that stacking of single measurements is necessary in order to achieve a repeatable high-quality signal, where a minimum of 100 stacked measurements is recommended. Secondly, it is shown that attaching aluminum tape to the specimen’s surface is highly beneficial when measuring on porous material, since it improves the reflection of the laser signal. With these methods, a signal-to-noise ratio of 4322 (or 36.4 dB) could be achieved for 100 stacked measurements acquired on a concrete specimen with aluminum tape, whereas the signal-to-noise ratio of a single measurement without aluminum tape amounted to only 11.3 (or 10.6 dB).
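The effect of stacking can be illustrated with a small synthetic sketch. It assumes that the signal-to-noise ratio is a power ratio converted with \(10\log_{10}\), which is consistent with 4322 corresponding to 36.4 dB, and that the noise is uncorrelated between repetitions. The sinusoidal test signal and the noise level are arbitrary stand-ins and do not represent the laboratory data.

```python
import numpy as np


def snr_db(snr_power_ratio):
    """Convert a power signal-to-noise ratio to decibels."""
    return 10.0 * np.log10(snr_power_ratio)


def stack(traces):
    """Average repeated measurements (one per row) into a single stacked trace."""
    return np.mean(np.asarray(traces, dtype=float), axis=0)


def power_snr(trace, clean):
    """Power ratio of the clean signal to the residual noise contained in a trace."""
    noise = trace - clean
    return np.sum(clean**2) / np.sum(noise**2)


rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 2000)
clean = np.sin(2.0 * np.pi * 5.0 * t)                   # synthetic stand-in signal
traces = clean + rng.normal(0.0, 1.0, (100, t.size))    # 100 noisy repetitions

single = power_snr(traces[0], clean)
stacked = power_snr(stack(traces), clean)
print(f"single trace: {snr_db(single):.1f} dB")
print(f"100 stacked:  {snr_db(stacked):.1f} dB")
```

Under these assumptions, averaging 100 repetitions raises the power signal-to-noise ratio by a factor of roughly 100, i.e. by about 20 dB. The remaining improvement reported above would then be attributable to the aluminum tape.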

2.2.7.1 Hole Imaging in an Aluminum Block

A first validation of the methods is performed with ultrasonic data acquired on an aluminum block which contains a drilled hole. Since the assumptions of homogeneity and isotropy hold to a large extent for aluminum, simulation results may be expected to be closer to the measurement than for rock materials. Therefore, modeling strategies such as the estimation of the material properties and the transducer’s source function can be developed more easily. More information on the experiment, the forward modeling and UHSA may be found in [114, 117]; more information on the time domain adjoint solution may be found in [55].

The aluminum block with its drilled hole is shown in Fig. 2.13, with a photo of the measurement setup on the left and an illustration on the right. A coordinate system is placed at the center of mass of the ideal block as shown in Fig. 2.13, right. The outer dimensions of the aluminum block are 200.4 mm \(\times\) 103 mm \(\times\) 100 mm, where a hole of 16 mm diameter is drilled through the entire \(y\)-dimension at \((-10,y,20)\) mm. The green dots illustrate the points of laser measurement, and the red cylinder visualizes the shape of the ultrasonic transducer. The source function which is sent to the transducer is a Ricker wavelet with a central frequency of 300 kHz. In order to determine the material properties of the specimen and the transducer’s source function, a second, undisturbed aluminum block with the same outer dimensions is used, which is illustrated in Fig. 2.14 (analogous to the illustration in Fig. 2.13). Here, data are acquired at a single point only, on the side opposite the transducer as illustrated. At all of the above-mentioned points of measurement, 200 single measurements are stacked in order to increase the signal quality. Offsets of the records are removed by subtracting the average amplitude before signal arrival. Bandpass filters with cutoff frequencies of 50 and 800 kHz are applied to each trace.
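The two preprocessing steps, offset removal and bandpass filtering, can be sketched as follows. The filter type is not specified in the text, so a simple brick-wall filter in the frequency domain is used here as a stand-in; the sampling interval, the arrival time and the synthetic trace are likewise assumptions made only for illustration.

```python
import numpy as np


def remove_offset(trace, dt, arrival_time):
    """Subtract the mean amplitude of the samples recorded before the first arrival."""
    trace = np.asarray(trace, dtype=float)
    n_pre = max(int(round(arrival_time / dt)), 1)
    return trace - trace[:n_pre].mean()


def bandpass_fft(trace, dt, f_low, f_high):
    """Zero all spectral components outside [f_low, f_high] (brick-wall stand-in)."""
    spectrum = np.fft.rfft(trace)
    freqs = np.fft.rfftfreq(len(trace), d=dt)
    spectrum[(freqs < f_low) | (freqs > f_high)] = 0.0
    return np.fft.irfft(spectrum, n=len(trace))


# Synthetic stand-in trace: 300 kHz oscillation arriving at 20 microseconds
# on top of a constant offset.
dt = 1.0e-7                                   # assumed sampling interval (10 MHz)
t = np.arange(2000) * dt
burst = np.where(t >= 2.0e-5, np.sin(2.0 * np.pi * 3.0e5 * (t - 2.0e-5)), 0.0)
raw = burst + 0.4                             # constant offset to be removed

centered = remove_offset(raw, dt, arrival_time=2.0e-5)
filtered = bandpass_fft(centered, dt, f_low=5.0e4, f_high=8.0e5)
```

A brick-wall filter can introduce ringing, so a tapered or Butterworth-type filter would usually be preferred in practice.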

Before an inversion scenario can be set up, a forward model needs to be constructed. In order to estimate the material properties and the transducer’s source signature, a model corresponding to the setup shown in Fig. 2.14 is set up. Dimensions are carefully measured and a brick is modeled and meshed, where all surfaces are defined as free boundaries. The material behavior is assumed to be elastic and therefore the material properties reduce to density \(\rho\), compressional wave velocity \(v_{\text{p}}\), and shear wave velocity \(v_{\text{s}}\). Density \(\rho\) is determined and implemented into the model. With the spectral-element code specfem3D [50], numerical solutions are computed for a grid of combinations of \(v_{\text{p}}\) and \(v_{\text{s}}\), where an ideal Ricker wavelet is used as source function. By comparison with the acquired measurement, the misfit functionals are determined and the wave velocity combination corresponding to the smallest misfit value is picked, which is \(v_{\text{p}}\) = 6340 m/s and \(v_{\text{s}}\) = 3110 m/s. In a next step, the transducer’s source function is estimated. If it is assumed that the numerical model captures all properties of the experimental model (e.g. in terms of geometry and material properties), the source function in frequency domain \(R_{\text{exp}}(\omega)\) can be estimated as [117]

\[R_{\text{exp}}(\omega)=R_{\text{syn}}(\omega)\,\frac{S_{\text{exp}}(\omega)}{S_{\text{syn}}(\omega)},\]

where \(R_{\text{syn}}(\omega)\) is the ideal (Ricker) source function, \(S_{\text{exp}}(\omega)\) the acquired waveform, and \(S_{\text{syn}}(\omega)\) the simulated waveform in frequency domain for the angular frequency \(\omega\).
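The source estimation can be sketched as a spectral division, assuming the relation \(R_{\text{exp}}(\omega)=R_{\text{syn}}(\omega)\,S_{\text{exp}}(\omega)/S_{\text{syn}}(\omega)\), which follows if the experimental and the numerical model share the same transfer function. The water-level stabilization of the division, the function names and the synthetic test signals below are assumptions of this sketch and are not taken from [117].

```python
import numpy as np


def estimate_source(r_syn, s_exp, s_syn, water_level=1.0e-4):
    """Estimate the source time function by stabilized spectral division.

    Evaluates R_exp = R_syn * S_exp / S_syn in the frequency domain. Spectral
    components where |S_syn|^2 falls below water_level * max(|S_syn|^2) are
    damped instead of divided (an assumption of this sketch).
    """
    n = len(r_syn)
    R_syn, S_exp, S_syn = (np.fft.rfft(x) for x in (r_syn, s_exp, s_syn))
    power = np.abs(S_syn) ** 2
    floor = water_level * power.max()
    R_exp = R_syn * S_exp * np.conj(S_syn) / np.maximum(power, floor)
    return np.fft.irfft(R_exp, n=n)


def ricker(t, peak_frequency, delay):
    """Textbook Ricker wavelet, used here only to build test signals."""
    a = (np.pi * peak_frequency * (t - delay)) ** 2
    return (1.0 - 2.0 * a) * np.exp(-a)


def convolve(a, b):
    """Circular convolution via FFT, standing in for wave propagation."""
    return np.fft.irfft(np.fft.rfft(a) * np.fft.rfft(b), n=len(a))


# Synthetic check: a hypothetical medium response with two arrivals.
n, dt = 1024, 1.0e-7
t = np.arange(n) * dt
medium = np.zeros(n)
medium[50], medium[120] = 1.0, 0.3

r_syn = ricker(t, 3.0e5, 1.0e-5)             # ideal source used in the simulation
r_true = 0.7 * ricker(t, 2.4e5, 1.2e-5)      # "unknown" transducer signature
s_syn = convolve(medium, r_syn)              # simulated waveform
s_exp = convolve(medium, r_true)             # stand-in for the measurement

r_est = estimate_source(r_syn, s_exp, s_syn)
rel_error = np.linalg.norm(r_est - r_true) / np.linalg.norm(r_true)
```

In this noise-free test the estimated signature closely reproduces the hidden one. With measured data, the stabilization parameter would have to be chosen with care, since frequencies outside the band of the ideal source cannot be recovered.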

Visualization of the acquired measurement, the synthetic response to an ideal Ricker source function, and the synthetic response to the estimated source signature shown in Fig. 2.15. The waveforms are normalized with their second local extremum

Figure 2.15 shows the estimated source function after transformation to time domain compared to the ideal Ricker source function. With the estimated waveform as source function, a new simulation is set up, where the resulting waveform is plotted in Fig. 2.16 (green), together with the measurement (black) and the simulated waveform generated with the ideal Ricker wavelet (gray dotted). It becomes visible that the agreement with the measurement improves substantially compared to the prior estimation. The high correlation is a sign of well-fitting material properties and a well-estimated source signature, which form the basis for the upcoming inversion scenarios.

UHSA inversion

In this section, UHSA is applied to the measurement data (setup shown in Fig. 2.13), where the previously determined material properties and the estimated source signature are used. Since UHSA is based on the implementation of prior knowledge, a parametrization needs to be derived. Here, it is selected to consist of just the two center coordinates \((x,z)\) with respect to the coordinate system in Fig. 2.13, right, where the corresponding hole extends through the whole specimen in the \(y\)-direction. The diameter of the hole is considered to be known. This parametrization with only two parameters has the advantage that a relatively fast computation and a visualization of the misfit functional are possible. The time window of investigation is set to \(8.8\times 10^{-5}\) s since, according to Fig. 2.16, a high agreement of experimental and synthetic waveforms is observed during this time. In a first step, a misfit landscape is computed by linear interpolation of 1024 sampled parameter configurations and visualized in Fig. 2.17.

The abscissa corresponds to the \(x\)-axis in Fig. 2.13, while the ordinate corresponds to the \(z\)-axis. Several local minima are visible; however, there is a distinct global minimum around the actual center of the hole at \((x,z)=(-10,20)\) mm. UHSA is set up for 40 cycles, where the course of the algorithm is drawn onto the misfit landscape in Fig. 2.17. It is visible that the SA algorithm preferentially accepts parameter configurations with smaller misfit functionals, as many SA samples lying in the red regions are rejected. If an SA proposal is accepted, the UKF samples different parameter configurations with the aim of moving in the direction of the neighboring local minimum, which succeeds in most cases. The global minimum region is intensively explored and the parameter configuration with the lowest misfit functional is found at \((x,z)=(-8.86,19.17)\) mm, which is close to the expected global minimum. The entire inversion requires 460 forward simulations within 55 hours of computation time on a 26-core computer with 2.4 GHz each and 96 GB RAM.
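The acceptance behavior of the SA part can be illustrated with a minimal sketch of the Metropolis rule on a toy misfit. Only the global SA proposals are shown; the local UKF refinement that UHSA performs after each accepted proposal is deliberately omitted. The misfit function, the cooling schedule, the step size and the starting point are hypothetical choices and are not taken from the experiment.

```python
import math
import random


def toy_misfit(x, z):
    """Hypothetical multimodal misfit with its global minimum (value 0) at (-10, 20) mm."""
    bowl = ((x + 10.0) ** 2 + (z - 20.0) ** 2) / 2000.0
    ripples = 0.2 * (2.0 - math.cos(0.4 * (x + 10.0)) - math.cos(0.4 * (z - 20.0)))
    return bowl + ripples


def metropolis_accept(old, new, temperature, rng):
    """Always accept improvements; accept deteriorations with probability exp(-delta/T)."""
    if new <= old:
        return True
    return rng.random() < math.exp(-(new - old) / temperature)


def anneal(start, cycles=40, t0=0.5, cooling=0.9, step=15.0, seed=1):
    """Run a plain SA loop and return the best configuration found and its misfit."""
    rng = random.Random(seed)
    current, f_current = start, toy_misfit(*start)
    best, f_best = current, f_current
    temperature = t0
    for _ in range(cycles):
        proposal = (current[0] + rng.gauss(0.0, step),
                    current[1] + rng.gauss(0.0, step))
        f_proposal = toy_misfit(*proposal)
        if metropolis_accept(f_current, f_proposal, temperature, rng):
            current, f_current = proposal, f_proposal
            if f_current < f_best:
                best, f_best = current, f_current
        temperature *= cooling  # proposals with higher misfit become less likely
    return best, f_best


start = (40.0, -20.0)
best, f_best = anneal(start)
print(f"best configuration: ({best[0]:.2f}, {best[1]:.2f}) mm, misfit {f_best:.3f}")
```

As the temperature decreases, proposals in regions of high misfit are rejected more and more often, which corresponds to the rejected samples in the red regions of the misfit landscape.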

Time domain adjoint inversion

In this section, the results for an inversion scenario using the adjoint time domain FWI on the setup shown in Fig. 2.13 are presented. The initial model is a homogeneous model described by the material parameters determined above. The source time function used here is the green curve shown in Fig. 2.15. The computational mesh consists of 285,588 tetrahedral elements. The computation time for one single simulation on a machine with two Intel Xeon E5-2698 v4 processors with 20 cores each and 256 GB RAM on 40 threads is approximately 1.35 hours.

Inversion results of the P-wave velocity model in the left column and the S-wave velocity model in the right column from three different perspectives for the inversion changing both P- and S-wave velocities. Grey dots represent seismic stations and black dots the seismic source. ([55])

A comparison of the measured seismograms to synthetic ones showed large discrepancies for the receivers closest to the source. Consequently, only the 20 receivers farthest away from the source were used in the inversion process (see [55]). Figure 2.18 shows the inversion results, where the changes in P- and S-wave velocities are shown in three perspectives. Around the position of the drilling, a decrease of the P-wave velocity is visible. In addition, a number of other regions with altered wave velocities are observed, where possible reasons are described in [55]. For the S-wave model, on the other hand, an increase in velocity at the position of the drilling is observed. In [55], Lamert provides a detailed analysis as to why an increase rather than a decrease is observed in this instance. Using both velocity models, an inhomogeneity is identified in the vicinity of the true position of the drilling. The center is estimated by [55] at \((x,z)=(-15.5\,\text{mm},24.5\,\text{mm})\) with a radius of 6.5 mm, while the true center lies at \((x,z)=(-10.0\,\text{mm},20.0\,\text{mm})\) with a radius of 8.0 mm.
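For a direct comparison of the two approaches, the distances between the estimated and the true hole center can be computed from the coordinates quoted in this section. The following sketch performs only this arithmetic; the variable names are chosen for illustration.

```python
import math

TRUE_CENTER = (-10.0, 20.0)       # mm, true center of the hole
UHSA_CENTER = (-8.86, 19.17)      # mm, best UHSA parameter configuration
ADJOINT_CENTER = (-15.5, 24.5)    # mm, center estimated from the adjoint inversion


def center_error(estimate, truth=TRUE_CENTER):
    """Euclidean distance in mm between an estimated and the true center."""
    return math.hypot(estimate[0] - truth[0], estimate[1] - truth[1])


uhsa_error = center_error(UHSA_CENTER)
adjoint_error = center_error(ADJOINT_CENTER)

print(f"UHSA center error:    {uhsa_error:.2f} mm")
print(f"adjoint center error: {adjoint_error:.2f} mm")
```

The center found by UHSA deviates by about 1.4 mm from the true center, whereas the center estimated from the adjoint inversion deviates by about 7.1 mm. It should be kept in mind that UHSA assumes the shape and diameter of the hole to be known, while the adjoint inversion does not use this prior knowledge.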

2.2.7.2 Layer Change Imaging in a Concrete Block

In this section, an experimental model with certain similarities to field models is used to acquire ultrasonic data with which UHSA and UKF-PaLS are validated. For more information, readers are referred to [114, 115]. The experimental model is shown in Fig. 2.19 with a photo in Fig. 2.19, left, and an illustration of the inner structure in Fig. 2.19, right.

The specimen is a concrete block which has a relatively large dimension in \(y\)-direction compared to the other directions, so that the upcoming simulations can be performed in 2D instead of 3D, which reduces the computation time. A linear material change is incorporated into the block, where the two materials are composed of different concrete mixtures. In order to represent a 2D tunnel geometry in the later simulation model, the block has a material recess at \(x=0\). For the acquisition of the ultrasonic data, two source locations are specified which are illustrated by the red dots. At each source location, two source signals are released in the form of tone burst signals centered at 80 and 100 kHz. For each source signal, data are acquired at 532 receiver points, where the locations of the laser measurements are illustrated by the green lines. In order to improve the laser reflection, aluminum tape is attached along the lines of measurement. At each point of measurement, 100 single measurements are stacked. Bandpass filters with cutoff frequencies of 10 kHz and 150 kHz are applied to each record. A \(\sqrt{t}\)-filter is applied to the measurement data in order to approximately convert the amplitudes from 3D spreading to 2D spreading, as for instance performed in [85].
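The amplitude conversion can be illustrated with a minimal sketch in which each sample is multiplied by \(\sqrt{t}\). It is assumed here that the filter is a pure amplitude scaling; phase corrections that are sometimes applied together with it are not included, and the exact procedure of [85] may differ. The velocity and the sampling interval are arbitrary test values.

```python
import numpy as np


def sqrt_t_filter(trace, dt, t0=0.0):
    """Scale each sample by sqrt(t) to mimic the amplitude decay of 2D spreading."""
    trace = np.asarray(trace, dtype=float)
    t = t0 + np.arange(trace.size) * dt
    return trace * np.sqrt(t)


# Check with idealized body-wave amplitude decay: 1/r in 3D, 1/sqrt(r) in 2D.
dt = 1.0e-6                      # assumed sampling interval in s
velocity = 4000.0                # arbitrary wave velocity in m/s
t = dt + np.arange(500) * dt     # start at dt to avoid division by zero
r = velocity * t                 # travelled distance

amplitude_3d = 1.0 / r
amplitude_2d = 1.0 / np.sqrt(r)

converted = sqrt_t_filter(amplitude_3d, dt, t0=dt)
ratio = converted / amplitude_2d
```

In this idealized case the converted amplitudes differ from the 2D amplitudes only by a constant factor, which does not affect normalized waveforms.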