Abstract

Advances in Generative Adversarial Networks (GANs) have brought significant progress in image generation. However, it is still not well understood how the deep representations of a GAN turn a random vector into a realistic image. This chapter summarizes recent work on interpreting deep generative models. The methods are categorized into supervised, unsupervised, and embedding-guided approaches. We will see how the human-understandable concepts that emerge in the learned representation can be identified and used for interactive image generation and editing.

1 Introduction

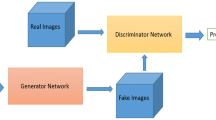

Over the years, advances in Generative Adversarial Networks (GANs) [6, 12] have brought great progress in image generation. As shown in Fig. 1, generation quality and diversity have improved substantially from the early DCGAN [16] to the very recent Alias-free GAN [11]. After the adversarial training of the generator and the discriminator, the generator can be used as a pretrained feedforward network for image generation: given a vector sampled from some random distribution, it synthesizes a realistic image as the output. However, such an image generation pipeline does not allow users to customize the output image, for example by changing the lighting condition of an output bedroom image or adding a smile to an output face image. Moreover, it is not well understood how a realistic image is generated from the layer-wise representations of the generator. Therefore, we need to interpret the learned representations of deep generative models, both for understanding and for the practical application of interactive image editing.

This chapter introduces recent progress in explainable machine learning for deep generative models. I will show how we can identify the human-understandable concepts in the generative representation and use them to steer the generator for interactive image generation. Readers might also be interested in watching a relevant tutorial talk I gave at the CVPR’21 Tutorial on Interpretable Machine Learning for Computer Vision. A more detailed survey paper on GAN interpretation and inversion can be found in [21].

This chapter focuses on interpreting pretrained GAN models, but a similar methodology can be extended to other generative models such as VAEs. Recent interpretation methods can be grouped into three approaches: the supervised approach, the unsupervised approach, and the embedding-guided approach. The supervised approach uses labels or classifiers to align meaningful visual concepts with the deep generative representation; the unsupervised approach aims to identify the steerable latent factors in the deep generative representation by solving an optimization problem; the embedding-guided approach uses the recent pretrained language-image embedding CLIP [15] to let a text description guide the image generation process.

In the following sections, I will select representative methods from each approach and briefly introduce them as primers for this rapidly growing direction.

2 Supervised Approach

Fig. 2. GAN Dissection framework and interactive image editing interface. Images are extracted from [4]. The method aligns the unit activation with the semantic mask of the output image; by turning the unit activation up or down, we can add or remove the corresponding visual concept in the output image.

The supervised approach uses labels or trained classifiers to probe the representation of the generator. One of the earliest interpretation methods is GAN Dissection [4]. Derived from the earlier work Network Dissection [3], GAN Dissection aims to visualize and understand the individual convolutional filters (which we call units) in the pretrained generator. It uses semantic segmentation networks [24] to segment the output images and then measures the agreement between the spatial location of each unit's activation map and the semantic mask of the output image. This method can identify a group of interpretable units closely related to object concepts such as sofa, table, grass, and buildings. Those units can then be used as switches: we can add or remove objects such as a tree or a lamp by turning the activation of the corresponding units up or down. The framework of GAN Dissection and the image editing interface are shown in Fig. 2. In the interface, the user selects the object to be manipulated and brushes the region of the output image where it should be removed or added.
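
The agreement measure and the unit "switch" described above can be illustrated with a small NumPy sketch. It is only a toy under stated assumptions: the intersection-over-union (IoU) score, the quantile threshold, and the names `unit_concept_iou` and `ablate_unit` are illustrative choices made here, and the activation maps and masks are synthetic rather than taken from a real generator or segmentation network.

```python
import numpy as np

def unit_concept_iou(activation, concept_mask, quantile=0.99):
    """IoU between a thresholded unit activation map and a concept mask.

    activation:   (N, H, W) float array, one activation map per image,
                  assumed already upsampled to the mask resolution.
    concept_mask: (N, H, W) bool array, e.g. from a segmentation network.
    quantile:     assumed threshold level; activations above it count as "on".
    """
    threshold = np.quantile(activation, quantile)
    unit_on = activation > threshold
    intersection = np.logical_and(unit_on, concept_mask).sum()
    union = np.logical_or(unit_on, concept_mask).sum()
    return float(intersection) / float(union) if union > 0 else 0.0

def ablate_unit(features, unit, region):
    """Turn one unit off inside a brushed region.

    features: (C, H, W) feature map of one image; region: (H, W) bool mask.
    """
    edited = features.copy()
    edited[unit][region] = 0.0
    return edited

# Synthetic data: one unit fires on the concept region, another is pure noise.
rng = np.random.default_rng(0)
mask = np.zeros((4, 16, 16), dtype=bool)
mask[:, 4:8, 4:8] = True  # hypothetical "tree" region in every image
aligned = rng.normal(0.0, 0.1, mask.shape) + 5.0 * mask
unrelated = rng.normal(0.0, 0.1, mask.shape)

iou_aligned = unit_concept_iou(aligned, mask, quantile=0.9)
iou_unrelated = unit_concept_iou(unrelated, mask, quantile=0.9)

# Switch unit 1 off inside the brushed region of a toy feature map.
features = rng.normal(size=(3, 16, 16))
edited = ablate_unit(features, unit=1, region=mask[0])
```

In this toy setting the unit that fires on the masked region obtains a much higher score than the noise unit, which is the kind of ranking one would use to pick candidate units for a concept.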

Besides steering the filters at an intermediate convolutional layer of the generator, as GAN Dissection does, researchers have also explored the latent space from which the input vector of the generator is sampled. The underlying interpretable subspaces that align with certain attributes of the output image can be identified. Here we denote the pretrained generator as \(G(\cdot )\) and the random vector sampled from the latent space as \(\mathbf{z} \), so that the output image is \(I = G(\mathbf{z} )\). Different vectors produce different output images, so the latent space encodes various attributes of images. If we can steer the vector \(\mathbf{z} \) through one relevant subspace while preserving its projection onto the other subspaces, we can edit one attribute of the output image in a disentangled way.

Fig. 3. We can use a classifier to predict various attributes from the output image and then go back to the latent space to identify the attribute boundaries. The images show the image editing results achieved by [22].

To align the latent space with the semantic space, we can first apply off-the-shelf classifiers to extract the attributes of the synthesized images and then compute the causality between the attributes occurring in the generated images and the corresponding vectors in the latent space. The HiGAN method proposed in [22] follows such a supervised approach, as illustrated in Fig. 3: (1) Thousands of latent vectors are sampled, and the images are generated. (2) Various levels of attributes are predicted from the generated images by applying the off-the-shelf classifiers. (3) For each attribute \(a\), a linear boundary \(\mathbf{n} _a\) is trained in the latent space using the predicted labels and the latent vectors. We consider this a binary classification problem and train a linear SVM to recognize each attribute. The weight of the trained SVM is \(\mathbf{n} _a\). (4) A counterfactual verification step is taken to pick out the reliable boundaries. Here we follow a linear model with step size \(\lambda \) to shift the latent code as

\[ I' = G(\mathbf{z} + \lambda \mathbf{n} _a), \tag{1} \]

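where the normal vector of the trained attribute boundary is denoted as \(\mathbf{n} _a\) and \(I'\) is the edited image compared to the original image \(I\). For a fixed step size \(\lambda \) and sampled latent codes \(\mathbf{z} _1, \ldots , \mathbf{z} _K\), the difference between the predicted attribute scores before and after manipulation becomes

\[ \varDelta a = \frac{1}{K} \sum_{k=1}^{K} \max \big( F(G(\mathbf{z} _k + \lambda \mathbf{n} _a)) - F(G(\mathbf{z} _k)),\, 0 \big), \tag{2} \]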

Here \(F(\cdot )\) is the attribute predictor applied to the input image, and \(K\) is the number of synthesized images. Ranking \(\varDelta a\) allows us to identify the reliable attribute boundaries out of the candidate set \(\{ \mathbf{n} _a\}\), which contains about one hundred attribute boundaries trained in step 3 of the HiGAN method. After that, we can edit the output image of the generator by adding the normal vector of the target attribute to, or subtracting it from, the original latent code. Some image manipulation results are shown in Fig. 3.
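
The four steps above can be sketched end to end on a toy problem. The sketch below is not the HiGAN implementation: the linear "generator" `G`, the attribute scorer `F`, and all sizes are hypothetical stand-ins, and a least-squares linear classifier is swapped in for the linear SVM to keep the example dependency-free. It only illustrates how a boundary normal is fitted from predicted labels and how a counterfactual score separates a reliable direction from a random one.

```python
import numpy as np

rng = np.random.default_rng(0)
dim, n_pixels, K = 8, 32, 2000

# Hypothetical stand-ins for a pretrained generator and an attribute predictor.
W = rng.normal(size=(dim, n_pixels)) / np.sqrt(n_pixels)
probe = rng.normal(size=n_pixels)

def G(z):
    """Toy linear generator: latent codes (K, dim) -> 'images' (K, n_pixels)."""
    return z @ W

def F(images):
    """Toy attribute predictor returning a score in (0, 1) per image."""
    return 1.0 / (1.0 + np.exp(-(images @ probe)))

def fit_boundary(z, labels):
    """Fit a linear boundary by least squares (a simple substitute for the SVM)."""
    targets = 2.0 * labels - 1.0                   # {0, 1} -> {-1, +1}
    X = np.hstack([z, np.ones((len(z), 1))])       # append a bias column
    coef, *_ = np.linalg.lstsq(X, targets, rcond=None)
    normal = coef[:-1]
    return normal / np.linalg.norm(normal)         # unit normal vector n_a

def counterfactual_score(normal, z, step=1.0):
    """Mean positive change of the attribute score after shifting z along normal."""
    before = F(G(z))
    after = F(G(z + step * normal))
    return float(np.mean(np.maximum(after - before, 0.0)))

# Steps 1-2: sample latent codes, generate images, predict attribute labels.
z = rng.normal(size=(K, dim))
labels = (F(G(z)) > 0.5).astype(float)

# Step 3: train the boundary. Step 4: verify it counterfactually.
n_a = fit_boundary(z, labels)
random_dir = rng.normal(size=dim)
random_dir /= np.linalg.norm(random_dir)

delta_a = counterfactual_score(n_a, z)
delta_random = counterfactual_score(random_dir, z)
```

Ranking candidate boundaries by such a score keeps the directions whose shifts actually change the predicted attribute; in this toy setting the fitted normal scores higher than the random direction.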

Similar supervised methods have been developed to edit facial attributes [17, 18] and improve image memorability [5]. The steerability of various attributes in GANs has also been analyzed [9]. Besides, StyleFlow [1] replaces the linear model with a nonlinear invertible flow-based model in the latent space for more precise facial editing. Some recent work uses a differentiable renderer to extract 3D information from image GANs for more controllable view synthesis [23]. For the supervised approach, many challenges remain for future work, such as expanding the annotation dictionary, achieving more disentangled manipulation, and aligning the latent space with image regions.

3 Unsupervised Approach

As generative models become more and more popular, people have started training them on a wide range of images, such as cats and anime. To steer generative models trained for cat or anime generation with the previous supervised approach, we would have to define the attributes of the images and annotate many images to train the classifiers. This is a very time-consuming process.

Alternatively, the unsupervised approach aims to identify the controllable dimensions of the generator without using labels/classifiers.

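SeFa [19] is an unsupervised approach for discovering the interpretable representation of a generator. It directly decomposes the pre-trained weights. More specifically, in the pre-trained generator of the popular StyleGAN [12] or PGGAN [10] model, there is an affine transformation \(\mathbf{y} = \mathbf{A} \mathbf{z} + \mathbf{b} \) between the latent code \(\mathbf{z} \) and the internal activation. When the latent code is shifted along a direction \(\mathbf{n} \) with step \(\alpha \), the manipulation model can thus be simplified as

\[ \mathbf{y} ' = \mathbf{A} (\mathbf{z} + \alpha \mathbf{n} ) + \mathbf{b} = \mathbf{y} + \alpha \mathbf{A} \mathbf{n} , \tag{3} \]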

where \(\mathbf{y} \) is the original projected code and \(\mathbf{y} '\) is the projected code after manipulation by \(\mathbf{n} \). From Eq. (3) we can see that the manipulation process is instance independent. In other words, given any latent code \(\mathbf{z} \) together with a particular latent direction \(\mathbf{n} \), the editing can always be achieved by adding the term \(\alpha \mathbf{A} \mathbf{n} \) onto the projected code after the first step. From this perspective, the weight parameter \(\mathbf{A} \) should contain the essential knowledge of the image variation. Thus we aim to discover important latent directions by decomposing \(\mathbf{A} \) in an unsupervised manner. We propose to solve the following optimization problem:

\[ \mathbf{N} ^* = \mathop{\arg\max}_{\mathbf{N} :\ \mathbf{n} _i^T \mathbf{n} _i = 1,\ i = 1, \ldots , k}\ \sum_{i=1}^{k} \Vert \mathbf{A} \mathbf{n} _i \Vert_2^2, \tag{4} \]

where \(\mathbf{N} = [\mathbf{n} _1, \mathbf{n} _2, \cdots , \mathbf{n} _k]\) corresponds to the top \(k\) semantics sorted by their eigenvalues, and \(\mathbf{A} \) is the learned weight in the affine transformation between the latent code and the internal activation. This objective aims at finding the directions that cause large variations after the projection by \(\mathbf{A} \). The resulting solutions are the eigenvectors of the matrix \(\mathbf{A} ^T\mathbf{A} \). The resulting directions at different layers control different attributes of the output image, so pushing the latent code \(\mathbf{z} \) along the important directions \(\{\mathbf {n}_1, \mathbf {n}_2, \cdots , \mathbf {n}_k\}\) facilitates interactive image editing. Figure 4 shows some editing results.
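
The closed-form solution described above can be sketched in a few lines of NumPy. The weight matrix `A`, the bias `b`, and their sizes are random placeholders chosen here for illustration (they are not extracted from a real StyleGAN or PGGAN checkpoint), and `sefa_directions` is a hypothetical helper name.

```python
import numpy as np

def sefa_directions(A, k):
    """Top-k unit-norm directions n that maximize ||A n||^2.

    These are the eigenvectors of A^T A with the k largest eigenvalues.
    """
    eigvals, eigvecs = np.linalg.eigh(A.T @ A)  # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:k]       # indices of the k largest
    return eigvecs[:, order], eigvals[order]

rng = np.random.default_rng(0)
A = rng.normal(size=(64, 16))  # placeholder weight: 16-d latent code -> 64-d activation
b = rng.normal(size=64)        # placeholder bias
N, eigvals = sefa_directions(A, k=3)

# Editing is instance independent: the change of the projected code
# depends only on the direction and the step, not on z itself.
z = rng.normal(size=16)
alpha = 2.0
y = A @ z + b
y_edit = A @ (z + alpha * N[:, 0]) + b
```

Because the shift `y_edit - y` equals `alpha * A @ N[:, 0]` for any `z`, the same slider value produces the same change of the projected code for every sample, which is what makes the directions convenient for an interactive interface.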

Fig. 4. Manipulation results from SeFa [19] on the left and the interface for interactive image editing on the right. On the left, each attribute corresponds to some \(\mathbf{n} _i\) in the latent space of the generator. In the interface, the user can simply drag the slider bar associated with a certain attribute to edit the output image.

Many other methods have been developed for the unsupervised discovery of interpretable latent representations. Härkönen et al. [7] perform PCA on the sampled data to find primary directions in the latent space. Voynov and Babenko [20] jointly learn a candidate matrix and a classifier such that the classifier can properly recognize the semantic directions in the matrix. Peebles et al. [14] develop a Hessian penalty as a regularizer for improving disentanglement in training. He et al. [8] design a linear subspace with an orthogonal basis in each layer of the generator to encourage the decomposition of attributes. Many challenges remain for the unsupervised approach, such as how to evaluate the results of unsupervised learning, how to annotate each discovered dimension, and how to improve disentanglement in the GAN training process.

4 Embedding-Guided Approach

The embedding-guided approach aligns language embeddings with generative representations. It allows users to use any free-form text to guide the image generation. The difference from the previous unsupervised approach is that the embedding-guided approach conditions the manipulation on the given text, which makes it more flexible, whereas the unsupervised approach discovers the steerable dimensions in a bottom-up way and thus lacks fine-grained control.

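Recent work on StyleCLIP [13] combines the pretrained language-image embedding CLIP [15] and the StyleGAN generator [12] for free-form text-driven image editing. CLIP is an embedding model pretrained on 400 million image-text pairs. Given an image \(I_s\) and a text prompt \(t\), StyleCLIP first projects the image back into the latent space as \(\mathbf{w} _s\) using an existing GAN inversion method. With an identity loss \(L_{ID}\) and weights \(\lambda _{L2}\) and \(\lambda _{ID}\), StyleCLIP then designs the following optimization objective

\[ \mathbf{w} ^* = \mathop{\arg\min}_{\mathbf{w} }\ D_{CLIP}(G(\mathbf{w} ), t) + \lambda _{L2} \Vert \mathbf{w} - \mathbf{w} _s \Vert_2 + \lambda _{ID} L_{ID}(\mathbf{w} ), \tag{5} \]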

where \(D_{CLIP}(\cdot ,\cdot )\) measures the distance between an image and a text using the pre-trained CLIP model, and the second and third terms are regularizers that preserve the similarity to, and the identity of, the original input image. This optimization objective thus results in a latent code \(\mathbf{w} ^*\) that generates an image close to the given text in the CLIP embedding space while remaining similar to the original input image. StyleCLIP further develops architecture designs to speed up the iterative optimization. Figure 5 shows text-driven image editing results.
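
The structure of this latent optimization can be sketched with a toy example. Everything below is a stand-in and not the StyleCLIP code: a fixed random linear map `E` plays the role of "generate an image and embed it with CLIP", `text_embedding` is a random vector instead of a real embedded prompt, the latent regularizer is a squared L2 distance for a smooth gradient, and the identity term is omitted. The sketch only shows how gradient descent trades off closeness to the text against closeness to the inverted code.

```python
import numpy as np

rng = np.random.default_rng(0)
latent_dim, embed_dim = 16, 32

# Hypothetical stand-ins for the generator plus image encoder and for the text encoder.
E = rng.normal(size=(embed_dim, latent_dim)) / np.sqrt(latent_dim)
text_embedding = rng.normal(size=embed_dim)

def clip_distance(w):
    """Cosine distance between the embedded 'image' E @ w and the text embedding."""
    u = E @ w
    cos = (u @ text_embedding) / (np.linalg.norm(u) * np.linalg.norm(text_embedding))
    return 1.0 - cos

def clip_distance_grad(w):
    """Analytic gradient of clip_distance with respect to w."""
    u = E @ w
    nu, nt = np.linalg.norm(u), np.linalg.norm(text_embedding)
    dcos_du = text_embedding / (nu * nt) - (u @ text_embedding) * u / (nu ** 3 * nt)
    return -E.T @ dcos_du

def edit_latent(w_s, lam_l2=0.1, lr=0.1, steps=200):
    """Gradient descent on clip_distance(w) + lam_l2 * ||w - w_s||^2, starting at w_s."""
    w = w_s.copy()
    for _ in range(steps):
        grad = clip_distance_grad(w) + 2.0 * lam_l2 * (w - w_s)
        w -= lr * grad
    return w

w_s = rng.normal(size=latent_dim)  # stands in for the inverted latent code
w_star = edit_latent(w_s)
```

Increasing `lam_l2` keeps `w_star` closer to `w_s` at the cost of a smaller reduction of the text distance, which mirrors the role of the regularizers in the objective.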

Concurrent work, Paint by Word by Bau et al. [2], combines the CLIP embedding with region-based image editing. It uses a masked optimization objective that allows the user to brush the image to provide the input mask.

5 Concluding Remarks

Interpreting deep generative models leads to a deeper understanding of how the learned representations decompose images in order to generate them. Discovering the human-understandable concepts and steerable dimensions in the deep generative representations also facilitates promising applications in interactive image generation and editing. We have introduced representative methods from three approaches: the supervised approach, the unsupervised approach, and the embedding-guided approach. The supervised approach can achieve the best image editing quality when labels or classifiers are available. It remains challenging for the unsupervised and embedding-guided approaches to achieve disentangled manipulation. More future work is expected on the accurate inversion of real images and on precise local and global image editing.

References

1. Abdal, R., Zhu, P., Mitra, N.J., Wonka, P.: StyleFlow: attribute-conditioned exploration of StyleGAN-generated images using conditional continuous normalizing flows. ACM Trans. Graph. (TOG) 40(3), 1–21 (2021)

2. Bau, D., et al.: Paint by word. arXiv preprint arXiv:2103.10951 (2021)

3. Bau, D., Zhou, B., Khosla, A., Oliva, A., Torralba, A.: Network dissection: quantifying interpretability of deep visual representations. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 6541–6549 (2017)

4. Bau, D., et al.: GAN dissection: visualizing and understanding generative adversarial networks. In: International Conference on Learning Representations (2018)

5. Goetschalckx, L., Andonian, A., Oliva, A., Isola, P.: GANalyze: toward visual definitions of cognitive image properties. In: Proceedings of International Conference on Computer Vision (ICCV), pp. 5744–5753 (2019)

6. Goodfellow, I.J., et al.: Generative adversarial networks. In: Advances in Neural Information Processing Systems (2014)

7. Härkönen, E., Hertzmann, A., Lehtinen, J., Paris, S.: GANSpace: discovering interpretable GAN controls. In: Advances in Neural Information Processing Systems (2020)

8. He, Z., Kan, M., Shan, S.: EigenGAN: layer-wise eigen-learning for GANs. In: Proceedings of International Conference on Computer Vision (ICCV) (2021)

9. Jahanian, A., Chai, L., Isola, P.: On the “steerability” of generative adversarial networks. In: International Conference on Learning Representations (2019)

10. Karras, T., Aila, T., Laine, S., Lehtinen, J.: Progressive growing of GANs for improved quality, stability, and variation. In: International Conference on Learning Representations (2018)

11. Karras, T., et al.: Alias-free generative adversarial networks. In: Advances in Neural Information Processing Systems (2021)

12. Karras, T., Laine, S., Aila, T.: A style-based generator architecture for generative adversarial networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 4401–4410 (2019)

13. Patashnik, O., Wu, Z., Shechtman, E., Cohen-Or, D., Lischinski, D.: StyleCLIP: text-driven manipulation of StyleGAN imagery. In: Proceedings of International Conference on Computer Vision (ICCV) (2021)

14. Peebles, W., Peebles, J., Zhu, J.-Y., Efros, A., Torralba, A.: The Hessian penalty: a weak prior for unsupervised disentanglement. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M. (eds.) ECCV 2020. LNCS, vol. 12351, pp. 581–597. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58539-6_35

15. Radford, A., et al.: Learning transferable visual models from natural language supervision. arXiv preprint arXiv:2103.00020 (2021)

16. Radford, A., Metz, L., Chintala, S.: Unsupervised representation learning with deep convolutional generative adversarial networks. In: International Conference on Learning Representations (2015)

17. Shen, Y., Gu, J., Tang, X., Zhou, B.: Interpreting the latent space of GANs for semantic face editing. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9243–9252 (2020)

18. Shen, Y., Yang, C., Tang, X., Zhou, B.: InterFaceGAN: interpreting the disentangled face representation learned by GANs. IEEE Trans. Pattern Anal. Mach. Intell. 44(4), 2004–2018 (2020)

19. Shen, Y., Zhou, B.: Closed-form factorization of latent semantics in GANs. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 1532–1540 (2021)

20. Voynov, A., Babenko, A.: Unsupervised discovery of interpretable directions in the GAN latent space. In: International Conference on Machine Learning (2020)

21. Xia, W., Zhang, Y., Yang, Y., Xue, J.H., Zhou, B., Yang, M.H.: GAN inversion: a survey. IEEE Trans. Pattern Anal. Mach. Intell. (2022)

22. Yang, C., Shen, Y., Zhou, B.: Semantic hierarchy emerges in deep generative representations for scene synthesis. Int. J. Comput. Vis. 129(5), 1451–1466 (2021)

23. Zhang, Y., et al.: Image GANs meet differentiable rendering for inverse graphics and interpretable 3D neural rendering. In: International Conference on Learning Representations (2021)

24. Zhou, B., Zhao, H., Puig, X., Fidler, S., Barriuso, A., Torralba, A.: Scene parsing through ADE20K dataset. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 633–641 (2017)

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

Zhou, B. (2022). Interpreting Generative Adversarial Networks for Interactive Image Generation. In: Holzinger, A., Goebel, R., Fong, R., Moon, T., Müller, KR., Samek, W. (eds) xxAI - Beyond Explainable AI. xxAI 2020. Lecture Notes in Computer Science(), vol 13200. Springer, Cham. https://doi.org/10.1007/978-3-031-04083-2_9

DOI: https://doi.org/10.1007/978-3-031-04083-2_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-04082-5

Online ISBN: 978-3-031-04083-2

eBook Packages: Computer Science, Computer Science (R0)