Abstract

Additive manufacturing is evolving into a prominent aspect of the fourth industrial revolution and offers unprecedented design freedom for creating solid objects from 3D digital models. For metal additive manufacturing (MAM) to contribute effectively to the realisation of Industry 4.0, the mechanical integrity of AM parts must improve and their cost must fall. This study aims to exploit the potential of multiscale modelling and artificial intelligence (machine learning and metamodelling) to realise reliable component-scale multi-physical field simulations for MAM. A thorough quantitative understanding of the various physical phenomena involved in MAM processes would enable systematic optimisation of process conditions for achieving ‘first-time-right’ high-quality production.

1 Introduction

Commercial additive manufacturing (AM) first emerged with the stereolithography (SL) process from 3D Systems in 1987 [1]. Arguably, the first reported metal AM parts were made from copper, tin and Pb–Sn solder powders through a selective laser sintering (SLS) process in 1990 [2]. The German company EOS (Electro-Optical Systems), Sandia National Laboratories, the Swedish company Arcam AB and the Fraunhofer Institute in Germany later developed metal additive manufacturing (MAM) techniques such as direct metal laser sintering (DMLS), laser engineered net shaping (LENS), electron beam melting (EBM) and selective laser melting (SLM) [1, 3]. MAM is currently a rapidly growing industry, with revenue projected to surpass $7,150M by 2026, compared with $1,030M in 2016 [4].

In the early phase of its 30-year history, AM was primarily employed for the fabrication of conceptual and functional prototypes, then known as Rapid Prototyping (RP) [5]. Recent innovations in processes and materials, together with the extension of the technology to metals, have transformed it into a manufacturing technology for the production of end-use parts [5,6,7]. Important factors hindering even faster growth and widespread industrial application of MAM are the high cost and uncertain mechanical performance of AM builds [8]. Because of these problems, the business case for adopting this technology is marginal in many industries, particularly for components operating under severe loading conditions. Resolving these challenges would thus drive further progress in digital manufacturing and accelerate the realisation of the foreseen fourth industrial revolution. In the absence of a better alternative, trial-and-error strategies, which are costly in both time and money, are often adopted to optimise MAM process conditions [8,9,10]. Moreover, the quality of products designed in this way does not ultimately meet the expected requirements in most safety-sensitive load-bearing applications. This ongoing research aims to contribute to this endeavour using advanced multi-physical field simulations.

2 Multi-physical Field Simulation for MAM

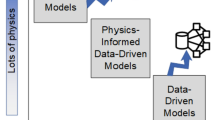

A thorough quantitative understanding of the MAM process requires insight from different modelling areas, such as thermal, mechanical, metallurgical, fluid dynamics and thermodynamics simulations (Table 1). Previous research has addressed the extremely high computational cost of such multi-field simulations by reducing the size of the simulation domain, by introducing gross simplifications, or both. Instead, this study proposes to exploit the power of multiscale modelling and artificial intelligence (machine learning and metamodelling) to realise reliable component-scale simulations for MAM. The underlying idea stems from the incremental build characteristic of MAM, in which the involved phenomena are repetitive and deterministic in nature. Variations in the characteristics of the deposited material increments do not originate from a significant change in the underlying physics, but only from a (modest) change in the imposed boundary conditions. Component-scale simulation of the MAM process can therefore be regarded as solving a large number of nominally similar small-scale models, each with slightly different boundary conditions. The computational cost of such repetitive simulations can be significantly reduced by employing a dedicated machine learning toolbox. A systematic design of experiments would first involve a limited number of small-scale simulations to identify the sensitivity of the output parameters to the boundary conditions. The results of these simulations can then be used to train and cross-validate a computationally cheap surrogate (meta)model without compromising the process efficacy. This leads to a significant cost reduction for component-scale multi-physical field simulations and allows them to be used directly within numerical optimisation of the fabrication of AM components with the desired properties and performance.
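The train-and-cross-validate workflow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' toolbox: `expensive_local_model` is a hypothetical analytic stand-in for a small-scale simulation with two normalised boundary-condition parameters, the design of experiments is a Latin hypercube, and the surrogate is a quadratic least-squares regression (the study itself uses sparse PCE, described in Section 3). Leave-one-out cross-validation reuses the few expensive runs to estimate the prediction error without running extra simulations.

```python
import numpy as np


def expensive_local_model(bc):
    """Hypothetical stand-in for one small-scale FE run (NOT the paper's model).

    bc: array (n, 2) of normalised boundary-condition parameters in [0, 1].
    Returns one scalar output per run, e.g. a peak temperature in K.
    """
    b1, b2 = bc[:, 0], bc[:, 1]
    return 1500.0 + 300.0 * b1 - 200.0 * b2 ** 2 + 50.0 * b1 * b2 + 20.0 * np.sin(3.0 * b1)


def latin_hypercube(n_samples, n_dims, rng):
    """Latin hypercube design: each dimension is split into n_samples strata, one sample each."""
    strata = np.column_stack([rng.permutation(n_samples) for _ in range(n_dims)])
    # Jitter each sample uniformly inside its stratum.
    return (strata + rng.random((n_samples, n_dims))) / n_samples


def features(bc):
    """Full quadratic polynomial basis in the two parameters."""
    b1, b2 = bc[:, 0], bc[:, 1]
    return np.column_stack([np.ones(len(bc)), b1, b2, b1 * b2, b1 ** 2, b2 ** 2])


def fit(bc, y):
    coeffs, *_ = np.linalg.lstsq(features(bc), y, rcond=None)
    return coeffs


def predict(coeffs, bc):
    return features(bc) @ coeffs


def loo_rmse(bc, y):
    """Leave-one-out cross-validation: refit without each run, then predict that run."""
    errors = []
    for i in range(len(bc)):
        keep = np.arange(len(bc)) != i
        errors.append(predict(fit(bc[keep], y[keep]), bc[i:i + 1])[0] - y[i])
    return float(np.sqrt(np.mean(np.square(errors))))


rng = np.random.default_rng(0)
bc_train = latin_hypercube(12, 2, rng)             # 12 "expensive" runs
y_train = expensive_local_model(bc_train)
coeffs = fit(bc_train, y_train)
print(f"leave-one-out RMSE: {loo_rmse(bc_train, y_train):.2f} K")
y_cheap = predict(coeffs, rng.random((1000, 2)))   # 1000 cheap surrogate evaluations
```

Once fitted, each surrogate evaluation costs only a small matrix product, which is what makes thousands of evaluations affordable.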

Note that this study uses metamodels as a highly efficient machine learning regression tool. Originating in the field of uncertainty quantification (UQ), surrogate (meta)models are replacements for expensive computational models that make analyses requiring a large number of model evaluations, such as uncertainty propagation and design optimisation, feasible in a reasonable time [11]. In contrast to typical machine learning applications, which deal with large amounts of (noisy) data, a metamodel approximates a computational model by analysing the outcomes of a small number of simulation runs.

This ongoing research intends to develop a flexible simulation platform which, for a given set of MAM process conditions, trains and validates a metamodel on the basis of a few small-scale high-fidelity simulations that incorporate thermal, solid mechanics, microstructural, thermodynamics and fluid dynamics computations (Fig. 1). For a given geometry and process parameter set, the platform will use a specific metamodel to predict a range of relevant parameters and characteristics within the MAM part, such as temperature and residual stress profiles, part distortion, the spatial distribution of defects and porosity, and microstructural parameters. These predictions will subsequently be used by a crystal plasticity finite element framework to assess the mechanical integrity of MAM components. Notably, various high-end experimental facilities will be employed to design dedicated experiments that 1) help understand the involved physical phenomena, 2) support the derivation of relevant numerical models from observations, and 3) ultimately evaluate the reliability and effectiveness of the proposed simulation strategy.

Employing a metamodel as an alternative to conventional simulation techniques introduces a degree of uncertainty into the obtained predictions. This uncertainty depends mainly on the robustness and representativeness of the training data set. Importantly, the uncertainty of metamodel predictions is quantifiable, so an uncertainty index would be included when the metamodel is applied to the simulation of a component. Where the uncertainty is unacceptable (e.g. high levels of uncertainty at critical locations of the assessed component), additional small-scale simulations representing the situation at those locations can be designed and added to the training pool to reduce the uncertainty level.
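One common way to obtain such an uncertainty index is a Gaussian-process (kriging) surrogate, whose posterior standard deviation vanishes at training points and grows away from them. The sketch below uses it to drive the enrichment loop described above. It is a swapped-in technique for illustration only, since the paper does not specify which uncertainty measure the platform uses; the squared-exponential kernel, its fixed length scale, the tolerance and `local_model` are all hypothetical choices.

```python
import numpy as np


def kernel(a, b, length=0.2):
    """Squared-exponential covariance between 1D point sets a and b (unit prior variance)."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length ** 2)


def gp_predict(x_train, y_train, x_query, length=0.2, jitter=1e-8):
    """Kriging posterior mean and standard deviation (zero-mean GP on the centred data)."""
    y_mean = y_train.mean()
    chol = np.linalg.cholesky(kernel(x_train, x_train, length) + jitter * np.eye(len(x_train)))
    k_qt = kernel(x_query, x_train, length)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train - y_mean))
    v = np.linalg.solve(chol, k_qt.T)
    std = np.sqrt(np.clip(1.0 - np.sum(v ** 2, axis=0), 0.0, None))
    return y_mean + k_qt @ alpha, std


def local_model(x):
    """Hypothetical stand-in for a small-scale simulation at normalised location x."""
    return np.sin(6.0 * x)


candidates = np.linspace(0.0, 1.0, 201)   # e.g. critical locations of the assessed component
x_train = np.array([0.0, 0.5, 1.0])       # initial training pool
y_train = local_model(x_train)
tolerance = 0.05                          # acceptable uncertainty index (assumed)
for _ in range(20):
    _, std = gp_predict(x_train, y_train, candidates)
    worst = int(np.argmax(std))
    if std[worst] < tolerance:
        break
    # Design one extra small-scale simulation where the uncertainty is highest.
    x_train = np.append(x_train, candidates[worst])
    y_train = np.append(y_train, local_model(candidates[worst]))
```

Each added simulation lands where the surrogate is least certain, so the training pool grows only where it is needed.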

This paper presents preliminary results of applying the described methodology to the thermal simulation of the selective laser melting (SLM) process.

Fig. 2. Finite element model setup for 2D continuum thermal modelling of the SLM process [12].

3 Continuum Thermal Modelling

The evolution of the temperature profile is perhaps the most critical information required for any type of MAM simulation. The thermal history experienced by the molten material during MAM is very different from that in other manufacturing techniques and, to a great extent, controls the defect state, residual stress, microstructure and properties of the product. Finite element (FE) analysis solves the following equation to calculate the time-dependent temperature field over the discretised domain:

\[\rho c_p \frac{\partial T}{\partial t} = \nabla \cdot \left( k \nabla T \right) + q_{vol}\]

where \(T\) is the temperature, \(t\) is time, and \(\rho \), \(c_p\) and \(k\) are respectively the density, specific heat and thermal conductivity of the material; \(q_{vol}\) is the volumetric heat generation, e.g. due to laser beam exposure. In contrast to conventional continuum thermal analyses, the simulation of SLM requires that 1) element activation or the quiet element strategy be employed to simulate incremental material addition, and 2) each material point can take one of three states: powder, solid or liquid. These SLM-specific considerations are often implemented in commercial FE packages through user-defined subroutines. Ref. [12] describes in detail the continuum thermal simulation of SLM in the ABAQUS FE package. Fig. 2 gives an overview of the model setup for 2D thermal simulations.
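The two SLM-specific ingredients above can be illustrated with a minimal 2D finite-difference sketch of the heat equation (not the authors' ABAQUS subroutines). Cells that have not yet been deposited are kept "quiet" by scaling their conductivity down and switching off their heat input, a new powder layer is activated at the start of the scan, and every cell carries a powder, solid or liquid state with its own conductivity. The material data, the Gaussian volumetric source, the grid and the base plate held at ambient temperature are all illustrative assumptions; the 10 \(\upmu\)m cells and 2 \(\upmu\)s increments merely echo resolutions typical of high-fidelity SLM analyses, and the time step is checked against the explicit stability limit \(\Delta t \le \rho c_p \Delta x^2 / (4 k_{max})\).

```python
import numpy as np

# Hypothetical material data (roughly steel-like), NOT taken from the paper.
RHO, CP = 7900.0, 500.0                    # density [kg/m^3], specific heat [J/(kg K)]
K_BY_STATE = np.array([0.3, 25.0, 30.0])   # conductivity [W/(m K)] of powder, solid, liquid
POWDER, SOLID, LIQUID = 0, 1, 2
T_MELT, T_AMB = 1700.0, 300.0              # melting and ambient temperatures [K]
QUIET = 1e-6                               # conductivity scaling for not-yet-deposited cells


def harmonic(a, b):
    """Face conductivity between two neighbouring cells (harmonic mean)."""
    return 2.0 * a * b / (a + b)


def heat_step(T, state, active, q_vol, dx, dt):
    """Explicit step of rho*c_p*dT/dt = div(k grad T) + q_vol with adiabatic outer edges."""
    k = K_BY_STATE[state] * np.where(active, 1.0, QUIET)
    fx = harmonic(k[:, 1:], k[:, :-1]) * (T[:, 1:] - T[:, :-1]) / dx  # k*dT/dx, x-faces
    fy = harmonic(k[1:, :], k[:-1, :]) * (T[1:, :] - T[:-1, :]) / dx  # k*dT/dy, y-faces
    div = np.zeros_like(T)
    div[:, :-1] += fx / dx
    div[:, 1:] -= fx / dx
    div[:-1, :] += fy / dx
    div[1:, :] -= fy / dx
    T_new = T + dt * (div + np.where(active, q_vol, 0.0)) / (RHO * CP)
    # Active powder or solid reaching T_MELT becomes liquid; liquid below T_MELT solidifies.
    state = np.where(active & (T_new >= T_MELT), LIQUID, state)
    state = np.where((state == LIQUID) & (T_new < T_MELT), SOLID, state)
    return T_new, state


def scan_one_layer(nx=60, ny=30, dx=10e-6, dt=2e-6, substrate_rows=15, layer_rows=3,
                   q_peak=2e14, beam_radius=30e-6, speed=0.5, cool_steps=500):
    """Deposit one powder layer on a substrate and scan it once; row 0 is the base plate."""
    if dt > RHO * CP * dx ** 2 / (4.0 * K_BY_STATE.max()):
        raise ValueError("time step exceeds the explicit stability limit")
    T = np.full((ny, nx), T_AMB)
    state = np.full((ny, nx), SOLID)
    active = np.zeros((ny, nx), dtype=bool)
    active[:substrate_rows] = True                  # existing substrate
    top = substrate_rows + layer_rows
    active[substrate_rows:top] = True               # element activation of the new layer,
    state[substrate_rows:top] = POWDER              # which starts as powder
    y, x = (np.mgrid[0:ny, 0:nx] + 0.5) * dx        # cell-centre coordinates
    T_peak = T.copy()
    scan_steps = int(nx * dx / (speed * dt))
    for n in range(scan_steps + cool_steps):
        if n < scan_steps:
            r2 = (x - speed * n * dt) ** 2 + (y - top * dx) ** 2
            q = q_peak * np.exp(-2.0 * r2 / beam_radius ** 2)
        else:
            q = np.zeros_like(T)                    # beam off: cooling only
        T, state = heat_step(T, state, active, q, dx, dt)
        T[0, :] = T_AMB                             # base plate held at ambient temperature
        T_peak = np.maximum(T_peak, T)
    return T, state, T_peak
```

Powder that melts never returns to the powder state, so the consolidated track can be read directly from the final `state` array.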

High-fidelity thermal analysis of the SLM process requires fine discretisation in time and space (e.g. an element size of 10–20 \(\upmu \text {m}\) and time increments of a few \(\upmu \text {s}\)), which hinders full-scale analysis of a real-size component because of the extremely high computational cost. However, this level of discretisation is only required near the melt pool, where temperature gradients are steep and thermal evolution rates are high. The proposed approach therefore splits the problem into two scales, local and global: the global calculations employ a coarse mesh and larger time increments to determine the temperature profiles in regions far from the melt pool, while fine-mesh local simulations adaptively follow the beam location and estimate the thermal profiles in the vicinity of the melt pool. Combining the results of the local simulations with the global solution provides reliable temperature predictions at a significantly reduced computational cost. Figure 3 shows the steps taken in this multiscale modelling approach.
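A 1D sketch of the local/global idea follows, under simplifying assumptions that are ours rather than the paper's: a coarse global grid advances with large increments, a fine window centred on the beam takes many small sub-steps with Dirichlet boundary values interpolated (in space and time) from the global solution, and the fine result is then written back to the global nodes inside the window. The window carries its previous fine solution forward where consecutive windows overlap, so melt-pool detail is not lost as it moves. All material data, grid sizes and the Gaussian source are hypothetical.

```python
import numpy as np

# Hypothetical material data (roughly steel-like), not taken from the paper.
RHO_CP = 7900.0 * 500.0          # volumetric heat capacity rho*c_p [J/(m^3 K)]
ALPHA = 25.0 / RHO_CP            # thermal diffusivity k/(rho*c_p) [m^2/s]
T_AMB = 300.0                    # initial and ambient temperature [K]


def source(x, xb, q_peak=5e13, radius=30e-6):
    """Gaussian volumetric heat input [W/m^3] centred on the beam position xb."""
    return q_peak * np.exp(-2.0 * (x - xb) ** 2 / radius ** 2)


def explicit_step(T, dx, dt, q):
    """Explicit Euler step of rho*c_p*T_t = k*T_xx + q on interior nodes; end nodes kept."""
    T_new = T.copy()
    T_new[1:-1] += dt * (ALPHA * (T[2:] - 2.0 * T[1:-1] + T[:-2]) / dx ** 2 + q[1:-1] / RHO_CP)
    return T_new


def local_global(length=2e-3, dx_g=50e-6, dt_g=50e-6, dx_l=5e-6, half_width=100e-6,
                 speed=0.5, x_start=0.5e-3, x_end=1.5e-3):
    x_g = np.arange(0.0, length + dx_g / 2, dx_g)
    T_g = np.full_like(x_g, T_AMB)
    n_sub = int(np.ceil(dt_g / (0.4 * dx_l ** 2 / ALPHA)))  # fine sub-steps per global step
    dt_l = dt_g / n_sub
    x_l_prev = T_l_prev = None
    peaks = []
    for n in range(int(round((x_end - x_start) / (speed * dt_g)))):
        t0 = n * dt_g
        x_mid = x_start + speed * (t0 + 0.5 * dt_g)          # window follows the beam
        x_l = np.arange(x_mid - half_width, x_mid + half_width + dx_l / 2, dx_l)
        # Initial local field: previous fine solution where windows overlap, else global.
        T_l = np.interp(x_l, x_g, T_g)
        if x_l_prev is not None:
            overlap = (x_l >= x_l_prev[0]) & (x_l <= x_l_prev[-1])
            T_l[overlap] = np.interp(x_l[overlap], x_l_prev, T_l_prev)
        # 1) Coarse global step over the whole domain.
        T_g_old = T_g
        T_g = explicit_step(T_g, dx_g, dt_g, source(x_g, x_start + speed * t0))
        # 2) Fine local sub-steps; window-edge values interpolated from the global steps.
        edges = x_l[[0, -1]]
        bc_old, bc_new = np.interp(edges, x_g, T_g_old), np.interp(edges, x_g, T_g)
        for s in range(n_sub):
            xb = x_start + speed * (t0 + s * dt_l)
            T_l = explicit_step(T_l, dx_l, dt_l, source(x_l, xb))
            w = (s + 1) / n_sub
            T_l[[0, -1]] = (1.0 - w) * bc_old + w * bc_new
        # 3) Overwrite global nodes inside the window with the fine solution.
        inside = (x_g >= x_l[0]) & (x_g <= x_l[-1])
        T_g[inside] = np.interp(x_g[inside], x_l, T_l)
        x_l_prev, T_l_prev = x_l, T_l
        peaks.append(T_l.max())
    return x_g, T_g, np.array(peaks)
```

The fine grid only ever spans the window (41 nodes here), independently of the domain length, which is the source of the saving.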

For concept verification, a computationally expensive finite element model that solves the whole simulation region using small time increments and a fine mesh is defined as the ‘reference’ solution. Comparing the multiscale results with the reference model allows both the retained accuracy and the reduction in computational cost to be assessed. For instance, for the 2D simulation of a 2 \(\times \) 2 mm\(^2\) SLM deposition, the nodal temperatures of the multiscale approach remain nearly identical to those of the reference solution, as observed in Fig. 4a. Meanwhile, adopting the multiscale approach reduces the computational cost by roughly an order of magnitude, as shown in Fig. 4b. Work on extending the adaptive multiscale idea to strongly reduce the computational cost of larger simulation domains is ongoing.
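A back-of-the-envelope node count shows where such a saving comes from. Assuming, purely for illustration, a uniform 10 \(\upmu\)m reference mesh on the 2 \(\times\) 2 mm\(^2\) domain, a 50 \(\upmu\)m global mesh and a single 0.2 \(\times\) 0.2 mm\(^2\) local window meshed at 10 \(\upmu\)m (these sizes are not taken from [12]):

```latex
\begin{aligned}
N_{\text{ref}} &= \left(\tfrac{2\,\text{mm}}{10\,\mu\text{m}} + 1\right)^2 = 201^2 = 40\,401,\\
N_{\text{ms}} &\approx \underbrace{\left(\tfrac{2\,\text{mm}}{50\,\mu\text{m}} + 1\right)^2}_{\text{global}}
  + \underbrace{\left(\tfrac{0.2\,\text{mm}}{10\,\mu\text{m}} + 1\right)^2}_{\text{local}}
  = 1\,681 + 441 = 2\,122,\\
N_{\text{ref}} / N_{\text{ms}} &\approx 19.
\end{aligned}
```

This node ratio applies per increment only. The overall saving also depends on the number of increments at each scale and on the cost of transferring data between scales, so it is not a prediction of the measured reduction.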

Although this strategy significantly decreases the computational cost, it is still neither affordable for current industrial use nor suitable for numerical process optimisation. The effectiveness of machine learning-based approaches in further reducing the cost of MAM numerical analyses is discussed in the following.

Applying the described multiscale approach to the thermal analysis of an SLM part involves thousands of local simulations. Owing to the repetitive and deterministic nature of the heat transfer problem, these numerous computationally expensive local finite element simulations can be replaced with a much cheaper surrogate (meta)model.

Fig. 4. (a) Comparison of temperature evolution at identical coordinates between the multiscale and reference simulations. (b) Comparison of calculation time for 2D simulations of the SLM process for square domains of various sizes. The labels show the size of the model in \(\text {mm}^2\). The horizontal axis is based on the number of nodes in the reference simulation [12].

A number of local thermal analyses were performed to generate temperature profiles in the vicinity of the melt pool for a range of build conditions. The generated data were then used to develop a cheap metamodel based on sparse polynomial chaos expansion (PCE) [13] combined with principal component analysis (PCA) [14] for dimensionality reduction. The effectiveness of PCE-based surrogate modelling has been demonstrated in a number of studies, e.g. [15,16,17]. PCE surrogate models are typically used to replace computationally expensive models with uncertain input parameters and to efficiently compute statistics of the uncertain model outputs. PCE theory is built on polynomials that are orthogonal with respect to the distribution of the probabilistic model input. The input-output mapping to be substituted by the metamodel is treated as a stochastic model on these data spaces and is expanded in terms of the orthogonal polynomial basis functions. Depending on the accuracy required, the infinite-dimensional expansion is truncated after a certain number of terms. During the training phase, the PCE coefficients for the given polynomial basis are determined from a set of training points and the corresponding model responses. Because of the high dimensionality of the data spaces (temperature data on a two-dimensional grid), PCA is used to reduce the dimensions of both the input and the result space. The PCE metamodel then models the dependence of the reduced result data on the reduced input data.
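The PCA-plus-PCE construction can be sketched with NumPy as follows. The truncated expansion is \(\hat{y}(\mathbf{z}) = \sum_{\boldsymbol{\alpha} \in \mathcal{A}} y_{\boldsymbol{\alpha}} \Psi_{\boldsymbol{\alpha}}(\mathbf{z})\), with \(\mathcal{A}\) the multi-indices of total degree at most \(p\) and \(\Psi_{\boldsymbol{\alpha}}\) products of orthonormal Legendre polynomials. Several simplifications are assumptions of this sketch: the reduced inputs are linearly rescaled to \([-1, 1]\) and treated as uniform, and the coefficients are fitted by ordinary least squares on the full basis rather than by the sparse, LARS-based selection of [13]. `synthetic_fields` is a hypothetical generator standing in for the local FE input and output temperature fields.

```python
import itertools

import numpy as np
from numpy.polynomial import legendre


def pca_fit(data, n_comp):
    """Mean and leading principal directions of row-wise samples."""
    mean = data.mean(axis=0)
    _, _, vt = np.linalg.svd(data - mean, full_matrices=False)
    return mean, vt[:n_comp]


def total_degree_indices(n_vars, degree):
    """Multi-indices alpha with |alpha| <= degree: the truncated PCE basis."""
    return [a for a in itertools.product(range(degree + 1), repeat=n_vars) if sum(a) <= degree]


def legendre_design(z, indices):
    """Psi[i, m] = prod_j P_{alpha_m[j]}(z[i, j]) with orthonormal Legendre polynomials P_k."""
    max_deg = max(max(a) for a in indices)
    p = [np.sqrt(2 * k + 1) * legendre.legval(z, np.eye(max_deg + 1)[k]) for k in range(max_deg + 1)]
    return np.column_stack([np.prod([p[k][:, j] for j, k in enumerate(a)], axis=0) for a in indices])


class PcaPceSurrogate:
    """PCA on inputs and outputs, least-squares PCE between the two reduced spaces."""

    def __init__(self, n_in, n_out, degree):
        self.n_in, self.n_out, self.degree = n_in, n_out, degree

    def _reduced_inputs(self, x):
        scores = (x - self.x_mean) @ self.x_dirs.T
        return 2.0 * (scores - self.lo) / (self.hi - self.lo) - 1.0   # map to [-1, 1]

    def fit(self, x, y):
        self.x_mean, self.x_dirs = pca_fit(x, self.n_in)
        self.y_mean, self.y_dirs = pca_fit(y, self.n_out)
        scores = (x - self.x_mean) @ self.x_dirs.T
        self.lo, self.hi = scores.min(axis=0), scores.max(axis=0)
        self.indices = total_degree_indices(self.n_in, self.degree)
        psi = legendre_design(self._reduced_inputs(x), self.indices)
        y_scores = (y - self.y_mean) @ self.y_dirs.T
        self.coeffs, *_ = np.linalg.lstsq(psi, y_scores, rcond=None)
        return self

    def predict(self, x):
        psi = legendre_design(self._reduced_inputs(x), self.indices)
        return (psi @ self.coeffs) @ self.y_dirs + self.y_mean   # back to full fields


def synthetic_fields(params, n=20):
    """Hypothetical stand-in for local-model input/output temperature fields on an n x n grid."""
    g = np.linspace(-1.0, 1.0, n)
    gx, gy = np.meshgrid(g, g)
    g1 = np.exp(-4.0 * (gx ** 2 + gy ** 2)).ravel()
    g2 = np.exp(-4.0 * ((gx - 0.3) ** 2 + gy ** 2)).ravel()
    g3 = (gx + 1.0).ravel()
    a, b = params[:, :1], params[:, 1:]
    inputs = 300.0 + 500.0 * a * g1 + 200.0 * b * g3
    outputs = 300.0 + 800.0 * a ** 2 * g1 + 300.0 * a * b * g2
    return inputs, outputs


rng = np.random.default_rng(1)
x_tr, y_tr = synthetic_fields(rng.random((30, 2)))
x_te, y_te = synthetic_fields(rng.random((100, 2)))
model = PcaPceSurrogate(n_in=2, n_out=2, degree=2).fit(x_tr, y_tr)
max_error = np.max(np.abs(model.predict(x_te) - y_te))
```

Because the synthetic outputs are exactly quadratic in the two latent parameters, a degree-2 expansion on two principal components reproduces unseen fields to round-off; real local-model data would leave a truncation error that has to be checked by validation.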

The metamodel is built to replace the local small-scale finite element simulations of the multiscale approach presented above. The results obtained with the metamodel can thus be integrated directly into the existing workflow for thermal modelling of the MAM process.

Validating the metamodel involves evaluating its ability to predict the temperature evolution for input settings that were not seen during the training phase. As an example, the SLM process of a 1 \(\times \) 1 mm\(^2\) block is considered. The multiscale simulation of this setup consists of 165 local models, of which only ten are used to train the surrogate model. The temperature evolution for the remaining local models is then predicted using the surrogate model, and a prediction error can be computed with the multiscale finite element simulation as the reference. Fig. 5 compares the nodal temperature from the surrogate model and the multiscale finite element simulation at a point in the centre of the deposition domain. Only minor differences between the two temperature evolution curves are visible. In this exemplary setting, the surrogate model results agree well with the finite element simulation results while being obtained at a much lower cost.
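The validation step can be expressed as a normalised error over the held-out local models. This sketch assumes the ten training models are drawn at random (the selection procedure is not described) and uses a relative mean-squared error, one common choice among several; both function names are hypothetical.

```python
import numpy as np


def split_local_models(n_models=165, n_train=10, seed=0):
    """Randomly pick training local models; all remaining models are held out."""
    idx = np.random.default_rng(seed).permutation(n_models)
    return np.sort(idx[:n_train]), np.sort(idx[n_train:])


def relative_validation_error(y_true, y_pred):
    """Squared prediction error normalised by the spread of the reference results.

    y_true, y_pred: arrays (n_models, n_outputs), e.g. nodal temperature histories.
    0 means a perfect surrogate; 1 means no better than predicting the mean response.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    residual = np.sum((y_true - y_pred) ** 2)
    spread = np.sum((y_true - y_true.mean(axis=0)) ** 2)
    return float(residual / spread)
```

Normalising by the spread of the reference results makes the error comparable across build conditions with very different temperature levels.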

4 Concluding Remarks

This study aims to use the potential of multiscale finite element simulations in conjunction with machine learning algorithms to create a framework for reliable component-scale simulations of MAM. First, since the complex multi-physics phenomena are concentrated in the vicinity of the melt pool, numerical simulations can be separated into different scales to reduce computational cost while maintaining the fine discretisation required to capture the physical developments during the SLM process. Second, the deterministic nature of the phenomena involved in MAM allows the repetitive small-scale local finite element simulations to be replaced by a surrogate model once enough training data have been generated through FE simulations.

The proposed strategy has been applied to the thermal simulation of the SLM process, where it demonstrated its efficiency in reliably predicting temperature profiles at a significantly reduced computational cost. Extension of the proposed modelling strategy to radically improve the efficiency of other types of MAM simulations is under investigation.

References

1. Wohlers, T., Gornet, T.: History of additive manufacturing. Wohlers Rep. 24(2014), 118 (2014)

2. Manriquez-Frayre, J.A., Bourell, D.L.: Selective laser sintering of binary metallic powder (1990)

3. Sames, W.J., List, F.A., Pannala, S., Dehoff, R.R., Babu, S.S.: The metallurgy and processing science of metal additive manufacturing. Int. Mater. Rev. 61(5), 315–360 (2016)

4. Barroqueiro, B., Andrade-Campos, A., Valente, R., Neto, V.: Metal additive manufacturing cycle in aerospace industry: a comprehensive review. J. Manuf. Mater. Process. 3(3), 52 (2019)

5. Mellor, S., Hao, L., Zhang, D.: Additive manufacturing: a framework for implementation. Int. J. Prod. Econ. 149, 194–201 (2014)

6. Hague, R., Mansour, S., Saleh, N.: Material and design considerations for rapid manufacturing. Int. J. Prod. Res. 42(22), 4691–4708 (2004)

7. Huang, Y., Leu, M.C., Mazumder, J., Donmez, A.: Additive manufacturing: current state, future potential, gaps and needs, and recommendations. J. Manuf. Sci. Eng. 137(1), 014001 (2015)

8. Mani, M., Lane, B., Donmez, A., Feng, S., Moylan, S., Fesperman, R.: Measurement science needs for real-time control of additive manufacturing powder bed fusion processes. Technical report NIST IR 8036, National Institute of Standards and Technology, February 2015

9. Arrizubieta, J.I., Lamikiz, A., Cortina, M., Ukar, E., Alberdi, A.: Hardness, grainsize and porosity formation prediction on the Laser Metal Deposition of AISI 304 stainless steel. Int. J. Mach. Tools Manuf. 135, 53–64 (2018)

10. Markl, M., Körner, C.: Multiscale modeling of powder bed-based additive manufacturing. Annu. Rev. Mater. Res. 46(1), 93–123 (2016)

11. Schöbi, R.: Surrogate models for uncertainty quantification in the context of imprecise probability modelling. IBK Bericht 505 (2019)

12. Scheel, P., Mazza, E., Hosseini, E.: Adaptive local-global multiscale approach for thermal simulation of the selective laser melting process (Under Review)

13. Blatman, G., Sudret, B.: Adaptive sparse polynomial chaos expansion based on least angle regression. J. Comput. Phys. 230(6), 2345–2367 (2011)

14. Jolliffe, I.T.: Principal Component Analysis. Springer, Heidelberg (2002)

15. Euclid Collaboration, Knabenhans, M., Stadel, J., Marelli, S., Potter, D., Teyssier, R., Legrand, L., Schneider, A., Sudret, B., Blot, L., et al.: Euclid preparation: II. The EuclidEmulator, a tool to compute the cosmology dependence of the nonlinear matter power spectrum. Mon. Not. R. Astron. Soc. 484(4), 5509–5529 (2019)

16. Torre, E., Marelli, S., Embrechts, P., Sudret, B.: Data-driven polynomial chaos expansion for machine learning regression. J. Comput. Phys. 388, 601–623 (2019)

17. Nagel, J.B., Rieckermann, J., Sudret, B.: Principal component analysis and sparse polynomial chaos expansions for global sensitivity analysis and model calibration: application to urban drainage simulation. Reliab. Eng. Syst. Saf. 195, 106737 (2020)


Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Hosseini, E., Scheel, P., Keller, F., Marelli, S., Mazza, E. (2021). Deploying Artificial Intelligence for Component-Scale Multi-physical Field Simulation of Metal Additive Manufacturing. In: Meboldt, M., Klahn, C. (eds) Industrializing Additive Manufacturing. AMPA 2020. Springer, Cham. https://doi.org/10.1007/978-3-030-54334-1_19


DOI: https://doi.org/10.1007/978-3-030-54334-1_19


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-54333-4

Online ISBN: 978-3-030-54334-1

eBook Packages: Engineering, Engineering (R0)