Abstract

The Anterior Cruciate Ligament (ACL) tear is a common medical condition that is treated arthroscopically by pulling a tissue graft through a tunnel opened with a drill. The correct anatomical position and orientation of this tunnel are crucial for knee stability, and drilling an adequate bone tunnel is the most technically challenging part of the procedure. This paper presents, for the first time, a guidance system based solely on intra-operative video for guiding the drilling of the tunnel. Our solution uses small, easily recognizable visual markers that are attached to the bone and tools for estimating their relative pose. A recent registration algorithm is employed for aligning a pre-operative image of the patient’s anatomy with a set of contours reconstructed by touching the bone surface with an instrumented tool. Experimental validation using ex-vivo data shows that the method enables the accurate registration of the pre-operative model with the bone, providing useful information for guiding the surgeon during the medical procedure.


1 Introduction

Arthroscopy is a modality of orthopaedic surgery for the treatment of damaged joints in which instruments and an endoscopic camera (the arthroscope) are inserted into the articular cavity through small incisions (the surgical ports). Since arthroscopy largely preserves the integrity of the articulation, it benefits the patient by reducing trauma, risk of infection and recovery time [17]. However, arthroscopic approaches are more difficult to execute than the open surgery alternatives because of the indirect visualization and limited manoeuvrability inside the joint, with novices having to undergo a long training period [14] and experts often making mistakes with clinical consequences [16].

Fig. 1. (a) 3D digitalisation of the bone surface: the surgeon performs a random walk on the intercondylar region using a touch-probe instrumented with a visual marker with the objective of reconstructing 3D curves on the bone surface. (b) Overlay of the pre-operative MRI with highlight of intercondylar arch: the reconstructed 3D curves are used to register the pre-operative MRI with the patient anatomy.

The reconstruction of the Anterior Cruciate Ligament (ACL) illustrates the aforementioned situation well. ACL rupture is a common medical condition with more than 200 000 annual cases in the USA alone [16]. The standard treatment is arthroscopic reconstruction, where the torn ligament is replaced by a tissue graft that is pulled into the knee joint through tunnels opened with a drill in both femur and tibia [3]. Opening these tunnels in an anatomically correct position is crucial for knee stability and patient satisfaction, with the ideal graft being placed in exactly the same position as the original ligament to maximize proprioception [1]. Unfortunately, ligament position varies significantly across individuals, and substantial effort has been made to model this variance and provide anatomic references to be used during surgery [5]. However, correct tunnel placement is still a matter of experience, with success rates varying broadly between low and high volume surgeons [16]. Some studies reveal levels of satisfaction of only 75% with an incidence of revision surgeries of 10 to 15%, half of which are caused by deficient technical execution [16].

This is a scenario where Computer-Aided Surgery (CAS) can have an impact. Two types of navigation systems are reported in the literature: those that use intra-operative fluoroscopy [3], and those that rely on optical tracking to register a pre-operative CT/MRI or perform 3D bone morphing [6]. Although these systems have been available for several years, their market penetration is residual because of their inaccuracy and inconvenience [6]. The added value of fluoroscopy-based systems does not compensate for the risk of radiation overdose, while optical tracking systems require additional incisions to attach markers, which hinders acceptance because the purpose of arthroscopy is to minimize incisions. The ideal solution for Computer-Aided Arthroscopy (CAA) should essentially rely on processing the already existing intra-operative video. This would avoid the above-mentioned issues and promote cost efficiency by not requiring additional capital equipment. Despite the intense research in CAS using endoscopic video [11], arthroscopic sequences are especially challenging because of poor texture, the existence of deformable tissues, complex illumination, and very close range acquisition. In addition, the camera is hand-held, the lens scope rotates, the procedure is performed in a wet medium and the surgeon often switches camera port. Our attempts at using visual SLAM pipelines reported to work in laparoscopy [10] were unfruitful and revealed the need for additional visual aids to accomplish the robustness required for real clinical uptake.

This article describes the first video-based system for CAA, where visual information is used to register a pre-operative CT/MRI with the patient anatomy such that tunnels can be opened in the position of the original ligament (patient specific surgery). The concept relates to previous works in CAS for laparoscopy that visually track a planar pattern engraved in a projector to determine its 3D pose [4]. We propose to attach similar fiducial markers to both anatomy and instruments and use the moving arthroscope to estimate the relative rigid displacements at each frame time instant. The scheme enables accurate 3D reconstruction of the bone surface with a touch-probe (Fig. 1(a)), which is used to accomplish registration of the pre-operative 3D model or plan (Fig. 1(b)). The marker of the femur (or tibia) works as the world reference frame where all 3D information is stored, which makes it possible to quickly resume navigation after switching camera port and to overlay guidance information in images using augmented reality techniques. The paper describes the main modules of the real-time software pipeline and reports results in both synthetic and real ex-vivo experiments.

2 Video-Based Computer-Aided Arthroscopy

This section overviews the proposed concept for CAA that uses the intra-operative arthroscopic video, together with planar visual markers attached to instruments and anatomy, to perform tracking and 3D pose estimation inside the articular joint. As discussed, applying conventional SLAM/SfM algorithms [10] to arthroscopic images is extremely challenging and, in order to circumvent the difficulties, we propose to use small planar fiducial markers that can be easily detected in images and whose pose can be estimated using homography factorization [2, 9]. These visual aids provide the robustness and accuracy required for deployment in a real arthroscopic scenario, which would otherwise be impossible. The key steps of the approach are illustrated in Fig. 2 and described next.

The Anatomy Marker WM: The surgeon starts by rigidly attaching a screw-like object with a flat head that has an engraved, known square pattern with a 4 mm side. We will refer to this screw as the World Marker (WM) because the local reference frame of its pattern will define the coordinate system with respect to which all 3D information is described. The WM can be placed in an arbitrary position on the intercondylar surface, provided that it can be easily seen by the arthroscope during the procedure. The placement of the marker is accomplished using a custom-made tool that can be seen in the accompanying video.

3D Pose Estimation Inside the Articular Joint: The 3D pose \(\mathsf {C}\) of the WM in camera coordinates can be determined at each time instant by detecting the WM in the image, estimating the plane-to-image homography from the 4 detected corners of its pattern and decomposing the homography to obtain the rigid transformation [2, 9]. Consider a touch probe that is also instrumented with another planar pattern that can be visually read. Using a similar method, it is possible to detect and identify the tool marker (TM) in the image and compute its 3D pose \(\mathsf {\hat{T}}\) with respect to the camera. This allows the pose \(\mathsf {T}\) of the TM in WM coordinates to be determined in a straightforward manner by \(\mathsf {T} = \mathsf {C}^{-1}\mathsf {\hat{T}}\).
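The two operations described above can be illustrated with a minimal NumPy sketch. It assumes an ideal pinhole camera with known intrinsics `K` and no lens distortion, and a homography `H` that maps marker-plane coordinates to pixels; the function names `pose_from_homography` and `relative_pose` are hypothetical and are not taken from the paper or from the ARAM library. The factorization shown is the textbook one and omits the refinement steps a real system would need.

```python
import numpy as np

def pose_from_homography(K, H):
    """Factorize a plane-to-image homography H ~ K [r1 r2 t] into a 4x4 pose.

    Assumes an ideal pinhole camera (no distortion); H is defined up to scale.
    """
    B = np.linalg.inv(K) @ H
    # Remove the unknown scale: the first two columns must be unit vectors.
    scale = 2.0 / (np.linalg.norm(B[:, 0]) + np.linalg.norm(B[:, 1]))
    B = B * scale
    # The marker has to be in front of the camera (positive depth).
    if B[2, 2] < 0:
        B = -B
    r1, r2, t = B[:, 0], B[:, 1], B[:, 2]
    R_approx = np.column_stack((r1, r2, np.cross(r1, r2)))
    # Project onto the closest rotation matrix, since noise breaks orthonormality.
    U, _, Vt = np.linalg.svd(R_approx)
    R = U @ np.diag([1.0, 1.0, np.linalg.det(U @ Vt)]) @ Vt
    pose = np.eye(4)
    pose[:3, :3] = R
    pose[:3, 3] = t
    return pose

def relative_pose(C, T_hat):
    """Pose of the tool marker in WM coordinates: T = C^{-1} T_hat."""
    return np.linalg.inv(C) @ T_hat
```

Because both `C` and `T_hat` are measured in the same frame, the camera motion cancels out in `relative_pose`, which is why the arthroscope can move freely while the tool is tracked.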

3D Reconstruction of Points and Contours on Bone Surface: The location of the tip of the touch probe in the local TM reference frame is known, meaning that its location w.r.t. the WM can be determined using \(\mathsf {T}\). A point on the surface can be determined by touching it with the touch probe. A curve and/or sparse bone surface reconstruction can be accomplished in a similar manner by performing a random walk.
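As a rough illustration of this step, the sketch below maps the probe tip from the TM frame to the WM frame for every video frame in which both markers are visible. The names `tip_in_world` and `reconstruct_curve`, and the representation of each frame as a pair of 4x4 poses, are assumptions made for the example.

```python
import numpy as np

def tip_in_world(C, T_hat, tip_tm):
    """Express the probe tip (known in TM coordinates) in WM coordinates.

    C and T_hat are the 4x4 camera-frame poses of the WM and TM for one frame.
    """
    T = np.linalg.inv(C) @ T_hat           # pose of the TM in WM coordinates
    return (T @ np.append(tip_tm, 1.0))[:3]

def reconstruct_curve(frame_poses, tip_tm):
    """Stack the tip position of every frame into an (N, 3) curve in WM coordinates."""
    return np.array([tip_in_world(C, T_hat, tip_tm) for C, T_hat in frame_poses])
```

Since every point is stored in WM coordinates, points collected before and after a change of camera port end up in the same reference frame.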

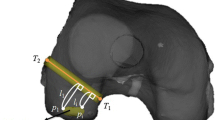

3D Registration and Guidance: The 3D reconstruction results are used to register the 3D pre-operative model, enabling the plan to be overlaid on the anatomy. We will discuss in more detail how this registration is accomplished in the next section. The tunnel can be opened using a drill guide instrumented with a distinct visual marker, with the location of its symmetry axis known in the marker’s reference frame. This way, the location of the drill guide w.r.t. the pre-operative plan is known, providing real-time guidance to the surgeon, as shown in the accompanying video.
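One possible way to turn this information into a guidance readout is sketched below. The paper does not specify which quantities are shown to the surgeon, so the two metrics used here (angle between the drill axis and the planned tunnel axis, and distance from the planned entry point to the drill axis) are assumptions, as are the function names. The planned tunnel is assumed to be already expressed in WM coordinates through the registration.

```python
import numpy as np

def axis_in_world(T, point_m, direction_m):
    """Map the drill-guide axis (a point and a direction in its marker frame) to WM coordinates."""
    R, t = T[:3, :3], T[:3, 3]
    d = R @ direction_m
    return R @ point_m + t, d / np.linalg.norm(d)

def guidance_errors(axis_point, axis_dir, plan_entry, plan_dir):
    """Return (angle in degrees, point-to-line distance) between drill axis and planned tunnel."""
    plan_dir = plan_dir / np.linalg.norm(plan_dir)
    # An axis has no preferred sign, so the absolute value of the cosine is used.
    cos_angle = np.clip(abs(axis_dir @ plan_dir), 0.0, 1.0)
    angle_deg = np.degrees(np.arccos(cos_angle))
    offset = plan_entry - axis_point
    # Remove the component along the axis to get the perpendicular distance.
    distance = np.linalg.norm(offset - (offset @ axis_dir) * axis_dir)
    return angle_deg, distance
```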

This paper will not detail the guidance process, since it follows directly once registration is accomplished. Also note that the article refers solely to the placement of the femoral tunnel. The placement of the tibial tunnel is similar, requiring the attachment of its own WM.

3 Surgical Workflow and Algorithmic Modules

The steps of the complete surgical workflow are given in Fig. 3(a). An initial camera calibration using 3 images of a checkerboard pattern with the lens scope at different rotation angles is performed. Then, the world marker is rigidly attached to the anatomy and 3D points on the bone surface are reconstructed by scratching it with an instrumented touch probe. While the points are being reconstructed and using the pre-operative model, the system performs an on-the-fly registration that allows the drilling of the tunnel to be guided. Guidance information is given using augmented reality, by overlaying the pre-operative plan with the anatomy in real time, and using virtual reality, by continuously showing the location of the drill guide in the model reference frame. As a final step, the WM must be removed. Details are given below.

Fig. 3. (a) Different steps of the surgical workflow and (b) the rigid transformation \(\mathsf {R}, \mathbf {t}\) is determined by searching for pairs of points \(\mathbf {P}, \mathbf {Q}\) with tangents \(\mathbf {p},\mathbf {q}\) on the curve side that are a match with pairs of points \(\mathbf {\hat{P}}, \mathbf {\hat{Q}}\) with normals \(\mathbf {\hat{p}}, \mathbf {\hat{q}}\) on the surface side.

Calibration in the OR: Since the camera has exchangeable optics, calibration must be performed in the OR. In addition, the lens scope rotates during the procedure, meaning that the intrinsics must be adapted on the fly for greater accuracy. This is accomplished using an implementation of the method described in [13]. Calibration is done by collecting 3 images while rotating the scope to determine the intrinsics, radial distortion and center of rotation. To facilitate the process, acquisition is carried out in a dry environment and adaptation to the wet environment is performed by multiplying the focal length by the ratio of the refractive indices [7].
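The dry-to-wet adaptation amounts to a single scaling of the focal length, as the following sketch shows. The refractive index values (about 1.333 for water and 1.0 for air) are typical textbook figures and an assumption here, since the paper does not state the values it uses; the function name is hypothetical, and the sketch leaves the distortion parameters and the principal point untouched.

```python
import numpy as np

def adapt_intrinsics_to_wet(K_dry, n_wet=1.333, n_dry=1.0):
    """Scale the focal length of a dry calibration by the ratio of refractive indices.

    n_wet and n_dry are assumed values (water and air); the principal point is kept.
    """
    K_wet = np.array(K_dry, dtype=float)   # copy, so the dry calibration is preserved
    ratio = n_wet / n_dry
    K_wet[:2, :2] *= ratio                 # fx, fy and skew all scale with the focal length
    return K_wet
```

For example, a dry focal length of 1000 pixels becomes about 1333 pixels in the wet medium under these assumed indices.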

Marker Detection and Pose Estimation: There are several publicly available libraries for augmented reality that implement the process of detection, identification and pose estimation of square markers. We opted for the ARAM library [2] and, for better accuracy, also used a photogeometric refinement step as described in [12] with the extension to accommodate radial distortion as in [8], making possible the accommodation of variable zoom in a future version.

Registration: Registration is accomplished using the method in [15], which uses a pair of points \(\mathbf {P}, \mathbf {Q}\) with tangents \(\mathbf {p},\mathbf {q}\) from the curve that matches a pair of points \(\mathbf {\hat{P}}, \mathbf {\hat{Q}}\) with normals \(\mathbf {\hat{p}}, \mathbf {\hat{q}}\) on the surface to determine the alignment transformation (Fig. 3(b)). The search for correspondences is performed using a set of conditions that also depend on the differential information. Global registration is accomplished using a hypothesise-and-test framework.
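To make the "test" half of a hypothesise-and-test scheme concrete, the sketch below scores one candidate transformation \(\mathsf {R}, \mathbf {t}\) by counting curve points that land close to the surface with a tangent roughly orthogonal to the local surface normal. This is a generic inlier count written for illustration and is not the matching procedure of [15]; the function name, the tolerances and the brute-force nearest-neighbour search are all assumptions.

```python
import numpy as np

def score_hypothesis(R, t, curve_pts, curve_tangents, surf_pts, surf_normals,
                     dist_tol=1.0, dot_tol=0.2):
    """Count curve points consistent with the surface under the candidate (R, t).

    curve_* are (N, 3) arrays in WM coordinates, surf_* are (M, 3) arrays in
    model coordinates; tangents and normals are assumed to be unit vectors.
    """
    moved = curve_pts @ R.T + t
    tangents = curve_tangents @ R.T
    # Brute-force nearest surface point for every transformed curve point.
    d2 = ((moved[:, None, :] - surf_pts[None, :, :]) ** 2).sum(axis=2)
    nearest = d2.argmin(axis=1)
    dist = np.sqrt(d2[np.arange(len(moved)), nearest])
    # A curve lying on the surface has tangents orthogonal to the surface normals.
    dots = np.abs(np.einsum("ij,ij->i", tangents, surf_normals[nearest]))
    return int(((dist < dist_tol) & (dots < dot_tol)).sum())
```

In such a scheme, the hypothesis with the highest score would be kept and typically refined afterwards; a spatial index would replace the brute-force search for realistic model sizes.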

Instruments and Hardware Setup: The software runs on a PC that is connected between the camera tower and the display. The PC is equipped with an Intel Core i7 4790 CPU, a Datapath Limited DGC167 frame grabber and an NVIDIA GeForce GTX950 GPU, and was able to run the pipeline in HD format at 60 fps with a latency of 3 frames (50 ms). In addition, we built the markers, the custom screw removal tool, the touch probe and the drill guide that can be seen in the video.

4 Experiments

This section reports experiments that assess the performance of two key features of the presented system: the compensation of the camera’s intrinsics according to the rotation of the lens and the registration of the pre-operative model with the patient’s anatomy. Tests are performed both in the laboratory and using ex-vivo data.

4.1 Lens Rotation

This experiment assesses the accuracy of the algorithm for compensating the camera’s intrinsics according to the scope’s rotation. We performed an initial camera calibration using 3 images of a calibration grid with the scope at 3 different rotation angles, which are represented with red lines in the image on the left of Fig. 4. We then acquired a 500-frame video sequence, in a wet environment, of a ruler with two square markers of 2.89 mm side placed 10 mm apart. The rotation of the scope performed during the acquisition of the video is quantified in the plot on the right of Fig. 4, which shows that the total amount of rotation was more than 140\(^\circ \). The lens mark, shown in greater detail in Fig. 4, is detected in each frame for compensating the intrinsics. The accuracy of the method is evaluated by computing the relative pose between the two markers in each frame and comparing it with the ground truth pose. The low rotation and translation errors show that the algorithm properly handles lens rotation.
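The comparison with the ground truth pose can be sketched as follows. The paper does not state which error metrics it uses, so the two common choices below (the angle of the residual rotation and the Euclidean distance between translations) are assumptions, as is the function name.

```python
import numpy as np

def pose_errors(T_est, T_gt):
    """Rotation error (degrees) and translation error between two 4x4 relative poses."""
    R_residual = T_est[:3, :3].T @ T_gt[:3, :3]
    # The angle of a rotation matrix follows from its trace.
    cos_angle = np.clip((np.trace(R_residual) - 1.0) / 2.0, -1.0, 1.0)
    rotation_error_deg = np.degrees(np.arccos(cos_angle))
    translation_error = np.linalg.norm(T_est[:3, 3] - T_gt[:3, 3])
    return rotation_error_deg, translation_error
```

The translation error is returned in the units of the poses, which would be millimetres if the marker side length is given in millimetres.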

4.2 3D Registration

The first test of the registration method was performed on a dry model and consisted of reconstructing 10 different sets of curves by scratching the rear surface of the lateral condyle with an instrumented touch probe, and registering them with the virtual model shown in Fig. 5(a), providing 10 different rigid transformations. A qualitative assessment (Fig. 5(c)) of the registration accuracy is provided by representing the anatomical landmarks and the control points of Fig. 5(a) in WM coordinates using the obtained transformations. The centroid of each point cloud obtained by transforming the control points is computed, and the RMS distance between the transformed points and the corresponding centroid is shown in Fig. 5(f), providing a quantitative assessment of the registration accuracy. All the trials provided very similar results, with the landmarks and control points being almost perfectly aligned in Fig. 5(c) and all RMS distances being below 0.9 mm, despite the control points belonging to regions that are very distant from the reconstructed area.
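The quantitative measure can be sketched as below, under the reading that each control point yields one point cloud made of its positions under all trial transformations. That reading, the function name and the array layout are assumptions made for the example.

```python
import numpy as np

def control_point_rms(transforms, control_pts):
    """RMS distance to the centroid for each control point across registration trials.

    transforms: sequence of 4x4 model-to-WM transformations, one per trial.
    control_pts: (M, 3) array of control points in model coordinates.
    Returns an (M,) array with one RMS value per control point.
    """
    homog = np.hstack([control_pts, np.ones((len(control_pts), 1))])
    # clouds[i, j] is control point j mapped by trial i.
    clouds = np.stack([(T @ homog.T).T[:, :3] for T in transforms])
    centroids = clouds.mean(axis=0)
    squared = ((clouds - centroids) ** 2).sum(axis=2)
    return np.sqrt(squared.mean(axis=0))
```

A value of zero means that all trials place the control point at exactly the same location, so the measure captures repeatability across trials rather than error against an external ground truth.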

The second experiment was performed on ex-vivo data and followed a similar strategy to the one on the dry model, the difference being that the total number of trials was 4. Figure 5(d) illustrates the setup of the ex-vivo experiment and Figs. 5(e) and (f) show the qualitative and quantitative analyses of the obtained result. Results show a slight degradation in accuracy w.r.t. the dry model test, which is expected since the latter is a more controlled environment. However, the obtained accuracy is very satisfactory, with the RMS distances of all control points being below 2 mm. This experiment demonstrates that our proposed system is very accurate in aligning the anatomy with a pre-operative model of the bone, enabling reliable guidance of the ACL reconstruction procedure.

5 Conclusions

This paper presents the first video-based navigation system for ACL reconstruction. The software is able to handle unconstrained lens rotation and register pre-operative 3D models with the patient’s anatomy with high accuracy, as demonstrated by the experiments performed both on a dry model and using ex-vivo data. This allows the complete medical procedure to be guided, leading not only to a significantly shorter learning curve but also to the avoidance of technical mistakes. As future work, we will be targeting other procedures that might benefit from navigation, such as resection of Femoroacetabular Impingement during hip arthroscopy.

References

1. Barrett, D.S.: Proprioception and function after anterior cruciate reconstruction. J. Bone Joint Surg. Br. 73(5), 833–837 (1991)

2. Belhaoua, A., Kornmann, A., Radoux, J.: Accuracy analysis of an augmented reality system. In: ICSP (2014)

3. Brown, C.H., Spalding, T., Robb, C.: Medial portal technique for single-bundle anatomical anterior cruciate ligament (ACL) reconstruction. Int. Orthop. 37, 253–269 (2013)

4. Edgcumbe, P., Pratt, P., Yang, G.-Z., Nguan, C., Rohling, R.: Pico lantern: a pick-up projector for augmented reality in laparoscopic surgery. In: Golland, P., Hata, N., Barillot, C., Hornegger, J., Howe, R. (eds.) MICCAI 2014. LNCS, vol. 8673, pp. 432–439. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10404-1_54

5. Forsythe, B., et al.: The location of femoral and tibial tunnels in anatomic double-bundle anterior cruciate ligament reconstruction analyzed by three-dimensional computed tomography models. J. Bone Joint Surg. Am. 92, 1418–1426 (2010)

6. Kim, Y.: Registration accuracy enhancement of a surgical navigation system for anterior cruciate ligament reconstruction: a phantom and cadaveric study. Knee 24, 329–339 (2017)

7. Lavest, J.M., Rives, G., Lapreste, J.T.: Dry camera calibration for underwater applications. MVA 13, 245–253 (2003)

8. Lourenço, M., Barreto, J.P., Fonseca, F., Ferreira, H., Duarte, R.M., Correia-Pinto, J.: Continuous zoom calibration by tracking salient points in endoscopic video. In: Golland, P., Hata, N., Barillot, C., Hornegger, J., Howe, R. (eds.) MICCAI 2014. LNCS, vol. 8673, pp. 456–463. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10404-1_57

9. Ma, Y., Soatto, S., Kosecka, J., Sastry, S.: An Invitation to 3-D Vision: From Images to Geometric Models. Springer, Heidelberg (2004). https://doi.org/10.1007/978-0-387-21779-6

10. Mahmoud, N., et al.: ORBSLAM-based endoscope tracking and 3D reconstruction. In: Peters, T., et al. (eds.) CARE 2016. LNCS, vol. 10170, pp. 72–83. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-54057-3_7

11. Maier-Hein, L., et al.: Optical techniques for 3D surface reconstruction in computer-assisted laparoscopic surgery. Med. Image Anal. 17, 974–996 (2013)

12. Mei, C., Benhimane, S., Malis, E., Rives, P.: Efficient homography-based tracking and 3-D reconstruction for single-viewpoint sensors. T-RO 24(6), 1352–1364 (2008)

13. Melo, R., Barreto, J.P., Falcao, G.: A new solution for camera calibration and real-time image distortion correction in medical endoscopy-initial technical evaluation. TBE 59, 634–644 (2012)

14. Nawabi, D.H., Mehta, N.: Learning curve for hip arthroscopy steeper than expected. J. Hip. Preserv. Surg. 3(suppl. 1) (2000)

15. Raposo, C., Barreto, J.P.: 3D registration of curves and surfaces using local differential information. In: CVPR (2018)

16. Samitier, G., Marcano, A.I., Alentorn-Geli, E., Cugat, R., Farmer, K.W., Moser, M.W.: Failure of anterior cruciate ligament reconstruction. ABJS 3, 220–240 (2015)

17. Treuting, R.: Minimally invasive orthopedic surgery: arthroscopy. Ochsner J. 2, 158–163 (2000)


Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Raposo, C. et al. (2018). Video-Based Computer Aided Arthroscopy for Patient Specific Reconstruction of the Anterior Cruciate Ligament. In: Frangi, A., Schnabel, J., Davatzikos, C., Alberola-López, C., Fichtinger, G. (eds) Medical Image Computing and Computer Assisted Intervention – MICCAI 2018. MICCAI 2018. Lecture Notes in Computer Science, vol 11073. Springer, Cham. https://doi.org/10.1007/978-3-030-00937-3_15


DOI: https://doi.org/10.1007/978-3-030-00937-3_15


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-00936-6

Online ISBN: 978-3-030-00937-3

eBook Packages: Computer Science, Computer Science (R0)