Abstract

Purpose: To automatically segment neurosensory retinal detachment (NRD)-associated subretinal fluid in spectral-domain optical coherence tomography (SD-OCT) images by constructing a Hessian-based aggregate generalized Laplacian of Gaussian (HAgLoG) algorithm that does not require retinal layer segmentation. Methods: Each B-scan is first filtered into small blob candidate regions based on local convexity by aggregating the log-scale-normalized convolution responses of the individual gLoG filters. Two Hessian-based regional features are then extracted from the aggregate response map. Together with regional intensity, these features are fed into an unsupervised clustering algorithm. The initial subretinal fluid regions are obtained by voting the blob candidates into superpixels. Finally, an active contour with a narrowband implementation is used to obtain integrated segmentations. Results: A test set of 23 longitudinal SD-OCT cube scans from 12 eyes of 12 patients was used to evaluate the proposed algorithm. Compared with the manual segmentations of two independent experts, our algorithm obtained a mean true positive volume fraction of 95.15%, a positive predictive value of 93.65% and a Dice similarity coefficient of 94.35%. Conclusions: Without retinal layer segmentation, the proposed algorithm can produce higher segmentation accuracy than state-of-the-art methods that rely on retinal layer segmentation results. Our model may provide reliable subretinal fluid segmentations for NRD from SD-OCT images.

This work was supported by the Natural Science Foundation of Jiangsu Province under Grant No. BK20180069, and the National Natural Science Foundation of China under Grants Nos. 61671242, 61701192, 61701222 and 61473310.

Keywords

- Spectral domain optical coherence tomography

- Subretinal fluid segmentation

- Neurosensory retinal detachment

- Blob segmentation

1 Introduction

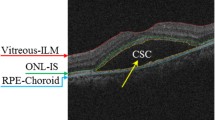

As a chronic disease, central serous chorioretinopathy (CSCR) is one of the leading threats to vision among middle-aged men. Serous retinal detachments such as neurosensory retinal detachment (NRD) are generally present in CSCR and are treated as one of its prominent characteristics. Spectral-domain optical coherence tomography (SD-OCT) can generate 3D cubes and provide more detailed characteristics of disease phenotypes, and it has become an important imaging modality for the diagnosis and treatment of CSCR [1]. Because retinal detachment is the separation of the neurosensory retina (NSR) from the underlying retinal pigment epithelium (RPE), state-of-the-art algorithms principally segment NRD regions based on layer segmentation results. For example, Wang et al. [2] utilized a fuzzy level-set method to identify the boundaries, and Wu et al. [3] presented an OCT Enface fundus-driven subretinal fluid segmentation method. Semi-supervised segmentation methods have also been utilized: Zheng et al. [4] combined computerized segmentation with minimal expert interaction, and Wang et al. [5] proposed a slice-wise label propagation algorithm. Supervised machine learning methods, including random forests [6] and deep learning models [7], have been introduced by treating fluid segmentation as a binary classification problem. However, most state-of-the-art algorithms rely on the accuracy of retinal layer segmentation.

Because subretinal fluid regions in SD-OCT images are typically darker than their surroundings, they can be treated as blob-like structures, which can be handled efficiently by blob detection algorithms based on scale-space theory. One of the most widely used blob detection algorithms is the Laplacian of Gaussian (LoG). The generalized Laplacian of Gaussian (gLoG) [8] was developed to detect asymmetric structures. To further exploit the convexity and elliptic shape of the blobs, the Hessian-based LoG (HLoG) [9] and the Hessian-based Difference of Gaussians (HDoG) [10] were proposed. Most of the algorithms mentioned above detect candidate blobs by searching for local optima over a large range of scales in the multi-scale Gaussian scale-space, which is time consuming and sensitive to noise.

In this paper, an unsupervised blob segmentation algorithm is proposed to segment subretinal fluid in SD-OCT images. First, a Hessian-based aggregate generalized Laplacian of Gaussian (HAgLoG) algorithm is proposed by aggregating the log-scale-normalized convolution responses of each individual gLoG filter. Second, two regional features, i.e. blobness and flatness, are extracted from the aggregate response map. Third, together with the intensity values, the features are fed into a variational Bayesian Gaussian mixture model (VBGMM) [11] to obtain the blob candidates, which are voted into superpixels to get the initial subretinal fluid regions. Finally, an active contour model driven by local likelihood image fitting energy (LLIF) [12] with a narrowband implementation is utilized to obtain integrated segmentations. Without retinal layer segmentation, the proposed algorithm can obtain higher segmentation accuracy than state-of-the-art methods that rely on retinal layer segmentation results.

2 Methodology

Figure 1 illustrates the pipeline of our framework. In the preprocessing phase, the B-scan shown in Fig. 1(a) is first smoothed with a bilateral filter, as shown in Fig. 1(b). A mean-value-based threshold is then used to obtain the region of interest (ROI), as shown in Fig. 1(c). Based on the denoised result, the proposed HAgLoG is carried out in the following three phases: (1) aggregate response construction, (2) Hessian analysis, and (3) post-pruning with VBGMM and LLIF.

2.1 Aggregate Response Construction

A blob is a region in an image that is darker (or brighter) than its surroundings and has consistent intensity inside. Because NRD regions in SD-OCT images are typically darker than their surroundings, in this paper we only consider blobs with brighter surroundings. We modify the general Gaussian function by setting the kernel angle to 0. Based on the general Gaussian function in Ref. [8], let \(G(x,y,\sigma _x,\sigma _y)\) be the 2-D modified general Gaussian function, defined as:

\[G(x,y,\sigma _x,\sigma _y)=A\exp \left( -\left( \frac{x^2}{2\sigma _x^2}+\frac{y^2}{2\sigma _y^2}\right) \right) \]

where \(\textit{A}\) is a normalization factor, and \(\sigma _x\) and \(\sigma _y\) are the scales along the x-axis and y-axis, respectively. The coordinates of the kernel center are set to zero without loss of generality. Based on Ref. [8], for the 2-D image f, the modified gLoG-filtered image (as shown in Fig. 1(d)) \(\bigtriangledown ^2I(x,y,\sigma _x,\sigma _y)\) can be represented as

\[\bigtriangledown ^2I(x,y,\sigma _x,\sigma _y)=\bigtriangledown ^2G(x,y,\sigma _x,\sigma _y)*f(x,y)\]

where \(\bigtriangledown ^2\) denotes the Laplacian operator, \(*\) is the convolution operation. To further improve the estimation accuracy, the convolution response is log-scale normalized [8]:

Finally, as shown in Fig. 1(e), the aggregated response map L is obtained by summing up all intermediate response maps:
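The aggregation step can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the scale set `sigmas`, the exponent `alpha`, the particular form of the log-scale weight, the kernel truncation at three standard deviations, and the sign convention of the kernel are all choices made for the example.

```python
import numpy as np

def glog_kernel(sigma_x, sigma_y):
    """Laplacian of an axis-aligned (angle 0) Gaussian with scales sigma_x, sigma_y."""
    half = int(np.ceil(3 * max(sigma_x, sigma_y)))  # assumed truncation radius
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    g = np.exp(-(x**2 / (2 * sigma_x**2) + y**2 / (2 * sigma_y**2)))
    g /= g.sum()  # discrete stand-in for the normalization factor A
    lap = g * (x**2 / sigma_x**4 - 1 / sigma_x**2
               + y**2 / sigma_y**4 - 1 / sigma_y**2)
    return lap - lap.mean()  # force zero sum so constant regions respond with 0

def convolve_same(image, kernel):
    """FFT-based 2-D convolution with reflect padding; output keeps the input shape."""
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="reflect")
    shape = (padded.shape[0] + kh - 1, padded.shape[1] + kw - 1)
    spectrum = np.fft.rfft2(padded, shape) * np.fft.rfft2(kernel, shape)
    full = np.fft.irfft2(spectrum, shape)
    return full[kh - 1:kh - 1 + image.shape[0], kw - 1:kw - 1 + image.shape[1]]

def aggregate_response(image, sigmas, alpha=1.0):
    """Sum the weighted responses of every (sigma_x, sigma_y) filter pair."""
    image = np.asarray(image, dtype=float)
    total = np.zeros_like(image)
    for sx in sigmas:
        for sy in sigmas:
            # Assumed log-scale weight; the exact normalization follows Ref. [8].
            weight = ((1 + np.log(sx)) * (1 + np.log(sy))) ** alpha
            total += weight * convolve_same(image, glog_kernel(sx, sy))
    return total
```

Because every filter response is accumulated into a single map, no search for a per-pixel optimal scale is needed; the cost grows only with the number of scale pairs.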

2.2 Hessian Analysis

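For a pixel (x, y) in the aggregated response L, the Hessian matrix is

\[H(L(x,y))=\begin{bmatrix} \frac{\partial ^2L(x,y)}{\partial x^2} &amp; \frac{\partial ^2L(x,y)}{\partial x\partial y}\\ \frac{\partial ^2L(x,y)}{\partial x\partial y} &amp; \frac{\partial ^2L(x,y)}{\partial y^2} \end{bmatrix}\]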

Therefore, we can identify the dark blobs using the following proposition.

Proposition. In an aggregated response 2D image, every pixel of a dark blob has a positive definite Hessian matrix.

Proof. Ref. [13] provides the detailed relationships between the eigenvalues of the Hessian matrix and geometric shape. Specifically, if a pixel belongs to a dark blob-like structure, all the eigenvalues of the corresponding Hessian matrix are positive, that is, the Hessian matrix of the pixel is positive definite.

However, the above proposition gives a necessary but not sufficient condition for identifying dark blobs. To further reduce false detections, similar to Ref. [9], we give the following definition.

Definition. A blob candidate in the aggregated response image is an 8-connected component of the set \(U=\{(x,y)\mid (x,y)\in L,\ T(x,y)=1\}\), where T(x, y) is the binary indicator: if the pixel has a positive definite Hessian matrix, then \(T(x,y)=1\); otherwise, \(T(x,y)=0\).

Instead of determining positive definiteness from the eigenvalues of \(H(L(x,y))\), we utilize Sylvester's criterion, which is a necessary and sufficient criterion for determining whether a Hermitian matrix is positive definite. For our Hessian matrix, the leading principal minors are

\[D_1=\frac{\partial ^2L(x,y)}{\partial x^2}\quad \text{and}\quad D_2=\frac{\partial ^2L(x,y)}{\partial x^2}\frac{\partial ^2L(x,y)}{\partial y^2}-\left( \frac{\partial ^2L(x,y)}{\partial x\partial y}\right) ^2\]

Therefore, the Hessian matrix is positive definite if and only if \(D_1>0\) and \(D_2>0\). As a result, based on the Proposition and the Definition, we can obtain the dark blob candidates from the two leading principal minors of the Hessian matrix, as shown in Fig. 1(f).
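The positive-definiteness test can be sketched with finite differences in NumPy. The function name `sylvester_mask` and the use of `np.gradient` to approximate the second derivatives are assumptions made for illustration; the source does not state how the derivatives are discretized.

```python
import numpy as np

def sylvester_mask(L):
    """Binary indicator T: True where the Hessian of L is positive definite."""
    L = np.asarray(L, dtype=float)
    Ly, Lx = np.gradient(L)        # first derivatives along rows (y) and columns (x)
    Lxy, Lxx = np.gradient(Lx)     # d(Lx)/dy and d(Lx)/dx
    Lyy, _ = np.gradient(Ly)       # d(Ly)/dy
    D1 = Lxx                       # first leading principal minor
    D2 = Lxx * Lyy - Lxy**2        # second leading principal minor (determinant)
    return (D1 > 0) & (D2 > 0)
```

Checking two minors per pixel avoids an eigenvalue decomposition. The blob candidates of the Definition would then be the 8-connected components of the returned mask, which could be labelled with, for example, `scipy.ndimage.label` and a 3×3 structuring element of ones.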

Based on the eigenvalues \(\lambda _1\) and \(\lambda _2\), Refs. [9, 13] defined two geometric features for blob detection: the likelihood of blobness \(R_B\) and the second-order structureness (flatness) \(S_B\) as:

where \(0<R_B\le 1\), with \(R_B=1\) when \(\lambda _1=\lambda _2\), and \(0<S_B\le 1\). The higher \(S_B\) is, the more salient the blob is against the local background [10]. The two features are shown in Fig. 1(g) and (h), respectively.

2.3 Post-pruning

The input feature space is constructed by stacking the blob features \(R_B\) and \(S_B\) with the average local intensity \(A_B\). It is fed into VBGMM [11] to remove false positive detections and to cluster the blob candidates into blob regions and non-blob regions, as shown in Fig. 1(j). The detected blob regions are then voted into superpixels (as shown in Fig. 1(k)) obtained by simple linear iterative clustering (SLIC) [14] to get the initial subretinal fluid regions shown in Fig. 1(l). Generally, subretinal fluid can be observed across several consecutive B-scans. Therefore, the segmentation results of three adjacent slices are used to ensure the continuity of the subretinal fluid and to remove false detections. Finally, an active contour model driven by LLIF [12] with a narrowband implementation (Fig. 1(m)) is utilized to obtain the integrated segmentations shown in Fig. 1(n).
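The superpixel voting step can be illustrated as follows. The source does not specify the voting rule, so the majority threshold `min_fraction`, the function name, and the assumption that superpixel labels are consecutive non-negative integers are all hypothetical choices for this sketch.

```python
import numpy as np

def vote_into_superpixels(blob_mask, superpixels, min_fraction=0.5):
    """Mark a whole superpixel as fluid when enough of its pixels are blob pixels."""
    blob_mask = np.asarray(blob_mask, dtype=bool)
    superpixels = np.asarray(superpixels, dtype=int)
    labels = superpixels.ravel()
    n = labels.max() + 1
    area = np.bincount(labels, minlength=n)                     # pixels per superpixel
    hits = np.bincount(labels, weights=blob_mask.ravel().astype(float),
                       minlength=n)                             # blob pixels per superpixel
    fraction = hits / np.maximum(area, 1)
    return (fraction >= min_fraction)[superpixels]              # broadcast back to image
```

Voting at the superpixel level snaps the blob regions to local image boundaries, which gives the subsequent active contour a more regular initialization than the raw blob mask.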

3 Results

In this paper, 23 longitudinal SD-OCT cube scans with only NRD from 12 eyes of 12 patients acquired with a Cirrus OCT device were used to evaluate the proposed algorithm. All the scans covered a \(6\times 6\times 2\,\mathrm{mm}^3\) area centered on the fovea with volume dimension \(512\times 128\times 1024\). This study was approved by the Institutional Review Board (IRB) of the First Affiliated Hospital of Nanjing Medical University with informed consent. Two independent experts manually drew the outlines of NRD regions based on B-scan images, which were used to generate the segmentation ground truths.

Fig. 2. Comparison of segmentation results overlaid on original B-scan images for five example cases selected from five patients. For each row, the images shown from left to right are the segmentation results obtained by LPHC, SS-KNN, RF, FLSCV, CMF, EFD and the proposed algorithm. The last column shows the manual segmentation results of Expert 1.

Fig. 3. Comparison of segmentation results overlaid on three 3D example cases selected from three patients. For each row, the images shown from left to right are the segmentation surfaces obtained by RF, FLSCV, CMF, EFD and the proposed algorithm. The last column shows the manual segmentation surfaces of Expert 2.

Three criteria were utilized to evaluate performance: true positive volume fraction (TPVF), positive predictive value (PPV) [3] and Dice similarity coefficient (DSC). Linear regression analysis and the Bland-Altman approach were applied for statistical correlation and reproducibility analyses.
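For reference, the three overlap criteria can be computed from a pair of binary masks as sketched below. The formulas are the standard overlap definitions (TPVF as the fraction of ground-truth voxels recovered, PPV as the fraction of segmented voxels that are correct); the function name and the dictionary return format are assumptions for the example.

```python
import numpy as np

def overlap_metrics(segmentation, ground_truth):
    """TPVF, PPV and DSC between two binary masks of equal shape."""
    seg = np.asarray(segmentation, dtype=bool)
    gt = np.asarray(ground_truth, dtype=bool)
    tp = np.logical_and(seg, gt).sum()           # voxels labelled fluid in both masks
    return {
        "TPVF": tp / gt.sum(),                   # |S ∩ G| / |G|
        "PPV": tp / seg.sum(),                   # |S ∩ G| / |S|
        "DSC": 2 * tp / (seg.sum() + gt.sum()),  # 2|S ∩ G| / (|S| + |G|)
    }
```

The sketch assumes both masks are non-empty; a scan with no fluid in either mask would need separate handling to avoid division by zero.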

Six state-of-the-art methods were compared, including a semi-supervised segmentation algorithm using label propagation and higher-order constraint (LPHC) [5], a stratified sampling k-nearest neighbor classifier-based algorithm (SS-KNN) [15], a random forest classifier-based method (RF) [6], a fuzzy level set with cross-sectional voting (FLSCV) [2], a continuous max flow optimization-based method (CMF) [16] and an Enface fundus-driven method (EFD) [3].

In Fig. 2, five example B-scans selected from five patients are used to show the performance of the proposed model. LPHC can hardly obtain satisfactory segmentations without good initializations. SS-KNN may produce obvious false detections and cannot guarantee the smoothness of the contour. FLSCV suffers from under-segmentation, while RF suffers from false positive segmentations. Both CMF and EFD are sensitive to low contrast. By contrast, the proposed algorithm effectively avoids under-segmentation and is more robust to low contrast, producing smooth and accurate segmentation results that are highly consistent with the ground truth.

To further highlight the performance of the proposed algorithm, Fig. 3 shows the 3-D segmentation surfaces of three example subjects selected from three different patients. Both RF and FLSCV suffer from under-segmentation and yield smaller segmentation volumes. Moreover, FLSCV may produce more misclassifications. Because of their sensitivity to low contrast, the surfaces obtained by CMF and EFD contain obvious protrusions. By contrast, the proposed algorithm outperforms the other approaches in these examples and generates the segmentation results most similar to the ground truths.

Table 1 summarizes the average quantitative results between the segmentations and the manual gold standards. Overall, our model produces a higher segmentation accuracy than the other comparison methods. It should be noted that both CMF and EFD rely on layer segmentation results. In comparison, without utilizing any layer information, our algorithm still obtains better segmentations.

Finally, as shown in Fig. 4, a statistical correlation analysis is carried out using linear regression analysis and the Bland-Altman approach to compare the segmentation results of the proposed method with the manual segmentations from the two experts. From Fig. 4(a) and (c), we can see that the results of the proposed algorithm are highly correlated with those of both experts (\(r^2=0.99\) in both cases). The Bland-Altman plots in Fig. 4(b) and (d) also indicate stable agreement between the automated and manual segmentations.
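The quantities behind such an analysis can be sketched as follows. The choice of per-scan fluid volumes as the compared measurements, the function name, and the conventional 1.96 standard deviation limits of agreement are assumptions; the source does not report which settings were used.

```python
import numpy as np

def agreement_stats(auto_volumes, manual_volumes):
    """Squared Pearson correlation plus Bland-Altman bias and limits of agreement."""
    a = np.asarray(auto_volumes, dtype=float)
    m = np.asarray(manual_volumes, dtype=float)
    r = np.corrcoef(a, m)[0, 1]              # Pearson correlation coefficient
    diff = a - m                             # automated minus manual, per scan
    bias = diff.mean()                       # mean difference (Bland-Altman bias)
    half_width = 1.96 * diff.std(ddof=1)     # assumed 95% limits of agreement
    return {"r2": r**2, "bias": bias,
            "limits": (bias - half_width, bias + half_width)}
```

A high \(r^2\) alone does not rule out a systematic offset between the automated and manual measurements, which is why the bias and limits of agreement are examined alongside it.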

4 Conclusion

In this paper, we propose an automatic and accurate NRD segmentation method for SD-OCT images. The proposed model avoids the limitations imposed by retinal layer segmentation, is unsupervised and requires no training data, and is fully automatic with no manual interaction. Consequently, without retinal layer segmentation, the proposed algorithm can produce accurate segmentation results that are highly consistent with the ground truths. Our method may provide reliable NRD segmentations for SD-OCT images and be useful for clinical diagnosis. Our future work will mainly focus on extending the method to 3D segmentation.

References

1. Teke, M.Y., et al.: Comparison of autofluorescence and optical coherence tomography findings in acute and chronic central serous chorioretinopathy. Int. J. Ophthalmol. 7(2), 350 (2014)

2. Wang, J., et al.: Automated volumetric segmentation of retinal fluid on optical coherence tomography. BOE 7(4), 1577–1589 (2016)

3. Wu, M., et al.: Automatic subretinal fluid segmentation of retinal SD-OCT images with neurosensory retinal detachment guided by enface fundus imaging. IEEE-TBE 65(1), 87–95 (2018)

4. Zheng, Y., et al.: Computerized assessment of intraretinal and subretinal fluid regions in spectral-domain optical coherence tomography images of the retina. Am. J. Ophthalmol. 155(2), 277–286 (2013)

5. Wang, T., et al.: Label propagation and higher-order constraint-based segmentation of fluid-associated regions in retinal SD-OCT images. Info. Sci. 358, 92–111 (2016)

6. Lang, A., et al.: Automatic segmentation of microcystic macular edema in OCT. BOE 6(1), 155–169 (2015)

7. Xu, Y., et al.: Dual-stage deep learning framework for pigment epithelium detachment segmentation in polypoidal choroidal vasculopathy. BOE 8(9), 4061–4076 (2017)

8. Kong, H., et al.: A generalized Laplacian of Gaussian filter for blob detection and its applications. IEEE-TCy 43(6), 1719–1733 (2013)

9. Zhang, M., et al.: Small blob identification in medical images using regional features from optimum scale. IEEE-TBE 62(4), 1051–1062 (2015)

10. Zhang, M., et al.: Efficient small blob detection based on local convexity, intensity and shape information. IEEE-TMI 35(4), 1127–1137 (2016)

11. Bishop, C.M.: Pattern Recognition and Machine Learning. Springer, New York (2006)

12. Ji, Z., et al.: Active contours driven by local likelihood image fitting energy for image segmentation. Info. Sci. 301, 285–304 (2015)

13. Frangi, A.F., Niessen, W.J., Vincken, K.L., Viergever, M.A.: Multiscale vessel enhancement filtering. In: Wells, W.M., Colchester, A., Delp, S. (eds.) MICCAI 1998. LNCS, vol. 1496, pp. 130–137. Springer, Heidelberg (1998). https://doi.org/10.1007/BFb0056195

14. Achanta, R., et al.: SLIC superpixels compared to state-of-the-art superpixel methods. IEEE-TPAMI 34(11), 2274–2282 (2012)

15. Xu, X., et al.: Stratified sampling voxel classification for segmentation of intraretinal and subretinal fluid in longitudinal clinical OCT data. IEEE-TMI 34(7), 1616–1623 (2015)

16. Wu, M., et al.: Three-dimensional continuous max flow optimization-based serous retinal detachment segmentation in SD-OCT for central serous chorioretinopathy. BOE 8(9), 4257–4274 (2017)

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Ji, Z. et al. (2018). Beyond Retinal Layers: A Large Blob Detection for Subretinal Fluid Segmentation in SD-OCT Images. In: Frangi, A., Schnabel, J., Davatzikos, C., Alberola-López, C., Fichtinger, G. (eds) Medical Image Computing and Computer Assisted Intervention – MICCAI 2018. MICCAI 2018. Lecture Notes in Computer Science, vol 11071. Springer, Cham. https://doi.org/10.1007/978-3-030-00934-2_42

DOI: https://doi.org/10.1007/978-3-030-00934-2_42

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-00933-5

Online ISBN: 978-3-030-00934-2

eBook Packages: Computer Science (R0)