Abstract

We introduce a novel numerical method for solving two-sided space fractional partial differential equations in the two-dimensional case. The approximation of the space fractional Riemann–Liouville derivative is based on an approximation of the Hadamard finite-part integral with convergence order \(O(h^{3- \alpha })\), where h is the space step size and \(\alpha \in (1, 2)\) is the order of the Riemann–Liouville fractional derivative. Based on this scheme, we introduce a shifted finite difference method for solving space fractional partial differential equations. We obtain error estimates with convergence order \(O(\tau +h^{3-\alpha }+ h^{\beta })\), where \(\tau\) is the time step size and \(\beta >0\) is a parameter which measures the smoothness of the fractional derivatives of the solution of the equation. Numerical methods constructed using the standard shifted Grünwald–Letnikov formula or Lubich’s higher order methods require the solution of the equation to satisfy the homogeneous Dirichlet boundary condition in order to attain first-order convergence; the numerical method constructed using the Hadamard finite-part integral approach imposes no such requirement. Numerical results show that, for space fractional partial differential equations with non-homogeneous Dirichlet boundary conditions, the experimentally determined convergence order of the Hadamard finite-part integral approach is indeed higher than that of the numerical methods constructed with the standard shifted Grünwald–Letnikov formula or Lubich’s higher order approximation schemes.

1 Introduction

Consider the following space fractional partial differential equation, with \(1< \alpha <2\), \(0 \le x, y \le 1\), \(0< t < T\):

where f is a source/sink term and \(u_{0}, \varphi _{1}, \varphi _{2}, \psi _{1}, \psi _{2}\) are well-defined initial and boundary values, respectively. Here the Riemann–Liouville left-sided fractional derivative \(\, _{0}^{R} \text{D}^{\alpha }_{x} f(x)\) is defined by

\[ \, _{0}^{R} \text{D}^{\alpha }_{x} f(x) = \frac{1}{\Gamma (2-\alpha )} \frac{\text{d}^{2}}{\text{d}x^{2}} \int _{0}^{x} (x-\xi )^{1-\alpha } f(\xi ) \, \text{d}\xi . \]

Similarly, we may define the Riemann–Liouville right-sided fractional derivative as follows:

\[ \, _{x}^{R} \text{D}^{\alpha }_{1} f(x) = \frac{1}{\Gamma (2-\alpha )} \frac{\text{d}^{2}}{\text{d}x^{2}} \int _{x}^{1} (\xi -x)^{1-\alpha } f(\xi ) \, \text{d}\xi . \]

There are several ways to approximate the Riemann–Liouville fractional derivative in the literature. Meerschaert and Tadjeran [30] used the Grünwald–Letnikov formula to obtain a first-order scheme, O(h), to approximate the Riemann–Liouville fractional derivative. Lubich [27] introduced higher order schemes with order \(O(h^{p}), p=1, 2, \cdots , 6,\) to approximate the Riemann–Liouville fractional derivative. Diethelm [9, 10] obtained a scheme to approximate the Riemann–Liouville fractional derivative with the convergence order \(O(h^{2-\alpha }), 0< \alpha <2\), using the Hadamard finite-part integral approach; for other higher order schemes to approximate the Riemann–Liouville fractional derivative, see Li and Zeng [22].
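To make the first-order Grünwald–Letnikov approach concrete, the following is a minimal sketch of a shifted Grünwald–Letnikov approximation of the left-sided derivative, tested on \(f(x) = x^{3}\), whose exact derivative follows from the standard power rule. The function names, the test function and the choice \(\alpha = 1.5\) are assumptions made for illustration; this is not the scheme analysed in this paper.

```python
import math

def gl_weights(alpha, n):
    """Grünwald–Letnikov weights g_k = (-1)^k * binom(alpha, k), k = 0..n."""
    g = [1.0]
    for k in range(1, n + 1):
        g.append(g[-1] * (k - 1 - alpha) / k)
    return g

def shifted_gl_derivative(f, x, alpha, n):
    """Shifted Grünwald–Letnikov approximation of the left-sided derivative at x.

    Uses the step h = x / n and a shift of one grid point to the right,
    so the sum runs over the points x + h, x, x - h, ..., 0.
    """
    h = x / n
    g = gl_weights(alpha, n + 1)
    total = sum(g[k] * f(x - (k - 1) * h) for k in range(n + 2))
    return total / h ** alpha

def exact_power_derivative(x, alpha, mu):
    """Riemann–Liouville derivative of x**mu by the power rule."""
    return math.gamma(mu + 1) / math.gamma(mu + 1 - alpha) * x ** (mu - alpha)

alpha = 1.5                      # illustrative order, 1 < alpha < 2
f = lambda x: x ** 3             # illustrative test function with f(0) = 0
errors = {}
for n in (16, 32, 64):
    approx = shifted_gl_derivative(f, 1.0, alpha, n)
    errors[n] = abs(approx - exact_power_derivative(1.0, alpha, 3.0))
    print(n, errors[n])
```

Halving the step size should roughly halve the printed error, which is the first-order behaviour described above.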

Based on the different schemes for approximating the Riemann–Liouville fractional derivatives, many numerical methods have been introduced for solving the space fractional partial differential equations (1)–(4): finite difference methods [4,5,6, 15,16,17,18, 21, 24, 25, 28, 29, 31,32,33,34,35,36,37,38,39,40], finite element methods [1,2,3, 7, 8, 11,12,13,14, 23, 26] and spectral methods [19, 20]. Meerschaert and Tadjeran [30] introduced a shifted finite difference method based on the Grünwald–Letnikov formula for solving the two-sided space fractional partial differential equation in the one-dimensional case and proved that the convergence order of the numerical method is O(h). Meerschaert and Tadjeran [29] also considered the finite difference method for solving the fractional advection–dispersion equation in the one-dimensional case using the Grünwald–Letnikov formula. Second-order shifted finite difference methods for solving fractional partial differential equations based on the Grünwald–Letnikov formula are discussed in both the one- and two-dimensional cases in Tadjeran et al. [35] and Tadjeran and Meerschaert [34]. We now turn to Lubich’s higher order schemes. When Lubich’s higher order schemes are applied without shifts to space fractional partial differential equations, the resulting finite difference methods are unstable, as is the case for the unshifted Grünwald–Letnikov formula. With shifted Lubich higher order methods, the corresponding numerical methods for solving space fractional partial differential equations have been shown to have only first-order accuracy; see [4, 5]. In [22, Section 2.2], Li and Zeng introduced other higher order schemes, for example, the L2 and L2C schemes, to approximate the Riemann–Liouville fractional derivative. However, to the best of our knowledge, no works in the literature use the L2 and L2C methods for solving space fractional partial differential equations.
The numerical methods discussed in [22, Chapter 4] for solving space fractional partial differential equations are also based on the Grünwald–Letnikov formula and Lubich’s higher order schemes; see other recent works for solving space fractional partial differential equations in [1,2,3, 5, 21, 24, 25, 39, 40]. All the numerical methods constructed using the Grünwald–Letnikov formula or Lubich’s higher order methods for solving space fractional partial differential equations require that the solution of the equation satisfy the homogeneous Dirichlet boundary condition. Otherwise, the experimentally determined convergence orders of such numerical methods are very low, e.g., see Table 4 in Example 2 in Sect. 3. Therefore, it is of interest to design numerical methods that have higher order convergence for solving the space fractional partial differential equation under both homogeneous and non-homogeneous Dirichlet boundary conditions. The purpose of this paper is to introduce such finite difference methods for solving the space fractional partial differential equation.
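A short worked formula may help indicate why non-homogeneous boundary values are delicate. It uses only the standard power rule for the Riemann–Liouville derivative and is offered as background under that assumption, not as part of the analysis in this paper; the constant c is introduced here for illustration.

```latex
% Riemann–Liouville derivative of a constant c, for 1 < \alpha < 2 and x > 0:
\[
  {}_{0}^{R}\mathrm{D}^{\alpha}_{x}\, c
  = \frac{c}{\Gamma(1-\alpha)}\, x^{-\alpha},
\]
% which is unbounded as x \to 0^{+} unless c = 0.
```

A solution that does not vanish at \(x = 0\) therefore has a fractional derivative that is singular at the boundary, which is one plausible reason why schemes designed for homogeneous boundary values may lose accuracy there.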

Recently, Ford et al. [17] considered the finite difference method for solving the space fractional partial differential equation in the one-dimensional case where the Riemann–Liouville fractional derivative is approximated using the Hadamard finite-part integral; see also [15, 16, 37]. The convergence order \(O(\tau + h^{3- \alpha }+h^{\beta }), \alpha \in (1,2), \beta >0\) of the numerical method in [17] is proved in the maximum norm for both homogeneous and non-homogeneous Dirichlet boundary conditions. In this paper, we extend the method in Ford et al. [17] to solve space fractional partial differential equations in the two-dimensional case. The corresponding error estimates in this paper are proved in a completely different way from Ford et al. [17]. The error estimates with the convergence order \(O(\tau + h^{3- \alpha }+h^{\beta }), \alpha \in (1,2), \beta >0\) hold for both homogeneous and non-homogeneous Dirichlet boundary conditions.

The main contributions of this paper are as follows.

(i) A new finite difference method for solving space fractional partial differential equations in the two-dimensional case is introduced and the convergence order is \(O(\tau + h^{\beta } + h^{3-\alpha }), \alpha \in (1,2), \beta >0\), where the Riemann–Liouville fractional derivative is approximated using the Hadamard finite-part integral approach.

(ii) The convergence order of the finite difference method introduced in this paper is valid for both homogeneous and non-homogeneous Dirichlet boundary conditions.

The paper is organized as follows. In Sect. 2, we introduce the shifted finite difference methods for solving (1)–(4) and prove the error estimates. In Sect. 3, we consider four numerical examples with both homogeneous and non-homogeneous Dirichlet boundary conditions and with different smoothness of the solution of the equation, and show that the numerical results are consistent with the theoretical analysis.

2 The Finite Difference Method

In this section, we extend the method in Ford et al. [17] for solving the space fractional partial differential equation in the one-dimensional case to solve the space fractional partial differential equations (1)–(4) in the two-dimensional case. For notational simplicity, we assume that the boundary values are equal to 0, i.e., \(\varphi _{1} = \varphi _{2} = \psi _{1} =\psi _{2}=0\).

We have

Lemma 1

[17, Lemma 2.1] Let \(1< \alpha <2\) and let \(M=2 m_{0}\) where \(m_{0}\) is a fixed positive integer. Let \(0 = x_{0}< x_{1}< x_{2}< \cdots< x_{2j}< x_{2j+1}<\cdots < x_{M} =1\) be a partition of [0, 1]. Assume that \(g \in C^{3}[0, 1]\) is a sufficiently smooth function. Then, with \(j=1,2, \cdots , m_{0}\),

and, with \(j=1,2, \cdots , m_{0}-1\),

where

Further, we have, with \(l=0,1,2,\cdots , 2j\),

and

The remainder term \(R_{l}(g)\) satisfies, for every \(g \in C^{3} (0,1)\),

Similarly, we may consider the approximation of the right-sided Riemann–Liouville fractional derivative \(\, _x^R \text{D}_{1}^{\alpha } g(x)\) at \(x= x_{l}, l=0, 1, 2, \cdots , 2m_{0}-2\). Using the same argument as for the approximation of \(\, _0^R \text{D}_{x}^{\alpha } f(x)\) at \(x= x_{l}\), we can show that, with \(j=0, 1, 2, \cdots , m_{0}-1,\)

and, with \(j=0, 1, 2, \cdots , m_{0}-2,\)

Let \(M=2 m_{0}\). Let \(0 = x_{0}< x_{1}< x_{2}< \cdots< x_{j}< \cdots < x_{M}=1\) and \(0 = y_{0}< y_{1}< y_{2}< \cdots< y_{j}< \cdots < y_{M}=1\) be the partitions of [0, 1] and h the space step size. Let \(0 = t_{0}< t_{1}< t_{2}< \cdots< t_{n}< \cdots < t_{N}=T\) be the time partition of [0, T] and \(\tau\) the time step size. At the point \((t_{n+1}, x_{l}, y_{m})\), where l, m will be specified later, we have

where \(f_{l, m}^{n+1} = f(t_{n+1}, x_{l}, y_{m})\).

To obtain a stable finite difference method, we will consider the following shifted equation:

where the errors produced by the shifted terms are denoted by

We assume that \(\, _{0}^{R} \text{D}^{\alpha }_{x} u(t, x, y), \, _{0}^{R} \text{D}^{\alpha }_{y} u(t, x, y)\) satisfy the following Hölder conditions.

Assumption 1

For any \(x^{*}, x^{**}, y^{*}, y^{**} \in \mathbb {R}\), there exist constants \(C>0\) and \(\beta >0\) such that

We also assume that \(\, _{x}^{R} \text{D}^{\alpha }_{1} u(t, x, y), \, _{y}^{R} \text{D}^{\alpha }_{1} u(t, x, y)\) satisfy the following Hölder conditions.

Assumption 2

For any \(x^{*}, x^{**}, y^{*}, y^{**} \in \mathbb {R}\), there exist constants \(C>0\) and \(\beta >0\) such that

Remark 1

To make Assumptions 1 and 2 hold, we need to assume that the solution u satisfies some regularity conditions. In some circumstances, such conditions are easy to check, for example, when \(\, _{0}^{R} \text{D}^{\alpha }_{x} v(x) \in C^{1}[0, 1]\), we have, with \(\beta =1\),

Similarly, we can consider \(\, _{x}^{R} \text{D}^{\alpha }_{1} v(x)\).

We now turn to the discretization scheme of (10). Discretizing \(u_{t}\) at \(t=t_{n+1}\) using the backward Euler method and discretizing \(\, _{0}^{R} \text{D}^{\alpha }_{x}, \, _{x}^{R} \text{D}^{\alpha }_{1}, \, _{0}^{R} \text{D}^{\alpha }_{y}, \, _{y}^{R} \text{D}^{\alpha }_{1}\) at \(x= x_{l+1}, x= x_{l-1}, y= y_{m+1}, y= y_{m-1}\), respectively, using Diethelm’s finite difference method introduced in Lemma 1, we obtain

where \(S^{n+1}_{l, m}\) can be defined as \(S_{2j}^{n+1}\) in [17, (27)] and the weights \(w_{k, l+1}^{(1)}, w_{k, m+1}^{(2)}, w_{k, M-(l-1)}^{(3)}, w_{k, M-(m-1)}^{(4)}\) in (12) are defined by (7) and (8). Further, we denote \(l_{i}^{(1)}, l_{i}^{(2)}, m_{j}^{(1)},m_{j}^{(2)}\) by the following, with \(i = 1, 2, \cdots , m_{0}-1, \; j=1, 2, \cdots , m_{0}-1\),

and

Then we have

Lemma 2

[17, Lemma 2.3] Let \(1< \alpha < 2\). The coefficients \(w^{(s)}_{k, 2p}, s=1, 2, 3, 4, \; p=1, 2, \cdots , m_{0}\) defined in (12) satisfy

Let \(U_{l, m}^{n} \approx u(t_{n}, x_{l}, y_{m})\) denote the approximate solution of \(u(t_{n}, x_{l}, y_{m})\). We define the following finite difference method for solving (1)–(4):

where \(Q_{l, m}^{n+1}\) is some approximation of \(S_{l, m}^{n+1}\), defined as in [17, (30)] which satisfies

Now we come to our main theorem in this work.

Theorem 1

Assume that \(u(t_{n}, x_{l}, y_{m})\) and \(U_{l, m}^{n}\) are the solutions of (12) and (16), respectively. Assume that Assumptions 1 and 2 hold. Then there exists a norm \(\Vert \cdot \Vert\) such that

Proof

Let \(e_{l,m}^{n} = u(t_{n}, x_{l}, y_{m}) - U_{l, m}^{n}\). Subtracting (16) from (12), we get the following error equation, with \(\lambda = \tau /h^{\alpha }\):

where

Rearranging (17), we get

that is,

More precisely, we have, for \(l=1, m=1,2, \cdots , M-1\),

For \(l=2, m=1,2, \cdots , M-1\), we have

In general, for \(l=M-1, m=1,2, \cdots , M-1\), we have

Thus, we may write (18) in the following matrix form:

where

and

Here

where

and, with \(E= I_{(M-1) \times (M-1)}\),

We shall show that there exists a norm \(\Vert \cdot \Vert\) such that

\[ \Vert A^{-1} \Vert \le 1. \qquad (24) \]

Assuming for the moment that (24) holds, we have, by (23), noting that \(n \tau = t_{n} \le T\),

where we use the fact \(e^{0} =0\).

It remains to show (24). It suffices to show all the eigenvalues of A are greater than or equal to 1, which implies that all the eigenvalues of \(A^{-1}\) are less than or equal to 1. If all the eigenvalues of \(A^{-1}\) are less than or equal to 1, then there exists some norm \(\Vert \cdot \Vert\) such that \(\Vert A^{-1} \Vert \le 1\) [33]. To show all the eigenvalues of A are greater than or equal to 1, we may use the well-known Gershgorin lemma.

Let

We have

which imply that

By Lemma 2, we get

which implies that all the eigenvalues \(\mu\) of A satisfy, by Gershgorin lemma,

that is, all the eigenvalues \(\mu\) of A are greater than 1 which implies (24).

Together these estimates complete the proof of Theorem 1.
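The role of diagonal dominance in the proof can be illustrated on a small example. The sketch below builds a hypothetical \(6 \times 6\) matrix \(A = I + \lambda B\) whose rows are diagonally dominant, evaluates the Gershgorin lower bound \(\min _{i} (a_{ii} - \sum _{j \ne i} |a_{ij}|)\), and cross-checks it against the maximum norm of \(A^{-1}\) computed by Gauss–Jordan elimination. The matrix B, the value of \(\lambda\) and all function names are assumptions chosen for illustration, not the matrix A of the scheme. The norm check relies on the standard bound \(\Vert A^{-1} \Vert _{\infty } \le 1/\delta\) for strictly row diagonally dominant matrices with dominance margin \(\delta\), which is a different route from the eigenvalue argument used above.

```python
def gershgorin_lower_bound(A):
    """min_i (a_ii - sum_{j != i} |a_ij|): left end of the leftmost Gershgorin disc."""
    n = len(A)
    return min(A[i][i] - sum(abs(A[i][j]) for j in range(n) if j != i)
               for i in range(n))

def inverse(A):
    """Gauss-Jordan inverse with partial pivoting (adequate for small dense matrices)."""
    n = len(A)
    M = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        piv = M[c][c]
        M[c] = [v / piv for v in M[c]]
        for r in range(n):
            if r != c:
                factor = M[r][c]
                M[r] = [vr - factor * vc for vr, vc in zip(M[r], M[c])]
    return [row[n:] for row in M]

def inf_norm(A):
    """Maximum absolute row sum."""
    return max(sum(abs(v) for v in row) for row in A)

n, lam = 6, 0.5   # hypothetical size and hypothetical value of lambda = tau / h**alpha
# Hypothetical B: nonpositive off-diagonal entries, diagonal exceeding the row sum by 0.1.
B = [[-1.0 / (1 + abs(i - j)) ** 2 if i != j else 0.0 for j in range(n)]
     for i in range(n)]
for i in range(n):
    B[i][i] = sum(abs(v) for v in B[i]) + 0.1
A = [[(1.0 if i == j else 0.0) + lam * B[i][j] for j in range(n)] for i in range(n)]

bound = gershgorin_lower_bound(A)
norm_inv = inf_norm(inverse(A))
print("Gershgorin lower bound:", bound)
print("max norm of inverse:  ", norm_inv)
```

For this example the lower bound exceeds 1, so every eigenvalue of A has real part greater than 1, and the computed norm of the inverse stays below 1.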

3 Numerical Examples

We shall consider in this section four numerical examples to illustrate that the numerical results are consistent with our theoretical results.

Example 1

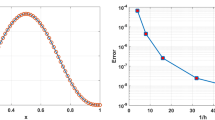

Consider, with \(1< \alpha <2\), \(0 \le x, y \le 2\) [6],

where

It is easy to check that \(u(t, x, y) =4{\text{e}}^{-t} x^2(2-x)^2y^2 (2-y)^2\) is the exact solution.

Note that the error estimate satisfies, by Theorem 1, with \(\gamma = \min (3- \alpha , \beta )\),

In the numerical method (16), we simply ignore the errors \(\sigma _{l, m}^{n+1}\) in (11) which are produced by the shifted terms. Consequently, if we use the numerical method (16) as stated to calculate the approximate solutions, the spatial error should be \(O(h^{\beta } + h^{3-\alpha })\). Since the exact solutions are given in our numerical examples, the errors \(\sigma _{l, m}^{n+1}\) in (11) produced by the shifted terms can be calculated exactly. Thus, the convergence order should be \(O( h^{3-\alpha })\) if we include \(\sigma _{l, m}^{n+1}\) in the numerical method (16). In general, we do not know the exact solutions of the equation. In such cases, we may approximate \(\sigma _{l, m}^{n+1}\) using the computed solutions \(U^{n}\) to improve the convergence orders. In all our numerical simulations in this section, the numerical method (16) includes \(\sigma _{l, m}^{n+1}\) defined by (11), which makes the experimentally determined order of convergence (EOC) independent of \(\beta >0\).

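We will observe the convergence orders with respect to the space step size. To see this, we choose a sufficiently small time step size \(\tau = 2^{-10}\) and the different space step sizes \(h_{l} = 2^{-l}, \, l=2, 3, 4, 5, 6\) such that the computational error is dominated by the spatial error \(O(h^{3-\alpha }), 1< \alpha <2\). Denote by \(\Vert e_{l}^{N} \Vert = \Vert U^{N} - u(t_{N}) \Vert\) the \(L^{2}\) norm of the error at \(t_{N}=1\) calculated with the step size \(h_{l}\). We then have

\[ \Vert e_{l}^{N} \Vert \approx C h_{l}^{\gamma }, \]

which implies that

\[ \frac{\Vert e_{l}^{N} \Vert }{\Vert e_{l+1}^{N} \Vert } \approx \left( \frac{h_{l}}{h_{l+1}} \right) ^{\gamma } = 2^{\gamma }. \]

Hence, the convergence order satisfies

\[ \gamma \approx \log _{2} \left( \frac{\Vert e_{l}^{N} \Vert }{\Vert e_{l+1}^{N} \Vert } \right) . \]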

The experimentally determined orders of convergence (EOC) for the numerical method (16) are provided in Table 1 with respect to the different \(\alpha\). We observe that the convergence order is indeed \(O(h^{3-\alpha })\) which is consistent with Theorem 1.
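The EOC computation can be sketched in a few lines: when the step size is halved from one level to the next, the observed order is the base-2 logarithm of the ratio of consecutive errors. The function name `eoc` and the synthetic error values below are assumptions made for illustration; the errors are generated from the model \(C h^{3-\alpha }\) and are not taken from the tables of this paper.

```python
import math

def eoc(errors):
    """Experimentally determined orders of convergence.

    Assumes errors[i] was computed with step size h_i and that
    h_{i+1} = h_i / 2, so the order is log2(errors[i] / errors[i + 1]).
    """
    return [math.log2(errors[i] / errors[i + 1]) for i in range(len(errors) - 1)]

alpha = 1.6                                   # illustrative order of the derivative
hs = [2.0 ** (-l) for l in range(2, 7)]       # h_l = 2^{-l}, l = 2, ..., 6
# Synthetic errors following C * h^(3 - alpha); made-up data, not measured results.
synthetic_errors = [0.3 * h ** (3 - alpha) for h in hs]
orders = eoc(synthetic_errors)
print(orders)
```

With measured errors in place of the synthetic ones, the entries of `orders` correspond to the EOC columns reported for each \(\alpha\).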

Next, we solve the equation in Example 1 using the finite difference method introduced in Meerschaert and Tadjeran [30], where the Riemann–Liouville fractional derivatives are approximated using the Grünwald–Letnikov formula, which requires that the solution of the equation satisfy the homogeneous Dirichlet boundary condition; see [36] for other shifted and weighted Grünwald difference operators that approximate the Riemann–Liouville fractional derivative. The convergence order of the finite difference method in [30] is O(h), and we indeed observe this in Table 2 for solving (33)–(35). From now on, we refer to the finite difference method in Meerschaert and Tadjeran [30] as “the shifted Grünwald–Letnikov method”.

Example 2

In this example, we will consider the following space fractional partial differential equation with non-homogeneous Dirichlet boundary conditions, with \(1< \alpha <2\), \(0 \le x, y \le 2\):

where

It is easy to see that \(u(t, x,y) =4\text{e}^{-t} x^2(2-x)^2 y^2 (2-y)^2 +5\) is the exact solution of the equation.

In Table 3, we show the convergence orders using the numerical method (16). We see that for some \(\alpha\), the convergence orders can reach \(O(h^{3- \alpha })\) and for some other \(\alpha\) the convergence orders are less than \(O(h^{3- \alpha })\). But in most cases, the convergence orders of the numerical method (16) are greater than 1 for solving (30)–(32) with the non-homogeneous Dirichlet boundary conditions.

In Table 4, we use “the shifted Grünwald–Letnikov method” introduced in Meerschaert and Tadjeran [30] for solving (30)–(32). We observe that the convergence orders are very low because of the non-homogeneous boundary conditions. From Tables 3 and 4, we observe that the numerical method (16) introduced in this paper has higher order convergence than “the shifted Grünwald–Letnikov method” introduced in Meerschaert and Tadjeran [30] for solving space fractional partial differential equations with non-homogeneous boundary conditions.

In the next example, we shall investigate the convergence orders of the numerical method (16) for solving space fractional partial differential equations where the solutions of the equations are not sufficiently smooth.

Example 3

Consider, with \(1< \alpha <2\), \(0 \le x, y \le 1\) [6],

where

Here the exact solution has the form \(u(t, x, y) =\text{e}^{-t} x^{\alpha _{1}}y^{\alpha _{1}}\). We will consider two different \(\alpha _{1}\): the nonsmooth solution case with \(\alpha _{1}= \alpha\) and the smooth solution case with \(\alpha _{1}=3\).

For the case \(\alpha _{1}= \alpha\), there exists some constant C such that

which implies that the following Lipschitz condition holds

for any \(\beta >0\).
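As a check on the case \(\alpha _{1} = \alpha\), the standard power rule for the Riemann–Liouville derivative can be applied directly. The exponent \(\mu\) is introduced here only for illustration, and the computation is a sketch under the assumption that the power rule applies to each factor separately.

```latex
% Power rule, valid for \mu > -1 and x > 0:
\[
  {}_{0}^{R}\mathrm{D}^{\alpha}_{x}\, x^{\mu}
  = \frac{\Gamma(\mu+1)}{\Gamma(\mu+1-\alpha)}\, x^{\mu-\alpha}.
\]
% Taking \mu = \alpha gives a constant:
\[
  {}_{0}^{R}\mathrm{D}^{\alpha}_{x}\, x^{\alpha} = \Gamma(\alpha+1),
  \qquad\text{so}\qquad
  {}_{0}^{R}\mathrm{D}^{\alpha}_{x}\bigl(\mathrm{e}^{-t} x^{\alpha} y^{\alpha}\bigr)
  = \Gamma(\alpha+1)\, \mathrm{e}^{-t} y^{\alpha}.
\]
```

The left-sided derivative in x is thus independent of x, which is consistent with a Hölder-type condition in x holding for every \(\beta >0\) even though the solution itself is not smooth at the origin.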

In Table 5, we obtain the experimentally determined orders of convergence (EOC) for the different \(\alpha = 1.2, 1.4, 1.6, 1.8\). Since the solution is not sufficiently smooth, the convergence orders are less than \(O(h^{3-\alpha })\) as we expected.

For the smooth solution case with \(\alpha _{1}=3\), in Table 6, we observe that the convergence orders are almost \(3- \alpha\) as we expected.

In our final example, we consider a two-sided space fractional partial differential equation.

Example 4

Consider, with \(1< \alpha <2\), \(0 \le x, y \le 2\) [30],

where \(u(t, x, y) =4 \text{e}^{-t} x^2 (2-x)^2y^2 (2-y)^2\) is the exact solution.

In Table 7, we observe that the convergence orders of the numerical method (16) for solving this equation are also \(O(h^{3- \alpha })\) as we expected.

4 Conclusions

In this paper, we construct a new and reliable finite difference method for solving the space fractional partial differential equations. The error estimates are proved and the convergence order of the numerical method depends on the smoothness of the solution of the equation. The convergence orders are proved for both homogeneous and non-homogeneous Dirichlet boundary conditions. Numerical examples show that the proposed numerical method in this paper has much higher convergence order than the shifted Grünwald–Letnikov method proposed in Meerschaert and Tadjeran [30] for solving space fractional partial differential equations with non-homogeneous boundary conditions.

References

Bu, W., Tang, Y., Yang, J.: Galerkin finite element method for two-dimensional Riesz space fractional diffusion equations. J. Comput. Phys. 276, 26–38 (2014)

Bu, W., Tang, Y., Wu, Y., Yang, J.: Crank–Nicolson ADI Galerkin finite element method for two-dimensional fractional FitzHugh–Nagumo monodomain model. Appl. Math. Comput. 257, 355–364 (2015)

Bu, W., Tang, Y., Wu, Y., Yang, J.: Finite difference/finite element method for two-dimensional space and time fractional Bloch–Torrey equations. J. Comput. Phys. 293, 264–279 (2015)

Chen, M., Deng, W.: Fourth order scheme for the space fractional diffusion equations. SIAM J. Numer. Anal. 52, 1418–1438 (2014)

Chen, M., Deng, W.: Fourth order difference approximations for space Riemann–Liouville derivatives based on weighted and shifted Lubich difference operators. Comm. Comput. Phys. 16, 516–540 (2014)

Choi, H.W., Chung, S.K., Lee, Y.J.: Numerical solutions for space fractional dispersion equations with nonlinear source terms. Bull. Korean Math. Soc. 47, 1225–1234 (2010)

Deng, W.: Finite element method for the space and time fractional Fokker–Planck equation. SIAM J. Numer. Anal. 47, 204–226 (2008)

Deng, W., Hesthaven, J.S.: Discontinuous Galerkin methods for fractional diffusion equations. ESAIM:M2AN 47, 1845–1864 (2013)

Diethelm, K.: Generalised compound quadrature formulae for finite-part integrals. IMA J. Numer. Anal. 17, 479–493 (1997)

Diethelm, K.: An algorithm for the numerical solution of differential equations of fractional order. Electron. Trans. Numer. Anal. 5, 1–6 (1997)

Ervin, V.J., Roop, J.P.: Variational formulation for the stationary fractional advection–dispersion equation. Numer. Methods Partial Differ. Equ. 22, 558–576 (2006)

Ervin, V.J., Roop, J.P.: Variational solution of fractional advection dispersion equations on bounded domains in \(\mathbb{R}^{d}\). Numer. Methods Partial Differ. Equ. 23, 256–281 (2007)

Ervin, V.J., Heuer, N., Roop, J.P.: Numerical approximation of a time dependent nonlinear, space-fractional diffusion equation. SIAM J. Numer. Anal. 45, 572–591 (2007)

Fix, G.J., Roop, J.P.: Least squares finite-element solution of a fractional order two-point boundary value problem. Comput. Math. Appl. 48, 1017–1033 (2004)

Ford, N.J., Xiao, J., Yan, Y.: Stability of a numerical method for space-time-fractional telegraph equation. Comput. Methods Appl. Math. 12, 1–16 (2012)

Ford, N.J., Rodrigues, M.M., Xiao, J., Yan, Y.: Numerical analysis of a two-parameter fractional telegraph equation. J. Comput. Appl. Math. 249, 95–106 (2013)

Ford, N.J., Pal, K., Yan, Y.: An algorithm for the numerical solution of two-sided space-fractional partial differential equations. Comput. Methods Appl. Math. 15, 497–514 (2015)

Ilic, M., Liu, F., Turner, I., Anh, V.: Numerical approximation of a fractional-in-space diffusion equation II: with non-homogeneous boundary conditions. Frac. Calc. Appl. Anal. 9, 333–349 (2006)

Li, X.J., Xu, C.J.: A space-time spectral method for the time fractional diffusion equation. SIAM J. Numer. Anal. 47, 2108–2131 (2009)

Li, X.J., Xu, C.J.: Existence and uniqueness of the weak solution of the space-time fractional diffusion equation and a spectral method approximation. Commun. Comput. Phys. 8, 1016–1051 (2010)

Li, C., Zeng, F.: Finite difference methods for fractional differential equations. Int. J. Bifurc. Chaos 22, 1230014 (2012)

Li, C., Zeng, F.: Numerical Methods for Fractional Calculus. Chapman and Hall/CRC, New York (2015)

Li, Z., Liang, Z., Yan, Y.: High-order numerical methods for solving time fractional partial differential equations. J. Sci. Comput. 71, 785–803 (2017)

Liu, F., Chen, S., Turner, I., Burrage, K., Anh, V.: Numerical simulation for two-dimensional Riesz space fractional diffusion equations with a nonlinear reaction term. Cent. Eur. J. Phys. 11, 1221–1232 (2013)

Liu, F., Zhuang, P., Turner, I., Anh, V., Burrage, K.: A semi-alternating direction method for a 2-D fractional FitzHugh–Nagumo monodomain model on an approximate irregular domain. J. Comput. Phys. 293, 252–263 (2015)

Liu, Y., Yan, Y., Khan, M.: Discontinuous Galerkin time stepping method for solving linear space fractional partial differential equations. Appl. Numer. Math. 115, 200–213 (2017)

Lubich, C.: Discretized fractional calculus. SIAM J. Math. Anal. 17, 704–719 (1986)

Lynch, V.E., Carreras, B.A., del-Castillo-Negrete, D., Ferreira-Mejias, K.M., Hicks, H.R.: Numerical methods for the solution of partial differential equations of fractional order. J. Comput. Phys. 192, 406–442 (2003)

Meerschaert, M.M., Tadjeran, C.: Finite difference approximations for fractional advection–dispersion flow equations. J. Comput. Appl. Math. 172, 65–77 (2004)

Meerschaert, M.M., Tadjeran, C.: Finite difference approximations for two-sided space-fractional partial differential equations. Appl. Numer. Math. 56, 80–90 (2006)

Meerschaert, M.M., Benson, D.A., Baeumer, B.: Multidimensional advection and fractional dispersion. Phys. Rev. E 59, 5026–5028 (1999)

Meerschaert, M.M., Scheffler, H., Tadjeran, C.: Finite difference methods for two-dimensional fractional dispersion equation. J. Comput. Phys. 211, 249–261 (2006)

Shen, S., Liu, F.: Error analysis of an explicit finite difference approximation for the space fractional diffusion equation with insulated ends. ANZIAM J. 46, C871–C887 (2005)

Tadjeran, C., Meerschaert, M.M.: A second-order accurate numerical method for the two-dimensional fractional diffusion equation. J. Comput. Phys. 220, 813–823 (2007)

Tadjeran, C., Meerschaert, M.M., Scheffler, H.: A second-order accurate numerical approximation for the fractional diffusion equation. J. Comput. Phys. 213, 205–213 (2006)

Tian, W., Zhou, H., Deng, W.: A class of second order difference approximations for solving space fractional diffusion equations. Math. Comp. 84, 1703–1727 (2015)

Yan, Y., Pal, K., Ford, N.J.: Higher order numerical methods for solving fractional differential equations. BIT Numer. Math. 54, 555–584 (2014)

Yang, Q., Liu, F., Turner, I.: Stability and convergence of an effective numerical method for the time-space fractional Fokker-Planck equation with a nonlinear source term. Int. J. Diff. Eqs. 2010, 464321 (2010). https://doi.org/10.1155/2010/464321

Zeng, F., Liu, F., Li, C., Burrage, K., Turner, I., Anh, V.: A Crank–Nicolson ADI spectral method for a two-dimensional Riesz space fractional nonlinear reaction-diffusion equation. SIAM J. Numer. Anal. 52, 2599–2622 (2014)

Zhang, N., Deng, W., Wu, Y.: Finite difference/element method for a two-dimensional modified fractional diffusion equation. Adv. Appl. Math. Mech. 4, 496–518 (2012)

Acknowledgements

Y. Wang’s research was supported by the Natural Science Foundation of Luliang University (XN201510).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wang, Y., Yan, Y. & Hu, Y. Numerical Methods for Solving Space Fractional Partial Differential Equations Using Hadamard Finite-Part Integral Approach. Commun. Appl. Math. Comput. 1, 505–523 (2019). https://doi.org/10.1007/s42967-019-00036-7

DOI: https://doi.org/10.1007/s42967-019-00036-7

Keywords

- Riemann–Liouville fractional derivative

- Space fractional partial differential equation

- Error estimates