Abstract

An inverse procedure is developed and tested to recover functional and structural information from global signals of brains activity. The method assumes a leaky-integrate and fire model with excitatory and inhibitory neurons, coupled via a directed network. Neurons are endowed with a heterogenous current value, which sets their associated dynamical regime. By making use of a heterogenous mean-field approximation, the method seeks to reconstructing from global activity patterns the distribution of in-coming degrees, for both excitatory and inhibitory neurons, as well as the distribution of the assigned currents. The proposed inverse scheme is first validated against synthetic data. Then, time-lapse acquisitions of a zebrafish larva recorded with a two-photon light sheet microscope are used as an input to the reconstruction algorithm. A power law distribution of the in-coming connectivity of the excitatory neurons is found. Local degree distributions are also computed by segmenting the whole brain in sub-regions traced from annotated atlas.

Similar content being viewed by others

1 Introduction

The unmatched ability of the brain to cope with an extraordinarily large plethora of complex tasks, carried out in parallel, ultimately resides in the intricate web of interlinked connections which define the architecture of the embedding neurons’ network. Structural and functional information, as inferred from direct measurements of neuronal activity, under different experimental conditions, are fundamental pieces of a jigsaw puzzle of how the brains works, from simple organisms to more complicated creatures, across phylogenetic scales. Suitable methods have been developed which build on statistical mechanics tools, e.g. maximum entropy principles (Schneidman et al. 2006; Cocco et al. 2009), to resolve the functional map that orchestrates the coordinated firing of neurons dislocated in different portions of the brain. One source of heterogeneity in the brain is network topology. Indeed neurons may also display different intrinsic dynamics, leading to a significant impact on the functioning of the brain (Tripathy et al. 2013). Neuronal excitability, namely the ability of neurons to respond to external inputs, is finely controlled through inhibitory/excitatory balance (Dehghani et al. 2016; Kaczmarek and Levitan 1987). Furthermore, individual neurons can display a variable degree of inherent excitability, a source of spatial quenched disorder which reflects back in the ensuing activation patterns.

Motivated by this, in Adam et al. (2020) we proposed and tested against both synthetic and real data, an inverse scheme to quantify the statistics of neurons’ excitability, while inferring, from global activity measurements, the, a priori unknown, distribution of network connectivities. The method employs an extended model of Leaky-Integrate and Fire (LIF) neurons, with short-term plasticity. Only excitatory neurons are accounted for in Adam et al. (2020). These are assumed to be coupled via a directed network and display a degree of heterogeneity in the associated current, which sets the firing regime in which a neuron operates. The inverse scheme builds on the celebrated Heterogenous Mean-Field (HMF) approximation (Barrat et al. 2008; Dorogovtsev et al. 2008; Pastor-Satorras and Vespignani 2001; Vespignani 2012) and seeks to recover the distribution of the (in-degree) connectivity of (excitatory) neurons, concurrently with the distribution of the assigned currents, denoted by a. The HMF approximation was previously employed in Burioni et al. (2014), di Volo et al. (2014), di Volo et al. (2016), and Adam et al. (2019) to reconstruct the topology of an underlying network from artificially generated data, meant to mimic neuronal signals. In Adam et al. (2020) the approach was generalized so as to account for the dynamical heterogeneity, as stemming from the intrinsic degree of individual neurons’ excitability. As mentioned above, individual excitability acts as a key component of the dynamics and yields irregular patterns of activity like those displayed in real measurements. The reconstruction scheme was applied in Adam et al. (2020) to longitudinal wide-field fluorescence microscopy data of cortical functionality in groups of awake mice and enabled us to identify altered distributions in neuron excitability immediately after the stroke, and in agreement with earlier observation (Schiene et al. 1996; Neumann-Haefelin et al. 1995; Berger et al. 2019). Conversely, rehabilitation allowed to recover a distribution similar to pre-stroke conditions.

The goal of this work is to push forward the reconstruction algorithm by accounting for the simultaneous presence of both excitatory and inhibitory neurons, in a refined variant of the inversion scheme proposed in Adam et al. (2020). Notice that already in di Volo et al. (2016) intertangled families of excitatory and inhibitory neurons have been considered, but only in a simplified setting where currents were assumed to be homogeneous. Relaxing this ansatz proves however mandatory when aiming at bridging the gap between theory and experiments, a challenge that we shall hereafter tackle. In particular, we will recast the dynamics of the examined LIF model in a reduced setting by grouping in different classes (excitatory and inhibitory) neurons which bear distinct values of the current a and of the connectivity k.

Our extended inverse method aims at computing the distribution of the currents, as well as the distributions of the connectivities, for respectively excitatory and inhibitory neurons, via an iterative scheme which self-consistently identifies the classes of neurons needed to interpolate the global activity field, supplied as an input to the algorithm. First, the performance of the method is evaluated in silico, against synthetically generated data. We then move forward to considering a direct application of the developed technique to custom-made two-photon Light Sheet (2PLS) microscope, optimized for high-speed (1 Hz) volumetric imaging of zebrafish larva (ZL, Danio rerio). Near infrared (NIR) light is used for excitation, covering a wavelength range that is not visible to the larva in order not to induce unwanted visual responses. Hence 2PLS microscopy allows to record whole-brain activity with high temporal and spatial resolution, by preventing undesired external bias (Wolf et al. 2015; de Vito et al. 2020; de Vito et al. 2020). The experimental input is processed with a properly devised methodology to return a spatially resolved raster plot for the spiking activity of neurons over time, which provides the ideal input for the reconstruction method to work. A power law distribution of the in-coming connectivity of excitatory neurons is found, which is robust over a significant range of the imposed fraction of inhibitory neurons. Local degree distributions are also recovered by partitioning the whole brain in bound sub-domains, traced from annotated atlas (Randlett et al. 2015; Kunst et al. 2019). When manipulating experimental data one cannot distinguish among the contributions resulting from different neurons (excitatory vs. inhibitory). A procedure is however developed which allows for the degree distribution of the excitatory neurons to be determined, while accounting for the role exerted by the population of inhibitory ones.

The paper is organized as follows. Next section is devoted to introducing the reconstruction scheme and to challenge its performance against synthetically produced data. We will then turn to presenting the experimental platform and discuss the details that relate to data processing. Processed data are supplied as an input to the mathematical reconstruction scheme to yield the results which are presented in Section 3.2. Finally we will sum up and draw our conclusions.

2 General mathematical framework

2.1 The model

We use a Leaky Integrate and Fire (LIF) model to mimic the dynamics of individual neurons. Consider a pool of N LIF neurons and denote by vi(t) the membrane potential of neuron i. Further, label with \({I}_{i}^{syn}(t)\) the synaptic current due to the incoming connections with other neurons of the collection.

The dynamics of the membrane potential is hence ruled by the following equation

where ai stands for the external input current of neuron i. This is a crucial quantity, as it sets the dynamics of the corresponding neuron, in the uncoupled regime. The critical value ai = 1 separates between quiescent and active (spiking) regimes. When vi reaches the threshold value vth, neuron i emits a spike and the membrane potential vi is reset to the base value vr. Following (di Volo et al. 2016), the membrane potential is rescaled by a suitable amount to have the spike threshold set at vth = 1 and the rest potential at vr = 0.

The Tsodyks, Uziel, and Markram model (Tsodyks et al. 1998; Tsodyks and Markram 1997) is assumed to describe the interactions among neurons, i.e. their coupling dynamics. More specifically, the dynamics of a synapse is expressed in terms of fractions of three different neurotransmitter states: active (yij(t)), available (xij(t)) and inactive (zij(t)), where i and j stand respectively for post-synaptic and pre-synaptic neurons. The obvious constraint xij(t) + yij(t) + zij(t) = 1 applies, for any time t.

During a spike of the pre-synaptic neuron, a fraction uij of neurotransmitters in the available state is activated. The time evolution of the three states

takes as input the spike train \(S_{j}(t)={\sum }_{m} \delta (t-t_{j}^{*}(m))\) of pre-synaptic neuron j emitting its n-th spike at time tj(n) (Tsodyks et al. 2000). All variables can be rescaled to work with adimensional units (Burioni et al. 2014; di Volo et al. 2014; 2016). The time is rescaled to the membrane time constant τm = 30ms. The adimensional time constants are consequently set to τin = 0.2, \({{\tau }_{r}^{i}}=3.4\) if the post-synaptic neuron i is inhibitory, or \({{\tau }_{r}^{i}}=26.6\) if it is excitatory (di Volo et al. 2016).

If the post-synaptic neuron i is excitatory, the fraction uij(t) of neurotransmitters is set to the constant value of U = 0.5. Otherwise, uij(t) evolves in time

where τf = 33.25 and Uf = 0.08 (Tsodyks et al. 2000; di Volo et al. 2016).

Equations (2a) are coupled to Eq. (1) via the synaptic current

where g is the coupling parameter and Aij stands for the elements of the network adjacency matrix A. The matrix entry is Aij = 1, if a link exists which goes from j to i, and provided j is an excitatory neuron. Conversely, Aij = − 1 if the starting node j identifies inhibitory neuron. On the other hand, if Aij = 0 a direct connection from j and i does not exist.

Equations (2a) can be cast in a more compact form so as to favour insight into the inspected processes and reduce the associated computational costs. To achieve this, we first notice that Eq. (2a) only depend on the pre-synaptic neuron j and the characteristics of the post-synaptic neuron i. Equation (2a) can hence be split into two distinct equations:

where the apexes E (Excitatory) and I (Inhibitory) reflect the specificity of the target neuron. Similar arguments apply to Eqs. (2b), (2c), and (3).

For each type of synapse, the average field, i.e. the fraction of neurotransmitters in the active state, is calculated as

where \(\mathcal {E}\) and \(\mathcal {I}\) are the ensemble of excitatory and inhibitory neurons, respectively; fI stands for the fraction of inhibitory neurons in the network. The global fields are defined as

2.2 The heterogeneous mean field ansatz

The model described in the previous section is reformulated here in terms of a Heterogeneous Mean Field (HMF) approximation. The original neurons are classified according to their characteristics. More specifically, neurons of the same type (excitatory or inhibitory) and with the same incoming connectivity k and external current a are considered identical. Therefore, L × M equivalence classes are defined, where L (M) is the number of sub-intervals in which the value range of k (a) has been divided. Moreover, we assume that neurons in the class k are subjected to a synaptic current proportional to their in-degree, i.e.,

for the excitatory and inhibitory neurons, respectively. Following this assumption, the model can be rewritten as

where (‡,∗) identify all possible pairs of post-synaptic and pre-synaptic neurons. Denote by PE(k) and PI(k) the in-degree distributions for the excitatory and inhibitory neurons, respectively, and P(a) the external current distribution. Equations (9) can be closed by the consistency relations

Taking in account the discretization of the defined classes of equivalence, Eq. (10) turns into

where we have implicitly introduced the discrete counterpart of the continuous probability distributions

2.3 Reconstruction scheme

In this section, we set up a general reconstruction scheme for recovering the a priori unknown distributions for the in-degree PE(k) and PI(k), as well as the external current P(a). This is achieved by interpolating the available global fields YE(t) and YI(t), under the simplified HMF descriptive framework. The main steps of the reconstruction algorithm are schematically depicted in Fig. 1a.

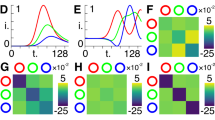

a Schematic outline of the reconstruction procedure. The global fields YE(t) and YI(t) constitute the inputs of the model in the HMF approximation (i). Different choices for the probability distributions PE(k), PI(k), and P(a) are iteratively tested in order to find the best match between the input fields and the reconstructed fields, as obtained by using the equations displayed in the red box (ii). b Outcome of the reconstruction procedure: the true probability distributions of a synthetic network are compared with those obtained with the proposed reconstruction method. A random network with N = 5000 is considered here. The fraction of inhibitory neurons is set to fI = 0.05. The number of classes defined in the HMF approximation for the in-degree and the external current is L = 250 and M = 250 respectively. c Comparison between the true global fields and the ones obtained via the reconstructed distributions. The plot in the inset is a zoom in of a peak. D-E) Outputs of the reconstruction are compared with the true external current probability distribution P(a) and the true in-degree distribution PE(k) for the excitatory neurons of the same network; the network is made of N = 1000 neurons of which a fraction fI = 0.2 are inhibitors. In the HMF approximation one hundred classes have been defined for both the in-degree and external current, namely, L = 100 and M = 100. In D) the correct fraction of inhibitory neurons is taken into account, while in E) the inhibitory neuron effects are not considered

As already mentioned, we assume the global fields YE(t) and YI(t) to be given. Then we integrate Eq. (9), by using these global fields YE(t) and YI(t) as inputs to the model. The equations are initialized with variables (\(v_{k,a}(t_{0}), {y}_{k,a}^{(\dagger ,*)}(t_{0}), {z}_{k,a}^{(\dagger ,*)}(t_{0})\)) randomly drawn from a uniform distribution, and generated so as to respect the constraints \(v^{\dagger }_{k,a}(t_{0})<1\) and \(y_{k,a}^{(\dagger ,*)}(t_{0}) + z_{k,a}^{(\dagger ,*)}(t_{0})<1\). Forced by the external fields YE(t) and YI(t), the governing equations are integrated forward and the variables \(y_{k,a}^{\dagger *}(t)\) are stored for each class (k,a), type of synapse (‡,∗), and time t. This process is repeated for H independent realizations of the initial conditions. The average fraction of neurotransmitters in the active state, for each class (k,a) and synapse type, is computed at any time of observation t, i.e., \(\left \langle y_{k,a}^{(\dagger ,*)}(t)\right \rangle =1/H{\sum }_{h=1}^{H}\left ({y}_{k,a}^{(\dagger ,*)}(t)\right )_{h}\).

Then, the approximated global fields \(\tilde {Y}_{\dagger *}\) are calculated, via Eq. (11), for an initial guess of the distributions PE(k), PI(k), and P(a). These latter are then recursively modified so as to improve the correspondence between the approximated fields and their true homologues Y‡∗.

Formally, for each (‡,∗) ∈{EE,EI,IE,II}, we aim at minimizing the function

Note that the arguments of the above function are the target probability distributions P∗(k) and P(a) that one aims at inferring.

The iterative algorithm operates as follows. The distribution P(a) is initially frozen to a given profile and the quantities \(y_{k_{l}}^{\dagger *}(t)={\sum }_{m=1}^{M} P(a_{m}) \left \langle {y}_{k_{l},a_{m}}^{\dagger *}(t)\right \rangle \) are hence evaluated. The inverse problem yields therefore

where the first row reflects the normalization condition. The problem is hence reduced to a linear system that can be readily solved to obtain a first estimate of the distributions PE(k) and PI(k).

As second step, the in-degree distributions PE(k) and PI(k) are fixed to the solutions found at the previous iteration. Similar to step one, we evaluate the quantities \({y}_{a_{m}}^{\dagger *}(t)={\sum }_{l=1}^{L} P_{*}(k_{l}) \left \langle {y}_{k_{l},a_{m}}^{\dagger *}(t)\right \rangle \) and formulate the linear problem

The above problem can be solved to obtained an updated estimate for the P(a). The overall procedure, consisting of two nested steps, is iterated until a maximum number of allowed cycles is reached, or, alternatively, the stopping criterion is eventually met.

Before proceeding in the analysis, we introduce a slightly modified notation. The in-degree k is normalized to the size of the network N. In formulae we will set \(\tilde {k}=k/N\), with \(\tilde {k}\in [0,1]\). From hereon the distributions that constitute the target of the reconstruction scheme will be hence expressed as function of \(\tilde {k}\), instead of k.

3 Application to data

3.1 Synthetic data

In this section we test the proposed reconstruction protocol against synthetic data. The reconstruction scheme was successfully validated on synthetic data for the case of homogeneous external current in di Volo et al. (2016) and Adam et al. (2020). Here, we test the reconstruction method on synthetic networks of excitatory and inhibitory neurons, assuming a quenched distribution of heterogeneous external currents. To this end we generate a random graph with N nodes whose structural characteristic is contained in the signed N × N adjacency matrix A, which specifies the existence of (directed) links among pairs of adjacent nodes. Following the convention introduced above, negative entries (Aij = − 1) indicate that the starting node (j) is of the inhibitory type, whereas for positive elements (Aij = 1) j belongs to the family of excitatory neurons. The network generation procedure is conceived so as to return a bell shaped distribution for both \(P_{E}(\tilde {k})\) and \(P_{I}(\tilde {k})\) (see Fig. 1b). Quenched disorder in the input currents is introduced, the assigned currents being distributed according to a uni-modal profile P(a) (see Fig. 1b). These are the exact distributions that we eventually seek to recover via the aforementioned reconstruction algorithm. Note that the domain of definition of P(a) includes the bifurcation value a = 1.

With this setting, Eqs. (1) and (2a) are integrated and the fields YE and YI are calculated by using Eqs. (6) and (7). This is possible because we have access to all information which pertain to the network architecture and to the heterogenous collection of randomly generated currents.

The recorded global fields YE and YI are used as inputs to the reconstruction scheme presented in the previous section. Figure 1b shows the comparison between true and estimated distributions, at the end of the reconstruction procedure and for one generation of the synthetic network. By inserting the estimated distributions into Eq. (11), we obtain the global fields \(\tilde {Y}_{E}\) and \(\tilde {Y}_{I}\). The comparison between estimated (\(\tilde {Y}_{E},\tilde {Y}_{I}\)) and true (YE,YI) global fields, as obtained by working on the index space, is presented in Fig. 1c. The agreement is excellent for both the inhibitory and the excitatory components.

When working with experimental data, however, one cannot isolate the contributions stemming from different neurons, grouped according to their specific traits (excitatory vs. inhibitory). This implies that the sums in Eq. (6), i.e. the input to the envisaged reconstruction scheme, cannot be in general accessed, as it was instead the case when working in the framework of the synthetic model considered above. To overcome this intrinsic limitation, we propose and test an alternative route, which performs adequately well when challenged against synthetic data. The idea is to propose an approximated version of the input Eqs. (6). To this end, we extend the sums which run on the excitatory neurons to all neurons and compute, under this approximation, the fields YEE and YIE. To validate this hypothesis we operate with synthetic networks with unclassified neurons. It can be shown that the approximation for the fields YEE,YIE correctly describes YEE, but not YIE. This conclusion is supported by systematic numerical investigations (data not shown), that we carried out by varying the relative proportion of excitatory and inhibitory neurons. Building on the above, we therefore write:

which enables us to compute the approximated field YEI as

Finally, we can estimate YE as:

Remark that the above expression for YE is obtained without grouping the neurons in excitatory and inhibitory classes, but provided fI, the fraction of inhibitory neurons, is eventually known. As we lack information on the corresponding field YI, we can run the reconstruction scheme in a simplified setting which is solely targeted to reconstructing the probability distributions P(a) and \(P_{E}(\tilde {k})\). In Fig. 1d, the results of the revisited inversion method are displayed, having set fI to the correct value, i.e. assuming the relative proportion of excitatory and inhibitory neurons which has been effectively employed in generating the synthetic dataset. The reconstruction algorithm is still capable of returning a faithful representation of both P(a) and \(P_{E}(\tilde {k})\). Conversely, when fI is set to zero, the reconstruction scheme compensates for the missing inhibitory component by predicting a reduced average connectivity of the excitatory population, as compared to the correct value assumed in the data generation scheme, see Fig. 1e. In the following, we will apply the reconstruction scheme in this latter version to the analysis of 2PLS microscope images of living zebrafish larva.

3.2 Experimental data

In this section we apply our reconstruction framework to calcium fluorescence microscopy data of zebrafish larva brain. Indeed, each time a neuron fires an action potential, the strong depolarization occurring triggers a rapid and transient increase of intracellular calcium concentration (Baker et al. 1971). Thus, following calcium-dependent fluorescence dynamics represents an indirect measurement of neuronal spiking activity (Grienberger and Konnerth 2012). A description of the experimental set up is provided in the Methods section.

3.2.1 Data processing

In order to apply the inverse scheme to real data, it is necessary to pre-process the wide field calcium images with the purpose of first identifying the location of the neurons. From individual traces of each spotted neuron, we will single out the spiking events, record the times of occurrence and build up the corresponding raster plot. This will serve as input to the reconstruction algorithm.

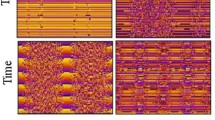

To this aim, data are first downsampled to 2 × 2 pixels so that the new pixel size is comparable to the neurons’ nuclear size (Fig. 2a). For every new pixel, the maximum value of the calcium fluorescence is calculated and only the pixels with maximum intensity projection (MIP) above a threshold are identified as neurons. The threshold is fixed to the average MIP value over all the image pixels. Furthermore, we operate a moving average to remove low frequency fluctuation (Fig. 2b) and select as presumed neurons the pixels that show large asymmetry in the recorded traces. To implement this step, we compute the skewness from individual time series of the calcium activity and classify as neurons the pixels that yield a sufficiently skewed signalFootnote 1. Figure 2c outlines the different phases of the process for one of the layers of the collection. More specifically, in Fig. 2c.i the MIP of the down-sampled pixels are depicted, in grey scale. In Fig. 2c.ii a binary representation of the whole brain is displayed where only pixels with MIP above the fixed threshold are highlighted. Lastly, in Fig. 2c.iii only the pixels which exhibit a strong asymmetric signal, i.e. the neurons with skewness above the imposed threshold, are shown. At the end of the selection process the number of identified neurons is around (1 ÷ 3) × 103 for each layer, which correspond to a total of 49 × 103.

Main steps of experimental data elaboration. Every layer of the imaged 3D zebrafish brain is spatially downsampled, as shown in panel a in order to obtain signals from pixel ensembles of size comparable to a neuron (2 × 2 pixels). b Detrending for slow oscillations by subtraction of moving average. c Results of neurons selection for one of the layers: (i) raw data, (ii) selection of pixels with maximum value above a fixed threshold, and (iii) only pixels with skeweness larger than 0.4. At the end of the neurons selection procedure the number of identified neurons in the whole brain is about 5 ⋅ 104. d Procedure to obtain the experimental raster plot starting from calcium fluorescence time series of the selected neurons. A spike is identified by its upwards threshold crossing time

Once the neurons are identified, we proceed to construct the raster plot. To this aim, for each selected neuron we analyze the time series of the calcium fluorescence to remove the background noise and detect events, which we call spikes. More specifically, a spike is defined as the time of threshold crossing. The thresholds are set to the mean value of the recorded time series plus two times the associated standard deviation. In addition, in order to avoid double detections due to noise, we discarded all events that succeeded the previous event by less than a minimum inter-event interval of 5 data points (5 seconds)Footnote 2 (Chen et al. 2013). The general overview of the spike trains emitted by neurons in a sample layer results in a raster plot (Fig. 2d). Time is on the horizontal axis, whereas the vertical axis displays the neuron indices. Each spike of neuron i is associated with a red dot in the row i, at the corresponding time of spiking.

3.2.2 Results

As described in the previous section, we process 3D calcium fluorescence data so as to identify pixels containing neurons. Figure 3 shows the results of this identification for eight different layers of the zebrafish brain. Colors reflect the average cross-correlationFootnote 3 at lag zero of each neuron with all other selected neurons of the brain. The higher the correlation, the more reddish the color displayed. The patterns of correlations are rather symmetric, an observation which can be interpreted as an a posteriori validation of the implemented procedure for automatic neurons selection. A movie which allows to navigate across successive layers of the whole 3D stack can be found in the SM.

The processing of data explained above allows us to obtain an experimental raster plot describing the events, or spikes, associated to each neuron. Indeed, the raster plot contains information about the spike train function Si(t), for all neurons i, and can be readily employed to recover the global field YE to be supplied as an input to the reconstruction scheme. More explicitly, the experimentally determined Si(t) is used to integrate Eqs. (2a) and (3), by breaking the coupling with Eq. (1) which sets the evolution of the membrane potentialFootnote 4. This is of great advantage since Eq. (1) contains the specific information about the network connections, i.e., the adjacency matrix elements, which are a priori unknown. In other words, the raster plot provides us a way to compute the global field (input of the reconstruction process described in Section 2.3) without knowing the underlying structure of the network.

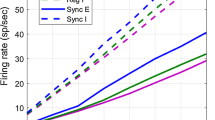

Since the true fraction fI of inhibitory neurons is unknown, the global field YE in the approximated form [Eq. (17)] is computed for different values of fI. In particular, we first reconstruct the in-degree distribution \(P_{E}(\tilde {k})\) for the excitatory neurons and the external current distribution P(a) for different values of fI and we store the resultsFootnote 5. Secondly, we computed the reconstruction error \(MSE=1/T{{\sum }_{t}^{T}}(Y(t)-\tilde {Y}(t))^{2}\) for all the considered values of fI, comparing the estimated field \(\tilde {Y}_{E}\) with the one used as input in the reconstruction scheme. Figure 4a reports on this comparison in the case of fI = 0.05. In Fig. 4b, the MSE is plotted against different values of fI. Small errors are found over a large (and biologically meaningful) interval of values for fI, approximately fI ∈ (0,0.4]. For this reason, we focus on five different choices of fI, i.e., fI = 0.05,0.1,0.2,0.3,0.4, to explore a wide range of results for the reconstruction scheme, when sampling the region of parameters in which the interpolation of the experimental time series proves accurate. The reconstructed distributions P(a) and \(P_{E}(\tilde {k})\) are plotted in Figs. 4c,d. For every choice of fI, over the spanned interval, the reconstructed distributions show common features. In particular, the external current distribution P(a) is peaked in the vicinity of the critical value a = 1 (Fig. 4c). The neurons associated to a > 1 get self-excited and promote the activation of other neurons which would be instead quiescent in the uncoupled limit. The small bumps that are found for relatively large values of the intensities a can be traced back to the high frequency component of the signal to be interpolated, and, as such, bear limited fundamental interests. The large-scale dynamics of the recorded time-series, including the heterogeneous modulation of the macroscopic field oscillations, is instead encoded in the distribution of intensities that define the bulk of P(a), i.e. the limited excerpt of curve which is found in correspondence of the leftmost portion of the support in a.

Reconstruction of the two distributions P(a) and \(P_{E}(\tilde {k})\) from calcium fluorescence microscopy data of zebrafish larva brain. a Comparison between the global field obtained from the experimental raster plot and the one that follows the reconstructed distributions \(P_{E}(\tilde {k})\) and P(a), for a fraction fI = 0.05 of inhibitory neuron. b Mean square error \(MSE=1/T{{\sum }_{t}^{T}}(Y(t)-\tilde {Y}(t))^{2}\) for different choices of fI. c-d Reconstructed probability distributions P(a) and \(P(\tilde {k})\) for five different choices of fI. For each setting, we ran H = 50 independent realizations of the iterative reconstruction scheme, starting from different initial conditions. The histograms represent the mean values and the error bars stands for the associated variance. e In-degree probability distributions in logarithmic scale and their best linear fitting

The reconstructed in-degree distributions \(P_{E}(\tilde {k})\) for the excitatory neurons, at different values of fI, are depicted in Fig. 4e, in log-log scale. Although over a limited support in \(\tilde {k}\), the obtained distributions seem to display a power law decay, \(P_{E}(\tilde {k})\propto \tilde {k}^{-\alpha }\), the characteristic exponent α being only modestly influenced by the chosen value of fI. Our findings suggest that excitatory neurons are organized in a network with few hubs and many more peripheral nodes. This result is consistent with the findings of previously published studies where the network degree distribution has been identified as scale-free (Avitan et al. 2017; Pastore et al. 2018).

As an additional test, we partition the full set of identified nodes in 10 different populations, reflecting distinct anatomical regions, as follows available atlases (Naumann et al. 2010; Kunst et al. 2019). The reconstruction algorithm is then applied to each of the selected region, treated as independent from the surrounding context, so as to access the local degree distribution. Results, displayed in Fig. 5, are in line with those reported in Fig. 4. Moreover, neurons characterized by a significant connectivity, the above referenced hubs, seem unevenly distributed across different anatomical regions.

4 Conclusions

Reconstructing structural and functional information from brain activity represents a topic of outstanding importance, which can in principle trigger applied and fundamental fallout. In Adam et al. (2020) we presented, and successfully tested, an inverse scheme which aimed at inferring the distributions of both firing rates and networks connectivity, from global activity fields. The method builds on the Leaky-Integrate and Fire (LIF) model which we modified by the inclusion of quenched disorder, in the assigned individual currents. The imposed degree of heterogeneity in the currents yields non trivial a-periodic patterns, which resemble those recorded in vivo. The dynamical model considered in Adam et al. (2020) solely included the population of excitatory neurons. Starting from these background, we here have generalized the reconstruction procedure of Adam et al. (2020) so as to account for the simultaneous presence of both excitatory and inhibitory neurons, while still dealing with the effect of the current heterogeneity. The dynamics of the examined multi-species LIF model is recast in a simplified framework, by grouping together neurons that belong to the same class (inhibitory vs. excitatory), while sharing the similar currents and in-degree. The output of the reduced model, driven by the excitatory and inhibitory global fields, is self-consistently used to seed an iterative scheme which seeks at fitting the supplied fields, via suitably adjusting the unknown distributions. These latter are the distributions of the incoming degrees for, respectively, excitatory and inhibitory neurons, as well as the distribution of the imposed currents. The method is tested on synthetic data and yields satisfying performances. Having in mind applications to real data, we also dealt with a setting where it is not possible to separate the contribution that pertains to the excitatory component from that stemming from the inhibitory counterpart. In this case, we propose and test a procedure which enables to recover the distribution of incoming degrees for the network of excitatory neurons (assumed predominant), while gauging the role exerted by inhibitors.

The devised protocol is then applied to whole-brain functional data resulting from light-sheet calcium imaging of a zebrafish larva. The experimental input is processed with an automatic procedure which allows us to identify putative neurons , and to extract their fluorescence signal. Remarkably, the cross-correlation maps produced show a high grade of clusterization, which faithfully matches the anatomical boundaries of multiple brain regions identified using zebrafish brain atlases (Randlett et al. 2015; Kunst et al. 2019). From the calcium signal displayed by each selected neuron we build up an experimental representation of the raster plot of the spiking activity of the zebrafish brain, which forms the input to the reconstruction scheme. A power law distribution of the in-coming connectivity of excitatory neurons is found, which is only modestly affected by the imposed fraction of inhibitors. Local degree distributions are also reconstructed by analysing the signal from specific regions, which correspond to distinct anatomical areas. Interestingly, the anatomical districts considered in the analysis can be divided into two different groups, according to the reconstructed probability distributions of both their excitatory incoming connections \(P_{E}(\tilde {k})\) and excitability P(a). The first group, including dorsal thalamus, medial tegmentum, superior raphe, hindbrain and spinal cord, is characterized by higher connections and lower excitability. Conversely, the second group, comprising telencephalon, habenulae, optic tectum and cerebellum, is described by lower connections and higher excitability. The reconstruction scheme reflects the specific functional connectivity of the larval brain during spontaneous activity precisely under these experimental conditions. Indeed, during measurements the zebrafish larva is embedded in agarose, and thus exposed to a diffused tactile stimulation, which could explain the higher incoming connections calculated for the dorsal thalamus, a sensory relay station (Northcutt 2008; Mueller 2012). Furthermore, despite being mechanically and pharmacologically immobilized, larval attempts to escape the restrained condition could account for the higher incoming excitatory connections calculated for dorsal raphe (whose activity has been correlated with arousal state, vigilance and responsiveness (Yokogawa et al. 2012)) and for the most caudal regions, namely hindbrain and spinal cord, responsible for the initiation of motor behaviours (Garcia-Campmany et al. 2010; Kinkhabwala et al. 2011). Moreover, in this scenario we observe a lower probability distribution of incoming excitatory connections for cerebellum. This result may be associated to the function of motor coordination and refinement (Heap et al. 2013; Kaslin and Brand 2016) of this region, typically related to the actual execution of a movement. Finally, since the measurement is performed in complete darkness, the larva is not exposed to any visual cue (the IR laser used for two-photon excitation is not perceived by the larval visual system) and this could explain the lower incoming connections calculated for the optic tectum, the main retinorecipient structure in the zebrafish brain (Sajovic and Levinthal 1982; Gebhardt et al. 2019). The large-scale oscillations in the recorded time series reflect back in the recovered distribution of currents: a significant fraction of neurons appear to operate in the quiescent state, while a minority self-excite to orchestrate the dynamics of the ensemble. The existence of possible correlations between individual connectivities and associated neurons’ currents cannot be resolved within the proposed approach and defines an interesting target for future investigations.

Studies show that inhibitory neurons have a higher excitability with respect to excitatory neurons in the cortex. This feature can be accounted for in the model by making the parameter a dependent on the type of neuron,i.e. by introducing explicitly aE and aI in Eqs. 9. These changes would call for a modified inverse scheme, which should be targeted to reconstructing the distributions of aE and aI. This extension of the current reconstruction scheme is left for future work.

5 Materials and methods

5.1 Validity of the HMF approximation

We here challenge the predictive ability of the HMF approximation. To this end, we first calculate the average inter-spike interval (ISI) – the average distance in time between successive spikes – for the system in its original formulation, i.e. in the space of the nodes. The computed ISI is confronted to the homologous quantity obtained under the HMF scenario. The comparison is drawn in Fig. 6a, for both excitatory and inhibitory neurons, and confirm the accuracy of the reduced HMF scheme.

a The ISI is computed for both excitatory (red symbols, top panel) and inhibitory (purple symbols, bottom panel) neurons. The prediction based on the HMF approximation yields the continuous curves. Here N = 1000 and fI = 0.2. b The relative error 〈ΔYEE/YEE〉t committed when using the approximation Eq. (15) is plotted for different values of fI

5.2 Validity of the Y EE approximation for the experimental reconstruction

As previously mentioned, we made use of approximation Eq. (15) to estimate the field YEE to be supplied as the experimental input in the reconstruction scheme. We can evaluate the error made when implementing this latter approximation by comparing it with the exact form for YEE defined in Eq. (6). This is of course possible when dealing with synthetically generated data. Referring to the approximated field with \({Y}_{EE}^{appx}\), we find:

Hence, the error we commit by using the approximated field \(Y_{EE}^{appx}\) is:

and it increases as fI increases. Figure 6b shows 〈ΔYEE/YEE〉t for different values of fI. As expected, the approximation gets worse as fI increases. The non-linearity in the error plot is originated from the term YEI − YEE, that depends on the fraction of inhibitory fI. The approximation gets worse as fI increases and, therefore, it can be safetly used only for sufficiently small values of fI. This could be also one of the reasons for the large errors reported in Fig. 4b for relatively large values of fI.

5.3 Experimental setup

The experimental optical setup employed is a modified version of the setup described in detail in (de Vito et al. 2020). Briefly, 930 nm NIR light is generated by a pulsed titanium-sapphire oscillator (Chameleon Ultra II, Coherent) and conditioned by a pulse compressor (PreComp, Coherent). After being attenuated by a combination of a half-wave plate and a Glan–Thompson polarizer, the beam passes through an electro-optical modulator that periodically switches its polarization plane orientation between two states: parallel or orthogonal to the optical table surface. Then a pair of retarders are used to pre-compensate for polarization distortions. After that, the beam is routed to the galvanometric mirror assembly and scanned by a resonant galvanometric mirror (CRS-8 kHz, Cambridge Technology) along larval rostro-caudal direction, to generate the digitally-scanned LS, and by a closed-loop galvanometric mirror (6215H, Cambridge Technology) along larval dorso-ventral direction. Finally, the beam is relayed to an objective (XLFLUOR4X/340/0,28,Olympus), placed at the lateral side of the larva, by a scan-lens (50 mm focal length), a tube-lens (75 mm focal length) and a pair of relay lenses (250 mm and 200 mm focal lengths).

Differently from the setup described in de Vito et al. (2020), a polarizing beam splitter (PBS) is present between the tube-lens and the first relay lens and the optical components downstream the PBS are duplicated on the opposite side of the larva. In this way the excitation light is steered on one optical arm or on the other depending on its instantaneous polarization orientation by the PBS.

The detection arm of the microscope is identical to what described in de Vito et al. (2020). A water-immersion objective (XLUMPLFLN20XW, Olympus) placed on the dorsal side of the larva collects the emitted green fluorescent light while being scanned along the axial dimension by an objective scanner (PIFOC P-725.4CD, Physik Instrumente) synchronously with the closed-loop galvanometric mirror oscillations. The optical image is then spectrally filtered (FF01-510/84-25 nm BrightLine®;, Semrock) and projected on a camera (ORCA-Flash4.0 V3, Hamamatsu) sensor by a sequence of two tube lenses (300 mm and 200 mm focal lengths) and an objective (UPLFLN10X2, Olympus).

The larvae were imaged with volumetric acquisitions (frequency: 1 Hz) composed by 31 planes spaced by 5 μm and with a pixel size of about 2 × 2 μm2.

We employed a transgenic strain of zebrafish larvae Tg(elavl3:H2B-GCaMP6s) (Vladimirov et al. 2014; Müllenbroich et al. 2018) in homozygous albino background that express the fluorescent calcium indicator GCaMP6s under a pan-neuronal promoter and with a nuclear localization. Sample mounting was performed as described in Turrini et al. (2017). Briefly, before the acquisition each larva was immersed in a solution of the paralyzing agent d-tubocurarine (2 mM; 93750, Sigma-Aldrich), included in 1.5% (w/v) low gelling temperature agarose (A9414, Sigma-Aldrich) in fish water (150 mg/L Instant Ocean, 6.9 mg/L NaH2PO4, 12.5 mg/L Na2HPO4, pH 7.2) and mounted on a custom-made glass support immersed in thermostated fish water. The animals were maintained according to standard procedures (Westerfield 2000) and observed at 4 days post fertilization. Fish maintenance and handling were carried out in accordance with European and Italian law on animal experimentation (D.L. 4 March 2014, no. 26).

Notes

The skewness threshold is here put to 0.4. In doing so we select a number of putative neurons which is comparable to the known size of a zebrafish larva brain (Naumann et al. 2010)

An additional analysis has been carried out using a minimum inter-event interval of 2 data points. From the corresponding raster plot, the global fields have been calculated and they appear indistinguishable from the ones obtained using a minimum inter-event interval of 5 data points.

Cross-correlation measures the similarity between two series at different time shifts, or lags. In formulae, the cross-correlation between two vectors xt and yt at lag τ is defined as the expected value of the product of the shifted copy of xt and the complex conjugate of yt, i.e., \(R_{\tau }(x,y)=\mathbf {E}(x_{t+\tau }y_{t}^{*})\), where the asterisk denotes the complex conjugation.

The reactions parameters are set to the nominal values as declared above (di Volo et al. 2016). The same parameters are assumed in the reconstruction, and, in this respect, the model equations acts as a filter to transform the supplied fluorescence data in the ideal input for the inverse procedure to run.

References

Adam, I., Cecchini, G., Fanelli, D., Kreuz, T., Livi, R., di Volo, M., Allegra Mascaro, A.L., Conti, E., Scaglione, A., Silvestri, L., & Pavone, F.S. (2020). Inferring network structure and local dynamics from neuronal patterns with quenched disorder. Chaos, Solitons and Fractals, 140, 110235. https://doi.org/10.1016/j.chaos.2020.110235.

Adam, I., Fanelli, D., Carletti, T., & Innocenti, G. (2019). Reactive explorers to unravel network topology. The European Physical Journal B, 92(5), 99. https://doi.org/10.1140/epjb/e2019-90700-3.

Avitan, L., Pujic, Z., Mölter, J., Van De Poll, M., Sun, B., Teng, H., Amor, R., Scott, E.K., & Goodhill, G.J. (2017). Spontaneous Activity in the Zebrafish Tectum Reorganizes over Development and Is Influenced by Visual Experience. Current Biology, 27(16), 2407. https://doi.org/10.1016/j.cub.2017.06.056.

Baker, P., Hodgkin, A., & Ridgway, E. (1971). Depolarization and calcium entry in squid giant axons. The Journal of Physiology, 218(3), 709.

Barrat, A., Barthelemy, M., & Vespignani, A. (2008). Dynamical processes on complex networks. Cambridge: Cambridge University Press.

Berger, D., Varriale, E., van Kessenich, L.M., Herrmann, H.J., & de Arcangelis, L. (2019). Three cooperative mechanisms required for recovery after brain damage. Scientific Reports, 9(1), 15858. https://doi.org/10.1038/s41598-019-50946-y.

Burioni, R., Casartelli, M., di Volo, M., Livi, R., & Vezzani, A. (2014). Average synaptic activity and neural networks topology: a global inverse problem. Scientific Reports, 4, 4336. https://doi.org/10.1038/srep04336.

Chen, T.W., Wardill, T.J., Sun, Y., Pulver, S.R., Renninger, S.L., Baohan, A., Schreiter, E.R., Kerr, R.A., Orger, M.B., Jayaraman, V., Looger, L.L., Svoboda, K., & Kim, D.S. (2013). Ultrasensitive fluorescent proteins for imaging neuronal activity. Nature, 499(7458), 295. https://doi.org/10.1038/nature12354.

Cocco, S., Leibler, S., & Monasson, R. (2009). Neuronal couplings between retinal ganglion cells inferred by efficient inverse statistical physics methods. Proceedings of the National Academy of Sciences, 106(33), 14058.

Dehghani, N., Peyrache, A., Telenczuk, B., Le Van Quyen, M., Halgren, E., Cash, S.S., Hatsopoulos, N.G., & Destexhe, A. (2016). Dynamic Balance of Excitation and Inhibition in Human and Monkey Neocortex. Scientific Reports, 6, 23176. https://doi.org/10.1038/srep23176.

Dorogovtsev, S.N., Goltsev, A.V., & Mendes, J.F. (2008). Critical phenomena in complex networks. Reviews of Modern Physics, 80(4), 1275.

Garcia-Campmany, L., Stam, F.J., & Goulding, M. (2010). From circuits to behaviour: motor networks in vertebrates. Current Opinion in Neurobiology, 20(1), 116.

Gebhardt, C., Auer, T.O., Henriques, P.M., Rajan, G., Duroure, K., Bianco, I.H., & Del Bene, F. (2019). An interhemispheric neural circuit allowing binocular integration in the optic tectum. Nature Communications, 10(1), 1.

Grienberger, C., & Konnerth, A. (2012). Imaging calcium in neurons. Neuron, 73(5), 862.

Heap, L., Goh, C.C., Kassahn, K.S., & Scott, E.K. (2013). Cerebellar output in zebrafish: an analysis of spatial patterns and topography in eurydendroid cell projections. Frontiers in Neural Circuits, 7, 53.

Kaczmarek, L.K., & Levitan, I.B. (1987). Neuromodulation: the biochemical control of neuronal excitability. Oxford: Oxford University Press.

Kaslin, J., & Brand, M. (2016). The zebrafish cerebellum. In Essentials of Cerebellum and Cerebellar Disorders (Springer), pp 411–421.

Kinkhabwala, A., Riley, M., Koyama, M., Monen, J., Satou, C., Kimura, Y., Higashijima, S.I., & Fetcho, J. (2011). A structural and functional ground plan for neurons in the hindbrain of zebrafish. Proceedings of the National Academy of Sciences, 108(3), 1164.

Kunst, M., Laurell, E., Mokayes, N., Kramer, A., Kubo, F., Fernandes, A.M., Förster, D., Dal Maschio, M., & Baier, H. (2019). A cellular-resolution atlas of the larval zebrafish brain. Neuron, 103(1), 21.

Mueller, T. (2012). What is the thalamus in zebrafish?. Frontiers in Neuroscience, 6, 64.

Müllenbroich, M.C., Turrini, L., Silvestri, L., Alterini, T., Gheisari, A., Tiso, N., Vanzi, F., Sacconi, L., & Pavone, F.S. (2018). Bessel beam illumination reduces random and systematic errors in quantitative functional studies using light-sheet microscopy. Frontiers in Cellular Neuroscience, 12, 315.

Naumann, E.A., Kampff, A.R., Prober, D.A., Schier, A.F., & Engert, F. (2010). Monitoring neural activity with bioluminescence during natural behavior. Nature Neuroscience, 13(4), 513.

Neumann-Haefelin, T., Hagemann, G., & Witte, O. (1995). Cellular correlates of neuronal hyperexcitability in the vicinity of photochemically induced cortical infarcts in rats in vitro. Neuroscience Letters, 193 (2), 101—104. https://doi.org/10.1016/0304-3940(95)11677-o.

Northcutt, R.G. (2008). Forebrain evolution in bony fishes. Brain Research Bulletin, 75(2-4), 191.

Pastor-Satorras, R., & Vespignani, A. (2001). Epidemic spreading in scale-free networks. Physical review letters, 86(14), 3200.

Pastore, V.P., Massobrio, P., Godjoski, A., & Martinoia, S. (2018). Identification of excitatory-inhibitory links and network topology in large-scale neuronal assemblies from multi-electrode recordings. PLOS Computational Biology, 14(8), 1. https://doi.org/10.1371/journal.pcbi.1006381.

Randlett, O., Wee, C.L., Naumann, E.A., Nnaemeka, O., Schoppik, D., Fitzgerald, J.E., Portugues, R., Lacoste, A.M., Riegler, C., Engert, F., & et al. (2015). Whole-brain activity mapping onto a zebrafish brain atlas. Nature Methods, 12(11), 1039.

Sajovic, P., & Levinthal, C. (1982). Visual cells of zebrafish optic tectum: mapping with small spots. Neuroscience, 7(10), 2407.

Schiene, K., Bruehl, C., Zilles, K., Qu, M., Hagemann, G., Kraemer, M., & Witte, O.W. (1996). Neuronal Hyperexcitability and Reduction of GABAA-Receptor Expression in the Surround of Cerebral Photothrombosis. Journal of Cerebral Blood Flow & Metabolism, 16(5), 906. 10.1097/00004647-199609000-00014.

Schneidman, E., Berry, M.J., Segev, R., & Bialek, W. (2006). Weak pairwise correlations imply strongly correlated network states in a neural population. Nature, 440(7087), 1007.

Tripathy, S.J., Padmanabhan, K., Gerkin, R.C., & Urban, N.N. (2013). Intermediate intrinsic diversity enhances neural population coding. Proceedings of the National Academy of Sciences, 110(20), 8248. https://doi.org/10.1073/pnas.1221214110.

Tsodyks, M.V., & Markram, H. (1997). The neural code between neocortical pyramidal neurons depends on neurotransmitter release probability. Proceedings of the National Academy of Sciences, 94(2), 719.

Tsodyks, M., Pawelzik, K., & Markram, H. (1998). Neural networks with dynamic synapses. Neural Computation, 10(4), 821.

Tsodyks, M., Uziel, A., & Markram, H. (2000). Synchrony generation in recurrent networks with frequency-dependent synapses. The Journal of Neuroscience, 20(1), 825.

Turrini, L., Fornetto, C., Marchetto, G., Müllenbroich, M., Tiso, N., Vettori, A., Resta, F., Masi, A., Mannaioni, G., Pavone, F., & et al. (2017). Optical mapping of neuronal activity during seizures in zebrafish. Scientific Reports, 7(1), 1.

Vespignani, A. (2012). Modelling dynamical processes in complex socio-technical systems. Nature physics, 8(1), 32.

Vladimirov, N., Mu, Y., Kawashima, T., Bennett, D.V., Yang, C.T., Looger, L.L., Keller, P.J., Freeman, J., & Ahrens, M.B. (2014). Light-sheet functional imaging in fictively behaving zebrafish. Nature Methods, 11(9), 883.

Volman, V., Baruchi, I., Persi, E., & Ben-Jacob, E. (2004). Generative modelling of regulated dynamical behavior in cultured neuronal networks. Physica A: Statistical Mechanics and its Applications, 335(1-2), 249.

Westerfield, M. (2000). The zebrafish book: a guide for the laboratory use of zebrafish. http://zfin.org/zf_info/zfbook/zfbk.html.

Wolf, S., Supatto, W., Debrégeas, G., Mahou, P., Kruglik, S.G., Sintes, J.M., Beaurepaire, E., & Candelier, R. (2015). Whole-brain functional imaging with two-photon light-sheet microscopy. Nature Methods, 12(5), 379.

Yokogawa, T., Hannan, M.C., & Burgess, H.A. (2012). The dorsal raphe modulates sensory responsiveness during arousal in zebrafish. The Journal of Neuroscience, 32(43), 15205.

de Vito, G., Fornetto, C., Ricci, P., Müllenbroich, C., Sancataldo, G., Turrini, L., Mazzamuto, G., Tiso, N., Sacconi, L., Fanelli, D., & et al. (2020). Two-photon high-speed light-sheet volumetric imaging of brain activity during sleep in zebrafish larvae. In Neural Imaging and Sensing 2020 (International Society for Optics and Photonics), vol. 11226, p. 1122604.

de Vito, G., Ricci, P., Turrini, L., Gavryusev, V., Muellenbroich, M.C., Tiso, N., Vanzi, F., Silvestri, L., & Pavone, F.S. (2020). Effects of excitation light polarization on fluorescence emission in two-photon light-sheet microscopy. Biomedical Optics Express, 11(8), 4651–4665. https://doi.org/10.1364/BOE.396388. Publisher: Optical Society of America.

de Vito, G., Turrini, L., Fornetto, C., Ricci, P., Müllenbroich, C., Sancataldo, G., Trabalzini, E., Mazzamuto, G., Tiso, N., Sacconi, L., & et al. (2020). Two-photon light-sheet microscopy for high-speed whole-brain functional imaging of zebrafish neuronal physiology and pathology. In Neurophotonics (International Society for Optics and Photonics), vol. 11360, p. 1136004.

di Volo, M., Burioni, R., Casartelli, M., Livi, R., & Vezzani, A. (2014). Heterogeneous mean field for neural networks with short-term plasticity. Physical Review. E, statistical, nonlinear, and soft matter physics, 90(2), 022811. https://doi.org/10.1103/physreve.90.022811.

di Volo, M., Burioni, R., Casartelli, M., Livi, R., & Vezzani, A. (2016). Neural networks with excitatory and inhibitory components: Direct and inverse problems by a mean-field approach. Physical Review E, 93(1), 012305.

Funding

Open Access funding provided by Università degli Studi di Firenze

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interest.

Additional information

Action Editor: Alain Destexhe

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chicchi, L., Cecchini, G., Adam, I. et al. Reconstruction scheme for excitatory and inhibitory dynamics with quenched disorder: application to zebrafish imaging. J Comput Neurosci 49, 159–174 (2021). https://doi.org/10.1007/s10827-020-00774-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10827-020-00774-1