Abstract

Coding games are widely used to teach computational thinking (CT). Studies have broadly investigated the role of coding games in supporting CT learning in formal classroom contexts, but there has been limited exploration of their use in informal home-based settings. This study investigated the factors that motivated students to use a coding game called Coding Galaxy in a home-based setting. It explored the connections between the students’ perceptions of and usage of the tool. An 11-day intervention was conducted at a primary school in Hong Kong with 104 participants. The students’ perceptions of the game were collected via questionnaires and information on their use of the tool was extracted from log files. Results indicated that coding motivation and feeling of enjoyment were predictors of the actual use of the game, with coding motivation the dominant factor. Focus group interviews were also conducted to further explore the students’ motivation to play the game. Through comparisons of active and inactive users, the qualitative findings supported the quantitative results, indicating that students who were more intrinsically motivated tended to be more active in using the game. The implications of the study for researchers and practitioners in CT education are discussed.

1 Introduction

In today’s digital society, computation has permeated various aspects of our lives, and computational skills have gradually become critical. In recent years, the need to cultivate computational thinking (CT) skills has been stressed and enormous efforts have been made to promote CT education. The emergence of CT can be traced back to 1980, when Seymour Papert proposed the term “algorithmic thinking” to describe “the art of deliberately thinking like a computer, according, for example, to the stereotype of a computer program that proceeds in a step-by-step, literal, mechanical fashion” (Papert, 1980, p. 27). The term CT has attracted renewed attention since 2006, when Jeanette Wing conceptualized it as “an approach to solving problems, designing systems, and understanding human behavior, by drawing on the concepts fundamental to computer science” (Wing, 2006, p. 33).

Wing (2006) indicated that CT is not merely professional know-how for computer scientists but rather a set of useful skills for everyone—analytical skills that should be grasped by young people. Wing’s call for attention to the importance of CT sparked a wave of integration of CT into K–12 education (Bocconi et al., 2016). With coding, or computer programming, as the main vehicle for supporting CT learning activities (Grover & Pea, 2013), momentum has gathered worldwide for the introduction of coding to national elementary school curricula (e.g., in England, as cited in Kotsopoulos et al., 2017) and secondary school curricula (e.g., in France, as cited in Bey et al., 2019).

Meanwhile, distance learning has gradually joined structured classroom instruction as a major educational setting. COVID-19 has increased the importance of home-based and technology-enabled learning activities, as educators have become keenly aware of the need to deal with unexpected events that may interfere with conventional face-to-face teaching (Daniel, 2020). To support distance learning for coding, appropriate instructional tools are needed to allow students to undertake independent coding practice. Of the manifold learning tools for CT, including programming platforms, robotics, digital games, and unplugged activities (Shute et al., 2017), digital games have advantages in supporting learning in both formal classroom and student-centered e-learning settings, as they provide low barriers to entry for distance learners and an interactive learning environment with explicit goals in which feedback and scaffolding are provided (Giannakoulas & Xinogalos, 2018).

Many initiatives have been launched to integrate coding games into K–12 education, and their effects on learning have been widely reported, including coding acquisition (Bachu & Bernard, 2011), problem-solving skills (Kazimoglu et al., 2012), and learning attitudes (Theodoropoulos et al., 2017). The use of digital coding games has been most widely explored in the classroom setting, where students were asked to complete certain tasks in the presence of a teacher (e.g., Buffum et al., 2016; Zhao & Shute, 2019); few studies have been conducted in e-learning settings (e.g., Yücel & Rızvanoğlu, 2019). With distance learning an important setting for CT education today, more research is needed into students’ learning experiences in this context. Furthermore, researchers have concentrated on the learning effects of digital coding games, failing to study how these tools are utilized by students. This is an important consideration, given that the benefits of a learning tool are highly dependent on users’ experience of it (Pituch & Lee, 2006). To fill a gap in research on students’ learning experience of coding tools in distance learning contexts, the present study explored how a particular coding game was used by young students and investigated the factors that influenced their intention to play the game in a home-based informal setting.

2 Background

2.1 Digital games for coding

Coding for children can be traced back to the theory of constructionism (Papert, 1980), which describes how students learn by constructing things. Papert (1980) stressed the importance of engaging children in creating their own projects and reflecting on their own thinking during the learning process. Since the term “computational thinking” was re-introduced by Wing (2006), numerous initiatives have been taken to develop child-friendly coding tools (Bers, 2018) that can provide a playground for students to practice CT and coding skills (Lockwood & Mooney, 2018). These tools offer either open task environments, in which students can create programming projects with no task constraints, or goal-oriented environments, in which concrete tasks are assigned to guide user progression (Manske et al., 2019). Digital coding games are the main representative of goal-oriented tools and offer an interactive coding environment with explicit goals and instant feedback embedded (Giannakoulas & Xinogalos, 2018). This presents low barriers to entry for novice students, who can progress in their coding practice with clear objectives and helpful scaffolding (Giannakoulas & Xinogalos, 2018).

Digital games for coding are generally designed with a puzzle interface, such as Kodable (Pila et al., 2019) and Program Your Robot (Kazimoglu et al., 2012), with players completing missions by devising coding solutions based on provided commands. Some games target novice coding learners with block-based solutions, such as Run Marco (Giannakoulas & Xinogalos, 2018) and TurtleTalk (Jung et al., 2019), and others target more experienced learners with text-based solutions, such as Code Combat (Yücel & Rızvanoğlu, 2019) and PascAl Shopper (Bachu & Bernard, 2011).

There have been numerous attempts to adopt coding games in CT education (e.g., Theodoropoulos et al., 2017; Zhi et al., 2018; Pila et al., 2019), and positive results have been widely reported. The effectiveness of these tools in supporting student learning has been reported in terms of CT skills (e.g., Kusnendar & Prabawa, 2019), coding comprehension (e.g., Bachu & Bernard, 2011), computer science concepts (Werneburg et al., 2018), and reasoning ability (Rose, 2016). In addition, several studies have shown that these tools can improve learning attitudes, such as attitudes towards computer science (Zhao & Shute, 2019) and towards coding (Yücel & Rızvanoğlu, 2019). Nonetheless, some challenges remain. Yücel and Rızvanoğlu (2019), for example, noted that students’ lack of interest in coding may damage their attitude towards coding games, and Kazimoglu et al. (2012) reported negative feedback on a coding game from students, observing that the improper design of the game rules (e.g., awards) had negatively influenced their interest in the coding game. In summary, coding games are a popular tool for learning CT and have been widely studied in the literature, with both benefits and challenges reported.

Despite these recent advances, there are three directions in which research on coding games could be further developed. First, studies have focused on formal classroom learning, in the presence of teachers, as the educational setting (e.g., Theodoropoulos et al., 2017; Pila et al., 2019). There has been little research in the context of distance learning, despite the stress placed on student-centered learning in coding education (Luo et al., 2020) due to the importance of learning by exploring in this field (Meerbaum-Salant et al., 2011). Furthermore, the development of remote education has been accelerated by disruptions to normal face-to-face teaching during the COVID-19 pandemic (Daniel, 2020). It is therefore well worth studying how students interact with coding games as learning tools in a home-based learning context.

Second, although studies have provided rich insights into the effects of coding games on learning (e.g., Theodoropoulos et al., 2017; Pila et al., 2019), explorations of what motivates students to use these learning tools are scarce. Given the barriers that have been identified to students’ productive use of coding games, such as their lack of interest in coding (Yücel & Rızvanoğlu, 2019) and lack of satisfaction with game design (Kazimoglu et al., 2012), it is worth exploring how students perceive these tools to guide educational practitioners on further improvement of the tools. More work is therefore needed to investigate the factors that drive students to use coding games.

Third, studies that have explored students’ intention to play educational games have focused on the design of these tools (e.g., Cheng et al., 2013; Giannakoulas & Xinogalos, 2018), with less attention paid to subject content. This is understandable, given that content factors are often considered outcomes of using the tools rather than motivators. However, with students now exposed to many available educational tools, it is vital to explore what drives them to choose a particular tool in the first place before they have an idea of its design. In the context of coding games, there may be factors beyond game design that affect students’ motivation (e.g., perceptions of the subject content) that have yet to be explored.

2.2 Factors influencing the actual use of digital games

A wide range of factors behind the intention to use digital tools have been explored, with the technology acceptance model (TAM) (Davis, 1993) often serving as the theoretical underpinning (e.g., Hsu & Lu, 2004; Cheng et al., 2013; Giannakoulas & Xinogalos, 2018). The TAM was developed to explain users’ intention to use a technical tool by identifying the effects of external factors on internal beliefs, attitudes, and intentions (Davis, 1993). Two factors have been put forward as playing a major role in users’ intention to use a tool (Min et al., 2019; Nikou & Economides, 2017): perceived usefulness, reflecting the degree to which an individual perceives the tool as likely to improve their performance, and perceived ease of use, reflecting the degree to which an individual perceives the tool to be simple and convenient to use (Davis, 1993). The TAM has been widely used in research on coding games, in which the effects of both of these variables have been observed (e.g., Çakır et al., 2021; Giannakos, 2013).

Apart from the features of digital games as technology systems, studies have identified specifically game-related features as factors influencing user acceptance, among which feeling of enjoyment (e.g., Ha et al., 2007; Ibrahim et al., 2011) and feeling of competence (e.g., Kim & Shute, 2015; Ryan et al., 2006) have been most widely reported. Feeling of enjoyment reflects the degree to which users perceive a game as enjoyable (Ha et al., 2007) and has been identified as a potentially important factor explaining user intentions in game-based learning environments (Giannakos, 2013). Meanwhile, as users can improve their self-esteem through successful task resolution (Giannakos, 2013), the feeling of competence they gain from solving in-game problems may also exert a major influence on their intention to use a game (Kim & Shute, 2015).

Nonetheless, as mentioned above, studies have reported that negative attitudes towards coding games may relate specifically to the subject content (e.g., students might have no interest in coding or hold the stereotypical view that coding is difficult) (Yücel & Rızvanoğlu, 2019). This observation led us to assume that attitudes towards game content could be a factor in students’ interest in coding games, and thus to include an examination of motivation, which has been identified as a major aspect of learning attitudes. It has been argued that motivation plays a critical role in student learning, as the driving force that empowers students to perform well and overcome challenges (Tohidi & Jabbari, 2012), and empirical studies have supported a close link between motivation and academic achievement (Majer, 2009; Steinmayr & Spinath, 2009). Therefore, we included factors related to students’ attitudes towards coding to examine whether coding motivation affected their intention to use a coding game.

2.3 The present study

To address the identified gaps in the literature, this study investigated the factors that influenced students’ intention to use a coding game in an informal home-based setting, covering factors from the TAM (perceived usefulness, perceived ease of use), specifically game-related features (feeling of enjoyment, feeling of competence), and perceptions of game content (coding motivation). The research model is presented in Fig. 1. The study addressed the following research questions.

- RQ1: To what extent is students’ evaluation of a coding game (perceived usefulness, perceived ease of use, feeling of enjoyment, feeling of competence) related to their actual use of the game?
- RQ2: To what extent are students’ attitudes towards coding (coding motivation) related to their actual use of a coding game?
- RQ3: What motivates students to play a coding game at home?

By answering these questions, this study contributes to the literature in three ways. First, the study was conducted in an informal learning context, thus providing insights into how to motivate students when coding learning activities are conducted in a home-based setting. Second, the study shifted the emphasis of research on intention to use coding games from assessing chiefly the design of the tools to considering how students perceive the subject content. Unraveling the relationships between these factors and user intentions sheds light on the mechanisms underlying the effective adoption of coding games as tools for CT education. Third, the study examined the connections between the aforementioned factors and user intentions based on actual user gameplay data extracted from log files, thus enriching understanding of user intentions with empirical evidence.

3 Method

3.1 Participants

Students from a primary school in Hong Kong were invited to participate. This cohort was selected based on their participation in a three-year longitudinal study led by the second author on developing CT in a school-based curriculum. This helped to mitigate the challenges that might normally be faced by students using an unfamiliar coding tool in a home-based context. This group of students was judged to have had sufficient exposure to the basics of CT problems to have attained the prerequisite skills for the coding game. As a public institution with a student body that was generally middle-class or below, the school was representative of local primary schools in Hong Kong. The students were informed of the objectives of the study in advance, and 104 Grade 6 students (aged 11–12) agreed to participate.

3.2 Apparatus

The coding game adopted in this study was Coding Galaxy (CG, see Fig. 2), which has been successfully used to teach CT in Hong Kong primary schools (Zhang et al., 2021). The game is designed for young beginners before they move on to the code-making tools that are commonly used in CT or coding education. CG has mobile and Web versions, providing great flexibility for learners in terms of device type, time of play, and location of play. CG has multiple language settings (English, Spanish, Chinese, etc.) and simple controls (dragging commands into an answer panel) to suit young learners worldwide.

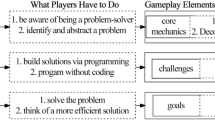

CG is a coding game platform with 200 missions requiring the use of five basic CT constructs: sequences, loops, debugging, conditionals, and functions. The mission preface is presented in Fig. 3. CG provides an arrow-based coding environment with a storyline requiring the user to help a character (an astronaut) to reach their destination while collecting as many crystals as possible (if any) on the way (see Fig. 2). To complete the task, the player needs to identify a viable route from the initial state to the destination and build it using the provided coding commands in the arrow-based coding language. To solve the puzzle, the following CT skills are needed: (1) decomposition—dividing the terrain into segments; (2) abstraction—identifying which segments are useful for developing the route and disregarding those that are irrelevant; (3) pattern recognition—identifying patterns in the structures of different segments; (4) use of algorithms—formulating a program with a set of ordered steps that can be carried out by the device; and (5) debugging—detecting and fixing errors when the program does not work as expected.
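To make these puzzle mechanics concrete, the sketch below models a mission as a small grid: arrow commands move the astronaut one cell, a `("repeat", n, body)` tuple stands in for a loop, and bumping into a wall signals a bug to be fixed. The command names, grid coordinates, and loop notation are hypothetical illustrations written for this example; they are not CG's actual command set or game engine.

```python
from typing import List, Set, Tuple

Cell = Tuple[int, int]

# Hypothetical arrow commands: each moves the astronaut one cell (x to the right, y downward).
MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}


def expand(program: list) -> List[str]:
    """Flatten a program; ("repeat", n, body) is a hypothetical loop construct."""
    steps: List[str] = []
    for cmd in program:
        if isinstance(cmd, tuple) and cmd[0] == "repeat":
            _, times, body = cmd
            for _ in range(times):
                steps.extend(expand(body))
        else:
            steps.append(cmd)
    return steps


def run(program: list, start: Cell, goal: Cell,
        walls: Set[Cell], crystals: Set[Cell]) -> Tuple[bool, int]:
    """Execute the program step by step; return (reached_goal, crystals_collected)."""
    x, y = start
    collected: Set[Cell] = set()
    for step in expand(program):
        dx, dy = MOVES[step]
        nxt = (x + dx, y + dy)
        if nxt in walls:
            # Blocked move: the program fails here and needs debugging.
            return False, len(collected)
        x, y = nxt
        if nxt in crystals:
            collected.add(nxt)
    return (x, y) == goal, len(collected)


# Usage: a loop-based route that collects both crystals on the way to the goal.
walls = {(0, 1)}
crystals = {(1, 0), (3, 1)}
route = [("repeat", 3, ["right"]), ("repeat", 2, ["down"])]
print(run(route, (0, 0), (3, 2), walls, crystals))    # (True, 2)
print(run(["down"], (0, 0), (3, 2), walls, crystals))  # (False, 0)
```

In this toy model, pattern recognition corresponds to spotting the repeated `right` segment and expressing it as a loop, and debugging corresponds to revising a route that stops at a wall.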

3.3 Measures

3.3.1 Pre-intervention questionnaire

Before the intervention (see Sect. 3.4 for more information), information on coding motivation (CM) was collected to gauge the students’ attitudes towards coding. According to Ryan and Deci (2000), the two major types of motivation are intrinsic motivation, which guides an individual to do something “because it is inherently interesting or enjoyable,” and extrinsic motivation, which leads an individual to complete a task “because it leads to a separable outcome” (p. 55). As intrinsic motivation best reflects the inherent tendency to want to complete a task (Ryan & Deci, 2000), we focused on measuring the students’ intrinsic motivation to code. The measure was adapted from the programming motivation scale used by Jiang and Wong (2017), which had shown adequate psychometric properties in the Chinese context. The adapted scale comprised nine items (e.g., “Learning coding is fun”) rated on a 5-point Likert scale (see Appendix A).

3.3.2 Post-intervention questionnaire

After the intervention, the factors related to the students’ perceptions of the game were assessed. Two variables from the TAM—perceived ease of use (PEU) and perceived usefulness (PU)—were measured on a 5-point Likert scale with items adapted from Davis et al. (1989); for example, “Learning how to play the game was easy for me” (PEU) and “The game improved my understanding of sequence” (PU). Two game-specific factors—feeling of enjoyment (FE) and feeling of competence (FC)—were assessed on a 5-point Likert scale with items adapted from Kim and Shute (2015), such as “Playing the game was fun” (FE) and “I felt competent in playing the game” (FC). The full questionnaire is provided in Appendix B.

3.3.3 Gameplay data

Gameplay data were extracted from the players’ game records. Data on the actions and statistics of each player (e.g., in-game performance, total time spent on a mission, number of trials for a mission) were logged by the game. In this study, we used an indicator related to the actual use of the game, mission completion, which refers to the percentage of the assigned missions that the students completed during the intervention period (the number of completed missions as a percentage of the number of assigned missions). The data for this indicator were extracted from the log files of each player.
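The mission completion indicator can be computed from per-student log records roughly as follows. The record layout (dictionaries with `student`, `mission`, and `status` keys) is a hypothetical format assumed for this sketch, since the structure of CG's log files is not described; in this sketch, repeated completions of the same mission are counted once and students with no completions receive 0%.

```python
from typing import Dict, Iterable, List


def mission_completion(log: Iterable[dict], students: List[str],
                       assigned: Iterable[str]) -> Dict[str, float]:
    """Percentage of assigned missions each student completed (hypothetical log format)."""
    assigned_set = set(assigned)
    if not assigned_set:
        raise ValueError("at least one mission must be assigned")
    done: Dict[str, set] = {s: set() for s in students}
    for rec in log:
        # Count only completions of assigned missions by listed students.
        if (rec["status"] == "completed" and rec["mission"] in assigned_set
                and rec["student"] in done):
            done[rec["student"]].add(rec["mission"])
    return {s: 100.0 * len(m) / len(assigned_set) for s, m in done.items()}


log = [
    {"student": "s1", "mission": "m1", "status": "completed"},
    {"student": "s1", "mission": "m1", "status": "completed"},  # repeat play, counted once
    {"student": "s1", "mission": "m2", "status": "failed"},
    {"student": "s2", "mission": "m3", "status": "completed"},
]
print(mission_completion(log, ["s1", "s2", "s3"], ["m1", "m2", "m3", "m4"]))
# {'s1': 25.0, 's2': 25.0, 's3': 0.0}
```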

3.3.4 Focus group interviews

To better understand the students’ user intentions, we purposively selected students for focus group interviews. Specifically, 20 active users and 20 inactive users were identified as candidates based on their actual use of the game. After ensuring an even distribution of students at different levels of academic performance, 24 of the candidate students (12 active users and 12 inactive users) were invited to participate in the group interviews. Sample questions included “What aspects of the game were attractive to you?”, “What motivated you to play the game at home?”, “What motivated you to try to get the highest score?”, “What aspects of the game were unattractive to you?”, “For what reasons did you not want to play the game at home?”, “Why did you not want to get the highest score?”, and “What did you learn from the game?” The focus group interviews were audio-recorded and transcribed verbatim. A qualitative thematic analysis was conducted by the first author using an inductive approach, with a reliability check performed by the third author.

3.4 Procedure

The intervention was implemented in June 2019 and lasted for 11 days. A procedural diagram is displayed in Fig. 4. On the first day, the pre-intervention questionnaire was administered to collect CM scores, and the students were provided with some basic instructions for playing the game. Over the following 10 days, the students were given full access to the game chapters on sequence, loops, debugging, conditionals, and functions. No constraints were imposed on the students’ usage of the tool. On the last day, the post-intervention questionnaire was administered to collect the students’ perceptions of the game design (PU, PEU, FE, and FC), after which the focus group interviews were conducted.

4 Results

4.1 Psychometrics of the measurements

To examine the psychometric properties of the selected measures, validity and reliability tests were performed based on the criteria proposed by Fornell and Larcker (1981). First, to assess construct validity, the loading of each item on its corresponding latent factor was checked, and the average variance extracted (AVE) and composite reliability were calculated from these loadings. Second, reliability evidence was reported via Cronbach’s alpha to indicate the internal consistency of each construct. Third, analyses of discriminant validity were carried out to check that the constructs were distinct from one another.
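The three quantities named above have standard closed forms. For a construct with k items and standardized loadings λ, AVE is the mean squared loading, composite reliability is (Σλ)² / ((Σλ)² + Σ(1 − λ²)), and Cronbach's alpha compares the sum of item variances with the variance of the summed score. The sketch below implements these textbook formulas in plain Python; it is not the software pipeline used in the study, and the loadings in the usage lines are made-up illustrative values.

```python
from statistics import variance
from typing import List, Sequence


def ave(loadings: Sequence[float]) -> float:
    """Average variance extracted: mean of squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings: Sequence[float]) -> float:
    """Composite reliability, treating 1 - loading^2 as each item's error variance."""
    s = sum(loadings)
    error = sum(1 - l * l for l in loadings)
    return s * s / (s * s + error)


def cronbach_alpha(rows: List[Sequence[float]]) -> float:
    """Cronbach's alpha; rows are respondents, columns are the items of one construct."""
    k = len(rows[0])
    item_vars = [variance(col) for col in zip(*rows)]
    total_var = variance([sum(r) for r in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


loadings = [0.7, 0.8, 0.9]  # illustrative values, not the study's loadings
print(round(ave(loadings), 3))                    # 0.647
print(round(composite_reliability(loadings), 3))  # 0.845
```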

The results for construct validity and reliability are displayed in Table 1. The Cronbach’s alpha and composite reliability values were in the ranges of 0.72–0.92 and 0.84–0.93, respectively, all above the cutoff point of 0.7 (Nunnally, 1994), indicating adequate reliability for each construct. The AVE values were between 0.49 and 0.76, with only one construct (coding motivation) slightly below the threshold of 0.5 (Segers, 1997). Given that this value was close to the threshold and the construct showed satisfactory values for the other properties, the original scale was retained. Discriminant validity was then checked to ensure that the measured constructs were distinct from each other. Based on the criterion suggested by Fornell and Larcker (1981), the correlation coefficients between constructs should not be greater than the square root of the AVE for each construct. The results are reported in Table 2, in which the square root of the AVE for each construct is highlighted in bold. All of the bivariate correlations were positive and significant, in the range of 0.346–0.698, and no coefficient exceeded the square root of the AVE for the corresponding constructs, indicating that the latent factors were empirically distinct. Thus, the measures showed good psychometric qualities. Finally, the assumption of homogeneity of variance was tested to check whether the instruments behaved consistently across gender groups. An independent samples t-test was performed for each construct with gender as the grouping variable, and the results indicated that equality of variance was met for all constructs.
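The Fornell–Larcker comparison reduces to a pairwise check: for every pair of constructs, the absolute correlation must not exceed the square root of either construct's AVE. A minimal sketch follows; the construct labels are placeholders. With the ranges reported above, even the smallest square root of AVE (√0.49 = 0.70) exceeds the largest reported correlation (0.698), so the criterion holds for every pair.

```python
import math
from typing import Dict, List, Tuple


def fornell_larcker_violations(ave: Dict[str, float],
                               corr: Dict[Tuple[str, str], float]) -> List[Tuple[str, str]]:
    """Return construct pairs whose correlation exceeds sqrt(AVE) of either construct."""
    violations = []
    for (a, b), r in corr.items():
        threshold = min(math.sqrt(ave[a]), math.sqrt(ave[b]))
        if abs(r) > threshold:
            violations.append((a, b))
    return violations


# Placeholder constructs using the lowest and highest AVE and the largest correlation reported.
ave_values = {"A": 0.49, "B": 0.76}
print(fornell_larcker_violations(ave_values, {("A", "B"): 0.698}))  # []
print(fornell_larcker_violations(ave_values, {("A", "B"): 0.75}))   # [('A', 'B')]
```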

4.2 Descriptive statistics

The descriptive statistics are displayed in Table 3. Items rated on the Likert scale were converted into a numerical scale ranging from 1 (strongly disagree) to 5 (strongly agree), with a midpoint of 3. For each respondent, the mean score for the included items was calculated to represent the score for each construct. For each construct, the mean score was above the midpoint, ranging from 3 to 4. Regarding gameplay data, mission completion was reported as a percentage, with the results showing that the participants completed an average of 53.2% of the assigned missions.
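The scoring step can be sketched as follows: each Likert label is mapped to a value from 1 to 5, and a respondent's construct score is the mean of that construct's items. The intermediate labels (2 to 4) and the item identifiers are assumptions for illustration; the text specifies only the endpoints and the midpoint of 3.

```python
from statistics import mean
from typing import Dict, List

# Labels for 1 and 5 follow the text; the intermediate labels are assumed.
LIKERT = {"strongly disagree": 1, "disagree": 2, "neutral": 3,
          "agree": 4, "strongly agree": 5}


def construct_scores(answers: Dict[str, str],
                     constructs: Dict[str, List[str]]) -> Dict[str, float]:
    """Mean numeric score per construct for one respondent."""
    return {name: mean(LIKERT[answers[item]] for item in items)
            for name, items in constructs.items()}


# Hypothetical item IDs for two constructs.
constructs = {"FE": ["fe1", "fe2"], "FC": ["fc1", "fc2", "fc3"]}
answers = {"fe1": "agree", "fe2": "strongly agree",
           "fc1": "neutral", "fc2": "agree", "fc3": "agree"}
print(construct_scores(answers, constructs))
```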

4.3 Regression analyses

4.3.1 Statistical methods

Regression analyses were conducted to identify the relationships between the proposed factors and the students’ intention to use the coding game (RQ1 and RQ2). Factors related to perceptions of the game design (PEU, PU, FE, FC) and perceptions of the subject content (CM) were entered as independent variables, and the factor extracted from the gameplay data (mission completion) was selected as the dependent variable.

Regression models were selected with caution based on the characteristics of the dependent variable, following three steps. First, the distribution of mission completion was checked and found to be left-skewed. Second, the data were therefore log-transformed, which is a common way to bring skewed data closer to normality (Feng et al., 2014). Third, the distribution of the log-transformed data was checked visually and appeared approximately normal (see Fig. 5). Accordingly, a linear regression model was selected, with the log-transformed value of mission completion, referred to as log-transformed mission completion (LMC), as the dependent variable. All of the statistical analyses were performed in IBM SPSS Statistics 27.
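The skewness check and transformation can be sketched in plain Python as follows. The sketch assumes a log(1 + x) transform so that students with 0% completion remain defined; the study does not state how zero values were handled. The sign of the sample skewness indicates its direction: a plain log compresses the upper tail and therefore reduces positive (right) skew, whereas negatively skewed variables are often reflected before being logged.

```python
import math
from typing import List, Sequence


def skewness(xs: Sequence[float]) -> float:
    """Fisher-Pearson coefficient of skewness, g1 = m3 / m2**1.5."""
    n = len(xs)
    mu = sum(xs) / n
    m2 = sum((x - mu) ** 2 for x in xs) / n
    m3 = sum((x - mu) ** 3 for x in xs) / n
    return m3 / m2 ** 1.5


def log_transform(xs: Sequence[float]) -> List[float]:
    """log(1 + x); log1p keeps zero values defined (an assumption, see text)."""
    return [math.log1p(x) for x in xs]


# Illustrative, positively skewed completion percentages (not the study's data).
completion = [1, 1, 1, 2, 2, 3, 10, 50]
before = skewness(completion)
after = skewness(log_transform(completion))
print(f"skewness before: {before:.2f}, after: {after:.2f}")
```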

4.3.2 Results of regression analysis

Prior to conducting the linear regression analysis, the relevant assumptions were tested. First, a sample size of 104 was considered adequate given the five independent variables in the analysis (Tabachnick et al., 2007). Second, the normality of the dependent variable was checked using the Shapiro–Wilk test, which indicated no significant departure from normality (p = 0.258). Third, a visual check of the scatter plots for individual constructs indicated that the criterion for linearity was met. Fourth, multicollinearity was examined: the tolerance values for the independent variables were between 0.425 and 0.761 and the variance inflation factor (VIF) ranged from 1.32 to 2.36, all within acceptable limits (Menard, 2001). A collinearity diagnosis was then performed (see Table 4). The condition index was acceptable for each dimension, and no dimension had more than one variance proportion above 0.9, indicating no collinearity problem (Hair et al., 2010). Fifth, a residual plot was examined (see Fig. 6), which displayed a fairly random dispersion around the residual = 0 line over the range of predicted values of the dependent variable, suggesting that the data were homoscedastic and that a linear model was appropriate.
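Two of the checks above reduce to simple arithmetic. Tolerance for a predictor is 1 − R² from regressing that predictor on the other predictors, and the VIF is its reciprocal, so the reported tolerance range of 0.425–0.761 corresponds to VIFs of about 2.35 and 1.31, matching the reported 1.32–2.36 up to rounding. For sample size, one rule of thumb discussed by Tabachnick and colleagues for testing the overall model is N ≥ 50 + 8m for m predictors. Treating that rule as the basis of the adequacy judgment, and 0.2 as the tolerance cutoff, are assumptions of this sketch.

```python
from typing import Sequence


def min_sample_size(num_predictors: int) -> int:
    """Rule of thumb N >= 50 + 8m for testing the overall regression model."""
    return 50 + 8 * num_predictors


def vif_from_tolerance(tolerance: float) -> float:
    """VIF is the reciprocal of tolerance (tolerance = 1 - R^2 of predictor j on the rest)."""
    return 1.0 / tolerance


def collinearity_ok(tolerances: Sequence[float], min_tolerance: float = 0.2) -> bool:
    """Return False if any tolerance falls below the assumed cutoff."""
    return all(t >= min_tolerance for t in tolerances)


print(min_sample_size(5), 104 >= min_sample_size(5))               # 90 True
print([round(vif_from_tolerance(t), 2) for t in (0.425, 0.761)])   # [2.35, 1.31]
print(collinearity_ok([0.425, 0.761]))                             # True
```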

After ensuring that the assumptions required for multiple regression analysis were satisfied, a hierarchical multiple linear regression analysis was conducted. The analysis was performed in three stages based on the order of inclusion of the independent variables. First, the factors stemming from the TAM (PEU and PU) were entered. Second, the game-related factors (FE and FC) were entered. Finally, the variable associated with the subject content (CM) was entered. The variables were entered in this order because the original hypotheses were developed from the TAM; we then added variables reflecting the reported factors specific to digital games and, finally, extended the model with a factor related to motivation to learn the subject content.

The regression results are displayed in Table 5. At Stage 1, PEU and PU did not contribute significantly to the model, F(2, 90) = 1.041, p = 0.357. Adding FE and FC at Stage 2 explained an additional 4.8% of the variance in LMC, but the change in R² was non-significant, F(2, 88) = 2.271, p = 0.109. At this stage, FE was the most important predictor of LMC, explaining 4.7% of the variance. Finally, at Stage 3, the addition of CM to the regression model explained an additional 8.2% of the variance, and the change in R² was significant, F(1, 87) = 8.455, p < 0.01. Figure 7 presents a summary of the final model. With all five independent variables included in the model, only FE (β = 0.319, p < 0.05) and CM (β = 0.329, p < 0.01) were significant predictors of LMC. The most important predictor of LMC was CM, which uniquely explained 8.2% of the variance.
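The F statistics for the change in R² follow from the standard incremental F test, F = (ΔR²/q) / ((1 − R²_full)/df_resid), where q is the number of predictors added and df_resid is the residual degrees of freedom of the larger model. The sketch below recovers the Stage 1 R² from its reported F and then re-derives the two change statistics from the rounded increments in the text; it yields about 2.27 and 8.42, and the small gap from the reported 8.455 is expected because ΔR² is given only to one decimal place of a percent.

```python
def r2_from_f(f: float, df_model: int, df_resid: int) -> float:
    """Invert F = (R2/df_model) / ((1 - R2)/df_resid) for a model's overall F test."""
    return f * df_model / (f * df_model + df_resid)


def f_change(r2_reduced: float, r2_full: float, added: int, df_resid_full: int) -> float:
    """Incremental F test for the R^2 gained by adding `added` predictors."""
    return ((r2_full - r2_reduced) / added) / ((1 - r2_full) / df_resid_full)


r2_stage1 = r2_from_f(1.041, 2, 90)   # PEU, PU
r2_stage2 = r2_stage1 + 0.048          # + FE, FC
r2_stage3 = r2_stage2 + 0.082          # + CM
print(f"Stage 2: F(2, 88) = {f_change(r2_stage1, r2_stage2, 2, 88):.2f}")
print(f"Stage 3: F(1, 87) = {f_change(r2_stage2, r2_stage3, 1, 87):.2f}")
```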

4.4 Results of focus group interviews

To investigate the students’ intention to use the game in more depth (RQ3), perceptions of the game and of the gameplay experience were collected from active and inactive users. The first and third authors coded the results using an inductive approach, and their codes were compared to examine inter-rater reliability. The rate of agreement was high (91.6%), and discrepancies were resolved through discussion until a consensus was reached. Table 6 summarizes the themes that emerged, with example quotes, categorized according to the interview questions (see Sect. 3.3.4).
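Percent agreement, as reported above, is the share of coded units to which both coders assigned the same theme. A minimal sketch, assuming each coder's output is a list of theme labels aligned unit by unit (the labels in the usage lines are hypothetical):

```python
from typing import Sequence


def percent_agreement(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Percentage of units given the same code by both coders."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("coders must label the same, non-empty set of units")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


a = ["interface", "curiosity", "workload", "interface"]
b = ["interface", "curiosity", "assignment", "interface"]
print(percent_agreement(a, b))  # 75.0
```

Percent agreement does not correct for agreement expected by chance; chance-corrected indices such as Cohen's kappa are a common complement.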

The results indicate that the game was attractive to the students for its well-designed interface and simple instructions. The students were motivated to play the game for several reasons, including the exciting gameplay experience, extrinsic influence from the school, and intrinsic curiosity about their personal potential in the game. They also emphasized their desire to win the game and receive high scores. Nonetheless, some shortcomings of the game were discussed. It was noted that playing the game for a long time might lead to boredom because of the uniform playing mode across missions. In addition, some chapters might be overly challenging for young students, which might damage their confidence in proceeding with later missions. Regarding reasons for not using the game at home, a heavy workload from other subjects was cited by both user groups, and a lack of interest in coding was reported by the inactive users. As for attempts to get the highest score, some students performed multiple trials before giving up, while others (mainly inactive users) tried fewer times, seeking to finish the task more quickly. Nevertheless, perceived improvements from playing the game were frequently reported, covering coding knowledge, problem-solving skills, and self-efficacy.

To summarize, differences were detected between the active and inactive user groups. The active users appreciated the game for its interface and task content, whereas the inactive students tended to concentrate more on the gameplay experience, noting its ease of use and the feeling of accomplishment it gave them. The active users also tended to play the game for interest and curiosity, whereas their inactive counterparts played the game for extrinsic reasons (e.g., as an assignment). Regarding the motivation to get the highest scores, the active students made more attempts to obtain the highest scores before quitting, whereas the inactive players appeared to have rushed to finish the tasks. Overall, however, the students from both groups perceived cognitive and attitudinal improvements from playing the game.

5 Discussion

To shed light on students’ motivation to use coding games in an informal context, this study explored the factors influencing students’ actual use of a coding game in a home-based setting. Based on the TAM framework and features of digital games, five factors were investigated, and significant positive effects of coding motivation and game enjoyment were found. Additionally, focus group interviews with both active and inactive users indicated that intrinsic motivation was a critical force driving students to use the game at home. These results suggest that motivation to learn the subject matter can strongly influence students’ interest in using coding applications. The implications of the results for research and practice are as follows.

To leverage digital games as CT learning activities that support student-centered learning in both classroom and distance settings, practitioners must consider how to promote students’ acceptance of these tools. The results of this study indicate that students’ motivation to code was the dominant predictor of actual use, implying that, for coding games, students’ interest may derive not only from the playability of the game but also from their interest in the subject content. These results resonate with the qualitative findings of Yücel and Rızvanoğlu (2019). In their study, students were invited to explore a coding game called Code Combat for an hour and were then interviewed about their game experience. Several students reported negative attitudes towards the game when they were told it was related to coding, which echoes our finding that attitudes towards coding influence the use of coding games. Although digital games are considered a playground for learning, acceptance of these tools may vary across individuals, and passion for the subject appears to be an important individual-level variable. Thus, educational practitioners seeking to adopt coding games to support CT learning activities should also foster students’ interest in coding itself to ensure high acceptance and productive use of the tool.

The focus group interviews, which compared active and inactive users, yielded findings consistent with our quantitative results. Good game design (e.g., ease of use and a good reward mechanism) enhanced interest in the game among the inactive users. In contrast, the active users stressed the importance of a positive in-game experience (e.g., enjoying the challenge and finding the game interesting), suggesting that their interest may have been aroused by intrinsic motivation (e.g., interest in coding). This implies that active and inactive users may draw on different sources of motivation, and it is reasonable to conjecture that intrinsic motivation drove the students to be more active in using the game. Consistent with our quantitative findings, this result stresses the importance of promoting students’ intrinsic motivation to code.

Another major predictor was game enjoyment, which is consistent with relevant studies of digital educational games (e.g., Camilleri & Camilleri, 2019; Ibrahim et al., 2011). Approaches to improving game enjoyment have been widely discussed, such as designing storylines appropriate to the target group (Yeni & Cagiltay, 2017) and allowing offline access (Ha et al., 2007). Participants in our focus groups proposed some specific suggestions for coding games, the most common of which was to add collaborative challenges. This aligns with the emerging trend of distributed pair programming as a remote collaborative mode for solving CT and programming problems (Xu & Correia, 2021). Studies have reported that collaborative programming can lead to greater engagement among students (e.g., Wu et al., 2019), which fits the goal of increasing players’ enjoyment of coding games. We therefore suggest that developers consider a multiplayer collaborative mode in the future design of coding games.

The results of the present study show that perceived ease of use and perceived usefulness did not predict use of the game, which contradicts the findings of many TAM studies of digital games (e.g., Hsu & Lu, 2004; Ha et al., 2007; Cheng et al., 2013). One possible explanation for this inconsistency is the different data collection method adopted in this study. Whereas previous related studies have collected data on user intentions and usage through self-reports, with players recalling how frequently they had used a tool (e.g., Hsu & Lu, 2004; Ibrahim et al., 2011), we collected usage data directly from players’ log files. Users who enjoy using a tool probably remember their experience of it more clearly, which may inflate the correlation between evaluative factors and self-reported usage. Given this difference in how usage was measured, the divergent results are perhaps unsurprising.
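Because the argument above turns on how usage is measured, the following Python sketch shows one way a log-based usage measure could be derived. It is not the authors’ pipeline: the `(student_id, mission_id, score)` record format, the function name `usage_per_student`, and the choice of attempts and distinct missions as measures are all hypothetical, and the `log1p` transform is included only as a common way to reduce the right skew typical of usage counts. Students on the class roster who have no log events are kept with zero usage, so inactive users are not silently dropped.

```python
import math
from collections import defaultdict

# Hypothetical log record: (student_id, mission_id, score).
# The actual Coding Galaxy log schema is not described here.
LogRecord = tuple[str, int, int]


def usage_per_student(records: list[LogRecord], roster: list[str]) -> dict[str, dict[str, float]]:
    """Aggregate raw log events into per-student usage measures.

    Every student on the roster gets an entry, so students with no
    log events (inactive users) are kept with zero usage.
    """
    attempts: dict[str, int] = defaultdict(int)
    missions: dict[str, set[int]] = defaultdict(set)
    for student, mission, _score in records:
        attempts[student] += 1          # every logged play counts as one attempt
        missions[student].add(mission)  # set of distinct missions reached

    usage = {}
    for student in roster:
        n = attempts.get(student, 0)
        usage[student] = {
            "attempts": n,
            "distinct_missions": len(missions.get(student, set())),
            # log(1 + x) compresses the long right tail and is defined at x = 0
            "log_attempts": math.log1p(n),
        }
    return usage


records = [("s1", 1, 3), ("s1", 1, 2), ("s1", 2, 3), ("s2", 1, 1)]
usage = usage_per_student(records, roster=["s1", "s2", "s3"])
print(usage["s1"]["attempts"], usage["s1"]["distinct_missions"])  # 3 2
print(usage["s3"])  # {'attempts': 0, 'distinct_missions': 0, 'log_attempts': 0.0}
```

Counting from logs in this way sidesteps recall bias, which is the contrast with self-reported frequency drawn above; which events count as "use" remains a design decision that would need to match the actual log format.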

This study has rich implications for a range of stakeholders. For researchers, it enhances theoretical understanding of the factors that can increase the use of digital coding games. Although the TAM stresses perceived ease of use and perceived usefulness as determinants of tool use, this study of a coding game indicates that coding motivation and feeling of enjoyment are essential in predicting use in this context; these two variables explained much of the variance in the use of the coding game. For educational practitioners, our findings illustrate that students’ motivation to learn coding can be a key factor in engaging them with coding tools. Coding games are widely used in K–12 education for their playful and interactive features. They also give instructors flexibility across educational settings, as they can be used in both classroom teaching and distance learning. Most importantly, the self-directed nature of these tools allows them to be used in emergencies (e.g., COVID-19) that hinder in-person instruction. Studying the factors that influence students’ acceptance of coding games can therefore provide practical guidelines for CT education practitioners seeking to implement these tools across learning contexts. In particular, although games may be enjoyable tools for teaching and learning, students’ actual use of them may depend not only on their format but also on the learning content. If students are interested in the content a game intends to deliver, their actual use of the tool is likely to be high. Increasing students’ interest in coding may therefore be key to addressing a lack of motivation to use coding applications. If this prerequisite is met, appropriate coding games are likely to be better received by young learners.

6 Conclusion and future research

This study explored the factors that influenced students’ use of a coding game. Hierarchical multiple linear regression was used: two factors commonly tested in TAM studies (PU and PEU) framed the data collection, and the model was extended with variables related to digital games (FE and FC) and a factor linked to the subject content (CM). Focus group interviews were then conducted to explore user intentions further, comparing active and inactive users; their findings were in line with those of the regression analyses. CM and FE predicted actual use, with CM being the dominant factor. The implication for researchers is that variables related to interest in coding may explain much of the variance in the usage of coding games. For practitioners in CT education, collaborative programming could be embedded into coding applications to improve student engagement. In addition, although many coding tools are designed with motivating features, it is vital to promote students’ interest in coding itself.
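The hierarchical regression described above can be sketched as a sequence of nested ordinary least squares models: each step adds a block of predictors, and the change in R² is tested with the usual F-change statistic, F = (ΔR² / df1) / ((1 − R²) / (n − k − 1)), where df1 is the number of predictors added at that step and k is the total number of predictors so far. The sketch below uses plain Python so that it stays self-contained. The block order (PU and PEU, then FE and FC, then CM) is an assumption based on the order in which the text introduces the variables, and the function names are illustrative. The sketch reports R², ΔR², and F-change per step but omits the p-values and standardized coefficients that a statistics package would normally supply.

```python
def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: predictors may be collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def r_squared(y: list[float], predictors: list[list[float]]) -> float:
    """Fit OLS of y on the given predictor columns (plus an intercept) and return R^2."""
    X = [[1.0] + list(row) for row in zip(*predictors)]
    k = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(X, y)) for i in range(k)]
    beta = solve(xtx, xty)  # normal equations: (X'X) beta = X'y
    fitted = [sum(b * x for b, x in zip(beta, r)) for r in X]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


def hierarchical_regression(y, blocks):
    """Enter predictor blocks in order; return (step, R^2, delta R^2, F-change) per step.

    blocks: ordered list of (step_name, {variable_name: column}) pairs.
    """
    n = len(y)
    columns: list[list[float]] = []
    prev_r2, prev_k = 0.0, 0
    steps = []
    for name, block in blocks:
        columns.extend(block.values())  # each step keeps all earlier predictors
        k = len(columns)
        df1, df2 = k - prev_k, n - k - 1
        if df2 <= 0:
            raise ValueError("too few observations for this many predictors")
        r2 = r_squared(y, columns)
        delta = r2 - prev_r2
        f_change = float("inf") if r2 >= 1.0 else (delta / df1) / ((1.0 - r2) / df2)
        steps.append((name, r2, delta, f_change))
        prev_r2, prev_k = r2, k
    return steps
```

A call such as `hierarchical_regression(use, [("TAM", {"PU": pu, "PEU": peu}), ("Game", {"FE": fe, "FC": fc}), ("Subject", {"CM": cm})])` would return one row per step, assuming `use` holds per-student usage values and each predictor is a list of scale scores in the same student order. A large ΔR² at the final step is what "CM being the dominant factor" would look like in this output.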

This study has several limitations, which we intend to address in future research. First, the diversity of the participants was limited in terms of age and coding experience, which may limit the generalizability of the results. In future research, we intend to recruit participants from diverse backgrounds (e.g., age brackets, game experience) to allow the exploration of moderating effects. Second, the intervention was relatively short. Given that the game has more than 200 missions, the less active students may have had limited spare time to play during the 10-day period. Future research should reduce the effect of time pressure on user behavior by giving students sufficient time to explore the tool. Third, although feeling of enjoyment was found to influence actual use, it was measured after the activity, making it difficult to determine whether it was a cause or an outcome of use. This may be unavoidable, as perceptions of tool features cannot be collected before users have tried the tool. Results related to tool features should therefore be interpreted with caution.

Data availability statement

The research data associated with this paper can be accessed on request from the corresponding author.

References

Bachu, E., & Bernard, M. (2011). Enhancing computer programming fluency through game playing. International Journal of Computing, 1(3).

Bers, M. U. (2018). Coding and computational thinking in early childhood: The impact of ScratchJr in Europe. European Journal of STEM Education, 3(3), 8. https://doi.org/10.20897/ejsteme/3868

Bey, A., Pérez-Sanagustín, M., & Broisin, J. (2019). Unsupervised automatic detection of learners’ programming behavior. European Conference on Technology Enhanced Learning.

Bocconi, S., Chioccariello, A., Dettori, G., Ferrari, A., Engelhardt, K., Kampylis, P., & Punie, Y. (2016). Developing computational thinking in compulsory education. European Commission, JRC Science for Policy Report, 68.

Buffum, P. S., Frankosky, M., Boyer, K. E., Wiebe, E. N., Mott, B. W., & Lester, J. C. (2016). Collaboration and gender equity in game-based learning for middle school computer science. Computing in Science & Engineering, 18(2), 18–28. https://doi.org/10.1109/MCSE.2016.37

Çakır, R., Şahin, H., Balci, H., & Vergili, M. (2021). The effect of basic robotic coding in-service training on teachers’ acceptance of technology, self-development, and computational thinking skills in technology use. Journal of Computers in Education, 8(2), 237–265. https://doi.org/10.1007/s40692-020-00178-1

Camilleri, A., & Camilleri, M. A. (2019). The students’ perceived use, ease of use and enjoyment of educational games at home and at school. 13th Annual International Technology, Education and Development Conference. Valencia, Spain.

Cheng, Y.-M., Lou, S.-J., Kuo, S.-H., & Shih, R.-C. (2013). Investigating elementary school students’ technology acceptance by applying digital game-based learning to environmental education. Australasian Journal of Educational Technology, 29(1). https://doi.org/10.14742/ajet.65

Daniel, J. (2020). Education and the COVID-19 pandemic. Prospects, 49(1), 91–96. https://doi.org/10.1007/s11125-020-09464-3

Davis, F. D. (1993). User acceptance of information technology: System characteristics, user perceptions and behavioral impacts. International Journal of Man-Machine Studies, 38(3), 475–487. https://doi.org/10.1006/imms.1993.1022

Davis, F. D., Bagozzi, R. P., & Warshaw, P. R. (1989). User acceptance of computer technology: A comparison of two theoretical models. Management Science, 35(8), 982–1003. https://doi.org/10.1287/mnsc.35.8.982

Feng, C., Wang, H., Lu, N., Chen, T., He, H., & Lu, Y. (2014). Log-transformation and its implications for data analysis. Shanghai Archives of Psychiatry, 26(2), 105.

Fornell, C., & Larcker, D. F. (1981). Structural equation models with unobservable variables and measurement error: Algebra and statistics. Journal of Marketing Research, 18(3), 382–388. https://doi.org/10.1177/002224378101800313

Giannakos, M. N. (2013). Enjoy and learn with educational games: Examining factors affecting learning performance. Computers & Education, 68, 429–439. https://doi.org/10.1016/j.compedu.2013.06.005

Giannakoulas, A., & Xinogalos, S. (2018). A pilot study on the effectiveness and acceptance of an educational game for teaching programming concepts to primary school students. Education and Information Technologies, 23(5), 2029–2052. https://doi.org/10.1007/s10639-018-9702-x

Grover, S., & Pea, R. (2013). Computational thinking in K–12: A review of the state of the field. Educational Researcher, 42(1), 38–43. https://doi.org/10.3102/0013189X12463051

Ha, I., Yoon, Y., & Choi, M. (2007). Determinants of adoption of mobile games under mobile broadband wireless access environment. Information & Management, 44(3), 276–286. https://doi.org/10.1016/j.im.2007.01.001

Hair, J. F., Black, W. C., Babin, B. J., & Anderson, R. E. (2010). Advanced diagnostics for multiple regression: A supplement to multivariate data analysis. Prentice Hall.

Hsu, C.-L., & Lu, H.-P. (2004). Why do people play on-line games? An extended TAM with social influences and flow experience. Information & Management, 41(7), 853–868. https://doi.org/10.1016/j.im.2003.08.014

Ibrahim, R., Khalil, K., & Jaafar, A. (2011). Towards educational games acceptance model (EGAM): A revised unified theory of acceptance and use of technology (UTAUT). International Journal of Research and Reviews in Computer Science, 2(3), 839.

Jiang, S., & Wong, G. K. (2017). Assessing primary school students’ intrinsic motivation of computational thinking. In 2017 IEEE 6th International Conference on Teaching, Assessment, and Learning for Engineering (TALE) (pp. 469–474). IEEE. https://doi.org/10.1109/TALE.2017.8252381

Jung, H., Kim, H. J., So, S., Kim, J., & Oh, C. (2019). TurtleTalk: An educational programming game for children with voice user interface. Paper presented at the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems. https://doi.org/10.1145/3290607.3312773

Kazimoglu, C., Kiernan, M., Bacon, L., & Mackinnon, L. (2012). A serious game for developing computational thinking and learning introductory computer programming. Procedia-Social and Behavioral Sciences, 47, 1991–1999. https://doi.org/10.1016/j.sbspro.2012.06.938

Kim, Y. J., & Shute, V. J. (2015). The interplay of game elements with psychometric qualities, learning, and enjoyment in game-based assessment. Computers & Education, 87, 340–356. https://doi.org/10.1016/j.compedu.2015.07.009

Kotsopoulos, D., Floyd, L., Khan, S., Namukasa, I. K., Somanath, S., Weber, J., & Yiu, C. (2017). A pedagogical framework for computational thinking. Digital Experiences in Mathematics Education, 3(2), 154–171. https://doi.org/10.1007/s40751-017-0031-2

Kusnendar, J., & Prabawa, H. (2019). Bajo’s Adventure: An effort to develop students computational thinking skills through mobile application. Journal of Physics: Conference Series.

Lockwood, J., & Mooney, A. (2018). Computational thinking in education: Where does it fit? A systematic literary review. International Journal of Computer Science Education in Schools, 2(1), 41. https://doi.org/10.21585/ijcses.v2i1.26

Luo, F., Antonenko, P. D., & Davis, E. C. (2020). Exploring the evolution of two girls’ conceptions and practices in computational thinking in science. Computers & Education, 146, 103759. https://doi.org/10.1016/j.compedu.2019.103759

Majer, J. M. (2009). Self-efficacy and academic success among ethnically diverse first-generation community college students. Journal of Diversity in Higher Education, 2(4), 243. https://doi.org/10.1037/a0017852

Manske, S., Werneburg, S., & Hoppe, H. U. (2019). Learner modeling and learning analytics in computational thinking games for education. In Data analytics approaches in educational games and gamification systems (pp. 187–212). Springer.

Meerbaum-Salant, O., Armoni, M., & Ben-Ari, M. (2011). Habits of programming in Scratch. In Proceedings of the 16th annual joint conference on Innovation and technology in computer science education.

Menard, S. (2001). Collinearity. In Applied logistic regression analysis (2nd ed., Quantitative applications in the social sciences, pp. 75–78). Sage Publications.

Min, S., So, K. K. F., & Jeong, M. (2019). Consumer adoption of the Uber mobile application: Insights from diffusion of innovation theory and technology acceptance model. Journal of Travel & Tourism Marketing, 36(7), 770–783. https://doi.org/10.1080/10548408.2018.1507866

Nikou, S. A., & Economides, A. A. (2017). Mobile-based assessment: Integrating acceptance and motivational factors into a combined model of Self-Determination Theory and Technology Acceptance. Computers in Human Behavior, 68, 83–95. https://doi.org/10.1016/j.chb.2016.11.020

Nunnally, J. C. (1994). Psychometric theory. Tata McGraw-Hill Education.

Papert, S. A. (1980). Mindstorms: Children, computers, and powerful ideas. Basic Books.

Pila, S., Aladé, F., Sheehan, K. J., Lauricella, A. R., & Wartella, E. A. (2019). Learning to code via tablet applications: An evaluation of Daisy the Dinosaur and Kodable as learning tools for young children. Computers & Education, 128, 52–62. https://doi.org/10.1016/j.compedu.2018.09.006

Pituch, K. A., & Lee, Y.-K. (2006). The influence of system characteristics on e-learning use. Computers & Education, 47(2), 222–244. https://doi.org/10.1016/j.compedu.2004.10.007

Rose, S. (2016). Bricolage programming and problem solving ability in young children: An exploratory study. European Conference on Games Based Learning, 914.

Ryan, R. M., & Deci, E. L. (2000). Intrinsic and extrinsic motivations: Classic definitions and new directions. Contemporary Educational Psychology, 25(1), 54–67. https://doi.org/10.1006/ceps.1999.1020

Ryan, R. M., Rigby, C. S., & Przybylski, A. (2006). The motivational pull of video games: A self-determination theory approach. Motivation and Emotion, 30(4), 344–360. https://doi.org/10.1007/s11031-006-9051-8

Segers, M. S. (1997). An alternative for assessing problem-solving skills: The overall test. Studies in Educational Evaluation, 23(4), 373–398. https://doi.org/10.1016/S0191-491X(97)86216-5

Shute, V. J., Sun, C., & Asbell-Clarke, J. (2017). Demystifying computational thinking. Educational Research Review, 22, 142–158. https://doi.org/10.1016/j.edurev.2017.09.003

Steinmayr, R., & Spinath, B. (2009). The importance of motivation as a predictor of school achievement. Learning and Individual Differences, 19(1), 80–90. https://doi.org/10.1016/j.lindif.2008.05.004

Tabachnick, B. G., Fidell, L. S., & Ullman, J. B. (2007). Using multivariate statistics (Vol. 5). Boston, MA: Pearson.

Theodoropoulos, A., Antoniou, A., & Lepouras, G. (2017). How do different cognitive styles affect learning programming? Insights from a game-based approach in Greek schools. ACM Transactions on Computing Education (TOCE), 17(1), 3. https://doi.org/10.1145/2940330

Tohidi, H., & Jabbari, M. M. (2012). The effects of motivation in education. Procedia-Social and Behavioral Sciences, 31, 820–824.

Werneburg, S., Manske, S., Feldkamp, J., & Hoppe, H. U. (2018). Improving on guidance in a gaming environment to foster computational thinking. In Proceedings of the 26th International Conference on Computers in Education.

Wing, J. M. (2006). Computational thinking. Communications of the ACM, 49(3), 33–35. https://doi.org/10.1145/1118178.1118215

Wu, B., Hu, Y., Ruis, A. R., & Wang, M. (2019). Analysing computational thinking in collaborative programming: A quantitative ethnography approach. Journal of Computer Assisted Learning, 35(3), 421–434. https://doi.org/10.1111/jcal.12348

Xu, F., & Correia, A. (2021). A systematic review of distributed pair programming based on the team effectiveness model. In Proceedings of Fifth APSCE International Conference on Computational Thinking and STEM Education 2021 (CTE-STEM).

Yeni, S., & Cagiltay, K. (2017). A heuristic evaluation to support the instructional and enjoyment aspects of a math game. Program, 51(4). https://doi.org/10.1108/PROG-07-2016-0050

Yücel, Y., & Rızvanoğlu, K. (2019). Battling gender stereotypes: A user study of a code-learning game, “Code Combat”, with middle school children. Computers in Human Behavior, 99, 352–365. https://doi.org/10.1016/j.chb.2019.05.029

Zhang, S., Wong, K. W. G., & Chan, C. F. P. (2021). Achievement and effort in acquiring computational thinking concepts: A log-based analysis in a game-based learning environment. In Proceedings of Fifth APSCE International Conference on Computational Thinking and STEM Education 2021 (CTE-STEM).

Zhao, W., & Shute, V. J. (2019). Can playing a video game foster computational thinking skills? Computers & Education, 141, 103633. https://doi.org/10.1016/j.compedu.2019.103633

Zhi, R., Lytle, N., & Price, T. W. (2018). Exploring instructional support design in an educational game for K-12 computing education. In Proceedings of the 49th ACM Technical Symposium on Computer Science Education. https://doi.org/10.1145/3159450.3159519

Acknowledgements

We would like to express our sincere gratitude to Netdragon for its full support in enabling access to Coding Galaxy for data collection and for providing the log data used in the analyses.

Funding

This project was funded by the General Research Fund of the Research Grants Council of Hong Kong (Project No. 18615216).

Ethics declarations

Conflict of interest

None.

Appendix

1.1 A. Pre-intervention questionnaire

| Items | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Coding motivation | | | | | |
| 1. Learning coding is fun. | | | | | |
| 2. I think I have the ability to do coding well. | | | | | |
| 3. I would like to continue learning coding activities because it is useful. | | | | | |
| 4. When I participate in coding activities, I think about how much I enjoy them. | | | | | |
| 5. I think coding is fun. | | | | | |
| 6. I think coding is important. | | | | | |
| 7. I believe that participating in coding is beneficial to me. | | | | | |
| 8. I like coding very much. | | | | | |
| 9. I think learning coding activities can solve daily life problems. | | | | | |

1.2 B. Post-intervention questionnaire

| Items | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Perceived ease of use | | | | | |
| 1. Learning how to play the game was easy for me. | | | | | |
| 2. I found it easy to get the game to do what I wanted it to do. | | | | | |
| 3. It was easy for me to become skillful in playing the game. | | | | | |
| Perceived usefulness | | | | | |
| 1. The coding game improved my understanding of sequence. | | | | | |
| 2. The coding game improved my understanding of loops. | | | | | |
| 3. The coding game improved my understanding of conditionals. | | | | | |
| 4. The coding game improved my understanding of functions. | | | | | |
| 5. Since playing the coding game, I am better able to explore connections between the whole and parts. | | | | | |
| 6. Since playing the coding game, I am better able to find and solve problems when they arise to make things work. | | | | | |
| 7. Since playing the coding game, I am better at problem-solving in terms of developing and testing possible solutions bit by bit. | | | | | |
| 8. Since playing the coding game, I am better at making something by building on existing code, projects or ideas. | | | | | |
| 9. Since playing the coding game, I am better at creating and expressing ideas through this new medium. | | | | | |
| 10. Since playing the coding game, I feel more empowered to ask questions about and with technology. | | | | | |
| Enjoyment | | | | | |
| 1. I enjoyed playing the coding game very much. | | | | | |
| 2. Playing the coding game was fun. | | | | | |
| 3. I would play the coding game in my spare time. | | | | | |
| 4. I would have played the coding game for longer if I could. | | | | | |
| 5. I would recommend the coding game to my friends. | | | | | |
| Competence | | | | | |
| 1. I felt competent in playing the coding game. | | | | | |
| 2. I felt very capable when playing the coding game. | | | | | |
| 3. My ability to play the coding game was well matched to the game’s challenges. | | | | | |
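Each construct in the questionnaires above is measured with several items on a five-point scale. A common approach, assumed here rather than taken from this section, is to score each construct as the mean of its items and to check internal consistency with Cronbach’s alpha, α = k / (k − 1) · (1 − Σ s²ᵢ / s²ₜ), where k is the number of items, s²ᵢ is the variance of item i, and s²ₜ is the variance of respondents’ summed scores. The Python sketch below implements both with the standard library; the function names and the toy responses are illustrative only.

```python
from statistics import mean, variance


def scale_score(item_responses: list[int]) -> float:
    """Score one respondent on one construct as the mean of its 1-5 item responses."""
    return float(mean(item_responses))


def cronbach_alpha(responses: list[list[int]]) -> float:
    """Cronbach's alpha for one construct.

    responses: one row per respondent, one column per item of the construct.
    Sample variances (n - 1 denominator) are used throughout; the ratio is the
    same if population variances are used consistently instead.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_variances = [variance(item) for item in zip(*responses)]
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Toy responses to the three Competence items (illustrative, not study data)
competence = [[4, 5, 4], [3, 3, 2], [5, 5, 5], [2, 3, 3], [4, 4, 5]]
print([scale_score(row) for row in competence])
print(round(cronbach_alpha(competence), 3))  # 0.917
```

Averaging rather than summing keeps construct scores on the original 1 to 5 metric, which makes scales with different numbers of items (3 for competence, 10 for perceived usefulness) directly comparable.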

Cite this article

Zhang, S., Wong, G.K.W. & Chan, P.C.F. Playing coding games to learn computational thinking: What motivates students to use this tool at home?. Educ Inf Technol 28, 193–216 (2023). https://doi.org/10.1007/s10639-022-11181-7

DOI: https://doi.org/10.1007/s10639-022-11181-7